The SAGE Handbook of Quantitative Methodology for the Social Sciences

- David Kaplan - University of Wisconsin - Madison, USA

"The 24 chapters in this Handbook span a wide range of topics, presenting the latest quantitative developments in scaling theory, measurement, categorical data analysis, multilevel models, latent variable models, and foundational issues. Each chapter reviews the historical context for the topic and then describes current work, including illustrative examples where appropriate. The level of presentation throughout the book is detailed enough to convey genuine understanding without overwhelming the reader with technical material. Ample references are given for readers who wish to pursue topics in more detail. The book will appeal to both researchers who wish to update their knowledge of specific quantitative methods, and students who wish to have an integrated survey of state-of-the-art quantitative methods." —Roger E. Millsap, Arizona State University

"This handbook discusses important methodological tools and topics in quantitative methodology in easy to understand language. It is an exhaustive review of past and recent advances in each topic combined with a detailed discussion of examples and graphical illustrations. It will be an essential reference for social science researchers as an introduction to methods and quantitative concepts of great use." —Irini Moustaki, London School of Economics, U.K.

"David Kaplan and SAGE Publications are to be congratulated on the development of a new handbook on quantitative methods for the social sciences. The Handbook is more than a set of methodologies, it is a journey. This methodological journey allows the reader to experience scaling, tests and measurement, and statistical methodologies applied to categorical, multilevel, and latent variables. The journey concludes with a number of philosophical issues of interest to researchers in the social sciences. The new Handbook is a must purchase." —Neil H. Timm, University of Pittsburgh

The SAGE Handbook of Quantitative Methodology for the Social Sciences is the definitive reference for teachers, students, and researchers of quantitative methods in the social sciences, as it provides a comprehensive overview of the major techniques used in the field. The contributors, top methodologists and researchers, have written about their areas of expertise in ways that convey the utility of their respective techniques, but, where appropriate, they also offer a fair critique of these techniques. Relevance to real-world problems in the social sciences is an essential ingredient of each chapter and makes this an invaluable resource.

The handbook is divided into six sections:

- Scaling

- Testing and Measurement

- Models for Categorical Data

- Models for Multilevel Data

- Models for Latent Variables

- Foundational Issues

These sections, comprising twenty-four chapters, address topics in scaling and measurement, advances in statistical modeling methodologies, and broad philosophical themes and foundational issues that transcend many of the quantitative methodologies covered in the book.

The Handbook is indispensable to the teaching, study, and research of quantitative methods and will enable readers to develop a level of understanding of statistical techniques commensurate with the most recent, state-of-the-art, theoretical developments in the field. It provides the foundations for quantitative research, with cutting-edge insights on the effectiveness of each method, depending on the data and distinct research situation.

SAGE 2455 Teller Road Thousand Oaks, CA 91320 www.sagepub.com


"David Kaplan has convened a panel of top-notch methodologians, who take on the challenge in the writing of The SAGE Handbook of Quantitative Methodology for the Social Sciences (SHQM). The result is an engrossing collection of chapters that are sure to add screwdrivers, wrenches, and the occasional buzzsaw to your toolbox. A notable strength of the SHQM is the general structure of each chapter. The chapters of the SHQM are a worthy accomplishment. The SHQM is both well conceived and well executed, providing the reader with numerous insights and a broader sense for the available tools of the quantitative methodological trade. It is most likely that few readers will have the opportunity to read this book from cover to cover, but should they feel so inspired, they will find the effort both rewarding and thought provoking."

"The Handbook provides an excellent introduction to a broad range of state-of-the-art quantitative methods applicable to the social sciences. It shows the breadth and depth of advanced quantitative methods used by social scientists from numerous interrelated disciplines, it is rich with examples of real-world applications of these methods, and it provides suggestions for further readings and study in these areas. It is well worth reading cover-to-cover, and it is a very useful addition to the reference libraries of all quantitative social scientists, applied statisticians, and graduate students."

- Provides a comprehensive overview of the major techniques used in the field.

- Top methodologists and researchers have written about their areas of expertise.

- Relevance to real-world problems in the social sciences is an essential ingredient of each chapter and makes this an invaluable resource.

- Indispensable to the teaching, study, and research of quantitative methods.

- Provides the foundations for quantitative research, with cutting-edge insights on the effectiveness of each method.

Sample Materials & Chapters

Chapter 1. Dual Scaling

Chapter 3. Principal Components Analysis with Nonlinear Optimal Scaling Transfo

Chapter 5. Test Modeling

A Quick Guide to Quantitative Research in the Social Sciences

(12 reviews)

Christine Davies, Carmarthen, Wales

Copyright Year: 2020

Last Update: 2021

Publisher: University of Wales Trinity Saint David

Language: English

Reviewed by Jennifer Taylor, Assistant Professor, Texas A&M University-Corpus Christi on 4/18/24

Comprehensiveness rating: 4

This resource is a quick guide to quantitative research in the social sciences and not a comprehensive resource. It provides a VERY general overview of quantitative research but offers a good starting place for students new to research. It offers links and references to additional resources that are more comprehensive in nature.

Content Accuracy rating: 4

The content is relatively accurate. The measurement scale section is very sparse. Not all types of research designs or statistical methods are included, but it is a guide, so details are meant to be limited.

Relevance/Longevity rating: 4

The examples were interesting and appropriate. The content is up to date and will be useful for several years.

Clarity rating: 5

The text was clearly written. Tables and figures are not referenced in the text, which would have been nice.

Consistency rating: 5

The framework is consistent across chapters with terminology clearly highlighted and defined.

Modularity rating: 5

The chapters are subdivided into sections that can be divided and assigned as reading in a course. Most chapters are brief and concise, unless elaboration is necessary, such as with the data analysis chapter. Again, this is a guide and not a comprehensive text, so sections are shorter and don't always include every subtopic that may be considered.

Organization/Structure/Flow rating: 5

The guide is well organized. I appreciate that the topics are presented in a logical and clear manner. The topics are provided in an order consistent with traditional research methods.

Interface rating: 5

The interface was easy to use and navigate. The images were clear and easy to read.

Grammatical Errors rating: 5

I did not notice any grammatical errors.

Cultural Relevance rating: 5

The materials are not culturally insensitive or offensive in any way.

I teach a Marketing Research course to undergraduates. I would consider using some of the chapters or topics included, especially the overview of the research designs and the analysis of data section.

Reviewed by Tiffany Kindratt, Assistant Professor, University of Texas at Arlington on 3/9/24

Comprehensiveness rating: 3

The text provides a brief overview of quantitative research topics that is geared towards research in the fields of education, sociology, business, and nursing. The author acknowledges that the textbook is not a comprehensive resource but offers references to other resources that can be used to deepen the knowledge. The text does not include a glossary or index. The references in the figures for each chapter are not included in the reference section. It would be helpful to include those.

Overall, the text is accurate. For example, Figure 1 on page 6 provides a clear overview of the research process. It includes general definitions of primary and secondary research. It would be helpful to include more details to explain some of the examples before they are presented. For instance, it was unclear how the example on page 5 pertains to the literature review section.

In general, the text is relevant and up-to-date. The text includes many inferences of moving from qualitative to quantitative analysis. This was surprising to me as a quantitative researcher. The author mentions that moving from a qualitative to quantitative approach should only be done when needed. As a predominantly quantitative researcher, I would advise those interested in transitioning to a qualitative approach that qualitative research would enhance their research, and is not something that should only be done if you have to.

Clarity rating: 4

The text is written in a clear manner. It would be helpful to the reader if there was a description of the tables and figures in the text before they are presented.

Consistency rating: 4

The framework for each chapter and terminology used are consistent.

Modularity rating: 4

The text is clearly divided into sections within each chapter. Overall, the chapters are a similar brief length except for the chapter on data analysis, which is much more comprehensive than others.

Organization/Structure/Flow rating: 4

The topics in the text are presented in a clear and logical order. The order of the text follows the conventional research methodology in social sciences.

I did not encounter any interface issues when reviewing this text. All links worked and there were no distortions of the images or charts that may confuse the reader.

Grammatical Errors rating: 3

There are some grammatical/typographical errors throughout. Of note, for Section 5 in the table of contents, “The” should be capitalized to start the title. In the title for Table 3, the “t” in typical should be capitalized.

Cultural Relevance rating: 4

The examples are culturally relevant. The text is geared towards learners in the UK, but examples are relevant for use in other countries (e.g., the United States). I did not see any examples that may be considered culturally insensitive or offensive in any way.

I teach a course on research methods in a Bachelor of Science in Public Health program. I would consider using some of the text, particularly in the analysis chapter to supplement the current textbook in the future.

Reviewed by Finn Bell, Assistant Professor, University of Michigan, Dearborn on 1/3/24

For it being a quick guide and only 26 pages, it is very comprehensive, but it does not include an index or glossary.

Content Accuracy rating: 5

As far as I can tell, the text is accurate, error-free and unbiased.

Relevance/Longevity rating: 5

This text is up-to-date, and given the content, unlikely to become obsolete any time soon.

The text is very clear and accessible.

The text is internally consistent.

Given how short the text is, it seems unnecessary to divide it into smaller readings; nonetheless, it is clearly labelled such that an instructor could do so.

The text is well-organized and brings readers through basic quantitative methods in a logical, clear fashion.

Easy to navigate. Only one table that is split between pages, but not in a way that is confusing.

There were no noticeable grammatical errors.

The examples in this book don't give enough information to rate this effectively.

This text is truly a very quick guide at only 26 double-spaced pages. Nonetheless, Davies packs a lot of information on the basics of quantitative research methods into this text, in an engaging way with many examples of the concepts presented. This guide is more of a brief how-to that takes readers as far as how to select statistical tests. While it would be impossible to fully learn quantitative research from such a short text, of course, this resource provides a great introduction, overview, and refresher for program evaluation courses.

Reviewed by Shari Fedorowicz, Adjunct Professor, Bridgewater State University on 12/16/22

Comprehensiveness rating: 5

The text is indeed a quick guide for utilizing quantitative research. Appropriate and effective examples and diagrams were used throughout the text. The author clearly differentiates between use of quantitative and qualitative research, providing the reader with the ability to distinguish two terms that frequently get confused. In addition, links and outside resources are provided to deepen the understanding as an option for the reader. The use of these links, coupled with diagrams and examples, makes this text comprehensive.

The content is mostly accurate. Given that it is a quick guide, the author chose a good selection of which types of research designs to include. However, some are not provided. For example, correlational or cross-correlational research is omitted and is not discussed in Section 3, but is used as a statistical example in the last section.

Examples utilized were appropriate and associated with terms adding value to the learning. The tables that included differentiation between types of statistical tests along with a parametric/nonparametric table were useful and relevant.

The purpose of the text and how to use this guide book are stated clearly and established up front. The author is also very clear regarding the skill level of the user. Adding to the clarity are the tables with terms, definitions, and examples to help the reader unpack the concepts. The content related to the terms was succinct, direct, and clear. Many times examples or figures were used to supplement the narrative.

The text is consistent throughout from contents to references. Within each section of the text, the introductory paragraph under each section provides a clear understanding regarding what will be discussed in each section. The layout is consistent for each section and easy to follow.

The contents are visible and address each section of the text. A total of seven sections, including a reference section, is in the contents. Each section is outlined by what will be discussed in the contents. In addition, within each section, a heading is provided to direct the reader to the subtopic under each section.

The text is well-organized and segues appropriately. I would have liked to have seen an introductory section giving a narrative overview of what is in each section. This would provide the reader with the ability to get a preliminary glimpse into each upcoming section and the topics that are covered.

The book was easy to navigate and well-organized. Examples are presented in one color, links in another, and figures and tables in yet another. The visuals supplemented the reading and were placed appropriately. This provides an opportunity for the reader to unpack the reading by use of visuals and examples.

No significant grammatical errors.

The text is not offensive or culturally insensitive. Examples were inclusive of various races, ethnicities, and backgrounds.

This quick guide is a beneficial text to assist in unpacking the learning related to quantitative statistics. I would use this book to complement my instruction and lessons, or use this book as a main text with supplemental statistical problems and formulas. References to statistical programs were appropriate and were useful. The text did exactly what was stated up front in that it is a direct guide to quantitative statistics. It is well-written and to the point with content areas easy to locate by topic.

Reviewed by Sarah Capello, Assistant Professor, Radford University on 1/18/22

The text claims to provide "quick and simple advice on quantitative aspects of research in social sciences," which it does. There is no index or glossary, although vocabulary words are bolded and defined throughout the text.

The content is mostly accurate. I would have preferred a few nuances to be hashed out a bit further to avoid potential reader confusion or misunderstanding of the concepts presented.

The content is current; however, some of the references cited in the text are outdated. Newer editions of those texts exist.

The text is very accessible and readable for a variety of audiences. Key terms are well-defined.

There are no content discrepancies within the text. The author even uses similarly shaped graphics for recurring purposes throughout the text (e.g., arrow call outs for further reading, rectangle call outs for examples).

The content is chunked nicely by topics and sections. If it were used for a course, it would be easy to assign different sections of the text for homework, etc. without confusing the reader if the instructor chose to present the content in a different order.

The author follows the structure of the research process. The organization of the text is easy to follow and comprehend.

All of the supplementary images (e.g., tables and figures) were beneficial to the reader and enhanced the text.

There are no significant grammatical errors.

I did not find any culturally offensive or insensitive references in the text.

This text does the difficult job of introducing the complicated concepts and processes of quantitative research in a quick and easy reference guide fairly well. I would not depend solely on this text to teach students about quantitative research, but it could be a good jumping off point for those who have no prior knowledge on this subject or those who need a gentle introduction before diving in to more advanced and complex readings of quantitative research methods.

Reviewed by J. Marlie Henry, Adjunct Faculty, University of Saint Francis on 12/9/21

Considering the length of this guide, this does a good job of addressing major areas that typically need to be addressed. There is a contents section. The guide does seem to be organized accordingly with appropriate alignment and logical flow of thought. There is no glossary but, for a guide of this length, a glossary does not seem like it would enhance the guide significantly.

The content is relatively accurate. Expanding the content a bit more or explaining that the methods and designs presented are not entirely inclusive would help. As there are different schools of thought regarding what should/should not be included in terms of these designs and methods, simply bringing attention to that and explaining a bit more would help.

Relevance/Longevity rating: 3

This content needs to be updated. Most of the sources cited are seven or more years old. Even more, it would be helpful to see more currently relevant examples. Some of the source authors such as Andy Field provide very interesting and dynamic instruction in general, but they have much more current information available.

The language used is clear and appropriate. Unnecessary jargon is not used. The intent is clear: to communicate simply in a straightforward manner.

The guide seems to be internally consistent in terms of terminology and framework. There do not seem to be issues in this area. Terminology is internally consistent.

For a guide of this length, the author structured this logically into sections. This guide could be adopted in whole or by section with limited modifications. Courses with fewer than seven modules could also logically group some of the sections.

This guide does present with logical organization. The topics presented are conceptually sequenced in a manner that helps learners build logically on prior conceptualization. This also provides a simple conceptual framework for instructors to guide learners through the process.

Interface rating: 4

The visuals themselves are simple, but they are clear and understandable without distracting the learner. The purpose is clear: learning, rather than visuals for the sake of visuals. Likewise, navigation is clear and without issues beyond a broken link (the last source noted in the references).

This guide seems to be free of grammatical errors.

It would be interesting to see more cultural integration in a guide of this nature, but the guide is not culturally insensitive or offensive in any way. The language used seems to be consistent with APA's guidelines for unbiased language.

Reviewed by Heng Yu-Ku, Professor, University of Northern Colorado on 5/13/21

The text covers all areas and ideas appropriately and provides practical tables, charts, and examples throughout the text. I would suggest the author also provides a complete research proposal at the end of Section 3 (page 10) and a comprehensive research study as an Appendix after section 7 (page 26) to help readers comprehend information better.

For the most part, the content is accurate and unbiased. However, the author only includes four types of research designs used in the social sciences that contain quantitative elements: 1) Mixed method, 2) Case study, 3) Quasi-experiment, and 4) Action research. I wonder why correlational research is not included as another type of quantitative research design, as it has been introduced and emphasized in section 6 by the author.

I believe the content is up-to-date and that necessary updates will be relatively easy and straightforward to implement.

The text is easy to read and provides adequate context for any technical terminology used. However, the author could provide more detailed information about estimating the minimum sample size rather than just referring readers to the online sample calculators at a different website.

The text is internally consistent in terms of terminology and framework. The author provides the right amount of information with additional information or resources for the readers.

The text includes seven sections. Therefore, it is easier for the instructor to allocate or divide the content into different weeks of instruction within the course.

Yes, the topics in the text are presented in a logical and clear fashion. The author provides clear and precise terminologies, summarizes important content in Table or Figure forms, and offers examples in each section for readers to check their understanding.

The interface of the book is consistent and clear, and all the images and charts provided in the book are appropriate. However, I did encounter some navigation problems, as a couple of links are not working or require permission to access (pages 10 and 27).

No grammatical errors were found.

No culturally insensitive or offensive language or examples were found.

As the book title states, this book provides “A Quick Guide to Quantitative Research in Social Science.” It offers easy-to-read information and introduces the readers to the research process, such as research questions, research paradigms, research process, research designs, research methods, data collection, data analysis, and data discussion. However, some links are not working or need permissions to access them (pages 10 and 27).

Reviewed by Hsiao-Chin Kuo, Assistant Professor, Northeastern Illinois University on 4/26/21, updated 4/28/21

As a quick guide, it covers basic concepts related to quantitative research. It starts with WHY quantitative research with regard to asking research questions and considering research paradigms, then provides an overview of research design and process, discusses methods, data collection and analysis, and ends with writing a research report. It also identifies its target readers/users as those beginning to explore quantitative research. It would be helpful to include more examples for readers/users who are new to quantitative research.

Its content is mostly accurate and unbiased given its nature as a quick guide. Yet, it is also quite simplified, such as in its explanations of mixed methods, case study, quasi-experimental research, and action research. It provides resources for extended reading, yet more recent works would be helpful.

The book is relevant given its nature as a quick guide. It would be helpful to provide more recent works in its resources for extended reading, such as the section for Survey Research (p. 12). It would also be helpful to include more information to introduce common tools and software for statistical analysis.

The book is written with clear and understandable language. Important terms and concepts are presented with plain explanations and examples. Figures and tables are also presented to support its clarity. For example, Table 4 (p. 20) gives an easy-to-follow overview of different statistical tests.

The framework is very consistent with key points, further explanations, examples, and resources for extended reading. The sample studies are presented following the layout of the content, such as research questions, design and methods, and analysis. These examples help reinforce readers' understanding of these common research elements.

The book is divided into seven chapters. Each chapter clearly discusses an aspect of quantitative research. It can be easily divided into modules for a class or for a theme in a research method class. Chapters are short and provide additional resources for extended reading.

The topics in the chapters are presented in a logical and clear structure. It is easy to follow to a degree. It would also be helpful, though, to include the chapter number and title in the header next to its page number.

The text is easy to navigate. Most of the figures and tables are displayed clearly. Yet, there are several sections with empty space that is a bit confusing in the beginning. Again, it can be helpful to include the chapter number/title next to its page number.

Grammatical Errors rating: 4

No major grammatical errors were found.

There are no cultural insensitivities noted.

Given the nature and purpose of this book, as a quick guide, it provides readers a quick reference for important concepts and terms related to quantitative research. Because this book is quite short (27 pages), it can be used as an overview/preview about quantitative research. Teacher's facilitation/input and extended readings will be needed for a deeper learning and discussion about aspects of quantitative research.

Reviewed by Yang Cheng, Assistant Professor, North Carolina State University on 1/6/21

It covers the most important topics such as research progress, resources, measurement, and analysis of the data.

The book accurately describes the types of research methods such as mixed-method, quasi-experiment, and case study. It talks about the research proposal and key differences between statistical analyses as well.

The book pinpointed the significance of running a quantitative research method and its relevance to the field of social science.

The book clearly tells us the differences between types of quantitative methods and the steps of running quantitative research for students.

The book is consistent in terms of terminologies such as research methods or types of statistical analysis.

It uses headings and subheadings very well, and each subheading is useful for readers.

The book was organized very well to illustrate the topic of quantitative methods in the field of social science.

The pictures within the book could be further developed to describe the key concepts vividly.

The textbook contains no grammatical errors.

It is not culturally offensive in any way.

Overall, this is a simple and quick guide for this important topic. It should be valuable for undergraduate students who would like to learn more about research methods.

Reviewed by Pierre Lu, Associate Professor, University of Texas Rio Grande Valley on 11/20/20

As a quick guide to quantitative research in social sciences, the text covers most ideas and areas.

Mostly accurate content.

As a quick guide, content is highly relevant.

Succinct and clear.

Internally, the text is consistent in terms of terminology used.

The text is easily and readily divisible into smaller sections that can be used as assignments.

I like that there are examples throughout the book.

Easy to read. No interface/ navigation problems.

No grammatical errors detected.

I am not aware of any culturally insensitive descriptions. After all, this is a methodology book.

I think the book has the potential to be adopted as a foundation for quantitative research courses, or as a review in the first weeks of an advanced quantitative course.

Reviewed by Sarah Fischer, Assistant Professor, Marymount University on 7/31/20

It is meant to be an overview, but it is incredibly condensed and spends almost no time on key elements of statistics (such as what makes research generalizable, or what leads to research NOT being generalizable).

Content Accuracy rating: 1

Contains VERY significant errors, such as saying that one can "accept" a hypothesis. (One of the key aspects of hypothesis testing is that one either rejects or fails to reject a hypothesis, but NEVER accepts a hypothesis.)

Very relevant to those experiencing the research process for the first time. However, it is written by someone working in the natural sciences but is a text for social sciences. This does not explain the errors, but does explain why sometimes the author assumes things about the readers ("hail from more subjectivist territory") that are likely not true.

Clarity rating: 3

Some statistical terminology not explained clearly (or accurately), although the author has made attempts to do both.

Very consistently laid out.

Chapters are very short yet also point readers to outside texts for additional information. Easy to follow.

Generally logically organized.

Easy to navigate, images clear. The additional sources included need to be linked.

Minor grammatical and usage errors throughout the text.

Makes efforts to be inclusive.

The idea of this book is strong--short guides like this are needed. However, this book would likely be strengthened by a revision to reduce inaccuracies and improve the definitions and technical explanations of statistical concepts. Since the book is specifically aimed at the social sciences, it would also improve the text to have more examples that are based in the social sciences (rather than the health sciences or the arts).

Reviewed by Michelle Page, Assistant Professor, Worcester State University on 5/30/20

This text is exactly intended to be what it says: A quick guide. A basic outline of quantitative research processes, akin to cliff notes. The content provides only the essentials of a research process and contains key terms. A student or new researcher would not be able to use this as a standalone guide for quantitative pursuits without having a supplemental text that explains the steps in the process more comprehensively. The introduction does provide this caveat.

Content Accuracy rating: 3

There are no biases or errors that could be distinguished; however, its simplicity in content, although accurate for an outline of process, may lack a conveyance of the deeper meanings behind the specific processes explained about qualitative research.

The content is outlined in traditional format to highlight quantitative considerations for formatting research foundational pieces. The resources/references used to point the reader to literature sources can be easily updated with future editions.

The jargon in the text is simple to follow and provides adequate context for its purpose. It is simplified for its intention as a guide which is appropriate.

Each section of the text follows a consistent flow. Explanation of the research content or concept is defined and then a connection to literature is provided to expand the reader’s understanding of the section’s content. Terminology is consistent with the qualitative process.

As an “outline” and guide, this text can be used to quickly identify the critical parts of the quantitative process. Although each section does not provide deeper content for meaningful use as a standalone text, its utility would be excellent as a reference for a course, and it can be used as a content guide for specific research courses.

The text’s outline and content are aligned and are in a logical flow in terms of the research considerations for quantitative research.

The only issue that the format was not able to provide was linkable articles. These would have to be cut and pasted into a browser. Functional clickable links in a text are very successful at leading the reader to the supplemental material.

No grammatical errors were noted.

This is a very good outline “guide” to help a new or student researcher to demystify the quantitative process. A successful outline of any process helps to guide work in a logical and systematic way. I think this simple guide is a great adjunct to more substantial research context.

Table of Contents

- Section 1: What will this resource do for you?

- Section 2: Why are you thinking about numbers? A discussion of the research question and paradigms.

- Section 3: An overview of the Research Process and Research Designs

- Section 4: Quantitative Research Methods

- Section 5: the data obtained from quantitative research

- Section 6: Analysis of data

- Section 7: Discussing your Results

About the book.

This resource is intended as an easy-to-use guide for anyone who needs some quick and simple advice on quantitative aspects of research in social sciences, covering subjects such as education, sociology, business, and nursing. If you are a qualitative researcher who needs to venture into the world of numbers, or a student instructed to undertake a quantitative research project despite a hatred for maths, then this booklet should be a real help.

The booklet was amended in 2022 to take into account previous review comments.

About the Contributors

Christine Davies, Ph.D.

Book series

Quantitative Methods in the Humanities and Social Sciences

About this book series.

- Thomas DeFanti

- Anthony Grafton

- Thomas E. Levy

- Lev Manovich

- Alyn Rockwood

Book titles in this series

Humanities Data in R

Exploring Networks, Geospatial Data, Images, and Text

- Taylor Arnold

- Lauren Tilton

- Copyright: 2024

A Quantitative Portrait of Analytic Philosophy

Looking Through the Margins

- Eugenio Petrovich

Database Computing for Scholarly Research

Case Studies Using the Online Cultural and Historical Research Environment

- Sandra R. Schloen

- Miller C. Prosser

- Copyright: 2023

Who Wrote Citizen Kane?

Statistical Analysis of Disputed Co-Authorship

- Warren Buckland

Capturing the Senses

Digital Methods for Sensory Archaeologies

- Giacomo Landeschi

- Eleanor Betts

- Open Access

Quantitative Methods in the Humanities: An Introduction

A. E. C. M.; Quantitative Methods in the Humanities: An Introduction. The Journal of Interdisciplinary History 2020; 51 (1): 137–139. doi: https://doi.org/10.1162/jinh_r_01527

History is notoriously a “big tent” discipline. Because everything has a past, every subject has a history. The tools appropriate to ferret out those histories multiply just as easily as the topics, depending on the questions being asked and the nature of the evidence preserved (accidentally or otherwise) that might answer them. In what sense is History a coherent “discipline” at all? Is there more to hold it together than just a ferocious commitment to the past tense? Must historians adhere to a recognized and common methodology of practice, but of what might it consist, in the face of so much variety? These questions bedevil historians everywhere, especially when they are trying to figure out what their students should know and/or know how to do. Whatever the answers might be, these questions frame both the motivation for the book under review and its value for readers.

Written by two historians,...

- Online ISSN 1530-9169

- Print ISSN 0022-1953

A product of The MIT Press

Quantitative Research: A Successful Investigation in Natural and Social Sciences

Mohajan, Haradhan (2020): Quantitative Research: A Successful Investigation in Natural and Social Sciences. Published in: Journal of Economic Development, Environment and People , Vol. 9, No. 4 (31 December 2020): pp. 52-79.

Research is the framework used for the planning, implementation, and analysis of a study. The proper choice of a suitable research methodology can lead to effective and successful original research. A researcher can reach his/her expected goal by following any kind of research methodology. Quantitative research methodology is preferred by many researchers. This article presents and analyzes the design of quantitative research. It also discusses the proper use and the components of quantitative research methodology. It is used to quantify attitudes, opinions, behaviors, and other defined variables and generalize results from a larger sample population by way of generating numerical data. The purpose of this study is to provide some important fundamental concepts of quantitative research to common readers for the development of their future projects, articles and/or theses. An attempt has been made here to study the aspects of the quantitative research methodology in some detail.

Qualitative and quantitative research in the humanities and social sciences: how natural language processing (NLP) can help

- Published: 23 September 2021

- Volume 56 , pages 2751–2781, ( 2022 )

- Roberto Franzosi (ORCID: orcid.org/0000-0001-8367-5190)

- Wenqin Dong

- Yilin Dong

The paper describes computational tools that can be of great help to both qualitative and quantitative scholars in the humanities and social sciences who deal with words as data. The Java and Python tools described provide computer-automated ways of performing useful tasks: 1. check the well-formedness of filenames; 2. find user-defined characters in English language stories (e.g., social actors, i.e., individuals, groups, organizations; animals) (“find the character”) via WordNet; 3. aggregate words into higher-level aggregates (e.g., “talk,” “say,” “write” are all verbs of “communication”) (“find the ancestor”) via WordNet; 4. evaluate human-created summaries of events taken from multiple sources where key actors found in the sources may have been left out in the summaries (“find the missing character”) via Stanford CoreNLP POS and NER annotators; 5. list the documents in an event cluster where names or locations present close similarities (“check the character’s name tag”) using Levenshtein word/edit distance and Stanford CoreNLP NER annotator; 6. list documents categorized into the wrong event cluster (“find the intruder”) via Stanford CoreNLP POS and NER annotators; 7. classify loose documents into most-likely event clusters (“find the character’s home”) via Stanford CoreNLP POS and NER annotators or date matcher; 8. find similarities between documents (“find the plagiarist”) using Lucene. These tools of automatic data checking can be applied to ongoing projects or completed projects to check data reliability. The NLP tools are designed with “a fourth grader” in mind, a user with no computer science background. Some five thousand newspaper articles from a project on racial violence (Georgia 1875–1935) are used to show how the tools work. But the tools have much wider applicability to a variety of problems of interest to both qualitative and quantitative scholars who deal with text as data.

On PEA see Koopmans and Rucht (2002) and Hutter (2014); on PEA and its more rigorous methodological counterpart rooted in a linguistic theory of narrative and rhetoric, Quantitative Narrative Analysis (QNA), see Franzosi (2010).

See, for instance, Franzosi’s PC-ACE (Program for Computer-Assisted Coding of Events) at www.pc-ace.com (Franzosi 2010).

For recent surveys, see Evans and Aceves (2016), Edelmann et al. (2020).

The GitHub site will automatically install not only all the NLP Suite scripts but also Python and Anaconda required to run the scripts. It also provides extensive help on how to download and install a handful of external software required by some of the algorithms (e.g., Stanford CoreNLP, WordNet). The goal is to make it as easy as possible for non-technical users to take advantage of the tools with minimal investment.

We rely on the Python package openpyxl and ad hoc functions.

The newspaper collections found in Chronicling America of the Library of Congress ( http://chroniclingamerica.loc.gov/newspapers/ ), the Digital Library of Georgia ( http://dlg.galileo.usg.edu/MediaTypes/Newspapers.html?Welcome ), The Atlanta Constitution, Proquest, Readex.

Multiple cross-references are also possible, whereby a document deals with several different events.

Contrary to some protest event projects based on a single newspaper source (e.g., The New York Times in the “Dynamics of Collective Action, 1960–1995” project that involved several social scientists, notably, Doug McAdam, John McCarthy, Susan Olzak, Sarah Soule, and led to dozens of influential publications; see for all McAdam and Su 2002), the Georgia lynching project is based on multiple newspaper sources for each event.

Franzosi reports 1,600 distinct entries for subjects and objects and 7,000 for verbs for one of his projects (Franzosi 2010: 93); similar figures are reported by Ericsson and Simon (1996: 265–266) and Tilly (1995: 414–415).

The most up-to-date numbers of terms are given in https://wordnet.princeton.edu/documentation/wnstats7wn .

A common critique of WordNet is that it is better suited to concrete concepts than to abstract ones. It is much easier to create hyponym/hypernym relationships between “conifer” as a type of “tree”, a “tree” as a type of “plant”, and a “plant” as a type of “organism”. It is not so easy to classify emotions like “fear” or “happiness” into hyponym/hypernym relationships.

https://projects.csail.mit.edu/jwi/

The WordNet database comprises both single words and combinations of two or more words that typically come together with a specific meaning (collocations, e.g., coming out, shut down, thumbs up, stand in line, customs duty). Over 80% of terms in the WordNet database are collocations, at least at the time of Miller et al.’s Introduction to WordNet manual (1993, p. 2). For the English language (but WordNet is available for some 200 languages) the database contains a very large set of terms. The most up-to-date numbers of terms are given in https://wordnet.princeton.edu/documentation/wnstats7wn .

Data aggregation is often referred to as “data reduction” in the social sciences and as “linguistic categorization” in linguistics (on linguistic categorization, see Taylor 2004; on verb classification, Levin 1993; see also Franzosi 2010: 61).

On the way up through the hierarchy, the script relies on two WordNet concepts. A hypernym is the generic term used to designate a whole class of specific instances: Y is a hypernym of X if X is a (kind of) Y. A holonym is the name of the whole of which the meronym names a part: Y is a holonym of X if X is a part of Y.

Collocations are sets of two or more words that usually occur together to form a complete meaning, e.g., “coming out,” “sunny side up”. Over 80% of terms in the WordNet database are collocations, at least at the time of Miller et al.’s Introduction to WordNet manual (1993, p. 2). For the English language (but WordNet is available for some 200 languages) the database contains a very large set of terms. The most up-to-date numbers of terms in each category are given in https://wordnet.princeton.edu/documentation/wnstats7wn

The 25 top noun synsets are: act, animal, artifact, attribute, body, cognition, communication, event, feeling, food, group, location, motive, object, person, phenomenon, plant, possession, process, quantity, relation, shape, state, substance, time.

The 15 top verb synsets are: body, change, cognition, communication, competition, consumption, contact, creation, emotion, motion, perception, possession, social, stative, weather.

Unfortunately, there is no easy way to aggregate at levels lower than the top synsets. WordNet is a linked graph where each node is a synset and synsets are interlinked by means of conceptual-semantic and lexical relations. In other words, it is not a simple tree structure: there is no way to tell at which level a synset is located. For example, the synset “anger” can be traced from the top-level synset “feeling” along the path: feeling → emotion → anger. But it can also be traced from the top-level synset “state” along the path: state → condition → physiological condition → arousal → emotional arousal → anger. In the first case, “anger” is at level 3 (assuming “feeling” and the other top synsets are level 1). In the second case, “anger” is at level 6. Programmatically, if one gives users more freedom to control the level of aggregation, it is hard to build a user-friendly communication protocol. If the user wants to aggregate up to level 3 (two levels below the top synset), then should “anger” be considered a level 3 synset? Does the user want “anger” to be considered a level 3 synset? Since there is no clear definition of how far away a synset is from the root (top synsets), our algorithm aggregates all the way up to the root.
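The level ambiguity described in this note can be sketched with a toy graph. The dictionary below is a hand-built, hypothetical stand-in that encodes only the two paths quoted above; it is not the WordNet database, and the function name is illustrative rather than part of the NLP Suite.

```python
# Toy hypernym graph (child -> list of parents). This is NOT WordNet itself;
# it only encodes the two "anger" paths quoted in the note above.
HYPERNYMS = {
    "anger": ["emotion", "emotional arousal"],
    "emotion": ["feeling"],
    "emotional arousal": ["arousal"],
    "arousal": ["physiological condition"],
    "physiological condition": ["condition"],
    "condition": ["state"],
    "feeling": [],  # top synset
    "state": [],    # top synset
}


def paths_to_roots(node):
    """Return every path from a top synset down to `node`."""
    parents = HYPERNYMS[node]
    if not parents:
        return [[node]]
    return [path + [node]
            for parent in parents
            for path in paths_to_roots(parent)]


paths = paths_to_roots("anger")
levels = sorted(len(path) for path in paths)  # a top synset counts as level 1
roots = sorted(path[0] for path in paths)

for path in paths:
    print(" -> ".join(path), "| level", len(path))
```

Because the same node is reached at two different depths, a request such as "aggregate up to level 3" has no single answer, whereas aggregating all the way up to the roots is always well defined.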

Suppose that you wish to aggregate the verbs in your corpus under the label “violence.” WordNet top synsets for verbs do not include “violence” as a class. Verbs of violence may be listed under body, contact, social. You could use the Zoom IN/DOWN widget of Figure 24 to get a list of verbs in these top synsets, then manually go through the list to select only the verbs of violence of interest. That would mean manually going through the list of 956 verbs in the body class (e.g., to find there the verb “attack,” among others), the 2515 verbs of contact (e.g., to find there the verb “wrestle”), and the 1688 verbs of social (e.g., to find there the verb “abuse”). In total, 5159 distinct verbs. A restricted domain, for example newspaper articles on lynching, may have many fewer distinct verbs, indeed 2027, extracted using the lemma of the POS annotator for all the VB* tags. Whether using the WordNet dictionary (a better solution if the list of verbs of violence has to be used across different corpora) or the POS distinct verb tags, the dictionary list can then be used to annotate the documents in the corpus via the NLP Suite dictionary annotator GUI.

Current computational technology makes available a different approach to creating summaries: an automatic approach where summaries are generated automatically by a computer algorithm, rather than a human (Gambhir and Gupta 2017; Lloret and Palomar 2012; Nenkova and McKeown 2012).

We use the word “compilation”, rather than “summary”, since, by and large, we maintained the original newspaper language (e.g., the word “negro”, rather than “African American”) and original story line, however contrived the story may have appeared to be.

https://stanfordnlp.github.io/CoreNLP/ ; Manning et al. (2014).

More specifically, for locations, the NER tags used are: City, State_or_Province, Country. Several other NER values are also recognized and tagged (e.g., Numbers, Percentages, Money, Religion), but they are irrelevant in this context.

The column “List of Documents for Type of Error” may be split into several columns depending upon the number of documents found in error.

The algorithm can process all or selected NER values, comparing the associated word values either within a single event subdirectory or across all subdirectories (or all the files listed in a directory, for that matter).
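The abstract names Levenshtein edit distance as the measure behind this name-tag check. A minimal sketch of that kind of comparison follows; the threshold of 2, the function names, and the sample names are all hypothetical choices for illustration, not values taken from the NLP Suite.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def near_matches(values, max_distance=2):
    """List pairs of distinct values that differ by at most `max_distance` edits."""
    pairs = []
    for i, first in enumerate(values):
        for second in values[i + 1:]:
            distance = levenshtein(first, second)
            if 0 < distance <= max_distance:
                pairs.append((first, second, distance))
    return pairs


# Hypothetical person names, as might be extracted by an NER annotator.
names = ["Smith", "Smyth", "Jones"]
print(near_matches(names))
```

Pairs flagged this way are only candidates for review: a small edit distance may signal a misspelling of the same name or two genuinely different people.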

We calculated the relativity index using cosine similarity (Singhal 2001). We take the two lists of NN, NNS, Location, Date, Person, and Organization values, one from document j (L1) and one from all other j-1 documents (L2), and compute the cosine similarity between them. We construct a vector from each list by mapping the word count onto each unique word; n is the total count of unique words across the two lists. For instance, L1 is {Alice: 2, doctor: 3, hospital: 1}, and L2 is {Bob: 1, hospital: 2}. If we fix the order of all words as {Alice, doctor, hospital, Bob}, then the first vector (V1) is (2, 3, 1, 0), the second vector (V2) is (0, 0, 2, 1), and the length n of each vector is 4. The relativity index is the dot product of the two vectors divided by the product of their Euclidean norms. Documents with an index of relativity significantly lower than the rest of the cluster are signalled as unlikely to belong to the cluster.

\(\text{relativity index} = \frac{\sum_{i=1}^{n} V1_{i} \, V2_{i}}{\sqrt{\sum_{i=1}^{n} V1_{i}^{2}} \, \sqrt{\sum_{i=1}^{n} V2_{i}^{2}}}\)

The relativity index ranges from 0 to 1, where 0 means two documents are totally different, and 1 means two documents have exactly the same list of NN, NNS, Location, Date, Person, and Organization.
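The Alice/doctor/hospital example above can be worked through in a few lines. This is a minimal sketch of the cosine-similarity formula only; the function name and the choice to return 0.0 for an empty list are assumptions, not details of the authors' script.

```python
import math


def relativity_index(counts_1, counts_2):
    """Cosine similarity between two word-count dictionaries."""
    vocabulary = sorted(set(counts_1) | set(counts_2))  # fixed word order
    v1 = [counts_1.get(word, 0) for word in vocabulary]
    v2 = [counts_2.get(word, 0) for word in vocabulary]
    dot = sum(x * y for x, y in zip(v1, v2))
    norm_1 = math.sqrt(sum(x * x for x in v1))
    norm_2 = math.sqrt(sum(y * y for y in v2))
    if norm_1 == 0 or norm_2 == 0:
        return 0.0  # assumption: an empty list shares nothing with any other
    return dot / (norm_1 * norm_2)


L1 = {"Alice": 2, "doctor": 3, "hospital": 1}
L2 = {"Bob": 1, "hospital": 2}
index = relativity_index(L1, L2)
print(round(index, 3))
```

For these two lists the dot product is 2 (only "hospital" is shared), the squared norms are 14 and 5, and the index is 2 / sqrt(70), roughly 0.239. The word order chosen for the vectors does not affect the result.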

The bar chart displays the distribution of most frequent threshold index values as intervals, with most records in the 0.25 ~ 0.29 interval.

It should be noted that the use of the words plagiarism and plagiarist in this context should be taken with a grain of salt. First, the data do not tell us anything about who copied whom, but only that the two different newspapers shared content, wholly or in part; furthermore, the shared content may well have come from an unacknowledged wire service (on the development and spread of news wire services in the United States during the second half of the nineteenth century, see Brooker-Gross 1981; on computational tools for plagiarism and authorship attribution, see, for instance, Stein et al. 2011).

http://lucene.apache.org/core/downloads.html . For a summary of approaches to document similarities, see Forsyth and Sharoff (2014).

Other approaches are also available. After all, determining document similarity has been a major research area due to its wide application in information retrieval, document clustering, machine translation, etc. Existing approaches to determine document similarity can be grouped into two categories: knowledge-based similarity and content-based similarity (Benedetti et al., 2019).

Knowledge-based similarity approaches extract information from other sources to supplement the corpus, so as to draw from more document features to analyze. For example, Explicit Semantic Analysis (ESA) (Gabrilovich and Markovitch 2007) represents documents as high-dimensional vectors based on the features extracted from both original articles and Wikipedia articles. Then, similarity of documents is calculated using a vector space comparison algorithm. Since our main focus in this work is to detect plagiarism among texts in the same corpus, knowledge-based similarity approaches are not very fruitful.

Content-based similarity approaches focus on using only textual information contained in documents. Popular techniques proposed in this field are Vector Space Models (Turney and Pantel 2010) and probabilistic models such as Okapi BM-25 (Robertson and Zaragoza 2009). These methods all transform documents into some form of representation, and then perform either a vector space comparison or a query search match on the constructed representations.

document_duplicates.txt.

Users can specify different spans of temporal aggregation (e.g., year, quarter/year, month/year).

In this specific application, documents are newspapers where document name refers to the name of the paper (e.g., The New York Times) and document instance refers to a specific newspaper article (e.g., The New York Times_12-11-1912_1, referring to page 1 of The New York Times of December 11, 1912). But the document name could refer to an ethnographic interview with document instance referring to an interviewer’s ID (by name or number), an interview’s location, time, or interviewee (by name or ID number).
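A document-instance label of this shape can be split into its parts with a regular expression. The pattern below is inferred solely from the single example in this note (name, then month-day-year, then page number, separated by underscores); the actual filename rules checked by the NLP Suite may differ, and the function name is hypothetical.

```python
import re
from datetime import date

# Assumed layout, inferred from the one example above: NAME_MM-DD-YYYY_PAGE
INSTANCE_PATTERN = re.compile(
    r"^(?P<name>.+)_(?P<month>\d{1,2})-(?P<day>\d{1,2})-(?P<year>\d{4})_(?P<page>\d+)$"
)


def parse_instance(label):
    """Return (document name, issue date, page), or None if malformed."""
    match = INSTANCE_PATTERN.match(label)
    if match is None:
        return None
    try:
        issued = date(int(match["year"]), int(match["month"]), int(match["day"]))
    except ValueError:  # e.g. month 13 or day 40
        return None
    return match["name"], issued, int(match["page"])


print(parse_instance("The New York Times_12-11-1912_1"))
print(parse_instance("The New York Times_13-40-1912_1"))
```

Validating the date with `datetime.date` rather than with the regular expression alone catches labels that look well-formed but carry an impossible date.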

The numbers in each row of the table add up to approximately the total number of newspaper articles in the corpus. This number is not exact due to the way the Lucene function “find top similar documents” computes similar documents, with discrepancies numbering in the teens.

On the specific topic of lynching, see, for instance, the quantitative work by Beck and Tolnay (1990) or Franzosi et al. (2012) and the more qualitative work by Brundage (1993).

Aggarwal, C.C., Zhai, C.: A survey of text classification algorithms. In: Aggarwal, C.C., Zhai, C. (eds.) Mining Text Data, pp. 163–222. Springer, Boston (2012)

Beck, E.M., Tolnay, S.: ‘The killing fields of the deep south: the market for cotton and the lynching of blacks, 1882–1930.’ Am. Sociol. Rev. 55 , 526–539 (1990)

Beck, E.M., Tolnay, S.E.: Confirmed inventory of southern lynch victims, 1882–1930. Data file available from authors (2004).

Benedetti, F., Beneventano, D., Bergamaschi, S., Simonini, G.: Computing inter document similarity with Context Semantic Analysis. Inf. Syst. 80 , 136–147 (2019). https://doi.org/10.1016/j.is.2018.02.009

Białecki, A., Muir, R., & Ingersoll, G.: "Apache Lucene 4." SIGIR 2012 Workshop on Open Source Information Retrieval . August 16, 2012, Portland, OR, USA (2012).

Brundage, F.: Lynching in the New South: Georgia and Virginia, 1880–1930. University of Illinois Press, Urbana (1993)

Johansson, J., Borg, M., Runeson, P., Mäntylä, M.V.: A replicated study on duplicate detection: using Apache Lucene to search among android defects. In: Proceedings of the 8th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement, 8. ACM (2014)

Brooker-Gross, S.R.: News wire services in the nineteenth-century United States. J. Hist. Geogr. 7 (2), 167–179 (1981)

Cooper, J.W., Coden, A.R., Brown, E.W.: Detecting similar documents using salient terms. In: Proceedings of the Eleventh International Conference on Information and Knowledge Management, 245–251 (2002)

Edelmann, A., Wolff, T., Montagne, D., Bail, C.A.: Computational social science and sociology. Ann. Rev. Sociol. 46 , 61–81 (2020)

Ericsson, K.A., Simon, H.A.: Protocol Analysis: Verbal Reports as Data, 2nd edn. MIT Press, Cambridge, MA (1996)

Evans, J.A., Aceves, P.: Machine translation: mining text for social theory. Ann. Rev. Sociol. 42 , 21–50 (2016)

Fellbaum, C. (ed.): WordNet. An Electronic Lexical Database. MIT Press, Cambridge, MA (1998)

Forsyth, R.S., Sharoff, S.: Document dissimilarity within and across languages: a benchmarking study. Liter. Linguistic Comput 29 (1), 6–22 (2014)

Franzosi, R.: Quantitative Narrative Analysis, vol. 162. Sage, Thousand Oaks, CA (2010)

Franzosi, R., De Fazio, G., Vicari, S.: Ways of measuring agency: an application of quantitative narrative analysis to lynchings in Georgia (1875–1930). Sociol. Methodol. 42 (1), 1–42 (2012)

Gabrilovich, E., Markovitch, S.: Computing semantic relatedness using Wikipedia-based explicit semantic analysis. IJCAI 7, 1606–1611 (2007)

Gambhir, M., Gupta, V.: Recent automatic text summarization techniques: a survey. Artif. Intell. Rev. 47 , 1–66 (2017)

Grimm, J., Grimm, W.: [1812, 1857]. The original folk and fairy tales of the brothers Grimm: The Complete First Edition. [ Kinder- und Hausmärchen. Children’s and Household Tales ]. Translated and Edited by Jack Zipes. Princeton, NJ: Princeton University Press (2014)

Hutter, S.: Protest event analysis and its offspring. In: Donatella della Porta (ed.) Methodological Practices in Social Movement Research. Oxford: Oxford University Press, pp. 335–367 (2014)

Jacobs, J.: English fairy tales (Collected by Joseph Jacobs, Illustrated by John D. Batten) . London: David Nutt (1890)

Klandermans, B., Staggenborg, S. (eds.): Methods of Social Movement Research. University of Minnesota Press, Minneapolis (2002)

Koopmans, R., Rucht, D.: Protest event analysis. In: Klandermans, Bert, Staggenborg, Suzanne (eds.) Methods of Social Movement Research, pp. 231–59. University of Minnesota Press, Minneapolis (2002)

Kowsari, K., Meimandi, K.J., Heidarysafa, M., Mendu, S., Barnes, L., Brown, D.: Text classification algorithms: a survey. Information 10(4), 150 (2019)

Labov, W.: Language in the Inner City. University of Pennsylvania Press, Philadelphia (1972)

Lansdall-Welfare, T., Sudhahar, S., Thompson, J., Lewis, J., FindMyPast Newspaper Team, Cristianini, N.: Content analysis of 150 years of British periodicals. Proceedings of the National Academy of Sciences (PNAS), published online January 9, 2017, E457–E465 (2017)

Lansdall-Welfare, T., Cristianini, N.: History playground: a tool for discovering temporal trends in massive textual corpora. Digit. Scholar. Human. 35(2), 328–341 (2020)

Levenshtein, V.I.: Binary codes capable of correcting deletions, insertions, and reversals. Doklady Akademii Nauk SSSR 163(4), 845–848 (1965) (Russian). English translation in Soviet Physics Doklady 10(8), 707–710 (1966). (Doklady is Russian for "Report"; sometimes transliterated in English as Doclady or Dokladi.)

Levin, B.: English Verb Classes and Alternations. The University of Chicago Press, Chicago (1993)

Lloret, E., Palomar, M.: Text summarisation in progress: a literature review. Artif. Intell. Rev. 37, 1–41 (2012)

MacEachren, A.M., Roth, R.E., O'Brien, J., Li, B., Swingley, D., Gahegan, M.: Visual semiotics & uncertainty visualization: an empirical study. IEEE Transactions on Visualization and Computer Graphics 18(12) (2012)

Manning, C.D., Surdeanu, M., Bauer, J., Finkel, J., Bethard, S.J., McClosky, D.: The Stanford CoreNLP natural language processing toolkit. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, pp. 55–60 (2014)

McAdam, D., Su, Y.: The war at home: antiwar protests and congressional voting, 1965–1973. Am. Sociol. Rev. 67(5), 696–721 (2002)

McCandless, M., Hatcher, E., Gospodnetic, O.: Lucene in Action, Second Edition: Covers Apache Lucene 3.0. Manning Publications Co, Greenwich, CT (2010)

Miller, G.A.: WordNet: a lexical database for English. Commun. ACM 38(11), 39–41 (1995)

Miller, G.A., Beckwith, R., Fellbaum, C., Gross, D., Miller, K.J.: Introduction to WordNet: an on-line lexical database. Int. J. Lexicogr. 3(4), 235–244 (1990)

Nenkova, A., McKeown, K.: A survey of text summarization techniques. In: Aggarwal, C.C., Zhai, C.X. (eds.) Mining Text Data, pp. 43–76. Springer, Boston (2012)

Murchú, T.Ó., Lawless, S.: The problem of time and space: the difficulties in visualising spatiotemporal change in historical data. Proc. Dig. Human. 7(8), 12 (2014)

Panitch, L.: Corporatism: a growth industry reaches the monopoly stage. Can. J. Polit. Sci. 21(4), 813–818 (1988)

Robertson, S., Zaragoza, H.: The probabilistic relevance framework BM25 and beyond. Found. Trends® Inf. Retr. 3(4), 333–389 (2009)

Singhal, A.: Modern information retrieval: a brief overview. Bull. IEEE Comput. Soc. Tech. Comm. Data Eng. 24(4), 35–43 (2001)

Stein, B., Lipka, N., Prettenhofer, P.: Plagiarism and authorship analysis. Lang. Resour. Eval. 45(1), 63–82 (2011)

Taylor, J.R.: Linguistic Categorization. Oxford University Press, Oxford (2004)

Tilly, C.: Popular Contention in Great Britain, 1758–1834. Harvard University Press, Cambridge, MA (1995)

Turney, P.D., Pantel, P.: From frequency to meaning: vector space models of semantics. J. Artif. Int. Res. 37(1), 141–188 (2010)

Zhang, H., Pan, J.: CASM: a deep-learning approach for identifying collective action events with text and image data from social media. Sociol. Methodol. 49(1), 1–57 (2019)

Zhang, Y., Li, J.L.: Research and improvement of search engine based on Lucene. Int. Conf. Intell. Human-Mach. Syst. Cybern. 2, 270–273 (2009)

Author information

Authors and affiliations.

Department of Sociology/Linguistics Program, Emory University, Atlanta, GA, USA

Roberto Franzosi

Carnegie Mellon University, Pittsburgh, PA, USA

Wenqin Dong & Yilin Dong

Corresponding author

Correspondence to Roberto Franzosi.

Ethics declarations

Conflict of interest.

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

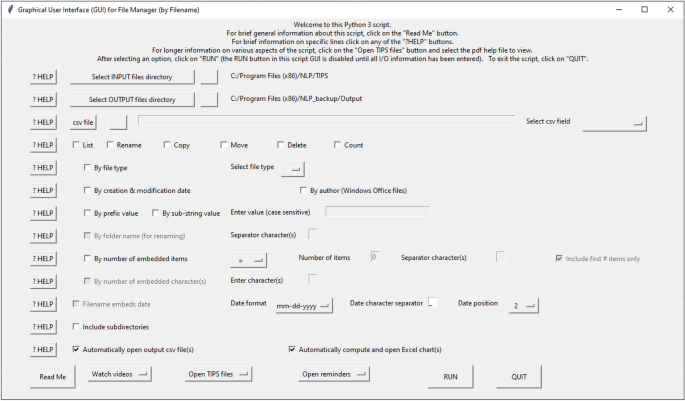

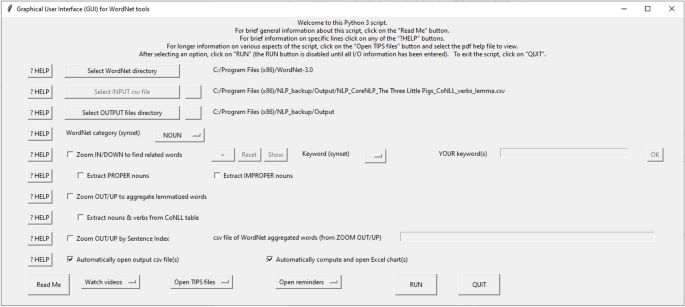

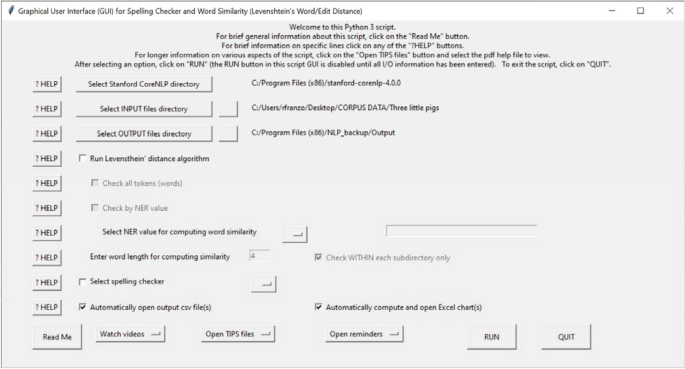

See Figs. 23, 24 and 25

Screenshot of the Graphical User Interface (GUI) for the filename checker

Graphical User Interface (GUI) for WordNet options

Graphical User Interface (GUI) for Word Similarities

About this article

Franzosi, R., Dong, W. & Dong, Y. Qualitative and quantitative research in the humanities and social sciences: how natural language processing (NLP) can help. Qual Quant 56, 2751–2781 (2022). https://doi.org/10.1007/s11135-021-01235-2

Accepted: 02 September 2021

Published: 23 September 2021

Issue Date: August 2022

DOI: https://doi.org/10.1007/s11135-021-01235-2

- Words as data

- Research in humanities and social sciences

- Social movements

- Natural language processing

- Computational linguistics

- Open access

- Published: 24 May 2024

Beyond probability-impact matrices in project risk management: A quantitative methodology for risk prioritisation

- F. Acebes (ORCID: orcid.org/0000-0002-4525-2610) 1

- J. M. González-Varona 2

- A. López-Paredes 2

- J. Pajares 1

Humanities and Social Sciences Communications volume 11, Article number: 670 (2024)

- Business and management

Project managers who deal with risk management often face the difficult task of determining the relative importance of the various sources of risk that affect a project. This prioritisation is crucial for directing management efforts towards higher project profitability. Risk matrices are widely recognised by academics and practitioners in various sectors as tools to assess and rank risks according to their likelihood of occurrence and impact on project objectives. However, the existing literature highlights several limitations of the risk matrix. In response to these weaknesses, this paper proposes a novel approach for prioritising project risks. Monte Carlo Simulation (MCS) is used to perform a quantitative prioritisation of risks with the simulation software MCSimulRisk. Together with the definition of project activities, the simulation includes the identified risks by modelling their probability and impact on cost and duration. This methodology provides a quantitative assessment of the impact of each risk, measured by the effect that it would have on project duration and total cost. Critical risks can thus be differentiated according to their impact on project duration, and this set may differ when cost is taken as the priority objective. The proposal is useful for project managers because they will, on the one hand, know the absolute impact of each risk on their project duration and cost objectives and, on the other hand, be able to distinguish the impact of each risk on the duration objective from its impact on the cost objective.

Introduction

The European Commission (2023) defines a project as a temporary organizational structure designed to produce a unique product or service according to specified constraints, such as time, cost, and quality. As projects are inherently complex, they involve risks that must be effectively managed (Naderpour et al. 2019). However, achieving project objectives can be challenging due to unexpected developments, which often disrupt plans and budgets during project execution and lead to significant additional costs. The Standish Group (2022) notes that managing project uncertainty is of paramount importance, which renders risk management an indispensable discipline. Its primary goal is to identify a project’s risk profile and communicate it, enabling informed decision making that mitigates the impact of risks on project objectives, including budget and schedule adherence (Creemers et al. 2014).

Several methodologies and standards include a specific project risk management process (Axelos, 2023; European Commission, 2023; Project Management Institute, 2017; International Project Management Association, 2015; Simon et al. 1997), and there are even specific standards and guidelines for it (Project Management Institute, 2019, 2009; International Organization for Standardization, 2018). Although they name the phases differently, they all integrate risk identification, risk assessment, planning a response to the risk, and implementing this response. All of them also include a risk monitoring and control process. The “Risk Assessment” process comprises, in turn, qualitative and quantitative risk assessment.

A prevalent issue in managing project risks is identifying the significance of different sources of risk in order to direct future risk management actions and to sustain the project’s cost-effectiveness. For managers facing many simultaneous problems, one of the most challenging tasks is deciding which issues to work on first (Ward, 1999) or, in other words, which risks deserve the most attention to avoid deviations from project objectives.

Given the many sources of risk and the impossibility of comprehensively addressing them, it is natural to prioritise identified risks. This process can be challenging because it is hard to determine in advance which risks are the most significant and how many merit detailed individual monitoring. Any approach that facilitates this prioritisation task, especially a simple one, will be welcomed by those willing to use it (Ward, 1999).

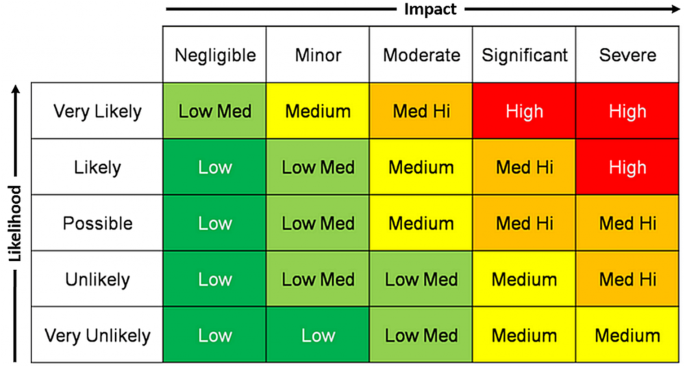

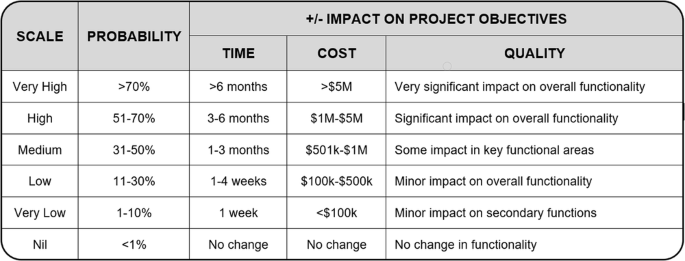

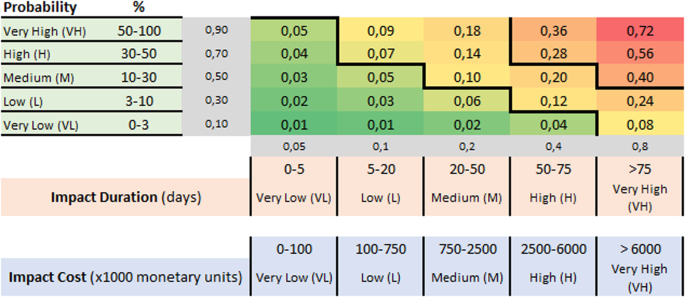

Risk matrices are established, familiar tools for assessing and ranking risks in many fields and industry sectors (Krisper, 2021; Qazi et al. 2021; Qazi and Simsekler, 2021; Monat and Doremus, 2020; Li et al. 2018). They are now so commonplace that they are widely accepted and used without question, advantages and disadvantages alike. Risk matrices use the likelihood and potential impact of risks to inform decision making about prioritising identified risks (Proto et al. 2023). Methods based on the risk matrix give the highest priority to the risks with the highest product of likelihood and impact.
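The product rule described above can be illustrated with a minimal sketch. The risk names and the 1 to 5 ordinal scales are hypothetical and are not taken from the paper; they only show how a matrix-based ranking is computed.

```python
# Hypothetical risks scored on 1-5 ordinal scales: (likelihood, impact).
# Names and values are illustrative assumptions, not data from the paper.
RISK_SCORES = {
    "R1": (4, 2),
    "R2": (2, 4),
    "R3": (5, 5),
    "R4": (1, 3),
}


def matrix_score(likelihood: int, impact: int) -> int:
    """Probability-impact score: the product of the two ordinal ratings."""
    return likelihood * impact


def rank_by_matrix(risks: dict) -> list:
    """Return risk names ordered from highest to lowest matrix score.

    sorted() is stable, so risks with equal scores keep their input order.
    """
    return sorted(risks, key=lambda name: matrix_score(*risks[name]), reverse=True)


if __name__ == "__main__":
    for name in rank_by_matrix(RISK_SCORES):
        print(name, matrix_score(*RISK_SCORES[name]))
```

In this made-up example R1 and R2 receive the same score (4 × 2 = 2 × 4) although one is likely with low impact and the other unlikely with high impact, so the product alone cannot order them.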

However, the probability-impact matrix has severe limitations (Goerlandt and Reniers, 2016; Duijm, 2015; Vatanpour et al. 2015; Ball and Watt, 2013; Levine, 2012; Cox, 2008; Cox et al. 2005). The main criticism levelled at this methodology is its failure to consider the complex interrelations between risks and to use precise estimates of probability and impact levels. As a result, a growing number of academics and practitioners are reluctant to resort to risk matrices (Qazi et al. 2021).

Motivated by the drawbacks of using risk matrices or probability-impact matrices, the following research question arises: Is it possible to find a methodology for project risk prioritisation that overcomes the limitations of the current probability-impact matrix?

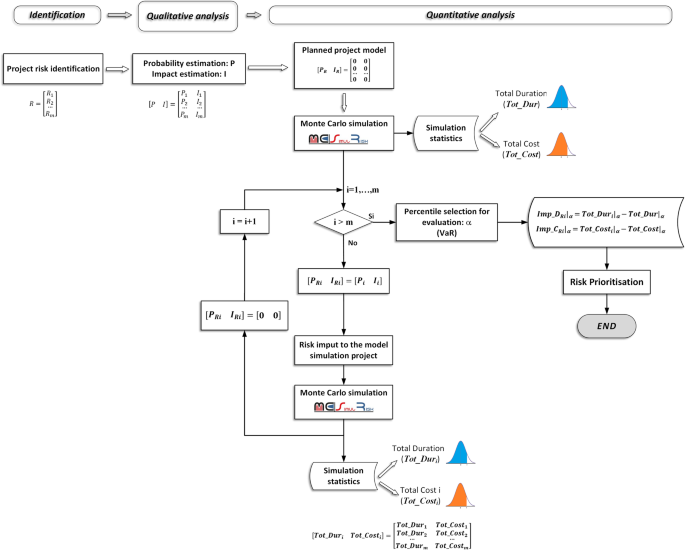

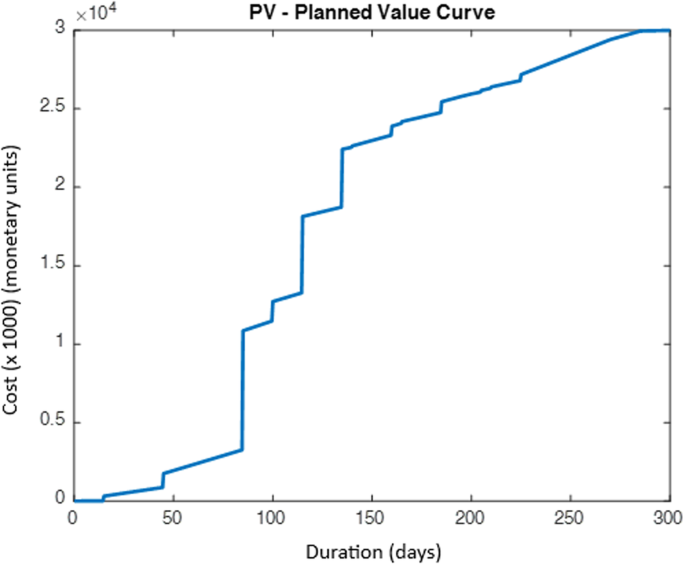

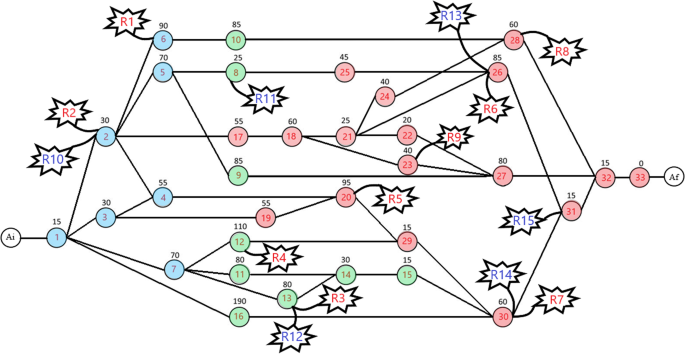

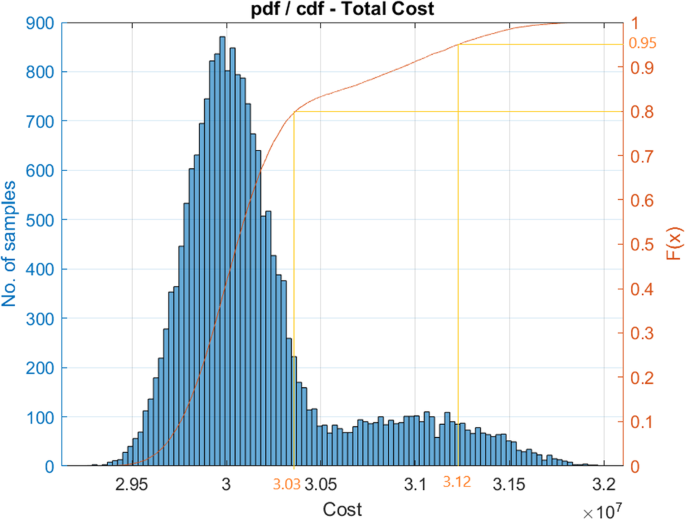

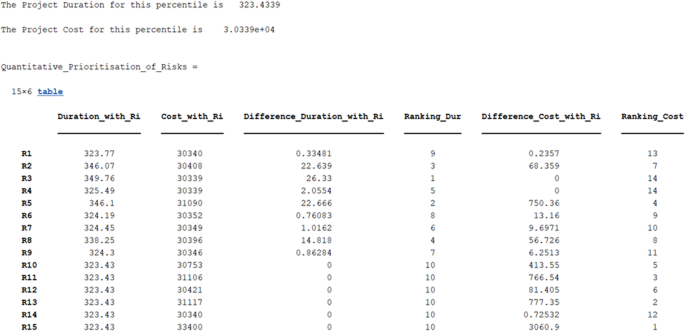

To answer this question, this paper proposes a methodology based on Monte Carlo Simulation that avoids the probability-impact matrix and prioritises project risks by evaluating them quantitatively, assessing the impact of each risk on the project duration and cost objectives. With the help of the ‘MCSimulRisk’ simulation software (Acebes et al. 2024; Acebes et al. 2023), this paper determines the impact of each risk on project duration objectives (quantified in time units) and cost objectives (quantified in monetary units). Once the impact of every risk is known, risks can be prioritised by their absolute (and not relative) importance for project objectives. The methodology yields quantified results for each risk, differentiating between the project duration objective and the cost objective.
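The general idea of simulation-based prioritisation can be sketched in a few lines of Python. This is not MCSimulRisk and does not reproduce the authors' model. The serial activity network, the triangular distributions, the risk names and values, and the use of mean differences as the impact measure are all assumptions made for illustration.

```python
import random
from statistics import mean

# Hypothetical serial project. Durations are triangular (low, mode, high) in days.
ACTIVITIES = [
    {"name": "design", "duration": (8, 10, 14), "cost_per_day": 400.0},
    {"name": "build", "duration": (18, 20, 30), "cost_per_day": 900.0},
    {"name": "test", "duration": (4, 5, 9), "cost_per_day": 500.0},
]

# Hypothetical risks: probability of occurrence, triangular delay (days) and
# triangular direct cost (monetary units). All values are made up.
RISKS = {
    "R1 late permits": {"p": 0.30, "delay": (5, 10, 20), "cost": (0, 500, 1000)},
    "R2 price rise": {"p": 0.50, "delay": (0, 0.5, 1), "cost": (4000, 6000, 9000)},
    "R3 rework": {"p": 0.10, "delay": (2, 4, 6), "cost": (1000, 2000, 3000)},
}


def tri(rng, low, mode, high):
    # random.triangular takes its arguments in the order (low, high, mode).
    return rng.triangular(low, high, mode)


def simulate(active, n=20000, seed=1):
    """Return (mean duration, mean cost) with only the risks in `active` applied."""
    rng = random.Random(seed)
    durations, costs = [], []
    for _ in range(n):
        d = c = 0.0
        for act in ACTIVITIES:
            t = tri(rng, *act["duration"])
            d += t
            c += t * act["cost_per_day"]
        for name, risk in RISKS.items():
            # Draw for every risk, active or not, so that runs sharing a seed
            # use identical random numbers and can be compared pairwise.
            occurs = rng.random() < risk["p"]
            delay = tri(rng, *risk["delay"])
            cost = tri(rng, *risk["cost"])
            if occurs and name in active:
                d += delay  # simplification: delay adds no per-day cost
                c += cost
        durations.append(d)
        costs.append(c)
    return mean(durations), mean(costs)


def prioritise(n=20000, seed=1):
    """Absolute impact of each risk: all risks versus all risks but that one."""
    everything = set(RISKS)
    full_d, full_c = simulate(everything, n, seed)
    impact = {}
    for name in RISKS:
        d, c = simulate(everything - {name}, n, seed)
        impact[name] = (full_d - d, full_c - c)
    by_duration = sorted(impact, key=lambda k: impact[k][0], reverse=True)
    by_cost = sorted(impact, key=lambda k: impact[k][1], reverse=True)
    return impact, by_duration, by_cost


if __name__ == "__main__":
    impact, by_duration, by_cost = prioritise()
    for name, (days, money) in impact.items():
        print(f"{name}: +{days:.2f} days, +{money:.0f} monetary units")
    print("Priority by duration:", by_duration)
    print("Priority by cost:", by_cost)
```

With these made-up inputs the two rankings differ: the permit risk dominates the duration objective and the price risk dominates the cost objective, which is the kind of distinction the paragraph above describes. A percentile of the simulated distribution could be compared in place of the mean; which statistic the authors use is not stated in this section.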