- Skip to main content

- Skip to FDA Search

- Skip to in this section menu

- Skip to footer links

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you're on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

U.S. Food and Drug Administration

- Search

- Menu

- For Patients

- Clinical Trials: What Patients Need to Know

What Are the Different Types of Clinical Research?

Different types of clinical research are used depending on what the researchers are studying. Below are descriptions of some different kinds of clinical research.

Treatment Research generally involves an intervention such as medication, psychotherapy, new devices, or new approaches to surgery or radiation therapy.

Prevention Research looks for better ways to prevent disorders from developing or returning. Different kinds of prevention research may study medicines, vitamins, vaccines, minerals, or lifestyle changes.

Diagnostic Research refers to the practice of looking for better ways to identify a particular disorder or condition.

Screening Research aims to find the best ways to detect certain disorders or health conditions.

Quality of Life Research explores ways to improve comfort and the quality of life for individuals with a chronic illness.

Genetic studies aim to improve the prediction of disorders by identifying and understanding how genes and illnesses may be related. Research in this area may explore ways in which a person’s genes make him or her more or less likely to develop a disorder. This may lead to development of tailor-made treatments based on a patient’s genetic make-up.

Epidemiological studies seek to identify the patterns, causes, and control of disorders in groups of people.

An important note: some clinical research is “outpatient,” meaning that participants do not stay overnight at the hospital. Some is “inpatient,” meaning that participants will need to stay for at least one night in the hospital or research center. Be sure to ask the researchers what their study requires.

Phases of clinical trials: when clinical research is used to evaluate medications and devices Clinical trials are a kind of clinical research designed to evaluate and test new interventions such as psychotherapy or medications. Clinical trials are often conducted in four phases. The trials at each phase have a different purpose and help scientists answer different questions.

Phase I trials Researchers test an experimental drug or treatment in a small group of people for the first time. The researchers evaluate the treatment’s safety, determine a safe dosage range, and identify side effects.

Phase II trials The experimental drug or treatment is given to a larger group of people to see if it is effective and to further evaluate its safety.

Phase III trials The experimental study drug or treatment is given to large groups of people. Researchers confirm its effectiveness, monitor side effects, compare it to commonly used treatments, and collect information that will allow the experimental drug or treatment to be used safely.

Phase IV trials Post-marketing studies, which are conducted after a treatment is approved for use by the FDA, provide additional information including the treatment or drug’s risks, benefits, and best use.

Examples of other kinds of clinical research Many people believe that all clinical research involves testing of new medications or devices. This is not true, however. Some studies do not involve testing medications and a person’s regular medications may not need to be changed. Healthy volunteers are also needed so that researchers can compare their results to results of people with the illness being studied. Some examples of other kinds of research include the following:

A long-term study that involves psychological tests or brain scans

A genetic study that involves blood tests but no changes in medication

A study of family history that involves talking to family members to learn about people’s medical needs and history.

- Clinical Trials

About Clinical Studies

Research: it's all about patients.

Mayo's mission is about the patient, the patient comes first. So the mission and research here, is to advance how we can best help the patient, how to make sure the patient comes first in care. So in many ways, it's a cycle. It can start with as simple as an idea, worked on in a laboratory, brought to the patient bedside, and if everything goes right, and let's say it's helpful or beneficial, then brought on as a standard approach. And I think that is one of the unique characteristics of Mayo's approach to research, that patient-centeredness. That really helps to put it in its own spotlight.

At Mayo Clinic, the needs of the patient come first. Part of this commitment involves conducting medical research with the goal of helping patients live longer, healthier lives.

Through clinical studies, which involve people who volunteer to participate in them, researchers can better understand how to diagnose, treat and prevent diseases or conditions.

Types of clinical studies

- Observational study. A type of study in which people are observed or certain outcomes are measured. No attempt is made by the researcher to affect the outcome — for example, no treatment is given by the researcher.

- Clinical trial (interventional study). During clinical trials, researchers learn if a new test or treatment works and is safe. Treatments studied in clinical trials might be new drugs or new combinations of drugs, new surgical procedures or devices, or new ways to use existing treatments. Find out more about the five phases of non-cancer clinical trials on ClinicalTrials.gov or the National Cancer Institute phases of cancer trials .

- Medical records research. Medical records research involves the use of information collected from medical records. By studying the medical records of large groups of people over long periods of time, researchers can see how diseases progress and which treatments and surgeries work best. Find out more about Minnesota research authorization .

Clinical studies may differ from standard medical care

A health care provider diagnoses and treats existing illnesses or conditions based on current clinical practice guidelines and available, approved treatments.

But researchers are constantly looking for new and better ways to prevent and treat disease. In their laboratories, they explore ideas and test hypotheses through discovery science. Some of these ideas move into formal clinical trials.

During clinical studies, researchers formally and scientifically gather new knowledge and possibly translate these findings into improved patient care.

Before clinical trials begin

This video demonstrates how discovery science works, what happens in the research lab before clinical studies begin, and how a discovery is transformed into a potential therapy ready to be tested in trials with human participants:

How clinical trials work

Trace the clinical trial journey from a discovery research idea to a viable translatable treatment for patients:

See a glossary of terms related to clinical studies, clinical trials and medical research on ClinicalTrials.gov.

Watch a video about clinical studies to help you prepare to participate.

Let's Talk About Clinical Research

Narrator: This presentation is a brief introduction to the terms, purposes, benefits and risks of clinical research.

If you have questions about the content of this program, talk with your health care provider.

What is clinical research?

Clinical research is a process to find new and better ways to understand, detect, control and treat health conditions. The scientific method is used to find answers to difficult health-related questions.

Ways to participate

There are many ways to participate in clinical research at Mayo Clinic. Three common ways are by volunteering to be in a study, by giving permission to have your medical record reviewed for research purposes, and by allowing your blood or tissue samples to be studied.

Types of clinical research

There are many types of clinical research:

- Prevention studies look at ways to stop diseases from occurring or from recurring after successful treatment.

- Screening studies compare detection methods for common conditions.

- Diagnostic studies test methods for early identification of disease in those with symptoms.

- Treatment studies test new combinations of drugs and new approaches to surgery, radiation therapy and complementary medicine.

- The role of inheritance or genetic studies may be independent or part of other research.

- Quality of life studies explore ways to manage symptoms of chronic illness or side effects of treatment.

- Medical records studies review information from large groups of people.

Clinical research volunteers

Participants in clinical research volunteer to take part. Participants may be healthy, at high risk for developing a disease, or already diagnosed with a disease or illness. When a study is offered, individuals may choose whether or not to participate. If they choose to participate, they may leave the study at any time.

Research terms

You will hear many terms describing clinical research. These include research study, experiment, medical research and clinical trial.

Clinical trial

A clinical trial is research to answer specific questions about new therapies or new ways of using known treatments. Clinical trials take place in phases. For a treatment to become standard, it usually goes through two or three clinical trial phases. The early phases look at treatment safety. Later phases continue to look at safety and also determine the effectiveness of the treatment.

Phase I clinical trial

A small number of people participate in a phase I clinical trial. The goals are to determine safe dosages and methods of treatment delivery. This may be the first time the drug or intervention is used with people.

Phase II clinical trial

Phase II clinical trials have more participants. The goals are to evaluate the effectiveness of the treatment and to monitor side effects. Side effects are monitored in all the phases, but this is a special focus of phase II.

Phase III clinical trial

Phase III clinical trials have the largest number of participants and may take place in multiple health care centers. The goal of a phase III clinical trial is to compare the new treatment to the standard treatment. Sometimes the standard treatment is no treatment.

Phase IV clinical trial

A phase IV clinical trial may be conducted after U.S. Food and Drug Administration approval. The goal is to further assess the long-term safety and effectiveness of a therapy. Smaller numbers of participants may be enrolled if the disease is rare. Larger numbers will be enrolled for common diseases, such as diabetes or heart disease.

Clinical research sponsors

Mayo Clinic funds clinical research at facilities in Rochester, Minnesota; Jacksonville, Florida; and Arizona, and in the Mayo Clinic Health System. Clinical research is conducted in partnership with other medical centers throughout the world. Other sponsors of research at Mayo Clinic include the National Institutes of Health, device or pharmaceutical companies, foundations and organizations.

Clinical research at Mayo Clinic

Dr. Hugh Smith, former chair of Mayo Clinic Board of Governors, stated, "Our commitment to research is based on our knowledge that medicine must be constantly moving forward, that we need to continue our efforts to better understand disease and bring the latest medical knowledge to our practice and to our patients."

This fits with the term "translational research," meaning what is learned in the laboratory goes quickly to the patient's bedside and what is learned at the bedside is taken back to the laboratory.

Ethics and safety of clinical research

All clinical research conducted at Mayo Clinic is reviewed and approved by Mayo's Institutional Review Board. Multiple specialized committees and colleagues may also provide review of the research. Federal rules help ensure that clinical research is conducted in a safe and ethical manner.

Institutional review board

An institutional review board (IRB) reviews all clinical research proposals. The goal is to protect the welfare and safety of human subjects. The IRB continues its review as research is conducted.

Consent process

Participants sign a consent form to ensure that they understand key facts about a study. Such facts include that participation is voluntary and they may withdraw at any time. The consent form is an informational document, not a contract.

Study activities

Staff from the study team describe the research activities during the consent process. The research may include X-rays, blood tests, counseling or medications.

Study design

During the consent process, you may hear different phrases related to study design. Randomized means you will be assigned to a group by chance, much like a flip of a coin. In a single-blinded study, participants do not know which treatment they are receiving. In a double-blinded study, neither the participant nor the research team knows which treatment is being administered.

Some studies use an inactive substance called a placebo.

Multisite studies allow individuals from many different locations or health care centers to participate.

Remuneration

If the consent form states remuneration is provided, you will be paid for your time and participation in the study.

Some studies may involve additional cost. To address costs in a study, carefully review the consent form and discuss questions with the research team and your insurance company. Medicare may cover routine care costs that are part of clinical trials. Medicaid programs in some states may also provide routine care cost coverage, as well.

When considering participation in a research study, carefully look at the benefits and risks. Benefits may include earlier access to new clinical approaches and regular attention from a research team. Research participation often helps others in the future.

Risks/inconveniences

Risks may include side effects. The research treatment may be no better than the standard treatment. More visits, if required in the study, may be inconvenient.

Weigh your risks and benefits

Consider your situation as you weigh the risks and benefits of participation prior to enrolling and during the study. You may stop participation in the study at any time.

Ask questions

Stay informed while participating in research:

- Write down questions you want answered.

- If you do not understand, say so.

- If you have concerns, speak up.

Website resources are available. The first website lists clinical research at Mayo Clinic. The second website, provided by the National Institutes of Health, lists studies occurring in the United States and throughout the world.

Additional information about clinical research may be found at the Mayo Clinic Barbara Woodward Lips Patient Education Center and the Stephen and Barbara Slaggie Family Cancer Education Center.

Clinical studies questions

- Phone: 800-664-4542 (toll-free)

- Contact form

Cancer-related clinical studies questions

- Phone: 855-776-0015 (toll-free)

International patient clinical studies questions

- Phone: 507-284-8884

- Email: [email protected]

Clinical Studies in Depth

Learning all you can about clinical studies helps you prepare to participate.

- Institutional Review Board

The Institutional Review Board protects the rights, privacy, and welfare of participants in research programs conducted by Mayo Clinic and its associated faculty, professional staff, and students.

More about research at Mayo Clinic

- Research Faculty

- Laboratories

- Core Facilities

- Centers & Programs

- Departments & Divisions

- Postdoctoral Fellowships

- Training Grant Programs

- Publications

Mayo Clinic Footer

- Request Appointment

- About Mayo Clinic

- About This Site

Legal Conditions and Terms

- Terms and Conditions

- Privacy Policy

- Notice of Privacy Practices

- Notice of Nondiscrimination

- Manage Cookies

Advertising

Mayo Clinic is a nonprofit organization and proceeds from Web advertising help support our mission. Mayo Clinic does not endorse any of the third party products and services advertised.

- Advertising and sponsorship policy

- Advertising and sponsorship opportunities

Reprint Permissions

A single copy of these materials may be reprinted for noncommercial personal use only. "Mayo," "Mayo Clinic," "MayoClinic.org," "Mayo Clinic Healthy Living," and the triple-shield Mayo Clinic logo are trademarks of Mayo Foundation for Medical Education and Research.

Principles of Research Methodology

A Guide for Clinical Investigators

- © 2012

- Phyllis G. Supino 0 ,

- Jeffrey S. Borer 1

, Cardiovascular Medicine, SUNY Downstate Medical Center, Brooklyn, USA

You can also search for this editor in PubMed Google Scholar

, Cardiovascualr Medicine, SUNY Downstate Medical Center, Brooklyn, USA

- Based on a highly regarded and popular lecture series on research methodology

- Comprehensive guide written by experts in the field

- Emphasizes the essentials and fundamentals of research methodologies

76k Accesses

23 Citations

7 Altmetric

This is a preview of subscription content, log in via an institution to check access.

Access this book

- Available as EPUB and PDF

- Read on any device

- Instant download

- Own it forever

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

- Durable hardcover edition

Tax calculation will be finalised at checkout

Other ways to access

Licence this eBook for your library

Institutional subscriptions

About this book

Principles of Research Methodology: A Guide for Clinical Investigators is the definitive, comprehensive guide to understanding and performing clinical research. Designed for medical students, physicians, basic scientists involved in translational research, and other health professionals, this indispensable reference also addresses the unique challenges and demands of clinical research and offers clear guidance in becoming a more successful member of a medical research team and critical reader of the medical research literature. The book covers the entire research process, beginning with the conception of the research problem to publication of findings. Principles of Research Methodology: A Guide for Clinical Investigators comprehensively and concisely presents concepts in a manner that is relevant and engaging to read. The text combines theory and practical application to familiarize the reader with the logic of research design and hypothesis construction, the importance of research planning, the ethical basis of human subjects research, the basics of writing a clinical research protocol and scientific paper, the logic and techniques of data generation and management, and the fundamentals and implications of various sampling techniques and alternative statistical methodologies. Organized in thirteen easy to read chapters, the text emphasizes the importance of clearly-defined research questions and well-constructed hypothesis (reinforced throughout the various chapters) for informing methods and in guiding data interpretation. Written by prominent medical scientists and methodologists who have extensive personal experience in biomedical investigation and in teaching key aspects of research methodology to medical students, physicians and other health professionals, the authors expertly integrate theory with examples and employ language that is clear and useful for a general medical audience. A major contribution to the methodology literature, Principles of Research Methodology: A Guide for Clinical Investigators is an authoritative resource for all individuals who perform research, plan to perform it, or wish to understand it better.

Similar content being viewed by others

History of Research

Research (See Clinical Research; Research Ethics)

General Study Objectives

Table of contents (13 chapters), front matter, overview of the research process.

Phyllis G. Supino

Developing a Research Problem

- Phyllis G. Supino, Helen Ann Brown Epstein

The Research Hypothesis: Role and Construction

Design and interpretation of observational studies: cohort, case–control, and cross-sectional designs.

- Martin L. Lesser

Fundamental Issues in Evaluating the Impact of Interventions: Sources and Control of Bias

Protocol development and preparation for a clinical trial.

- Joseph A. Franciosa

Data Collection and Management in Clinical Research

- Mario Guralnik

Constructing and Evaluating Self-Report Measures

- Peter L. Flom, Phyllis G. Supino, N. Philip Ross

Selecting and Evaluating Secondary Data: The Role of Systematic Reviews and Meta-analysis

- Lorenzo Paladino, Richard H. Sinert

Sampling Methodology: Implications for Drawing Conclusions from Clinical Research Findings

- Richard C. Zink

Introductory Statistics in Medical Research

- Todd A. Durham, Gary G. Koch, Lisa M. LaVange

Ethical Issues in Clinical Research

- Eli A. Friedman

How to Prepare a Scientific Paper

Jeffrey S. Borer

Back Matter

From the reviews:

Editors and Affiliations

Bibliographic information.

Book Title : Principles of Research Methodology

Book Subtitle : A Guide for Clinical Investigators

Editors : Phyllis G. Supino, Jeffrey S. Borer

DOI : https://doi.org/10.1007/978-1-4614-3360-6

Publisher : Springer New York, NY

eBook Packages : Medicine , Medicine (R0)

Copyright Information : Springer Science+Business Media, LLC 2012

Hardcover ISBN : 978-1-4614-3359-0 Published: 22 June 2012

Softcover ISBN : 978-1-4939-4292-3 Published: 23 August 2016

eBook ISBN : 978-1-4614-3360-6 Published: 22 June 2012

Edition Number : 1

Number of Pages : XVI, 276

Topics : Oncology , Cardiology , Internal Medicine , Endocrinology , Neurology

- Publish with us

Policies and ethics

- Find a journal

- Track your research

- Open access

- Published: 07 September 2020

A tutorial on methodological studies: the what, when, how and why

- Lawrence Mbuagbaw ORCID: orcid.org/0000-0001-5855-5461 1 , 2 , 3 ,

- Daeria O. Lawson 1 ,

- Livia Puljak 4 ,

- David B. Allison 5 &

- Lehana Thabane 1 , 2 , 6 , 7 , 8

BMC Medical Research Methodology volume 20 , Article number: 226 ( 2020 ) Cite this article

40k Accesses

54 Citations

58 Altmetric

Metrics details

Methodological studies – studies that evaluate the design, analysis or reporting of other research-related reports – play an important role in health research. They help to highlight issues in the conduct of research with the aim of improving health research methodology, and ultimately reducing research waste.

We provide an overview of some of the key aspects of methodological studies such as what they are, and when, how and why they are done. We adopt a “frequently asked questions” format to facilitate reading this paper and provide multiple examples to help guide researchers interested in conducting methodological studies. Some of the topics addressed include: is it necessary to publish a study protocol? How to select relevant research reports and databases for a methodological study? What approaches to data extraction and statistical analysis should be considered when conducting a methodological study? What are potential threats to validity and is there a way to appraise the quality of methodological studies?

Appropriate reflection and application of basic principles of epidemiology and biostatistics are required in the design and analysis of methodological studies. This paper provides an introduction for further discussion about the conduct of methodological studies.

Peer Review reports

The field of meta-research (or research-on-research) has proliferated in recent years in response to issues with research quality and conduct [ 1 , 2 , 3 ]. As the name suggests, this field targets issues with research design, conduct, analysis and reporting. Various types of research reports are often examined as the unit of analysis in these studies (e.g. abstracts, full manuscripts, trial registry entries). Like many other novel fields of research, meta-research has seen a proliferation of use before the development of reporting guidance. For example, this was the case with randomized trials for which risk of bias tools and reporting guidelines were only developed much later – after many trials had been published and noted to have limitations [ 4 , 5 ]; and for systematic reviews as well [ 6 , 7 , 8 ]. However, in the absence of formal guidance, studies that report on research differ substantially in how they are named, conducted and reported [ 9 , 10 ]. This creates challenges in identifying, summarizing and comparing them. In this tutorial paper, we will use the term methodological study to refer to any study that reports on the design, conduct, analysis or reporting of primary or secondary research-related reports (such as trial registry entries and conference abstracts).

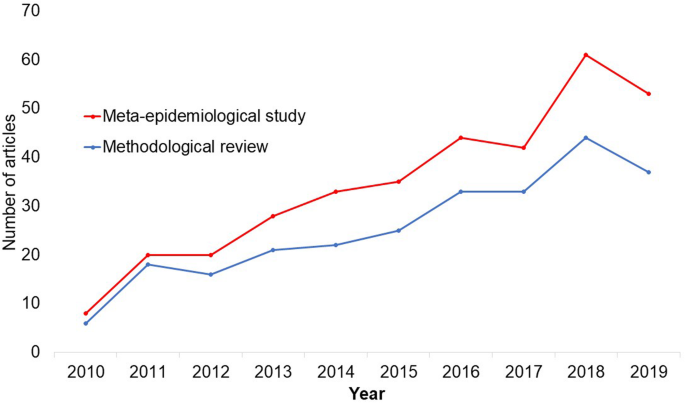

In the past 10 years, there has been an increase in the use of terms related to methodological studies (based on records retrieved with a keyword search [in the title and abstract] for “methodological review” and “meta-epidemiological study” in PubMed up to December 2019), suggesting that these studies may be appearing more frequently in the literature. See Fig. 1 .

Trends in the number studies that mention “methodological review” or “meta-

epidemiological study” in PubMed.

The methods used in many methodological studies have been borrowed from systematic and scoping reviews. This practice has influenced the direction of the field, with many methodological studies including searches of electronic databases, screening of records, duplicate data extraction and assessments of risk of bias in the included studies. However, the research questions posed in methodological studies do not always require the approaches listed above, and guidance is needed on when and how to apply these methods to a methodological study. Even though methodological studies can be conducted on qualitative or mixed methods research, this paper focuses on and draws examples exclusively from quantitative research.

The objectives of this paper are to provide some insights on how to conduct methodological studies so that there is greater consistency between the research questions posed, and the design, analysis and reporting of findings. We provide multiple examples to illustrate concepts and a proposed framework for categorizing methodological studies in quantitative research.

What is a methodological study?

Any study that describes or analyzes methods (design, conduct, analysis or reporting) in published (or unpublished) literature is a methodological study. Consequently, the scope of methodological studies is quite extensive and includes, but is not limited to, topics as diverse as: research question formulation [ 11 ]; adherence to reporting guidelines [ 12 , 13 , 14 ] and consistency in reporting [ 15 ]; approaches to study analysis [ 16 ]; investigating the credibility of analyses [ 17 ]; and studies that synthesize these methodological studies [ 18 ]. While the nomenclature of methodological studies is not uniform, the intents and purposes of these studies remain fairly consistent – to describe or analyze methods in primary or secondary studies. As such, methodological studies may also be classified as a subtype of observational studies.

Parallel to this are experimental studies that compare different methods. Even though they play an important role in informing optimal research methods, experimental methodological studies are beyond the scope of this paper. Examples of such studies include the randomized trials by Buscemi et al., comparing single data extraction to double data extraction [ 19 ], and Carrasco-Labra et al., comparing approaches to presenting findings in Grading of Recommendations, Assessment, Development and Evaluations (GRADE) summary of findings tables [ 20 ]. In these studies, the unit of analysis is the person or groups of individuals applying the methods. We also direct readers to the Studies Within a Trial (SWAT) and Studies Within a Review (SWAR) programme operated through the Hub for Trials Methodology Research, for further reading as a potential useful resource for these types of experimental studies [ 21 ]. Lastly, this paper is not meant to inform the conduct of research using computational simulation and mathematical modeling for which some guidance already exists [ 22 ], or studies on the development of methods using consensus-based approaches.

When should we conduct a methodological study?

Methodological studies occupy a unique niche in health research that allows them to inform methodological advances. Methodological studies should also be conducted as pre-cursors to reporting guideline development, as they provide an opportunity to understand current practices, and help to identify the need for guidance and gaps in methodological or reporting quality. For example, the development of the popular Preferred Reporting Items of Systematic reviews and Meta-Analyses (PRISMA) guidelines were preceded by methodological studies identifying poor reporting practices [ 23 , 24 ]. In these instances, after the reporting guidelines are published, methodological studies can also be used to monitor uptake of the guidelines.

These studies can also be conducted to inform the state of the art for design, analysis and reporting practices across different types of health research fields, with the aim of improving research practices, and preventing or reducing research waste. For example, Samaan et al. conducted a scoping review of adherence to different reporting guidelines in health care literature [ 18 ]. Methodological studies can also be used to determine the factors associated with reporting practices. For example, Abbade et al. investigated journal characteristics associated with the use of the Participants, Intervention, Comparison, Outcome, Timeframe (PICOT) format in framing research questions in trials of venous ulcer disease [ 11 ].

How often are methodological studies conducted?

There is no clear answer to this question. Based on a search of PubMed, the use of related terms (“methodological review” and “meta-epidemiological study”) – and therefore, the number of methodological studies – is on the rise. However, many other terms are used to describe methodological studies. There are also many studies that explore design, conduct, analysis or reporting of research reports, but that do not use any specific terms to describe or label their study design in terms of “methodology”. This diversity in nomenclature makes a census of methodological studies elusive. Appropriate terminology and key words for methodological studies are needed to facilitate improved accessibility for end-users.

Why do we conduct methodological studies?

Methodological studies provide information on the design, conduct, analysis or reporting of primary and secondary research and can be used to appraise quality, quantity, completeness, accuracy and consistency of health research. These issues can be explored in specific fields, journals, databases, geographical regions and time periods. For example, Areia et al. explored the quality of reporting of endoscopic diagnostic studies in gastroenterology [ 25 ]; Knol et al. investigated the reporting of p -values in baseline tables in randomized trial published in high impact journals [ 26 ]; Chen et al. describe adherence to the Consolidated Standards of Reporting Trials (CONSORT) statement in Chinese Journals [ 27 ]; and Hopewell et al. describe the effect of editors’ implementation of CONSORT guidelines on reporting of abstracts over time [ 28 ]. Methodological studies provide useful information to researchers, clinicians, editors, publishers and users of health literature. As a result, these studies have been at the cornerstone of important methodological developments in the past two decades and have informed the development of many health research guidelines including the highly cited CONSORT statement [ 5 ].

Where can we find methodological studies?

Methodological studies can be found in most common biomedical bibliographic databases (e.g. Embase, MEDLINE, PubMed, Web of Science). However, the biggest caveat is that methodological studies are hard to identify in the literature due to the wide variety of names used and the lack of comprehensive databases dedicated to them. A handful can be found in the Cochrane Library as “Cochrane Methodology Reviews”, but these studies only cover methodological issues related to systematic reviews. Previous attempts to catalogue all empirical studies of methods used in reviews were abandoned 10 years ago [ 29 ]. In other databases, a variety of search terms may be applied with different levels of sensitivity and specificity.

Some frequently asked questions about methodological studies

In this section, we have outlined responses to questions that might help inform the conduct of methodological studies.

Q: How should I select research reports for my methodological study?

A: Selection of research reports for a methodological study depends on the research question and eligibility criteria. Once a clear research question is set and the nature of literature one desires to review is known, one can then begin the selection process. Selection may begin with a broad search, especially if the eligibility criteria are not apparent. For example, a methodological study of Cochrane Reviews of HIV would not require a complex search as all eligible studies can easily be retrieved from the Cochrane Library after checking a few boxes [ 30 ]. On the other hand, a methodological study of subgroup analyses in trials of gastrointestinal oncology would require a search to find such trials, and further screening to identify trials that conducted a subgroup analysis [ 31 ].

The strategies used for identifying participants in observational studies can apply here. One may use a systematic search to identify all eligible studies. If the number of eligible studies is unmanageable, a random sample of articles can be expected to provide comparable results if it is sufficiently large [ 32 ]. For example, Wilson et al. used a random sample of trials from the Cochrane Stroke Group’s Trial Register to investigate completeness of reporting [ 33 ]. It is possible that a simple random sample would lead to underrepresentation of units (i.e. research reports) that are smaller in number. This is relevant if the investigators wish to compare multiple groups but have too few units in one group. In this case a stratified sample would help to create equal groups. For example, in a methodological study comparing Cochrane and non-Cochrane reviews, Kahale et al. drew random samples from both groups [ 34 ]. Alternatively, systematic or purposeful sampling strategies can be used and we encourage researchers to justify their selected approaches based on the study objective.

Q: How many databases should I search?

A: The number of databases one should search would depend on the approach to sampling, which can include targeting the entire “population” of interest or a sample of that population. If you are interested in including the entire target population for your research question, or drawing a random or systematic sample from it, then a comprehensive and exhaustive search for relevant articles is required. In this case, we recommend using systematic approaches for searching electronic databases (i.e. at least 2 databases with a replicable and time stamped search strategy). The results of your search will constitute a sampling frame from which eligible studies can be drawn.

Alternatively, if your approach to sampling is purposeful, then we recommend targeting the database(s) or data sources (e.g. journals, registries) that include the information you need. For example, if you are conducting a methodological study of high impact journals in plastic surgery and they are all indexed in PubMed, you likely do not need to search any other databases. You may also have a comprehensive list of all journals of interest and can approach your search using the journal names in your database search (or by accessing the journal archives directly from the journal’s website). Even though one could also search journals’ web pages directly, using a database such as PubMed has multiple advantages, such as the use of filters, so the search can be narrowed down to a certain period, or study types of interest. Furthermore, individual journals’ web sites may have different search functionalities, which do not necessarily yield a consistent output.

Q: Should I publish a protocol for my methodological study?

A: A protocol is a description of intended research methods. Currently, only protocols for clinical trials require registration [ 35 ]. Protocols for systematic reviews are encouraged but no formal recommendation exists. The scientific community welcomes the publication of protocols because they help protect against selective outcome reporting, the use of post hoc methodologies to embellish results, and to help avoid duplication of efforts [ 36 ]. While the latter two risks exist in methodological research, the negative consequences may be substantially less than for clinical outcomes. In a sample of 31 methodological studies, 7 (22.6%) referenced a published protocol [ 9 ]. In the Cochrane Library, there are 15 protocols for methodological reviews (21 July 2020). This suggests that publishing protocols for methodological studies is not uncommon.

Authors can consider publishing their study protocol in a scholarly journal as a manuscript. Advantages of such publication include obtaining peer-review feedback about the planned study, and easy retrieval by searching databases such as PubMed. The disadvantages in trying to publish protocols includes delays associated with manuscript handling and peer review, as well as costs, as few journals publish study protocols, and those journals mostly charge article-processing fees [ 37 ]. Authors who would like to make their protocol publicly available without publishing it in scholarly journals, could deposit their study protocols in publicly available repositories, such as the Open Science Framework ( https://osf.io/ ).

Q: How to appraise the quality of a methodological study?

A: To date, there is no published tool for appraising the risk of bias in a methodological study, but in principle, a methodological study could be considered as a type of observational study. Therefore, during conduct or appraisal, care should be taken to avoid the biases common in observational studies [ 38 ]. These biases include selection bias, comparability of groups, and ascertainment of exposure or outcome. In other words, to generate a representative sample, a comprehensive reproducible search may be necessary to build a sampling frame. Additionally, random sampling may be necessary to ensure that all the included research reports have the same probability of being selected, and the screening and selection processes should be transparent and reproducible. To ensure that the groups compared are similar in all characteristics, matching, random sampling or stratified sampling can be used. Statistical adjustments for between-group differences can also be applied at the analysis stage. Finally, duplicate data extraction can reduce errors in assessment of exposures or outcomes.

Q: Should I justify a sample size?

A: In all instances where one is not using the target population (i.e. the group to which inferences from the research report are directed) [ 39 ], a sample size justification is good practice. The sample size justification may take the form of a description of what is expected to be achieved with the number of articles selected, or a formal sample size estimation that outlines the number of articles required to answer the research question with a certain precision and power. Sample size justifications in methodological studies are reasonable in the following instances:

Comparing two groups

Determining a proportion, mean or another quantifier

Determining factors associated with an outcome using regression-based analyses

For example, El Dib et al. computed a sample size requirement for a methodological study of diagnostic strategies in randomized trials, based on a confidence interval approach [ 40 ].

Q: What should I call my study?

A: Other terms which have been used to describe/label methodological studies include “ methodological review ”, “methodological survey” , “meta-epidemiological study” , “systematic review” , “systematic survey”, “meta-research”, “research-on-research” and many others. We recommend that the study nomenclature be clear, unambiguous, informative and allow for appropriate indexing. Methodological study nomenclature that should be avoided includes “ systematic review” – as this will likely be confused with a systematic review of a clinical question. “ Systematic survey” may also lead to confusion about whether the survey was systematic (i.e. using a preplanned methodology) or a survey using “ systematic” sampling (i.e. a sampling approach using specific intervals to determine who is selected) [ 32 ]. Any of the above meanings of the words “ systematic” may be true for methodological studies and could be potentially misleading. “ Meta-epidemiological study” is ideal for indexing, but not very informative as it describes an entire field. The term “ review ” may point towards an appraisal or “review” of the design, conduct, analysis or reporting (or methodological components) of the targeted research reports, yet it has also been used to describe narrative reviews [ 41 , 42 ]. The term “ survey ” is also in line with the approaches used in many methodological studies [ 9 ], and would be indicative of the sampling procedures of this study design. However, in the absence of guidelines on nomenclature, the term “ methodological study ” is broad enough to capture most of the scenarios of such studies.

Q: Should I account for clustering in my methodological study?

A: Data from methodological studies are often clustered. For example, articles coming from a specific source may have different reporting standards (e.g. the Cochrane Library). Articles within the same journal may be similar due to editorial practices and policies, reporting requirements and endorsement of guidelines. There is emerging evidence that these are real concerns that should be accounted for in analyses [ 43 ]. Some cluster variables are described in the section: “ What variables are relevant to methodological studies?”

A variety of modelling approaches can be used to account for correlated data, including the use of marginal, fixed or mixed effects regression models with appropriate computation of standard errors [ 44 ]. For example, Kosa et al. used generalized estimation equations to account for correlation of articles within journals [ 15 ]. Not accounting for clustering could lead to incorrect p -values, unduly narrow confidence intervals, and biased estimates [ 45 ].

Q: Should I extract data in duplicate?

A: Yes. Duplicate data extraction takes more time but results in less errors [ 19 ]. Data extraction errors in turn affect the effect estimate [ 46 ], and therefore should be mitigated. Duplicate data extraction should be considered in the absence of other approaches to minimize extraction errors. However, much like systematic reviews, this area will likely see rapid new advances with machine learning and natural language processing technologies to support researchers with screening and data extraction [ 47 , 48 ]. However, experience plays an important role in the quality of extracted data and inexperienced extractors should be paired with experienced extractors [ 46 , 49 ].

Q: Should I assess the risk of bias of research reports included in my methodological study?

A : Risk of bias is most useful in determining the certainty that can be placed in the effect measure from a study. In methodological studies, risk of bias may not serve the purpose of determining the trustworthiness of results, as effect measures are often not the primary goal of methodological studies. Determining risk of bias in methodological studies is likely a practice borrowed from systematic review methodology, but whose intrinsic value is not obvious in methodological studies. When it is part of the research question, investigators often focus on one aspect of risk of bias. For example, Speich investigated how blinding was reported in surgical trials [ 50 ], and Abraha et al., investigated the application of intention-to-treat analyses in systematic reviews and trials [ 51 ].

Q: What variables are relevant to methodological studies?

A: There is empirical evidence that certain variables may inform the findings in a methodological study. We outline some of these and provide a brief overview below:

Country: Countries and regions differ in their research cultures, and the resources available to conduct research. Therefore, it is reasonable to believe that there may be differences in methodological features across countries. Methodological studies have reported loco-regional differences in reporting quality [ 52 , 53 ]. This may also be related to challenges non-English speakers face in publishing papers in English.

Authors’ expertise: The inclusion of authors with expertise in research methodology, biostatistics, and scientific writing is likely to influence the end-product. Oltean et al. found that among randomized trials in orthopaedic surgery, the use of analyses that accounted for clustering was more likely when specialists (e.g. statistician, epidemiologist or clinical trials methodologist) were included on the study team [ 54 ]. Fleming et al. found that including methodologists in the review team was associated with appropriate use of reporting guidelines [ 55 ].

Source of funding and conflicts of interest: Some studies have found that funded studies report better [ 56 , 57 ], while others do not [ 53 , 58 ]. The presence of funding would indicate the availability of resources deployed to ensure optimal design, conduct, analysis and reporting. However, the source of funding may introduce conflicts of interest and warrant assessment. For example, Kaiser et al. investigated the effect of industry funding on obesity or nutrition randomized trials and found that reporting quality was similar [ 59 ]. Thomas et al. looked at reporting quality of long-term weight loss trials and found that industry funded studies were better [ 60 ]. Kan et al. examined the association between industry funding and “positive trials” (trials reporting a significant intervention effect) and found that industry funding was highly predictive of a positive trial [ 61 ]. This finding is similar to that of a recent Cochrane Methodology Review by Hansen et al. [ 62 ]

Journal characteristics: Certain journals’ characteristics may influence the study design, analysis or reporting. Characteristics such as journal endorsement of guidelines [ 63 , 64 ], and Journal Impact Factor (JIF) have been shown to be associated with reporting [ 63 , 65 , 66 , 67 ].

Study size (sample size/number of sites): Some studies have shown that reporting is better in larger studies [ 53 , 56 , 58 ].

Year of publication: It is reasonable to assume that design, conduct, analysis and reporting of research will change over time. Many studies have demonstrated improvements in reporting over time or after the publication of reporting guidelines [ 68 , 69 ].

Type of intervention: In a methodological study of reporting quality of weight loss intervention studies, Thabane et al. found that trials of pharmacologic interventions were reported better than trials of non-pharmacologic interventions [ 70 ].

Interactions between variables: Complex interactions between the previously listed variables are possible. High income countries with more resources may be more likely to conduct larger studies and incorporate a variety of experts. Authors in certain countries may prefer certain journals, and journal endorsement of guidelines and editorial policies may change over time.

Q: Should I focus only on high impact journals?

A: Investigators may choose to investigate only high impact journals because they are more likely to influence practice and policy, or because they assume that methodological standards would be higher. However, the JIF may severely limit the scope of articles included and may skew the sample towards articles with positive findings. The generalizability and applicability of findings from a handful of journals must be examined carefully, especially since the JIF varies over time. Even among journals that are all “high impact”, variations exist in methodological standards.

Q: Can I conduct a methodological study of qualitative research?

A: Yes. Even though a lot of methodological research has been conducted in the quantitative research field, methodological studies of qualitative studies are feasible. Certain databases that catalogue qualitative research including the Cumulative Index to Nursing & Allied Health Literature (CINAHL) have defined subject headings that are specific to methodological research (e.g. “research methodology”). Alternatively, one could also conduct a qualitative methodological review; that is, use qualitative approaches to synthesize methodological issues in qualitative studies.

Q: What reporting guidelines should I use for my methodological study?

A: There is no guideline that covers the entire scope of methodological studies. One adaptation of the PRISMA guidelines has been published, which works well for studies that aim to use the entire target population of research reports [ 71 ]. However, it is not widely used (40 citations in 2 years as of 09 December 2019), and methodological studies that are designed as cross-sectional or before-after studies require a more fit-for purpose guideline. A more encompassing reporting guideline for a broad range of methodological studies is currently under development [ 72 ]. However, in the absence of formal guidance, the requirements for scientific reporting should be respected, and authors of methodological studies should focus on transparency and reproducibility.

Q: What are the potential threats to validity and how can I avoid them?

A: Methodological studies may be compromised by a lack of internal or external validity. The main threats to internal validity in methodological studies are selection and confounding bias. Investigators must ensure that the methods used to select articles does not make them differ systematically from the set of articles to which they would like to make inferences. For example, attempting to make extrapolations to all journals after analyzing high-impact journals would be misleading.

Many factors (confounders) may distort the association between the exposure and outcome if the included research reports differ with respect to these factors [ 73 ]. For example, when examining the association between source of funding and completeness of reporting, it may be necessary to account for journals that endorse the guidelines. Confounding bias can be addressed by restriction, matching and statistical adjustment [ 73 ]. Restriction appears to be the method of choice for many investigators who choose to include only high impact journals or articles in a specific field. For example, Knol et al. examined the reporting of p -values in baseline tables of high impact journals [ 26 ]. Matching is also sometimes used. In the methodological study of non-randomized interventional studies of elective ventral hernia repair, Parker et al. matched prospective studies with retrospective studies and compared reporting standards [ 74 ]. Some other methodological studies use statistical adjustments. For example, Zhang et al. used regression techniques to determine the factors associated with missing participant data in trials [ 16 ].

With regard to external validity, researchers interested in conducting methodological studies must consider how generalizable or applicable their findings are. This should tie in closely with the research question and should be explicit. For example. Findings from methodological studies on trials published in high impact cardiology journals cannot be assumed to be applicable to trials in other fields. However, investigators must ensure that their sample truly represents the target sample either by a) conducting a comprehensive and exhaustive search, or b) using an appropriate and justified, randomly selected sample of research reports.

Even applicability to high impact journals may vary based on the investigators’ definition, and over time. For example, for high impact journals in the field of general medicine, Bouwmeester et al. included the Annals of Internal Medicine (AIM), BMJ, the Journal of the American Medical Association (JAMA), Lancet, the New England Journal of Medicine (NEJM), and PLoS Medicine ( n = 6) [ 75 ]. In contrast, the high impact journals selected in the methodological study by Schiller et al. were BMJ, JAMA, Lancet, and NEJM ( n = 4) [ 76 ]. Another methodological study by Kosa et al. included AIM, BMJ, JAMA, Lancet and NEJM ( n = 5). In the methodological study by Thabut et al., journals with a JIF greater than 5 were considered to be high impact. Riado Minguez et al. used first quartile journals in the Journal Citation Reports (JCR) for a specific year to determine “high impact” [ 77 ]. Ultimately, the definition of high impact will be based on the number of journals the investigators are willing to include, the year of impact and the JIF cut-off [ 78 ]. We acknowledge that the term “generalizability” may apply differently for methodological studies, especially when in many instances it is possible to include the entire target population in the sample studied.

Finally, methodological studies are not exempt from information bias which may stem from discrepancies in the included research reports [ 79 ], errors in data extraction, or inappropriate interpretation of the information extracted. Likewise, publication bias may also be a concern in methodological studies, but such concepts have not yet been explored.

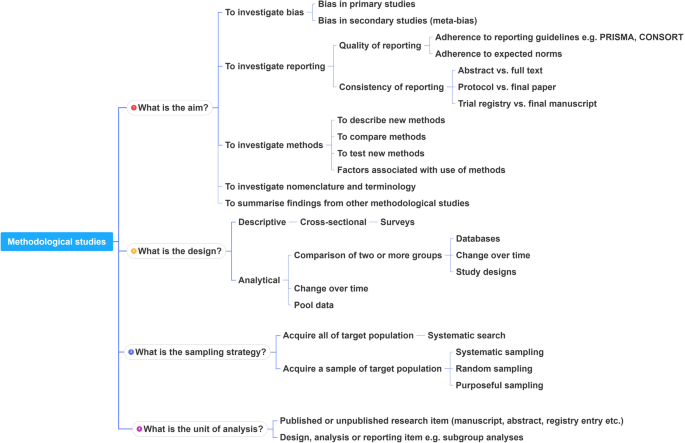

A proposed framework

In order to inform discussions about methodological studies, the development of guidance for what should be reported, we have outlined some key features of methodological studies that can be used to classify them. For each of the categories outlined below, we provide an example. In our experience, the choice of approach to completing a methodological study can be informed by asking the following four questions:

What is the aim?

Methodological studies that investigate bias

A methodological study may be focused on exploring sources of bias in primary or secondary studies (meta-bias), or how bias is analyzed. We have taken care to distinguish bias (i.e. systematic deviations from the truth irrespective of the source) from reporting quality or completeness (i.e. not adhering to a specific reporting guideline or norm). An example of where this distinction would be important is in the case of a randomized trial with no blinding. This study (depending on the nature of the intervention) would be at risk of performance bias. However, if the authors report that their study was not blinded, they would have reported adequately. In fact, some methodological studies attempt to capture both “quality of conduct” and “quality of reporting”, such as Richie et al., who reported on the risk of bias in randomized trials of pharmacy practice interventions [ 80 ]. Babic et al. investigated how risk of bias was used to inform sensitivity analyses in Cochrane reviews [ 81 ]. Further, biases related to choice of outcomes can also be explored. For example, Tan et al investigated differences in treatment effect size based on the outcome reported [ 82 ].

Methodological studies that investigate quality (or completeness) of reporting

Methodological studies may report quality of reporting against a reporting checklist (i.e. adherence to guidelines) or against expected norms. For example, Croituro et al. report on the quality of reporting in systematic reviews published in dermatology journals based on their adherence to the PRISMA statement [ 83 ], and Khan et al. described the quality of reporting of harms in randomized controlled trials published in high impact cardiovascular journals based on the CONSORT extension for harms [ 84 ]. Other methodological studies investigate reporting of certain features of interest that may not be part of formally published checklists or guidelines. For example, Mbuagbaw et al. described how often the implications for research are elaborated using the Evidence, Participants, Intervention, Comparison, Outcome, Timeframe (EPICOT) format [ 30 ].

Methodological studies that investigate the consistency of reporting

Sometimes investigators may be interested in how consistent reports of the same research are, as it is expected that there should be consistency between: conference abstracts and published manuscripts; manuscript abstracts and manuscript main text; and trial registration and published manuscript. For example, Rosmarakis et al. investigated consistency between conference abstracts and full text manuscripts [ 85 ].

Methodological studies that investigate factors associated with reporting

In addition to identifying issues with reporting in primary and secondary studies, authors of methodological studies may be interested in determining the factors that are associated with certain reporting practices. Many methodological studies incorporate this, albeit as a secondary outcome. For example, Farrokhyar et al. investigated the factors associated with reporting quality in randomized trials of coronary artery bypass grafting surgery [ 53 ].

Methodological studies that investigate methods

Methodological studies may also be used to describe methods or compare methods, and the factors associated with methods. Muller et al. described the methods used for systematic reviews and meta-analyses of observational studies [ 86 ].

Methodological studies that summarize other methodological studies

Some methodological studies synthesize results from other methodological studies. For example, Li et al. conducted a scoping review of methodological reviews that investigated consistency between full text and abstracts in primary biomedical research [ 87 ].

Methodological studies that investigate nomenclature and terminology

Some methodological studies may investigate the use of names and terms in health research. For example, Martinic et al. investigated the definitions of systematic reviews used in overviews of systematic reviews (OSRs), meta-epidemiological studies and epidemiology textbooks [ 88 ].

Other types of methodological studies

In addition to the previously mentioned experimental methodological studies, there may exist other types of methodological studies not captured here.

What is the design?

Methodological studies that are descriptive

Most methodological studies are purely descriptive and report their findings as counts (percent) and means (standard deviation) or medians (interquartile range). For example, Mbuagbaw et al. described the reporting of research recommendations in Cochrane HIV systematic reviews [ 30 ]. Gohari et al. described the quality of reporting of randomized trials in diabetes in Iran [ 12 ].

Methodological studies that are analytical

Some methodological studies are analytical wherein “analytical studies identify and quantify associations, test hypotheses, identify causes and determine whether an association exists between variables, such as between an exposure and a disease.” [ 89 ] In the case of methodological studies all these investigations are possible. For example, Kosa et al. investigated the association between agreement in primary outcome from trial registry to published manuscript and study covariates. They found that larger and more recent studies were more likely to have agreement [ 15 ]. Tricco et al. compared the conclusion statements from Cochrane and non-Cochrane systematic reviews with a meta-analysis of the primary outcome and found that non-Cochrane reviews were more likely to report positive findings. These results are a test of the null hypothesis that the proportions of Cochrane and non-Cochrane reviews that report positive results are equal [ 90 ].

What is the sampling strategy?

Methodological studies that include the target population

Methodological reviews with narrow research questions may be able to include the entire target population. For example, in the methodological study of Cochrane HIV systematic reviews, Mbuagbaw et al. included all of the available studies ( n = 103) [ 30 ].

Methodological studies that include a sample of the target population

Many methodological studies use random samples of the target population [ 33 , 91 , 92 ]. Alternatively, purposeful sampling may be used, limiting the sample to a subset of research-related reports published within a certain time period, or in journals with a certain ranking or on a topic. Systematic sampling can also be used when random sampling may be challenging to implement.

What is the unit of analysis?

Methodological studies with a research report as the unit of analysis

Many methodological studies use a research report (e.g. full manuscript of study, abstract portion of the study) as the unit of analysis, and inferences can be made at the study-level. However, both published and unpublished research-related reports can be studied. These may include articles, conference abstracts, registry entries etc.

Methodological studies with a design, analysis or reporting item as the unit of analysis

Some methodological studies report on items which may occur more than once per article. For example, Paquette et al. report on subgroup analyses in Cochrane reviews of atrial fibrillation in which 17 systematic reviews planned 56 subgroup analyses [ 93 ].

This framework is outlined in Fig. 2 .

A proposed framework for methodological studies

Conclusions

Methodological studies have examined different aspects of reporting such as quality, completeness, consistency and adherence to reporting guidelines. As such, many of the methodological study examples cited in this tutorial are related to reporting. However, as an evolving field, the scope of research questions that can be addressed by methodological studies is expected to increase.

In this paper we have outlined the scope and purpose of methodological studies, along with examples of instances in which various approaches have been used. In the absence of formal guidance on the design, conduct, analysis and reporting of methodological studies, we have provided some advice to help make methodological studies consistent. This advice is grounded in good contemporary scientific practice. Generally, the research question should tie in with the sampling approach and planned analysis. We have also highlighted the variables that may inform findings from methodological studies. Lastly, we have provided suggestions for ways in which authors can categorize their methodological studies to inform their design and analysis.

Availability of data and materials

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

Abbreviations

Consolidated Standards of Reporting Trials

Evidence, Participants, Intervention, Comparison, Outcome, Timeframe

Grading of Recommendations, Assessment, Development and Evaluations

Participants, Intervention, Comparison, Outcome, Timeframe

Preferred Reporting Items of Systematic reviews and Meta-Analyses

Studies Within a Review

Studies Within a Trial

Chalmers I, Glasziou P. Avoidable waste in the production and reporting of research evidence. Lancet. 2009;374(9683):86–9.

PubMed Google Scholar

Chan AW, Song F, Vickers A, Jefferson T, Dickersin K, Gotzsche PC, Krumholz HM, Ghersi D, van der Worp HB. Increasing value and reducing waste: addressing inaccessible research. Lancet. 2014;383(9913):257–66.

PubMed PubMed Central Google Scholar

Ioannidis JP, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, Moher D, Schulz KF, Tibshirani R. Increasing value and reducing waste in research design, conduct, and analysis. Lancet. 2014;383(9912):166–75.

Higgins JP, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, Savovic J, Schulz KF, Weeks L, Sterne JA. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009;6(7):e1000100.

Shea BJ, Hamel C, Wells GA, Bouter LM, Kristjansson E, Grimshaw J, Henry DA, Boers M. AMSTAR is a reliable and valid measurement tool to assess the methodological quality of systematic reviews. J Clin Epidemiol. 2009;62(10):1013–20.

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. Bmj. 2017;358:j4008.

Lawson DO, Leenus A, Mbuagbaw L. Mapping the nomenclature, methodology, and reporting of studies that review methods: a pilot methodological review. Pilot Feasibility Studies. 2020;6(1):13.

Puljak L, Makaric ZL, Buljan I, Pieper D. What is a meta-epidemiological study? Analysis of published literature indicated heterogeneous study designs and definitions. J Comp Eff Res. 2020.

Abbade LPF, Wang M, Sriganesh K, Jin Y, Mbuagbaw L, Thabane L. The framing of research questions using the PICOT format in randomized controlled trials of venous ulcer disease is suboptimal: a systematic survey. Wound Repair Regen. 2017;25(5):892–900.

Gohari F, Baradaran HR, Tabatabaee M, Anijidani S, Mohammadpour Touserkani F, Atlasi R, Razmgir M. Quality of reporting randomized controlled trials (RCTs) in diabetes in Iran; a systematic review. J Diabetes Metab Disord. 2015;15(1):36.

Wang M, Jin Y, Hu ZJ, Thabane A, Dennis B, Gajic-Veljanoski O, Paul J, Thabane L. The reporting quality of abstracts of stepped wedge randomized trials is suboptimal: a systematic survey of the literature. Contemp Clin Trials Commun. 2017;8:1–10.

Shanthanna H, Kaushal A, Mbuagbaw L, Couban R, Busse J, Thabane L: A cross-sectional study of the reporting quality of pilot or feasibility trials in high-impact anesthesia journals Can J Anaesthesia 2018, 65(11):1180–1195.

Kosa SD, Mbuagbaw L, Borg Debono V, Bhandari M, Dennis BB, Ene G, Leenus A, Shi D, Thabane M, Valvasori S, et al. Agreement in reporting between trial publications and current clinical trial registry in high impact journals: a methodological review. Contemporary Clinical Trials. 2018;65:144–50.

Zhang Y, Florez ID, Colunga Lozano LE, Aloweni FAB, Kennedy SA, Li A, Craigie S, Zhang S, Agarwal A, Lopes LC, et al. A systematic survey on reporting and methods for handling missing participant data for continuous outcomes in randomized controlled trials. J Clin Epidemiol. 2017;88:57–66.

CAS PubMed Google Scholar

Hernández AV, Boersma E, Murray GD, Habbema JD, Steyerberg EW. Subgroup analyses in therapeutic cardiovascular clinical trials: are most of them misleading? Am Heart J. 2006;151(2):257–64.

Samaan Z, Mbuagbaw L, Kosa D, Borg Debono V, Dillenburg R, Zhang S, Fruci V, Dennis B, Bawor M, Thabane L. A systematic scoping review of adherence to reporting guidelines in health care literature. J Multidiscip Healthc. 2013;6:169–88.

Buscemi N, Hartling L, Vandermeer B, Tjosvold L, Klassen TP. Single data extraction generated more errors than double data extraction in systematic reviews. J Clin Epidemiol. 2006;59(7):697–703.

Carrasco-Labra A, Brignardello-Petersen R, Santesso N, Neumann I, Mustafa RA, Mbuagbaw L, Etxeandia Ikobaltzeta I, De Stio C, McCullagh LJ, Alonso-Coello P. Improving GRADE evidence tables part 1: a randomized trial shows improved understanding of content in summary-of-findings tables with a new format. J Clin Epidemiol. 2016;74:7–18.

The Northern Ireland Hub for Trials Methodology Research: SWAT/SWAR Information [ https://www.qub.ac.uk/sites/TheNorthernIrelandNetworkforTrialsMethodologyResearch/SWATSWARInformation/ ]. Accessed 31 Aug 2020.

Chick S, Sánchez P, Ferrin D, Morrice D. How to conduct a successful simulation study. In: Proceedings of the 2003 winter simulation conference: 2003; 2003. p. 66–70.

Google Scholar

Mulrow CD. The medical review article: state of the science. Ann Intern Med. 1987;106(3):485–8.

Sacks HS, Reitman D, Pagano D, Kupelnick B. Meta-analysis: an update. Mount Sinai J Med New York. 1996;63(3–4):216–24.

CAS Google Scholar

Areia M, Soares M, Dinis-Ribeiro M. Quality reporting of endoscopic diagnostic studies in gastrointestinal journals: where do we stand on the use of the STARD and CONSORT statements? Endoscopy. 2010;42(2):138–47.

Knol M, Groenwold R, Grobbee D. P-values in baseline tables of randomised controlled trials are inappropriate but still common in high impact journals. Eur J Prev Cardiol. 2012;19(2):231–2.

Chen M, Cui J, Zhang AL, Sze DM, Xue CC, May BH. Adherence to CONSORT items in randomized controlled trials of integrative medicine for colorectal Cancer published in Chinese journals. J Altern Complement Med. 2018;24(2):115–24.

Hopewell S, Ravaud P, Baron G, Boutron I. Effect of editors' implementation of CONSORT guidelines on the reporting of abstracts in high impact medical journals: interrupted time series analysis. BMJ. 2012;344:e4178.

The Cochrane Methodology Register Issue 2 2009 [ https://cmr.cochrane.org/help.htm ]. Accessed 31 Aug 2020.

Mbuagbaw L, Kredo T, Welch V, Mursleen S, Ross S, Zani B, Motaze NV, Quinlan L. Critical EPICOT items were absent in Cochrane human immunodeficiency virus systematic reviews: a bibliometric analysis. J Clin Epidemiol. 2016;74:66–72.

Barton S, Peckitt C, Sclafani F, Cunningham D, Chau I. The influence of industry sponsorship on the reporting of subgroup analyses within phase III randomised controlled trials in gastrointestinal oncology. Eur J Cancer. 2015;51(18):2732–9.

Setia MS. Methodology series module 5: sampling strategies. Indian J Dermatol. 2016;61(5):505–9.

Wilson B, Burnett P, Moher D, Altman DG, Al-Shahi Salman R. Completeness of reporting of randomised controlled trials including people with transient ischaemic attack or stroke: a systematic review. Eur Stroke J. 2018;3(4):337–46.

Kahale LA, Diab B, Brignardello-Petersen R, Agarwal A, Mustafa RA, Kwong J, Neumann I, Li L, Lopes LC, Briel M, et al. Systematic reviews do not adequately report or address missing outcome data in their analyses: a methodological survey. J Clin Epidemiol. 2018;99:14–23.

De Angelis CD, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, Kotzin S, Laine C, Marusic A, Overbeke AJPM, et al. Is this clinical trial fully registered?: a statement from the International Committee of Medical Journal Editors*. Ann Intern Med. 2005;143(2):146–8.

Ohtake PJ, Childs JD. Why publish study protocols? Phys Ther. 2014;94(9):1208–9.

Rombey T, Allers K, Mathes T, Hoffmann F, Pieper D. A descriptive analysis of the characteristics and the peer review process of systematic review protocols published in an open peer review journal from 2012 to 2017. BMC Med Res Methodol. 2019;19(1):57.

Grimes DA, Schulz KF. Bias and causal associations in observational research. Lancet. 2002;359(9302):248–52.

Porta M (ed.): A dictionary of epidemiology, 5th edn. Oxford: Oxford University Press, Inc.; 2008.

El Dib R, Tikkinen KAO, Akl EA, Gomaa HA, Mustafa RA, Agarwal A, Carpenter CR, Zhang Y, Jorge EC, Almeida R, et al. Systematic survey of randomized trials evaluating the impact of alternative diagnostic strategies on patient-important outcomes. J Clin Epidemiol. 2017;84:61–9.

Helzer JE, Robins LN, Taibleson M, Woodruff RA Jr, Reich T, Wish ED. Reliability of psychiatric diagnosis. I. a methodological review. Arch Gen Psychiatry. 1977;34(2):129–33.

Chung ST, Chacko SK, Sunehag AL, Haymond MW. Measurements of gluconeogenesis and Glycogenolysis: a methodological review. Diabetes. 2015;64(12):3996–4010.

CAS PubMed PubMed Central Google Scholar

Sterne JA, Juni P, Schulz KF, Altman DG, Bartlett C, Egger M. Statistical methods for assessing the influence of study characteristics on treatment effects in 'meta-epidemiological' research. Stat Med. 2002;21(11):1513–24.

Moen EL, Fricano-Kugler CJ, Luikart BW, O’Malley AJ. Analyzing clustered data: why and how to account for multiple observations nested within a study participant? PLoS One. 2016;11(1):e0146721.

Zyzanski SJ, Flocke SA, Dickinson LM. On the nature and analysis of clustered data. Ann Fam Med. 2004;2(3):199–200.

Mathes T, Klassen P, Pieper D. Frequency of data extraction errors and methods to increase data extraction quality: a methodological review. BMC Med Res Methodol. 2017;17(1):152.

Bui DDA, Del Fiol G, Hurdle JF, Jonnalagadda S. Extractive text summarization system to aid data extraction from full text in systematic review development. J Biomed Inform. 2016;64:265–72.

Bui DD, Del Fiol G, Jonnalagadda S. PDF text classification to leverage information extraction from publication reports. J Biomed Inform. 2016;61:141–8.

Maticic K, Krnic Martinic M, Puljak L. Assessment of reporting quality of abstracts of systematic reviews with meta-analysis using PRISMA-A and discordance in assessments between raters without prior experience. BMC Med Res Methodol. 2019;19(1):32.

Speich B. Blinding in surgical randomized clinical trials in 2015. Ann Surg. 2017;266(1):21–2.

Abraha I, Cozzolino F, Orso M, Marchesi M, Germani A, Lombardo G, Eusebi P, De Florio R, Luchetta ML, Iorio A, et al. A systematic review found that deviations from intention-to-treat are common in randomized trials and systematic reviews. J Clin Epidemiol. 2017;84:37–46.

Zhong Y, Zhou W, Jiang H, Fan T, Diao X, Yang H, Min J, Wang G, Fu J, Mao B. Quality of reporting of two-group parallel randomized controlled clinical trials of multi-herb formulae: A survey of reports indexed in the Science Citation Index Expanded. Eur J Integrative Med. 2011;3(4):e309–16.

Farrokhyar F, Chu R, Whitlock R, Thabane L. A systematic review of the quality of publications reporting coronary artery bypass grafting trials. Can J Surg. 2007;50(4):266–77.

Oltean H, Gagnier JJ. Use of clustering analysis in randomized controlled trials in orthopaedic surgery. BMC Med Res Methodol. 2015;15:17.

Fleming PS, Koletsi D, Pandis N. Blinded by PRISMA: are systematic reviewers focusing on PRISMA and ignoring other guidelines? PLoS One. 2014;9(5):e96407.

Balasubramanian SP, Wiener M, Alshameeri Z, Tiruvoipati R, Elbourne D, Reed MW. Standards of reporting of randomized controlled trials in general surgery: can we do better? Ann Surg. 2006;244(5):663–7.

de Vries TW, van Roon EN. Low quality of reporting adverse drug reactions in paediatric randomised controlled trials. Arch Dis Child. 2010;95(12):1023–6.

Borg Debono V, Zhang S, Ye C, Paul J, Arya A, Hurlburt L, Murthy Y, Thabane L. The quality of reporting of RCTs used within a postoperative pain management meta-analysis, using the CONSORT statement. BMC Anesthesiol. 2012;12:13.

Kaiser KA, Cofield SS, Fontaine KR, Glasser SP, Thabane L, Chu R, Ambrale S, Dwary AD, Kumar A, Nayyar G, et al. Is funding source related to study reporting quality in obesity or nutrition randomized control trials in top-tier medical journals? Int J Obes. 2012;36(7):977–81.

Thomas O, Thabane L, Douketis J, Chu R, Westfall AO, Allison DB. Industry funding and the reporting quality of large long-term weight loss trials. Int J Obes. 2008;32(10):1531–6.

Khan NR, Saad H, Oravec CS, Rossi N, Nguyen V, Venable GT, Lillard JC, Patel P, Taylor DR, Vaughn BN, et al. A review of industry funding in randomized controlled trials published in the neurosurgical literature-the elephant in the room. Neurosurgery. 2018;83(5):890–7.

Hansen C, Lundh A, Rasmussen K, Hrobjartsson A. Financial conflicts of interest in systematic reviews: associations with results, conclusions, and methodological quality. Cochrane Database Syst Rev. 2019;8:Mr000047.

Kiehna EN, Starke RM, Pouratian N, Dumont AS. Standards for reporting randomized controlled trials in neurosurgery. J Neurosurg. 2011;114(2):280–5.

Liu LQ, Morris PJ, Pengel LH. Compliance to the CONSORT statement of randomized controlled trials in solid organ transplantation: a 3-year overview. Transpl Int. 2013;26(3):300–6.

Bala MM, Akl EA, Sun X, Bassler D, Mertz D, Mejza F, Vandvik PO, Malaga G, Johnston BC, Dahm P, et al. Randomized trials published in higher vs. lower impact journals differ in design, conduct, and analysis. J Clin Epidemiol. 2013;66(3):286–95.

Lee SY, Teoh PJ, Camm CF, Agha RA. Compliance of randomized controlled trials in trauma surgery with the CONSORT statement. J Trauma Acute Care Surg. 2013;75(4):562–72.