
Predictive Hub

PREDICT FAILURES WITH MACHINE LEARNING: REAL CASE STUDIES

The following case study reports the methods used and the results achieved by MIPU in a project whose objective was to prevent faults through the application of Machine Learning. The project was developed for a client company in the manufacturing industry.

PREVENT FAILURES WITH MACHINE LEARNING: THE PATH

Machine Learning applications for Predictive Maintenance are used to identify an impending failure before it happens. Those familiar with the P-F curve know that the earlier a potential failure is detected, the more time there is to act before it becomes a functional failure and causes machine downtime.

- The first step of a Machine Learning analysis is to create a mathematical model of the asset. This model includes all the process parameters associated with that specific asset. These parameters are normally stored in a database that acquires data from the plant DCS, the associated PLCs, electronic registers, etc. For instance, when designing a pump model, suction and discharge pressure, control valve position, bearing temperature and vibration are good examples of parameters to include. Most models have between 10 and 30 parameters, but some have almost 100.

- In the second step, historical data for the parameters are imported into the model. This dataset is generally known as the “training” dataset and normally covers one year of data, which allows the model to account for seasonal variations in operation. An expert in the asset's operation knows which data should be included in or excluded from the training set, thanks to specific competence in this field: strong domain knowledge.

- In the third step, the training dataset is used to develop an operational matrix for the asset. This matrix describes how the machine should behave at any given moment, based on the training data used to create it.

- In the last step, the software continuously monitors the machine's operation and predicts the values of its parameters according to the matrix it received as input. If a parameter deviates from the model's prediction by a significant percentage, the system raises an alert for that specific parameter. A technical analysis of the asset is then carried out to evaluate the change in condition and the reasons that might have caused it. (Can your software do this? If not, you may want to upgrade it.)
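The case study does not say which algorithm builds or queries the operational matrix. A minimal sketch, assuming the matrix is simply the table of training observations, each pairing an operating condition (for example, flow) with the monitored parameters observed under it. The expected value of each parameter is taken as the average over the k training rows with the most similar operating condition (a k-nearest-neighbour lookup chosen here for illustration), and an alert is raised when the relative deviation exceeds a threshold. The names `estimate` and `check`, the value of k and the 20% threshold are all hypothetical.

```python
import math
from statistics import mean


def estimate(matrix, condition, k=3):
    """Expected monitored values for an operating condition.

    matrix: list of (condition_vector, {parameter: value}) rows from the training set.
    Assumes condition vectors are already on comparable scales (e.g. normalised).
    """
    # k-nearest-neighbour lookup: the k training rows closest to the current condition.
    nearest = sorted(matrix, key=lambda row: math.dist(row[0], condition))[:k]
    names = nearest[0][1].keys()
    return {name: mean(values[name] for _, values in nearest) for name in names}


def check(matrix, condition, measured, threshold=0.2, k=3):
    """Return {parameter: (measured, predicted)} for every relative deviation above threshold."""
    predicted = estimate(matrix, condition, k)
    alerts = {}
    for name, value in measured.items():
        expected = predicted[name]
        # Skip zero predictions, for which a relative deviation is undefined.
        if expected != 0 and abs(value - expected) / abs(expected) > threshold:
            alerts[name] = (value, expected)
    return alerts


# Hypothetical pump training data: discharge pressure and bearing temperature vs. flow.
matrix = [((flow,), {"pressure": 2 * flow, "temp": 40 + 0.5 * flow}) for flow in range(10, 51, 5)]
print(check(matrix, (30,), {"pressure": 61, "temp": 55}))  # {}
print(check(matrix, (30,), {"pressure": 61, "temp": 80}))  # {'temp': (80, 55.0)}
```

With several operating-condition inputs in different units, each would need to be normalised before computing distances; commercial tools may use other estimators entirely.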

PREVENT FAILURES WITH MACHINE LEARNING: APPLICATIONS

Picture 1 shows an increase in bearing vibration on a ventilator fan caused by an oil leak. This condition generated an alarm. Given the operating conditions, the Machine Learning model predicted a bearing vibration of about 3.5 mm. The measured vibration slowly drifted away from the predicted value, and an alarm was raised as soon as it reached 4.7 mm. The plant technical managers were alerted and, through a visual inspection of the fan, identified an oil leak. The fan's suction was drawing in the oil leaking into the fan housing, which is why there was no sign of the leak on the ground. The oil on the fan blades accumulated dirt and debris, causing a rotational imbalance and, consequently, increased vibration. The plant technical managers were able to take corrective action and stop the leak before the bearing was damaged.

Picture 2 concerns the lubrication system of a large pulverizer, which supplies oil to the gearbox and to all the bearings. The asset model predicted a temperature of 90 °F, but the actual temperature reached 110 °F. The software therefore generated an alarm for the plant technicians, who discovered that the cooling-water control valve of the heat exchanger was not working. The control valve was replaced and the system returned to normal operation.
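The two alarms above can be expressed as residuals between measurement and model estimate. This sketch only does the arithmetic with the figures quoted in the text; the alarm thresholds actually used are not stated, and the `deviation` helper is hypothetical.

```python
def deviation(actual, predicted):
    """Absolute residual and residual relative to the model estimate."""
    residual = actual - predicted
    return residual, residual / predicted


# Picture 1: bearing vibration predicted at about 3.5, alarm raised at 4.7 (units as in the text).
fan_residual, fan_relative = deviation(4.7, 3.5)

# Picture 2: lubrication oil temperature predicted at 90 °F, measured at 110 °F.
oil_residual_f, oil_relative = deviation(110.0, 90.0)
# A temperature *difference* converts without the 32 °F offset.
oil_residual_c = oil_residual_f * 5 / 9

print(f"fan: +{fan_residual:.1f} ({fan_relative:.0%})")  # fan: +1.2 (34%)
print(f"oil: +{oil_residual_f:.0f} °F = +{oil_residual_c:.1f} °C ({oil_relative:.0%})")  # oil: +20 °F = +11.1 °C (22%)
```

The relative figure for temperature depends on where the scale puts its zero (in Celsius the same readings, about 32.2 °C and 43.3 °C, give roughly 34%), so for temperatures an absolute residual threshold is usually easier to interpret.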

Picture 3 concerns an electro-hydraulic control (EHC) system that controls valve position, turbine speed and the safety valves. In this case, the differential pressure across the filter of EHC pump “A” began to increase. Technicians were alerted in time and were able to switch from pump “A” to pump “B”, avoiding an emergency shutdown of the turbine and the resulting damage.
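A clogging filter shows up as a steadily rising differential pressure well before it reaches an absolute limit. The case study does not describe how this alarm was generated; one simple way to sketch it, assuming evenly logged readings and a hypothetical alarm limit, is to fit a least-squares slope to recent readings and extrapolate the time left before the limit is reached.

```python
def slope(times, values):
    """Ordinary least-squares slope of values against times."""
    n = len(times)
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    den = sum((t - t_mean) ** 2 for t in times)
    return num / den


def hours_to_limit(times_h, dp, limit):
    """Linearly extrapolate when the filter differential pressure reaches `limit`.

    Returns None when the trend is flat or falling; a negative result means
    the latest reading is already above the limit.
    """
    rate = slope(times_h, dp)
    if rate <= 0:
        return None
    return (limit - dp[-1]) / rate


# Hypothetical hourly readings (bar) across the pump "A" filter and a 2.5 bar alarm limit.
readings = [1.0, 1.2, 1.4, 1.6, 1.8]
print(round(hours_to_limit(list(range(len(readings))), readings, limit=2.5), 2))  # 3.5
```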

To learn more about this case study, or about how to create machine learning models for your assets, contact us!



predictive-maintenance

Here are 157 public repositories matching this topic.

h1st-ai / h1st

Power Tools for AI Engineers With Deadlines

- Updated Jul 31, 2023

- Jupyter Notebook

umbertogriffo / Predictive-Maintenance-using-LSTM

Example of Multiple Multivariate Time Series Prediction with LSTM Recurrent Neural Networks in Python with Keras.

- Updated Feb 12, 2024

firmai / datagene

DataGene - Identify How Similar TS Datasets Are to One Another (by @firmai )

- Updated Feb 8, 2022

jiaxiang-cheng / PyTorch-Transformer-for-RUL-Prediction

Transformer implementation with PyTorch for remaining useful life prediction on turbofan engine with NASA CMAPSS data set. Inspired by Mo, Y., Wu, Q., Li, X., & Huang, B. (2021). Remaining useful life estimation via transformer encoder enhanced by a gated convolutional unit. Journal of Intelligent Manufacturing, 1-10.

- Updated Oct 26, 2021

archd3sai / Predictive-Maintenance-of-Aircraft-Engine

In this project I aim to apply various predictive maintenance techniques to accurately predict the impending failure of an aircraft turbofan engine.

- Updated Aug 3, 2022

kpeters / exploring-nasas-turbofan-dataset

Collection of predictive maintenance solutions for NASA's turbofan (CMAPSS) dataset

- Updated Jan 24, 2021

lestercardoz11 / fault-detection-for-predictive-maintenance-in-industry-4.0

This research project will illustrate the use of machine learning and deep learning for predictive analysis in industry 4.0.

- Updated Jul 11, 2021

ashishpatel26 / Predictive_Maintenance_using_Machine-Learning_Microsoft_Casestudy

- Updated Apr 5, 2018

Charlie5DH / PredictiveMaintenance-and-Vibration-Resources

Papers and datasets for Vibration Analysis

- Updated Apr 5, 2024

kokikwbt / predictive-maintenance

Datasets for Predictive Maintenance

- Updated Dec 2, 2023

mohyunho / N-CMAPSS_DL

N-CMAPSS data preparation for Machine Learning and Deep Learning models. (Python source code for new CMAPSS dataset)

- Updated Apr 13, 2023

awslabs / aws-fleet-predictive-maintenance

Predictive Maintenance for Vehicle Fleets

- Updated Dec 22, 2022

Western-OC2-Lab / Vibration-Based-Fault-Diagnosis-with-Low-Delay

Python code (Jupyter notebooks) for the paper "A Hybrid Method for Condition Monitoring and Fault Diagnosis of Rolling Bearings With Low System Delay," IEEE Trans. on Instrumentation and Measurement, Aug. 2022. Techniques used: Wavelet Packet Transform (WPT) and Fast Fourier Transform (FFT). Application: vibration-based fault diagnosis.

- Updated May 16, 2024

imrahulr / Pred-Maintenance-Siemens

Predictive Maintenance System for Digital Factory Automation

- Updated Jun 5, 2019

SAP-samples / btp-ai-sustainability-bootcamp

This github repository contains the sample code and exercises of btp-ai-sustainability-bootcamp, which showcases how to build Intelligence and Sustainability into Your Solutions on SAP Business Technology Platform with SAP AI Core and SAP Analytics Cloud for Planning.

- Updated Dec 6, 2023

limingwu8 / Predictive-Maintenance

time-series prediction for predictive maintenance

- Updated Feb 19, 2019

dependable-cps / FDIA-PdM

False Data Injection Attacks in Internet of Things and Deep Learning enabled Predictive Analytics

- Updated Sep 9, 2020

Yi-Chen-Lin2019 / Predictive-maintenance-with-machine-learning

This project is about predictive maintenance with machine learning. It's a final project of my Computer Science AP degree.

- Updated Sep 29, 2022

mohyunho / NAS_transformer

Evolutionary Neural Architecture Search on Transformers for RUL Prediction

- Updated Apr 18, 2023

baggepinnen / MatrixProfile.jl

Time-series analysis using the Matrix profile in Julia

- Updated Oct 29, 2023


Scaling Up Deep Learning Based Predictive Maintenance for Commercial Machine Fleets: a Case Study


Sensors and Machine Learning for Predictive Maintenance

Policy, Finance

Policy approach(es) used to catalyse investment: Development of a national, regional, or sectoral InfraTech strategy

Finance approach(es) used to catalyse investment: De-risking mechanisms or blended finance

Predictive maintenance uses monitoring and advanced machine learning methods to build models that predict the failure of physical and mechanical assets such as pipes, pumps and motors. These models aim to prevent failures and optimise the maintenance of critical infrastructure by giving early warning of issues so that action can be taken before they occur. Key components include sensors installed in the machines; a communication system that transmits data in real time between the sensors and a centralised data platform; and machine-learning predictive analytics that identify patterns and generate actionable insights. Predictive maintenance tools enable asset management workforces to automatically diagnose the causes of industrial asset breakdowns and inefficiencies, optimise maintenance scheduling ahead of asset failure, and extend asset life.

Traditional asset maintenance is beset by limited visibility of asset condition, infrequent monitoring, labour-intensive periodic maintenance and manual data analysis. This leads to a slow response to asset deterioration, driving productivity losses and unoptimised capital and operational expenditure on infrastructure. The development of more sensitive and intelligent monitoring and modelling technologies has created opportunities to minimise labour needs and plan investments better.

Mechanical asset owners face inherent challenges with ageing infrastructure and assets reaching the end of their life. For example, a 2018 survey estimated that USD 472.6 billion will be required over the next 20 years to maintain and improve drinking water infrastructure in the USA. The majority of this (USD 312.6 billion) is for the replacement or refurbishment of ageing or deteriorating distribution assets [1]. The growing need to optimise water asset maintenance and renewal is evident in a USD 90 billion increase in the 20-year investment required to repair, replace and renew existing infrastructure, alongside a USD 30 billion decrease in the investment required for new infrastructure [2].

Knowing which asset to maintain, renew or refurbish can potentially defer substantial capital expenditure. Wider use of predictive failure models will be an essential planning tool for shifting towards more proactive maintenance and optimising maintenance budgets. Proactive programs can prevent catastrophic failures of water distribution networks, such as pipe bursts and leaks that damage property and public infrastructure. This will help water utilities maintain critical water services to communities, minimise unplanned downtime, reduce maintenance costs, improve asset reliability and enhance operational efficiency.

As more water utilities embrace digitalisation and generate large amounts of data, the technology can draw on better quality and larger quantities of data from various inputs to train the models and improve the precision of failure detection. The technology can also be developed further and applied to other sectors and critical physical infrastructure, such as energy generation, transport and manufacturing. As infrastructure continues to age and renewal investment needs continue to grow, demand for more accurate and robust failure prediction models will grow, and such models will be used more widely across industries. Predictive models from different industries can be combined to optimise the maintenance of assets in close proximity, e.g. pipes, electricity, communications, gas and roads.

VALUE CREATED

Improving efficiency and reducing costs:

- Optimises capital investment by deferring premature rehabilitation and replacement tasks and redirecting resources to the assets that are most likely to fail.

- Reduces operational expenditure and overhead costs by keeping assets in optimal condition, which reduces power waste, downtime and maintenance costs.
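Redirecting resources to the assets most likely to fail is a prioritisation problem. A minimal sketch, assuming each asset already has a model-estimated failure probability plus hypothetical consequence and renewal costs: assets are ranked by expected failure cost (probability times consequence, a common risk-based choice rather than anything stated here) and then selected greedily within a budget. Greedy selection is a simplification and does not guarantee the best possible use of the budget.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    asset_id: str
    failure_probability: float  # model output for the planning horizon (hypothetical)
    consequence_cost: float     # estimated cost if the asset fails (hypothetical)
    renewal_cost: float         # cost of renewing it now (hypothetical)


def plan_renewals(assets, budget):
    """Greedy plan: renew assets with the highest expected failure cost first, within budget."""
    ranked = sorted(
        assets, key=lambda a: a.failure_probability * a.consequence_cost, reverse=True
    )
    chosen, spent = [], 0.0
    for asset in ranked:
        if spent + asset.renewal_cost <= budget:
            chosen.append(asset.asset_id)
            spent += asset.renewal_cost
    return chosen, spent


pipes = [
    Asset("P1", 0.30, 100_000, 40_000),
    Asset("P2", 0.05, 200_000, 20_000),
    Asset("P3", 0.60, 20_000, 15_000),
    Asset("P4", 0.02, 50_000, 5_000),
]
print(plan_renewals(pipes, budget=60_000))  # (['P1', 'P3', 'P4'], 60000.0)
```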

Enhancing economic, social and environmental value:

- Minimises the break rates of pipes that can cause water damage to surrounding infrastructure.

- Decreases traffic disruption and water service interruption by minimising unnecessary maintenance activities.

- Extends the useful life of assets and reduces material wastage.

- Minimises health and safety risks to operators carrying out rehabilitation work, and reduces risks during operation and inspection through real-time remote visibility of asset condition.

POLICY TOOLS AND LEVERS

Legislation and regulation: Governments can develop strategies that drive operators to invest in more efficient and sustainable operation of critical assets. Regulation-driven asset management plans can be implemented to maintain the efficiency of water infrastructure.

Funding and financing: Greater focus on committing funding to optimise and extend the life of existing assets rather than building new infrastructure is needed.

Transition of workforce capabilities: Training and upskilling the workforce so that it can effectively interpret and act on the insights from AI technologies.

RISKS AND MITIGATIONS

Implementation risk

Risk: Machine learning can only perform well with good quality input data. Incorrect or missing data can limit functionality or lead to incorrect actions, which can increase project costs and lead to poor infrastructure planning and investment.

Mitigation: Investing in sensors and monitoring solutions before investing in machine learning software.
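In line with this mitigation, simple automated checks on each sensor channel can catch gaps, implausible values and stuck sensors before the data reach a model. A minimal sketch; the plausible range and the stuck-sensor run length are hypothetical parameters that would come from the sensor specification and site experience.

```python
import math


def is_missing(v):
    """None or NaN marks a missing sample."""
    return v is None or (isinstance(v, float) and math.isnan(v))


def quality_report(values, low, high, max_flat_run=5):
    """Basic checks on one sensor channel before it is used to train a model.

    values: readings in time order.
    low, high: physically plausible range for the sensor (assumed known).
    max_flat_run: longest run of identical readings accepted before the sensor
        is flagged as possibly stuck (missing samples are skipped).
    """
    present = [v for v in values if not is_missing(v)]
    out_of_range = sum(1 for v in present if not low <= v <= high)
    longest = run = 1 if present else 0
    for prev, cur in zip(present, present[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return {
        "missing_fraction": (len(values) - len(present)) / len(values) if values else 0.0,
        "out_of_range": out_of_range,
        "stuck": longest > max_flat_run,
    }


# Hypothetical bearing-temperature channel with gaps, one spike and a flat run.
readings = [20.1, None, 20.3, 150.0] + [20.2] * 6 + [float("nan")]
print(quality_report(readings, low=-20, high=120))
```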

Social risk

Risk: The shift from scheduled and reactive maintenance to predictive and proactive maintenance can create the need for re-training of workers to interpret and appropriately action results from predictive models.

Mitigation: Industry can assist through training and up-skilling programs to help mitigate these issues.

Safety and (Cyber)security risk

Risk: Control systems, especially those located in the cloud, are at risk of cyber-attacks. Sensitive information about the location and condition of critical infrastructure could be exploited, and potential attacks could pose high risks to public health.

Mitigation: Organisations need to ensure a strong level of cyber security in their networks and data storage, for both local servers and cloud services. The focus should be on strict data ownership models and the level of data security the application requires. Any implementation of data transfer and storage should be undertaken by suitably qualified and experienced professionals.

Example: Data61

Implementation: Sydney Water and Data61 are collaboratively researching advanced analytics approaches to solving water industry challenges, including water pipe failure prediction, predicting sewer chokes and prioritising active leakage detection areas.

Cost: Sydney Water found the potential to reduce maintenance and renewal costs by several million dollars over a four-year period and minimise inconvenience to customers from pipe breaks.

Timeframe: Projects are undertaken on a case-by-case basis and can be completed within a few months.

Example: Voda

Implementation: Voda's AI software has assessed more than 1,200 pipes for a Florida water utility, prioritising pipe monitoring, maintenance and replacement.

Cost: Voda predicted 18 avoidable breaks, saving the water utility more than $100,000 in reactive maintenance and preventing negative media coverage of bursts.

Timeframe: Projects are undertaken on a case-by-case basis and can be completed within 12 months.

Example: Movus

Implementation: Since March 2016, the University of Queensland has had FitMachine installed on 22 chiller units that deliver 24/7 air-conditioning on campus, using machine-learning algorithms to detect early warnings of failures [4].

Cost: The University of Queensland realised a 135% return on its FitMachine investment, saving up to $100,000 in repair costs by detecting and preventing machine failures ahead of time.

Timeframe: Movus solution was implemented in a short time frame (within 6 months).


UNLOCKED GREATER EFFICIENCY WITH MACHINE LEARNING

- Machine learning

- Predictive maintenance

- Sigma Technology


Predictive Maintenance: Improved Efficiency & Reduced Costs with Machine Learning

Predictive maintenance is a maintenance strategy that utilizes machine learning algorithms to analyze data from sensors, equipment logs, and other sources to predict when a machine is likely to fail. Machine learning algorithms can also help identify patterns and relationships in the data, enabling organizations to make more informed decisions about maintenance schedules and spare parts inventory management.

In the automotive industry, machine learning can be used to improve predictive maintenance by analyzing vast amounts of data from various sources, such as sensors, telematics systems, and maintenance logs. This data can be used to develop predictive models that identify patterns and relationships between various factors and equipment failures. For example, machine learning algorithms can analyze engine vibration and temperature data to predict when a component is likely to fail. Moreover, predictive models can analyze data on driving patterns, road conditions, and fuel consumption to determine the optimal time for maintenance activities such as oil changes and tire rotations.

“Machine learning is a powerful tool that can help organizations in the automotive industry optimize their predictive maintenance programs and improve equipment efficiency, reducing costs and increasing overall operational efficiency.” (Yixin Zhang, Data Scientist at Sigma Technology Insight Solutions)

In this case study, we describe how Sigma Technology Insight Solutions supported a Swedish vehicle manufacturer and contributed to the development of an ML model to understand and predict the lifetime of the brake pads used in its trucks.

About the client

The client is a Swedish manufacturer of heavy-duty commercial vehicles. The company is known for its commitment to safety, sustainability, and efficiency and offers a wide range of trucks for various applications, including long-haul, construction, and distribution. In addition, the company provides a range of services and solutions to its customers, including maintenance, financing, and telematics solutions.

The challenge: goals of predictive maintenance in the automotive industry

Brake pads are a critical component of a vehicle’s braking system and need regular maintenance to function properly. Predicting when brake pads are likely to fail can help prevent unexpected failures and reduce the risk of accidents. The client needed a predictive maintenance solution to calculate the lifetime of its brake pads, so that data-driven decisions could ensure the safety and reliability of its vehicles, reduce maintenance costs and increase the efficiency of fleet operations.

Our involvement

The client approached the team with a need for an expert data scientist to assist in the development of a machine-learning model. Yixin Zhang, a highly skilled Data Scientist at Sigma Technology Insight Solutions , joined the effort and provided crucial consulting services. The objective was to create a model that could predict the durability of brake pads based on historical data that included factors such as road quality, vehicle speed, temperature, and others.

However, the team faced a challenge: some of the data was missing and had to be restored to ensure accurate results. To overcome this, the team used advanced data restoration techniques to fill in the missing values and then ran the data through the model to identify relationships between the variables.
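The case study does not say which restoration techniques were used. As a much simpler stand-in, the sketch below fills gaps in one time-ordered signal by linear interpolation between the nearest known samples and holds the nearest known value at either end; it is only reasonable for short gaps in slowly varying signals. (pandas provides interpolation through `Series.interpolate`.) The function name is hypothetical.

```python
def interpolate_gaps(values):
    """Fill None entries by linear interpolation between the nearest known neighbours.

    Leading and trailing gaps are filled with the nearest known value.
    Returns a new list; the input is not modified.
    """
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("no known values to interpolate from")
    out = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:  # leading gap: hold the first known value
            out[i] = values[right]
        elif right is None:  # trailing gap: hold the last known value
            out[i] = values[left]
        else:
            w = (i - left) / (right - left)
            out[i] = values[left] + w * (values[right] - values[left])
    return out


# Hypothetical brake temperature log with missing samples.
print(interpolate_gaps([None, 10.0, None, None, 16.0, None]))
```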

The end result of their efforts was an ML model written in Python that predicts the impact of various factors on brake pad durability. This tool will serve as a valuable asset to the client, allowing them to make data-driven decisions and continuously enhance their product offerings.
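The case study does not disclose the model type or its features. As a hedged illustration of estimating how individual factors affect brake pad life, the sketch fits an ordinary least-squares linear regression by solving the normal equations in plain Python. The feature names (road roughness, average speed, ambient temperature) and the synthetic data are hypothetical; in practice a library such as scikit-learn would normally be used, and real wear data are rarely this linear.

```python
def fit_linear(X, y):
    """Ordinary least squares via the normal equations (X^T X) b = X^T y.

    X: list of feature rows; an intercept column is added automatically.
    Returns [intercept, coef_1, ..., coef_p].
    """
    A = [[1.0, *row] for row in X]  # prepend an intercept column
    p = len(A[0])
    xtx = [[sum(r[i] * r[j] for r in A) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * t for r, t in zip(A, y)) for i in range(p)]
    M = [xtx[i] + [xty[i]] for i in range(p)]  # augmented matrix
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda i: abs(M[i][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for i in range(col + 1, p):
            factor = M[i][col] / M[col][col]
            for j in range(col, p + 1):
                M[i][j] -= factor * M[col][j]
    # Back substitution.
    b = [0.0] * p
    for i in reversed(range(p)):
        b[i] = (M[i][p] - sum(M[i][j] * b[j] for j in range(i + 1, p))) / M[i][i]
    return b


def predict(b, row):
    return b[0] + sum(w * x for w, x in zip(b[1:], row))


# Synthetic, noise-free data: (road roughness, average speed km/h, temperature °C) -> pad life km.
rows = [(r, s, t) for r in (1, 2, 3) for s in (60, 80) for t in (10, 30)]
life_km = [120_000 - 15_000 * r - 300 * s - 200 * t for r, s, t in rows]
coef = fit_linear(rows, life_km)
print([round(c, 3) for c in coef])  # should recover roughly [120000, -15000, -300, -200]
```

The fitted coefficients are the per-unit effect of each factor on pad life, which is the kind of "impact of various factors" the paragraph above refers to.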

Further steps

The machine learning solution could be adapted and extended to improve the wear resistance and durability of a wide range of spare parts beyond brake pads. The same approach could also be applied in other industries, such as manufacturing and consumer electronics, to improve product performance and prolong product lifespan. Because the solution scales and can be customized to the requirements of each industry, it can be applied in multiple contexts and can give a competitive advantage to the businesses that adopt it.


ROBERT ÅBERG

President at Sigma Technology Insight Solutions



This is the era of AI in predictive maintenance


Maintenance has been evolving with new technologies and strategies since World War II, when C. H. Waddington questioned why the Royal Air Force (RAF) performed maintenance the way it did: grounding about half of its planes at a time for maintenance after each mission. His theory was that regular (preventive or planned) maintenance was actually increasing breakdowns. He and a handful of other scientists recommended performing maintenance based on the condition of the equipment, and after five months of trying the new procedure, the number of planes available at any given time increased by 61 percent.

Since then, manufacturers have used preventive maintenance strategies, including sensors placed in devices to determine when equipment might fail. But the results were inconsistent because the data was difficult to access. Now, with today's IIoT, machine learning and artificial intelligence, predictive maintenance is a reality.

What is Predictive Maintenance and What are the Benefits?

Predictive maintenance is based on detecting small changes and aberrations in normal operation that usually indicate a larger problem. Predictive maintenance (PdM) grew out of digital preventive maintenance and uses data-driven strategies to analyze operations and to predict and prepare for potential failures. With 24/7 remote monitoring, data-driven insights from machine learning, and predictive analytics that alert users to potential equipment failures, manufacturers can benefit in many ways. The cost savings and ROI of predictive maintenance include:

- Reduced downtime

- More targeted maintenance

- Higher productivity

- Efficient inventory management

- Enhanced data analysis

- Reduced labor and material costs

- Increased plant safety

- Optimized maintenance activities

- Increased overall equipment effectiveness (OEE)

Predictive Maintenance via Condition-Based Monitoring

Another transformative step in the evolution of maintenance strategies and capabilities was the advent of condition-based monitoring (CBM), which monitors key performance indicators (KPIs) to identify anomalies. Companies can check these KPIs through measurements, visual equipment inspections, reviews of performance data or scheduled tests, as well as through IoT and historical data. The KPIs are gathered at set intervals or continuously, as is done when a machine has internal sensors. CBM can be applied to all assets.

CBM, like all predictive maintenance, also operates on the principle that maintenance should only be performed when there are signs of decreasing equipment performance or an upcoming critical failure. Compared to traditional preventive maintenance, CBM only requires equipment to be shut down for maintenance on an as-needed basis, increasing the time between maintenance repairs.

CBM can reduce machine downtime by 30 to 60 percent and increase machine life by an average of 30 percent. Predictive maintenance plays a key role in detecting and addressing machine issues before they lead to complete failure. According to a PwC study, predictive maintenance improves uptime by 51%. With predictive maintenance, companies can also avoid accidents and increase safety for their employees and customers.

Implementing a Successful Condition-Based Maintenance Program

FactoryTalk® Analytics™ GuardianAI™ is a new software by Rockwell Automation that provides predictive maintenance insights via continuous condition-based monitoring. The software helps maintenance engineers get the right information at the right time to optimize maintenance activities and reduce unplanned downtime. Armed with this information, maintenance engineers have the insight to understand the current condition of the assets on the plant floor. They receive early notice as soon as an asset begins deviating from normal.

Use Your Existing Variable Frequency Drives as Sensors

When using FactoryTalk Analytics GuardianAI, there’s no need to purchase additional sensors or monitoring equipment. The software provides early warning of potential asset failures based on data that’s already available from variable frequency drives (VFDs). FactoryTalk Analytics GuardianAI software uses the VFD’s electrical signal to monitor the condition of a plant asset. When it detects a deviation in the electrical signal, it alerts the user to the anomaly so that manufacturers can investigate and plan the correct response. FactoryTalk Analytics GuardianAI provides premier integration with PowerFlex® 755, 755T and 6000T drives for key process applications like pumps, fans, and blowers.

No Data Science Required

When deploying innovative solutions in an operations environment, time to value is key. FactoryTalk Analytics GuardianAI software saves time with intuitive and streamlined workflows via a self-service, browser-based experience. Just deploy the application on an edge PC, specify your drive and asset information, and train the predictive maintenance model on live plant data with no impact to operations. When the training is complete, the software will automatically switch to monitoring mode and you can oversee the condition of your plant assets.
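The train-then-monitor workflow described above can be illustrated generically. This is not how FactoryTalk Analytics GuardianAI works internally (the text does not say); it is a minimal sketch, assuming a single scalar feature per sample (for example, a hypothetical RMS value of the drive's motor current), a baseline learned as mean and standard deviation, and a hypothetical z-score limit.

```python
from statistics import mean, pstdev


class BaselineMonitor:
    """Learns a baseline from the first `training_size` samples, then monitors.

    Generic illustration only; not the algorithm of any specific product.
    """

    def __init__(self, training_size=100, z_limit=4.0):
        self.training_size = training_size
        self.z_limit = z_limit
        self.samples = []
        self.mode = "training"
        self.mu = None
        self.sigma = None

    def update(self, x):
        """Feed one sample; returns True if it deviates from the learned baseline."""
        if self.mode == "training":
            self.samples.append(x)
            if len(self.samples) >= self.training_size:
                self.mu = mean(self.samples)
                # A perfectly flat baseline gets a tiny sigma, so any change is flagged.
                self.sigma = pstdev(self.samples) or 1e-9
                self.mode = "monitoring"  # switch automatically once training is complete
            return False
        return abs(x - self.mu) / self.sigma > self.z_limit


monitor = BaselineMonitor(training_size=4, z_limit=3.0)
for x in [10.0, 11.0, 9.0, 10.0]:  # training phase: never alerts
    monitor.update(x)
print(monitor.mode)  # monitoring
print(monitor.update(10.5), monitor.update(20.0))  # False True
```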

Starting from an overview of all assets, you can select any at-risk asset to learn more about its condition. You'll discover key information such as the root cause of the deviation, how far it peaked above the baseline and how long it lasted. You can also include context about the severity of the failure risk and the estimated time to resolve the issue. These details help your maintenance team prioritize and plan the required repairs.
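The peak and duration of a deviation can be derived directly from the monitored signal once a baseline exists. A minimal sketch, assuming timestamped samples, a fixed baseline and a hypothetical threshold; duration is measured from the first to the last sample above the threshold, so a single-sample excursion has zero duration.

```python
def deviation_episodes(times, values, baseline, threshold):
    """Group consecutive samples above baseline + threshold into episodes.

    Returns a list of dicts with the start time, the duration
    (last minus first timestamp) and the peak excursion above the baseline.
    """
    episodes, current = [], None
    for t, v in zip(times, values):
        excess = v - baseline
        if excess > threshold:
            if current is None:
                current = {"start": t, "end": t, "peak": excess}
            else:
                current["end"] = t
                current["peak"] = max(current["peak"], excess)
        elif current is not None:
            episodes.append(current)
            current = None
    if current is not None:  # signal ended while still deviating
        episodes.append(current)
    return [
        {"start": e["start"], "duration": e["end"] - e["start"], "peak": e["peak"]}
        for e in episodes
    ]


print(deviation_episodes(list(range(8)), [10, 10, 13, 15, 12, 10, 14, 10], baseline=10, threshold=1.5))
# [{'start': 2, 'duration': 2, 'peak': 5}, {'start': 6, 'duration': 0, 'peak': 4}]
```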

Advance from anomaly detection to anomaly identification

FactoryTalk Analytics GuardianAI software comes out-of-the-box with embedded expertise about the most probable causes of failure for common plant asset types. If you're monitoring a pump, fan or blower application, FactoryTalk Analytics GuardianAI recognizes the electrical signatures of the associated first-principles faults and provides this context when it alerts you to a deviation. Knowing what type of failure is about to occur reduces investigation time for maintenance engineers and minimizes any required downtime.

The embedded expertise provides a great start for anomaly identification. But you’re not limited to the out-of-the-box functionality. You also have the flexibility to train FactoryTalk Analytics GuardianAI software on process specific faults. After you investigate and identify the source of the issue, you can label the anomaly. When the same issue occurs again, the software will recognize it and notify you.
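The label-and-recognize loop described above can be sketched as nearest-neighbour matching against a library of labelled anomaly signatures. The feature vectors (for example, hypothetical energies in a few frequency bands of the motor current), the distance limit, the labels and the class name are assumptions; the product's actual method is not described in the text.

```python
import math


class AnomalyLibrary:
    """Remembers labelled anomaly signatures and matches new ones by nearest neighbour."""

    def __init__(self, max_distance):
        self.max_distance = max_distance
        self.signatures = []  # list of (feature_vector, label)

    def label(self, features, name):
        """Store the signature of an investigated anomaly under a user-chosen label."""
        self.signatures.append((tuple(features), name))

    def identify(self, features):
        """Return the label of the closest stored signature, or None if nothing is close enough."""
        best, best_d = None, math.inf
        for signature, name in self.signatures:
            d = math.dist(signature, features)
            if d < best_d:
                best, best_d = name, d
        return best if best_d <= self.max_distance else None


lib = AnomalyLibrary(max_distance=1.0)
lib.label([5.0, 0.2], "impeller fouling")
lib.label([0.5, 3.0], "belt slip")
print(lib.identify([4.8, 0.3]))  # impeller fouling
print(lib.identify([9.0, 9.0]))  # None: unknown, investigate and then label it
```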

Analyze at the edge

FactoryTalk Analytics GuardianAI software is deployed, learns and runs right at the edge for near real-time predictions.

Since C. H. Waddington and his mission to keep RAF planes in the sky, manufacturers have been seeking more efficient maintenance decision-making and more value from their equipment. Maintenance has evolved from reactive and proactive to preventive and predictive, and maintenance engineers are now empowered with easy-to-use machine learning through an intuitive user experience that doesn't require data science knowledge. Find out more at FactoryTalk Analytics GuardianAI | FactoryTalk (rockwellautomation.com)


Machine Learning and image analysis towards improved energy management in Industry 4.0: a practical case study on quality control

- Original Article
- Open access
- Published: 13 May 2024
- Volume 17, article number 48 (2024)


Mattia Casini¹, Paolo De Angelis¹, Marco Porrati², Paolo Vigo¹, Matteo Fasano¹, Eliodoro Chiavazzo¹ & Luca Bergamasco¹ (ORCID: orcid.org/0000-0001-6130-9544)


With the advent of Industry 4.0, Artificial Intelligence (AI) has created a favorable environment for the digitalization of manufacturing and processing, helping industries to automate and optimize operations. In this work, we focus on a practical case study of a brake caliper quality control operation. This operation is usually performed by human inspection and requires a dedicated handling system, which results in a slow production rate and thus inefficient energy usage. We report on a Machine Learning (ML) methodology, based on Deep Convolutional Neural Networks (D-CNNs), that automatically extracts information from images in order to automate the process. A complete workflow has been developed on the target industrial test case. To find the best compromise between accuracy and computational demand of the model, several D-CNN architectures have been tested. The results show that a judicious choice of the ML model, with proper training, allows fast and accurate quality control; thus, the proposed workflow could be implemented for an ML-powered version of the considered problem. This would eventually enable better management of the available resources, in terms of both time consumption and energy usage.


Introduction

Efficient use of energy resources in industry is key to a sustainable future (Bilgen, 2014; Ocampo-Martinez et al., 2019). The advent of Industry 4.0 and of Artificial Intelligence has created a favorable context for the digitalisation of manufacturing processes. In this view, Machine Learning (ML) techniques have the potential to help industries make better and smarter use of the available data, automating and improving operations (Narciso & Martins, 2020; Mazzei & Ramjattan, 2022). For example, ML tools can be used to analyze sensor data from industrial equipment for predictive maintenance (Carvalho et al., 2019; Dalzochio et al., 2020). This allows potential failures to be identified in advance and thus enables better planning of maintenance operations with reduced downtime. Similarly, energy consumption can be optimized (Shen et al., 2020; Qin et al., 2020) via ML-enabled analysis of the available consumption data, followed by adjustments of the operating parameters, schedules, or configurations that minimize energy consumption while maintaining optimal production efficiency. Energy consumption forecasting (Liu et al., 2019; Zhang et al., 2018) can also be improved by analyzing historical data on weather patterns and forecasts, especially in industrial plants relying on renewable energy sources (Bologna et al., 2020; Ismail et al., 2021). This helps optimize the usage of energy resources, avoid energy peaks, and leverage alternative energy sources or storage systems (Li & Zheng, 2016; Ribezzo et al., 2022; Fasano et al., 2019; Trezza et al., 2022; Mishra et al., 2023). Finally, ML tools can also serve for fault or anomaly detection (Angelopoulos et al., 2019; Md et al., 2022), which allows prompt corrective actions that optimize energy usage and prevent energy inefficiencies.

Within this context, ML techniques for image analysis (Casini et al., 2024) are also attracting increasing interest (Chen et al., 2023). Applications include materials design and optimization (Choudhury, 2021), quality control (Badmos et al., 2020), process monitoring (Ho et al., 2021), and the detection of machine failures by converting time-series sensor data into 2D images (Wen et al., 2017).

Incorporating digitalisation and ML techniques into Industry 4.0 has led to significant energy savings (Maggiore et al., 2021; Nota et al., 2020). Projects adopting these technologies can achieve an average improvement of 15% to 25% in energy efficiency in the processes where they are implemented (Arana-Landín et al., 2023). For instance, in predictive maintenance, ML can reduce energy consumption by optimizing the operation of machinery (Agrawal et al., 2023; Pan et al., 2024). In process optimization, ML algorithms can improve energy efficiency by 10% to 20% by analyzing and adjusting machine operations for optimal performance, thereby reducing unnecessary energy usage (Leong et al., 2020). Furthermore, ML algorithms for optimal control can lead to energy savings of 30%, because these systems can make real-time adjustments to production lines that keep machines operating at peak energy efficiency (Rahul & Chiddarwar, 2023).

In automotive manufacturing, ML-driven quality control can lead to energy savings by reducing the need to rework parts or run inefficient production cycles (Vater et al., 2019). In high-volume production environments such as consumer electronics, novel computer-vision models can automatically detect damaged packages and distinguish them from intact ones, which speeds up operations and reduces waste (Shahin et al., 2023). In heavy industries such as steel or chemical manufacturing, ML can optimize the energy consumption of large machinery. By predicting the optimal operating conditions and maintenance schedules, these systems can reduce energy costs (Mypati et al., 2023). Compressed air is one of the most energy-intensive processes in manufacturing. ML can optimize the performance of compressed-air systems by continuously monitoring and adjusting the air compressors for peak efficiency, potentially saving energy and avoiding losses due to leaks or inefficient operation (Benedetti et al., 2019). ML can also help reduce energy consumption and minimize incorrectly produced parts in polymer processing enterprises (Willenbacher et al., 2021).

Here we focus on a practical industrial case study of brake caliper processing. Specifically, we focus on the quality control operation, which is typically performed by human visual inspection and requires a dedicated handling system. This results in a slower production rate and inefficient energy usage. We therefore propose integrating an ML-based system that performs the quality control operation automatically. Such a system removes the need for a dedicated handling system and thus reduces operation time. To this end, we rely on ML tools able to analyze and extract information from images, namely deep convolutional neural networks, D-CNNs (Alzubaidi et al., 2021; Chai et al., 2021).

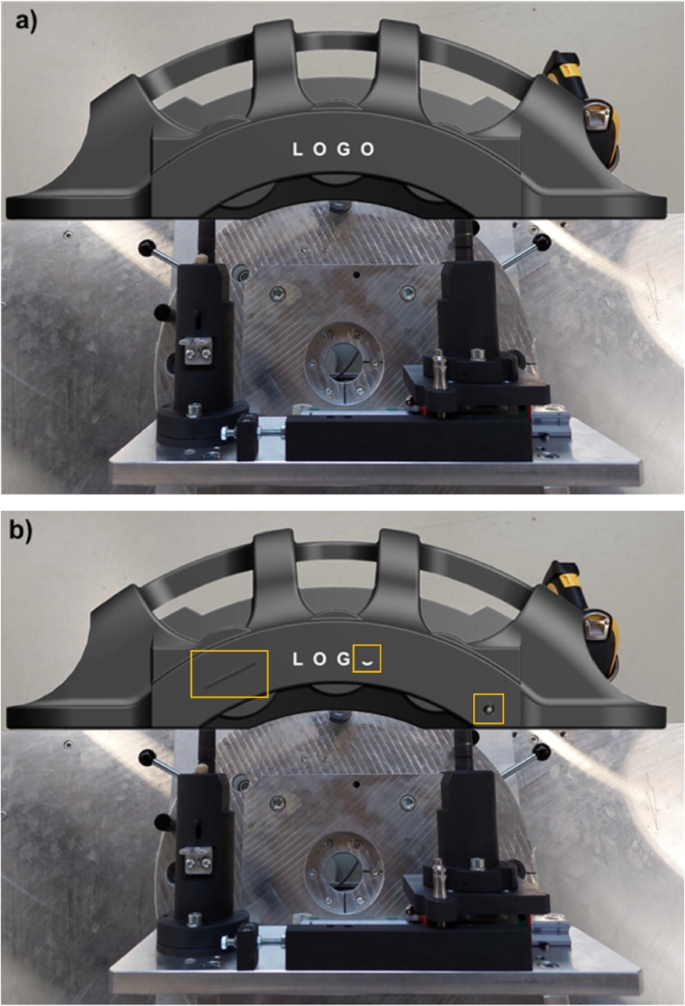

Sample 3D model (GrabCAD) of the considered brake caliper: (a) part without defects, and (b) part with three sample defects, namely a scratch, a partially missing letter in the logo, and a circular painting defect (shown by the yellow squares, from left to right respectively)

A complete workflow for this purpose has been developed and tested on a real industrial test case. It includes: dedicated pre-processing of the brake caliper images; their labelling and analysis using two dedicated D-CNN architectures (one for background removal, and one for defect identification); and post-processing and analysis of the neural network output. Several D-CNN architectures have been tested in order to find the best model in terms of accuracy and computational demand. The results show that a judicious choice of the ML model, with proper training, allows fast and accurate recognition of possible defects. The best-performing models reach over 98% accuracy on the target criteria for quality control, and take only a few seconds to analyze each image. These results make the proposed workflow compliant with typical industrial expectations. In perspective, it could therefore be implemented for an ML-powered version of the considered industrial problem. This would eventually allow better performance of the manufacturing process and, ultimately, better management of the available resources in terms of time consumption and energy expense.

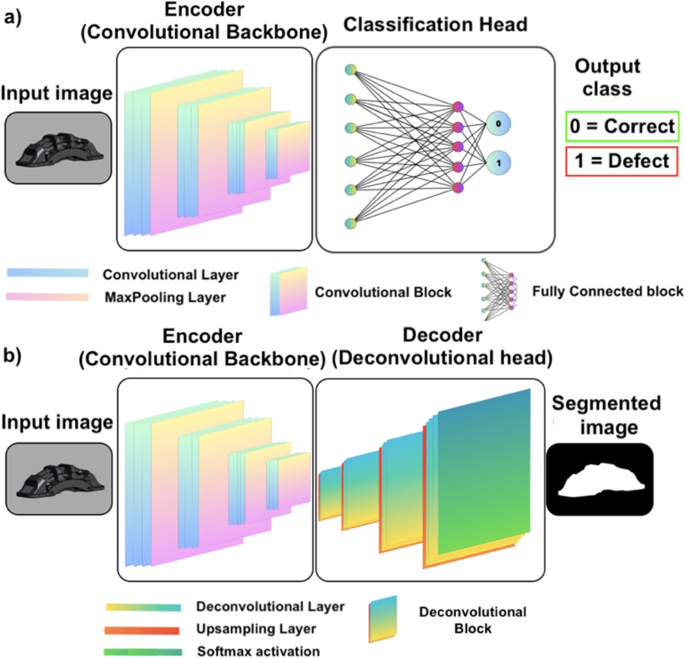

Different neural network architectures: convolutional encoder (a) and encoder-decoder (b)

The industrial quality control process that we target is the visual inspection of manufactured components, to verify the absence of possible defects. For reasons of industrial confidentiality, Fig. 1 shows a representative open-source 3D geometry (GrabCAD) that is similar to the original parts. For illustrative purposes, the clean geometry without defects (Fig. 1(a)) is compared to the geometry with three possible sample defects, namely: a scratch on the surface of the brake caliper, a partially missing letter in the logo, and a circular painting defect (highlighted by the yellow squares, from left to right respectively, in Fig. 1(b)). Note that one or more defects may be present on the geometry, and that other types of defects may also be considered.

Within the industrial production line, this quality control is typically time-consuming and requires a dedicated handling system, with the associated slow production rate and energy inefficiencies. We therefore developed a methodology to achieve an ML-powered version of the control process. The method relies on data analysis and, in particular, on information extraction from images of the brake calipers via Deep Convolutional Neural Networks, D-CNNs (Alzubaidi et al., 2021). The designed workflow for defect recognition consists of the following two steps:

1) removal of the background from the image of the caliper. This reduces noise and irrelevant features in the image, ultimately making the algorithms more robust to the background environment;
2) analysis of the geometry of the caliper to identify the different possible defects.

These two serial steps are accomplished via two different, dedicated neural networks, whose architecture is discussed in the next section.

Convolutional Neural Networks (CNNs) are a particular class of deep neural networks for information extraction from images. Feature extraction is accomplished via convolution operations: the algorithms receive an image as input, analyze it across several (deep) neural layers to identify target features, and provide the obtained information as output (Casini et al., 2024). Different output formats can be retrieved depending on the architecture of the neural network. Some tasks require a numerical output, for example classifying the content of an image (Bhatt et al., 2021) as a correct or defective caliper in our case. For these, a typical layout combines a convolutional backbone with a fully-connected network (see Fig. 2(a)). If, on the other hand, the required output is itself an image, a more complex architecture with a convolutional backbone (encoder) and a deconvolutional head (decoder) can be used (see Fig. 2(b)).
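
To illustrate the convolution operation that CNN layers use for feature extraction, here is a minimal pure-Python sketch of a single-channel "valid" 2D convolution followed by a ReLU. Like most deep learning libraries, it actually computes a cross-correlation: the kernel is not flipped. In a real network the kernel values are learned and every layer has many input and output channels. The fixed edge kernel and the function names are illustrative assumptions, not part of the authors' models.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation: the sliding-window operation CNN layers call convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Element-wise non-linearity usually applied after each convolution."""
    return [[max(0, v) for v in row] for row in feature_map]

# A 3x4 grey-level image with a vertical dark-to-bright edge between columns 1 and 2.
image = [[0, 0, 1, 1]] * 3
edge_kernel = [[-1, 1], [-1, 1]]   # responds to left-to-right brightness increases
features = relu(conv2d_valid(image, edge_kernel))
print(features)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map lights up only where the edge is. In the classifier layout of Fig. 2(a), stacks of such maps are eventually flattened or pooled and fed to the fully-connected head.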

As previously introduced, our workflow analyzes the brake calipers in a two-step procedure. First, the background is removed from the input image (e.g. Fig. 1). Second, the geometry of the caliper is analyzed, and the part is classified as acceptable or not depending on the absence or presence of any defect, respectively. In the first step, a dedicated encoder-decoder network (Minaee et al., 2021) classifies each pixel in the input image as brake or background. The output of this model is a new version of the input image in which the background pixels are blacked out. This helps the algorithms in the subsequent analysis achieve better performance and avoid bias due to different environments in the input image. In the second step of the workflow, a dedicated encoder architecture is adopted: the background-filtered image is fed to the convolutional network, and the geometry of the caliper is analyzed to spot possible defects and thus classify the part as acceptable or not. In this work, both deep learning models are supervised, that is, the algorithms are trained with the help of human-labeled data (LeCun et al., 2015). In particular, the first algorithm (background removal) is fed with the original image together with a ground truth. This ground truth is a binary image, also called a mask, made of black and white pixels, and it tells the algorithm which pixels belong to the brake and which to the background. This task is usually called semantic segmentation in Machine Learning and Deep Learning (Géron, 2022). Analogously, the second algorithm is fed with the original image (without the background) along with an associated mask, which gives the neural network the information it needs to identify possible defects on the target geometry.
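
The hand-off between the two steps can be sketched as follows. The segmentation network produces a per-pixel probability of "caliper", which is thresholded into a binary mask; the background pixels are then blacked out before the image reaches the classifier. The 0.5 threshold and the helper names are assumptions for illustration, since the text does not state how the segmentation output is binarized.

```python
def probs_to_mask(prob_map, threshold=0.5):
    """Turn per-pixel 'caliper' probabilities into a binary mask (1 = caliper, 0 = background)."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]

def remove_background(image, mask, fill=(0, 0, 0)):
    """Keep caliper pixels and black out background pixels (mask == 0)."""
    return [
        [pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]

# Tiny 2x2 RGB example: the left column is caliper, the right column is background.
image = [[(200, 30, 30), (90, 90, 90)],
         [(210, 40, 35), (15, 120, 60)]]
prob_map = [[0.97, 0.10],
            [0.88, 0.45]]
print(remove_background(image, probs_to_mask(prob_map)))
# [[(200, 30, 30), (0, 0, 0)], [(210, 40, 35), (0, 0, 0)]]
```
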
The required pre-processing of the input images, as well as their use for training and validation of the developed algorithms, is explained in the next sections.

Image pre-processing

Machine Learning approaches rely on data analysis. It is therefore well known that the quality of the final results depends strongly on the amount and quality of the data available for training the algorithms (Banko & Brill, 2001; Chen et al., 2021). In our case, the input images should be representative of the target analysis and include adequate variability of the possible features, so that the neural networks can produce the correct output. The original images should therefore include, e.g., different backgrounds, different viewing angles of the considered geometry and different light exposures (local light reflections may affect the color of the geometry and thus the analysis). Creating such a dataset for a specific case is not always straightforward. In our case, for example, it would imply a systematic acquisition of a large set of images in many different conditions. This would in turn require having all the possible target defects available on real parts, as well as an automatic acquisition system, e.g., a robotic arm with an integrated camera. Since the initial dataset could not be generated on real parts, we chose to generate a well-balanced dataset of images in silico, that is, based on image renderings of the real geometry. The key idea was that, if the rendered geometry is sufficiently close to a real photograph, the algorithms may be trained on artificially generated images and then tested on a few real ones. This approach, if properly automated, makes it easy to produce a large number of images in all the different conditions required for the analysis.

In a first step, starting from the CAD file of the brake calipers, we used the open-source software Blender (Blender) to manually modify the material properties and achieve a realistic rendering. Defects were then generated by Boolean (subtraction) operations between the geometry of the brake caliper and ad-hoc geometries for each defect. Fine-tuning of the generated defects then produced a realistic representation of each of them. Once the results were satisfactory, we automated the procedure with Python code that generates the renderings in different conditions. The Python code can: load a given CAD geometry, change the material properties, set different viewing angles for the geometry, add different types of defects (with given size, rotation and location on the geometry of the brake caliper), add a custom background, change the lighting conditions, render the scene and save it as an image.
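
The authors' Blender script is not published. The sketch below therefore covers only the part that can be shown without Blender: randomly sampling one scene configuration (viewing angle, background, light intensities, and defects with type, size, rotation and location) that a rendering script could then consume. Every name, file name and numeric range here is a hypothetical assumption. The three light intensities mirror the three light sources described for the rendering environment, and the 0.5 defect probability mirrors the 50% defect share reported for the dataset.

```python
import random
from dataclasses import dataclass, field

DEFECT_TYPES = ("scratch", "missing_letter", "paint_spot")           # the three sample defects of Fig. 1
BACKGROUNDS = ("workshop.jpg", "conveyor.jpg", "plain_grey.jpg")    # hypothetical background files

@dataclass
class Defect:
    kind: str
    size_mm: float
    rotation_deg: float
    location: tuple  # hypothetical (u, v) surface coordinates in [0, 1]

@dataclass
class SceneConfig:
    view_azimuth_deg: float
    view_elevation_deg: float
    background: str
    light_intensities: tuple   # one value per light source (natural + two artificial)
    defects: list = field(default_factory=list)

def sample_scene(rng, p_defective=0.5, max_defects=3):
    """Draw one random scene; about p_defective of the scenes carry 1..max_defects defects."""
    defects = []
    if rng.random() < p_defective:
        for _ in range(rng.randint(1, max_defects)):
            defects.append(Defect(
                kind=rng.choice(DEFECT_TYPES),
                size_mm=rng.uniform(1.0, 10.0),
                rotation_deg=rng.uniform(0.0, 360.0),
                location=(rng.random(), rng.random()),
            ))
    return SceneConfig(
        view_azimuth_deg=rng.uniform(0.0, 360.0),
        view_elevation_deg=rng.uniform(10.0, 80.0),
        background=rng.choice(BACKGROUNDS),
        light_intensities=tuple(rng.uniform(0.2, 1.0) for _ in range(3)),
        defects=defects,
    )

rng = random.Random(0)   # a fixed seed makes the generated dataset reproducible
batch = [sample_scene(rng) for _ in range(1000)]
```

Keeping the sampling separate from the rendering call makes it easy to log each configuration next to its image. The same record can later drive the generation of the matching masks.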

To make the dataset as varied as possible, we introduced three light sources into the rendering environment: a diffuse natural light to simulate daylight conditions, and two additional artificial lights. The intensity of each light source and the viewing angle were then varied randomly, to mimic different daylight conditions and illuminations of the object. This procedure was designed to reproduce situations akin to real use, and to make the model invariant to lighting conditions and camera position. Moreover, to give the model additional flexibility, the training dataset was virtually expanded using data augmentation (Mumuni & Mumuni, 2022), with saturation, brightness and contrast varied randomly during training. This procedure considerably increased the number and variety of the images in the training dataset.
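
The random saturation, brightness and contrast changes can be sketched in pure Python on RGB pixels with values in [0, 1]. The blending rules follow the common convention used, for example, by torchvision's `ColorJitter`: brightness scales all channels, contrast blends toward the mean luminance, and saturation blends toward each pixel's grey level. The ±20% range, the fixed order of the three operations and the BT.601 luminance weights are assumptions, since the text does not give the exact settings.

```python
import random

def clamp(v):
    return min(1.0, max(0.0, v))

def luminance(pixel):
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b

def adjust_brightness(img, factor):
    """Scale every channel (factor > 1 brightens, < 1 darkens)."""
    return [[tuple(clamp(v * factor) for v in p) for p in row] for row in img]

def adjust_contrast(img, factor):
    """Stretch (factor > 1) or compress (factor < 1) values around the image's mean luminance."""
    pixels = [p for row in img for p in row]
    mean = sum(luminance(p) for p in pixels) / len(pixels)
    return [[tuple(clamp(mean + (v - mean) * factor) for v in p) for p in row] for row in img]

def adjust_saturation(img, factor):
    """Move each pixel toward (factor < 1) or away from (factor > 1) its own grey level."""
    out = []
    for row in img:
        new_row = []
        for p in row:
            grey = luminance(p)
            new_row.append(tuple(clamp(grey + (v - grey) * factor) for v in p))
        out.append(new_row)
    return out

def color_jitter(img, rng, strength=0.2):
    """Apply brightness, contrast and saturation changes with random factors in [1-s, 1+s]."""
    for op in (adjust_brightness, adjust_contrast, adjust_saturation):
        img = op(img, rng.uniform(1.0 - strength, 1.0 + strength))
    return img

img = [[(0.5, 0.2, 0.8), (0.1, 0.9, 0.4)]]
augmented = color_jitter(img, random.Random(1))
```

Because the factors are drawn again each time an image is used, the network sees a slightly different version of the same picture in every epoch. This is what "virtually expanded" means here: no new files are written to disk.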

The automated pre-processing steps make it easy to generate thousands of different images in batches for training the neural networks. This capability is key for proper training, as the variability of the input images allows the models to learn all the features and details that may change under real operating conditions.

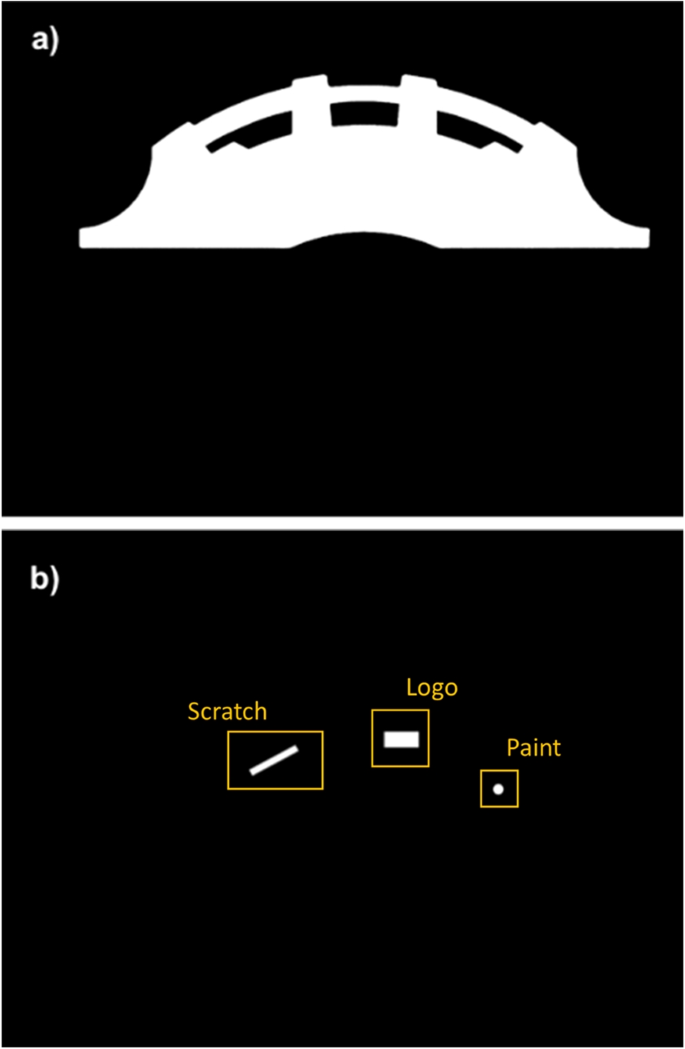

Examples of the ground truth for the two target tasks: background removal (a) and defects recognition (b)

The first tests using this virtual database showed that, although the generated images were very similar to real photographs, the models were not able to properly recognize the target features in the real images. Thus, in an attempt to get closer to a proper set of real images, we adopted a hybrid dataset in which the virtually generated images were mixed with the few available real ones. However, since some possible defects were missing from the real images, we also manipulated the real images to introduce virtual defects. The final dataset included more than 4,000 images, of which 90% were rendered and 10% were obtained from real images. To avoid possible bias in the training dataset, defects were present in 50% of the cases in both the rendered and the real image sets. Thus, in the overall dataset, the original real images with no defects made up 5% of the total.
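
The 5% figure follows from the stated shares: 10% of the images are real, and half of those carry (virtually added) defects, so 0.10 × 0.50 = 0.05 of the dataset consists of untouched, defect-free real photographs. The small helper below reproduces this bookkeeping. It uses 4,000 as a round stand-in for "more than 4,000", and the function name is illustrative.

```python
def dataset_composition(total, real_share=0.10, defect_share=0.50):
    """Break a dataset of `total` images into rendered/real parts with the same defect share."""
    real = round(total * real_share)
    rendered = total - real
    real_defective = round(real * defect_share)    # real photos with virtually added defects
    real_clean = real - real_defective             # original, defect-free real photos
    rendered_defective = round(rendered * defect_share)
    return {
        "rendered": rendered,
        "rendered_defective": rendered_defective,
        "real": real,
        "real_defective": real_defective,
        "real_clean": real_clean,
        "real_clean_share": real_clean / total,
    }

composition = dataset_composition(4000)
print(composition["real_clean"], composition["real_clean_share"])  # 200 0.05
```
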

Along with the code for rendering and manipulating the images, dedicated Python routines were developed to generate the corresponding labels for the supervised training of the networks, namely the image masks. In particular, two masks were generated for each input image: one for the background removal operation, and one for defect identification. In both cases, the mask is a binary (i.e. black and white) image in which all the pixels of a target feature (i.e. the geometry or a defect) are assigned a value of one (white), whereas all the remaining pixels are set to zero (black). An example of these masks for the geometry in Fig. 1 is shown in Fig. 3.
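
Assume a rendering step that can export a per-pixel label map (for instance, one object ID per pixel). Both binary masks then follow from one label map, as the sketch below shows. The label convention (0 = background, 1 = caliper body, 2 = defect) and the function name are hypothetical. Note that defect pixels still belong to the caliper, so the background-removal mask must include them.

```python
BACKGROUND, CALIPER, DEFECT = 0, 1, 2   # hypothetical per-pixel label convention

def binary_mask(label_map, target_labels):
    """Return 1 (white) for pixels whose label is in target_labels, 0 (black) otherwise."""
    targets = set(target_labels)
    return [[1 if label in targets else 0 for label in row] for row in label_map]

label_map = [
    [0, 1, 1, 0],
    [0, 1, 2, 0],
    [0, 1, 1, 0],
]
# Mask for background removal: the whole caliper geometry, defects included.
geometry_mask = binary_mask(label_map, {CALIPER, DEFECT})
# Mask for defect identification: only the defect pixels are white.
defect_mask = binary_mask(label_map, {DEFECT})
```
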

All the generated images were then down-sampled: their resolution was reduced to avoid unnecessarily long computation times and high (RAM) memory usage, while keeping the level of detail required for training the neural networks. Finally, the input images and the related masks were split into a mosaic of smaller tiles. This gave a size suitable for feeding the images to the neural networks and lowered RAM requirements even further. All the tiles were processed, and the whole image was reconstructed at the end of the process to visualize the overall results.
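
Down-sampling, tiling and reconstruction can be sketched on plain lists of rows as follows. The text does not specify the down-sampling method, tile size or overlap. Nearest-neighbour decimation and non-overlapping square tiles are assumptions chosen for brevity; averaging-based resizing is usually preferable for real photographs.

```python
def downsample(img, factor):
    """Nearest-neighbour decimation: keep every `factor`-th row and column."""
    return [row[::factor] for row in img[::factor]]

def to_tiles(img, tile):
    """Split an image (list of rows) into non-overlapping tile x tile blocks keyed by corner."""
    h, w = len(img), len(img[0])
    if h % tile or w % tile:
        raise ValueError("image size must be a multiple of the tile size (pad it first)")
    return {
        (i, j): [row[j:j + tile] for row in img[i:i + tile]]
        for i in range(0, h, tile)
        for j in range(0, w, tile)
    }

def from_tiles(tiles, tile, h, w):
    """Reassemble the full image from (possibly processed) tiles."""
    out = [[None] * w for _ in range(h)]
    for (i, j), block in tiles.items():
        for di, row in enumerate(block):
            out[i + di][j:j + tile] = row
    return out

img = [[10 * r + c for c in range(8)] for r in range(8)]
small = downsample(img, 2)            # 8x8 -> 4x4
tiles = to_tiles(small, 2)            # four 2x2 tiles
restored = from_tiles(tiles, 2, 4, 4)
```

The same `to_tiles` call must be applied to each image and to its masks, so that every tile keeps the right labels. Per-tile predictions can then be stitched back with `from_tiles`.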

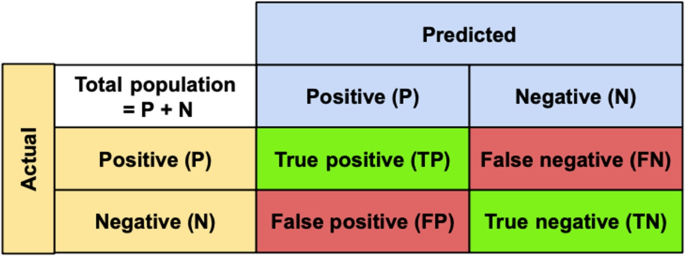

Confusion matrix for accuracy assessment of the neural networks models

Choice of the model

Within the scope of the present application, a wide range of potentially suitable models is available (Chen et al., 2021). In general, the best model for a given problem should be chosen case by case, seeking an acceptable compromise between achievable accuracy and computational complexity/cost. Models that are too simple can respond very fast yet have reduced accuracy. More complex models, on the other hand, can generally provide more accurate results, although they typically require larger amounts of training data, and thus longer computation times and higher energy expense. Testing therefore plays a crucial role in identifying the best trade-off between these two extremes. A benchmark for model accuracy can generally be defined in terms of a confusion matrix, which summarizes the model response into four possibilities: True Positives (TP), True Negatives (TN), False Positives (FP) and False Negatives (FN), as shown in Fig. 4. For background removal, Positive (P) stands for pixels belonging to the brake caliper, and Negative (N) for background pixels. For the defect identification model, Positive (P) stands for non-defective geometries, whereas Negative (N) stands for defective geometries. In both cases, True/False denotes a correct or incorrect identification, respectively. The model accuracy can therefore be assessed as (Géron, 2022)

\[ A = \frac{TP + TN}{TP + TN + FP + FN} \]
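
The following sketch counts the four confusion-matrix entries for the defect model and computes accuracy as the fraction of correct predictions, (TP + TN) / (TP + TN + FP + FN). It follows the convention stated in the text, where Positive means a non-defective part. The labels and the predictions are made-up illustrative data, not results from the study.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count TP, TN, FP, FN for a binary problem, given which label is 'positive'."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        else:
            if t == positive:
                fn += 1
            else:
                tn += 1
    return tp, tn, fp, fn

def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)

# Defect model convention from the text: Positive = non-defective part ("ok").
y_true = ["ok", "ok", "defective", "defective", "ok"]
y_pred = ["ok", "defective", "defective", "ok", "ok"]
counts = confusion_counts(y_true, y_pred, positive="ok")
print(counts, accuracy(*counts))  # (2, 1, 1, 1) 0.6
```

With this convention, a false positive is a defective caliper that passes inspection. That error is typically the costly one in quality control, so it is worth reporting FP separately rather than relying on accuracy alone.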

Based on this metric, the accuracy of different models can then be evaluated on a given dataset, where typically 80% of the data is used for training and the remaining 20% for validation. For the defect recognition stage, the following models were tested: VGG-16 (Simonyan & Zisserman, 2014), ResNet50, ResNet101, ResNet152 (He et al., 2016), Inception V1 (Szegedy et al., 2015), Inception V4 and InceptionResNet V2 (Szegedy et al., 2017). Details on the assessment procedure for the different models are provided in the Supplementary Information file. For the background removal stage, the DeepLabV3+ (Chen et al., 2018) model was chosen as the first option. No additional models were tested, as it directly provided satisfactory results in terms of accuracy and processing time. This gives a preliminary indication that, in terms of task complexity, the defect identification stage can be more demanding than the background removal operation for the case study at hand. Besides the accuracy assessment, e.g. with the metric discussed above, additional diagnostic information can generally be collected. Examples include an accuracy that is too low (indicating an insufficient amount of training data), possible bias of the models on the data (indicating a poorly balanced training dataset), or other issues related to missing representative data in the training dataset (Géron, 2022). This information helps both to shape the training dataset correctly and to gather useful indications for fine-tuning the model once it has been chosen.
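
The 80/20 split can be made stratified, meaning it is done separately for defective and non-defective images. This keeps the 50% defect balance in both the training and the validation sets. The text does not say whether the split was stratified, so this is an assumption. The image IDs below are hypothetical.

```python
import random
from collections import defaultdict

def stratified_split(samples, label_of, val_fraction=0.2, seed=0):
    """Shuffle each class separately and hold out `val_fraction` of it for validation."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for s in samples:
        groups[label_of(s)].append(s)
    train, val = [], []
    for label in sorted(groups):          # deterministic class order for reproducibility
        group = groups[label]
        rng.shuffle(group)
        n_val = round(len(group) * val_fraction)
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    return train, val

# 100 hypothetical image IDs, half of them defective.
samples = [(f"img_{i:03d}", "defective" if i % 2 else "ok") for i in range(100)]
train, val = stratified_split(samples, label_of=lambda s: s[1])
```

When images are cut into tiles, it is safer to split by source image rather than by tile. Otherwise tiles of the same picture can appear on both sides of the split and inflate the validation accuracy.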

Background removal

An initial bias of the background-removal model arose from the color of the original target geometry (red). The model wrongly identified red spots in the background as part of the target geometry. To improve the model flexibility, and thus its accuracy in identifying the background, the training dataset was expanded using data augmentation (Géron, 2022). This technique artificially increases the size of the training dataset by applying various transformations to the available images, with the goal of improving the performance and generalization ability of the models. It typically involves applying geometric and/or color transformations to the original images. In our case, these transformations account for different viewing angles of the geometry, different light exposures, and different color reflections and shadowing effects. These improvements of the training dataset proved effective for the background removal operation, with a final validation accuracy above 99% and a model response time of around 1-2 seconds. An example of the output of this operation for the geometry in Fig. 1 is shown in Fig. 5.
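
For a segmentation task, geometric augmentation must move the image and its mask together; otherwise the labels no longer line up with the pixels. Color changes, by contrast, are applied to the image only. The sketch below shows the joint geometric part with random horizontal flips and 90-degree rotations. The choice of these particular transforms is an assumption; the text only says that geometric and color transformations were used.

```python
import random

def hflip(grid):
    """Mirror a grid (list of rows) left to right."""
    return [list(reversed(row)) for row in grid]

def rot90(grid):
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def augment_pair(image, mask, rng):
    """Apply the same random flip and rotation to image and mask so labels stay aligned."""
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    for _ in range(rng.randrange(4)):      # 0, 90, 180 or 270 degrees
        image, mask = rot90(image), rot90(mask)
    return image, mask

image = [[10 * r + c for c in range(3)] for r in range(2)]
mask = [[1 if c > 0 else 0 for c in range(3)] for r in range(2)]
aug_image, aug_mask = augment_pair(image, mask, random.Random(3))
```
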

While the results obtained were satisfactory for the original (red) color of the calipers, we also tested whether the model could be applied to brake calipers of other colors. To this end, the model was trained and tested on grayscale versions of the caliper images, which completely removes any possible bias of the model toward a specific color. In this case, the validation accuracy of the model still exceeded 99%. This approach therefore looks particularly promising for making background removal work even on images of calipers of different colors.
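
Grayscale conversion can be sketched as a weighted sum of the RGB channels. The ITU-R BT.601 luma weights used below are a common default; the text does not say which conversion was applied. Networks pretrained on color images usually expect three input channels, so the single grey channel is often replicated, as in the second helper.

```python
def to_grayscale(image):
    """RGB (0-255) -> single luma channel using ITU-R BT.601 weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row] for row in image]

def gray_to_rgb(gray):
    """Replicate the luma channel when a network expects three input channels."""
    return [[(v, v, v) for v in row] for row in gray]

# White, black and pure red pixels.
print(to_grayscale([[(255, 255, 255), (0, 0, 0), (255, 0, 0)]]))  # [[255, 0, 76]]
```

After this conversion, a red caliper and a caliper of another color with similar brightness produce similar grey images. This is why the grayscale variant removes the color bias described above.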

Target geometry after background removal

Defect recognition

Table 1 gives an overview of the performance of the tested models for defect recognition on the original geometry of the caliper (see also the Supplementary Information file for more details on the assessment of the different models). It reports the achieved validation accuracy (\(A_v\)) and the number of parameters (\(N_p\)). The latter is the total number of trainable parameters that each model uses to determine its output (Géron, 2022). Here, this quantity is adopted as an indicator of the complexity of each model.
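
The number of trainable parameters \(N_p\) is the sum of the per-layer counts. The sketch below gives the standard counts for three common layer types. A convolution with a k_h × k_w kernel, C_in input channels and C_out filters has (k_h·k_w·C_in + 1)·C_out parameters when it has a bias. A dense layer has (n_in + 1)·n_out. A batch-normalization layer has 2 trainable parameters per channel (scale and shift). The example layer sizes are illustrative and are not taken from Table 1.

```python
def conv2d_params(k_h, k_w, c_in, c_out, bias=True):
    """Trainable weights of a 2D convolution layer (one k_h x k_w x c_in kernel per filter)."""
    return (k_h * k_w * c_in + (1 if bias else 0)) * c_out

def dense_params(n_in, n_out, bias=True):
    """Trainable weights of a fully-connected layer."""
    return (n_in + (1 if bias else 0)) * n_out

def batchnorm_params(channels):
    """Trainable scale (gamma) and shift (beta) per channel; running statistics are not trained."""
    return 2 * channels

print(conv2d_params(3, 3, 64, 64))               # 36928
print(conv2d_params(1, 1, 256, 64, bias=False))  # 16384, a ResNet-style 1x1 conv before batch norm
print(dense_params(2048, 1))                     # 2049, a single-output classification head
```
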

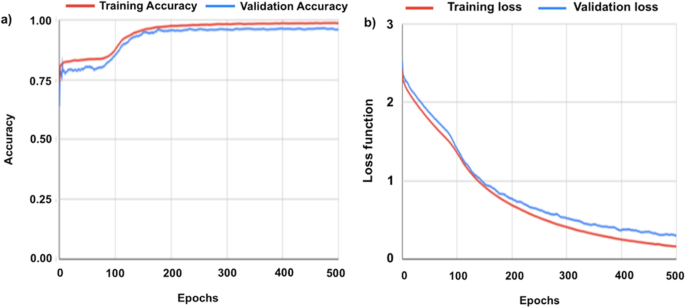

Accuracy (a) and loss function (b) curves for the Resnet101 model during training

As the results in Table 1 show, the VGG-16 model was rather imprecise on our dataset, eventually showing underfitting (Géron, 2022). We therefore opted for the ResNet and Inception families of models. Both families proved suitable for handling our dataset, with slightly less accurate results provided by ResNet50 and InceptionV1. The best results were obtained with ResNet101 and InceptionV4, which combined very high final accuracy with fast processing (of the order of \(\sim\) 1 second). Finally, the ResNet152 and InceptionResNetV2 models proved slightly too complex and slow for our case: they provided excellent results, but with longer response times (of the order of \(\sim\) 3-5 seconds). The response time depends both on the complexity (\(N_p\)) of the model and on the hardware used. In our work, GPUs were used for training and testing all the models, and the hardware conditions were kept the same for all models.

Based on these results, the ResNet101 model was chosen as the best solution for our application, in terms of both accuracy and reduced complexity. After fine-tuning, the accuracy obtained with this model reached nearly 99% on both the validation and the test datasets. The test dataset includes real target images that the models had never seen before, so it measures how well the models generalize what they learned during the training/validation phase.

The increase in accuracy and the decrease in the loss function during training of the ResNet101 model on the original geometry are shown in Fig. 6(a) and (b), respectively. The loss function quantifies the error between the output predicted by the model during training and the actual target values in the dataset. In our case, the loss is computed with the cross-entropy function and minimized with the Adam optimiser (Géron, 2022). The error is expected to decrease during training, which eventually leads to more accurate predictions on previously unseen data. The accuracy and loss trends, along with other control parameters, are typically monitored to evaluate the training process and avoid, e.g., under- or over-fitting (Géron, 2022). As Fig. 6(a) shows, the accuracy experiences a sudden step increase during the very first training epochs. An epoch is one complete pass of the model over the whole training dataset (Géron, 2022). The accuracy then increases smoothly with the epochs, until both the training and the validation accuracy reach an asymptotic value. These trends can generally be associated with proper training. Both curves reach high values that are close to each other, which may be interpreted as an absence of under-fitting. Similarly, Fig. 6(b) shows that the two loss curves are close to each other and decrease monotonically. This can be interpreted as an absence of over-fitting, and thus as a sign that the model was properly trained.
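
For a binary defective/non-defective output, the cross-entropy loss reduces to the binary cross-entropy sketched below: the mean of −[y·log p + (1−y)·log(1−p)] over the samples, where p is the predicted probability of class 1. The optimizer (Adam, in the text) only changes the weights so as to reduce this value; it does not change how the loss is defined. The clipping constant `eps` is an assumption added to keep the logarithms finite. Deep learning libraries ship their own numerically stable versions of this loss.

```python
import math

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean binary cross-entropy; p_pred are predicted probabilities of class 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)   # clip so that log() stays finite
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

print(binary_cross_entropy([1, 0], [0.99, 0.01]))  # small loss: confident and correct
print(binary_cross_entropy([1], [0.01]))           # large loss: confident and wrong
```

This asymmetry drives the decreasing curves in Fig. 6(b). Confidently wrong predictions are penalized heavily, so the loss keeps falling as the model becomes both more accurate and better calibrated.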

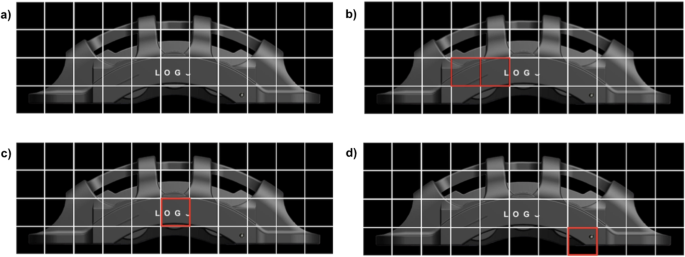

Final results of the analysis on the defect identification: (a) considered input geometry, (b), (c) and (d) identification of a scratch on the surface, partially missing logo, and painting defect respectively (highlighted in the red frames)

Finally, Fig. 7 shows an example output of the overall analysis: the considered input geometry (a), along with the defects (b), (c) and (d) identified by the developed protocol. Note that the different defects have been separated into several figures for illustrative purposes only; the analysis identifies all defects on one single image. In this work, a binary classification was performed on the considered brake calipers: the output of the models discriminates between defective and non-defective components based on the presence or absence of any of the considered defects. How strictly this discrimination is tuned ultimately depends on the user's requirements. The model outputs the probability (from 0 to 100%) that defects are present. Whether a part counts as defective therefore depends on the acceptance threshold the user chooses for the considered part (50% in our case), so stricter or looser criteria can be readily adopted. For particularly complex cases, multiple models may also be used concurrently for the same task, with the final output defined by cross-comparing their results. As a final remark, note that we adopted a binary classification based on the presence or absence of any defect. A further classification could also be implemented to distinguish among different types of defects on the brake calipers (multi-class classification).
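
The acceptance logic can be sketched as a threshold on the predicted defect probability, using the 50% value given in the text. Lowering the threshold gives a stricter criterion, because more parts are flagged. Averaging the probabilities of several models is shown as one simple way to cross-compare them. That averaging rule, and the choice to treat a probability exactly at the threshold as defective, are assumptions, not the authors' procedure.

```python
def classify(p_defect, threshold=0.5):
    """Flag a part as defective when the predicted defect probability reaches the threshold."""
    return "defective" if p_defect >= threshold else "acceptable"

def ensemble_probability(probabilities):
    """One simple cross-comparison of several models: average their defect probabilities."""
    return sum(probabilities) / len(probabilities)

print(classify(0.62))                                       # defective with the 50% threshold
print(classify(0.30))                                       # acceptable with the 50% threshold
print(classify(0.30, threshold=0.2))                        # stricter criterion: now defective
print(classify(ensemble_probability([0.40, 0.55, 0.70])))   # defective (mean is about 0.55)
```
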

Energy saving

Illustrative scenarios.

The proposed tools have not yet been implemented and tested within a real industrial production line. We therefore analyze three prospective scenarios to give a practical example of the potential for energy savings in an industrial context. Specifically, we consider a generic brake caliper assembly line formed by 14 stations, as outlined in Table 1 in the work by Burduk and Górnicka (2017). This assembly line features a critical inspection station dedicated to defect detection. Around this station we construct three distinct scenarios that compare traditional human-based control operations with a quality control system augmented by the proposed Machine Learning (ML) tools, namely:

First Scenario (S1): Human-Based Inspection. The traditional approach involves a human operator responsible for the inspection tasks.

Second Scenario (S2): Hybrid Inspection. This scenario introduces a hybrid inspection system in which our proposed ML-based automatic detection tool assists the human inspector. The ML tool analyzes the brake calipers and alerts the human inspector only when it cannot reliably classify a part, i.e., when its confidence that a defect is present or absent falls below a given threshold. This collaborative approach aims to combine the precision of ML algorithms with the experience of human inspectors, and can be seen as a possible transition between human-based and fully automated quality control.

Third Scenario (S3): Fully Automated Inspection. In the final scenario, we conceive a completely automated defect inspection station powered exclusively by our ML-based detection system. This setup eliminates the need for human intervention, relying entirely on the capabilities of the ML tools to identify defects.
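
The routing logic of the hybrid scenario S2 can be illustrated with a short Python sketch. Two points are assumptions not fixed by the text: the model's confidence is taken as max(p, 1 − p), where p is the predicted defect probability, and the 90% confidence level assumed for S2 in the Performance analysis is used as the default. The function name and its return labels are hypothetical.

```python
def route_caliper(p_defect: float,
                  confidence_threshold: float = 0.9,
                  accept_threshold: float = 0.5) -> str:
    """Decide who handles a caliper at the hybrid (S2) inspection station.

    Returns "human" when the model is not confident enough, otherwise the
    automatic verdict "reject" (defective) or "accept" (defect-free).
    """
    if not 0.0 <= p_defect <= 1.0:
        raise ValueError("p_defect must lie in [0, 1]")
    # Assumed confidence measure: probability assigned to the more likely class.
    confidence = max(p_defect, 1.0 - p_defect)
    if confidence < confidence_threshold:
        return "human"  # ambiguous case: alert the human inspector
    return "reject" if p_defect >= accept_threshold else "accept"


if __name__ == "__main__":
    for p in (0.97, 0.03, 0.60):
        print(p, route_caliper(p))
```

With `confidence_threshold=0` the function never defers to the human, mimicking the fully automated S3 station, while any value above 1 defers every part, as in the human-based S1 baseline.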

For simplicity, we assume that all the stations are aligned in series without buffers, which keeps our estimates free of unnecessary complications. To quantify the benefits of implementing ML-based quality control, we adopt the Overall Equipment Effectiveness (OEE) as the primary metric for the analysis. Following Nota et al. (2020), OEE is the product of three critical factors: Availability (the ratio of operating time to planned production time), Performance (the ratio of actual output to the theoretical maximum output), and Quality (the ratio of good units to the total units produced). Below, we detail how each of these factors is calculated for the three scenarios.
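
Because OEE is multiplicative, a relative change in any single factor scales the overall score by the same relative amount. A minimal Python helper makes this explicit; the function name and the factor values in the example are hypothetical and are not taken from the tables of this study.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall Equipment Effectiveness as the product A * P * Q (all factors in [0, 1])."""
    for name, value in (("availability", availability),
                        ("performance", performance),
                        ("quality", quality)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return availability * performance * quality


if __name__ == "__main__":
    # Hypothetical factor values, for illustration only.
    print(round(oee(0.90, 0.95, 0.96), 4))
```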

To calculate Availability (\(A\)), we consider an 8-hour work shift (\(t_{shift}\)) with 30 minutes of breaks (\(t_{break}\)), during which we assume production stops (except in the fully automated scenario), and 30 minutes of scheduled downtime (\(t_{sched}\)) required for machine cleaning and startup procedures. For unscheduled downtime (\(t_{unsched}\)), primarily due to machine breakdowns, we assume an average breakdown probability (\(\rho _{down}\)) of 5% for each machine, with an average repair time (\(t_{down}\)) of one hour per incident. Since Availability is the ratio of run time (\(t_{run}\)) to production time (\(t_{pt}\)), under these assumptions it can be calculated using the following formula:

\[
A = \frac{t_{run}}{t_{pt}}
\]

with the unscheduled downtime being computed as follows:

\[
t_{unsched} = \left[ 1-\left( 1-\rho _{down}\right) ^{N}\right] t_{down}
\]

where N is the number of machines in the production line and \(1-\left( 1-\rho _{down}\right) ^{N}\) represents the probability that at least one machine breaks down during the work shift. For simplicity, \(t_{down}\) is assumed constant regardless of the number of failures.

Table 2 presents the numerical values used to calculate Availability in the three scenarios. In the second scenario, integrating the automated station decreases this first factor of the OEE analysis, because the additional station for automated quality control (and its potential failure) increases the estimated unscheduled downtime. In the third scenario, the detrimental effect of the additional station and the benefit of the automated quality control, which removes the need to pause production during operator breaks, roughly balance each other; thus, the Availability of the third scenario is substantially equivalent to that of the first (baseline) scenario.
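
The unscheduled-downtime model above can be sketched in Python to show why one extra machine lowers Availability. The sketch uses the text's assumptions (\(\rho _{down}\) = 5% per machine, \(t_{down}\) = 60 min, a single repair time per shift regardless of the number of failures) together with the independence of machine failures implicit in \(1-(1-\rho _{down})^{N}\). The machine counts 13 and 14 are hypothetical values chosen only to show the effect of adding one station, and the function names are illustrative.

```python
def p_any_breakdown(n_machines: int, p_down: float = 0.05) -> float:
    """Probability that at least one of n independent machines breaks down during the shift."""
    if n_machines < 0 or not 0.0 <= p_down <= 1.0:
        raise ValueError("n_machines must be >= 0 and p_down must lie in [0, 1]")
    return 1.0 - (1.0 - p_down) ** n_machines


def unscheduled_downtime(n_machines: int, p_down: float = 0.05, t_down: float = 60.0) -> float:
    """Expected unscheduled downtime in minutes, with one repair time t_down per shift.

    As in the text, t_down is kept constant regardless of how many failures occur.
    """
    return p_any_breakdown(n_machines, p_down) * t_down


if __name__ == "__main__":
    for n in (13, 14):  # hypothetical line sizes: before and after adding one machine
        print(n, round(p_any_breakdown(n), 4), round(unscheduled_downtime(n), 2))
```

Each additional machine multiplies the probability of a failure-free shift by \(1-\rho _{down}\), so the expected downtime grows by roughly 1.5 minutes per shift in this example.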

The second factor of OEE, Performance (\(P\)), assesses the operational efficiency of the production equipment relative to its maximum designed speed (\(t_{line}\)). This evaluation accounts for reductions in cycle speed and for minor stoppages, collectively termed speed losses. These losses are difficult to estimate in advance, as performance is typically measured using historical data from the production line. For this analysis, we therefore assume a reasonable estimate of 60 seconds of speed losses (\(t_{losses}\)) per work cycle. Although this assumption may appear strong, it will become evident later that, within this analysis, and particularly regarding the impact of automated inspection on energy savings, Performance (like Availability) is only marginally influenced by the introduction of an automated inspection station. To account for the effect of automated inspection on the assembly line speed, we keep the time required by the other 13 stations (\(t^*_{line}\)) constant while varying the time allocated to visual inspection (\(t_{inspect}\)). According to Burduk and Górnicka (2017), the total operation time of the production line, excluding inspection, is 1263 seconds, with manual visual inspection taking 38 seconds. For the fully automated third scenario, we assume an inspection time of 5 seconds, which covers image acquisition, pre-processing, ML analysis, and post-processing. In the second scenario, instead, we add time to the purely automatic case to account for the parts on which the confidence of the ML model falls below 90% and which are therefore referred to the human inspector. We assume this happens once every 10 inspections, a conservative estimate that is higher than the rate we observed during model testing. This amounts to adding 10% of the human inspection time to the fully automated time (i.e., 5 s + 0.1 × 38 s = 8.8 s). Thus, when \(t_{losses}\) are known, Performance can be expressed as follows:

The calculated values for Performance are presented in Table 3. The change in inspection time has a negligible impact on this factor, since it does not affect the speed losses: to our knowledge, there is no clear evidence that introducing a new inspection station would alter them. Moreover, given the specific linear layout of the considered production line, the shorter inspection time only marginally increases the production speed. However, this approach could potentially bias our scenarios towards always favouring automation. To evaluate this hypothesis, a sensitivity analysis exploring production lines that operate at a faster pace is discussed in the next subsection.
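
The inspection-time arithmetic can be checked with a short script. The values 1263 s, 38 s, 5 s, the 1-in-10 referral rate, and the 60 s of per-cycle losses come from the text; the expression \(P = t_{line}/(t_{line} + t_{losses})\) with \(t_{line} = t^*_{line} + t_{inspect}\) is only an assumed, illustrative form and may differ from the formula used in the study. Constant and function names are hypothetical.

```python
T_LINE_STAR = 1263.0   # s, the 13 stations other than visual inspection
T_HUMAN = 38.0         # s, manual visual inspection
T_AUTO = 5.0           # s, fully automated inspection (acquisition to post-processing)
REFERRAL_RATE = 0.10   # share of parts referred to the human inspector in S2
T_LOSSES = 60.0        # s, assumed speed losses per work cycle

# Inspection time per scenario: S2 adds 10% of the human time to the automated time.
inspection_time = {
    "S1": T_HUMAN,
    "S2": T_AUTO + REFERRAL_RATE * T_HUMAN,
    "S3": T_AUTO,
}


def performance(t_line: float, t_losses: float = T_LOSSES) -> float:
    """Assumed form: ideal cycle time divided by ideal cycle time plus per-cycle losses."""
    return t_line / (t_line + t_losses)


if __name__ == "__main__":
    for scenario, t_inspect in inspection_time.items():
        t_line = T_LINE_STAR + t_inspect
        print(scenario, round(t_inspect, 1), round(t_line, 1), round(performance(t_line), 4))
```

Under this assumed form, Performance differs by only about 0.1 percentage points between S1 and S3, which is consistent with the negligible impact reported above for this slow serial line.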

The last factor, Quality (\(Q\)), quantifies the ratio of compliant products to the total products manufactured, effectively filtering out items that fail to meet the quality standards due to defects. Given the objective of our automated algorithm, we expect this factor to be significantly enhanced by the ML-based automated inspection station. To estimate it, we assume a constant defect probability (\(\rho _{def}\)) of 5% for the production line. Consequently, the number of defective products (\(N_{def}\)) during the work shift is \(N_{unit} \cdot \rho _{def}\), where \(N_{unit}\) represents the average number of units (brake calipers) assembled on the production line, defined as:

To quantify the defective units identified, we consider the inspection accuracy (\(\rho _{acc}\)): for human visual inspection, the typical accuracy is 80% (Sundaram & Zeid, 2023), while for the ML-based station we use the accuracy of our best model, i.e., 99%. Additionally, we account for the probability that the station mistakenly flags a defect-free caliper as defective, i.e., the false negative rate (\(\rho _{FN}\)); note that in this convention a "negative" outcome is a caliper classified as defective. The false negative rate is defined as

Lacking evidence that either type of mistake is more likely than the other, we assume that errors are evenly split between the two types for both human and automated inspection, i.e., we set \(\rho ^{H}_{FN} = \rho ^{ML}_{FN} = \rho _{FN} = 50\%\). Thus, the number of final compliant goods (\(N_{goods}\)), i.e., the calipers identified as quality-compliant, can be calculated as:

\[
N_{goods} = N_{unit} - N_{detect}
\]

where \(N_{detect}\) is the total number of units detected as defective, comprising TN (true negatives, i.e., correctly identified defective calipers) and FN (false negatives, i.e., defect-free calipers mistakenly identified as defective). The Quality factor can then be computed as:

\[
Q = \frac{N_{goods}}{N_{unit}}
\]

Table 4 summarizes the Quality factor calculation, showcasing the substantial improvement brought by the ML-based inspection station due to its higher accuracy compared to human operators.
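
The bookkeeping behind the Quality factor and the recall rate can be collected in one small Python class. It follows the relations stated in the text: \(N_{detect}\) comprises TN (defective calipers correctly flagged) and FN (defect-free calipers wrongly flagged), the compliant goods are the units not flagged, Q is their share of all units, and the escaped defects are \(N_{def} - TN\). How TN and FN are derived from \(\rho _{acc}\) and \(\rho _{FN}\) is not spelled out here, so they are plain inputs. Expressing the recall rate as escaped defects divided by the released goods is an assumption, and the class name and the counts in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QualityCounts:
    n_unit: float  # units produced during the shift
    n_def: float   # truly defective units
    tn: float      # defective units correctly flagged as defective
    fn: float      # defect-free units wrongly flagged as defective

    @property
    def n_detect(self) -> float:
        """All units flagged as defective (TN + FN)."""
        return self.tn + self.fn

    @property
    def n_goods(self) -> float:
        """Units released as quality-compliant."""
        return self.n_unit - self.n_detect

    @property
    def quality(self) -> float:
        """Quality factor: compliant goods over total units."""
        return self.n_goods / self.n_unit

    @property
    def escaped_defects(self) -> float:
        """Defective units that slip through inspection: N_def - TN."""
        return self.n_def - self.tn

    @property
    def recall_rate(self) -> float:
        """Assumed normalisation: share of released units that are defective."""
        return self.escaped_defects / self.n_goods


if __name__ == "__main__":
    # Hypothetical counts: 100 units, 5 defective, 4 defects caught, 1 false alarm.
    counts = QualityCounts(n_unit=100, n_def=5, tn=4, fn=1)
    print(counts.n_goods, counts.quality, counts.escaped_defects, round(counts.recall_rate, 4))
```

A more accurate station raises TN, which both increases Q (fewer wrongly discarded units when FN also drops) and reduces the defects that reach the client.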

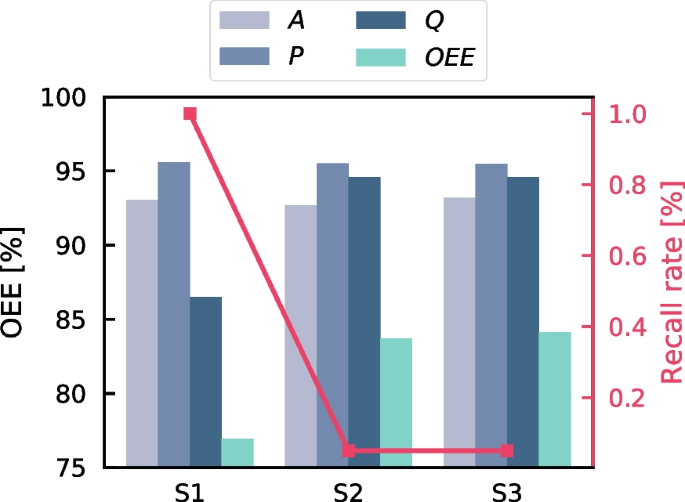

Overall Equipment Effectiveness (OEE) analysis for the three scenarios (S1: Human-Based Inspection, S2: Hybrid Inspection, S3: Fully Automated Inspection). Bar heights give the values (in %) of the three factors A: Availability, P: Performance, and Q: Quality, read on the left axis. The green bars indicate the OEE value, derived from the product of these three factors. The red line shows the recall rate, i.e., the probability that a defective product is rejected by the client, with values displayed on the right (red) axis.

Finally, we can determine the Overall Equipment Effectiveness by multiplying the three factors computed above. Additionally, we can estimate the recall rate (\(\rho _{R}\)), which reflects the rate at which a customer might reject products. It is derived from the difference between the total number of defective units, \(N_{def}\), and the number of units correctly identified as defective, TN, i.e., the defective brake calipers that may slip through the inspection process. Fig. 8 summarizes the outcomes of the three scenarios. Notably, the scenarios incorporating the automated defect detector, S2 and S3, significantly enhance the Overall Equipment Effectiveness, primarily through substantial improvements in the Quality factor. Of these, the fully automated inspection scenario, S3, emerges as a slightly superior option, thanks to the additional benefits of removing production stops during breaks and increasing the line speed. However, given the various assumptions required for this OEE study, these results should be interpreted as illustrative and primarily as a comparison with the baseline scenario. To assess the sensitivity of the outlined scenarios to the adopted assumptions, we investigate the influence of the line speed and of human accuracy on the results in the next subsection.

Sensitivity analysis

The scenarios described above are illustrative and based on several simplifying hypotheses. One such hypothesis is that the production chain operates entirely in series, with each station awaiting the workpiece from the preceding station, which results in a relatively slow production rate (1263 seconds per cycle, excluding inspection). This setup can differ considerably from reality, where slower operations can be accelerated by installing additional machines in parallel to balance the workload and enhance productivity. Moreover, we used a literature value of 80% for the accuracy of the human visual inspector, as reported by Sundaram and Zeid (2023). However, this accuracy can vary significantly with factors such as the inspector's experience and the defect type.

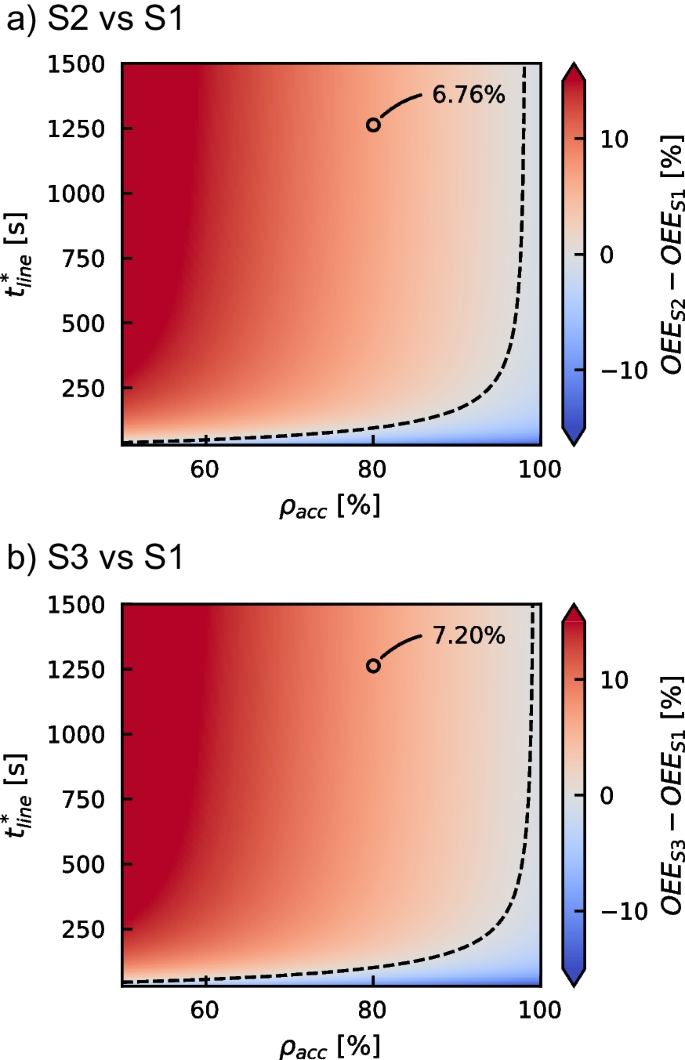

Effect of the assembly time of the stations (excluding visual inspection), \(t^*_{line}\), and of the human inspection accuracy, \(\rho _{acc}\), on the OEE analysis. Subplot (a) shows the difference between scenario S2 (Hybrid Inspection) and the baseline scenario S1 (Human Inspection), while subplot (b) displays the difference between scenario S3 (Fully Automated Inspection) and the baseline. Red indicates the values of \(t^*_{line}\) and \(\rho _{acc}\) where integrating automated inspection stations can significantly improve OEE, and blue where it may lower the score. The dashed lines denote the break-even points, and the circled points mark the values used in the “Illustrative scenarios” subsection.

A sensitivity analysis on these two factors was conducted to address these variations. The assembly time of the stations (excluding visual inspection), \(t^*_{line}\), was varied from 60 s to 1500 s, and the human inspection accuracy, \(\rho _{acc}\), ranged from 50% (akin to a random guesser) to 100% (an ideal visual inspector), while all other variables were kept fixed.
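
The sweep itself is a plain two-parameter grid evaluation. The sketch below assumes a user-supplied function that returns the OEE difference between an ML-based scenario and the baseline for given \(t^*_{line}\) and \(\rho _{acc}\); the full OEE model is not reproduced, so `toy_delta` in the example is a hypothetical placeholder (ML accuracy minus human accuracy, ignoring line speed) and does not reproduce Fig. 9. The helper names are illustrative, and `linspace` is a small standard-library stand-in for `numpy.linspace`.

```python
from typing import Callable, Dict, List, Tuple

Grid = Dict[Tuple[float, float], float]


def linspace(start: float, stop: float, num: int) -> List[float]:
    """Evenly spaced values from start to stop, both included."""
    if num < 2:
        raise ValueError("num must be at least 2")
    step = (stop - start) / (num - 1)
    return [start + i * step for i in range(num)]


def sweep(delta_oee: Callable[[float, float], float],
          t_line_values: List[float],
          acc_values: List[float]) -> Grid:
    """Evaluate OEE(scenario) - OEE(baseline) at every (t*_line, rho_acc) pair."""
    return {(t, acc): delta_oee(t, acc) for t in t_line_values for acc in acc_values}


def classify(grid: Grid, tol: float = 1e-9) -> Dict[str, int]:
    """Count grid points where automation improves (> 0), worsens (< 0) or breaks even."""
    counts = {"improves": 0, "worsens": 0, "breakeven": 0}
    for value in grid.values():
        if value > tol:
            counts["improves"] += 1
        elif value < -tol:
            counts["worsens"] += 1
        else:
            counts["breakeven"] += 1
    return counts


def toy_delta(t_line_star: float, human_acc: float) -> float:
    """Hypothetical placeholder model: gain = ML accuracy (99%) minus human accuracy."""
    return 0.99 - human_acc


if __name__ == "__main__":
    t_values = linspace(60.0, 1500.0, 5)  # s, range used in the text
    acc_values = linspace(0.5, 1.0, 6)    # human accuracy range used in the text
    print(classify(sweep(toy_delta, t_values, acc_values)))
```

Replacing `toy_delta` with a function that evaluates the full Availability, Performance and Quality chain for both scenarios would yield the kind of difference map discussed below, with the break-even line where the difference changes sign.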

The OEE gains of the two scenarios employing ML-based inspection relative to the baseline scenario are displayed in the two maps of Fig. 9. As the figure shows, owing to the high accuracy and rapid response of the proposed automated inspection station, the region where the assembly line may benefit from energy savings (red shades) is significantly larger than the region where introducing the station could degrade performance (blue shades). However, automated inspection could also be superfluous or even detrimental when both human accuracy and assembly speed are very high, i.e., when the workflow is already highly accurate. In these cases, and particularly for very fast production lines, a short quality-control time, and not only accuracy, can be expected to be key to the optimization.

Finally, it is important to remark that the blue region (the area below the dashed break-even lines) might expand if the accuracy of the neural networks for defect detection is lower once they are deployed on a real production line. This would call for new rounds of active learning and an increase in the proportion of real images in the database, to eventually enhance the performance of the ML model.

Conclusions