Expected SARSA in Reinforcement Learning

Prerequisites: SARSA

SARSA and Q-Learning are reinforcement learning algorithms that use the Temporal Difference (TD) update to improve the agent’s behaviour. Expected SARSA is an alternative technique for improving the agent’s policy. It is very similar to SARSA and Q-Learning, and differs in the target it uses to update the action-value function.

Expected SARSA (State-Action-Reward-State-Action) is a reinforcement learning algorithm used for making decisions in an uncertain environment. In its basic form it is an on-policy control method, meaning that it evaluates and improves the same policy that it follows while acting.

In Expected SARSA, the agent estimates the Q-value (expected reward) of each action in a given state, and uses these estimates to choose which action to take in the next state. The Q-value is defined as the expected cumulative reward that the agent will receive by taking a specific action in a specific state, and then following its policy from that state onwards.

The main difference between SARSA and Expected SARSA is in how they form the update target for the Q-value. SARSA uses the Q-value of the single next action that the agent actually selects in the next state, so its target depends on one sampled action. (Q-learning, by contrast, uses the maximum Q-value over the actions in the next state.) Expected SARSA, on the other hand, takes a weighted average of the Q-values of all the possible actions in the next state. The weights are the probabilities of selecting each action in the next state, according to the current policy.

The steps of the Expected SARSA algorithm are as follows:

1. Initialize the Q-value estimates for each state-action pair to some initial value.

2. Repeat until convergence or a maximum number of iterations:
   a. Observe the current state.
   b. Choose an action according to the current policy, based on the estimated Q-values for that state.
   c. Observe the reward and the next state.
   d. Update the Q-value estimates for the current state-action pair, using the Expected SARSA update rule.
   e. Update the policy for the current state, based on the estimated Q-values.
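The steps above can be sketched as a small tabular loop. This is only an illustration under stated assumptions: the five-state corridor environment, the `step`, `policy_probs` and `train` helpers, and all hyperparameter values are hypothetical and are not taken from the original article's code.

```python
import random

N_STATES, GOAL = 5, 4      # hypothetical corridor: states 0..4, goal at state 4
ACTIONS = (0, 1)           # 0 = move left, 1 = move right

def step(s, a):
    """Toy dynamics (an assumption): reward -1 per move, episode ends at GOAL."""
    s_next = max(0, s - 1) if a == 0 else min(GOAL, s + 1)
    return s_next, -1.0, s_next == GOAL

def policy_probs(q_row, eps):
    """Epsilon-greedy action probabilities, splitting ties among the best actions."""
    best = max(q_row)
    n_best = sum(1 for v in q_row if v == best)
    return [eps / len(q_row) + ((1 - eps) / n_best if v == best else 0.0)
            for v in q_row]

def train(episodes=500, alpha=0.5, gamma=1.0, eps=0.1, seed=0):
    rng = random.Random(seed)
    # Step 1: initialise every Q-value estimate to zero.
    Q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False                                    # (a) observe the state
        while not done:
            probs = policy_probs(Q[s], eps)                   # (b) act from the policy
            a = rng.choices(ACTIONS, weights=probs)[0]
            s_next, r, done = step(s, a)                      # (c) reward, next state
            if done:
                expected_q = 0.0                              # terminal state has value 0
            else:
                next_probs = policy_probs(Q[s_next], eps)
                expected_q = sum(p * q for p, q in zip(next_probs, Q[s_next]))
            Q[s][a] += alpha * (r + gamma * expected_q - Q[s][a])   # (d) update
            s = s_next   # (e) the policy is implicit: policy_probs re-reads Q
    return Q

Q = train()
greedy = [max(ACTIONS, key=lambda a: Q[s][a]) for s in range(GOAL)]
```

In this sketch the policy is never stored separately; it is recomputed from the Q-table whenever it is needed, which is one simple way to keep step (e) consistent with the latest estimates.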

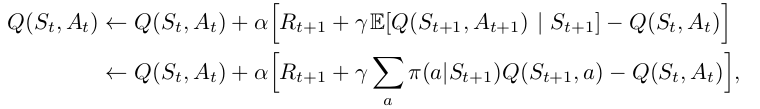

The Expected SARSA update rule is as follows:

Q(s, a) = Q(s, a) + α [R + γ ∑ π(a’|s’) Q(s’, a’) – Q(s, a)]

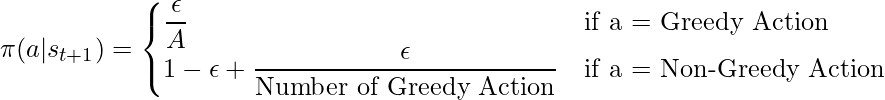

In this update rule:

- Q(s, a) is the Q-value estimate for state s and action a.
- α is the learning rate, which determines the weight given to new information.
- R is the reward received for taking action a in state s and transitioning to the next state s’.
- γ is the discount factor, which determines the importance of future rewards.
- π(a’|s’) is the probability of selecting action a’ in state s’, according to the current policy.
- Q(s’, a’) is the estimated Q-value for the next state-action pair.

Expected SARSA is a useful algorithm for reinforcement learning in scenarios where the agent must make decisions in uncertain and changing environments. Its ability to estimate the expected value of the next state over all actions, taking into account the current policy, makes it a useful tool for online decision-making tasks.

We know that SARSA is an on-policy technique and Q-learning is an off-policy technique, but Expected SARSA can be used either on-policy or off-policy. This is where Expected SARSA is much more flexible than both of these algorithms. Let’s compare the action-value update of all three algorithms and find out what is different in Expected SARSA.
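To make the update rule concrete, here is a single update worked through with made-up numbers. The function name `expected_sarsa_update` and every value below are assumptions chosen for illustration only.

```python
def expected_sarsa_update(q_sa, alpha, reward, gamma, pi_next, q_next):
    """Return the new Q(s, a) after one Expected SARSA update.

    pi_next[i] is pi(a_i | s') and q_next[i] is Q(s', a_i).
    """
    expected_next = sum(p * q for p, q in zip(pi_next, q_next))
    return q_sa + alpha * (reward + gamma * expected_next - q_sa)

# Hypothetical numbers: two actions are available in the next state s'.
new_q = expected_sarsa_update(
    q_sa=1.0, alpha=0.5, reward=1.0, gamma=0.9,
    pi_next=[0.9, 0.1], q_next=[2.0, 0.0],
)
# Expected next value: 0.9 * 2.0 + 0.1 * 0.0 = 1.8
# Target: 1.0 + 0.9 * 1.8 = 2.62, so Q(s, a) moves halfway from 1.0 to 2.62.
```

With these numbers the estimate moves from 1.0 to approximately 1.81, halfway towards the target because α = 0.5.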

We see that Expected SARSA takes the weighted sum of the values of all possible next actions, weighted by the probability of taking each action. If the target policy is greedy with respect to the action values, this equation reduces to Q-Learning. Otherwise, Expected SARSA is on-policy and computes the expected value over all next actions, rather than using a single sampled action as SARSA does.

Keeping the theory and the formulae in mind, let us compare all three algorithms with an experiment. We shall use the Cliff Walking environment provided by the gym library.

Code: Python code to create the class Agent which will be inherited by the other agents to avoid duplicate code.

Code: Python code to create the SARSA Agent.

Code: Python code to create the Q-Learning Agent.

Python code to create an environment and Test all the three algorithms.

Output:

Conclusion: We have seen that Expected SARSA performs reasonably well in certain problems. It considers all possible next actions, weighted by their probabilities, when forming its update target. The fact that Expected SARSA can be used either on-policy or off-policy is what makes this algorithm so flexible.

RL-Coursera

Implementations of Coursera Reinforcement Learning Specialization.

1. Fundamentals of Reinforcement Learning

Week 2: Markov Decision Processes

- Assignment: K-armed Bandits and Exploration/Exploitation

Week 3: Value Functions & Bellman Equations

- No assignment

Week 4: Dynamic Programming

- Assignment: Optimal Policies with Dynamic Programming

2. Sample-based Learning Methods

Week 2: Monte Carlo Methods for Prediction & Control

Week 3: Temporal Difference Learning Methods for Prediction

- Assignment: Policy Evaluation with Temporal Difference Learning

Week 4: Temporal Difference Learning Methods for Control

- Assignment: Q-learning and Expected Sarsa

Week 5: Planning, Learning & Acting

- Assignment: Dyna-Q and Dyna-Q+

3. Predictions and Control with Function Approximation

Week 1: On-policy Prediction with Approximation

- Assignment: Semi-gradient TD(0) with State Aggregation

Week 2: Constructing Features for Prediction

- Assignment: Semi-gradient TD with a Neural Network

Week 3: Function Approximation and Control

- Assignment: Episodic Sarsa with Function Approximation and Tile-coding

Week 4: Policy Gradient

- Assignment: Average Reward Softmax Actor-Critic with Tile-coding

4. A Complete Reinforcement Learning System (Capstone)

Lunar Lander Projects

Assignment: Build the Lunar Lander Agent

Assignment: Parameter Study

Sarsa, expected sarsa and Q-learning on the OpenAI taxi environment

In this post, we’ll see how three commonly-used reinforcement algorithms - sarsa, expected sarsa and q-learning - stack up on the OpenAI Gym Taxi (v2) environment.

Note: this post assumes that the reader is familiar with basic RL concepts. A good resource for learning these is the textbook by Sutton and Barto (2018), which is freely available online.

Sarsa, Expected Sarsa and Q-Learning

So, what are these algorithms?

Let’s say our agent is currently at state s, and takes action a. Our action-value function, q(s,a), gives us an estimate for how good this was for the agent. Initially this is set to 0, since our agent doesn’t know anything yet. We’ll need to update this function as our agent learns more about its environment.

The algorithms are similar, in that they all update q(s,a) after every time step. They do this by using information about the next reward $R_{t+1}$, and the action-value function for the next state and action, q(s’,a’).

The algorithms differ in exactly how q(s,a) is updated. Each algorithm agrees that the agent ends up at s’, but disagrees as to how to calculate the value of the next state, q(s’,a’).

According to Sarsa, once the agent gets to s’, it will follow its policy, $\pi$. Knowing this information, we can sample an action a’ from $\pi$ at state s’, and use q(s’, a’) as the estimate of the value of the next state:

$$ q(s,a) = q(s,a) + \alpha \left[ R_{t+1} + \gamma q(s', a') - q(s,a) \right] $$

Expected Sarsa, on the other hand, reasons that rather than sampling from $\pi$ to pick an action a’, we should just calculate the expected value of s’. This way, the estimate of how good s’ is won’t fluctuate around, like it would when sampling an action from a distribution, but rather remain steady around the “average” outcome for the state.

$$ q(s,a) = q(s,a) + \alpha \left[ R_{t+1} + \gamma \sum_{a'} \pi (a' | s') q(s', a') - q(s,a) \right] $$

Q-learning takes a different approach. It assumes that when the agent is in state s’, it will take the action a’ that it thinks is the best action. In other words, it will take the action a’ that maximises q(s’, a’).

$$ q(s,a) = q(s,a) + \alpha \left[ R_{t+1} + \gamma \max_{a'} q(s', a') - q(s,a) \right] $$
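The three updates differ only in the bracketed target, which a short sketch makes easy to see. The function `td_target`, its argument names and the numbers below are hypothetical, not the post's own code.

```python
def td_target(method, r, gamma, q_next, pi_next, a_next=None):
    """Return R + gamma * (estimate of the value of s') for the chosen method.

    q_next[i] is q(s', a_i); pi_next[i] is pi(a_i | s');
    a_next is the action sampled at s' and is only used by Sarsa.
    """
    if method == "sarsa":
        bootstrap = q_next[a_next]                  # value of the sampled action
    elif method == "expected_sarsa":
        bootstrap = sum(p * q for p, q in zip(pi_next, q_next))  # policy average
    elif method == "q_learning":
        bootstrap = max(q_next)                     # value of the best action
    else:
        raise ValueError(f"unknown method: {method}")
    return r + gamma * bootstrap

# Made-up example: two actions in s', an equiprobable policy, reward -1.
q_next, pi_next = [1.0, 3.0], [0.5, 0.5]
sarsa = td_target("sarsa", -1.0, 1.0, q_next, pi_next, a_next=0)
expected = td_target("expected_sarsa", -1.0, 1.0, q_next, pi_next)
qlearn = td_target("q_learning", -1.0, 1.0, q_next, pi_next)
```

Here the Sarsa target depends on which action happened to be sampled, the Expected Sarsa target sits at the policy-weighted average, and the Q-learning target is the most optimistic of the three.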

So how differently do these algorithms perform? Let’s find out by using the Taxi environment in the OpenAI Gym.

OpenAI Gym Taxi Environment

A taxi in its 5x5 environment

In this environment, the agent operates a taxi that must pick up and drop off passengers in a 5x5 grid.

The taxi can move in four directions: north, south, east and west. Passengers are picked up from one of four locations and then must be dropped off at their destination.

The taxi can take the following actions (numbering same as in the environment):

- 0: move south

- 1: move north

- 2: move east

- 3: move west

- 4: pick up passenger

- 5: drop off passenger

The agent receives a reward of -1 on every timestep, and an additional reward of +20 for delivering the passenger. Attempting to pick up or drop off the passenger illegally gives a reward of -10.

An episode is finished either when a passenger completes their journey or after 200 time steps, whichever is sooner.

Creating the agent

First thing to do is import all the libraries we’ll need:

We’re going to create a class called Agent. The idea is that we can specify a single parameter, method, and use that to determine which algorithm the agent uses to learn.

Our class starts like this.

Let’s look at the __init__() method first. In this method we’ll initialise environment parameters, like the number of squares the taxi can go in, or the number of actions the taxi can take. We’ll also set up the initial values for parameters $\alpha$, $\gamma$, $\epsilon$, initialise our table of q-values and create our policy, which starts off as the equiprobable random policy.

Oh, and we’ll also define which environment we’d like to use. That’s what the line self.env = gym.make('Taxi-v2') does below.

Next we’ll create our function that runs an episode of the environment. I’ll put the code up here, then run through it below.

s = self.env.reset() is used to start a new episode. It resets the environment, placing the taxi in a random position and randomising the passenger/dropoff locations. The state that we start in is saved under the variable s.

Next we initialise our variables to update. The episode will end when done is set to True. The variable r_sum holds a running reward sum from the episode, and n_steps is used to track when we are getting close to the end of the episode. We also initialise gam = self.gamma. We want to decay gamma, the discount factor for future rewards, towards the end of the episode once we don’t have many time steps left.

Now we come to the actual episode itself. In each time step, we sample an action from our policy pi: a = np.argmax(np.cumsum(self.pi[s,:]) > np.random.random()), and then use s_prime,r,done,info = self.env.step(a) to make the action, collect the reward, record the next state and check if the episode is finished (note: s_prime refers to s’).
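The cumulative-sum trick used to sample an action can be easier to follow when written out step by step. The sketch below uses only the standard library rather than NumPy, and the function `sample_action` and its optional argument `u` are hypothetical names introduced for illustration.

```python
import random
from itertools import accumulate

def sample_action(probs, u=None):
    """Return the first index whose cumulative probability exceeds u.

    With u drawn uniformly from [0, 1), index i is returned with
    probability probs[i], as in the argmax-of-cumsum one-liner.
    """
    if u is None:
        u = random.random()
    for action, cumulative in enumerate(accumulate(probs)):
        if cumulative > u:
            return action
    # Guard against floating-point round-off in the cumulative sum.
    return len(probs) - 1

# A policy row with three actions; u is fixed here to show the mapping.
row = [0.1, 0.2, 0.7]
picks = [sample_action(row, u) for u in (0.05, 0.25, 0.95)]
```

The cumulative probabilities split the unit interval into one segment per action, with each segment as wide as that action's probability, so a uniform draw lands in a segment with exactly that probability.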

At this point we update the estimate for q(s,a) and how we update it differs according to what method we are using. These lines of code just implement the equations from the first part of the post - not super interesting, really, but of great potential impact for learning our policy.

Next, it’s time to update our policy pi. That’s this bit of code:

First we find what the best action is for our current state. That is, if the agent is in state s, what a gives the highest q(s,a)? This is what best_a represents above.

A printout of some states and their q-values. The best action is the one that maximises q(s,a). If there’s a tie, it’s better to split randomly.

Once we know the best action, we update the policy. We want to pick the best action with $ 1 - \epsilon$ chance and choose randomly with $ \epsilon$ chance. The if/else statement implements these probabilities.
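One way to write such a policy update for a single state is sketched below. It is an assumption about how the probabilities could be assigned, not the post's own code: the exploratory $\epsilon$ is spread uniformly over all actions, the remaining $1 - \epsilon$ is split evenly among tied best actions, and the name `epsilon_greedy_row` is hypothetical.

```python
def epsilon_greedy_row(q_row, eps):
    """Return action probabilities for one state under an epsilon-greedy policy."""
    n_actions = len(q_row)
    best_value = max(q_row)
    best_actions = [a for a, v in enumerate(q_row) if v == best_value]
    # Every action receives an equal share of the exploration probability.
    row = [eps / n_actions] * n_actions
    # The greedy probability mass is split evenly among tied best actions.
    for a in best_actions:
        row[a] += (1 - eps) / len(best_actions)
    return row

# Actions 1 and 2 tie for the highest q-value in this made-up state.
row = epsilon_greedy_row([0.0, 2.0, 2.0, -1.0], eps=0.2)
```

Splitting ties evenly avoids a systematic bias towards whichever tied action happens to come first in the table.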

Next we decay gamma a bit, but only if we’re close to the end of the episode. This is because with just a few time periods left, there should be more incentive for short-term behavior because long-term actions don’t have time to pay off. Finally, the reward is added to the reward sum and the state rolled forward.

Training the agent

Here’s the code for training the agent, where we run lots of episodes in which the agent attempts to maximise its reward. We know that the Taxi environment will not change over time, so if the policy and action-value functions stabilise there isn’t any problem for us.

We start by initialising r_sums , a container that will hold the reward sum r_sum of every episode. Then we run the episode, and decrease epsilon and alpha after each episode. Why?

Decreasing epsilon (our exploration parameter) over time makes sense. Each episode, the agent becomes more and more confident what good and bad choices look like. Decreasing epsilon is required if we seek to maximise our reward sum, since exploratory actions typically aren’t optimal actions, and besides, we can be fairly sure what are good actions and bad actions by this point anyway. There is a similar argument for decreasing alpha (the learning rate) over time - we don’t want the estimates to jump around too much once we are confident in their validity.
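A decay schedule of this kind might look like the sketch below. The multiplicative form, the decay rates and the lower bounds are all assumptions chosen for illustration; the post does not state the values it used.

```python
def decayed(value, rate, floor):
    """Shrink value by a constant factor, but never below floor."""
    return max(floor, value * rate)

eps, alpha = 1.0, 0.5        # hypothetical starting values
for episode in range(1000):
    # An episode would be run here with the current eps and alpha.
    eps = decayed(eps, rate=0.99, floor=0.01)       # explore less over time
    alpha = decayed(alpha, rate=0.999, floor=0.05)  # smaller steps over time
```

Keeping a small floor on both parameters is a common precaution so that the agent never stops exploring or learning entirely.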

Now we can create our agents and train them.

Which method is best?

After training our agents we can compare their performance to each other. The criterion for comparison we’ll use is the best 100-episode average reward for each agent.

Let’s plot the 100-episode rolling cumulative reward over time:
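A rolling average over a list of episode reward sums can be computed as in the sketch below. The function name `rolling_mean` is hypothetical, and the short input list is made up so that the result is easy to check by hand.

```python
def rolling_mean(values, window=100):
    """Return the mean of each run of `window` consecutive values."""
    out, running = [], 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]   # drop the value leaving the window
        if i >= window - 1:
            out.append(running / window)
    return out

# Tiny example with a window of 2 instead of 100.
averages = rolling_mean([1, 2, 3, 4, 5], window=2)
best = max(averages)
```

Applied to the reward sums of a training run with window=100, the maximum of the result would give the best 100-episode average used as the comparison criterion.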

The green line (sarsa) seems to be below the others fairly consistently, but it’s close.

Looks like the Sarsa agent tends to train slower than the other two, but not by a whole lot. At the end of 200000 episodes, however, it’s Expected Sarsa that’s delivered the best reward:

The best 100-episode streak gave this average return. Expected Sarsa comes out on top, but all three agents are close.

A 100-episode average reward of 9.50 isn’t too bad at all. In fact, it would put us on the leaderboard for this problem!

Viewing the policy

It can be quite tricky to understand the difference between agents. Even if one agent does better than the other two, it isn’t straightforward to see the reasons why.

Visualising the policy is one way to find out. Here’s some code to render the environment and to see how the agent behaves (credit to these guys).

Below we demonstrate some differences in policy between the expected sarsa agent and the sarsa agent. While the expected sarsa agent has learned the optimal policy, the sarsa agent hasn’t yet - but it’s not far off. (Note: these policies are before the agent is fully trained (after around 15000 episodes)).

Expected Sarsa

Conclusion

The three agents seemed to perform around the same on this task, with Sarsa being a little worse than the other two. This result doesn’t always hold: on some tasks (see “The Cliff” in Sutton and Barto (2018)) they perform very differently, but here the results were similar. It would be interesting to see how a planning / n-step algorithm would perform on this task.

Expected Sarsa

Expected Sarsa is like Q-learning but instead of taking the maximum over next state-action pairs, we use the expected value, taking into account how likely each action is under the current policy.

$$Q\left(S_{t}, A_{t}\right) \leftarrow Q\left(S_{t}, A_{t}\right) + \alpha\left[R_{t+1} + \gamma\sum_{a}\pi\left(a\mid{S_{t+1}}\right)Q\left(S_{t+1}, a\right) - Q\left(S_{t}, A_{t}\right)\right] $$

Except for this change to the update rule, the algorithm otherwise follows the scheme of Q-learning. It is more computationally expensive than Sarsa but it eliminates the variance due to the random selection of $A_{t+1}$.

Source: Sutton and Barto, Reinforcement Learning, 2nd Edition

Reinforcement Learning Algorithms: Expected SARSA

In this post, we’ll extend our toolset for Reinforcement Learning by considering a new temporal difference (TD) method called Expected SARSA.

In my course, “Artificial Intelligence: Reinforcement Learning in Python”, you learn about SARSA and Q-Learning, two popular TD methods. We’ll see how Expected SARSA unifies the two.

Review of SARSA and Q-Learning

Let’s begin by reviewing the regular TD methods covered in my Reinforcement Learning course.

Your job in a reinforcement learning task is to program an agent (characterized by a policy) that interacts with an environment (characterized by state transition dynamics). A picture of this process (more precisely, this article discusses a Markov Decision Process) is shown below:

The agent reads in a state \( S_t \) and decides what action \( A_t \) to perform based on the state. This is called the policy and can be characterized by a probability distribution, \( \pi( A_t | S_t) \).

As the agent does this action, it changes the environment which results in the next state \( S_{t+1} \). A reward signal \( R_{t+1} \) is also given to the agent.

The goal of an agent is to maximize its sum of future rewards, called the return, \( G_t \). The discounted return is defined as:

$$ G_t \dot{=} R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots + \gamma^{T - t - 1} R_T $$

One important relationship we can infer from the definition of the return is:

$$ G_t = R_{t + 1} + \gamma G_{t+1} $$
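This recursion is also the standard way to compute returns in code, working backwards from the end of an episode. The sketch below is illustrative only; the function name `discounted_returns` and the reward values are made up.

```python
def discounted_returns(rewards, gamma):
    """Compute G_t for every time step of one finished episode.

    rewards[t] holds R_{t+1}, the reward received after acting at time t.
    """
    returns = [0.0] * len(rewards)
    g = 0.0                                  # the return after the final step is 0
    for t in reversed(range(len(rewards))):
        g = rewards[t] + gamma * g           # G_t = R_{t+1} + gamma * G_{t+1}
        returns[t] = g
    return returns

# Three rewards of 1 with gamma = 0.5.
returns = discounted_returns([1.0, 1.0, 1.0], gamma=0.5)
```

For this episode the final step has return 1, the step before it has 1 + 0.5 × 1 = 1.5, and the first step has 1 + 0.5 × 1.5 = 1.75, matching the direct sum 1 + 0.5 + 0.25.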

Since both the policy and environment transitions can be random, the return can also be random. Because of this, we can’t maximize “the” return (since there are many possible values the return can ultimately be), but only the expected return.

Taking the expected value of both sides and conditioning on the state \( S_t \), we arrive at the Bellman Equation:

$$ V_\pi(s) = E_\pi[ R_{t + 1} + \gamma V_\pi(S_{t+1}) | S_t = s] $$

If we condition on both the state \( S_t \) and the action \( A_t \), we get the Bellman Equation for the action-value:

$$ Q_\pi(s, a) = E_\pi \left[ R_{t + 1} + \gamma \sum_{a'} \pi(a' | S_{t+1}) Q_\pi(S_{t+1}, a') | S_t = s, A_t = a \right] $$

This will be the important relationship to consider when we learn about Expected SARSA.

Let’s go back a few steps.

The expected return given that the agent is in state \( S_t \) and performs action \( A_t \) at time \( t \) is given by the Q-table. Specifically:

$$ Q_\pi(s, a) = E_\pi[ G_t | S_t = s, A_t = a] $$

The Q-table can be used to determine what the best action will be since we can just choose whichever action \( a \) maximizes \( Q(s,a) \).

The problem is, we do not know \( Q(s,a) \)! Furthermore, we cannot calculate it directly since the expected value requires summing over the transition distribution \( p(s', r | s, a) \).

Generally speaking, this distribution is unknown (imagine, for example, building a self-driving car).

The full Monte Carlo approach is to estimate the action-value using the sample average. i.e.

$$ Q_\pi(s, a) \approx \frac{1}{N}\sum_{i=1}^{N} G^{(i)}(s,a) $$

As you recall, it’s possible to convert the formula for the sample mean into a recursive update – something that looks a bit like gradient descent.

This is convenient so that we can update \(Q\) each time we receive a new sample without having to sum up all \(N\) values each time. If we did that, our computations would get longer and longer as \(N\) grows!

The recursive update looks like this:

$$ Q_\pi^{(N)}(s, a) \leftarrow Q_\pi^{(N-1)}(s, a) + \alpha \left[ G^{(N)}(s,a) - Q_\pi^{(N-1)}(s, a) \right] $$

This shows us how to get the \(N\)th estimate from the \(N-1\)th estimate. By setting \( \alpha = \frac{1}{N} \), we get exactly the sample mean (this was derived in the course).
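The equivalence with the sample mean is easy to check numerically. In the sketch below, the function name `incremental_mean` and the sample returns are made up for illustration.

```python
def incremental_mean(samples):
    """Apply Q <- Q + (1/N) * (G - Q) once for each new sample G."""
    q = 0.0
    for n, g in enumerate(samples, start=1):
        q += (1.0 / n) * (g - q)
    return q

sampled_returns = [2.0, 4.0, 9.0]            # hypothetical returns for one (s, a)
recursive_estimate = incremental_mean(sampled_returns)
direct_mean = sum(sampled_returns) / len(sampled_returns)
```

Both quantities agree up to floating-point round-off, but the recursive form only needs the previous estimate and the sample count, not the full history of returns.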

The Temporal Difference approach uses “bootstrapping”, where we use existing values in \(Q_\pi(s, a)\) to estimate the expected return.

The SARSA update looks as follows:

$$ Q_\pi^{(N)}(s, a) \leftarrow Q_\pi^{(N-1)}(s, a) + \alpha \left[ r + \gamma Q_\pi^{(N-1)}(s', a') - Q_\pi^{(N-1)}(s, a) \right] $$

Essentially, we have replaced the old “target”:

$$ G(s,a) $$

with a new “target”:

$$ r + \gamma Q(s', a') $$

This should remind you of the right-hand side of the Bellman Equation for Q, with all the expected values removed.

What is the significance of this?

With Monte Carlo, we would have to wait until the entire episode is complete in order to compute the return (since the return is the sum of all future rewards).

For very long episodes, this means that it will take a long time before any updates are made to your agent.

For infinitely long episodes, it’s not possible to compute the return at all! (You’d have to wait an infinite amount of time to get an answer.)

TD learning uses the immediate reward \( r \) and “estimates the rest” using the existing value of \( Q \).

Therefore, your agent learns on each step, rather than waiting until the end of an episode.

The Q-Learning “target” is:

$$ r + \gamma \max_{a'} Q(s', a') $$

The main difference between SARSA and Q-Learning is that SARSA is on-policy while Q-Learning is off-policy.

This is because Q-Learning always uses the max, even when that action wasn’t taken. SARSA uses action \( a' \) – whichever action was actually chosen for the next step. Q-Learning learns the value of the policy in which you take the max (even though your behavior policy may be different – e.g. epsilon-greedy). SARSA learns the value of the behavior policy.

Expected SARSA

For Expected SARSA, the target is:

$$ r + \gamma \sum_{a'} \pi( a' | s') Q(s', a') $$

This can also be written as:

$$ r + \gamma E_\pi [Q(s', a') | s'] $$

Thus, Expected SARSA uses the expected action-value for the next state \( s' \), over all actions \( a' \), weighted according to the policy distribution.

Like regular SARSA, this should remind you of the Bellman Equation for Q (even more so than regular SARSA since it now properly sums over the policy distribution).

You can think of regular SARSA as merely drawing samples from the Expected SARSA target distribution. Put a different way, SARSA does what Expected SARSA does, in expectation.

So how does Expected SARSA unify SARSA and Q-Learning?

In fact, Q-Learning is merely a special case of Expected SARSA.

First, recognize that Expected SARSA can be on-policy or off-policy. In the off-policy case, one could act according to some behavior policy \( b(a | s) \), while the target is computed according to the target policy \( \pi( a | s) \).

If \( b(a | s) \) is epsilon-greedy while \( \pi(a | s) \) is greedy, then Expected SARSA is equivalent to Q-Learning.

On the other hand, Expected SARSA generalizes SARSA as well, as discussed above.
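Both relationships can be checked numerically on a single next state. The helper names `greedy_probs` and `expected_target`, and all numbers below, are assumptions made for this sketch.

```python
def greedy_probs(q_row):
    """Target policy that puts probability 1 on the first best action."""
    best = max(range(len(q_row)), key=lambda a: q_row[a])
    return [1.0 if a == best else 0.0 for a in range(len(q_row))]

def expected_target(r, gamma, q_next, pi_next):
    """Expected SARSA target: r + gamma * sum_a pi(a | s') Q(s', a)."""
    return r + gamma * sum(p * q for p, q in zip(pi_next, q_next))

q_next = [0.5, 2.0, -1.0]    # made-up values of Q(s', a') for three actions
r, gamma = 1.0, 0.9

# 1) With a greedy target policy, the target matches the Q-Learning target.
q_learning_target = r + gamma * max(q_next)
greedy_target = expected_target(r, gamma, q_next, greedy_probs(q_next))

# 2) Averaging the SARSA targets over a' drawn from pi gives the
#    Expected SARSA target under that same policy.
pi = [0.25, 0.5, 0.25]
sarsa_targets = [r + gamma * q for q in q_next]
mean_sarsa_target = sum(p * t for p, t in zip(pi, sarsa_targets))
on_policy_target = expected_target(r, gamma, q_next, pi)
```

The behavior policy never appears in these calculations, which is why Expected SARSA is free to act with one policy while computing its target under another.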

Practical Considerations

1) Aside from all the theory above, the update itself is actually quite simple to implement. In some cases, you may find that it works better than SARSA or Q-Learning. Thus, it is a worthy addition to your RL toolbox and could form a part of your strategy for building agents to solve RL problems.

2) It’s slower than SARSA and Q-Learning since it requires summing over the action space on each update. So, you may have to decide whether the additional computation requirements are worth whatever increase in performance you observe.

The Q-learning agent learns the optimal policy, one that moves along the cliff and reaches the goal in as few steps as possible. However, since the agent does not follow the optimal policy and uses ϵ-greedy exploration, it occasionally falls off the cliff. The Expected Sarsa agent takes exploration into account and follows a safer path.
