- Methodology

- Open access

- Published: 11 October 2016

Reviewing the research methods literature: principles and strategies illustrated by a systematic overview of sampling in qualitative research

- Stephen J. Gentles 1 , 4 ,

- Cathy Charles 1 ,

- David B. Nicholas 2 ,

- Jenny Ploeg 3 &

- K. Ann McKibbon 1

Systematic Reviews volume 5 , Article number: 172 ( 2016 ) Cite this article

50k Accesses

25 Citations

13 Altmetric

Metrics details

Overviews of methods are potentially useful means to increase clarity and enhance collective understanding of specific methods topics that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness. This type of review represents a distinct literature synthesis method, although to date, its methodology remains relatively undeveloped despite several aspects that demand unique review procedures. The purpose of this paper is to initiate discussion about what a rigorous systematic approach to reviews of methods, referred to here as systematic methods overviews , might look like by providing tentative suggestions for approaching specific challenges likely to be encountered. The guidance offered here was derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research.

The guidance is organized into several principles that highlight specific objectives for this type of review given the common challenges that must be overcome to achieve them. Optional strategies for achieving each principle are also proposed, along with discussion of how they were successfully implemented in the overview on sampling. We describe seven paired principles and strategies that address the following aspects: delimiting the initial set of publications to consider, searching beyond standard bibliographic databases, searching without the availability of relevant metadata, selecting publications on purposeful conceptual grounds, defining concepts and other information to abstract iteratively, accounting for inconsistent terminology used to describe specific methods topics, and generating rigorous verifiable analytic interpretations. Since a broad aim in systematic methods overviews is to describe and interpret the relevant literature in qualitative terms, we suggest that iterative decision making at various stages of the review process, and a rigorous qualitative approach to analysis are necessary features of this review type.

Conclusions

We believe that the principles and strategies provided here will be useful to anyone choosing to undertake a systematic methods overview. This paper represents an initial effort to promote high quality critical evaluations of the literature regarding problematic methods topics, which have the potential to promote clearer, shared understandings, and accelerate advances in research methods. Further work is warranted to develop more definitive guidance.

Peer Review reports

While reviews of methods are not new, they represent a distinct review type whose methodology remains relatively under-addressed in the literature despite the clear implications for unique review procedures. One of few examples to describe it is a chapter containing reflections of two contributing authors in a book of 21 reviews on methodological topics compiled for the British National Health Service, Health Technology Assessment Program [ 1 ]. Notable is their observation of how the differences between the methods reviews and conventional quantitative systematic reviews, specifically attributable to their varying content and purpose, have implications for defining what qualifies as systematic. While the authors describe general aspects of “systematicity” (including rigorous application of a methodical search, abstraction, and analysis), they also describe a high degree of variation within the category of methods reviews itself and so offer little in the way of concrete guidance. In this paper, we present tentative concrete guidance, in the form of a preliminary set of proposed principles and optional strategies, for a rigorous systematic approach to reviewing and evaluating the literature on quantitative or qualitative methods topics. For purposes of this article, we have used the term systematic methods overview to emphasize the notion of a systematic approach to such reviews.

The conventional focus of rigorous literature reviews (i.e., review types for which systematic methods have been codified, including the various approaches to quantitative systematic reviews [ 2 – 4 ], and the numerous forms of qualitative and mixed methods literature synthesis [ 5 – 10 ]) is to synthesize empirical research findings from multiple studies. By contrast, the focus of overviews of methods, including the systematic approach we advocate, is to synthesize guidance on methods topics. The literature consulted for such reviews may include the methods literature, methods-relevant sections of empirical research reports, or both. Thus, this paper adds to previous work published in this journal—namely, recent preliminary guidance for conducting reviews of theory [ 11 ]—that has extended the application of systematic review methods to novel review types that are concerned with subject matter other than empirical research findings.

Published examples of methods overviews illustrate the varying objectives they can have. One objective is to establish methodological standards for appraisal purposes. For example, reviews of existing quality appraisal standards have been used to propose universal standards for appraising the quality of primary qualitative research [ 12 ] or evaluating qualitative research reports [ 13 ]. A second objective is to survey the methods-relevant sections of empirical research reports to establish current practices on methods use and reporting practices, which Moher and colleagues [ 14 ] recommend as a means for establishing the needs to be addressed in reporting guidelines (see, for example [ 15 , 16 ]). A third objective for a methods review is to offer clarity and enhance collective understanding regarding a specific methods topic that may be characterized by ambiguity, inconsistency, or a lack of comprehensiveness within the available methods literature. An example of this is a overview whose objective was to review the inconsistent definitions of intention-to-treat analysis (the methodologically preferred approach to analyze randomized controlled trial data) that have been offered in the methods literature and propose a solution for improving conceptual clarity [ 17 ]. Such reviews are warranted because students and researchers who must learn or apply research methods typically lack the time to systematically search, retrieve, review, and compare the available literature to develop a thorough and critical sense of the varied approaches regarding certain controversial or ambiguous methods topics.

While systematic methods overviews , as a review type, include both reviews of the methods literature and reviews of methods-relevant sections from empirical study reports, the guidance provided here is primarily applicable to reviews of the methods literature since it was derived from the experience of conducting such a review [ 18 ], described below. To our knowledge, there are no well-developed proposals on how to rigorously conduct such reviews. Such guidance would have the potential to improve the thoroughness and credibility of critical evaluations of the methods literature, which could increase their utility as a tool for generating understandings that advance research methods, both qualitative and quantitative. Our aim in this paper is thus to initiate discussion about what might constitute a rigorous approach to systematic methods overviews. While we hope to promote rigor in the conduct of systematic methods overviews wherever possible, we do not wish to suggest that all methods overviews need be conducted to the same standard. Rather, we believe that the level of rigor may need to be tailored pragmatically to the specific review objectives, which may not always justify the resource requirements of an intensive review process.

The example systematic methods overview on sampling in qualitative research

The principles and strategies we propose in this paper are derived from experience conducting a systematic methods overview on the topic of sampling in qualitative research [ 18 ]. The main objective of that methods overview was to bring clarity and deeper understanding of the prominent concepts related to sampling in qualitative research (purposeful sampling strategies, saturation, etc.). Specifically, we interpreted the available guidance, commenting on areas lacking clarity, consistency, or comprehensiveness (without proposing any recommendations on how to do sampling). This was achieved by a comparative and critical analysis of publications representing the most influential (i.e., highly cited) guidance across several methodological traditions in qualitative research.

The specific methods and procedures for the overview on sampling [ 18 ] from which our proposals are derived were developed both after soliciting initial input from local experts in qualitative research and an expert health librarian (KAM) and through ongoing careful deliberation throughout the review process. To summarize, in that review, we employed a transparent and rigorous approach to search the methods literature, selected publications for inclusion according to a purposeful and iterative process, abstracted textual data using structured abstraction forms, and analyzed (synthesized) the data using a systematic multi-step approach featuring abstraction of text, summary of information in matrices, and analytic comparisons.

For this article, we reflected on both the problems and challenges encountered at different stages of the review and our means for selecting justifiable procedures to deal with them. Several principles were then derived by considering the generic nature of these problems, while the generalizable aspects of the procedures used to address them formed the basis of optional strategies. Further details of the specific methods and procedures used in the overview on qualitative sampling are provided below to illustrate both the types of objectives and challenges that reviewers will likely need to consider and our approach to implementing each of the principles and strategies.

Organization of the guidance into principles and strategies

For the purposes of this article, principles are general statements outlining what we propose are important aims or considerations within a particular review process, given the unique objectives or challenges to be overcome with this type of review. These statements follow the general format, “considering the objective or challenge of X, we propose Y to be an important aim or consideration.” Strategies are optional and flexible approaches for implementing the previous principle outlined. Thus, generic challenges give rise to principles, which in turn give rise to strategies.

We organize the principles and strategies below into three sections corresponding to processes characteristic of most systematic literature synthesis approaches: literature identification and selection ; data abstraction from the publications selected for inclusion; and analysis , including critical appraisal and synthesis of the abstracted data. Within each section, we also describe the specific methodological decisions and procedures used in the overview on sampling in qualitative research [ 18 ] to illustrate how the principles and strategies for each review process were applied and implemented in a specific case. We expect this guidance and accompanying illustrations will be useful for anyone considering engaging in a methods overview, particularly those who may be familiar with conventional systematic review methods but may not yet appreciate some of the challenges specific to reviewing the methods literature.

Results and discussion

Literature identification and selection.

The identification and selection process includes search and retrieval of publications and the development and application of inclusion and exclusion criteria to select the publications that will be abstracted and analyzed in the final review. Literature identification and selection for overviews of the methods literature is challenging and potentially more resource-intensive than for most reviews of empirical research. This is true for several reasons that we describe below, alongside discussion of the potential solutions. Additionally, we suggest in this section how the selection procedures can be chosen to match the specific analytic approach used in methods overviews.

Delimiting a manageable set of publications

One aspect of methods overviews that can make identification and selection challenging is the fact that the universe of literature containing potentially relevant information regarding most methods-related topics is expansive and often unmanageably so. Reviewers are faced with two large categories of literature: the methods literature , where the possible publication types include journal articles, books, and book chapters; and the methods-relevant sections of empirical study reports , where the possible publication types include journal articles, monographs, books, theses, and conference proceedings. In our systematic overview of sampling in qualitative research, exhaustively searching (including retrieval and first-pass screening) all publication types across both categories of literature for information on a single methods-related topic was too burdensome to be feasible. The following proposed principle follows from the need to delimit a manageable set of literature for the review.

Principle #1:

Considering the broad universe of potentially relevant literature, we propose that an important objective early in the identification and selection stage is to delimit a manageable set of methods-relevant publications in accordance with the objectives of the methods overview.

Strategy #1:

To limit the set of methods-relevant publications that must be managed in the selection process, reviewers have the option to initially review only the methods literature, and exclude the methods-relevant sections of empirical study reports, provided this aligns with the review’s particular objectives.

We propose that reviewers are justified in choosing to select only the methods literature when the objective is to map out the range of recognized concepts relevant to a methods topic, to summarize the most authoritative or influential definitions or meanings for methods-related concepts, or to demonstrate a problematic lack of clarity regarding a widely established methods-related concept and potentially make recommendations for a preferred approach to the methods topic in question. For example, in the case of the methods overview on sampling [ 18 ], the primary aim was to define areas lacking in clarity for multiple widely established sampling-related topics. In the review on intention-to-treat in the context of missing outcome data [ 17 ], the authors identified a lack of clarity based on multiple inconsistent definitions in the literature and went on to recommend separating the issue of how to handle missing outcome data from the issue of whether an intention-to-treat analysis can be claimed.

In contrast to strategy #1, it may be appropriate to select the methods-relevant sections of empirical study reports when the objective is to illustrate how a methods concept is operationalized in research practice or reported by authors. For example, one could review all the publications in 2 years’ worth of issues of five high-impact field-related journals to answer questions about how researchers describe implementing a particular method or approach, or to quantify how consistently they define or report using it. Such reviews are often used to highlight gaps in the reporting practices regarding specific methods, which may be used to justify items to address in reporting guidelines (for example, [ 14 – 16 ]).

It is worth recognizing that other authors have advocated broader positions regarding the scope of literature to be considered in a review, expanding on our perspective. Suri [ 10 ] (who, like us, emphasizes how different sampling strategies are suitable for different literature synthesis objectives) has, for example, described a two-stage literature sampling procedure (pp. 96–97). First, reviewers use an initial approach to conduct a broad overview of the field—for reviews of methods topics, this would entail an initial review of the research methods literature. This is followed by a second more focused stage in which practical examples are purposefully selected—for methods reviews, this would involve sampling the empirical literature to illustrate key themes and variations. While this approach is seductive in its capacity to generate more in depth and interpretive analytic findings, some reviewers may consider it too resource-intensive to include the second step no matter how selective the purposeful sampling. In the overview on sampling where we stopped after the first stage [ 18 ], we discussed our selective focus on the methods literature as a limitation that left opportunities for further analysis of the literature. We explicitly recommended, for example, that theoretical sampling was a topic for which a future review of the methods sections of empirical reports was justified to answer specific questions identified in the primary review.

Ultimately, reviewers must make pragmatic decisions that balance resource considerations, combined with informed predictions about the depth and complexity of literature available on their topic, with the stated objectives of their review. The remaining principles and strategies apply primarily to overviews that include the methods literature, although some aspects may be relevant to reviews that include empirical study reports.

Searching beyond standard bibliographic databases

An important reality affecting identification and selection in overviews of the methods literature is the increased likelihood for relevant publications to be located in sources other than journal articles (which is usually not the case for overviews of empirical research, where journal articles generally represent the primary publication type). In the overview on sampling [ 18 ], out of 41 full-text publications retrieved and reviewed, only 4 were journal articles, while 37 were books or book chapters. Since many books and book chapters did not exist electronically, their full text had to be physically retrieved in hardcopy, while 11 publications were retrievable only through interlibrary loan or purchase request. The tasks associated with such retrieval are substantially more time-consuming than electronic retrieval. Since a substantial proportion of methods-related guidance may be located in publication types that are less comprehensively indexed in standard bibliographic databases, identification and retrieval thus become complicated processes.

Principle #2:

Considering that important sources of methods guidance can be located in non-journal publication types (e.g., books, book chapters) that tend to be poorly indexed in standard bibliographic databases, it is important to consider alternative search methods for identifying relevant publications to be further screened for inclusion.

Strategy #2:

To identify books, book chapters, and other non-journal publication types not thoroughly indexed in standard bibliographic databases, reviewers may choose to consult one or more of the following less standard sources: Google Scholar, publisher web sites, or expert opinion.

In the case of the overview on sampling in qualitative research [ 18 ], Google Scholar had two advantages over other standard bibliographic databases: it indexes and returns records of books and book chapters likely to contain guidance on qualitative research methods topics; and it has been validated as providing higher citation counts than ISI Web of Science (a producer of numerous bibliographic databases accessible through institutional subscription) for several non-biomedical disciplines including the social sciences where qualitative research methods are prominently used [ 19 – 21 ]. While we identified numerous useful publications by consulting experts, the author publication lists generated through Google Scholar searches were uniquely useful to identify more recent editions of methods books identified by experts.

Searching without relevant metadata

Determining what publications to select for inclusion in the overview on sampling [ 18 ] could only rarely be accomplished by reviewing the publication’s metadata. This was because for the many books and other non-journal type publications we identified as possibly relevant, the potential content of interest would be located in only a subsection of the publication. In this common scenario for reviews of the methods literature (as opposed to methods overviews that include empirical study reports), reviewers will often be unable to employ standard title, abstract, and keyword database searching or screening as a means for selecting publications.

Principle #3:

Considering that the presence of information about the topic of interest may not be indicated in the metadata for books and similar publication types, it is important to consider other means of identifying potentially useful publications for further screening.

Strategy #3:

One approach to identifying potentially useful books and similar publication types is to consider what classes of such publications (e.g., all methods manuals for a certain research approach) are likely to contain relevant content, then identify, retrieve, and review the full text of corresponding publications to determine whether they contain information on the topic of interest.

In the example of the overview on sampling in qualitative research [ 18 ], the topic of interest (sampling) was one of numerous topics covered in the general qualitative research methods manuals. Consequently, examples from this class of publications first had to be identified for retrieval according to non-keyword-dependent criteria. Thus, all methods manuals within the three research traditions reviewed (grounded theory, phenomenology, and case study) that might contain discussion of sampling were sought through Google Scholar and expert opinion, their full text obtained, and hand-searched for relevant content to determine eligibility. We used tables of contents and index sections of books to aid this hand searching.

Purposefully selecting literature on conceptual grounds

A final consideration in methods overviews relates to the type of analysis used to generate the review findings. Unlike quantitative systematic reviews where reviewers aim for accurate or unbiased quantitative estimates—something that requires identifying and selecting the literature exhaustively to obtain all relevant data available (i.e., a complete sample)—in methods overviews, reviewers must describe and interpret the relevant literature in qualitative terms to achieve review objectives. In other words, the aim in methods overviews is to seek coverage of the qualitative concepts relevant to the methods topic at hand. For example, in the overview of sampling in qualitative research [ 18 ], achieving review objectives entailed providing conceptual coverage of eight sampling-related topics that emerged as key domains. The following principle recognizes that literature sampling should therefore support generating qualitative conceptual data as the input to analysis.

Principle #4:

Since the analytic findings of a systematic methods overview are generated through qualitative description and interpretation of the literature on a specified topic, selection of the literature should be guided by a purposeful strategy designed to achieve adequate conceptual coverage (i.e., representing an appropriate degree of variation in relevant ideas) of the topic according to objectives of the review.

Strategy #4:

One strategy for choosing the purposeful approach to use in selecting the literature according to the review objectives is to consider whether those objectives imply exploring concepts either at a broad overview level, in which case combining maximum variation selection with a strategy that limits yield (e.g., critical case, politically important, or sampling for influence—described below) may be appropriate; or in depth, in which case purposeful approaches aimed at revealing innovative cases will likely be necessary.

In the methods overview on sampling, the implied scope was broad since we set out to review publications on sampling across three divergent qualitative research traditions—grounded theory, phenomenology, and case study—to facilitate making informative conceptual comparisons. Such an approach would be analogous to maximum variation sampling.

At the same time, the purpose of that review was to critically interrogate the clarity, consistency, and comprehensiveness of literature from these traditions that was “most likely to have widely influenced students’ and researchers’ ideas about sampling” (p. 1774) [ 18 ]. In other words, we explicitly set out to review and critique the most established and influential (and therefore dominant) literature, since this represents a common basis of knowledge among students and researchers seeking understanding or practical guidance on sampling in qualitative research. To achieve this objective, we purposefully sampled publications according to the criterion of influence , which we operationalized as how often an author or publication has been referenced in print or informal discourse. This second sampling approach also limited the literature we needed to consider within our broad scope review to a manageable amount.

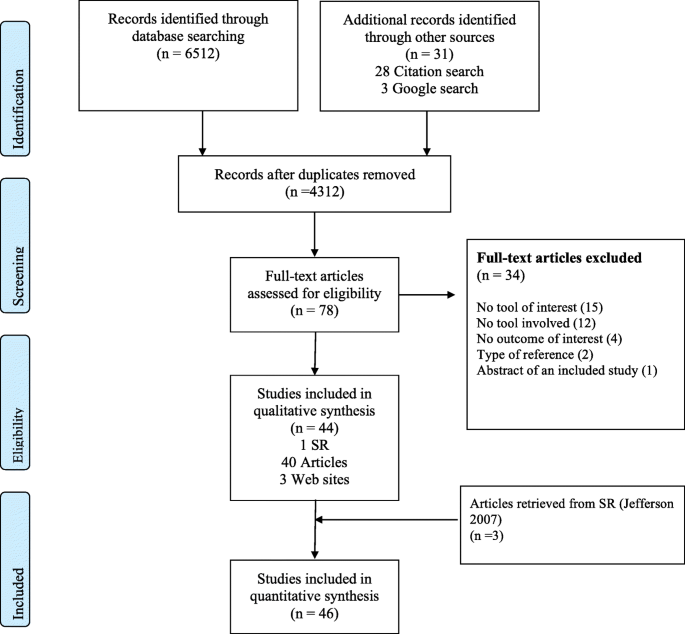

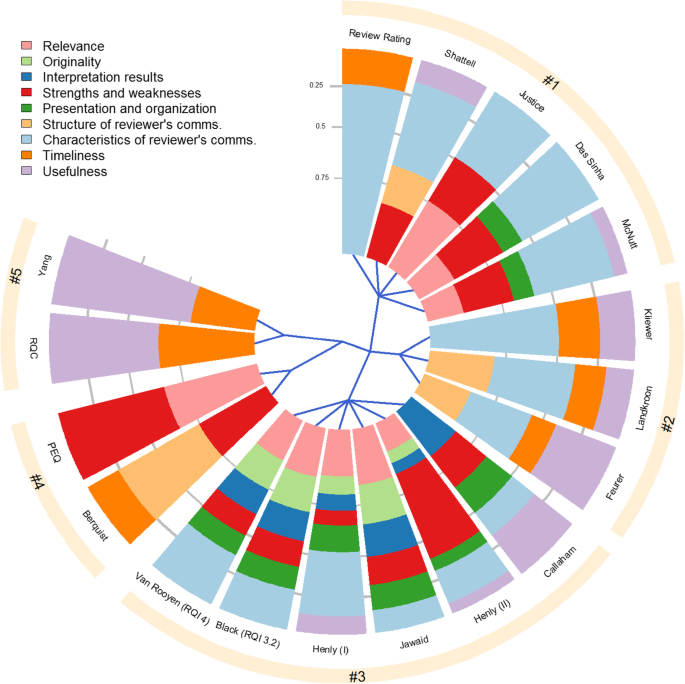

To operationalize this strategy of sampling for influence , we sought to identify both the most influential authors within a qualitative research tradition (all of whose citations were subsequently screened) and the most influential publications on the topic of interest by non-influential authors. This involved a flexible approach that combined multiple indicators of influence to avoid the dilemma that any single indicator might provide inadequate coverage. These indicators included bibliometric data (h-index for author influence [ 22 ]; number of cites for publication influence), expert opinion, and cross-references in the literature (i.e., snowball sampling). As a final selection criterion, a publication was included only if it made an original contribution in terms of novel guidance regarding sampling or a related concept; thus, purely secondary sources were excluded. Publish or Perish software (Anne-Wil Harzing; available at http://www.harzing.com/resources/publish-or-perish ) was used to generate bibliometric data via the Google Scholar database. Figure 1 illustrates how identification and selection in the methods overview on sampling was a multi-faceted and iterative process. The authors selected as influential, and the publications selected for inclusion or exclusion are listed in Additional file 1 (Matrices 1, 2a, 2b).

Literature identification and selection process used in the methods overview on sampling [ 18 ]

In summary, the strategies of seeking maximum variation and sampling for influence were employed in the sampling overview to meet the specific review objectives described. Reviewers will need to consider the full range of purposeful literature sampling approaches at their disposal in deciding what best matches the specific aims of their own reviews. Suri [ 10 ] has recently retooled Patton’s well-known typology of purposeful sampling strategies (originally intended for primary research) for application to literature synthesis, providing a useful resource in this respect.

Data abstraction

The purpose of data abstraction in rigorous literature reviews is to locate and record all data relevant to the topic of interest from the full text of included publications, making them available for subsequent analysis. Conventionally, a data abstraction form—consisting of numerous distinct conceptually defined fields to which corresponding information from the source publication is recorded—is developed and employed. There are several challenges, however, to the processes of developing the abstraction form and abstracting the data itself when conducting methods overviews, which we address here. Some of these problems and their solutions may be familiar to those who have conducted qualitative literature syntheses, which are similarly conceptual.

Iteratively defining conceptual information to abstract

In the overview on sampling [ 18 ], while we surveyed multiple sources beforehand to develop a list of concepts relevant for abstraction (e.g., purposeful sampling strategies, saturation, sample size), there was no way for us to anticipate some concepts prior to encountering them in the review process. Indeed, in many cases, reviewers are unable to determine the complete set of methods-related concepts that will be the focus of the final review a priori without having systematically reviewed the publications to be included. Thus, defining what information to abstract beforehand may not be feasible.

Principle #5:

Considering the potential impracticality of defining a complete set of relevant methods-related concepts from a body of literature one has not yet systematically read, selecting and defining fields for data abstraction must often be undertaken iteratively. Thus, concepts to be abstracted can be expected to grow and change as data abstraction proceeds.

Strategy #5:

Reviewers can develop an initial form or set of concepts for abstraction purposes according to standard methods (e.g., incorporating expert feedback, pilot testing) and remain attentive to the need to iteratively revise it as concepts are added or modified during the review. Reviewers should document revisions and return to re-abstract data from previously abstracted publications as the new data requirements are determined.

In the sampling overview [ 18 ], we developed and maintained the abstraction form in Microsoft Word. We derived the initial set of abstraction fields from our own knowledge of relevant sampling-related concepts, consultation with local experts, and reviewing a pilot sample of publications. Since the publications in this review included a large proportion of books, the abstraction process often began by flagging the broad sections within a publication containing topic-relevant information for detailed review to identify text to abstract. When reviewing flagged text, the reviewer occasionally encountered an unanticipated concept significant enough to warrant being added as a new field to the abstraction form. For example, a field was added to capture how authors described the timing of sampling decisions, whether before (a priori) or after (ongoing) starting data collection, or whether this was unclear. In these cases, we systematically documented the modification to the form and returned to previously abstracted publications to abstract any information that might be relevant to the new field.

The logic of this strategy is analogous to the logic used in a form of research synthesis called best fit framework synthesis (BFFS) [ 23 – 25 ]. In that method, reviewers initially code evidence using an a priori framework they have selected. When evidence cannot be accommodated by the selected framework, reviewers then develop new themes or concepts from which they construct a new expanded framework. Both the strategy proposed and the BFFS approach to research synthesis are notable for their rigorous and transparent means to adapt a final set of concepts to the content under review.

Accounting for inconsistent terminology

An important complication affecting the abstraction process in methods overviews is that the language used by authors to describe methods-related concepts can easily vary across publications. For example, authors from different qualitative research traditions often use different terms for similar methods-related concepts. Furthermore, as we found in the sampling overview [ 18 ], there may be cases where no identifiable term, phrase, or label for a methods-related concept is used at all, and a description of it is given instead. This can make searching the text for relevant concepts based on keywords unreliable.

Principle #6:

Since accepted terms may not be used consistently to refer to methods concepts, it is necessary to rely on the definitions for concepts, rather than keywords, to identify relevant information in the publication to abstract.

Strategy #6:

An effective means to systematically identify relevant information is to develop and iteratively adjust written definitions for key concepts (corresponding to abstraction fields) that are consistent with and as inclusive of as much of the literature reviewed as possible. Reviewers then seek information that matches these definitions (rather than keywords) when scanning a publication for relevant data to abstract.

In the abstraction process for the sampling overview [ 18 ], we noted the several concepts of interest to the review for which abstraction by keyword was particularly problematic due to inconsistent terminology across publications: sampling , purposeful sampling , sampling strategy , and saturation (for examples, see Additional file 1 , Matrices 3a, 3b, 4). We iteratively developed definitions for these concepts by abstracting text from publications that either provided an explicit definition or from which an implicit definition could be derived, which was recorded in fields dedicated to the concept’s definition. Using a method of constant comparison, we used text from definition fields to inform and modify a centrally maintained definition of the corresponding concept to optimize its fit and inclusiveness with the literature reviewed. Table 1 shows, as an example, the final definition constructed in this way for one of the central concepts of the review, qualitative sampling .

We applied iteratively developed definitions when making decisions about what specific text to abstract for an existing field, which allowed us to abstract concept-relevant data even if no recognized keyword was used. For example, this was the case for the sampling-related concept, saturation , where the relevant text available for abstraction in one publication [ 26 ]—“to continue to collect data until nothing new was being observed or recorded, no matter how long that takes”—was not accompanied by any term or label whatsoever.

This comparative analytic strategy (and our approach to analysis more broadly as described in strategy #7, below) is analogous to the process of reciprocal translation —a technique first introduced for meta-ethnography by Noblit and Hare [ 27 ] that has since been recognized as a common element in a variety of qualitative metasynthesis approaches [ 28 ]. Reciprocal translation, taken broadly, involves making sense of a study’s findings in terms of the findings of the other studies included in the review. In practice, it has been operationalized in different ways. Melendez-Torres and colleagues developed a typology from their review of the metasynthesis literature, describing four overlapping categories of specific operations undertaken in reciprocal translation: visual representation, key paper integration, data reduction and thematic extraction, and line-by-line coding [ 28 ]. The approaches suggested in both strategies #6 and #7, with their emphasis on constant comparison, appear to fall within the line-by-line coding category.

Generating credible and verifiable analytic interpretations

The analysis in a systematic methods overview must support its more general objective, which we suggested above is often to offer clarity and enhance collective understanding regarding a chosen methods topic. In our experience, this involves describing and interpreting the relevant literature in qualitative terms. Furthermore, any interpretative analysis required may entail reaching different levels of abstraction, depending on the more specific objectives of the review. For example, in the overview on sampling [ 18 ], we aimed to produce a comparative analysis of how multiple sampling-related topics were treated differently within and among different qualitative research traditions. To promote credibility of the review, however, not only should one seek a qualitative analytic approach that facilitates reaching varying levels of abstraction but that approach must also ensure that abstract interpretations are supported and justified by the source data and not solely the product of the analyst’s speculative thinking.

Principle #7:

Considering the qualitative nature of the analysis required in systematic methods overviews, it is important to select an analytic method whose interpretations can be verified as being consistent with the literature selected, regardless of the level of abstraction reached.

Strategy #7:

We suggest employing the constant comparative method of analysis [ 29 ] because it supports developing and verifying analytic links to the source data throughout progressively interpretive or abstract levels. In applying this approach, we advise a rigorous approach, documenting how supportive quotes or references to the original texts are carried forward in the successive steps of analysis to allow for easy verification.

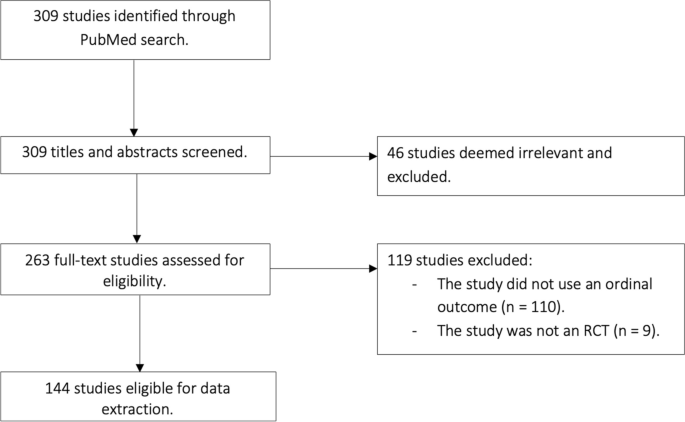

The analytic approach used in the methods overview on sampling [ 18 ] comprised four explicit steps, progressing in level of abstraction—data abstraction, matrices, narrative summaries, and final analytic conclusions (Fig. 2 ). While we have positioned data abstraction as the second stage of the generic review process (prior to Analysis), above, we also considered it as an initial step of analysis in the sampling overview for several reasons. First, it involved a process of constant comparisons and iterative decision-making about the fields to add or define during development and modification of the abstraction form, through which we established the range of concepts to be addressed in the review. At the same time, abstraction involved continuous analytic decisions about what textual quotes (ranging in size from short phrases to numerous paragraphs) to record in the fields thus created. This constant comparative process was analogous to open coding in which textual data from publications was compared to conceptual fields (equivalent to codes) or to other instances of data previously abstracted when constructing definitions to optimize their fit with the overall literature as described in strategy #6. Finally, in the data abstraction step, we also recorded our first interpretive thoughts in dedicated fields, providing initial material for the more abstract analytic steps.

Summary of progressive steps of analysis used in the methods overview on sampling [ 18 ]

In the second step of the analysis, we constructed topic-specific matrices , or tables, by copying relevant quotes from abstraction forms into the appropriate cells of matrices (for the complete set of analytic matrices developed in the sampling review, see Additional file 1 (matrices 3 to 10)). Each matrix ranged from one to five pages; row headings, nested three-deep, identified the methodological tradition, author, and publication, respectively; and column headings identified the concepts, which corresponded to abstraction fields. Matrices thus allowed us to make further comparisons across methodological traditions, and between authors within a tradition. In the third step of analysis, we recorded our comparative observations as narrative summaries , in which we used illustrative quotes more sparingly. In the final step, we developed analytic conclusions based on the narrative summaries about the sampling-related concepts within each methodological tradition for which clarity, consistency, or comprehensiveness of the available guidance appeared to be lacking. Higher levels of analysis thus built logically from the lower levels, enabling us to easily verify analytic conclusions by tracing the support for claims by comparing the original text of publications reviewed.

Integrative versus interpretive methods overviews

The analytic product of systematic methods overviews is comparable to qualitative evidence syntheses, since both involve describing and interpreting the relevant literature in qualitative terms. Most qualitative synthesis approaches strive to produce new conceptual understandings that vary in level of interpretation. Dixon-Woods and colleagues [ 30 ] elaborate on a useful distinction, originating from Noblit and Hare [ 27 ], between integrative and interpretive reviews. Integrative reviews focus on summarizing available primary data and involve using largely secure and well defined concepts to do so; definitions are used from an early stage to specify categories for abstraction (or coding) of data, which in turn supports their aggregation; they do not seek as their primary focus to develop or specify new concepts, although they may achieve some theoretical or interpretive functions. For interpretive reviews, meanwhile, the main focus is to develop new concepts and theories that integrate them, with the implication that the concepts developed become fully defined towards the end of the analysis. These two forms are not completely distinct, and “every integrative synthesis will include elements of interpretation, and every interpretive synthesis will include elements of aggregation of data” [ 30 ].

The example methods overview on sampling [ 18 ] could be classified as predominantly integrative because its primary goal was to aggregate influential authors’ ideas on sampling-related concepts; there were also, however, elements of interpretive synthesis since it aimed to develop new ideas about where clarity in guidance on certain sampling-related topics is lacking, and definitions for some concepts were flexible and not fixed until late in the review. We suggest that most systematic methods overviews will be classifiable as predominantly integrative (aggregative). Nevertheless, more highly interpretive methods overviews are also quite possible—for example, when the review objective is to provide a highly critical analysis for the purpose of generating new methodological guidance. In such cases, reviewers may need to sample more deeply (see strategy #4), specifically by selecting empirical research reports (i.e., to go beyond dominant or influential ideas in the methods literature) that are likely to feature innovations or instructive lessons in employing a given method.

In this paper, we have outlined tentative guidance in the form of seven principles and strategies on how to conduct systematic methods overviews, a review type in which methods-relevant literature is systematically analyzed with the aim of offering clarity and enhancing collective understanding regarding a specific methods topic. Our proposals include strategies for delimiting the set of publications to consider, searching beyond standard bibliographic databases, searching without the availability of relevant metadata, selecting publications on purposeful conceptual grounds, defining concepts and other information to abstract iteratively, accounting for inconsistent terminology, and generating credible and verifiable analytic interpretations. We hope the suggestions proposed will be useful to others undertaking reviews on methods topics in future.

As far as we are aware, this is the first published source of concrete guidance for conducting this type of review. It is important to note that our primary objective was to initiate methodological discussion by stimulating reflection on what rigorous methods for this type of review should look like, leaving the development of more complete guidance to future work. While derived from the experience of reviewing a single qualitative methods topic, we believe the principles and strategies provided are generalizable to overviews of both qualitative and quantitative methods topics alike. However, it is expected that additional challenges and insights for conducting such reviews have yet to be defined. Thus, we propose that next steps for developing more definitive guidance should involve an attempt to collect and integrate other reviewers’ perspectives and experiences in conducting systematic methods overviews on a broad range of qualitative and quantitative methods topics. Formalized guidance and standards would improve the quality of future methods overviews, something we believe has important implications for advancing qualitative and quantitative methodology. When undertaken to a high standard, rigorous critical evaluations of the available methods guidance have significant potential to make implicit controversies explicit, and improve the clarity and precision of our understandings of problematic qualitative or quantitative methods issues.

A review process central to most types of rigorous reviews of empirical studies, which we did not explicitly address in a separate review step above, is quality appraisal . The reason we have not treated this as a separate step stems from the different objectives of the primary publications included in overviews of the methods literature (i.e., providing methodological guidance) compared to the primary publications included in the other established review types (i.e., reporting findings from single empirical studies). This is not to say that appraising quality of the methods literature is not an important concern for systematic methods overviews. Rather, appraisal is much more integral to (and difficult to separate from) the analysis step, in which we advocate appraising clarity, consistency, and comprehensiveness—the quality appraisal criteria that we suggest are appropriate for the methods literature. As a second important difference regarding appraisal, we currently advocate appraising the aforementioned aspects at the level of the literature in aggregate rather than at the level of individual publications. One reason for this is that methods guidance from individual publications generally builds on previous literature, and thus we feel that ahistorical judgments about comprehensiveness of single publications lack relevance and utility. Additionally, while different methods authors may express themselves less clearly than others, their guidance can nonetheless be highly influential and useful, and should therefore not be downgraded or ignored based on considerations of clarity—which raises questions about the alternative uses that quality appraisals of individual publications might have. Finally, legitimate variability in the perspectives that methods authors wish to emphasize, and the levels of generality at which they write about methods, makes critiquing individual publications based on the criterion of clarity a complex and potentially problematic endeavor that is beyond the scope of this paper to address. By appraising the current state of the literature at a holistic level, reviewers stand to identify important gaps in understanding that represent valuable opportunities for further methodological development.

To summarize, the principles and strategies provided here may be useful to those seeking to undertake their own systematic methods overview. Additional work is needed, however, to establish guidance that is comprehensive by comparing the experiences from conducting a variety of methods overviews on a range of methods topics. Efforts that further advance standards for systematic methods overviews have the potential to promote high-quality critical evaluations that produce conceptually clear and unified understandings of problematic methods topics, thereby accelerating the advance of research methodology.

Hutton JL, Ashcroft R. What does “systematic” mean for reviews of methods? In: Black N, Brazier J, Fitzpatrick R, Reeves B, editors. Health services research methods: a guide to best practice. London: BMJ Publishing Group; 1998. p. 249–54.

Google Scholar

Cochrane handbook for systematic reviews of interventions. In. Edited by Higgins JPT, Green S, Version 5.1.0 edn: The Cochrane Collaboration; 2011.

Centre for Reviews and Dissemination: Systematic reviews: CRD’s guidance for undertaking reviews in health care . York: Centre for Reviews and Dissemination; 2009.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JPA, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700–0.

Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Med Res Methodol. 2009;9(1):59.

Article PubMed PubMed Central Google Scholar

Kastner M, Tricco AC, Soobiah C, Lillie E, Perrier L, Horsley T, Welch V, Cogo E, Antony J, Straus SE. What is the most appropriate knowledge synthesis method to conduct a review? Protocol for a scoping review. BMC Med Res Methodol. 2012;12(1):1–1.

Article Google Scholar

Booth A, Noyes J, Flemming K, Gerhardus A. Guidance on choosing qualitative evidence synthesis methods for use in health technology assessments of complex interventions. In: Integrate-HTA. 2016.

Booth A, Sutton A, Papaioannou D. Systematic approaches to successful literature review. 2nd ed. London: Sage; 2016.

Hannes K, Lockwood C. Synthesizing qualitative research: choosing the right approach. Chichester: Wiley-Blackwell; 2012.

Suri H. Towards methodologically inclusive research syntheses: expanding possibilities. New York: Routledge; 2014.

Campbell M, Egan M, Lorenc T, Bond L, Popham F, Fenton C, Benzeval M. Considering methodological options for reviews of theory: illustrated by a review of theories linking income and health. Syst Rev. 2014;3(1):1–11.

Cohen DJ, Crabtree BF. Evaluative criteria for qualitative research in health care: controversies and recommendations. Ann Fam Med. 2008;6(4):331–9.

Tong A, Sainsbury P, Craig J. Consolidated criteria for reportingqualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57.

Article PubMed Google Scholar

Moher D, Schulz KF, Simera I, Altman DG. Guidance for developers of health research reporting guidelines. PLoS Med. 2010;7(2):e1000217.

Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Med. 2007;4(3):e78.

Chan AW, Altman DG. Epidemiology and reporting of randomised trials published in PubMed journals. Lancet. 2005;365(9465):1159–62.

Alshurafa M, Briel M, Akl EA, Haines T, Moayyedi P, Gentles SJ, Rios L, Tran C, Bhatnagar N, Lamontagne F, et al. Inconsistent definitions for intention-to-treat in relation to missing outcome data: systematic review of the methods literature. PLoS One. 2012;7(11):e49163.

Article CAS PubMed PubMed Central Google Scholar

Gentles SJ, Charles C, Ploeg J, McKibbon KA. Sampling in qualitative research: insights from an overview of the methods literature. Qual Rep. 2015;20(11):1772–89.

Harzing A-W, Alakangas S. Google Scholar, Scopus and the Web of Science: a longitudinal and cross-disciplinary comparison. Scientometrics. 2016;106(2):787–804.

Harzing A-WK, van der Wal R. Google Scholar as a new source for citation analysis. Ethics Sci Environ Polit. 2008;8(1):61–73.

Kousha K, Thelwall M. Google Scholar citations and Google Web/URL citations: a multi‐discipline exploratory analysis. J Assoc Inf Sci Technol. 2007;58(7):1055–65.

Hirsch JE. An index to quantify an individual’s scientific research output. Proc Natl Acad Sci U S A. 2005;102(46):16569–72.

Booth A, Carroll C. How to build up the actionable knowledge base: the role of ‘best fit’ framework synthesis for studies of improvement in healthcare. BMJ Quality Safety. 2015;24(11):700–8.

Carroll C, Booth A, Leaviss J, Rick J. “Best fit” framework synthesis: refining the method. BMC Med Res Methodol. 2013;13(1):37.

Carroll C, Booth A, Cooper K. A worked example of “best fit” framework synthesis: a systematic review of views concerning the taking of some potential chemopreventive agents. BMC Med Res Methodol. 2011;11(1):29.

Cohen MZ, Kahn DL, Steeves DL. Hermeneutic phenomenological research: a practical guide for nurse researchers. Thousand Oaks: Sage; 2000.

Noblit GW, Hare RD. Meta-ethnography: synthesizing qualitative studies. Newbury Park: Sage; 1988.

Book Google Scholar

Melendez-Torres GJ, Grant S, Bonell C. A systematic review and critical appraisal of qualitative metasynthetic practice in public health to develop a taxonomy of operations of reciprocal translation. Res Synthesis Methods. 2015;6(4):357–71.

Article CAS Google Scholar

Glaser BG, Strauss A. The discovery of grounded theory. Chicago: Aldine; 1967.

Dixon-Woods M, Agarwal S, Young B, Jones D, Sutton A. Integrative approaches to qualitative and quantitative evidence. In: UK National Health Service. 2004. p. 1–44.

Download references

Acknowledgements

Not applicable.

There was no funding for this work.

Availability of data and materials

The systematic methods overview used as a worked example in this article (Gentles SJ, Charles C, Ploeg J, McKibbon KA: Sampling in qualitative research: insights from an overview of the methods literature. The Qual Rep 2015, 20(11):1772-1789) is available from http://nsuworks.nova.edu/tqr/vol20/iss11/5 .

Authors’ contributions

SJG wrote the first draft of this article, with CC contributing to drafting. All authors contributed to revising the manuscript. All authors except CC (deceased) approved the final draft. SJG, CC, KAB, and JP were involved in developing methods for the systematic methods overview on sampling.

Authors’ information

Competing interests.

The authors declare that they have no competing interests.

Consent for publication

Ethics approval and consent to participate, author information, authors and affiliations.

Department of Clinical Epidemiology and Biostatistics, McMaster University, Hamilton, Ontario, Canada

Stephen J. Gentles, Cathy Charles & K. Ann McKibbon

Faculty of Social Work, University of Calgary, Alberta, Canada

David B. Nicholas

School of Nursing, McMaster University, Hamilton, Ontario, Canada

Jenny Ploeg

CanChild Centre for Childhood Disability Research, McMaster University, 1400 Main Street West, IAHS 408, Hamilton, ON, L8S 1C7, Canada

Stephen J. Gentles

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Stephen J. Gentles .

Additional information

Cathy Charles is deceased

Additional file

Additional file 1:.

Submitted: Analysis_matrices. (DOC 330 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

Reprints and permissions

About this article

Cite this article.

Gentles, S.J., Charles, C., Nicholas, D.B. et al. Reviewing the research methods literature: principles and strategies illustrated by a systematic overview of sampling in qualitative research. Syst Rev 5 , 172 (2016). https://doi.org/10.1186/s13643-016-0343-0

Download citation

Received : 06 June 2016

Accepted : 14 September 2016

Published : 11 October 2016

DOI : https://doi.org/10.1186/s13643-016-0343-0

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

- Systematic review

- Literature selection

- Research methods

- Research methodology

- Overview of methods

- Systematic methods overview

- Review methods

Systematic Reviews

ISSN: 2046-4053

- Submission enquiries: Access here and click Contact Us

- General enquiries: [email protected]

Mixed methods research: what it is and what it could be

- Open access

- Published: 29 March 2019

- Volume 48 , pages 193–216, ( 2019 )

Cite this article

You have full access to this open access article

- Rob Timans 1 ,

- Paul Wouters 2 &

- Johan Heilbron 3

112k Accesses

90 Citations

13 Altmetric

Explore all metrics

A Correction to this article was published on 06 May 2019

This article has been updated

Combining methods in social scientific research has recently gained momentum through a research strand called Mixed Methods Research (MMR). This approach, which explicitly aims to offer a framework for combining methods, has rapidly spread through the social and behavioural sciences, and this article offers an analysis of the approach from a field theoretical perspective. After a brief outline of the MMR program, we ask how its recent rise can be understood. We then delve deeper into some of the specific elements that constitute the MMR approach, and we engage critically with the assumptions that underlay this particular conception of using multiple methods. We conclude by offering an alternative view regarding methods and method use.

Similar content being viewed by others

Mixed Methods

Avoid common mistakes on your manuscript.

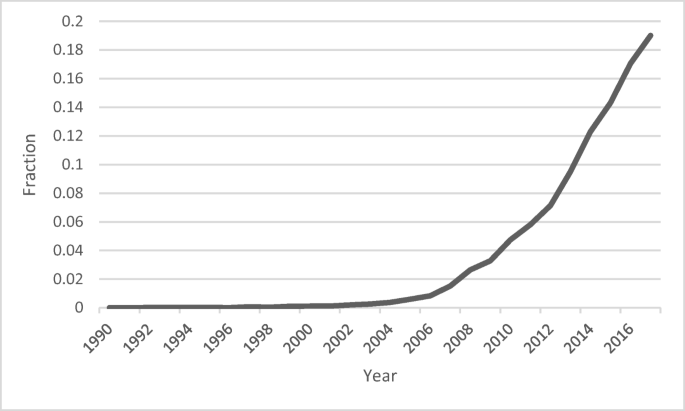

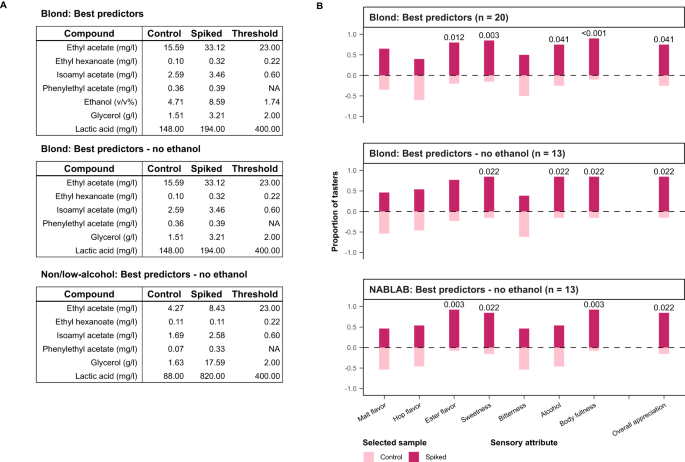

The interest in combining methods in social scientific research has a long history. Terms such as “triangulation,” “combining methods,” and “multiple methods” have been around for quite a while to designate using different methods of data analysis in empirical studies. However, this practice has gained new momentum through a research strand that has recently emerged and that explicitly aims to offer a framework for combining methods. This approach, which goes by the name of Mixed Methods Research (MMR), has rapidly become popular in the social and behavioural sciences. This can be seen, for instance, in Fig. 1 , where the number of publications mentioning “mixed methods” in the title or abstract in the Thomson Reuters Web of Science is depicted. The number increased rapidly over the past ten years, especially after 2006. Footnote 1

Fraction of the total of articles mentioning Mixed Method Research appearing in a given year, 1990–2017 (yearly values sum to 1). See footnote 1

The subject of mixed methods thus seems to have gained recognition among social scientists. The rapid rise of the number of articles mentioning the term raises various sociological questions. In this article, we address three of these questions. The first question concerns the degree to which the approach of MMR has become institutionalized within the field of the social sciences. Has MMR become a recognizable realm of knowledge production? Has its ascendance been accompanied by the production of textbooks, the founding of journals, and other indicators of institutionalization? The answer to this question provides an assessment of the current state of MMR. Once that is determined, the second question is how MMR’s rise can be understood. Where does the approach come from and how can its emergence and spread be understood? To answer this question, we use Pierre Bourdieu’s field analytical approach to science and academic institutions (Bourdieu 1975 , 1988 , 2004 , 2007 ; Bourdieu et al. 1991 ). We flesh out this approach in the next section. The third question concerns the substance of the MMR corpus seen in the light of the answers to the previous questions: how can we interpret the specific content of this approach in the context of its socio-historical genesis and institutionalization, and how can we understand its proposal for “mixing methods” in practice?

We proceed as follows. In the next section, we give an account of our theoretical approach. Then, in the third, we assess the degree of institutionalization of MMR, drawing on the indicators of academic institutionalization developed by Fleck et al. ( 2016 ). In the fourth section, we address the second question by examining the position of the academic entrepreneurs behind the rise of MMR. The aim is to understand these agents’ engagement in MMR, as well as its distinctive content as being informed by their position in this field. Viewing MMR as a position-taking of academic entrepreneurs, linked to their objective position in this field, allows us to reflect sociologically on the substance of the approach. We offer this reflection in the fifth section, where we indicate some problems with MMR. To get ahead of the discussion, these problems have to do with the framing of MMR as a distinct methodology and its specific conceptualization of data and methods of data analysis. We argue that these problems hinder fruitfully combining methods in a practical understanding of social scientific research. Finally, we conclude with some tentative proposals for an alternative view on combining methods.

A field approach

Our investigation of the rise and institutionalization of MMR relies on Bourdieu’s field approach. In general, field theory provides a model for the structural dimensions of practices. In fields, agents occupy a position relative to each other based on the differences in the volume and structure of their capital holdings. Capital can be seen as a resource that agents employ to exert power in the field. The distribution of the form of capital that is specific to the field serves as a principle of hierarchization in the field, differentiating those that hold more capital from those that hold less. This principle allows us to make a distinction between, respectively, the dominant and dominated factions in a field. However, in mature fields all agents—dominant and dominated—share an understanding of what is at stake in the field and tend to accept its principle of hierarchization. They are invested in the game, have an interest in it, and share the field’s illusio .

In the present case, we can interpret the various disciplines in the social sciences as more or less autonomous spaces that revolve around the shared stake in producing legitimate scientific knowledge by the standards of the field. What constitutes legitimate knowledge in these disciplinary fields, the production of which bestows scholars with prestige and an aura of competence, is in large part determined by the dominant agents in the field, who occupy positions in which most of the consecration of scientific work takes place. Scholars operating in a field are endowed with initial and accumulated field-specific capital, and are engaged in the struggle to gain additional capital (mainly scientific and intellectual prestige) in order to advance their position in the field. The main focus of these agents will generally be the disciplinary field in which they built their careers and invested their capital. These various disciplinary spaces are in turn part of a broader field of the social sciences in which the social status and prestige of the various disciplines is at stake. The ensuing disciplinary hierarchy is an important factor to take into account when analysing the circulation of new scientific products such as MMR. Furthermore, a distinction needs to be made between the academic and the scientific field. While the academic field revolves around universities and other degree-granting institutions, the stakes in the scientific field entail the production and valuation of knowledge. Of course, in modern science these fields are closely related, but they do not coincide (Gingras and Gemme 2006 ). For instance, part of the production of legitimate knowledge takes place outside of universities.

This framework makes it possible to contextualize the emergence of MMR in a socio-historical way. It also enables an assessment of some of the characteristics of MMR as a scientific product, since Bourdieu insists on the homology between the objective positions in a field and the position-takings of the agents who occupy these positions. As a new methodological approach, MMR is the result of the position-takings of its producers. The position-takings of the entrepreneurs at the core of MMR can therefore be seen as expressions in the struggles over the authority to define the proper methodology that underlies good scientific work regarding combining methods, and the potential rewards that come with being seen, by other agents, as authoritative on these matters. Possible rewards include a strengthened autonomy of the subfield of MMR and an improved position in the social-scientific field.

The role of these entrepreneurs or ‘intellectual leaders’ who can channel intellectual energy and can take the lead in institution building has been emphasised by sociologists of science as an important aspect of the production of knowledge that is visible and recognized as distinct in the larger scientific field (e.g., Mullins 1973 ; Collins 1998 ). According to Bourdieu, their position can, to a certain degree, explain the strategy they pursue and the options they perceive to be viable in the trade-off regarding the risks and potential rewards for their work.

We do not provide a full-fledged field analysis of MMR here. Rather, we use the concept as a heuristic device to account for the phenomenon of MMR in the social context in which it emerged and diffused. But first, we take stock of the current situation of MMR by focusing on the degree of institutionalization of MMR in the scientific field.

The institutionalization of mixed methods research

When discussing institutionalization, we have to be careful about what we mean by this term. More precisely, we need to be specific about the context and distinguish between institutionalization in the academic field and institutionalization within the scientific field (see Gingras and Gemme 2006 ; Sapiro et al. 2018 ). The first process refers to the establishment of degrees, curricula, faculties, etc., or to institutions tied to the academic bureaucracy and academic politics. The latter refers to the emergence of institutions that support the autonomization of scholarship such as scholarly associations and scientific journals. Since MMR is still a relatively young phenomenon and academic institutionalization tends to lag scientific institutionalization (e.g., for the case of sociology and psychology, see Sapiro et al. 2018 , p. 26), we mainly focus here on the latter dimension.

Drawing on criteria proposed by Fleck et al. ( 2016 ) for the institutionalization of academic disciplines, MMR seems to have achieved a significant degree of institutionalization within the scientific field. MMR quickly gained popularity in the first decade of the twenty-first century (e.g., Tashakkori and Teddlie 2010c , pp. 803–804). A distinct corpus of publications has been produced that aims to educate those interested in MMR and to function as a source of reference for researchers: there are a number of textbooks (e.g., Plowright 2010 ; Creswell and Plano Clark 2011 ; Teddlie and Tashakkori 2008 ); a handbook that is now in its second edition (Tashakkori and Teddlie 2003 , 2010a ); as well as a reader (Plano Clark and Creswell 2007 ). Furthermore, a journal (the Journal of Mixed Methods Research [ JMMR] ) was established in 2007. The JMMR was founded by the editors John Creswell and Abbas Tashakkori with the primary aim of “building an international and multidisciplinary community of mixed methods researchers.” Footnote 2 Contributions to the journal must “fit the definition of mixed methods research” Footnote 3 and explicitly integrate qualitative and quantitative aspects of research, either in an empirical study or in a more theoretical-methodologically oriented piece.

In addition, general textbooks on social research methods and methodology now increasingly devote sections to the issue of combining methods (e.g., Creswell 2008 ; Nagy Hesse-Biber and Leavy 2008 ; Bryman 2012 ), and MMR has been described as a “third paradigm” (Denscombe 2008 ), a “movement” (Bryman 2009 ), a “third methodology” (Tashakkori and Teddlie 2010b ), a “distinct approach” (Greene 2008 ) and an “emerging field” (Tashakkori and Teddlie 2011 ), defined by a common name (that sets it apart from other approaches to combining methods) and shared terminology (Tashakkori and Teddlie 2010b , p. 19). As a further indication of institutionalization, a research association (the Mixed Methods International Research Association—MMIRA) was founded in 2013 and its inaugural conference was held in 2014. Prior to this, there have been a number of conferences on MMR or occasions on which MMR was presented and discussed in other contexts. An example of the first is the conference on mixed method research design held in Basel in 2005. Starting also in 2005, the British Homerton School of Health Studies has organised a series of international conferences on mixed methods. Moreover, MMR was on the list of sessions in a number of conferences on qualitative research (see, e.g., Creswell 2012 ).

Another sign of institutionalization can be found in efforts to forge a common disciplinary identity by providing a narrative about its history. This involves the identification of precursors and pioneers as well as an interpretation of the process that gave rise to a distinctive set of ideas and practices. An explicit attempt to chart the early history of MMR is provided by Johnson and Gray ( 2010 ). They frame MMR as rooted in the philosophy of science, particularly as a way of thinking about science that has transcended some of the most salient historical oppositions in philosophy. Philosophers like Aristotle and Kant are portrayed as thinkers who sought to integrate opposing stances, forwarding “proto-mixed methods ideas” that exhibited the spirit of MMR (Johnson and Gray 2010 , p. 72, p. 86). In this capacity, they (as well as other philosophers like Vico and Montesquieu) are presented as part of MMR providing a philosophical validation of the project by presenting it as a continuation of ideas that have already been voiced by great thinkers in the past.

In the second edition of their textbook, Creswell and Plano Clark ( 2011 ) provide an overview of the history of MMR by identifying five historical stages: the first one being a precursor to the MMR approach, consisting of rather atomised attempts by different authors to combine methods in their research. For Creswell and Plano Clark, one of the earliest examples is Campbell and Fiske’s ( 1959 ) combination of quantitative methods to improve the validity of psychological scales that gave rise to the triangulation approach to research. However, they regard this and other studies that combined methods around that time, as “antecedents to (…) more systematic attempts to forge mixed methods into a complete research design” (Creswell and Plano Clark 2011 , p. 21), and hence label this stage as the “formative period” (ibid., p. 25). Their second stage consists of the emergence of MMR as an identifiable research strand, accompanied by a “paradigm debate” about the possibility of combining qualitative and quantitative data. They locate its beginnings in the late 1980s when researchers in various fields began to combine qualitative and quantitative methods (ibid., pp. 20–21). This provoked a discussion about the feasibility of combining data that were viewed as coming from very different philosophical points of view. The third stage, the “procedural development period,” saw an emphasis on developing more hands-on procedures for designing a mixed methods study, while stage four is identified as consisting of “advocacy and expansion” of MMR as a separate methodology, involving conferences, the establishment of a journal and the first edition of the aforementioned handbook (Tashakkori and Teddlie 2003 ). Finally, the fifth stage is seen as a “reflective period,” in which discussions about the unique philosophical underpinnings and the scientific position of MMR emerge.

Creswell and Plano Clark thus locate the emergence of “MMR proper” at the second stage, when researchers started to use both qualitative and quantitative methods within a single research effort. As reasons for the emergence of MMR at this stage they identify the growing complexity of research problems, the perception of qualitative research as a legitimate form of inquiry (also by quantitative researchers) and the increasing need qualitative researchers felt for generalising their findings. They therefore perceive the emergence of the practice of combining methods as a bottom up process that grew out of research practices, and at some point in time converged towards a more structural approach. Footnote 4 Historical accounts such as these add a cognitive dimension to the efforts to institutionalize MMR. They lay the groundwork for MMR as a separate subfield with its own identity, topics, problems and intellectual history. The use of terms such as “third paradigm” and “third methodology” also suggests that there is a tendency to perceive and promote MMR as a distinct and coherent way to do research.

In view of the brief exploration of the indicators of institutionalisation of MMR, it seems reasonable to conclude that MMR has become a recognizable and fairly institutionalized strand of research with its own identity and profile within the social scientific field. This can be seen both from the establishment of formal institutions (like associations and journals) and more informal ones that rely more on the tacit agreement between agents about “what MMR is” (an example of this, which we address later in the article, is the search for a common definition of MMR in order to fix the meaning of the term). The establishment of these institutions supports the autonomization of MMR and its emancipation from the field in which it originated, but in which it continues to be embedded. This way, it can be viewed as a semi-autonomous subfield within the larger field of the social sciences and as the result of a differentiation internal to this field (Steinmetz 2016 , p. 109). It is a space that is clearly embedded within this higher level field; for example, members of the subfield of MMR also qualify as members of the overarching field, and the allocation of the most valuable and current form of capital is determined there as well. Nevertheless, as a distinct subfield, it also has specific principles that govern the production of knowledge and the rewards of domination.

We return to the content and form of this specific knowledge later in the article. The next section addresses the question of the socio-genesis of MMR.

Where does mixed methods research come from?

The origins of the subfield of MMR lay in the broader field of social scientific disciplines. We interpret the positions of the scholars most involved in MMR (the “pioneers” or “scientific entrepreneurs”) as occupying particular positions within the larger academic and scientific field. Who, then, are the researchers at the heart of MMR? Leech ( 2010 ) interviewed 4 scholars (out of 6) that she identified as early developers of the field: Alan Bryman (UK; sociology), John Creswell (USA; educational psychology), Jennifer Greene (USA; educational psychology) and Janice Morse (USA; nursing and anthropology). Educated in the 1970s and early 1980s, all four of them indicated that they were initially trained in “quantitative methods” and later acquired skills in “qualitative methods.” For two of them (Bryman and Creswell) the impetus to learn qualitative methods was their involvement in writing on, and teaching of, research methods; for Greene and Morse the initial motivation was more instrumental and related to their concrete research activity at the time. Creswell describes himself as “a postpositivist in the 1970s, self-education as a constructivist through teaching qualitative courses in the 1980s, and advocacy for mixed methods (…) from the 1990s to the present” (Creswell 2011 , p. 269). Of this group, only Morse had the benefit of learning about qualitative methods as part of her educational training (in nursing and anthropology; Leech 2010 , p. 267). Independently, Creswell ( 2012 ) identified (in addition to Bryman, Greene and Morse) John Hunter, Allen Brewer (USA; Northwestern and Boston College) and Nigel Fielding (University of Surrey, UK) as important early movers in MMR.

The selections that Leech and Creswell make regarding the key actors are based on their close involvement with the “MMR movement.” It is corroborated by a simple analysis of the articles that appeared in the Journal of Mixed Methods Research ( JMMR ), founded in 2007 as an outlet for MMR.

Table 1 lists all the authors that have published in the issues of the journal since its first publication in 2007 and that have either received more than 14 (4%) of the citations allocated between the group of 343 authors (the TLCS score in Table 1 ), or have written more than 2 articles for the Journal (1.2% of all the articles that have appeared from 2007 until October 2013) together with their educational background (i.e., the discipline in which they completed their PhD).