The Historiography of Scientific Revolutions: A Philosophical Reflection

- First Online: 16 May 2023

- Yafeng Shan ORCID: orcid.org/0000-0001-7736-1822 3

Part of the book series: Historiographies of Science ((HISTSC))

Scientific revolution has been one of the most controversial topics in the history and philosophy of science. Yet there has been no consensus on what is the best unit of analysis in the historiography of scientific revolutions. Nor is there a consensus on what best explains the nature of scientific revolutions. This chapter provides a critical examination of the historiography of scientific revolutions. It begins with a brief introduction to the historical development of the concept of scientific revolution, followed by an overview of the five main philosophical accounts of scientific revolutions. It then challenges two historiographical assumptions of the philosophical analyses of scientific revolutions.

Acknowledgements

An early draft of this chapter was presented at Evidence Seminar, University of Kent on 24 May 2022. I thank the audience there for the helpful comments and discussion.

Author information

Authors and affiliations.

Hong Kong University of Science and Technology, Hong Kong, China

Yafeng Shan

Corresponding author

Correspondence to Yafeng Shan.

Editor information

Editors and affiliations.

Universidade Federal de Minas Gerais, Belo Horizonte, Brazil

Mauro L. Condé

Universidade Federal de Goiás, Goiânia, Brazil

Marlon Salomon

Copyright information

© 2023 Springer Nature Switzerland AG

About this entry

Cite this entry.

Shan, Y. (2023). The Historiography of Scientific Revolutions: A Philosophical Reflection. In: Condé, M.L., Salomon, M. (eds) Handbook for the Historiography of Science. Historiographies of Science. Springer, Cham. https://doi.org/10.1007/978-3-030-99498-3_12-1

DOI : https://doi.org/10.1007/978-3-030-99498-3_12-1

Received : 11 August 2022

Accepted : 14 December 2022

Published : 16 May 2023

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-99498-3

Online ISBN : 978-3-030-99498-3

eBook Packages : Springer Reference Humanities, Soc. Sciences and Law

Chapter history

DOI: https://doi.org/10.1007/978-3-030-99498-3_12-2

DOI: https://doi.org/10.1007/978-3-030-99498-3_12-1

World History Project - 1750 to the Present

First read: preview and skimming for gist

Second read: key ideas and understanding content.

- What is the usual story of the Scientific Revolution?

- How does the author challenge the usual story of the Scientific Revolution?

- Who participated in the Scientific Revolution?

- What were some negative social effects of the Scientific Revolution?

- Does the author think the Scientific Revolution caused the Industrial Revolution?

Third read: evaluating and corroborating

- You just read an article about scale and the Industrial Revolution. In that article, the author questioned whether the Industrial Revolution happened in Britain because of local or global factors. What do you think explains the emergence of the Scientific Revolution in Europe during the sixteenth and seventeenth centuries? Was this the result of local or global processes?

- Using the networks frame, explain why the Scientific Revolution happened in Europe and how it might have led to the Industrial Revolution.

The Scientific Revolution

Was it revolutionary? Was it European? Whose revolution? Did it cause the Industrial Revolution?

- The word other can refer to the otherness of marginalized people. Anyone not belonging to the most powerful or privileged class can be a type of “other” due to race, gender, religion, socio-economic status, etc.

- It’s hard to say exactly when people started thinking about race, but it’s definitely not a natural and ancient idea. Of course, people had a sense of others outside their community, who they often looked down upon, but that wasn’t the same as seeing people as different races. For Europeans in the medieval period, humans were sorted into Christians, Jews, and heathens.

11.5: Scientific Revolution

Figure of the heavenly bodies — An illustration of the Ptolemaic geocentric system by Portuguese cosmographer and cartographer Bartolomeu Velho, 1568 (Bibliothèque Nationale, Paris) Source: https://commons.wikimedia.org/wiki/F...Velho_1568.jpg

Roots of the Scientific Revolution

The word "revolution" connotes a period of turmoil and social upheaval in which ideas about the world change drastically and a completely new era of academic thought is ushered in. In that sense, the term Scientific Revolution (1543–1687) describes quite accurately what took place in the scientific community. Medieval scientific philosophy was abandoned in favor of the new methods proposed by Bacon, Galileo, Descartes, and Newton. The importance of experimentation to the scientific method was reaffirmed. The importance of God to science was for the most part invalidated. Last, the pursuit of science itself (rather than philosophy) gained validity on its own terms. The medieval idea of science changed for four reasons:

- Seventeenth-century scientists and philosophers were able to collaborate with members of the mathematical and astronomical communities to effect advances in all fields.

- Scientists realized the inadequacy of medieval experimental methods for their work and so felt the need to devise new methods (some of which we use today).

- Academics had access to a legacy of European, Greek, and Middle Eastern scientific philosophy they could use as a starting point (either by disproving or building on the theorems).

- Groups like the British Royal Society helped validate science as a field by providing an outlet for the publication of scientists' work.

These changes were not immediate, nor did they directly create the experimental method used today. But they did represent a step toward Enlightenment thinking (with an emphasis on reason) that was revolutionary for the time.

Science and Philosophy Before the Revolution

At the end of the sixteenth century, the title "natural philosopher" carried much more academic clout than any purely scientific label. So the majority of the research on scientific theory was conducted not in the scientific realm but in philosophy, where "scientific methods" like empiricism and teleology were promoted.

In the 17th century, empiricism and teleology survived as remnants of medieval thought that philosophers still used. Empiricism is a theory that reality consists solely of what one physically experiences. Teleology is the idea that phenomena exist only because they have a purpose, i.e., because God wills them to exist. Neither theory required fact-gathering, hypothesis writing, or controlled experimentation.

Robert Boyle (1627–1691), an Irish-born English scientist, was an early supporter of the scientific method and the founder of modern chemistry. Source: https://commons.wikimedia.org/wiki/F...bert_Boyle.jpg

The defining feature of the scientific revolution lies in how much scientific thought changed during a period of only a century. Modern experimental methods incorporate Francis Bacon's focus on the use of controlled experiments and inductive reasoning, Galileo's emphasis on incorporation of established laws from all disciplines (math, astronomy, chemistry, biology, physics) in coming to a conclusion through mechanism, and Newton's method of composition. Each successive method strengthened the validity of the next.

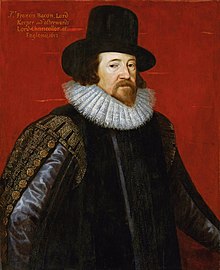

Francis Bacon (1561-1626)

Bacon represents the first step away from sixteenth-century thinking, in that he denied the validity of empiricism. He preferred inductive reasoning (drawing a general conclusion from a set of specific observations) to deductive reasoning (making an inference based on widely-accepted facts or premises).

He emphasized the necessity of fact-gathering as a first step in the scientific method, which could then be followed by carefully recorded and controlled (unbiased) experimentation. Further, experimentation should not be conducted to simply "see what happens" but "as a way of answering specific questions." Moreover, he held that the main purpose of science was the betterment of human society and that experimentation should be applied to hard, real situations rather than to Aristotelian abstract ideas. His work influenced advances in chemistry and biology through the 18th century.

Justus Sustermans - Portrait of Galileo Galilei, 1636

Galileo Galilei (1564-1642)

Galileo argued that "an explanation of a scientific problem is truly begun when it is reduced to its basic terms of matter and motion," because only the most basic events occur because of one axiom.

For example, one can demonstrate the concept of "acceleration" in the laboratory with a ball and a slanted board. However, according to Galileo's reasoning, one would have to utilize the concepts of many different disciplines: the physics-based concepts of time and distance, the idea of gravity, force, and mass, or even the chemical composition of the element that is accelerating. All of these components could be individually broken down to their smallest elements in order for a scientist to fully understand the item as a whole.
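
As a rough illustration of reducing the slanted-board demonstration to matter and motion, the sketch below assumes an idealized, frictionless incline with constant acceleration a = g * sin(angle). It checks that the distances covered in successive equal time intervals grow in the ratio 1 : 3 : 5 : 7. The function names, the 10-degree angle, and the value of g are illustrative assumptions, not part of the original text.

```python
import math

G = 9.81  # assumed gravitational acceleration in m/s^2


def distance_along_incline(t, angle_deg, g=G):
    """Distance travelled from rest after t seconds on a frictionless incline."""
    acceleration = g * math.sin(math.radians(angle_deg))
    return 0.5 * acceleration * t ** 2


def interval_ratios(angle_deg, n_intervals=4):
    """Ratio of distances covered in successive one-second intervals."""
    positions = [distance_along_incline(t, angle_deg) for t in range(n_intervals + 1)]
    steps = [positions[i + 1] - positions[i] for i in range(n_intervals)]
    return [round(step / steps[0], 6) for step in steps]


if __name__ == "__main__":
    # The ratios do not depend on the angle, only on constant acceleration.
    print(interval_ratios(10))
```

The angle changes how far the ball travels, but not the pattern of ratios, which is one way to see why breaking the motion down to time, distance, and a constant acceleration is so informative.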

This "mechanic" or "systemic" approach partially removed the burden of fact-gathering emphasized by Bacon. Galileo's experimental method aided advances in chemistry and biology by allowing biologists to explain the work of a muscle or any body function using existing ideas of motion, matter, energy, and other basic principles.

Robert Boyle (1627-1691)

Even though he made progress in the field of chemistry through Baconian experimentation, Boyle remained drawn to teleological explanations for scientific phenomena. For example, Boyle believed that because God established some rules of motion and nature, phenomena must exist to serve a certain purpose within that established order.

Sir Isaac Newton (1643-1727)

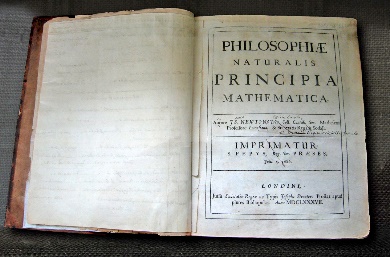

Newton composed a set of four rules for scientific reasoning, stated in his famed Principia. His analytical method and laws lent themselves well to experimentation with mathematical physics, where conclusions "could then be confirmed by direct observation." Newton also refined Galileo's experimental method: experiments and observations came first, followed by induced conclusions that could be overturned only by other, better-substantiated truths. Essentially, through his physical and mathematical approach to experimental design, Newton established a clear distinction between "natural philosophy" and "physical science."

All of these natural philosophers built upon the work of their contemporaries. This collaboration became even simpler with the establishment of professional societies for scientists that published journals and provided forums for scientific discussion.

In Aristotelian metaphysics, abstractions dominated. All entities were understood as following rules based on their essences. Each substance had a proper or natural place. In astronomy, space was divided into two main regions. The stars resided in the higher region and were fixed in space. The planets and the moon resided in the lower region and revolved around the Earth. The sun also revolved around the Earth. Celestial and earthly motion was understood in terms of bodies seeking their natural resting places.

By the completion of the Scientific Revolution, most Aristotelian notions were overthrown. Space was reconceived as isotropic and non-hierarchical. Matter was seen as compositionally consistent throughout the universe. Motion was understood in terms of forces acting upon otherwise inert bodies. Finally, with Isaac Newton, "gravity" was introduced as the single fundamental operative force of the entire universe.

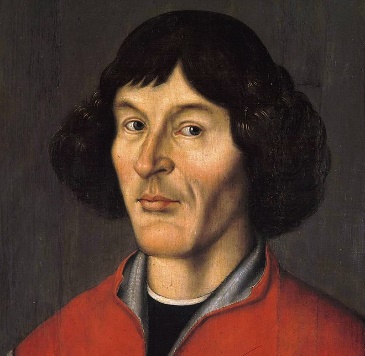

Nicolaus Copernicus (1473–1543) was a mathematician, lawyer, physician, and classicist. He was also a polyglot, a fluent speaker and writer in several languages, including Latin, Polish, German, Greek, and Italian. Most importantly for posterity, Copernicus was the astronomer credited with founding the field of modern astronomy.

Copernicus's major work, On the Revolutions of the Heavenly Spheres, was published in 1543, the year of his death. In this great work, he made a radical transformation of the system developed by Ptolemy (ca. 87–150 CE). Contrary to the Ptolemaic system, Copernicus posited a heliocentric model wherein the Earth and other planets, including Mercury, Venus, Mars, Jupiter, and Saturn, were carried by spheres around a stationary sun.

De revolutionibus, p. 9. Heliocentric model of the solar system in Copernicus' manuscript Source: https://en.Wikipedia.org/wiki/File:D...script_p9b.jpg

In the Ptolemaic system, the Earth had been figured as the stationary center of the universe around which the planets and sun revolved. In the Copernican system, on the other hand, the Earth was merely "another planet," that is, a "wandering star."

Because his philosophy and theology held that God created only perfect order and harmony, Copernicus envisioned the planetary revolutions as perfectly circular. In order to account for some locations of planets and moons, he retained the auxiliary theory of epicycles that had been a part of the Ptolemaic system. In Copernicus's use of epicycles, a planet moved on a small circle whose center in turn circled the sun.

Like a majority of Europeans of his time, Copernicus was an adherent of the Catholic Church. Yet a heliocentric universe ran contrary to the Christian, Earth-centered cosmology. That's why his On the Revolutions of the Heavenly Spheres was not published until the end of his life.

While Copernicus's ideas were revolutionary at the time, they did not cause a revolution in the study of astronomy until taken up by Galileo Galilei and Johannes Kepler several decades later.

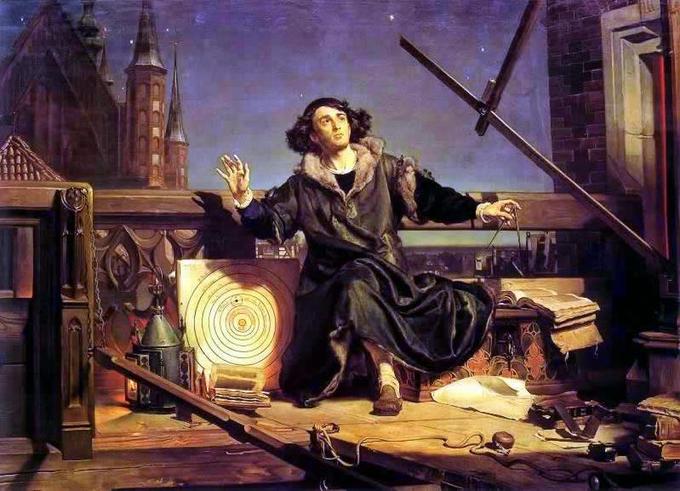

Jan Matejko-Astronomer Copernicus-Conversation with God Oil painting by the Polish artist Jan Matejko, finished in 1873, depicting Nicolaus Copernicus observing the heavens from a balcony by a tower near the cathedral in Frombork. Source: https://en.Wikipedia.org/wiki/Jan_Ma...n_with_God.jpg

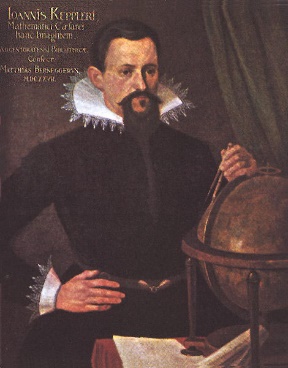

While accepting the central feature of Copernicus's model (a stationary sun and revolving planets), Johannes Kepler (1571-1630) threw out the idea of epicycles. In their place, he introduced a wholly new conception of the universe that is still essentially accepted today. In his book The New Astronomy (1609), his "elliptical thesis" boldly declared that the planets, including the Earth, revolve around a stationary sun in ellipses, rather than in perfect circles.

Under Aristotle's metaphysics, an object's movement toward its "natural" resting place accounted for its motion. Kepler introduced the modern notion of physical forces as the causes of motion. For Kepler, a planet or other lifeless object is without an internal or active force of its own. Motion is derived only from external forces acting on the object. This particular insight was essential for Isaac Newton's breakthrough formulation of the law of gravity.

Attribution: Material modified from CK-12 6.3 The Scientific Revolution

What is a scientific hypothesis?

It's the initial building block in the scientific method.

A scientific hypothesis is a tentative, testable explanation for a phenomenon in the natural world. It's the initial building block in the scientific method. Many describe it as an "educated guess" based on prior knowledge and observation. While this is true, a hypothesis is more informed than a guess. While an "educated guess" suggests a random prediction based on a person's expertise, developing a hypothesis requires active observation and background research.

The basic idea of a hypothesis is that there is no predetermined outcome. For a solution to be termed a scientific hypothesis, it has to be an idea that can be supported or refuted through carefully crafted experimentation or observation. This requirement, known as falsifiability or testability, was advanced in the mid-20th century by Austrian-British philosopher Karl Popper in his famous book "The Logic of Scientific Discovery" (Routledge, 1959).

A key function of a hypothesis is to derive predictions about the results of future experiments and then perform those experiments to see whether they support the predictions.

A hypothesis is usually written in the form of an if-then statement, which gives a possibility (if) and explains what may happen because of the possibility (then). The statement could also include "may," according to California State University, Bakersfield.

Here are some examples of hypothesis statements:

- If garlic repels fleas, then a dog that is given garlic every day will not get fleas.

- If sugar causes cavities, then people who eat a lot of candy may be more prone to cavities.

- If ultraviolet light can damage the eyes, then maybe this light can cause blindness.

A useful hypothesis should be testable and falsifiable. That means that it should be possible to prove it wrong. A theory that can't be proved wrong is nonscientific, according to Karl Popper's 1963 book "Conjectures and Refutations."

An example of an untestable statement is, "Dogs are better than cats." That's because the definition of "better" is vague and subjective. However, an untestable statement can be reworded to make it testable. For example, the previous statement could be changed to this: "Owning a dog is associated with higher levels of physical fitness than owning a cat." With this statement, the researcher can take measures of physical fitness from dog and cat owners and compare the two.

Types of scientific hypotheses

In an experiment, researchers generally state their hypotheses in two ways. The null hypothesis predicts that there will be no relationship between the variables tested, or no difference between the experimental groups. The alternative hypothesis predicts the opposite: that there will be a difference between the experimental groups. This is usually the hypothesis scientists are most interested in, according to the University of Miami.

For example, a null hypothesis might state, "There will be no difference in the rate of muscle growth between people who take a protein supplement and people who don't." The alternative hypothesis would state, "There will be a difference in the rate of muscle growth between people who take a protein supplement and people who don't."

If the results of the experiment show a relationship between the variables, then the null hypothesis has been rejected in favor of the alternative hypothesis, according to the book "Research Methods in Psychology" (BCcampus, 2015).

There are other ways to describe an alternative hypothesis. The alternative hypothesis above does not specify a direction of the effect, only that there will be a difference between the two groups. That type of prediction is called a two-tailed hypothesis. If a hypothesis specifies a certain direction (for example, that people who take a protein supplement will gain more muscle than people who don't), it is called a one-tailed hypothesis, according to William M. K. Trochim, a professor of Policy Analysis and Management at Cornell University.
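
To make the null, one-tailed, and two-tailed ideas concrete, here is a minimal sketch of an exact permutation test on the protein-supplement example. The muscle-growth numbers are invented purely for illustration, and the function name is hypothetical. The permutation test is one of several possible ways to test such hypotheses; it is not a method named in the sources cited here.

```python
from itertools import combinations
from statistics import mean


def permutation_p_values(treated, control):
    """Return (one_tailed, two_tailed) p-values for a difference in means.

    Under the null hypothesis the group labels are interchangeable, so every
    way of splitting the pooled data into two groups is equally likely.
    """
    pooled = list(treated) + list(control)
    observed = mean(treated) - mean(control)
    diffs = []
    for idx in combinations(range(len(pooled)), len(treated)):
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in idx]
        diffs.append(mean(group_a) - mean(group_b))
    eps = 1e-12  # tolerance for floating-point ties
    # One-tailed: splits at least as favourable to the treated group.
    one_tailed = sum(d >= observed - eps for d in diffs) / len(diffs)
    # Two-tailed: splits at least as extreme in either direction.
    two_tailed = sum(abs(d) >= abs(observed) - eps for d in diffs) / len(diffs)
    return one_tailed, two_tailed


if __name__ == "__main__":
    # Made-up monthly muscle gain in kg; not real data.
    supplement = [2.1, 2.5, 2.8, 3.0, 3.2]
    no_supplement = [1.0, 1.2, 1.5, 1.7, 1.9]
    one, two = permutation_p_values(supplement, no_supplement)
    print(f"one-tailed p = {one:.4f}, two-tailed p = {two:.4f}")
```

A small p-value means the observed difference would be rare if the null hypothesis were true. Because the two-tailed version counts extreme results in both directions, its p-value is never smaller than the one-tailed value.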

Sometimes, errors take place during an experiment. These errors can happen in one of two ways. A type I error occurs when the null hypothesis is rejected even though it is true. This is also known as a false positive. A type II error occurs when the null hypothesis is not rejected even though it is false. This is also known as a false negative, according to the University of California, Berkeley.
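
The two error types can be quantified in a toy setting. The sketch below assumes a hypothetical coin-flip experiment: the null hypothesis says the coin is fair, and the rule (chosen arbitrarily for illustration) is to reject the null if 20 flips produce 5 or fewer heads, or 15 or more. The 0.7 bias used for the type II calculation is likewise an assumption.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of exactly k heads in n flips with heads probability p."""
    return comb(n, k) * p ** k * (1 - p) ** (n - k)


def rejects_null(heads, lower=5, upper=15):
    """Illustrative decision rule: reject 'the coin is fair' on extreme counts."""
    return heads <= lower or heads >= upper


def error_rates(n=20, biased_p=0.7):
    """Return (type I rate, type II rate) for the rule above.

    Type I: the coin really is fair, but the rule rejects the null.
    Type II: the coin is biased (heads probability biased_p), but the rule
    fails to reject the null.
    """
    type_1 = sum(binom_pmf(k, n, 0.5) for k in range(n + 1) if rejects_null(k))
    type_2 = sum(binom_pmf(k, n, biased_p) for k in range(n + 1) if not rejects_null(k))
    return type_1, type_2


if __name__ == "__main__":
    false_positive, false_negative = error_rates()
    print(f"type I rate:  {false_positive:.4f}")
    print(f"type II rate: {false_negative:.4f}")
```

Widening the rejection region lowers the type II rate but raises the type I rate, which is why the two errors are usually weighed against each other when an experiment is designed.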

A hypothesis can be rejected or modified, but it can never be proved correct 100% of the time. For example, a scientist can form a hypothesis stating that if a certain type of tomato has a gene for red pigment, that type of tomato will be red. During research, the scientist then finds that each tomato of this type is red. Though the findings support the hypothesis, there may be a tomato of that type somewhere in the world that isn't red. Thus, the hypothesis is supported by the evidence, but it may not hold true 100% of the time.

Scientific theory vs. scientific hypothesis

The best hypotheses are simple. They deal with a relatively narrow set of phenomena. But theories are broader; they generally combine multiple hypotheses into a general explanation for a wide range of phenomena, according to the University of California, Berkeley. For example, a hypothesis might state, "If animals adapt to suit their environments, then birds that live on islands with lots of seeds to eat will have differently shaped beaks than birds that live on islands with lots of insects to eat." After testing many hypotheses like these, Charles Darwin formulated an overarching theory: the theory of evolution by natural selection.

"Theories are the ways that we make sense of what we observe in the natural world," Tanner said. "Theories are structures of ideas that explain and interpret facts."

- Read more about writing a hypothesis, from the American Medical Writers Association.

- Find out why a hypothesis isn't always necessary in science, from The American Biology Teacher.

- Learn about null and alternative hypotheses, from Prof. Essa on YouTube.

Encyclopedia Britannica. Scientific Hypothesis. Jan. 13, 2022. https://www.britannica.com/science/scientific-hypothesis

Karl Popper, "The Logic of Scientific Discovery," Routledge, 1959.

California State University, Bakersfield, "Formatting a testable hypothesis." https://www.csub.edu/~ddodenhoff/Bio100/Bio100sp04/formattingahypothesis.htm

Karl Popper, "Conjectures and Refutations," Routledge, 1963.

Price, P., Jhangiani, R., & Chiang, I., "Research Methods of Psychology — 2nd Canadian Edition," BCcampus, 2015.

University of Miami, "The Scientific Method" http://www.bio.miami.edu/dana/161/evolution/161app1_scimethod.pdf

William M.K. Trochim, "Research Methods Knowledge Base," https://conjointly.com/kb/hypotheses-explained/

University of California, Berkeley, "Multiple Hypothesis Testing and False Discovery Rate" https://www.stat.berkeley.edu/~hhuang/STAT141/Lecture-FDR.pdf

University of California, Berkeley, "Science at multiple levels" https://undsci.berkeley.edu/article/0_0_0/howscienceworks_19


Revolutions in Science & the Enlightenment

The Scientific Revolution

Roots of the Scientific Revolution

The scientific revolution, which emphasized systematic experimentation as the most valid research method, resulted in developments in mathematics, physics, astronomy, biology, and chemistry. These developments transformed the views of society about nature.

Learning Objectives

Outline the changes that occurred during the Scientific Revolution that resulted in developments towards a new means for experimentation

Key Takeaways

- The scientific revolution was the emergence of modern science during the early modern period, when developments in mathematics, physics, astronomy, biology (including human anatomy), and chemistry transformed societal views about nature.

- The change to the medieval idea of science occurred for four reasons: collaboration, the derivation of new experimental methods, the ability to build on the legacy of existing scientific philosophy, and institutions that enabled academic publishing.

- Under the scientific method, which was defined and applied in the 17th century, natural and artificial circumstances were abandoned and a research tradition of systematic experimentation was slowly accepted throughout the scientific community.

- During the scientific revolution, changing perceptions about the role of the scientist in respect to nature, and the value of experimental or observed evidence, led to a scientific methodology in which empiricism played a large, but not absolute, role.

- As the scientific revolution was not marked by any single change, many new ideas contributed. Some of them were revolutions in their own fields.

- Science came to play a leading role in Enlightenment discourse and thought. Many Enlightenment writers and thinkers had backgrounds in the sciences, and associated scientific advancement with the overthrow of religion and traditional authority in favor of the development of free speech and thought.

- empiricism : A theory stating that knowledge comes only, or primarily, from sensory experience. It emphasizes evidence, especially the kind of evidence gathered through experimentation and by use of the scientific method.

- Galileo : An Italian thinker (1564-1642) and key figure in the scientific revolution who improved the telescope, made astronomical observations, and put forward the basic principle of relativity in physics.

- Baconian method : The investigative method developed by Sir Francis Bacon. It was put forward in Bacon’s book Novum Organum (1620), or New Method, and was supposed to replace the methods put forward in Aristotle’s Organon. This method was influential in the development of the scientific method in modern science, and more generally in the early modern rejection of medieval Aristotelianism.

- scientific method : A body of techniques for investigating phenomena, acquiring new knowledge, or correcting and integrating previous knowledge, through the application of empirical or measurable evidence subject to specific principles of reasoning. It has characterized natural science since the 17th century, consisting in systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses.

- British Royal Society : A British learned society for science; possibly the oldest such society still in existence, having been founded in November 1660.

The scientific revolution was the emergence of modern science during the early modern period, when developments in mathematics, physics, astronomy, biology (including human anatomy), and chemistry transformed societal views about nature. The scientific revolution began in Europe toward the end of the Renaissance period, and continued through the late 18th century, influencing the intellectual social movement known as the Enlightenment. While its dates are disputed, the publication in 1543 of Nicolaus Copernicus’s De revolutionibus orbium coelestium (On the Revolutions of the Heavenly Spheres) is often cited as marking the beginning of the scientific revolution.

The scientific revolution was built upon the foundation of ancient Greek learning and science in the Middle Ages, as it had been elaborated and further developed by Roman/Byzantine science and medieval Islamic science. The Aristotelian tradition was still an important intellectual framework in the 17th century, although by that time natural philosophers had moved away from much of it. Key scientific ideas dating back to classical antiquity had changed drastically over the years, and in many cases been discredited. The ideas that remained (for example, Aristotle’s cosmology, which placed the Earth at the center of a spherical hierarchic cosmos, or the Ptolemaic model of planetary motion) were transformed fundamentally during the scientific revolution.

The change to the medieval idea of science occurred for four reasons:

- Seventeenth century scientists and philosophers were able to collaborate with members of the mathematical and astronomical communities to effect advances in all fields.

- Scientists realized the inadequacy of medieval experimental methods for their work and so felt the need to devise new methods (some of which we use today).

- Academics had access to a legacy of European, Greek, and Middle Eastern scientific philosophy that they could use as a starting point (either by disproving or building on the theorems).

- Institutions (for example, the British Royal Society) helped validate science as a field by providing an outlet for the publication of scientists’ work.

New Methods

Under the scientific method that was defined and applied in the 17th century, natural and artificial circumstances were abandoned, and a research tradition of systematic experimentation was slowly accepted throughout the scientific community. The philosophy of using an inductive approach to nature (to abandon assumption and to attempt to simply observe with an open mind) was in strict contrast with the earlier, Aristotelian approach of deduction, by which analysis of known facts produced further understanding. In practice, many scientists and philosophers believed that a healthy mix of both was needed—the willingness to both question assumptions, and to interpret observations assumed to have some degree of validity.

During the scientific revolution, changing perceptions about the role of the scientist in respect to nature, and about the value of experimental or observed evidence, led towards a scientific methodology in which empiricism played a large, but not absolute, role. The term British empiricism came into use to describe philosophical differences perceived between two founding figures of early modern philosophy: Francis Bacon, described as an empiricist, and René Descartes, described as a rationalist. Bacon’s works established and popularized inductive methodologies for scientific inquiry, often called the Baconian method, or sometimes simply the scientific method. His demand for a planned procedure of investigating all things natural marked a new turn in the rhetorical and theoretical framework for science, much of which still surrounds conceptions of proper methodology today. Correspondingly, Descartes distinguished between the knowledge that could be attained by reason alone (rationalist approach), as, for example, in mathematics, and the knowledge that required experience of the world, as in physics.

Thomas Hobbes, George Berkeley, and David Hume were the primary exponents of empiricism, and developed a sophisticated empirical tradition as the basis of human knowledge. The recognized founder of the approach was John Locke, who proposed in An Essay Concerning Human Understanding (1689) that the only true knowledge that could be accessible to the human mind was that which was based on experience.

Many new ideas contributed to what is called the scientific revolution. Some of them were revolutions in their own fields. These include:

- The heliocentric model that involved the radical displacement of the earth to an orbit around the sun (as opposed to being seen as the center of the universe). Copernicus’ 1543 work on the heliocentric model of the solar system tried to demonstrate that the sun was the center of the universe. The discoveries of Johannes Kepler and Galileo gave the theory credibility and the work culminated in Isaac Newton’s Principia, which formulated the laws of motion and universal gravitation that dominated scientists’ view of the physical universe for the next three centuries.

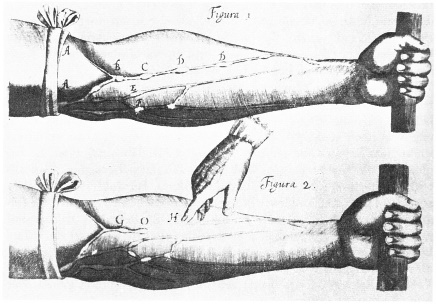

- Studying human anatomy based upon the dissection of human corpses, rather than on animal dissections, as had been practiced for centuries.

- Discovering and studying magnetism and electricity, and thus, electric properties of various materials.

- Modernization of disciplines (bringing them closer to their present form), including dentistry, physiology, chemistry, and optics.

- Invention of tools that deepened the understanding of the sciences, including the mechanical calculator, the steam digester (the forerunner of the steam engine), refracting and reflecting telescopes, the vacuum pump, and the mercury barometer.

The Shannon Portrait of the Hon. Robert Boyle F. R. S. (1627-1691): Robert Boyle (1627-1691), an Irish-born English scientist, was an early supporter of the scientific method and founder of modern chemistry. Boyle is known for his pioneering experiments on the physical properties of gases, his authorship of the Sceptical Chymist, his role in creating the Royal Society of London, and his philanthropy in the American colonies.

The Scientific Revolution and the Enlightenment

The scientific revolution laid the foundations for the Age of Enlightenment, which centered on reason as the primary source of authority and legitimacy, and emphasized the importance of the scientific method. By the 18th century, when the Enlightenment flourished, scientific authority began to displace religious authority, and disciplines until then seen as legitimately scientific (e.g., alchemy and astrology) lost scientific credibility.

Science came to play a leading role in Enlightenment discourse and thought. Many Enlightenment writers and thinkers had backgrounds in the sciences, and associated scientific advancement with the overthrow of religion and traditional authority in favor of the development of free speech and thought. Broadly speaking, Enlightenment science greatly valued empiricism and rational thought, and was embedded with the Enlightenment ideal of advancement and progress. At the time, science was dominated by scientific societies and academies, which had largely replaced universities as centers of scientific research and development. Societies and academies were also the backbone of the maturation of the scientific profession. Another important development was the popularization of science among an increasingly literate population. The century saw significant advancements in the practice of medicine, mathematics, and physics; the development of biological taxonomy; a new understanding of magnetism and electricity; and the maturation of chemistry as a discipline, which established the foundations of modern chemistry.

Isaac Newton’s Principia developed the first set of unified scientific laws

Newton’s Principia formulated the laws of motion and universal gravitation, which dominated scientists’ view of the physical universe for the next three centuries. By deriving Kepler’s laws of planetary motion from his mathematical description of gravity, and then using the same principles to account for the trajectories of comets, the tides, the precession of the equinoxes, and other phenomena, Newton removed the last doubts about the validity of the heliocentric model of the cosmos. This work also demonstrated that the motion of objects on Earth and of celestial bodies could be described by the same principles. His laws of motion were to be the solid foundation of mechanics.

Physics and Mathematics

In the 16th and 17th centuries, European scientists increasingly applied quantitative measurement to physical phenomena on the earth, which translated into the rapid development of mathematics and physics.

Distinguish between the different key figures of the scientific revolution and their achievements in mathematics and physics

- The philosophy of using an inductive approach to nature was in strict contrast with the earlier, Aristotelian approach of deduction, by which analysis of known facts produced further understanding. In practice, scientists believed that a healthy mix of both was needed—the willingness to question assumptions, yet also to interpret observations assumed to have some degree of validity. That principle was particularly true for mathematics and physics.

- In the 16th and 17th centuries, European scientists increasingly applied quantitative measurement to physical phenomena on the earth.

- The Copernican Revolution, or the paradigm shift from the Ptolemaic model of the heavens to the heliocentric model with the sun at the center of the solar system, began with the publication of Copernicus’s De revolutionibus orbium coelestium, and ended with Newton’s work over a century later.

- Galileo showed a remarkably modern appreciation for the proper relationship between mathematics, theoretical physics, and experimental physics. His contributions to observational astronomy include the telescopic confirmation of the phases of Venus, the discovery of the four largest satellites of Jupiter, and the observation and analysis of sunspots.

- Newton’s Principia formulated the laws of motion and universal gravitation, which dominated scientists’ view of the physical universe for the next three centuries. He removed the last doubts about the validity of the heliocentric model of the solar system.

- Electrical science developed rapidly following the first discoveries of William Gilbert.

- scientific method : A body of techniques for investigating phenomena, acquiring new knowledge, or correcting and integrating previous knowledge that apply empirical or measurable evidence subject to specific principles of reasoning. It has characterized natural science since the 17th century, consisting in systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses.

- Copernican Revolution : The paradigm shift from the Ptolemaic model of the heavens, which described the cosmos as having Earth stationary at the center of the universe, to the heliocentric model with the sun at the center of the solar system. Beginning with the publication of Nicolaus Copernicus’s De revolutionibus orbium coelestium, contributions to the “revolution” continued, until finally ending with Isaac Newton’s work over a century later.

- scientific revolution : The emergence of modern science during the early modern period, when developments in mathematics, physics, astronomy, biology (including human anatomy), and chemistry transformed societal views about nature. It began in Europe towards the end of the Renaissance period, and continued through the late 18th century, influencing the intellectual social movement known as the Enlightenment.

Introduction

Under the scientific method that was defined and applied in the 17th century, natural and artificial circumstances were abandoned, and a research tradition of systematic experimentation was slowly accepted throughout the scientific community. The philosophy of using an inductive approach to nature—to abandon assumption and to attempt to simply observe with an open mind—was in strict contrast with the earlier, Aristotelian approach of deduction, by which analysis of known facts produced further understanding. In practice, many scientists (and philosophers) believed that a healthy mix of both was needed—the willingness to question assumptions, yet also to interpret observations assumed to have some degree of validity. That principle was particularly true for mathematics and physics. René Descartes, whose thought emphasized the power of reasoning but also helped establish the scientific method, distinguished between the knowledge that could be attained by reason alone (rationalist approach), which he thought was mathematics, and the knowledge that required experience of the world, which he thought was physics.

Mathematization

To the extent that medieval natural philosophers used mathematics, they largely limited themselves to theoretical analyses of local speed and similar problems. The actual measurement of a physical quantity, and the comparison of that measurement to a value computed on the basis of theory, was largely limited to the mathematical disciplines of astronomy and optics in Europe. In the 16th and 17th centuries, European scientists increasingly applied quantitative measurement to physical phenomena on Earth.

The Copernican Revolution

While the dates of the scientific revolution are disputed, the publication in 1543 of Nicolaus Copernicus’s De revolutionibus orbium coelestium (On the Revolutions of the Heavenly Spheres) is often cited as marking the beginning of the scientific revolution. The book proposed a heliocentric system contrary to the widely accepted geocentric system of that time. Tycho Brahe accepted the geometry of Copernicus’s model but reasserted geocentricity, keeping Earth fixed at the center. However, Tycho challenged the Aristotelian model when he observed a comet that went through the region of the planets. This region was said to only have uniform circular motion on solid spheres, which meant that it would be impossible for a comet to enter into the area. Johannes Kepler followed Tycho and developed the three laws of planetary motion. Kepler would not have been able to produce his laws without the observations of Tycho, because they allowed Kepler to prove that planets traveled in ellipses, and that the sun does not sit directly in the center of an orbit, but at a focus. Galileo Galilei, a contemporary of Kepler, developed his own telescope with enough magnification to allow him to study Venus and discover that it has phases like a moon. The discovery of the phases of Venus was one of the more influential reasons for the transition from geocentrism to heliocentrism. Isaac Newton’s Philosophiæ Naturalis Principia Mathematica concluded the Copernican Revolution. His laws of motion and universal gravitation explained the motions of the heavens by asserting a gravitational force of attraction between any two objects.

Other Advancements in Physics and Mathematics

Galileo was one of the first modern thinkers to clearly state that the laws of nature are mathematical. In broader terms, his work marked another step towards the eventual separation of science from both philosophy and religion, a major development in human thought. Galileo showed a remarkably modern appreciation for the proper relationship between mathematics, theoretical physics, and experimental physics. He understood the parabola, both in terms of conic sections and in terms of the ordinate (y) varying as the square of the abscissa (x). He further asserted that the parabola was the theoretically ideal trajectory of a uniformly accelerated projectile in the absence of friction and other disturbances.
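Galileo's claim about the parabola can be checked numerically. The sketch below is an illustration in modern terms, not Galileo's own reasoning: it assumes a projectile launched horizontally, a constant downward acceleration of 9.81 m/s², no friction, and an arbitrary launch speed of 5 m/s. Under those assumptions the vertical drop y should vary as the square of the horizontal distance x, so y / x² stays constant.

```python
G = 9.81  # assumed constant acceleration due to gravity, in m/s^2


def drop_at_distance(x, speed):
    """Vertical drop (m) of a horizontally launched projectile after it
    has travelled a horizontal distance x (m), ignoring friction."""
    t = x / speed              # horizontal motion is uniform
    return 0.5 * G * t**2      # vertical motion is uniformly accelerated


LAUNCH_SPEED = 5.0  # hypothetical launch speed in m/s

for x in (1.0, 2.0, 3.0, 4.0):
    y = drop_at_distance(x, LAUNCH_SPEED)
    # The ratio y / x^2 is the same at every distance: the path is a parabola.
    print(f"x = {x:.1f} m  drop = {y:.4f} m  drop / x^2 = {y / x**2:.4f}")
```

Doubling the horizontal distance quadruples the drop, which is the "ordinate varying as the square of the abscissa" relationship described above.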

Newton’s Principia formulated the laws of motion and universal gravitation, which dominated scientists’ view of the physical universe for the next three centuries. By deriving Kepler’s laws of planetary motion from his mathematical description of gravity, and then using the same principles to account for the trajectories of comets, the tides, the precession of the equinoxes, and other phenomena, Newton removed the last doubts about the validity of the heliocentric model of the cosmos. This work also demonstrated that the motion of objects on Earth, and of celestial bodies, could be described by the same principles. His prediction that Earth should be shaped as an oblate spheroid was later vindicated by other scientists. His laws of motion were to be the solid foundation of mechanics; his law of universal gravitation combined terrestrial and celestial mechanics into one great system that seemed to be able to describe the whole world in mathematical formulae. After exchanges with Robert Hooke, the English natural philosopher, architect, and polymath, Newton worked out a proof that the elliptical form of planetary orbits would result from a centripetal force inversely proportional to the square of the radius vector.

The scientific revolution also witnessed the development of modern optics. Kepler published Astronomiae Pars Optica (The Optical Part of Astronomy) in 1604. In it, he described the inverse-square law governing the intensity of light, reflection by flat and curved mirrors, and principles of pinhole cameras, as well as the astronomical implications of optics, such as parallax and the apparent sizes of heavenly bodies. Willebrord Snellius found the mathematical law of refraction, now known as Snell’s law, in 1621. Subsequently, Descartes showed, by using geometric construction and the law of refraction (also known as Descartes’ law), that the angular radius of a rainbow is 42°. He also independently discovered the law of reflection. Finally, Newton investigated the refraction of light, demonstrating that a prism could decompose white light into a spectrum of colors, and that a lens and a second prism could recompose the multicolored spectrum into white light. He also showed that the colored light does not change its properties by separating out a colored beam and shining it on various objects.
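The 42° figure for the rainbow can be reproduced from the law of refraction. The sketch below uses the standard modern geometric-optics treatment rather than Descartes' original geometric construction, and it assumes a refractive index of 1.333 for water. A ray enters a spherical drop, reflects once inside, and leaves; its total deviation is 180° + 2i − 4r, where i is the angle of incidence and r the angle of refraction. The rainbow appears where that deviation is smallest, which happens when cos² i = (n² − 1) / 3.

```python
import math


def rainbow_angle_deg(n):
    """Angular radius (degrees) of the primary rainbow for a spherical
    drop with refractive index n, using one internal reflection."""
    # Angle of incidence at which the deviation of the ray is minimal.
    i = math.acos(math.sqrt((n * n - 1.0) / 3.0))
    # Snell's law: sin(i) = n * sin(r).
    r = math.asin(math.sin(i) / n)
    # Total deviation is pi + 2i - 4r; the rainbow's angular radius is
    # pi minus that deviation, which simplifies to 4r - 2i.
    return math.degrees(4.0 * r - 2.0 * i)


N_WATER = 1.333  # assumed refractive index of water for visible light

print(f"primary rainbow radius: {rainbow_angle_deg(N_WATER):.1f} degrees")
```

Because the refractive index of water differs slightly between colors, each color has a slightly different angle, which is consistent with Newton's later finding that white light separates into a spectrum.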

Portrait of Galileo Galilei by Giusto Sustermans, 1636

Galileo Galilei (1564-1642) improved the telescope, with which he made several important astronomical discoveries, including the four largest moons of Jupiter, the phases of Venus, and the rings of Saturn, and made detailed observations of sunspots. He developed the laws for falling bodies based on pioneering quantitative experiments, which he analyzed mathematically.

Dr. William Gilbert, in De Magnete, invented the New Latin word electricus from ἤλεκτρον (elektron), the Greek word for “amber.” Gilbert undertook a number of careful electrical experiments, in the course of which he discovered that many substances were capable of manifesting electrical properties. He also discovered that a heated body lost its electricity, and that moisture prevented the electrification of all bodies, due to the now well-known fact that moisture impaired the insulation of such bodies. He also noticed that electrified substances attracted all other substances indiscriminately, whereas a magnet only attracted iron. The many discoveries of this nature earned for Gilbert the title of “founder of the electrical science.”

Robert Boyle also worked frequently at the new science of electricity, and added several substances, including resin, to Gilbert’s list of electrics. In 1675, he stated that electric attraction and repulsion can act across a vacuum. One of his important discoveries was that electrified bodies in a vacuum would attract light substances, indicating that the electrical effect did not depend upon the air as a medium. By the end of the 17th century, researchers had developed practical means of generating electricity by friction with an electrostatic generator, but the development of electrostatic machines did not begin in earnest until the 18th century, when they became fundamental instruments in the study of the new science of electricity. The first usage of the word electricity is ascribed to Thomas Browne in a 1646 work. In 1729, Stephen Gray demonstrated that electricity could be “transmitted” through metal filaments.

Treasures of the RAS: Starry Messenger by Galileo Galilei: In 1610, Galileo published this book describing his observations of the sky with a new invention, the telescope. In it he describes his discovery of the moons of Jupiter, of stars too faint to be seen by the naked eye, and of mountains on the moon. The book was the first scientific publication to be based on data from a telescope. It was an important step towards our modern understanding of the solar system. The Latin title is Sidereus Nuncius, which translates as Starry Messenger, or Sidereal Message.

Though astronomy is the oldest of the natural sciences, its development during the scientific revolution entirely transformed societal views about nature by moving from geocentrism to heliocentrism.

Assess the work of both Copernicus and Kepler and their revolutionary ideas

- The development of astronomy during the period of the scientific revolution entirely transformed societal views about nature. The publication of Nicolaus Copernicus’ De revolutionibus in 1543 is often seen as marking the beginning of the time when scientific disciplines gradually transformed into the modern sciences as we know them today.

- Copernican heliocentrism is the name given to the astronomical model developed by Copernicus that positioned the sun near the center of the universe, motionless, with Earth and the other planets rotating around it in circular paths, modified by epicycles and at uniform speeds.

- For over a century, few astronomers were convinced by the Copernican system. Tycho Brahe went so far as to construct a cosmology precisely equivalent to that of Copernicus, but with the earth held fixed in the center of the celestial sphere, instead of the sun. However, Tycho’s idea also contributed to the defense of the heliocentric model.

- In 1596, Johannes Kepler published his first book, the first work by an astronomer to openly endorse Copernican cosmology since the 1540s. Kepler’s work on Mars and planetary motion further confirmed the heliocentric theory.

- Galileo Galilei designed his own telescope, with which he made a number of critical astronomical observations. His observations and discoveries were among the most influential in the transition from geocentrism to heliocentrism.

- Isaac Newton developed further ties between physics and astronomy through his law of universal gravitation, and irreversibly confirmed and further developed heliocentrism.

- Copernicus : A Renaissance mathematician and astronomer (1473-1543), who formulated a heliocentric model of the universe which placed the sun, rather than the earth, at the center.

- epicycles : The geometric model used to explain the variations in speed and direction of the apparent motion of the moon, sun, and planets in the Ptolemaic system of astronomy.

- Copernican heliocentrism : The name given to the astronomical model developed by Nicolaus Copernicus and published in 1543. It positioned the sun near the center of the universe, motionless, with Earth and the other planets rotating around it in circular paths, modified by epicycles and at uniform speeds. It departed from the Ptolemaic system that prevailed in western culture for centuries, placing Earth at the center of the universe.

The Emergence of Modern Astronomy

While astronomy is the oldest of the natural sciences, dating back to antiquity, its development during the period of the scientific revolution entirely transformed the views of society about nature. The publication in 1543 of the seminal work in the field, Nicolaus Copernicus’ De revolutionibus orbium coelestium (On the Revolutions of the Heavenly Spheres), is often seen as marking the beginning of the time when scientific disciplines, including astronomy, began to apply modern empirical research methods and gradually transformed into the modern sciences as we know them today.

The Copernican Heliocentrism

Copernican heliocentrism is the name given to the astronomical model developed by Nicolaus Copernicus and published in 1543. It positioned the sun near the center of the universe, motionless, with Earth and the other planets rotating around it in circular paths, modified by epicycles and at uniform speeds. The Copernican model departed from the Ptolemaic system that prevailed in western culture for centuries, placing Earth at the center of the universe. Copernicus’ De revolutionibus marks the beginning of the shift away from a geocentric (and anthropocentric) universe with Earth at its center. Copernicus held that Earth is another planet revolving around the fixed sun once a year, and turning on its axis once a day. But while he put the sun at the center of the celestial spheres, he did not put it at the exact center of the universe, but near it. His system used only uniform circular motions, correcting what was seen by many as the chief inelegance in Ptolemy’s system.

From 1543 until about 1700, few astronomers were convinced by the Copernican system. Forty-five years after the publication of De Revolutionibus, the astronomer Tycho Brahe went so far as to construct a cosmology precisely equivalent to that of Copernicus, but with Earth held fixed in the center of the celestial sphere instead of the sun. However, Tycho challenged the Aristotelian model when he observed a comet that went through the region of the planets. This region was said to only have uniform circular motion on solid spheres, which meant that it would be impossible for a comet to enter into the area. Following Copernicus and Tycho, Johannes Kepler and Galileo Galilei, both working in the first decades of the 17th century, influentially defended, expanded and modified the heliocentric theory.

Johannes Kepler

Johannes Kepler was a German scientist who worked for a time as Tycho’s assistant. In 1596, he published his first book, the Mysterium cosmographicum, the first work by an astronomer since the 1540s to openly endorse Copernican cosmology. The book described his model, which used Pythagorean mathematics and the five Platonic solids to explain the number of planets, their proportions, and their order. In 1600, Kepler set to work on the orbit of Mars, the second most eccentric of the six planets known at that time. This work was the basis of his next book, the Astronomia nova (1609). The book argued for heliocentrism and for elliptical planetary orbits, instead of circles modified by epicycles. It contains the first two of his eponymous three laws of planetary motion; the third law was published in 1619. The laws state the following:

- All planets move in elliptical orbits, with the sun at one focus.

- A line that connects a planet to the sun sweeps out equal areas in equal times.

- The time required for a planet to orbit the sun, called its period, is proportional to the long axis of the ellipse raised to the 3/2 power. The constant of proportionality is the same for all the planets.
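The third law lends itself to a quick numerical check. The sketch below is only an illustration under stated assumptions: it measures the semi-major axis (half the long axis) in astronomical units and the period in Earth years, units in which the constant of proportionality equals 1. The axis values are rounded modern figures rather than Kepler's own data, and the function name `orbital_period_years` is hypothetical.

```python
# Rounded modern semi-major axes in astronomical units (AU).
# Assumed illustrative values, not figures taken from Kepler's tables.
SEMI_MAJOR_AXIS_AU = {
    "Mercury": 0.387,
    "Venus": 0.723,
    "Earth": 1.000,
    "Mars": 1.524,
    "Jupiter": 5.203,
    "Saturn": 9.537,
}


def orbital_period_years(semi_major_axis_au: float) -> float:
    """Kepler's third law in AU and years, where the constant is 1: T = a ** 1.5."""
    return semi_major_axis_au ** 1.5


if __name__ == "__main__":
    # Print the predicted period for each of the six planets known to Kepler.
    for planet, a in SEMI_MAJOR_AXIS_AU.items():
        period = orbital_period_years(a)
        print(f"{planet:8s} a = {a:6.3f} AU   T = {period:6.2f} yr")
```

With these inputs the predicted periods come out close to the observed ones, for example a little under two years for Mars and a little under twelve for Jupiter, which is the sense in which a single constant serves all the planets.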

Galileo Galilei

Galileo Galilei was an Italian scientist who is sometimes referred to as the “father of modern observational astronomy.” Working from the designs of Hans Lippershey, he built his own telescope, which he improved to 30x magnification. Using this new instrument, Galileo made a number of astronomical observations, which he published in the Sidereus Nuncius in 1610. In this book, he described the surface of the moon as rough, uneven, and imperfect. His observations challenged Aristotle’s claim that the moon was a perfect sphere, and the larger idea that the heavens were perfect and unchanging. While observing Jupiter over the course of several days, Galileo noticed four stars close to Jupiter whose positions were changing in a way that would be impossible if they were fixed stars. After much observation, he concluded that these four stars were orbiting Jupiter and were in fact moons, not stars. This was a radical discovery because, according to Aristotelian cosmology, all heavenly bodies revolve around Earth, and a planet with moons contradicted that popular belief. While contradicting Aristotelian belief, it supported Copernican cosmology, which stated that Earth is a planet like all others.

In 1610, Galileo also observed that Venus shows a full set of phases, similar to the phases of the moon as seen from Earth. This was explainable by the Copernican system, in which all phases of Venus would be visible because of the nature of its orbit around the sun, unlike the Ptolemaic system, in which only some of Venus’s phases would be visible. Due to Galileo’s observations of Venus, Ptolemy’s system became highly suspect and the majority of leading astronomers subsequently converted to various heliocentric models, making his discovery one of the most influential in the transition from geocentrism to heliocentrism.

Heliocentric model of the solar system, Nicolaus Copernicus, De revolutionibus, p. 9, from an original edition, currently at the Jagiellonian University in Cracow, Poland

Copernicus was a polyglot and polymath who obtained a doctorate in canon law and also practiced as a physician, classics scholar, translator, governor, diplomat, and economist. In 1517 he derived a quantity theory of money, a key concept in economics, and in 1519 he formulated a version of what later became known as Gresham’s law (also in economics).

Uniting Astronomy and Physics: Isaac Newton

Although the motions of celestial bodies had been qualitatively explained in physical terms since Aristotle introduced celestial movers in his Metaphysics and a fifth element in his On the Heavens, Johannes Kepler was the first to attempt to derive mathematical predictions of celestial motions from assumed physical causes. This led to the discovery of the three laws of planetary motion that carry his name.

Isaac Newton developed further ties between physics and astronomy through his law of universal gravitation. Realizing that the same force that attracted objects to the surface of Earth held the moon in orbit around the Earth, Newton was able to explain, in one theoretical framework, all known gravitational phenomena. Newton’s Principia (1687) formulated the laws of motion and universal gravitation, which dominated scientists’ view of the physical universe for the next three centuries. By deriving Kepler’s laws of planetary motion from his mathematical description of gravity, and then using the same principles to account for the trajectories of comets, the tides, the precession of the equinoxes, and other phenomena, Newton removed the last doubts about the validity of the heliocentric model of the cosmos. This work also demonstrated that the motion of objects on Earth and of celestial bodies could be described by the same principles. His laws of motion were to be the solid foundation of mechanics; his law of universal gravitation combined terrestrial and celestial mechanics into one great system that seemed to be able to describe the whole world in mathematical formulae.
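The claim that one force both pulls objects toward Earth's surface and holds the moon in orbit can be checked with a rough calculation. The sketch below is an illustration, not a reconstruction of Newton's own computation: it assumes a circular lunar orbit around a fixed Earth, uses rounded modern values for all constants, and the function names are hypothetical. It compares surface gravity weakened by the inverse square of distance with the centripetal acceleration the moon's orbit requires.

```python
import math

# Assumed rounded modern values, used here only for illustration.
G_SURFACE = 9.81                # m/s^2, gravitational acceleration at Earth's surface
EARTH_RADIUS_M = 6.371e6        # m, mean radius of Earth
MOON_ORBIT_RADIUS_M = 3.844e8   # m, mean Earth-moon distance
MOON_PERIOD_S = 27.32 * 86400   # s, sidereal month


def inverse_square_acceleration() -> float:
    """Surface gravity scaled by the inverse square of the distance ratio."""
    return G_SURFACE * (EARTH_RADIUS_M / MOON_ORBIT_RADIUS_M) ** 2


def centripetal_acceleration() -> float:
    """Acceleration needed for a circular orbit: 4 * pi^2 * r / T^2."""
    return 4 * math.pi ** 2 * MOON_ORBIT_RADIUS_M / MOON_PERIOD_S ** 2


if __name__ == "__main__":
    predicted = inverse_square_acceleration()
    required = centripetal_acceleration()
    print(f"inverse-square prediction: {predicted:.6f} m/s^2")
    print(f"required by lunar orbit:   {required:.6f} m/s^2")
    print(f"ratio: {required / predicted:.3f}")
```

Under these simplifications the two accelerations agree to within a few percent; the remaining gap reflects the rounded inputs and the idealized circular orbit rather than a failure of the inverse-square law.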

Jan Matejko, Astronomer Copernicus, or Conversations with God, 1873: Oil painting by the Polish artist Jan Matejko depicting Nicolaus Copernicus observing the heavens from a balcony by a tower near the cathedral in Frombork. Currently, the painting is in the collection of the Jagiellonian University of Cracow, which purchased it from a private owner with money donated by the Polish public.

Johannes Kepler Biography (1571-1630): Johannes Kepler was a German astronomer and mathematician who played an important role in the 17th-century scientific revolution.



Scientific Revolutions