How To Write A Research Paper

Step-By-Step Tutorial With Examples + FREE Template

By: Derek Jansen (MBA) | Expert Reviewer: Dr Eunice Rautenbach | March 2024

For many students, crafting a strong research paper from scratch can feel like a daunting task – and rightly so! In this post, we’ll unpack what a research paper is, what it needs to do, and how to write one – in three easy steps. 🙂

Overview: Writing A Research Paper

- What (exactly) is a research paper?

- How to write a research paper

- Stage 1: Topic & literature search

- Stage 2: Structure & outline

- Stage 3: Iterative writing

- Key takeaways

Let’s start by asking the most important question, “What is a research paper?”.

Simply put, a research paper is a scholarly written work where the writer (that’s you!) answers a specific question (this is called a research question) through evidence-based arguments. Evidence-based is the keyword here. In other words, a research paper is different from an essay or other writing assignments that draw from the writer’s personal opinions or experiences. With a research paper, it’s all about building your arguments based on evidence (we’ll talk more about that evidence a little later).

Now, it’s worth noting that there are many different types of research papers, including analytical papers (the type I just described), argumentative papers, and interpretative papers. Here, we’ll focus on analytical papers, as these are some of the most common – but if you’re keen to learn about other types of research papers, be sure to check out the rest of the blog.

With that basic foundation laid, let’s get down to business and look at how to write a research paper.

Overview: The 3-Stage Process

While there are, of course, many potential approaches you can take to write a research paper, there are typically three stages to the writing process. So, in this tutorial, we’ll present a straightforward three-step process that we use when working with students at Grad Coach.

These three steps are:

- Finding a research topic and reviewing the existing literature

- Developing a provisional structure and outline for your paper, and

- Writing up your initial draft and then refining it iteratively

Let’s dig into each of these.


Step 1: Find a topic and review the literature

As we mentioned earlier, in a research paper, you, as the researcher, will try to answer a question. More specifically, that’s called a research question, and it sets the direction of your entire paper. What’s important to understand though is that you’ll need to answer that research question with the help of high-quality sources – for example, journal articles, government reports, case studies, and so on. We’ll circle back to this in a minute.

The first stage of the research process is deciding on what your research question will be and then reviewing the existing literature (in other words, past studies and papers) to see what they say about that specific research question. In some cases, your professor may provide you with a predetermined research question (or set of questions). However, in many cases, you’ll need to find your own research question within a certain topic area.

Finding a strong research question hinges on identifying a meaningful research gap – in other words, an area that’s lacking in existing research. There’s a lot to unpack here, so if you wanna learn more, check out the plain-language explainer video below.

Once you’ve figured out which question (or questions) you’ll attempt to answer in your research paper, you’ll need to do a deep dive into the existing literature – this is called a “literature search”. Again, there are many ways to go about this, but your most likely starting point will be Google Scholar.

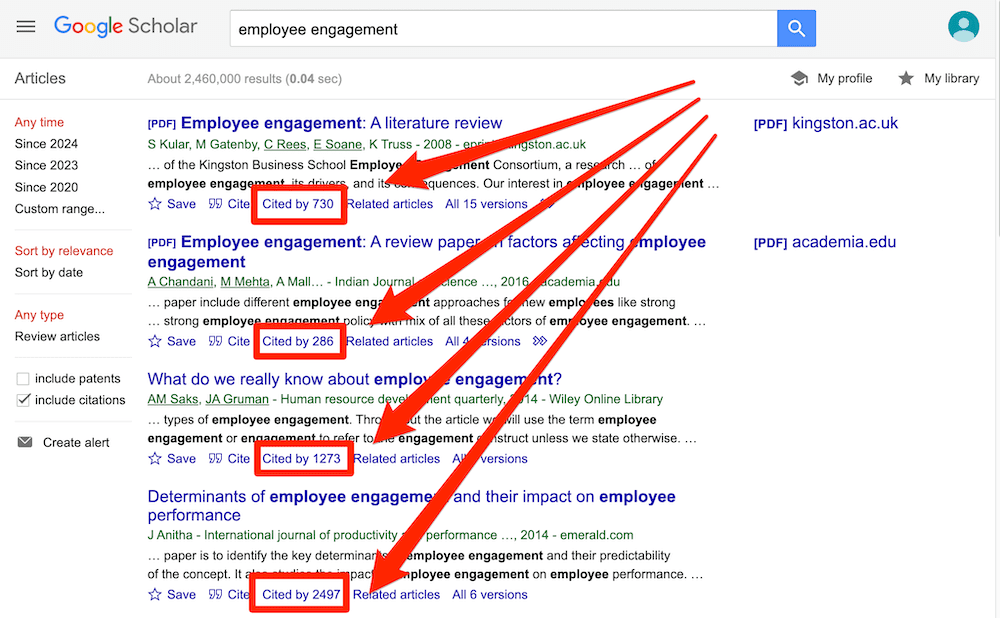

If you’re new to Google Scholar, think of it as Google for the academic world. You can start by simply entering a few different keywords that are relevant to your research question and it will then present a host of articles for you to review. What you want to pay close attention to here is the number of citations for each paper – the more citations a paper has, the more credible it is (generally speaking – there are some exceptions, of course).

Ideally, what you’re looking for are well-cited papers that are highly relevant to your topic. That said, keep in mind that citations are a cumulative metric, so older papers will often have more citations than newer papers – just because they’ve been around for longer. So, don’t fixate on this metric in isolation – relevance and recency are also very important.
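One rough way to keep raw citation counts in perspective is to divide them by a paper's age. The sketch below is only an illustration: the papers, the numbers, and the `citations_per_year` helper are all hypothetical, and this is a simple heuristic rather than anything Google Scholar itself reports.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    title: str      # hypothetical titles, for illustration only
    year: int       # publication year
    citations: int  # total citations to date


def citations_per_year(paper: Paper, current_year: int) -> float:
    """Average citations per year since publication (age is at least 1)."""
    age = max(current_year - paper.year, 1)
    return paper.citations / age


# Hypothetical search results: the older paper has far more total citations.
papers = [
    Paper("Older foundational study", 2004, 400),
    Paper("Recent targeted study", 2021, 90),
]

# Rank by citations per year instead of the raw (cumulative) count.
ranked = sorted(papers, key=lambda p: citations_per_year(p, 2024), reverse=True)
for p in ranked:
    print(f"{p.title}: {citations_per_year(p, 2024):.1f} citations/year")
```

In this made-up case the newer paper ranks first once age is accounted for, even though its total count is lower. Relevance to your research question still has to be judged by reading the paper.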

Beyond Google Scholar, you’ll also definitely want to check out academic databases and aggregators such as ScienceDirect, PubMed, JSTOR and so on. These will often overlap with the results that you find in Google Scholar, but they can also reveal some hidden gems – so, be sure to check them out.

Once you’ve worked your way through all the literature, you’ll want to catalogue all this information in some sort of spreadsheet so that you can easily recall who said what, when and within what context. If you’d like, we’ve got a free literature spreadsheet that helps you do exactly that.
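If you prefer to build such a catalogue yourself, a minimal sketch might look like the following. The column names, the placeholder entries, and the `catalogue_to_csv` helper are assumptions made for illustration (they are not the layout of the Grad Coach spreadsheet); the resulting CSV text can be saved to a file and opened in any spreadsheet tool.

```python
import csv
import io

# Assumed columns covering "who said what, when and within what context".
FIELDS = ["author", "year", "title", "key_claim", "context"]


def catalogue_to_csv(entries: list[dict]) -> str:
    """Serialise catalogue entries to CSV text, sorted by publication year."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(sorted(entries, key=lambda e: e["year"]))
    return buffer.getvalue()


# Placeholder entries only; replace these with the sources you actually read.
entries = [
    {"author": "Author B", "year": 2021, "title": "Placeholder title two",
     "key_claim": "Summary of the main finding", "context": "Sample and setting"},
    {"author": "Author A", "year": 2015, "title": "Placeholder title one",
     "key_claim": "Summary of the main finding", "context": "Sample and setting"},
]

csv_text = catalogue_to_csv(entries)
print(csv_text)
```

Sorting by year is just one design choice; sorting by theme or by relevance to your research question may serve you better once the catalogue grows.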

Step 2: Develop a structure and outline

With your research question pinned down and your literature digested and catalogued, it’s time to move on to planning your actual research paper.

It might sound obvious, but it’s really important to have some sort of rough outline in place before you start writing your paper. So often, we see students eagerly rushing into the writing phase, only to land up with a disjointed research paper that rambles on in multiple directions.

Now, the secret here is to not get caught up in the fine details. Realistically, all you need at this stage is a bullet-point list that describes (in broad strokes) what you’ll discuss and in what order. It’s also useful to remember that you’re not glued to this outline – in all likelihood, you’ll chop and change some sections once you start writing, and that’s perfectly okay. What’s important is that you have some sort of roadmap in place from the start.

At this stage you might be wondering, “But how should I structure my research paper?”. Well, there’s no one-size-fits-all solution here, but in general, a research paper will consist of a few relatively standardised components:

- Introduction

- Literature review

- Methodology

- Results (and discussion)

- Conclusion

Let’s take a look at each of these.

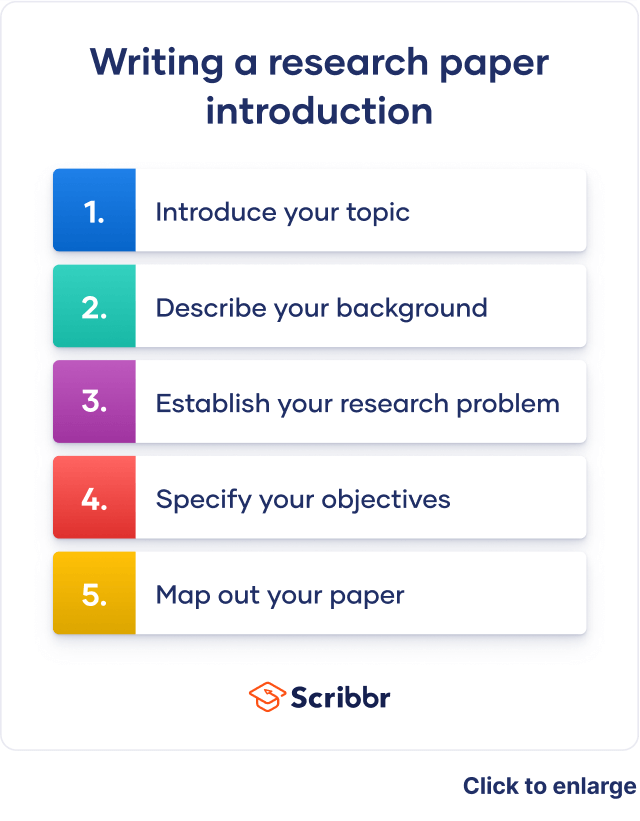

First up is the introduction section. As the name suggests, the purpose of the introduction is to set the scene for your research paper. There are usually (at least) four ingredients that go into this section – these are the background to the topic, the research problem and resultant research question, and the justification or rationale. If you’re interested, the video below unpacks the introduction section in more detail.

The next section of your research paper will typically be your literature review. Remember all that literature you worked through earlier? Well, this is where you’ll present your interpretation of all that content. You’ll do this by writing about recent trends, developments, and arguments within the literature – but more specifically, those that are relevant to your research question. The literature review can oftentimes seem a little daunting, even to seasoned researchers, so be sure to check out our extensive collection of literature review content here.

With the introduction and lit review out of the way, the next section of your paper is the research methodology. In a nutshell, the methodology section should describe to your reader what you did (beyond just reviewing the existing literature) to answer your research question. For example, what data did you collect, how did you collect that data, how did you analyse that data and so on? For each choice, you’ll also need to justify why you chose to do it that way, and what the strengths and weaknesses of your approach were.

Now, it’s worth mentioning that for some research papers, this aspect of the project may be a lot simpler. For example, you may only need to draw on secondary sources (in other words, existing data sets). In some cases, you may just be asked to draw your conclusions from the literature search itself (in other words, there may be no data analysis at all). But, if you are required to collect and analyse data, you’ll need to pay a lot of attention to the methodology section. The video below provides an example of what the methodology section might look like.

By this stage of your paper, you will have explained what your research question is, what the existing literature has to say about that question, and how you analysed additional data to try to answer your question. So, the natural next step is to present your analysis of that data. This section is usually called the “results” or “analysis” section and this is where you’ll showcase your findings.

Depending on your school’s requirements, you may need to present and interpret the data in one section – or you might split the presentation and the interpretation into two sections. In the latter case, your “results” section will just describe the data, and the “discussion” is where you’ll interpret that data and explicitly link your analysis back to your research question. If you’re not sure which approach to take, check in with your professor or take a look at past papers to see what the norms are for your programme.

Alright – once you’ve presented and discussed your results, it’s time to wrap it up. This usually takes the form of the “conclusion” section. In the conclusion, you’ll need to highlight the key takeaways from your study and close the loop by explicitly answering your research question. Again, the exact requirements here will vary depending on your programme (and you may not even need a conclusion section at all) – so be sure to check with your professor if you’re unsure.

Step 3: Write and refine

Finally, it’s time to get writing. All too often though, students hit a brick wall right about here… So, how do you avoid this happening to you?

Well, there’s a lot to be said when it comes to writing a research paper (or any sort of academic piece), but we’ll share three practical tips to help you get started.

First and foremost, it’s essential to approach your writing as an iterative process. In other words, you need to start with a really messy first draft and then polish it over multiple rounds of editing. Don’t waste your time trying to write a perfect research paper in one go. Instead, take the pressure off yourself by adopting an iterative approach.

Secondly, it’s important to always lean towards critical writing, rather than descriptive writing. What does this mean? Well, at the simplest level, descriptive writing focuses on the “what”, while critical writing digs into the “so what” – in other words, the implications. If you’re not familiar with these two types of writing, don’t worry! You can find a plain-language explanation here.

Last but not least, you’ll need to get your referencing right. Specifically, you’ll need to provide credible, correctly formatted citations for the statements you make. We see students making referencing mistakes all the time and it costs them dearly. The good news is that you can easily avoid this by using a simple reference manager. If you don’t have one, check out our video about Mendeley, an easy (and free) reference management tool that you can start using today.

Recap: Key Takeaways

We’ve covered a lot of ground here. To recap, the three steps to writing a high-quality research paper are:

- To choose a research question and review the literature

- To plan your paper structure and draft an outline

- To take an iterative approach to writing, focusing on critical writing and strong referencing

Remember, this is just a big-picture overview of the research paper development process and there’s a lot more nuance to unpack. So, be sure to grab a copy of our free research paper template to learn more about how to write a research paper.


Rubric Design

Articulating your assessment values

Reading, commenting on, and then assigning a grade to a piece of student writing requires intense attention and difficult judgment calls. Some faculty dread “the stack.” Students may share the faculty’s dim view of writing assessment, perceiving it as highly subjective. They wonder why one faculty member values evidence and correctness before all else, while another seeks a vaguely defined originality.

Writing rubrics can help address the concerns of both faculty and students by making writing assessment more efficient, consistent, and public. Whether it is called a grading rubric, a grading sheet, or a scoring guide, a writing assignment rubric lists criteria by which the writing is graded.

Why create a writing rubric?

- It makes your tacit rhetorical knowledge explicit

- It articulates community- and discipline-specific standards of excellence

- It links the grade you give the assignment to the criteria

- It can make your grading more efficient, consistent, and fair as you can read and comment with your criteria in mind

- It can help you reverse engineer your course: once you have the rubrics created, you can align your readings, activities, and lectures with the rubrics to set your students up for success

- It can help your students produce writing that you look forward to reading

How to create a writing rubric

Create a rubric at the same time you create the assignment. It will help you explain to the students what your goals are for the assignment.

1. Consider your purpose: do you need a rubric that addresses the standards for all the writing in the course? Or do you need to address the writing requirements and standards for just one assignment? Task-specific rubrics are written to help teachers assess individual assignments or genres, whereas generic rubrics are written to help teachers assess multiple assignments.

2. Begin by listing the important qualities of the writing that will be produced in response to a particular assignment. It may be helpful to have several examples of excellent versions of the assignment in front of you: what writing elements do they all have in common? Among other things, these may include features of the argument, such as a main claim or thesis; use and presentation of sources, including visuals; and formatting guidelines such as the requirement of a works cited.

3. Then consider how the criteria will be weighted in grading. Perhaps all criteria are equally important, or perhaps there are two or three that all students must achieve to earn a passing grade. Decide what best fits the class and requirements of the assignment.

Consider involving students in Steps 2 and 3. A class session devoted to developing a rubric can provoke many important discussions about the ways the features of the language serve the purpose of the writing. And when students themselves work to describe the writing they are expected to produce, they are more likely to achieve it.

At this point, you will need to decide if you want to create a holistic or an analytic rubric. There is much debate about these two approaches to assessment.

Comparing Holistic and Analytic Rubrics

Holistic Scoring

Holistic scoring aims to rate overall proficiency in a given student writing sample. It is often used in large-scale writing program assessment and impromptu classroom writing for diagnostic purposes.

General tenets to holistic scoring:

- Responding to drafts is part of evaluation

- Responses do not focus on grammar and mechanics during drafting and there is little correction

- Marginal comments are kept to 2-3 per page with summative comments at end

- End commentary attends to students’ overall performance across learning objectives as articulated in the assignment

- Response language aims to foster students’ self-assessment

Holistic rubrics emphasize what students do well and generally increase efficiency; they may also be more valid because scoring includes the authentic, personal reaction of the reader. But holistic scores won’t tell a student how they’ve progressed relative to previous assignments and may be rater-dependent, reducing reliability. (For a summary of advantages and disadvantages of holistic scoring, see Becker, 2011, p. 116.)

Here is an example of a partial holistic rubric:

Summary meets all the criteria. The writer understands the article thoroughly. The main points in the article appear in the summary with all main points proportionately developed. The summary should be as comprehensive as possible and should read smoothly, with appropriate transitions between ideas. Sentences should be clear, without vagueness or ambiguity and without grammatical or mechanical errors.

A complete holistic rubric for a research paper (authored by Jonah Willihnganz) can be downloaded here.

Analytic Scoring

Analytic scoring makes explicit the contribution to the final grade of each element of writing. For example, an instructor may choose to give 30 points for an essay whose ideas are sufficiently complex, that marshals good reasons in support of a thesis, and whose argument is logical; and 20 points for well-constructed sentences and careful copy editing.
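The arithmetic behind that example can be sketched in a few lines. This is a minimal illustration, not a prescribed grading tool: the two criteria and their maximum points (30 and 20) come from the example above, while the `Criterion` class, the `total_score` helper, and the earned points are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    max_points: int  # weight of this criterion in the final grade
    earned: int      # points awarded to one (hypothetical) essay


def total_score(criteria: list[Criterion]) -> tuple[int, int]:
    """Return (points earned, points possible) across all criteria."""
    for c in criteria:
        if not 0 <= c.earned <= c.max_points:
            raise ValueError(f"{c.name}: earned points out of range")
    earned = sum(c.earned for c in criteria)
    possible = sum(c.max_points for c in criteria)
    return earned, possible


# Maximum points follow the example above; the earned points are invented.
rubric = [
    Criterion("Complex ideas, good reasons, logical argument", 30, 24),
    Criterion("Well-constructed sentences and careful copy editing", 20, 17),
]

earned, possible = total_score(rubric)
print(f"Score: {earned}/{possible}")
```

Because each criterion keeps its own line, a student can see where the points were gained or lost, which is the diagnostic benefit analytic scoring is meant to offer.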

General tenets to analytic scoring:

- Reflect emphases in your teaching and communicate the learning goals for the course

- Emphasize student performance across criteria, which are established as central to the assignment in advance, usually on an assignment sheet

- Typically take a quantitative approach, providing a scaled set of points for each criterion

- Make the analytic framework available to students before they write

Advantages of an analytic rubric include ease of training raters and improved reliability. Meanwhile, writers often can more easily diagnose the strengths and weaknesses of their work. But analytic rubrics can be time-consuming to produce, and raters may judge the writing holistically anyway. Moreover, many readers believe that writing traits cannot be separated. (For a summary of the advantages and disadvantages of analytic scoring, see Becker, 2011, p. 115.)

For example, a partial analytic rubric for a single trait, “addresses a significant issue”:

- Excellent: Elegantly establishes the current problem, why it matters, to whom

- Above Average: Identifies the problem; explains why it matters and to whom

- Competent: Describes topic but relevance unclear or cursory

- Developing: Unclear issue and relevance

A complete analytic rubric for a research paper can be downloaded here. In WIM courses, this language should be revised to name specific disciplinary conventions.

Whichever type of rubric you write, your goal is to avoid pushing students into prescriptive formulas and limiting thinking (e.g., “each paragraph has five sentences”). By carefully describing the writing you want to read, you give students a clear target, and, as Ed White puts it, “describe the ongoing work of the class” (75).

Writing rubrics contribute meaningfully to the teaching of writing. Think of them as a coaching aide. In class and in conferences, you can use the language of the rubric to help you move past generic statements about what makes good writing good to statements about what constitutes success on the assignment and in the genre or discourse community. The rubric articulates what you are asking students to produce on the page; once that work is accomplished, you can turn your attention to explaining how students can achieve it.

Works Cited

Becker, Anthony. “Examining Rubrics Used to Measure Writing Performance in U.S. Intensive English Programs.” The CATESOL Journal 22.1 (2010/2011):113-30. Web.

White, Edward M. Teaching and Assessing Writing. ProQuest Info and Learning, 1985. Print.

Further Resources

CCCC Committee on Assessment. “Writing Assessment: A Position Statement.” November 2006 (Revised March 2009). Conference on College Composition and Communication. Web.

Gallagher, Chris W. “Assess Locally, Validate Globally: Heuristics for Validating Local Writing Assessments.” Writing Program Administration 34.1 (2010): 10-32. Web.

Huot, Brian. (Re)Articulating Writing Assessment for Teaching and Learning. Logan: Utah State UP, 2002. Print.

Kelly-Riley, Diane, and Peggy O’Neill, eds. Journal of Writing Assessment. Web.

McKee, Heidi A., and Dànielle Nicole DeVoss, eds. Digital Writing Assessment & Evaluation. Logan, UT: Computers and Composition Digital Press/Utah State University Press, 2013. Web.

O’Neill, Peggy, Cindy Moore, and Brian Huot. A Guide to College Writing Assessment. Logan: Utah State UP, 2009. Print.

Sommers, Nancy. Responding to Student Writers. Macmillan Higher Education, 2013.

Straub, Richard. “Responding, Really Responding to Other Students’ Writing.” The Subject is Writing: Essays by Teachers and Students. Ed. Wendy Bishop. Boynton/Cook, 1999. Web.

White, Edward M., and Cassie A. Wright. Assigning, Responding, Evaluating: A Writing Teacher’s Guide. 5th ed. Bedford/St. Martin’s, 2015. Print.


Writing a Research Paper Introduction | Step-by-Step Guide

Published on September 24, 2022 by Jack Caulfield. Revised on March 27, 2023.

The introduction to a research paper is where you set up your topic and approach for the reader. It has several key goals:

- Present your topic and get the reader interested

- Provide background or summarize existing research

- Position your own approach

- Detail your specific research problem and problem statement

- Give an overview of the paper’s structure

The introduction looks slightly different depending on whether your paper presents the results of original empirical research or constructs an argument by engaging with a variety of sources.


Table of contents

- Step 1: Introduce your topic

- Step 2: Describe the background

- Step 3: Establish your research problem

- Step 4: Specify your objective(s)

- Step 5: Map out your paper

- Research paper introduction examples

- Frequently asked questions about the research paper introduction

Step 1: Introduce your topic

The first job of the introduction is to tell the reader what your topic is and why it’s interesting or important. This is generally accomplished with a strong opening hook.

The hook is a striking opening sentence that clearly conveys the relevance of your topic. Think of an interesting fact or statistic, a strong statement, a question, or a brief anecdote that will get the reader wondering about your topic.

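For example, an argumentative paper about the environmental impact of cattle farming could open with a question as its hook: “Are cows responsible for climate change?”

A more empirical paper investigating the relationship of Instagram use with body image issues in adolescent girls might open with a striking statement instead: “The rise of social media has been accompanied by a sharp increase in the prevalence of body image issues among women and girls.”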

Don’t feel that your hook necessarily has to be deeply impressive or creative. Clarity and relevance are still more important than catchiness. The key thing is to guide the reader into your topic and situate your ideas.


Step 2: Describe the background

This part of the introduction differs depending on what approach your paper is taking.

In a more argumentative paper, you’ll explore some general background here. In a more empirical paper, this is the place to review previous research and establish how yours fits in.

Argumentative paper: Background information

After you’ve caught your reader’s attention, specify a bit more, providing context and narrowing down your topic.

Provide only the most relevant background information. The introduction isn’t the place to get too in-depth; if more background is essential to your paper, it can appear in the body.

Empirical paper: Describing previous research

For a paper describing original research, you’ll instead provide an overview of the most relevant research that has already been conducted. This is a sort of miniature literature review: a sketch of the current state of research into your topic, boiled down to a few sentences.

This should be informed by genuine engagement with the literature. Your search can be less extensive than in a full literature review, but a clear sense of the relevant research is crucial to inform your own work.

Begin by establishing the kinds of research that have been done, and end with limitations or gaps in the research that you intend to respond to.

Step 3: Establish your research problem

The next step is to clarify how your own research fits in and what problem it addresses.

Argumentative paper: Emphasize importance

In an argumentative research paper, you can simply state the problem you intend to discuss, and what is original or important about your argument.

Empirical paper: Relate to the literature

In an empirical research paper, try to lead into the problem on the basis of your discussion of the literature. Think in terms of these questions:

- What research gap is your work intended to fill?

- What limitations in previous work does it address?

- What contribution to knowledge does it make?

You can make the connection between your problem and the existing research using phrases like the following.

Step 4: Specify your objective(s)

Now you’ll get into the specifics of what you intend to find out or express in your research paper.

The way you frame your research objectives varies. An argumentative paper presents a thesis statement, while an empirical paper generally poses a research question (sometimes with a hypothesis as to the answer).

Argumentative paper: Thesis statement

The thesis statement expresses the position that the rest of the paper will present evidence and arguments for. It can be presented in one or two sentences, and should state your position clearly and directly, without providing specific arguments for it at this point.

Empirical paper: Research question and hypothesis

The research question is the question you want to answer in an empirical research paper.

Present your research question clearly and directly, with a minimum of discussion at this point. The rest of the paper will be taken up with discussing and investigating this question; here you just need to express it.

A research question can be framed either directly or indirectly.

- This study set out to answer the following question: What effects does daily use of Instagram have on the prevalence of body image issues among adolescent girls?

- We investigated the effects of daily Instagram use on the prevalence of body image issues among adolescent girls.

If your research involved testing hypotheses, these should be stated along with your research question. They are usually presented in the past tense, since the hypothesis will already have been tested by the time you are writing up your paper.

For example, the following hypothesis might respond to the research question above: “It was hypothesized that daily Instagram use would be associated with an increase in body image concerns and a decrease in self-esteem ratings.”

Step 5: Map out your paper

The final part of the introduction is often dedicated to a brief overview of the rest of the paper.

In a paper structured using the standard scientific “introduction, methods, results, discussion” format, this isn’t always necessary. But if your paper is structured in a less predictable way, it’s important to describe the shape of it for the reader.

If included, the overview should be concise, direct, and written in the present tense. Compare a future-tense version with the preferred present-tense version:

- Avoid: This paper will first discuss several examples of survey-based research into adolescent social media use, then will go on to …

- Prefer: This paper first discusses several examples of survey-based research into adolescent social media use, then goes on to …

Research paper introduction examples

Full examples of research paper introductions are shown below: one for an argumentative paper, the other for an empirical paper.

Argumentative paper

Are cows responsible for climate change? A recent study (RIVM, 2019) shows that cattle farmers account for two thirds of agricultural nitrogen emissions in the Netherlands. These emissions result from nitrogen in manure, which can degrade into ammonia and enter the atmosphere. The study’s calculations show that agriculture is the main source of nitrogen pollution, accounting for 46% of the country’s total emissions. By comparison, road traffic and households are responsible for 6.1% each, the industrial sector for 1%. While efforts are being made to mitigate these emissions, policymakers are reluctant to reckon with the scale of the problem. The approach presented here is a radical one, but commensurate with the issue. This paper argues that the Dutch government must stimulate and subsidize livestock farmers, especially cattle farmers, to transition to sustainable vegetable farming. It first establishes the inadequacy of current mitigation measures, then discusses the various advantages of the results proposed, and finally addresses potential objections to the plan on economic grounds.

Empirical paper

The rise of social media has been accompanied by a sharp increase in the prevalence of body image issues among women and girls. This correlation has received significant academic attention: various empirical studies have been conducted into Facebook usage among adolescent girls (Tiggemann & Slater, 2013; Meier & Gray, 2014). These studies have consistently found that the visual and interactive aspects of the platform have the greatest influence on body image issues. Despite this, highly visual social media (HVSM) such as Instagram have yet to be robustly researched. This paper sets out to address this research gap. We investigated the effects of daily Instagram use on the prevalence of body image issues among adolescent girls. It was hypothesized that daily Instagram use would be associated with an increase in body image concerns and a decrease in self-esteem ratings.

Frequently asked questions about the research paper introduction

The introduction of a research paper includes several key elements:

- A hook to catch the reader’s interest

- Relevant background on the topic

- Details of your research problem and your problem statement

- A thesis statement or research question

- Sometimes an overview of the paper

Don’t feel that you have to write the introduction first. The introduction is often one of the last parts of the research paper you’ll write, along with the conclusion.

This is because it can be easier to introduce your paper once you’ve already written the body; you may not have the clearest idea of your arguments until you’ve written them, and things can change during the writing process.

The way you present your research problem in your introduction varies depending on the nature of your research paper. A research paper that presents a sustained argument will usually encapsulate this argument in a thesis statement.

A research paper designed to present the results of empirical research tends to present a research question that it seeks to answer. It may also include a hypothesis: a prediction that will be confirmed or disproved by your research.

Cite this Scribbr article


Caulfield, J. (2023, March 27). Writing a Research Paper Introduction | Step-by-Step Guide. Scribbr. Retrieved April 1, 2024, from https://www.scribbr.com/research-paper/research-paper-introduction/


Research Questions in Language Education and Applied Linguistics, pp 431–435

Writing Assessment Literacy

- Deborah Crusan

- First Online: 13 January 2022

Part of the book series: Springer Texts in Education (SPTE)

Although classroom writing assessment is a significant responsibility for writing teachers, many instructors lack an understanding of sound and effective assessment practices in the writing classroom, also known as Writing Assessment Literacy (WAL).



Author information

Authors and affiliations.

Department of English Language and Literatures, Wright State University, Dayton, OH, USA

Deborah Crusan


Corresponding author

Correspondence to Deborah Crusan.

Editor information

Editors and affiliations.

European Knowledge Development Institute, Ankara, Türkiye

Hassan Mohebbi

Higher Colleges of Technology (HCT), Dubai Men’s College, Dubai, United Arab Emirates

Christine Coombe

The Research Questions

In what ways have second language writing teachers obtained assessment knowledge?

What are the common beliefs held by second language writing teachers about writing assessment?

What are the assessment practices of second language writing teachers?

What is the impact of linguistic background on writing assessment knowledge, beliefs, and practices?

What is the impact of teaching experience on writing assessment knowledge, beliefs, and practices?

What skills are necessary for teachers to claim that they are literate in writing assessment?

How can teaching be enhanced through writing assessment literacy?

In what ways might teachers’ work be shaped by testing policies and practices?

How does context affect teacher practices in writing assessment?

Should teachers be required to provide evidence of writing assessment literacy? If so, how?

Suggested Resources

Crusan, D., Plakans, L., & Gebril, A. (2016). Writing assessment literacy: Surveying second language teachers’ knowledge, beliefs, and practices. Assessing Writing, 28, 43–56. https://doi.org/10.1016/j.asw.2016.03.001

Claiming a teacher knowledge gap in all aspects of writing assessment, the authors explore ways in which writing teachers have obtained writing assessment literacy. Asserting that teachers often feel un(der)prepared for the assessment tasks they face in the writing classroom, the researchers surveyed 702 writing teachers from around the globe to establish evidence for this claim. They found that although teachers professed education in assessment in general and writing assessment in particular, these same teachers worried that they were less than sure of their abilities in rubric creation, written corrective feedback, and justification of grades, all crucial elements in the assessment of writing. The study also uncovered interesting notions about linguistic background: NNESTs reported higher levels of writing assessment literacy.

Fernando, W. (2018). Show me your true colours: Scaffolding formative academic literacy assessment through an online learning platform. Assessing Writing, 36, 63–76. https://doi.org/10.1016/j.asw.2018.03.005

In this paper, the author focuses on student writing processes and examines ways those processes are affected by students’ formative academic literacy assessment. Does formative academic literacy assessment promote more engagement with composing processes and, if so, what evidence supports this proposition? To investigate, the author asked students to use an online learning platform to generate data in the form of outlines/essays with feedback, student-generated digital artefacts, and questionnaires/follow-up interviews to answer two important questions: “(1) How can formative academic literacy assessment help students engage in composing processes and improve their writing? (2) How can online technology be used to facilitate and formatively assess student engagement with composing processes?” (p. 65). Her findings indicate that students benefit from understanding their composing processes; additionally, this understanding is furthered by scaffolding formative academic literacy assessment through an online platform that uncovers and overcomes students’ difficulties as they learn to write.

Inbar-Lourie, O. (2017). Language Assessment Literacy. In: E. Shohamy, I. Or, & S. May (Eds.), Language Testing and Assessment: Encyclopedia of Language and Education (3rd ed.). Cham, Switzerland: Springer.

While this encyclopedia entry does not specifically address writing assessment literacy, it provides an excellent definition of language assessment literacy (LAL) and argues for the need for teachers to be assessment literate. The entry calls for a defined literacy framework in language assessment and cites the divide between views of formative and dynamic assessment as a matter still in need of resolution. Operationalization of a theoretical framework remains important, but contextualized definitions might be the more judicious way to approach this issue, since many different stakeholders (e.g. classroom teachers, students, parents, test developers) are involved.

Lam, R. (2015). Language assessment training in Hong Kong: Implications for language assessment literacy. Language Testing, 32 (2), 169–197. https://doi.org/10.1177/0265532214554321

Although this article is not specifically about writing assessment literacy, its author makes the same arguments for training of teachers as those who argue for writing assessment literacy for teachers. These arguments bring home the lack of teacher education in assessment in general and writing assessment in particular. Lam surveys various documents, conducts interviews, and examines student assessment tasks across five institutions in Hong Kong, targeting pre-service teachers being trained for the primary and secondary school settings. Lam uncovered five themes running through the data, from which he distills three key issues: (1) local teacher education programs should support further language assessment training, (2) the definition of LAL should be understood from an ethical perspective, and (3) those who administer the programs in Lam’s study should collaborate to ensure that pre-service teachers meet compulsory standards for language assessment literacy.

Xu, Y., & Brown, G. T. L. (2016). Teacher assessment literacy in practice: A reconceptualization. Teaching and Teacher Education, 58 , 149–162.

The authors synthesized 100 studies concerning teacher assessment literacy (TAL) to determine what has and has not worked in the advancement of TAL. Based on the findings of this comprehensive literature review, the authors developed a conceptual framework of Teacher Assessment Literacy in Practice (TALiP), which calls for a crucial knowledge base for all teachers, but which the authors call necessary but not sufficient. They then go on to categorize other aspects that need to be considered before a teacher can be fully assessment literate, calling the symbiosis between and among components reciprocal. Along with the knowledge base, elements include the ways in which teachers intellectualize assessment, the contexts involved (especially institutional and socio-cultural), TALiP (the framework’s primary notion), teacher learning, and whether and how teachers view themselves as competent assessors. The framework has implications for multiple platforms: pre-service teacher education, in-service teacher education, and teacher training challenges encountered when attempting expansion of TAL.


Copyright information

© 2021 Springer Nature Switzerland AG

About this chapter

Cite this chapter.

Crusan, D. (2021). Writing Assessment Literacy. In: Mohebbi, H., Coombe, C. (eds) Research Questions in Language Education and Applied Linguistics. Springer Texts in Education. Springer, Cham. https://doi.org/10.1007/978-3-030-79143-8_77


DOI : https://doi.org/10.1007/978-3-030-79143-8_77

Published : 13 January 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-79142-1

Online ISBN : 978-3-030-79143-8

eBook Packages : Education Education (R0)


Online Guide to Writing and Research

Assessing Your Writing

Using Assessment to Improve Your Writing

When you assess your writing, you are committing to improve it. Your commitment to improve will certainly include making adjustments to papers and correcting errors. However, assessing your writing can also motivate you to practice writing. As with anything, practicing will help your writing improve. Below are a series of habits that can help as you practice your writing skills.

Learn from your mistakes.

Watch for patterns of strengths and weaknesses in your writing. Learn how to build on your strengths and address your weaknesses. Then, consciously and methodically work on improving them.

Analyze examples of good writing.

By understanding how other writers have succeeded in writing effectively, you can improve your skills and strategies. Keep a notebook of writing samples that includes your notations about what works and what does not.

Build your skills as you go along.

Work methodically on your writing, focusing on specific skills each time you write a paper. Keep a journal or course notebook so you can practice writing in a specific discipline. Do not wait until the last minute to work on your writing assignments.

Take advantage of informal writing assignments.

Some courses require you to keep a journal or notebook with questions, case-study discussions, or laboratory notes. Use these opportunities to practice writing topic sentences, thesis statements, and major and minor supports. Practice writing effective sentences in a unified paragraph, or practice freewriting. Use these shorter assignments as warm‑ups for the longer research papers.

Look for extra writing opportunities in all your courses.

When you participate in a group assignment, volunteer to take minutes or write summaries of group discussions. Produce written proceedings of oral presentations. Ask your instructor for extra credit for writing extra papers.

Take writing courses beyond your requirements.

All writing courses, including creative writing, will help you improve your writing skills. Take a variety of writing courses to help you broaden your vocabulary, style, sentence structure, organization, and critical thinking skills.

Take writing seminars offered by professional organizations.

Many professional organizations offer short writing courses to help you learn new skills or new kinds of writing. Other organizations offer Internet-based courses to teach new writing skills, review grammar, and build vocabulary.

Keep a journal or writing log.

Keep tabs on your writing plan and your improvement. Set aside 10 or 15 minutes daily to review what you are focusing on and practicing; set aside another 10 or 15 minutes to practice it. Set goals to learn a new writing skill each week or month.

Read, read, read.

By reading good writing, you will be able to identify solid writing models and improve your sentence structure, spelling, punctuation, and vocabulary.

Write, write, write.

Reading will help you write better. However, you should also practice what you have learned. Take every opportunity to practice effective techniques you have noticed while reading.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. © 2022 UMGC. All links to external sites were verified at the time of publication. UMGC is not responsible for the validity or integrity of information located at external sites.



Research Paper – Structure, Examples and Writing Guide


Research Paper

Definition:

A research paper is a written document that presents the author’s original research, analysis, and interpretation of a specific topic or issue.

It is typically based on empirical evidence and may involve qualitative or quantitative research methods, or a combination of both. The purpose of a research paper is to contribute new knowledge or insights to a particular field of study, and to demonstrate the author’s understanding of the existing literature and theories related to the topic.

Structure of Research Paper

The structure of a research paper typically follows a standard format, consisting of several sections that convey specific information about the research study. The following is a detailed explanation of the structure of a research paper:

Title Page

The title page contains the title of the paper, the name(s) of the author(s), and the affiliation(s) of the author(s). It also includes the date of submission and possibly the name of the journal or conference where the paper is to be published.

Abstract

The abstract is a brief summary of the research paper, typically ranging from 100 to 250 words. It should include the research question, the methods used, the key findings, and the implications of the results. The abstract should be written in a concise and clear manner to allow readers to quickly grasp the essence of the research.

Introduction

The introduction section of a research paper provides background information about the research problem, the research question, and the research objectives. It also outlines the significance of the research, the research gap that it aims to fill, and the approach taken to address the research question. Finally, the introduction section ends with a clear statement of the research hypothesis or research question.

Literature Review

The literature review section of a research paper provides an overview of the existing literature on the topic of study. It includes a critical analysis and synthesis of the literature, highlighting the key concepts, themes, and debates. The literature review should also demonstrate the research gap and how the current study seeks to address it.

Methods

The methods section of a research paper describes the research design, the sample selection, the data collection and analysis procedures, and the statistical methods used to analyze the data. This section should provide sufficient detail for other researchers to replicate the study.

Results

The results section presents the findings of the research, using tables, graphs, and figures to illustrate the data. The findings should be presented in a clear and concise manner, with reference to the research question and hypothesis.

Discussion

The discussion section of a research paper interprets the findings and discusses their implications for the research question, the literature review, and the field of study. It should also address the limitations of the study and suggest future research directions.

Conclusion

The conclusion section summarizes the main findings of the study, restates the research question and hypothesis, and provides a final reflection on the significance of the research.

References

The references section provides a list of all the sources cited in the paper, following a specific citation style such as APA, MLA or Chicago.

How to Write a Research Paper

You can write a research paper by following these steps:

- Choose a Topic: The first step is to select a topic that interests you and is relevant to your field of study. Brainstorm ideas and narrow down to a research question that is specific and researchable.

- Conduct a Literature Review: The literature review helps you identify the gap in the existing research and provides a basis for your research question. It also helps you to develop a theoretical framework and research hypothesis.

- Develop a Thesis Statement: The thesis statement is the main argument of your research paper. It should be clear, concise and specific to your research question.

- Plan your Research: Develop a research plan that outlines the methods, data sources, and data analysis procedures. This will help you to collect and analyze data effectively.

- Collect and Analyze Data: Collect data using various methods such as surveys, interviews, observations, or experiments. Analyze data using statistical tools or other qualitative methods.

- Organize your Paper: Organize your paper into sections such as Introduction, Literature Review, Methods, Results, Discussion, and Conclusion. Ensure that each section is coherent and follows a logical flow.

- Write your Paper: Start by writing the introduction, followed by the literature review, methods, results, discussion, and conclusion. Ensure that your writing is clear, concise, and follows the required formatting and citation styles.

- Edit and Proofread your Paper: Review your paper for grammar and spelling errors, and ensure that it is well-structured and easy to read. Ask someone else to review your paper to get feedback and suggestions for improvement.

- Cite your Sources: Ensure that you properly cite all sources used in your research paper. This is essential for giving credit to the original authors and avoiding plagiarism.

Research Paper Example

Note: The example research paper below is for illustrative purposes only and is not an actual research paper. Actual research papers may have different structures, contents, and formats depending on the field of study, research question, data collection and analysis methods, and other factors. Students should always consult with their professors or supervisors for specific guidelines and expectations for their research papers.

Research paper example for students:

Title: The Impact of Social Media on Mental Health among Young Adults

Abstract: This study aims to investigate the impact of social media use on the mental health of young adults. A literature review was conducted to examine the existing research on the topic. A survey was then administered to 200 university students to collect data on their social media use, mental health status, and perceived impact of social media on their mental health. The results showed that social media use is positively associated with depression, anxiety, and stress. The study also found that social comparison, cyberbullying, and FOMO (Fear of Missing Out) are significant predictors of mental health problems among young adults.

Introduction: Social media has become an integral part of modern life, particularly among young adults. While social media has many benefits, including increased communication and social connectivity, it has also been associated with negative outcomes, such as addiction, cyberbullying, and mental health problems. This study aims to investigate the impact of social media use on the mental health of young adults.

Literature Review: The literature review highlights the existing research on the impact of social media use on mental health. The review shows that social media use is associated with depression, anxiety, stress, and other mental health problems. The review also identifies the factors that contribute to the negative impact of social media, including social comparison, cyberbullying, and FOMO.

Methods: A survey was administered to 200 university students to collect data on their social media use, mental health status (measured using the DASS-21), and perceived impact of social media on their mental health. Data were analyzed using descriptive statistics and regression analysis.

Results: The results showed that social media use is positively associated with depression, anxiety, and stress. The study also found that social comparison, cyberbullying, and FOMO are significant predictors of mental health problems among young adults.

Discussion: The study’s findings suggest that social media use has a negative impact on the mental health of young adults. The study highlights the need for interventions that address the factors contributing to the negative impact of social media, such as social comparison, cyberbullying, and FOMO.

Conclusion: In conclusion, social media use has a significant impact on the mental health of young adults. The study’s findings underscore the need for interventions that promote healthy social media use and address the negative outcomes associated with social media use. Future research can explore the effectiveness of interventions aimed at reducing the negative impact of social media on mental health. Additionally, longitudinal studies can investigate the long-term effects of social media use on mental health.

Limitations: The study has some limitations, including the use of self-report measures and a cross-sectional design. The use of self-report measures may result in biased responses, and a cross-sectional design limits the ability to establish causality.

Implications: The study’s findings have implications for mental health professionals, educators, and policymakers. Mental health professionals can use the findings to develop interventions that address the negative impact of social media use on mental health. Educators can incorporate social media literacy into their curriculum to promote healthy social media use among young adults. Policymakers can use the findings to develop policies that protect young adults from the negative outcomes associated with social media use.

References:

- Twenge, J. M., & Campbell, W. K. (2019). Associations between screen time and lower psychological well-being among children and adolescents: Evidence from a population-based study. Preventive medicine reports, 15, 100918.

- Primack, B. A., Shensa, A., Escobar-Viera, C. G., Barrett, E. L., Sidani, J. E., Colditz, J. B., … & James, A. E. (2017). Use of multiple social media platforms and symptoms of depression and anxiety: A nationally-representative study among US young adults. Computers in Human Behavior, 69, 1-9.

- Van der Meer, T. G., & Verhoeven, J. W. (2017). Social media and its impact on academic performance of students. Journal of Information Technology Education: Research, 16, 383-398.

Appendix: The survey used in this study is provided below.

Social Media and Mental Health Survey

- How often do you use social media per day?

- Less than 30 minutes

- 30 minutes to 1 hour

- 1 to 2 hours

- 2 to 4 hours

- More than 4 hours

- Which social media platforms do you use?

- Others (Please specify)

- How often do you experience the following on social media?

- Social comparison (comparing yourself to others)

- Cyberbullying

- Fear of Missing Out (FOMO)

- Have you ever experienced any of the following mental health problems in the past month?

- Do you think social media use has a positive or negative impact on your mental health?

- Very positive

- Somewhat positive

- Somewhat negative

- Very negative

- In your opinion, which factors contribute to the negative impact of social media on mental health?

- Social comparison

- In your opinion, what interventions could be effective in reducing the negative impact of social media on mental health?

- Education on healthy social media use

- Counseling for mental health problems caused by social media

- Social media detox programs

- Regulation of social media use

Thank you for your participation!

Applications of Research Papers

Research papers have several applications in various fields, including:

- Advancing knowledge: Research papers contribute to the advancement of knowledge by generating new insights, theories, and findings that can inform future research and practice. They help to answer important questions, clarify existing knowledge, and identify areas that require further investigation.

- Informing policy: Research papers can inform policy decisions by providing evidence-based recommendations for policymakers. They can help to identify gaps in current policies, evaluate the effectiveness of interventions, and inform the development of new policies and regulations.

- Improving practice: Research papers can improve practice by providing evidence-based guidance for professionals in various fields, including medicine, education, business, and psychology. They can inform the development of best practices, guidelines, and standards of care that can improve outcomes for individuals and organizations.

- Educating students: Research papers are often used as teaching tools in universities and colleges to educate students about research methods, data analysis, and academic writing. They help students to develop critical thinking skills, research skills, and communication skills that are essential for success in many careers.

- Fostering collaboration: Research papers can foster collaboration among researchers, practitioners, and policymakers by providing a platform for sharing knowledge and ideas. They can facilitate interdisciplinary collaborations and partnerships that can lead to innovative solutions to complex problems.

When to Write a Research Paper

Research papers are typically written once a person has completed a research project or study and has data or findings to share with the academic or professional community. They are usually written in academic settings, such as universities, but they can also be written in professional settings, such as research organizations, government agencies, or private companies.

Here are some common situations where a person might need to write a research paper:

- For academic purposes: Students in universities and colleges are often required to write research papers as part of their coursework, particularly in the social sciences, natural sciences, and humanities. Writing research papers helps students to develop research skills, critical thinking skills, and academic writing skills.

- For publication: Researchers often write research papers to publish their findings in academic journals or to present their work at academic conferences. Publishing research papers is an important way to disseminate research findings to the academic community and to establish oneself as an expert in a particular field.

- To inform policy or practice: Researchers may write research papers to inform policy decisions or to improve practice in various fields. Research findings can be used to inform the development of policies, guidelines, and best practices that can improve outcomes for individuals and organizations.

- To share new insights or ideas: Researchers may write research papers to share new insights or ideas with the academic or professional community. They may present new theories, propose new research methods, or challenge existing paradigms in their field.

Purpose of a Research Paper

The purpose of a research paper is to present the results of a study or investigation in a clear, concise, and structured manner. Research papers are written to communicate new knowledge, ideas, or findings to a specific audience, such as researchers, scholars, practitioners, or policymakers. The primary purposes of a research paper are:

- To contribute to the body of knowledge: Research papers aim to add new knowledge or insights to a particular field or discipline. They do this by reporting the results of empirical studies, reviewing and synthesizing existing literature, proposing new theories, or providing new perspectives on a topic.

- To inform or persuade: Research papers are written to inform or persuade the reader about a particular issue, topic, or phenomenon. They present evidence and arguments to support their claims and seek to persuade the reader of the validity of their findings or recommendations.

- To advance the field: Research papers seek to advance the field or discipline by identifying gaps in knowledge, proposing new research questions or approaches, or challenging existing assumptions or paradigms. They aim to contribute to ongoing debates and discussions within a field and to stimulate further research and inquiry.

- To demonstrate research skills: Research papers demonstrate the author’s research skills, including their ability to design and conduct a study, collect and analyze data, and interpret and communicate findings. They also demonstrate the author’s ability to critically evaluate existing literature, synthesize information from multiple sources, and write in a clear and structured manner.

Characteristics of Research Papers

Research papers have several characteristics that distinguish them from other forms of academic or professional writing. Here are some common characteristics of research papers:

- Evidence-based: Research papers are based on empirical evidence, which is collected through rigorous research methods such as experiments, surveys, observations, or interviews. They rely on objective data and facts to support their claims and conclusions.

- Structured and organized: Research papers have a clear and logical structure, with sections such as introduction, literature review, methods, results, discussion, and conclusion. They are organized in a way that helps the reader to follow the argument and understand the findings.

- Formal and objective: Research papers are written in a formal and objective tone, with an emphasis on clarity, precision, and accuracy. They avoid subjective language or personal opinions and instead rely on objective data and analysis to support their arguments.

- Citations and references: Research papers include citations and references to acknowledge the sources of information and ideas used in the paper. They use a specific citation style, such as APA, MLA, or Chicago, to ensure consistency and accuracy.

- Peer-reviewed: Research papers are often peer-reviewed, which means they are evaluated by other experts in the field before they are published. Peer review helps ensure that the research is of high quality, meets ethical standards, and contributes to the advancement of knowledge in the field.

- Objective and unbiased: Research papers strive to be objective and unbiased in their presentation of the findings. They avoid personal biases or preconceptions and instead rely on the data and analysis to draw conclusions.

Advantages of Research Papers

Research papers have many advantages, both for the individual researcher and for the broader academic and professional community. Here are some advantages of research papers:

- Contribution to knowledge: Research papers contribute to the body of knowledge in a particular field or discipline. They add new information, insights, and perspectives to existing literature and help advance the understanding of a particular phenomenon or issue.

- Opportunity for intellectual growth: Research papers provide an opportunity for intellectual growth for the researcher. They require critical thinking, problem-solving, and creativity, which can help develop the researcher’s skills and knowledge.

- Career advancement: Research papers can help advance the researcher’s career by demonstrating their expertise and contributions to the field. They can also lead to new research opportunities, collaborations, and funding.

- Academic recognition: Research papers can lead to academic recognition in the form of awards, grants, or invitations to speak at conferences or events. They can also contribute to the researcher’s reputation and standing in the field.

- Impact on policy and practice: Research papers can have a significant impact on policy and practice. They can inform policy decisions, guide practice, and lead to changes in laws, regulations, or procedures.

- Advancement of society: Research papers can contribute to the advancement of society by addressing important issues, identifying solutions to problems, and promoting social justice and equality.

Limitations of Research Papers

Research papers also have some limitations that should be considered when interpreting their findings or implications. Here are some common limitations of research papers:

- Limited generalizability: Research findings may not be generalizable to other populations, settings, or contexts. Studies often use specific samples or conditions that may not reflect the broader population or real-world situations.

- Potential for bias: Research papers may be biased due to factors such as sample selection, measurement errors, or researcher biases. It is important to evaluate the quality of the research design and methods used to ensure that the findings are valid and reliable.

- Ethical concerns: Research papers may raise ethical concerns, such as the use of vulnerable populations or invasive procedures. Researchers must adhere to ethical guidelines and obtain informed consent from participants to ensure that the research is conducted in a responsible and respectful manner.

- Limitations of methodology: Research papers may be limited by the methodology used to collect and analyze data. For example, certain research methods may not capture the complexity or nuance of a particular phenomenon, or may not be appropriate for certain research questions.

- Publication bias: Research papers may be subject to publication bias, where positive or significant findings are more likely to be published than negative or non-significant findings. This can skew the overall findings of a particular area of research.

- Time and resource constraints: Research papers may be limited by time and resource constraints, which can affect the quality and scope of the research. Researchers may not have access to certain data or resources, or may be unable to conduct long-term studies due to practical limitations.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Scaffolding Methods for Research Paper Writing

Students will use scaffolding to research and organize information for writing a research paper. A research paper scaffold provides students with clear support for writing expository papers that include a question (problem), literature review, analysis, methodology for original research, results, conclusion, and references. Students examine informational text, use an inquiry-based approach, and practice genre-specific strategies for expository writing. Depending on the goals of the assignment, students may work collaboratively or as individuals. A student-written paper about color psychology provides an authentic model of a scaffold and the corresponding finished paper. The research paper scaffold is designed to be completed during seven or eight sessions over the course of four to six weeks.

Featured Resources

- Research Paper Scaffold : This handout guides students in researching and organizing the information they need for writing their research paper.

- Inquiry on the Internet: Evaluating Web Pages for a Class Collection : Students use Internet search engines and Web analysis checklists to evaluate online resources, then write annotations that explain how and why the resources will be valuable to the class.

From Theory to Practice

- Research paper scaffolding provides a temporary linguistic tool to assist students as they organize their expository writing. Scaffolding assists students in moving to levels of language performance they might be unable to attain without this support.

- An instructional scaffold essentially changes the role of the teacher from that of giver of knowledge to leader in inquiry. This relationship encourages creative intelligence on the part of both teacher and student, which in turn may broaden the notion of literacy so as to include more learning styles.

- An instructional scaffold is useful for expository writing because of its basis in problem solving, ownership, appropriateness, support, collaboration, and internalization. It allows students to start where they are comfortable, and provides a genre-based structure for organizing creative ideas.

- In order for students to take ownership of knowledge, they must learn to rework raw information, use details and facts, and write.

- Teaching writing should involve direct, explicit comprehension instruction, effective instructional principles embedded in content, motivation and self-directed learning, and text-based collaborative learning to improve middle school and high school literacy.

Common Core Standards