The Ultimate Guide to Qualitative Research - Part 1: The Basics

What is a case study?

- Applications for case study research

- What is a good case study?

- Process of case study design

- Benefits and limitations of case studies

Case studies

Case studies are essential to qualitative research, offering a lens through which researchers can investigate complex phenomena within their real-life contexts. This chapter explores the concept, purpose, applications, examples, and types of case studies and provides guidance on how to conduct case study research effectively.

Whereas quantitative methods look at phenomena at scale, case study research looks at a concept or phenomenon in considerable detail. While analyzing a single case can help understand one perspective regarding the object of research inquiry, analyzing multiple cases can help obtain a more holistic sense of the topic or issue. Let's provide a basic definition of a case study, then explore its characteristics and role in the qualitative research process.

Definition of a case study

A case study in qualitative research is a strategy of inquiry that involves an in-depth investigation of a phenomenon within its real-world context. It provides researchers with the opportunity to acquire an in-depth understanding of intricate details that might not be as apparent or accessible through other methods of research. The specific case or cases being studied can be a single person, group, or organization – demarcating what constitutes a relevant case worth studying depends on the researcher and their research question.

Among qualitative research methods, a case study relies on multiple sources of evidence, such as documents, artifacts, interviews, or observations, to present a complete and nuanced understanding of the phenomenon under investigation. The objective is to illuminate the readers' understanding of the phenomenon beyond its abstract statistical or theoretical explanations.

Characteristics of case studies

Case studies typically possess a number of distinct characteristics that set them apart from other research methods. These characteristics include a focus on holistic description and explanation, flexibility in the design and data collection methods, reliance on multiple sources of evidence, and emphasis on the context in which the phenomenon occurs.

Furthermore, case studies can often involve a longitudinal examination of the case, meaning they study the case over a period of time. These characteristics allow case studies to yield comprehensive, in-depth, and richly contextualized insights about the phenomenon of interest.

The role of case studies in research

Case studies hold a unique position in the broader landscape of research methods aimed at theory development. They are instrumental when the primary research interest is to gain an intensive, detailed understanding of a phenomenon in its real-life context.

In addition, case studies can serve different purposes within research: they can be used for exploratory, descriptive, or explanatory purposes, depending on the research question and objectives. This flexibility and depth make case studies a valuable tool in the toolkit of qualitative researchers.

Remember, a well-conducted case study can offer a rich, insightful contribution to both academic and practical knowledge through theory development or theory verification, thus enhancing our understanding of complex phenomena in their real-world contexts.

What is the purpose of a case study?

Case study research aims for a more comprehensive understanding of phenomena, requiring various research methods to gather information for qualitative analysis. Ultimately, a case study can allow the researcher to gain insight into a particular object of inquiry and develop a theoretical framework relevant to the research inquiry.

Why use case studies in qualitative research?

Using case studies as a research strategy depends mainly on the nature of the research question and the researcher's access to the data.

Case study research provides a level of detail and contextual richness that other research methods might not offer. It is beneficial when there's a need to understand complex social phenomena within their natural contexts.

The explanatory, exploratory, and descriptive roles of case studies

Case studies can take on various roles depending on the research objectives. They can be exploratory when the research aims to discover new phenomena or define new research questions; they can be descriptive when the objective is to depict a phenomenon within its context in a detailed manner; and they can be explanatory if the goal is to understand specific relationships within the studied context. Thus, the versatility of case studies allows researchers to approach their topic from different angles, offering multiple ways to uncover and interpret the data.

The impact of case studies on knowledge development

Case studies play a significant role in knowledge development across various disciplines. Analysis of cases provides an avenue for researchers to explore phenomena within their context based on the collected data.

This can result in the production of rich, practical insights that can be instrumental in both theory-building and practice. Case studies allow researchers to delve into the intricacies and complexities of real-life situations, uncovering insights that might otherwise remain hidden.

Types of case studies

In qualitative research, a case study is not a one-size-fits-all approach. Depending on the nature of the research question and the specific objectives of the study, researchers might choose to use different types of case studies. These types differ in their focus, methodology, and the level of detail they provide about the phenomenon under investigation.

Understanding these types is crucial for selecting the most appropriate approach for your research project and effectively achieving your research goals. Let's briefly look at the main types of case studies.

Exploratory case studies

Exploratory case studies are typically conducted to develop a theory or framework around an understudied phenomenon. They can also serve as a precursor to a larger-scale research project. Exploratory case studies are useful when a researcher wants to identify the key issues or questions which can spur more extensive study or be used to develop propositions for further research. These case studies are characterized by flexibility, allowing researchers to explore various aspects of a phenomenon as they emerge, which can also form the foundation for subsequent studies.

Descriptive case studies

Descriptive case studies aim to provide a complete and accurate representation of a phenomenon or event within its context. These case studies are often based on an established theoretical framework, which guides how data is collected and analyzed. The researcher is concerned with describing the phenomenon in detail, as it occurs naturally, without trying to influence or manipulate it.

Explanatory case studies

Explanatory case studies are focused on explanation: they seek to clarify how or why certain phenomena occur. Often used in complex, real-life situations, they can be particularly valuable in clarifying causal relationships among concepts and understanding the interplay between different factors within a specific context.

Intrinsic, instrumental, and collective case studies

These three categories of case studies focus on the nature and purpose of the study. An intrinsic case study is conducted when a researcher has an inherent interest in the case itself. Instrumental case studies are employed when the case is used to provide insight into a particular issue or phenomenon. A collective case study, on the other hand, involves studying multiple cases simultaneously to investigate some general phenomena.

Each type of case study serves a different purpose and has its own strengths and challenges. The selection of the type should be guided by the research question and objectives, as well as the context and constraints of the research.

Applications for case study research

The flexibility, depth, and contextual richness offered by case studies make this approach an excellent research method for various fields of study. They enable researchers to investigate real-world phenomena within their specific contexts, capturing nuances that other research methods might miss. Across numerous fields, case studies provide valuable insights into complex issues.

Critical information systems research

Case studies provide a detailed understanding of the role and impact of information systems in different contexts. They offer a platform to explore how information systems are designed, implemented, and used and how they interact with various social, economic, and political factors. Case studies in this field often focus on examining the intricate relationship between technology, organizational processes, and user behavior, helping to uncover insights that can inform better system design and implementation.

Health research

Health research is another field where case studies are highly valuable. They offer a way to explore patient experiences, healthcare delivery processes, and the impact of various interventions in a real-world context.

Case studies can provide a deep understanding of a patient's journey, giving insights into the intricacies of disease progression, treatment effects, and the psychosocial aspects of health and illness.

Asthma research studies

Specifically within medical research, studies on asthma often employ case studies to explore the individual and environmental factors that influence asthma development, management, and outcomes. A case study can provide rich, detailed data about individual patients' experiences, from the triggers and symptoms they experience to the effectiveness of various management strategies. This can be crucial for developing patient-centered asthma care approaches.

Other fields

Apart from the fields mentioned, case studies are also extensively used in business and management research, education research, and political sciences, among many others. They provide an opportunity to delve into the intricacies of real-world situations, allowing for a comprehensive understanding of various phenomena.

Case studies, with their depth and contextual focus, offer unique insights across these varied fields. They allow researchers to illuminate the complexities of real-life situations, contributing to both theory and practice.

What is a good case study?

Understanding the key elements of case study design is crucial for conducting rigorous and impactful case study research. A well-structured design guides the researcher through the process, ensuring that the study is methodologically sound and its findings are reliable and valid. The main elements of case study design include the research question, propositions, units of analysis, and the logic linking the data to the propositions.

Research question

The research question is the foundation of any research study. A good research question guides the direction of the study and informs the selection of the case, the methods of collecting data, and the analysis techniques. A well-formulated research question in case study research is typically clear, focused, and complex enough to merit further detailed examination of the relevant case(s).

Propositions

Propositions, though not necessary in every case study, provide a direction by stating what we might expect to find in the data collected. They guide how data is collected and analyzed by helping researchers focus on specific aspects of the case. They are particularly important in explanatory case studies, which seek to understand the relationships among concepts within the studied phenomenon.

Units of analysis

The unit of analysis refers to the case, or the main entity or entities that are being analyzed in the study. In case study research, the unit of analysis can be an individual, a group, an organization, a decision, an event, or even a time period. It's crucial to clearly define the unit of analysis, as it shapes the qualitative data analysis process by allowing the researcher to analyze a particular case and synthesize analysis across multiple case studies to draw conclusions.

Argumentation

This refers to the inferential model that allows researchers to draw conclusions from the data. The researcher needs to ensure that there is a clear link between the data, the propositions (if any), and the conclusions drawn. This argumentation is what enables the researcher to make valid and credible inferences about the phenomenon under study.

Understanding and carefully considering these elements in the design phase of a case study can significantly enhance the quality of the research. It can help ensure that the study is methodologically sound and its findings contribute meaningful insights about the case.

Process of case study design

Conducting a case study involves several steps, from defining the research question and selecting the case to collecting and analyzing data. This section outlines these key stages, providing a practical guide on how to conduct case study research.

Defining the research question

The first step in case study research is defining a clear, focused research question. This question should guide the entire research process, from case selection to analysis. It's crucial to ensure that the research question is suitable for a case study approach. Typically, such questions are exploratory or descriptive in nature and focus on understanding a phenomenon within its real-life context.

Selecting and defining the case

The selection of the case should be based on the research question and the objectives of the study. It involves choosing a unique example or a set of examples that provide rich, in-depth data about the phenomenon under investigation. After selecting the case, it's crucial to define it clearly, setting the boundaries of the case, including the time period and the specific context.

Previous research can help guide the case study design. When considering a case study, an example of a case could be taken from previous case study research and used to define cases in a new research inquiry. Considering recently published examples can help understand how to select and define cases effectively.

Developing a detailed case study protocol

A case study protocol outlines the procedures and general rules to be followed during the case study. This includes the data collection methods to be used, the sources of data, and the procedures for analysis. Having a detailed case study protocol ensures consistency and reliability in the study.

The protocol should also consider how to work with the people involved in the research context so that the research team is granted access to collect data. As mentioned in previous sections of this guide, establishing rapport is an essential component of qualitative research as it shapes the overall potential for collecting and analyzing data.

Collecting data

Gathering data in case study research often involves multiple sources of evidence, including documents, archival records, interviews, observations, and physical artifacts. This allows for a comprehensive understanding of the case. The process for gathering data should be systematic and carefully documented to ensure the reliability and validity of the study.

Analyzing and interpreting data

The next step is analyzing the data. This involves organizing the data, categorizing it into themes or patterns, and interpreting these patterns to answer the research question. The analysis might also involve comparing the findings with prior research or theoretical propositions.

Writing the case study report

The final step is writing the case study report. This should provide a detailed description of the case, the data, the analysis process, and the findings. The report should be clear, organized, and carefully written to ensure that the reader can understand the case and the conclusions drawn from it.

Each of these steps is crucial in ensuring that the case study research is rigorous, reliable, and provides valuable insights about the case.

Data collection

The type, depth, and quality of data in your study can significantly influence the validity and utility of the study. In case study research, data is usually collected from multiple sources to provide a comprehensive and nuanced understanding of the case. This section will outline the various methods of collecting data used in case study research and discuss considerations for ensuring the quality of the data.

Interviews

Interviews are a common method of gathering data in case study research. They can provide rich, in-depth data about the perspectives, experiences, and interpretations of the individuals involved in the case. Interviews can be structured, semi-structured, or unstructured, depending on the research question and the degree of flexibility needed.

Observations

Observations involve the researcher observing the case in its natural setting, providing first-hand information about the case and its context. Observations can provide data that might not be revealed in interviews or documents, such as non-verbal cues or contextual information.

Documents and artifacts

Documents and archival records provide a valuable source of data in case study research. They can include reports, letters, memos, meeting minutes, email correspondence, and various public and private documents related to the case.

These records can provide historical context, corroborate evidence from other sources, and offer insights into the case that might not be apparent from interviews or observations.

Physical artifacts refer to any physical evidence related to the case, such as tools, products, or physical environments. These artifacts can provide tangible insights into the case, complementing the data gathered from other sources.

Ensuring the quality of data collection

Ensuring the quality of data in case study research requires careful planning and execution. It's crucial to ensure that the data is reliable, accurate, and relevant to the research question. This involves selecting appropriate methods of collecting data, properly training interviewers or observers, and systematically recording and storing the data. It also includes considering ethical issues related to collecting and handling data, such as obtaining informed consent and ensuring the privacy and confidentiality of the participants.

Data analysis

Analyzing case study research involves making sense of the rich, detailed data to answer the research question. This process can be challenging due to the volume and complexity of case study data. However, a systematic and rigorous approach to analysis can ensure that the findings are credible and meaningful. This section outlines the main steps and considerations in analyzing data in case study research.

Organizing the data

The first step in the analysis is organizing the data. This involves sorting the data into manageable sections, often according to the data source or the theme. This step can also involve transcribing interviews, digitizing physical artifacts, or organizing observational data.

Categorizing and coding the data

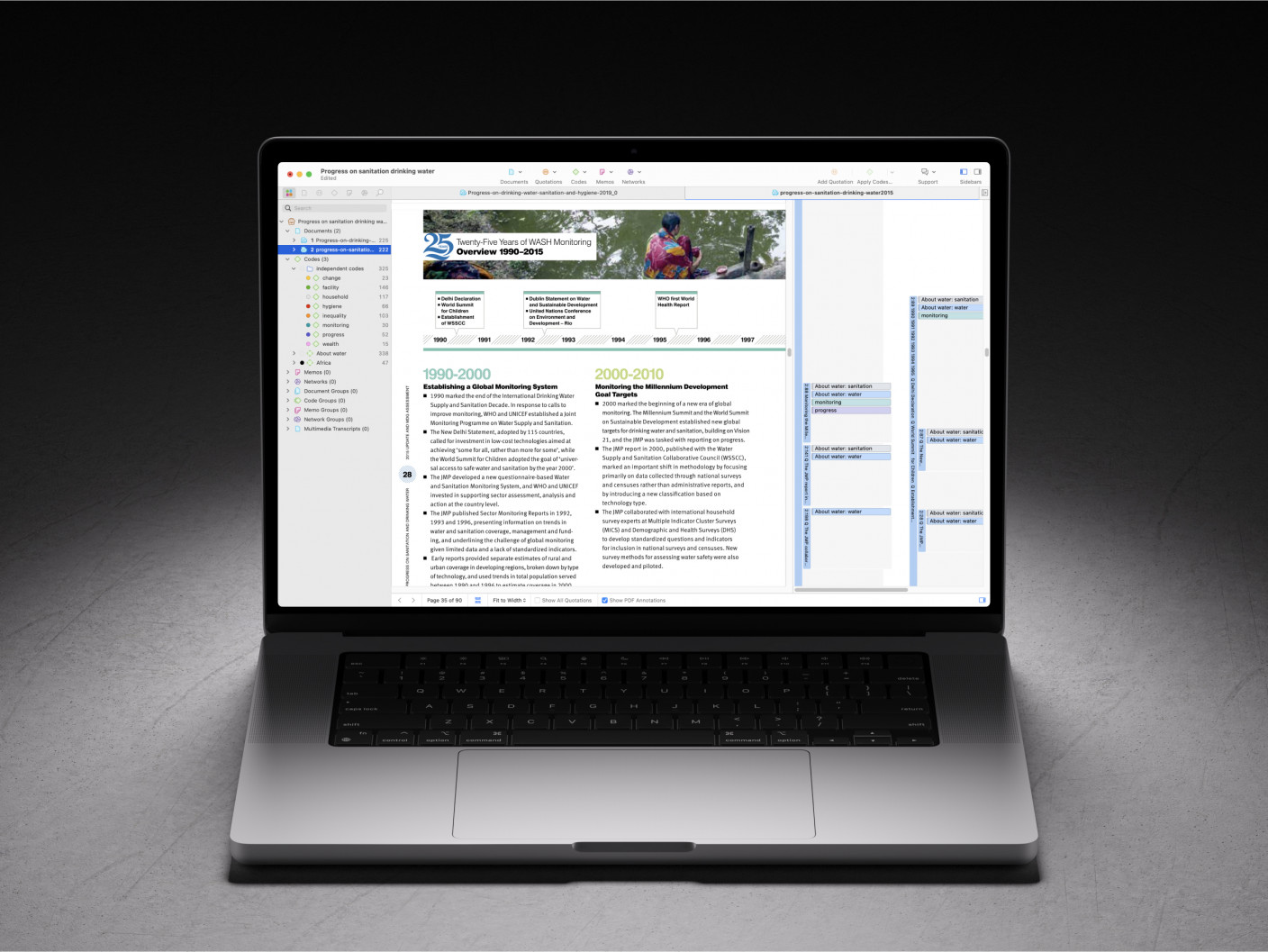

Once the data is organized, the next step is to categorize or code the data. This involves identifying common themes, patterns, or concepts in the data and assigning codes to relevant data segments. Coding can be done manually or with the help of automated tools; in either case, qualitative analysis software can greatly facilitate the coding process. Coding helps to reduce the data to a set of themes or categories that can be more easily analyzed.
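As a rough illustration of assigning codes to data segments, the following Python sketch applies a small keyword-based codebook. The codebook, the sample segments, and the function name `code_segments` are hypothetical and are not taken from any particular software; keyword matching is only a first pass and does not replace the researcher's interpretive judgment.

```python
from collections import defaultdict

# Hypothetical codebook: each code maps to keywords that may signal it.
CODEBOOK = {
    "workload": ["overtime", "deadline", "busy"],
    "support": ["help", "mentor", "support"],
}


def code_segments(segments, codebook):
    """Return a dict mapping each code to the indices of matching segments."""
    coded = defaultdict(list)
    for index, text in enumerate(segments):
        lowered = text.lower()
        for code, keywords in codebook.items():
            # Simple substring match; a real project would review each hit.
            if any(keyword in lowered for keyword in keywords):
                coded[code].append(index)
    return dict(coded)


# Invented interview excerpts used only for illustration.
segments = [
    "I often work overtime to meet a deadline.",
    "My mentor was a great help in the first year.",
    "The office is close to my home.",
]

coded = code_segments(segments, CODEBOOK)
print(coded)
```

Segments that match no keyword, such as the third excerpt here, stay uncoded and can be reviewed by hand.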

Identifying patterns and themes

After coding the data, the researcher looks for patterns or themes in the coded data. This involves comparing and contrasting the codes and looking for relationships or patterns among them. The identified patterns and themes should help answer the research question.
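One simple way to look for relationships among codes is to count how often pairs of codes are applied to the same data segment. The Python sketch below assumes each segment has already been assigned a set of codes; the code names and the helper `code_cooccurrence` are invented for illustration.

```python
from collections import Counter
from itertools import combinations

# Hypothetical coded data: the set of codes assigned to each segment.
segment_codes = [
    {"workload", "stress"},
    {"workload", "stress", "support"},
    {"support"},
    {"workload", "stress"},
]


def code_cooccurrence(segment_codes):
    """Count how many segments each pair of codes shares."""
    pairs = Counter()
    for codes in segment_codes:
        # Sort so that each pair is always stored in the same order.
        for pair in combinations(sorted(codes), 2):
            pairs[pair] += 1
    return pairs


cooccurrence = code_cooccurrence(segment_codes)
for pair, count in cooccurrence.most_common():
    print(pair, count)
```

A pair that co-occurs frequently is a candidate theme to examine more closely in the original data, not a finding in itself.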

Interpreting the data

Once patterns and themes have been identified, the next step is to interpret these findings. This involves explaining what the patterns or themes mean in the context of the research question and the case. This interpretation should be grounded in the data, but it can also involve drawing on theoretical concepts or prior research.

Verification of the data

The last step in the analysis is verification. This involves checking the accuracy and consistency of the analysis process and confirming that the findings are supported by the data. This can involve re-checking the original data, checking the consistency of codes, or seeking feedback from research participants or peers.
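Checking the consistency of codes is sometimes done by having two people code the same segments and measuring their agreement. The sketch below computes Cohen's kappa, one common agreement statistic; the text above does not prescribe a specific measure, so this choice and the sample labels are assumptions made for illustration.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each gave one label per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Observed agreement: share of segments with identical labels.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement from each coder's label frequencies.
    counts_a = Counter(coder_a)
    counts_b = Counter(coder_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # Both coders used a single identical label throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical labels assigned by two coders to the same four segments.
coder_a = ["workload", "support", "workload", "support"]
coder_b = ["workload", "support", "support", "support"]

kappa = cohen_kappa(coder_a, coder_b)
print(kappa)
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance; low values suggest the code definitions need revisiting.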

Benefits and limitations of case studies

Like any research method, case study research has its strengths and limitations. Researchers must be aware of these, as they can influence the design, conduct, and interpretation of the study.

Understanding the strengths and limitations of case study research can also guide researchers in deciding whether this approach is suitable for their research question. This section outlines some of the key strengths and limitations of case study research.

Benefits include the following:

- Rich, detailed data: One of the main strengths of case study research is that it can generate rich, detailed data about the case. This can provide a deep understanding of the case and its context, which can be valuable in exploring complex phenomena.

- Flexibility: Case study research is flexible in terms of design, data collection, and analysis. A sufficient degree of flexibility allows the researcher to adapt the study according to the case and the emerging findings.

- Real-world context: Case study research involves studying the case in its real-world context, which can provide valuable insights into the interplay between the case and its context.

- Multiple sources of evidence: Case study research often involves collecting data from multiple sources, which can enhance the robustness and validity of the findings.

On the other hand, researchers should consider the following limitations:

- Generalizability: A common criticism of case study research is that its findings might not be generalizable to other cases due to the specificity and uniqueness of each case.

- Time and resource intensive: Case study research can be time and resource intensive due to the depth of the investigation and the amount of collected data.

- Complexity of analysis: The rich, detailed data generated in case study research can make analyzing the data challenging.

- Subjectivity: Given the nature of case study research, there may be a higher degree of subjectivity in interpreting the data, so researchers need to reflect on this and transparently convey to audiences how the research was conducted.

Being aware of these strengths and limitations can help researchers design and conduct case study research effectively and interpret and report the findings appropriately.

Data Collection Methods | Step-by-Step Guide & Examples

Published on 4 May 2022 by Pritha Bhandari.

Data collection is a systematic process of gathering observations or measurements. Whether you are performing research for business, governmental, or academic purposes, data collection allows you to gain first-hand knowledge and original insights into your research problem.

While methods and aims may differ between fields, the overall process of data collection remains largely the same. Before you begin collecting data, you need to consider:

- The aim of the research

- The type of data that you will collect

- The methods and procedures you will use to collect, store, and process the data

To collect high-quality data that is relevant to your purposes, follow these four steps.

Table of contents

- Step 1: Define the aim of your research

- Step 2: Choose your data collection method

- Step 3: Plan your data collection procedures

- Step 4: Collect the data

- Frequently asked questions about data collection

Step 1: Define the aim of your research

Before you start the process of data collection, you need to identify exactly what you want to achieve. You can start by writing a problem statement: what is the practical or scientific issue that you want to address, and why does it matter?

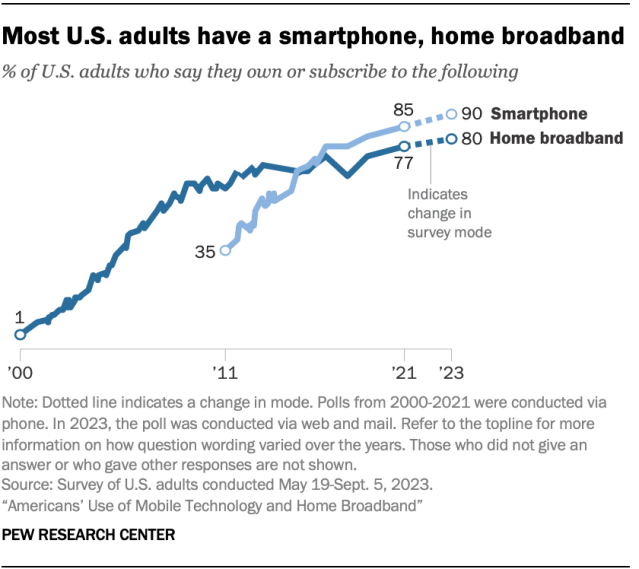

Next, formulate one or more research questions that precisely define what you want to find out. Depending on your research questions, you might need to collect quantitative or qualitative data:

- Quantitative data is expressed in numbers and graphs and is analysed through statistical methods.

- Qualitative data is expressed in words and analysed through interpretations and categorisations.

If your aim is to test a hypothesis, measure something precisely, or gain large-scale statistical insights, collect quantitative data. If your aim is to explore ideas, understand experiences, or gain detailed insights into a specific context, collect qualitative data.

If you have several aims, you can use a mixed methods approach that collects both types of data.

For example, suppose you are researching how employees perceive their managers:

- Your first aim is to assess whether there are significant differences in perceptions of managers across different departments and office locations.

- Your second aim is to gather meaningful feedback from employees to explore new ideas for how managers can improve.

Step 2: Choose your data collection method

Based on the data you want to collect, decide which method is best suited for your research.

- Experimental research is primarily a quantitative method.

- Interviews, focus groups, and ethnographies are qualitative methods.

- Surveys, observations, archival research, and secondary data collection can be quantitative or qualitative methods.

Carefully consider what method you will use to gather data that helps you directly answer your research questions.

Step 3: Plan your data collection procedures

When you know which method(s) you are using, you need to plan exactly how you will implement them. What procedures will you follow to make accurate observations or measurements of the variables you are interested in?

For instance, if you’re conducting surveys or interviews, decide what form the questions will take; if you’re conducting an experiment, make decisions about your experimental design.

Operationalisation

Sometimes your variables can be measured directly: for example, you can collect data on the average age of employees simply by asking for dates of birth. However, often you’ll be interested in collecting data on more abstract concepts or variables that can’t be directly observed.

Operationalisation means turning abstract conceptual ideas into measurable observations. When planning how you will collect data, you need to translate the conceptual definition of what you want to study into the operational definition of what you will actually measure.

For example, you might operationalise the leadership skills of managers in two ways:

- You ask managers to rate their own leadership skills on 5-point scales assessing the ability to delegate, decisiveness, and dependability.

- You ask their direct employees to provide anonymous feedback on the managers regarding the same topics.
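To make the idea of an operational definition concrete, the Python sketch below turns ratings on the three 5-point scales into a single score per rater and compares a manager's self-rating with the average employee rating. Averaging the three items into one score is an assumption made for illustration, and all ratings and names (`ITEMS`, `leadership_score`) are invented.

```python
from statistics import mean

# The three rated aspects named above; the key names are hypothetical.
ITEMS = ("delegation", "decisiveness", "dependability")


def leadership_score(ratings):
    """Average the three 5-point items into one composite score."""
    for item in ITEMS:
        if not 1 <= ratings[item] <= 5:
            raise ValueError(f"{item} must be rated between 1 and 5")
    return mean(ratings[item] for item in ITEMS)


# Invented ratings for one manager and two direct employees.
self_rating = {"delegation": 4, "decisiveness": 5, "dependability": 3}
employee_ratings = [
    {"delegation": 3, "decisiveness": 4, "dependability": 2},
    {"delegation": 2, "decisiveness": 4, "dependability": 3},
]

self_score = leadership_score(self_rating)
employee_score = mean(leadership_score(r) for r in employee_ratings)
gap = self_score - employee_score
print(self_score, employee_score, gap)
```

A positive gap means the manager rates their own leadership higher than their employees do.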

Sampling

You may need to develop a sampling plan to obtain data systematically. This involves defining a population, the group you want to draw conclusions about, and a sample, the group you will actually collect data from.

Your sampling method will determine how you recruit participants or obtain measurements for your study. To decide on a sampling method you will need to consider factors like the required sample size, accessibility of the sample, and time frame of the data collection.
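As one possible sampling method, the sketch below draws a simple random sample from a population list using Python's standard `random` module. The population of 200 employee identifiers, the sample size of 20, and the helper name `simple_random_sample` are assumptions chosen for illustration; other sampling methods may suit your study better.

```python
import random


def simple_random_sample(population, sample_size, seed=None):
    """Draw a sample without replacement; a fixed seed makes it repeatable."""
    if sample_size > len(population):
        raise ValueError("Sample size cannot exceed the population size.")
    rng = random.Random(seed)
    return rng.sample(population, sample_size)


# Hypothetical population: identifiers for 200 employees.
population = [f"employee_{i:03d}" for i in range(1, 201)]

sample = simple_random_sample(population, 20, seed=42)
print(sample)
```

Recording the seed alongside the sample lets other team members reproduce the same selection later.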

Standardising procedures

If multiple researchers are involved, write a detailed manual to standardise data collection procedures in your study.

This means laying out specific step-by-step instructions so that everyone in your research team collects data in a consistent way – for example, by conducting experiments under the same conditions and using objective criteria to record and categorise observations.

This helps ensure the reliability of your data, and you can also use it to replicate the study in the future.

Creating a data management plan

Before beginning data collection, you should also decide how you will organise and store your data.

- If you are collecting data from people, you will likely need to anonymise and safeguard the data to prevent leaks of sensitive information (e.g. names or identity numbers).

- If you are collecting data via interviews or pencil-and-paper formats, you will need to perform transcriptions or data entry in systematic ways to minimise distortion.

- You can prevent loss of data by having an organisation system that is routinely backed up.
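The first point above can be sketched in Python as replacing names with participant IDs before the data is stored for analysis. The field names, records, and the helper `pseudonymise` are hypothetical; note that the key linking names to IDs still identifies people and would need to be stored separately and securely.

```python
def pseudonymise(records, id_field="name", prefix="P"):
    """Replace the identifying field with a participant ID.

    Returns the cleaned records and the key that maps names to IDs.
    """
    key = {}
    cleaned = []
    for record in records:
        identifier = record[id_field]
        if identifier not in key:
            # Assign IDs in order of first appearance: P001, P002, ...
            key[identifier] = f"{prefix}{len(key) + 1:03d}"
        safe = {k: v for k, v in record.items() if k != id_field}
        safe["participant_id"] = key[identifier]
        cleaned.append(safe)
    return cleaned, key


# Invented survey records used only for illustration.
records = [
    {"name": "Alice Example", "department": "Sales", "rating": 4},
    {"name": "Bob Example", "department": "IT", "rating": 3},
    {"name": "Alice Example", "department": "Sales", "rating": 5},
]

cleaned, key = pseudonymise(records)
print(cleaned)
```

The same person always receives the same ID, so repeated responses can still be linked during analysis.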

Step 4: Collect the data

Finally, you can implement your chosen methods to measure or observe the variables you are interested in.

In a survey, for example, closed-ended questions ask participants to rate their manager’s leadership skills on scales from 1 to 5. The data produced is numerical and can be statistically analysed for averages and patterns.

To ensure that high-quality data is recorded in a systematic way, here are some best practices:

- Record all relevant information as and when you obtain data. For example, note down whether or how lab equipment is recalibrated during an experimental study.

- Double-check manual data entry for errors.

- If you collect quantitative data, you can assess the reliability and validity to get an indication of your data quality.

Frequently asked questions about data collection

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organisations.

When conducting research, collecting original data has significant advantages:

- You can tailor data collection to your specific research aims (e.g., understanding the needs of your consumers or user testing your website).

- You can control and standardise the process for high reliability and validity (e.g., choosing appropriate measurements and sampling methods).

However, there are also some drawbacks: data collection can be time-consuming, labour-intensive, and expensive. In some cases, it’s more efficient to use secondary data that has already been collected by someone else, but the data might be less reliable.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).
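One common way to put a number on reliability is test-retest correlation: the same measure is taken twice and the two sets of scores are correlated. The sketch below is a minimal example under that assumption. The scores and the `pearson_r` helper are hypothetical, and a high correlation says nothing about validity.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return covariance / (spread_x * spread_y)


# Hypothetical scores for the same five participants on two occasions
first_session = [12, 15, 9, 20, 17]
second_session = [13, 14, 10, 19, 18]

r = pearson_r(first_session, second_session)
print(f"test-retest correlation: {r:.2f}")
```

A value close to 1 suggests the measure gives consistent results under the same conditions. Whether it measures the intended concept still has to be argued separately.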

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

Bhandari, P. (2022, May 04). Data Collection Methods | Step-by-Step Guide & Examples. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/research-methods/data-collection-guide/


Statistics - Data collection - Case Study Method

Case study research is a qualitative research method that is used to examine contemporary real-life situations and apply the findings of the case to the problem under study. Case studies involve a detailed contextual analysis of a limited number of events or conditions and their relationships. It provides the basis for the application of ideas and extension of methods. It helps a researcher to understand a complex issue or object and add strength to what is already known through previous research.

STEPS OF CASE STUDY METHOD

In order to ensure objectivity and clarity, a researcher should adopt a methodical approach to case studies research. The following steps can be followed:

Identify and define the research questions - The researcher starts with establishing the focus of the study by identifying the research object and the problem surrounding it. The research object would be a person, a program, an event or an entity.

Select the cases - In this step the researcher decides on the number of cases to choose (single or multiple), the type of cases to choose (unique or typical) and the approach to collect, store and analyze the data. This is the design phase of the case study method.

Collect the data - The researcher now collects the data with the objective of gathering multiple sources of evidence with reference to the problem under study. This evidence is stored comprehensively and systematically in a format that can be referenced and sorted easily so that converging lines of inquiry and patterns can be uncovered.

Evaluate and analyze the data - In this step the researcher makes use of varied methods to analyze qualitative as well as quantitative data. The data is categorized, tabulated and cross checked to address the initial propositions or purpose of the study. Graphic techniques like placing information into arrays, creating matrices of categories, creating flow charts etc. are used to help the investigators to approach the data from different ways and thus avoid making premature conclusions. Multiple investigators may also be used to examine the data so that a wide variety of insights to the available data can be developed.
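The "matrices of categories" mentioned above can be sketched as a simple cross-tabulation of coded evidence by category and source. The records, category labels, and the `category_matrix` helper below are hypothetical; in practice the coding itself is the researcher's interpretive work.

```python
from collections import defaultdict

# Hypothetical coded evidence: (source type, category assigned by the researcher)
coded_evidence = [
    ("interview", "leadership"),
    ("interview", "communication"),
    ("observation", "communication"),
    ("document", "leadership"),
    ("interview", "leadership"),
]


def category_matrix(records):
    """Count how many pieces of evidence fall in each category, per source."""
    matrix = defaultdict(lambda: defaultdict(int))
    for source, category in records:
        matrix[category][source] += 1
    # Convert nested defaultdicts to plain dicts for easier inspection
    return {category: dict(row) for category, row in matrix.items()}


matrix = category_matrix(coded_evidence)
for category, row in sorted(matrix.items()):
    print(category, row)
```

Laying the evidence out this way makes it easier to see which categories rest on a single kind of source, which is one guard against premature conclusions.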

Presentation of Results - The results are presented in a manner that allows the reader to evaluate the findings in the light of the evidence presented in the report. The results are corroborated with sufficient evidence showing that all aspects of the problem have been adequately explored. The newer insights gained and the conflicting propositions that have emerged are suitably highlighted in the report.

Continuing to enhance the quality of case study methodology in health services research

Shannon L. Sibbald

1 Faculty of Health Sciences, Western University, London, Ontario, Canada.

2 Department of Family Medicine, Schulich School of Medicine and Dentistry, Western University, London, Ontario, Canada.

3 The Schulich Interfaculty Program in Public Health, Schulich School of Medicine and Dentistry, Western University, London, Ontario, Canada.

Stefan Paciocco

Meghan Fournie, Rachelle Van Asseldonk, Tiffany Scurr

Case study methodology has grown in popularity within Health Services Research (HSR). However, its use and merit as a methodology are frequently criticized due to its flexible approach and inconsistent application. Nevertheless, case study methodology is well suited to HSR because it can track and examine complex relationships, contexts, and systems as they evolve. Applied appropriately, it can help generate information on how multiple forms of knowledge come together to inform decision-making within healthcare contexts. In this article, we aim to demystify case study methodology by outlining its philosophical underpinnings and three foundational approaches. We provide literature-based guidance to decision-makers, policy-makers, and health leaders on how to engage in and critically appraise case study design. We advocate that researchers work in collaboration with health leaders to detail their research process with an aim of strengthening the validity and integrity of case study for its continued and advanced use in HSR.

Introduction

The popularity of case study research methodology in Health Services Research (HSR) has grown over the past 40 years. 1 This may be attributed to a shift towards the use of implementation research and a newfound appreciation of contextual factors affecting the uptake of evidence-based interventions within diverse settings. 2 Incorporating context-specific information on the delivery and implementation of programs can increase the likelihood of success. 3 , 4 Case study methodology is particularly well suited for implementation research in health services because it can provide insight into the nuances of diverse contexts. 5 , 6 In 1999, Yin 7 published a paper on how to enhance the quality of case study in HSR, which was foundational for the emergence of case study in this field. Yin 7 maintains case study is an appropriate methodology in HSR because health systems are constantly evolving, and the multiple affiliations and diverse motivations are difficult to track and understand with traditional linear methodologies.

Despite its increased popularity, there is debate whether a case study is a methodology (ie, a principle or process that guides research) or a method (ie, a tool to answer research questions). Some criticize case study for its high level of flexibility, perceiving it as less rigorous, and maintain that it generates inadequate results. 8 Others have noted issues with quality and consistency in how case studies are conducted and reported. 9 Reporting is often varied and inconsistent, using a mix of approaches such as case reports, case findings, and/or case study. Authors sometimes use incongruent methods of data collection and analysis or use the case study as a default when other methodologies do not fit. 9 , 10 Despite these criticisms, case study methodology is becoming more common as a viable approach for HSR. 11 An abundance of articles and textbooks are available to guide researchers through case study research, including field-specific resources for business, 12 , 13 nursing, 14 and family medicine. 15 However, there remains confusion and a lack of clarity on the key tenets of case study methodology.

Several common philosophical underpinnings have contributed to the development of case study research 1 which has led to different approaches to planning, data collection, and analysis. This presents challenges in assessing quality and rigour for researchers conducting case studies and stakeholders reading results.

This article discusses the various approaches and philosophical underpinnings to case study methodology. Our goal is to explain it in a way that provides guidance for decision-makers, policy-makers, and health leaders on how to understand, critically appraise, and engage in case study research and design, as such guidance is largely absent in the literature. This article is by no means exhaustive or authoritative. Instead, we aim to provide guidance and encourage dialogue around case study methodology, facilitating critical thinking around the variety of approaches and ways quality and rigour can be bolstered for its use within HSR.

Purpose of case study methodology

Case study methodology is often used to develop an in-depth, holistic understanding of a specific phenomenon within a specified context. 11 It focuses on studying one or multiple cases over time and uses an in-depth analysis of multiple information sources. 16 , 17 It is ideal for situations including, but not limited to, exploring under-researched and real-life phenomena, 18 especially when the contexts are complex and the researcher has little control over the phenomena. 19 , 20 Case studies can be useful when researchers want to understand how interventions are implemented in different contexts, and how context shapes the phenomenon of interest.

In addition to demonstrating coherency with the type of questions case study is suited to answer, there are four key tenets to case study methodologies: (1) be transparent in the paradigmatic and theoretical perspectives influencing study design; (2) clearly define the case and phenomenon of interest; (3) clearly define and justify the type of case study design; and (4) use multiple data collection sources and analysis methods to present the findings in ways that are consistent with the methodology and the study’s paradigmatic base. 9 , 16 The goal is to appropriately match the methods to empirical questions and issues and not to universally advocate any single approach for all problems. 21

Approaches to case study methodology

Three authors propose distinct foundational approaches to case study methodology positioned within different paradigms: Yin, 19 , 22 Stake, 5 , 23 and Merriam 24 , 25 ( Table 1 ). Yin is strongly post-positivist whereas Stake and Merriam are grounded in a constructivist paradigm. Researchers should locate their research within a paradigm that explains the philosophies guiding their research 26 and adhere to the underlying paradigmatic assumptions and key tenets of the appropriate author’s methodology. This will enhance the consistency and coherency of the methods and findings. However, researchers often do not report their paradigmatic position, nor do they adhere to one approach. 9 Although deliberately blending methodologies may be defensible and methodologically appropriate, more often it is done in an ad hoc and haphazard way, without consideration for limitations.

Table 1. Cross-analysis of three case study approaches, adapted from Yazan 2015

The post-positivist paradigm postulates there is one reality that can be objectively described and understood by “bracketing” oneself from the research to remove prejudice or bias. 27 Yin focuses on general explanation and prediction, emphasizing the formulation of propositions, akin to hypothesis testing. This approach is best suited for structured and objective data collection 9 , 11 and is often used for mixed-method studies.

Constructivism assumes that the phenomenon of interest is constructed and influenced by local contexts, including the interaction between researchers, individuals, and their environment. 27 It acknowledges multiple interpretations of reality 24 constructed within the context by the researcher and participants which are unlikely to be replicated, should either change. 5 , 20 Stake and Merriam’s constructivist approaches emphasize a story-like rendering of a problem and an iterative process of constructing the case study. 7 This stance values researcher reflexivity and transparency, 28 acknowledging how researchers’ experiences and disciplinary lenses influence their assumptions and beliefs about the nature of the phenomenon and development of the findings.

Defining a case

A key tenet of case study methodology often underemphasized in literature is the importance of defining the case and phenomenon. Researchers should clearly describe the case with sufficient detail to allow readers to fully understand the setting and context and determine applicability. Trying to answer a question that is too broad often leads to an unclear definition of the case and phenomenon. 20 Cases should therefore be bound by time and place to ensure rigor and feasibility. 6

Yin 22 defines a case as “a contemporary phenomenon within its real-life context,” (p13) which may contain a single unit of analysis, including individuals, programs, corporations, or clinics 29 (holistic), or be broken into sub-units of analysis, such as projects, meetings, roles, or locations within the case (embedded). 30 Merriam 24 and Stake 5 similarly define a case as a single unit studied within a bounded system. Stake 5 , 23 suggests bounding cases by contexts and experiences where the phenomenon of interest can be a program, process, or experience. However, the line between the case and phenomenon can become muddy. For guidance, Stake 5 , 23 describes the case as the noun or entity and the phenomenon of interest as the verb, functioning, or activity of the case.

Designing the case study approach

Yin’s approach to a case study is rooted in a formal proposition or theory which guides the case and is used to test the outcome. 1 Stake 5 advocates for a flexible design and explicitly states that data collection and analysis may commence at any point. Merriam’s 24 approach blends both Yin and Stake’s, allowing the necessary flexibility in data collection and analysis to meet the needs.

Yin 30 proposed three types of case study approaches—descriptive, explanatory, and exploratory. Each can be designed around single or multiple cases, creating six basic case study methodologies. Descriptive studies provide a rich description of the phenomenon within its context, which can be helpful in developing theories. To test a theory or determine cause and effect relationships, researchers can use an explanatory design. An exploratory model is typically used in the pilot-test phase to develop propositions (eg, Sibbald et al. 31 used this approach to explore interprofessional network complexity). Despite having distinct characteristics, the boundaries between case study types are flexible with significant overlap. 30 Each has five key components: (1) research question; (2) proposition; (3) unit of analysis; (4) logical linking that connects the theory with proposition; and (5) criteria for analyzing findings.

Contrary to Yin, Stake 5 believes the research process cannot be planned in its entirety because research evolves as it is performed. Consequently, researchers can adjust the design of their methods even after data collection has begun. Stake 5 classifies case studies into three categories: intrinsic, instrumental, and collective/multiple. Intrinsic case studies focus on gaining a better understanding of the case. These are often undertaken when the researcher has an interest in a specific case. Instrumental case study is used when the case itself is not of the utmost importance, and the issue or phenomenon (ie, the research question) being explored becomes the focus instead (eg, Paciocco 32 used an instrumental case study to evaluate the implementation of a chronic disease management program). 5 Collective designs are rooted in an instrumental case study and include multiple cases to gain an in-depth understanding of the complexity and particularity of a phenomenon across diverse contexts. 5 , 23 In collective designs, studying similarities and differences between the cases allows the phenomenon to be understood more intimately (for examples of this in the field, see van Zelm et al. 33 and Burrows et al. 34 ). In addition, Sibbald et al. 35 present an example where a cross-case analysis method is used to compare instrumental cases.

Merriam’s approach is flexible (similar to Stake) as well as stepwise and linear (similar to Yin). She advocates for conducting a literature review before designing the study to better understand the theoretical underpinnings. 24 , 25 Unlike Stake or Yin, Merriam proposes a step-by-step guide for researchers to design a case study. These steps include performing a literature review, creating a theoretical framework, identifying the problem, creating and refining the research question(s), and selecting a study sample that fits the question(s). 24 , 25 , 36

Data collection and analysis

Using multiple data collection methods is a key characteristic of all case study methodology; it enhances the credibility of the findings by allowing different facets and views of the phenomenon to be explored. 23 Common methods include interviews, focus groups, observation, and document analysis. 5 , 37 By seeking patterns within and across data sources, a thick description of the case can be generated to support a greater understanding and interpretation of the whole phenomenon. 5 , 17 , 20 , 23 This technique is called triangulation and is used to explore cases with greater accuracy. 5 Although Stake 5 maintains case study is most often used in qualitative research, Yin 17 supports a mix of both quantitative and qualitative methods to triangulate data. This deliberate convergence of data sources (or mixed methods) allows researchers to find greater depth in their analysis and develop converging lines of inquiry. For example, case studies evaluating interventions commonly use qualitative interviews to describe the implementation process, barriers, and facilitators paired with a quantitative survey of comparative outcomes and effectiveness. 33 , 38 , 39
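A very simplified view of triangulation is to check which themes are supported by more than one data source. The sketch below assumes themes have already been identified per source. The theme names, the `corroborated_themes` helper, and the `min_sources` threshold are hypothetical, and counting sources is only a starting point for the interpretive work the authors describe.

```python
# Hypothetical themes identified in each data source
themes_by_source = {
    "interviews": {"staff turnover", "unclear roles", "time pressure"},
    "observation": {"unclear roles", "time pressure"},
    "documents": {"staff turnover", "funding gaps"},
}


def corroborated_themes(sources, min_sources=2):
    """Return themes that appear in at least `min_sources` data sources."""
    support = {}
    for source, themes in sources.items():
        for theme in themes:
            support.setdefault(theme, set()).add(source)
    return {
        theme: sorted(found_in)
        for theme, found_in in support.items()
        if len(found_in) >= min_sources
    }


converging = corroborated_themes(themes_by_source)
for theme, found_in in sorted(converging.items()):
    print(theme, "->", found_in)
```

Themes that appear in only one source are not discarded by the method itself; they may simply need further evidence before being reported as converging lines of inquiry.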

Yin 30 describes analysis as dependent on the chosen approach, whether it be (1) deductive and rely on theoretical propositions; (2) inductive and analyze data from the “ground up”; (3) organized to create a case description; or (4) used to examine plausible rival explanations. According to Yin’s 40 approach to descriptive case studies, carefully considering theory development is an important part of study design. “Theory” refers to field-relevant propositions, commonly agreed upon assumptions, or fully developed theories. 40 Stake 5 advocates for using the researcher’s intuition and impression to guide analysis through a categorical aggregation and direct interpretation. Merriam 24 uses six different methods to guide the “process of making meaning” (p178) : (1) ethnographic analysis; (2) narrative analysis; (3) phenomenological analysis; (4) constant comparative method; (5) content analysis; and (6) analytic induction.

Drawing upon a theoretical or conceptual framework to inform analysis improves the quality of case study and avoids the risk of description without meaning. 18 Using Stake’s 5 approach, researchers rely on protocols and previous knowledge to help make sense of new ideas; theory can guide the research and assist researchers in understanding how new information fits into existing knowledge.

Practical applications of case study research

Columbia University has recently demonstrated how case studies can help train future health leaders. 41 Case studies encompass components of systems thinking—considering connections and interactions between components of a system, alongside the implications and consequences of those relationships—to equip health leaders with tools to tackle global health issues. 41 Greenwood 42 evaluated Indigenous peoples’ relationship with the healthcare system in British Columbia and used a case study to challenge and educate health leaders across the country to enhance culturally sensitive health service environments.

An important but often omitted step in case study research is an assessment of quality and rigour. We recommend using a framework or set of criteria to assess the rigour of the qualitative research. Suitable resources include Caelli et al., 43 Houghten et al., 44 Ravenek and Rudman, 45 and Tracy. 46

New directions in case study

Although “pragmatic” case studies (ie, utilizing practical and applicable methods) have existed within psychotherapy for some time, 47 , 48 only recently has the applicability of pragmatism as an underlying paradigmatic perspective been considered in HSR. 49 This is marked by uptake of pragmatism in Randomized Control Trials, recognizing that “gold standard” testing conditions do not reflect the reality of clinical settings 50 , 51 nor do a handful of epistemologically guided methodologies suit every research inquiry.

Pragmatism positions the research question as the basis for methodological choices, rather than a theory or epistemology, allowing researchers to pursue the most practical approach to understanding a problem or discovering an actionable solution. 52 Mixed methods are commonly used to create a deeper understanding of the case through converging qualitative and quantitative data. 52 Pragmatic case study is suited to HSR because its flexibility throughout the research process accommodates complexity, ever-changing systems, and disruptions to research plans. 49 , 50 Much like case study, pragmatism has been criticized for its flexibility and use when other approaches are seemingly ill-fit. 53 , 54 Similarly, authors argue that this results from a lack of investigation and proper application rather than a reflection of validity, legitimizing the need for more exploration and conversation among researchers and practitioners. 55

Although occasionally misunderstood as a less rigorous research methodology, 8 case study research is highly flexible and allows for contextual nuances. 5 , 6 Its use is valuable when the researcher desires a thorough understanding of a phenomenon or case bound by context. 11 If needed, multiple similar cases can be studied simultaneously, or one case within another. 16 , 17 There are currently three main approaches to case study, 5 , 17 , 24 each with their own definitions of a case, ontological and epistemological paradigms, methodologies, and data collection and analysis procedures. 37

Individuals’ experiences within health systems are influenced heavily by contextual factors, participant experience, and intricate relationships between different organizations and actors. 55 Case study research is well suited for HSR because it can track and examine these complex relationships and systems as they evolve over time. 6 , 7 It is important that researchers and health leaders using this methodology understand its key tenets and how to conduct a proper case study. Although there are many examples of case study in action, they are often under-reported and, when reported, not rigorously conducted. 9 Thus, decision-makers and health leaders should use these examples with caution. The proper reporting of case studies is necessary to bolster their credibility in HSR literature and provide readers sufficient information to critically assess the methodology. We also call on health leaders who frequently use case studies 56 – 58 to report them in the primary research literature.

The purpose of this article is to advocate for the continued and advanced use of case study in HSR and to provide literature-based guidance for decision-makers, policy-makers, and health leaders on how to engage in, read, and interpret findings from case study research. As health systems progress and evolve, the application of case study research will continue to increase as researchers and health leaders aim to capture the inherent complexities, nuances, and contextual factors. 7

Case Study Research Method in Psychology

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Case studies are in-depth investigations of a person, group, event, or community. Typically, data is gathered from various sources using several methods (e.g., observations & interviews).

The case study research method originated in clinical medicine (the case history, i.e., the patient’s personal history). In psychology, case studies are often confined to the study of a particular individual.

The information is mainly biographical and relates to events in the individual’s past (i.e., retrospective), as well as to significant events that are currently occurring in his or her everyday life.

The case study is not a research method, but researchers select methods of data collection and analysis that will generate material suitable for case studies.

Freud (1909a, 1909b) conducted very detailed investigations into the private lives of his patients in an attempt to both understand and help them overcome their illnesses.

This makes it clear that the case study is a method that should only be used by a psychologist, therapist, or psychiatrist, i.e., someone with a professional qualification.

There is an ethical issue of competence. Only someone qualified to diagnose and treat a person can conduct a formal case study relating to atypical (i.e., abnormal) behavior or atypical development.

Famous Case Studies

- Anna O – One of the most famous case studies, documenting psychoanalyst Josef Breuer’s treatment of “Anna O” (real name Bertha Pappenheim) for hysteria in the late 1800s using early psychoanalytic theory.

- Little Hans – A child psychoanalysis case study published by Sigmund Freud in 1909 analyzing his five-year-old patient Herbert Graf’s horse phobia as related to the Oedipus complex.

- Bruce/Brenda – Gender identity case of the boy (Bruce) whose botched circumcision led psychologist John Money to advise gender reassignment and that he be raised as a girl (Brenda) in the 1960s.

- Genie Wiley – Linguistics/psychological development case of the victim of extreme isolation abuse who was studied in 1970s California for effects of early language deprivation on acquiring speech later in life.

- Phineas Gage – One of the most famous neuropsychology case studies analyzes personality changes in railroad worker Phineas Gage after an 1848 brain injury involving a tamping iron piercing his skull.

Clinical Case Studies

- Studying the effectiveness of psychotherapy approaches with an individual patient

- Assessing and treating mental illnesses like depression, anxiety disorders, PTSD

- Neuropsychological cases investigating brain injuries or disorders

Child Psychology Case Studies

- Studying psychological development from birth through adolescence

- Cases of learning disabilities, autism spectrum disorders, ADHD

- Effects of trauma, abuse, deprivation on development

Types of Case Studies

- Explanatory case studies: Used to explore causation in order to find underlying principles. Helpful for doing qualitative analysis to explain presumed causal links.

- Exploratory case studies: Used to explore situations where an intervention being evaluated has no clear set of outcomes. It helps define questions and hypotheses for future research.

- Descriptive case studies: Describe an intervention or phenomenon and the real-life context in which it occurred. It is helpful for illustrating certain topics within an evaluation.

- Multiple-case studies: Used to explore differences between cases and replicate findings across cases. Helpful for comparing and contrasting specific cases.

- Intrinsic: Used to gain a better understanding of a particular case. Helpful for capturing the complexity of a single case.

- Collective: Used to explore a general phenomenon using multiple case studies. Helpful for jointly studying a group of cases in order to inquire into the phenomenon.

Where Do You Find Data for a Case Study?

There are several places to find data for a case study. The key is to gather data from multiple sources to get a complete picture of the case and corroborate facts or findings through triangulation of evidence. Most of this information is likely qualitative (i.e., verbal description rather than measurement), but the psychologist might also collect numerical data.
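Triangulation can be made concrete by keeping a simple evidence log. The sketch below is a hypothetical illustration, not a standard procedure: the findings, the source names, and the rule that a finding counts as corroborated when at least two different source types support it are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical evidence log: (finding, source type) pairs noted by the researcher.
evidence_log = [
    ("sleep disturbance", "interview"),
    ("sleep disturbance", "diary"),
    ("sleep disturbance", "interview"),  # second interview, same source type
    ("social withdrawal", "observation"),
    ("social withdrawal", "school record"),
    ("low mood", "interview"),
]


def triangulate(log, min_source_types=2):
    """Group findings by the distinct source types that support them.

    A finding is flagged as corroborated when at least `min_source_types`
    different source types support it (an assumed threshold for this sketch).
    """
    sources_by_finding = defaultdict(set)
    for finding, source_type in log:
        sources_by_finding[finding].add(source_type)
    return {
        finding: {
            "source_types": sorted(source_types),
            "corroborated": len(source_types) >= min_source_types,
        }
        for finding, source_types in sources_by_finding.items()
    }


summary = triangulate(evidence_log)
for finding, info in summary.items():
    status = "corroborated" if info["corroborated"] else "single-source"
    print(f"{finding}: {status} ({', '.join(info['source_types'])})")
```

Counting distinct source types rather than raw mentions keeps two interviews with the same person from being mistaken for independent corroboration.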

1. Primary sources

- Interviews – Interviewing key people related to the case to get their perspectives and insights. The interview is an extremely effective procedure for obtaining information about an individual, and it may be used to collect comments from the person’s friends, parents, employer, workmates, and others who have a good knowledge of the person, as well as to obtain facts from the person himself or herself.

- Observations – Observing behaviors, interactions, processes, etc., related to the case as they unfold in real-time.

- Documents & Records – Reviewing private documents, diaries, public records, correspondence, meeting minutes, etc., relevant to the case.

2. Secondary sources

- News/Media – News coverage of events related to the case study.

- Academic articles – Journal articles, dissertations etc. that discuss the case.

- Government reports – Official data and records related to the case context.

- Books/films – Books, documentaries or films discussing the case.

3. Archival records

Searching historical archives, museum collections and databases to find relevant documents, visual/audio records related to the case history and context.

Public archives like newspapers, organizational records, photographic collections could all include potentially relevant pieces of information to shed light on attitudes, cultural perspectives, common practices and historical contexts related to psychology.

4. Organizational records

Organizational records offer the advantage of often having large datasets collected over time that can reveal or confirm psychological insights.

Of course, privacy and ethical concerns regarding confidential data must be navigated carefully.

However, with proper protocols, organizational records can provide invaluable context and empirical depth to qualitative case studies exploring the intersection of psychology and organizations.

- Organizational/industrial psychology research: Organizational records like employee surveys, turnover/retention data, policies, incident reports etc. may provide insight into topics like job satisfaction, workplace culture and dynamics, leadership issues, employee behaviors etc.

- Clinical psychology: Therapists/hospitals may grant access to anonymized medical records to study aspects like assessments, diagnoses, treatment plans etc. This could shed light on clinical practices.

- School psychology: Studies could utilize anonymized student records like test scores, grades, disciplinary issues, and counseling referrals to study child development, learning barriers, effectiveness of support programs, and more.

How do I Write a Case Study in Psychology?

Follow specified case study guidelines provided by a journal or your psychology tutor. General components of clinical case studies include: background, symptoms, assessments, diagnosis, treatment, and outcomes. Interpreting the information means the researcher decides what to include or leave out. A good case study should always clarify which information is the factual description and which is an inference or the researcher’s opinion.

1. Introduction

- Provide background on the case context and why it is of interest, including demographics, relevant history, and the presenting problem.

- Compare briefly to similar published cases if applicable. Clearly state the focus/importance of the case.

2. Case Presentation

- Describe the presenting problem in detail, including symptoms, duration, and impact on daily life.

- Include client demographics like age and gender, information about social relationships, and mental health history.

- Describe all physical, emotional, and/or sensory symptoms reported by the client.

- Use patient quotes to describe the initial complaint verbatim. Follow with full-sentence summaries of relevant history details gathered, including key components that led to a working diagnosis.

- Summarize clinical exam results, namely orthopedic/neurological tests, imaging, lab tests, etc. Note actual results rather than subjective conclusions. Provide images if clearly reproducible/anonymized.

- Clearly state the working diagnosis or clinical impression before transitioning to management.

3. Management and Outcome

- Indicate the total duration of care and number of treatments given over what timeframe. Use specific names/descriptions for any therapies/interventions applied.

- Present the results of the intervention, including any quantitative or qualitative data collected.

- For outcomes, utilize visual analog scales for pain, medication usage logs, etc., if possible. Include patient self-reports of improvement/worsening of symptoms. Note the reason for discharge/end of care.
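As one way to report the outcome measures mentioned above, a visual analog scale (VAS) log can be summarized as change from baseline. The ratings and session labels below are invented for illustration, and the 0 to 10 scale is an assumption; follow whatever scale and reporting rules your journal or tutor specifies.

```python
# Hypothetical VAS pain ratings (0 = no pain, 10 = worst pain), one per session.
vas_scores = [
    ("intake", 8.0),
    ("session 3", 6.5),
    ("session 6", 4.0),
    ("discharge", 2.0),
]


def summarize_vas(scores):
    """Return baseline, final score, absolute change, and percent change."""
    if len(scores) < 2:
        raise ValueError("need at least a baseline and one follow-up rating")
    baseline = scores[0][1]
    final = scores[-1][1]
    change = final - baseline  # negative values mean the rating went down
    percent = (change / baseline * 100) if baseline else None
    return {
        "baseline": baseline,
        "final": final,
        "change": change,
        "percent_change": percent,
    }


result = summarize_vas(vas_scores)
print(result)
```

Reporting the raw baseline and final ratings alongside the change keeps the actual results visible, rather than only a derived conclusion.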

4. Discussion

- Analyze the case, exploring contributing factors, limitations of the study, and connections to existing research.

- Analyze the effectiveness of the intervention, considering factors like participant adherence, limitations of the study, and potential alternative explanations for the results.

- Identify any questions raised in the case analysis and relate insights to established theories and current research if applicable. Avoid definitive claims about physiological explanations.

- Offer clinical implications, and suggest future research directions.

5. Additional Items

- Thank specific assistants for writing support only. No patient acknowledgments.

- References should directly support any key claims or quotes included.

- Use tables/figures/images only if substantially informative. Include permissions and legends/explanatory notes.

Strengths

- Provides detailed (rich qualitative) information.

- Provides insight for further research.

- Permits investigation of otherwise impractical (or unethical) situations.

Case studies allow a researcher to investigate a topic in far more detail than might be possible if they were trying to deal with a large number of research participants (nomothetic approach) with the aim of ‘averaging’.

Because of their in-depth, multi-sided approach, case studies often shed light on aspects of human thinking and behavior that would be unethical or impractical to study in other ways.

Research that only looks into the measurable aspects of human behavior is not likely to give us insights into the subjective dimension of experience, which is important to psychoanalytic and humanistic psychologists.

Case studies are often used in exploratory research. They can help us generate new ideas (that might be tested by other methods). They are an important way of illustrating theories and can help show how different aspects of a person’s life are related to each other.

The method is, therefore, important for psychologists who adopt a holistic point of view (i.e., humanistic psychologists).

Limitations

- Lacking scientific rigor and providing little basis for generalization of results to the wider population.

- Researchers’ own subjective feelings may influence the case study (researcher bias).

- Difficult to replicate.

- Time-consuming and expensive.

- The volume of data, together with time restrictions, can limit the depth of analysis that is possible within the available resources.

Because a case study deals with only one person/event/group, we can never be sure if the case study investigated is representative of the wider body of “similar” instances. This means the conclusions drawn from a particular case may not be transferable to other settings.

Because case studies are based on the analysis of qualitative (i.e., descriptive) data, a lot depends on the psychologist’s interpretation of the information she has acquired.

This means that there is a lot of scope for researcher bias, and it could be that the subjective opinions of the psychologist intrude in the assessment of what the data means.

For example, Freud has been criticized for producing case studies in which the information was sometimes distorted to fit particular behavioral theories (e.g., Little Hans).

This is also true of Money’s interpretation of the Bruce/Brenda case study (Diamond & Sigmundson, 1997), in which he ignored evidence that went against his theory.

References

Breuer, J., & Freud, S. (1895). Studies on hysteria. Standard Edition 2: London.

Curtiss, S. (1981). Genie: The case of a modern wild child.

Diamond, M., & Sigmundson, K. (1997). Sex Reassignment at Birth: Long-term Review and Clinical Implications. Archives of Pediatrics & Adolescent Medicine, 151(3), 298-304.

Freud, S. (1909a). Analysis of a phobia of a five year old boy. In The Pelican Freud Library (1977), Vol 8, Case Histories 1, pages 169-306.

Freud, S. (1909b). Bemerkungen über einen Fall von Zwangsneurose (Der “Rattenmann”). Jb. psychoanal. psychopathol. Forsch., I, p. 357-421; GW, VII, p. 379-463; Notes upon a case of obsessional neurosis, SE, 10: 151-318.

Harlow, J. M. (1848). Passage of an iron rod through the head. Boston Medical and Surgical Journal, 39, 389–393.

Harlow, J. M. (1868). Recovery from the Passage of an Iron Bar through the Head. Publications of the Massachusetts Medical Society, 2(3), 327-347.

Money, J., & Ehrhardt, A. A. (1972). Man & Woman, Boy & Girl: The Differentiation and Dimorphism of Gender Identity from Conception to Maturity. Baltimore, Maryland: Johns Hopkins University Press.

Money, J., & Tucker, P. (1975). Sexual signatures: On being a man or a woman.

Further Information

- Case Study Approach

- Case Study Method

- Enhancing the Quality of Case Studies in Health Services Research

- “We do things together” A case study of “couplehood” in dementia

- Using mixed methods for evaluating an integrative approach to cancer care: a case study

Data Collection – Methods Types and Examples

Data Collection

Definition:

Data collection is the process of gathering information from various sources so that it can be analyzed and used to make informed decisions. This can involve various methods, such as surveys, interviews, experiments, and observation.

In order for data collection to be effective, it is important to have a clear understanding of what data is needed and what the purpose of the data collection is. This can involve identifying the population or sample being studied, determining the variables to be measured, and selecting appropriate methods for collecting and recording data.
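The planning elements listed above can be written down as a small checklist before any data is gathered. The sketch below is only one possible way to do that; the `DataCollectionPlan` class, its field names, and the example study are hypothetical and not part of any standard tool.

```python
from dataclasses import dataclass, field


@dataclass
class DataCollectionPlan:
    """Hypothetical record of the decisions made before collecting data."""

    purpose: str
    population: str
    variables: list[str] = field(default_factory=list)
    methods: list[str] = field(default_factory=list)

    def missing_items(self) -> list[str]:
        """List the planning elements that are still empty."""
        missing = []
        if not self.purpose.strip():
            missing.append("purpose")
        if not self.population.strip():
            missing.append("population")
        if not self.variables:
            missing.append("variables")
        if not self.methods:
            missing.append("methods")
        return missing


# Invented example: the methods have not been chosen yet.
plan = DataCollectionPlan(
    purpose="Understand how first-year students experience remote seminars",
    population="First-year undergraduates at one university",
    variables=["perceived engagement", "sense of belonging"],
)
print(plan.missing_items())
```

A check like this only confirms that each element has been filled in; whether the chosen methods actually suit the purpose remains a judgment for the researcher.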

Types of Data Collection

Types of Data Collection are as follows:

Primary Data Collection

Primary data collection is the process of gathering original and firsthand information directly from the source or target population. This type of data collection involves collecting data that has not been previously gathered, recorded, or published. Primary data can be collected through various methods such as surveys, interviews, observations, experiments, and focus groups. The data collected is usually specific to the research question or objective and can provide valuable insights that cannot be obtained from secondary data sources. Primary data collection is often used in market research, social research, and scientific research.

Secondary Data Collection

Secondary data collection is the process of gathering information that has already been collected and analyzed by someone else, rather than conducting new research to collect primary data. Secondary data can be collected from various sources, such as published reports, books, journals, newspapers, websites, government publications, and other documents.

Qualitative Data Collection

Qualitative data collection is used to gather non-numerical data such as opinions, experiences, perceptions, and feelings, through techniques such as interviews, focus groups, observations, and document analysis. It seeks to understand the deeper meaning and context of a phenomenon or situation and is often used in social sciences, psychology, and humanities. Qualitative data collection methods allow for a more in-depth and holistic exploration of research questions and can provide rich and nuanced insights into human behavior and experiences.

Quantitative Data Collection