10 Real-World Data Science Case Study Projects with Examples

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in sectors like healthcare, education, retail, transportation, media, and banking, data science is at the core of pretty much every industry out there. The possibilities are endless, from fraud analysis in the finance sector to personalized recommendations in eCommerce. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.

Table of Contents

Data science case studies in retail, data science case study examples in entertainment industry, data analytics case study examples in travel industry, case studies for data analytics in social media, real world data science projects in healthcare, data analytics case studies in oil and gas, what is a case study in data science, how do you prepare a data science case study, 10 most interesting data science case studies with examples.

So, without much ado, let's get started with data science business case studies!

From humble beginnings as a simple discount retailer, Walmart today operates about 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, a growth of $35 billion driven by the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, it depends heavily on its data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team invests heavily in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

Walmart is experiencing massive digital growth as the world's largest retailer. Walmart has been leveraging big data and advances in data science to build solutions that enhance, optimize, and customize the shopping experience and serve its customers better. At Walmart Labs, data scientists are focused on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyzes customer preferences and shopping patterns to optimize the stocking and display of merchandise in its stores. Analysis of big data also helps Walmart understand new item sales, decide which products to discontinue, and track the performance of brands.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.
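The sourcing decision described above can be sketched as a small search over fulfillment centers and shipping methods. This is only an illustration under stated assumptions, not Walmart's actual algorithm: the class names, the `cost_per_km` and `km_per_day` fields, and all the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FulfillmentCenter:
    name: str
    distance_km: float  # distance to the customer
    stock: int          # units of the item on hand


@dataclass
class ShippingMethod:
    name: str
    cost_per_km: float  # hypothetical cost model
    km_per_day: float   # hypothetical speed model


def source_order(centers, methods, quantity, promised_days):
    """Return (center, method, cost) for the cheapest feasible option, or None."""
    best = None
    for center in centers:
        if center.stock < quantity:
            continue  # not enough inventory at this center
        for method in methods:
            days = center.distance_km / method.km_per_day
            if days > promised_days:
                continue  # would miss the promised delivery date
            cost = center.distance_km * method.cost_per_km
            if best is None or cost < best[2]:
                best = (center.name, method.name, cost)
    return best


centers = [
    FulfillmentCenter("FC-near", distance_km=100, stock=0),
    FulfillmentCenter("FC-mid", distance_km=300, stock=5),
    FulfillmentCenter("FC-far", distance_km=900, stock=50),
]
methods = [
    ShippingMethod("ground", cost_per_km=0.01, km_per_day=200),
    ShippingMethod("air", cost_per_km=0.05, km_per_day=1500),
]

print(source_order(centers, methods, quantity=2, promised_days=2))
```

With a two-day promise, the nearest center is skipped because it has no stock, and ground shipping from the mid-distance center is the cheapest option that still arrives on time. Tightening the promise to one day forces the more expensive air option.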

iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in the retail and eCommerce business. Whenever the items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's box recommendation system picks the best-sized box that holds all the ordered items with the least in-box space wasted, and it must do so within a fixed amount of time. This is the Bin Packing problem, a classic NP-Hard problem familiar to data scientists.
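As a rough illustration of box recommendation, the sketch below picks the smallest box whose volume can hold all the items. It is a deliberate simplification: it compares volumes only and ignores item shapes and orientation, which a real bin-packing solver would have to handle. The box names and sizes are hypothetical.

```python
def recommend_box(item_volumes, box_volumes):
    """Return (box_name, wasted_volume) for the smallest box that fits, or None.

    Volume-only heuristic: real packing must also respect item dimensions.
    """
    needed = sum(item_volumes)
    candidates = [
        (volume - needed, name)
        for name, volume in box_volumes.items()
        if volume >= needed
    ]
    if not candidates:
        return None  # no single box is large enough
    wasted, name = min(candidates)
    return name, wasted


boxes = {"small": 1000, "medium": 4000, "large": 9000}  # cubic cm, hypothetical
print(recommend_box([600, 900, 1200], boxes))
```

Here the three items need 2,700 cubic cm in total, so the small box is ruled out and the medium box wastes less space than the large one.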

Here is a link to a sales prediction data science case study to help you understand the applications of data science in the real world. The Walmart Sales Forecasting Project uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a model to project the sales for each department in each store. This data science case study aims to create a predictive model for these department-level sales. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model that forecasts inventory demand accurately based on historical sales data.
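Before building a full model, it often helps to have a simple baseline to beat. The sketch below forecasts next week's sales for each store and department as the average of the most recent weeks. The row layout `(store, dept, week, sales)` and the figures are assumptions for illustration, not the project's actual dataset schema.

```python
from collections import defaultdict


def moving_average_forecast(rows, window=3):
    """rows: iterable of (store, dept, week, sales).

    Returns {(store, dept): forecast}, the mean of the last `window` weeks.
    """
    history = defaultdict(list)
    # Sort by week so each series is in chronological order.
    for store, dept, week, sales in sorted(rows, key=lambda r: r[2]):
        history[(store, dept)].append(sales)
    forecasts = {}
    for key, values in history.items():
        recent = values[-window:]
        forecasts[key] = sum(recent) / len(recent)
    return forecasts


rows = [
    (1, 1, 1, 100), (1, 1, 2, 120), (1, 1, 3, 140), (1, 1, 4, 160),
    (2, 1, 1, 50), (2, 1, 2, 70),
]
print(moving_average_forecast(rows))
```

Any machine learning model you train for this case study should outperform this kind of baseline on held-out weeks. If it does not, the extra complexity is not paying off.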

Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon is always ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products to them before they even search for a product; these models use collaborative filtering. Amazon uses data on 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through recommendation-based systems (RBS).

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.
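To make the idea of collaborative filtering concrete, here is a minimal item-based sketch: two items are considered similar when the same users bought both, and a user is recommended the items most similar to what they already own. The users, items, and purchases are toy data, and this is not a description of Amazon's production system.

```python
from math import sqrt

# purchases[user] = set of items bought (toy data, hypothetical)
purchases = {
    "ann": {"book", "lamp", "pen"},
    "bob": {"book", "lamp"},
    "cat": {"book", "pen"},
    "dan": {"lamp", "mug"},
}


def item_similarity(a, b, purchases):
    """Cosine similarity between two items over binary purchase vectors."""
    buyers_a = {u for u, items in purchases.items() if a in items}
    buyers_b = {u for u, items in purchases.items() if b in items}
    if not buyers_a or not buyers_b:
        return 0.0
    return len(buyers_a & buyers_b) / sqrt(len(buyers_a) * len(buyers_b))


def recommend(user, purchases, top_n=2):
    """Rank items the user does not own by similarity to items they do own."""
    owned = purchases[user]
    all_items = set().union(*purchases.values())
    scores = {
        candidate: sum(item_similarity(candidate, have, purchases) for have in owned)
        for candidate in all_items - owned
    }
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda item: (-scores[item], item))[:top_n]


print(recommend("bob", purchases))
```

Bob owns a book and a lamp. The pen is ranked above the mug because two of the three book buyers also bought a pen, while the mug was only ever bought alongside a lamp.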

ii) Retail Price Optimization

Amazon product prices are optimized using a predictive model that determines the best price so that users do not refuse to buy based on price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price for a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.

Check Out this Retail Price Optimization Project to build a Dynamic Pricing Model.
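The trade-off between margin and purchase likelihood can be sketched as an expected-profit search. The logistic demand curve, its `reference_price` and `sensitivity` parameters, and the candidate prices below are all assumptions made for illustration; a real pricing model would estimate purchase probability from data.

```python
from math import exp


def purchase_probability(price, reference_price=20.0, sensitivity=0.3):
    """Assumed logistic demand curve: probability falls as price rises."""
    return 1.0 / (1.0 + exp(sensitivity * (price - reference_price)))


def expected_profit(price, unit_cost):
    """Margin per sale times the chance that the sale happens."""
    return (price - unit_cost) * purchase_probability(price)


def best_price(candidates, unit_cost):
    """Pick the candidate price with the highest expected profit."""
    return max(candidates, key=lambda p: expected_profit(p, unit_cost))


prices = [12, 15, 18, 21, 24, 27]
for p in prices:
    print(p, round(expected_profit(p, unit_cost=10.0), 2))
print("best:", best_price(prices, unit_cost=10.0))
```

Low prices sell often but earn little per sale, and high prices earn more per sale but rarely sell, so expected profit peaks somewhere in between. Under these assumed parameters the peak is at a price of 18.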

iii) Fraud Detection

Being a significant eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order. It uses machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has helped the company restrict clients with an excessive number of product returns.

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.

Let us explore data analytics case study examples in the entertainment industry.

Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Currently, Netflix has over 208 million paid subscribers worldwide, and with streaming supported on thousands of smart devices, around 3 billion hours are watched on Netflix every month. The secret to this massive growth and popularity is Netflix's advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. Netflix collects data on over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1,300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. The data that Netflix collects from its users includes viewing time, platform searches for keywords, and metadata related to content abandonment, such as pause time, rewinds, and rewatches. Using this data, Netflix can predict what a viewer is likely to watch and give a personalized watchlist to a user. Some of the algorithms used by the Netflix recommendation system are Personalized Video Ranking, the Trending Now ranker, and the Continue Watching ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows might seem like huge risks, but they were backed by data analytics that assured Netflix they would succeed with its audience. Data analytics is helping Netflix come up with content that its viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics helps come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.

In a world where purchasing music is a thing of the past and streaming is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, Amazon Music, etc. The success of Spotify has mainly depended on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of data analytics case studies used by Spotify to provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses a bandit-based model called Bart (short for "Bandits for Recommendations as Treatments") to generate music recommendations for its listeners in real time. Bart ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. A patent granted to Spotify describes an AI application that identifies a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.
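The 30-second rule mentioned above is easy to picture as a preprocessing step. The sketch below builds a simple play-count profile that ignores short listens; the `(track_id, seconds_played)` log format is hypothetical, and this is only the filtering step, not the recommendation model itself.

```python
from collections import Counter

MIN_LISTEN_SECONDS = 30  # listens shorter than this are ignored


def taste_profile(listens):
    """listens: iterable of (track_id, seconds_played).

    Returns play counts, counting only listens of at least 30 seconds.
    """
    return Counter(
        track for track, seconds in listens if seconds >= MIN_LISTEN_SECONDS
    )


listens = [("a", 45), ("b", 10), ("a", 200), ("c", 30), ("b", 29)]
print(taste_profile(listens))
```

Track "b" never appears in the profile because the user skipped it both times before the 30-second mark, which is treated as a sign that the track did not interest them.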

Spotify creates daily playlists for its listeners called 'Daily Mixes,' based on their taste profiles. These contain songs the user has added to their playlists or songs by artists that the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that might improve the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs from artists that the listener follows or has liked before.

ii) Targeted Marketing through Customer Segmentation

Beyond enhancing personalized song recommendations, Spotify uses this massive dataset of user data for targeted ad campaigns and personalized service recommendations. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help Spotify create ad campaigns for a specific target audience. One of its well-known ad campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate songs and tracks, which helps develop better playlists and recommendations for its users. These models allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks, complementing collaborative filtering. Spotify also uses NLP (natural language processing) to scan articles and blogs and analyze the words used to describe songs and artists. These analytical insights help group and identify similar artists and songs, which can then be leveraged to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1. You can use this dataset of Spotify metadata to classify songs based on artists, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, along with principal component analysis for dimensionality reduction, to generate valuable insights from the dataset.

Below you will find case studies for data analytics in the travel and tourism industry.

Airbnb was born in 2007 in San Francisco and has since grown to 4 million Hosts and 5.6 million listings worldwide, welcoming more than 1 billion guest arrivals in almost every country across the globe. Airbnb is active in every country on the planet except for Iran, Sudan, Syria, and North Korea. That is around 97.95% of the world. Treating data as the voice of its customers, Airbnb uses the large volume of customer reviews and host inputs to understand trends across communities and rate user experiences, and uses these analytics to make informed decisions and build a better business model. The data scientists at Airbnb are developing exciting new solutions to boost the business and find the best match between its customers and hosts. Airbnb data servers serve approximately 10 million requests a day and process around one million search queries. This lets Airbnb offer personalized services by creating a perfect match between guests and hosts for a supreme customer experience.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to find homes based on their proximity to the searched location and on previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays and area information into account to find a perfect match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users’ needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give a direct insight into the experience, and star ratings alone are not a good way to understand it quantitatively. Hence, Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

Airbnb hosts use the service as a source of supplementary income. The vacation homes and guest houses rented to customers raise local community earnings, as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times as much money as hotel guests. These earnings have a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of listings and help hosts set a competitive and optimal price. The overall profitability of an Airbnb host depends on factors like the time invested by the host and responsiveness to changing demand across seasons. The factors that impact real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.

Here is a Price Prediction Project to help you understand the concept of predictive analysis, which is common in case studies for data analytics.

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers, and completed 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber has been constantly exploring futuristic technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more drivers to come online and meet the demand from passengers. When prices increase, both the driver and the passenger are informed about the surge. Uber uses a patented predictive model for price surging called 'Geosurge.' It is based on the demand for rides and the location.
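A toy version of demand-based surge pricing is shown below. It is not Uber's Geosurge model, whose details are not described here; the demand-to-supply ratio rule and the `cap` parameter are assumptions chosen to illustrate the idea that prices rise when requests outnumber available drivers in an area.

```python
def surge_multiplier(ride_requests, available_drivers, cap=3.0):
    """Return a fare multiplier between 1.0 and `cap` for one area.

    Assumed rule: multiplier equals requests per available driver,
    never below 1.0 (no discount) and never above the cap.
    """
    if available_drivers == 0:
        return cap  # no supply at all: charge the maximum
    ratio = ride_requests / available_drivers
    return round(min(max(ratio, 1.0), cap), 2)


print(surge_multiplier(50, 100))    # supply exceeds demand
print(surge_multiplier(150, 100))   # demand exceeds supply
print(surge_multiplier(1000, 100))  # extreme demand, capped
```

In a fuller model, the request count would come from a demand forecast for each location and time window rather than from a live count.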

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and users. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is built on Uber's machine learning platform, Michelangelo, to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet customer demand for cabs could lead users to opt for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level the user achieves, the better the perks. Uber also provides personalized destination suggestions based on the user's history and frequently traveled destinations.

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis. You can look at this project, which uses time series forecasting and clustering on a dataset containing geospatial data to forecast customer demand for Ola rides.

7) LinkedIn

LinkedIn is the largest professional social networking site with nearly 800 million members in more than 200 countries worldwide. Almost 40% of the users access LinkedIn daily, clocking around 1 billion interactions per month. The data science team at LinkedIn works with this massive pool of data to generate insights to build strategies, apply algorithms and statistical inferences to optimize engineering solutions, and help the company achieve its goals. Here are some of the real world data science projects at LinkedIn:

i) Search Algorithms and Recommendation Systems in LinkedIn Recruiter

LinkedIn Recruiter helps recruiters build and manage a talent pool to optimize the chances of hiring candidates successfully. This sophisticated product works on search and recommendation engines. LinkedIn Recruiter handles complex queries and filters on a large, constantly growing dataset. The results delivered have to be relevant and specific. The initial search model was based on linear regression but was eventually upgraded to gradient boosted decision trees to capture non-linear correlations in the dataset. In addition to these models, LinkedIn Recruiter also uses the Generalized Linear Mixed (GLMix) model to improve the results of prediction problems and give personalized results.

ii) Recommendation Systems Personalized for News Feed

The LinkedIn news feed is the heart and soul of the professional community. A member's newsfeed is a place to discover conversations among connections, career news, posts, suggestions, photos, and videos. Every time a member visits LinkedIn, machine learning algorithms identify the best exchanges to be displayed on the feed by sorting through posts and ranking the most relevant results on top. The algorithms help LinkedIn understand member preferences and help provide personalized news feeds. The algorithms used include logistic regression, gradient boosted decision trees and neural networks for recommendation systems.

iii) CNNs to Detect Inappropriate Content

Providing a professional space where people can trust one another and express themselves in a safe community has been a critical goal at LinkedIn. LinkedIn has invested heavily in building solutions to detect fake accounts and abusive behavior on its platform. Any form of spam, harassment, or inappropriate content is immediately flagged and taken down. These can range from profanity to advertisements for illegal services. LinkedIn uses a machine learning model based on convolutional neural networks. This classifier is trained on a dataset containing accounts labeled as either "inappropriate" or "appropriate." The inappropriate list consists of accounts whose content contains "blocklisted" phrases or words, plus a small portion of manually reviewed accounts reported by the user community.
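The way such a training set gets its labels can be sketched in a few lines. The example below only covers the labeling step described above (blocklisted phrases plus manual review), not the CNN classifier that is trained on the result. The phrases in `BLOCKLIST` and the function name are hypothetical.

```python
import re

BLOCKLIST = {"free money", "cheap pills"}  # hypothetical blocklisted phrases


def label_account(profile_text, manually_flagged=False):
    """Label an account for the training set.

    An account is "inappropriate" if a reviewer flagged it or if its text
    contains a blocklisted phrase; otherwise it is "appropriate".
    """
    # Lowercase and collapse whitespace so spacing tricks do not hide phrases.
    text = re.sub(r"\s+", " ", profile_text.lower())
    if manually_flagged or any(phrase in text for phrase in BLOCKLIST):
        return "inappropriate"
    return "appropriate"


print(label_account("Get FREE   money now"))
print(label_account("Software engineer at Acme"))
```

Labels produced this way are noisy, since a phrase match is only a proxy for intent. That is one reason a learned classifier is trained on top of them rather than using the blocklist directly at serving time.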

Here is a Text Classification Project to help you understand NLP basics for text classification. You can find a news recommendation system dataset to help you build a personalized news recommender system. You can also use this dataset to build a classifier using logistic regression, Naive Bayes, or Neural networks to classify toxic comments.

Pfizer is a multinational pharmaceutical company headquartered in New York, USA. It is one of the largest pharmaceutical companies globally, known for developing a wide range of medicines and vaccines in disciplines like immunology, oncology, cardiology, and neurology. Pfizer became a household name in 2020 when its COVID-19 vaccine was the first to be authorized by the FDA. In early November 2021, the CDC recommended the Pfizer vaccine for kids aged 5 to 11. Pfizer has been using machine learning and artificial intelligence to develop drugs and streamline trials, which played a massive role in developing and deploying the COVID-19 vaccine. Here are a few data analytics case studies by Pfizer:

i) Identifying Patients for Clinical Trials

Artificial intelligence and machine learning are used to streamline and optimize clinical trials to increase their efficiency. Natural language processing and exploratory data analysis of patient records can help identify suitable patients for clinical trials, including patients with distinct symptoms. They can also help examine interactions of potential trial members' specific biomarkers and predict drug interactions and side effects, which helps avoid complications. Pfizer's AI implementation helped rapidly identify signals within the noise of millions of data points across its 44,000-candidate COVID-19 clinical trial.

ii) Supply Chain and Manufacturing

Data science and machine learning techniques help pharmaceutical companies better forecast demand for vaccines and drugs and distribute them efficiently. Machine learning models can help identify efficient supply systems by automating and optimizing the production steps. These will help supply drugs customized to small pools of patients in specific gene pools. Pfizer uses machine learning to predict the maintenance cost of the equipment it uses. Predictive maintenance using AI is the next big step for pharmaceutical companies to reduce costs.

iii) Drug Development

Computer simulations of proteins, tests of their interactions, and yield analysis help researchers develop and test drugs more efficiently. In 2016, Watson Health and Pfizer announced a collaboration to utilize IBM Watson for Drug Discovery to help accelerate Pfizer's research in immuno-oncology, an approach to cancer treatment that uses the body's immune system to help fight cancer. Deep learning models have been used recently for bioactivity and synthesis prediction for drugs and vaccines, in addition to molecular design. Deep learning has been a revolutionary technique for drug discovery as it factors in everything from new applications of medications to possible toxic reactions, which can save millions in drug trials.

You can create a machine learning model to predict molecular activity to help design medicine using this dataset. You may build a CNN or a deep neural network for this data analyst case study project.

9) Shell Data Analyst Case Study Project

Shell is a global group of energy and petrochemical companies with over 80,000 employees in around 70 countries. Shell uses advanced technologies and innovations to help build a sustainable energy future. Shell is going through a significant transition, aiming to become a clean energy company by 2050 as the world needs more and cleaner energy solutions. This requires substantial changes in the way energy is used. Digital technologies, including AI and machine learning, play an essential role in this transformation. Their applications include efficient exploration and energy production, more reliable manufacturing, more nimble trading, and a personalized customer experience. Using AI in various phases of the organization will help Shell achieve this goal and stay competitive in the market. Here are a few data analytics case studies in the petrochemical industry:

i) Precision Drilling

Shell is involved across the oil and gas supply chain, ranging from mining hydrocarbons to refining the fuel to retailing it to customers. Recently, Shell has adopted reinforcement learning to control the drilling equipment used in mining. Reinforcement learning works on a reward-based system based on the outcome of the AI model's actions. The algorithm is designed to guide the drills as they move through the subsurface, based on historical data from drilling records. This data includes information such as the size of drill bits, temperatures, pressures, and knowledge of seismic activity. The model helps the human operator understand the environment better, leading to better and faster results with minimal damage to the machinery used.

ii) Efficient Charging Terminals

Due to climate change, governments have encouraged people to switch to electric vehicles to reduce carbon dioxide emissions. However, the lack of public charging terminals has deterred people from switching to electric cars. Shell uses AI to monitor and predict the demand for terminals to provide efficient supply. Multiple vehicles charging from a single terminal may create a considerable grid load, and predictions of demand can help make this process more efficient.

iii) Monitoring Service and Charging Stations

Another Shell initiative, trialed in Thailand and Singapore, is the use of computer vision cameras that watch out for potentially hazardous activities, like lighting cigarettes in the vicinity of the pumps while refueling. The model is built to process the content of the captured images and label and classify it. The algorithm can then alert the staff and hence reduce the risk of fires. The model can be further trained to detect rash driving or thefts in the future.

Here is a project to help you understand multiclass image classification. You can use the Hourly Energy Consumption Dataset to build an energy consumption prediction model. You can use time series with XGBoost to develop your model.

10) Zomato Case Study on Data Analytics

Zomato was founded in 2010 and is currently one of the most well-known food tech companies. Zomato offers services like restaurant discovery, home delivery, online table reservation, online payments for dining, etc. Zomato partners with restaurants to provide tools to acquire more customers while also providing delivery services and easy procurement of ingredients and kitchen supplies. Currently, Zomato has over 2 lakh (200,000) restaurant partners and around 1 lakh (100,000) delivery partners. Zomato has closed over ten crore (100 million) delivery orders to date. Zomato uses ML and AI to boost its business growth, drawing on the massive amount of data collected over the years from food orders and user consumption patterns. Here are a few examples of data analyst case study projects developed by the data scientists at Zomato:

i) Personalized Recommendation System for Homepage

Zomato uses data analytics to create personalized homepages for its users. Zomato uses data science to provide order personalization, like giving recommendations to the customers for specific cuisines, locations, prices, brands, etc. Restaurant recommendations are made based on a customer's past purchases, browsing history, and what other similar customers in the vicinity are ordering. This personalized recommendation system has led to a 15% improvement in order conversions and click-through rates for Zomato.

You can use the Restaurant Recommendation Dataset to build a restaurant recommendation system to predict what restaurants customers are most likely to order from, given the customer location, restaurant information, and customer order history.

ii) Analyzing Customer Sentiment

Zomato uses natural language processing and machine learning to understand customer sentiments using social media posts and customer reviews. These help the company gauge the inclination of its customer base towards the brand. Deep learning models analyze the sentiments of various brand mentions on social networking sites like Twitter, Instagram, LinkedIn, and Facebook. These analytics give insights to the company, which help build the brand and understand the target audience.

iii) Predicting Food Preparation Time (FPT)

Food preparation time is an essential variable in the estimated delivery time of an order placed by a customer using Zomato. The food preparation time depends on numerous factors like the number of dishes ordered, time of the day, footfall in the restaurant, day of the week, etc. Accurate prediction of the food preparation time leads to a better prediction of the estimated delivery time, which makes delivery partners less likely to breach it. Zomato uses a bidirectional LSTM-based deep learning model that considers all these features and predicts the food preparation time for each order in real time.

Data scientists are companies' secret weapons when analyzing customer sentiments and behavior and leveraging it to drive conversion, loyalty, and profits. These 10 data science case studies projects with examples and solutions show you how various organizations use data science technologies to succeed and be at the top of their field! To summarize, Data Science has not only accelerated the performance of companies but has also made it possible to manage & sustain their performance with ease.

FAQs on Data Analysis Case Studies

A case study in data science is an in-depth analysis of a real-world problem using data-driven approaches. It involves collecting, cleaning, and analyzing data to extract insights and solve challenges, offering practical insights into how data science techniques can address complex issues across various industries.

To create a data science case study, identify a relevant problem, define objectives, and gather suitable data. Clean and preprocess data, perform exploratory data analysis, and apply appropriate algorithms for analysis. Summarize findings, visualize results, and provide actionable recommendations, showcasing the problem-solving potential of data science techniques.

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

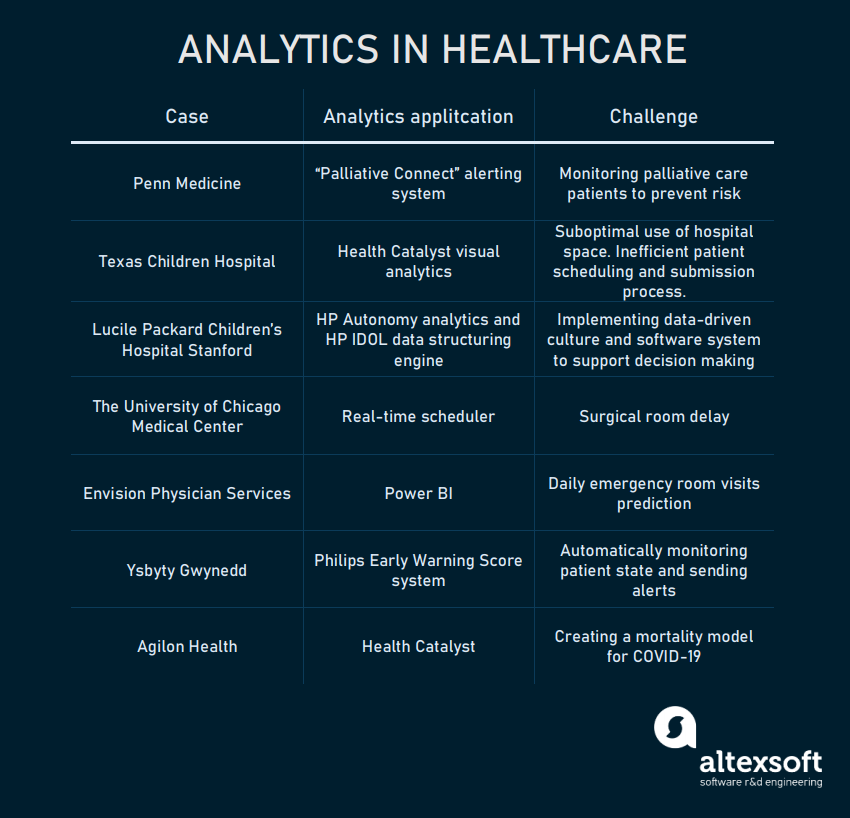

Top 20 Analytics Case Studies in 2024

Although the potential of Big Data and business intelligence is recognized by organizations, Gartner analyst Nick Heudecker says that the failure rate of analytics projects is close to 85%. Uncovering the power of analytics improves business operations, reduces costs, enhances decision-making, and enables the launch of more personalized products.

In this article, our research covers:

How to measure analytics success?

What are some analytics case studies?

According to Gartner CDO Survey, the top 3 critical success factors of analytics projects are:

- Creation of a data-driven culture within the organization,

- Data integration and data skills training across the organization,

- And implementation of a data management and analytics strategy.

The success of the process of analytics depends on asking the right question. It requires an understanding of the appropriate data required for each goal to be achieved. We’ve listed 20 successful analytics applications/case studies from different industries.

During our research, we found that partnering with an analytics consultant helps organizations boost their success when their tech team lacks certain data skills.

*Vendors have not shared the client name

For more on analytics

If your organization is willing to implement an analytics solution but doesn’t know where to start, here are some of the articles we’ve written before that can help you learn more:

- AI in analytics: How AI is shaping analytics

- Edge Analytics in 2022: What it is, Why it matters & Use Cases

- Application Analytics: Tracking KPIs that lead to success

Finally, if you believe that your business would benefit from adopting an analytics solution, we have data-driven lists of vendors on our analytics hub and analytics platforms.

Cem has been the principal analyst at AIMultiple since 2017. AIMultiple informs hundreds of thousands of businesses (as per similarWeb) including 60% of Fortune 500 every month. Cem's work has been cited by leading global publications including Business Insider, Forbes, Washington Post, global firms like Deloitte, HPE, NGOs like World Economic Forum and supranational organizations like European Commission. You can see more reputable companies and media that referenced AIMultiple. Throughout his career, Cem served as a tech consultant, tech buyer and tech entrepreneur. He advised businesses on their enterprise software, automation, cloud, AI / ML and other technology related decisions at McKinsey & Company and Altman Solon for more than a decade. He also published a McKinsey report on digitalization. He led technology strategy and procurement of a telco while reporting to the CEO. He has also led commercial growth of deep tech company Hypatos that reached a 7 digit annual recurring revenue and a 9 digit valuation from 0 within 2 years. Cem's work in Hypatos was covered by leading technology publications like TechCrunch and Business Insider. Cem regularly speaks at international technology conferences. He graduated from Bogazici University as a computer engineer and holds an MBA from Columbia Business School.

Top 10 real-world data science case studies.

Aditya Sharma

Aditya is a content writer with 5+ years of experience writing for various industries including Marketing, SaaS, B2B, IT, and Edtech among others. You can find him watching anime or playing games when he’s not writing.

Frequently Asked Questions

Real-world data science case studies differ significantly from academic examples. While academic exercises often feature clean, well-structured data and simplified scenarios, real-world projects tackle messy, diverse data sources with practical constraints and genuine business objectives. These case studies reflect the complexities data scientists face when translating data into actionable insights in the corporate world.

Real-world data science projects come with common challenges. Data quality issues, including missing or inaccurate data, can hinder analysis. Domain expertise gaps may result in misinterpretation of results. Resource constraints might limit project scope or access to necessary tools and talent. Ethical considerations, like privacy and bias, demand careful handling.

Lastly, as data and business needs evolve, data science projects must adapt and stay relevant, posing an ongoing challenge.

Real-world data science case studies play a crucial role in helping companies make informed decisions. By analyzing their own data, businesses gain valuable insights into customer behavior, market trends, and operational efficiencies.

These insights empower data-driven strategies, aiding in more effective resource allocation, product development, and marketing efforts. Ultimately, case studies bridge the gap between data science and business decision-making, enhancing a company's ability to thrive in a competitive landscape.

Key takeaways from these case studies for organizations include the importance of cultivating a data-driven culture that values evidence-based decision-making. Investing in robust data infrastructure is essential to support data initiatives. Collaborating closely between data scientists and domain experts ensures that insights align with business goals.

Finally, continuous monitoring and refinement of data solutions are critical for maintaining relevance and effectiveness in a dynamic business environment. Embracing these principles can lead to tangible benefits and sustainable success in real-world data science endeavors.

Data science is a powerful driver of innovation and problem-solving across diverse industries. By harnessing data, organizations can uncover hidden patterns, automate repetitive tasks, optimize operations, and make informed decisions.

In healthcare, for example, data-driven diagnostics and treatment plans improve patient outcomes. In finance, predictive analytics enhances risk management. In transportation, route optimization reduces costs and emissions. Data science empowers industries to innovate and solve complex challenges in ways that were previously unimaginable.

Data Analytics Case Study Guide (Updated for 2024)

What are data analytics case study interviews?

When you’re trying to land a data analyst job, the last hurdle standing in your way is often the data analytics case study interview.

One reason they’re so challenging is that case studies don’t typically have a right or wrong answer.

Instead, case study interviews require you to come up with a hypothesis for an analytics question and then produce data to support or validate your hypothesis. In other words, it’s not just about your technical skills; you’re also being tested on creative problem-solving and your ability to communicate with stakeholders.

This article provides an overview of how to answer data analytics case study interview questions. You can find an in-depth course in the data analytics learning path.

How to Solve Data Analytics Case Questions

With data analyst case questions, you will need to answer two key questions:

- What metrics should I propose?

- How do I write a SQL query to get the metrics I need?

In short, to ace a data analytics case interview, you not only need to brush up on case questions, but you also should be adept at writing all types of SQL queries and have strong data sense.

These questions are especially challenging to answer if you don’t have a framework or know how to answer them. To help you prepare, we created this step-by-step guide to answering data analytics case questions.

We show you how to use a framework to answer case questions, provide example analytics questions, and help you understand the difference between analytics case studies and product metrics case studies.

Data Analytics Cases vs Product Metrics Questions

Product case questions sometimes get lumped in with data analytics cases.

Ultimately, the type of case question you are asked will depend on the role. For example, product analysts will likely face more product-oriented questions.

Product metrics cases tend to focus on a hypothetical situation. You might be asked to:

Investigate Metrics - One of the most common types will ask you to investigate a metric, usually one that’s going up or down. For example, “Why are Facebook friend requests falling by 10 percent?”

Measure Product/Feature Success - A lot of analytics cases revolve around the measurement of product success and feature changes. For example, “We want to add X feature to product Y. What metrics would you track to make sure that’s a good idea?”

With product data cases, the key difference is that you may or may not be required to write the SQL query to find the metric.

Instead, these interviews are more theoretical and are designed to assess your product sense and ability to think about analytics problems from a product perspective. Product metrics questions may also show up in the data analyst interview, but likely only for product data analyst roles.

Data Analytics Case Study Question: Sample Solution

Let’s start with an example data analytics case question:

You’re given a table that represents search results from searches on Facebook. The query column is the search term, the position column represents each position the search result came in, and the rating column represents the human rating from 1 to 5, where 5 is high relevance, and 1 is low relevance.

Each row in the search_events table represents a single search, with the has_clicked column representing if a user clicked on a result or not. We have a hypothesis that the CTR is dependent on the search result rating.

Write a query to return data to support or disprove this hypothesis.

search_results table:

search_events table

Step 1: With Data Analytics Case Studies, Start by Making Assumptions

Hint: Start by making assumptions and thinking out loud. With this question, focus on coming up with a metric to support the hypothesis. If the question is unclear or if you think you need more information, be sure to ask.

Answer. The hypothesis is that CTR is dependent on search result rating. Therefore, we want to focus on the CTR metric, and we can assume:

- If CTR is high when search result ratings are high, and CTR is low when the search result ratings are low, then the hypothesis is correct.

- If CTR is low when the search ratings are high, or there is no proven correlation between the two, then our hypothesis is not proven.

Step 2: Provide a Solution for the Case Question

Hint: Walk the interviewer through your reasoning. Talking about the decisions you make and why you’re making them shows off your problem-solving approach.

Answer. One way we can investigate the hypothesis is to look at the results split into different search rating buckets. For example, if we measure the CTR for results rated at 1, then those rated at 2, and so on, we can identify if an increase in rating is correlated with an increase in CTR.

First, I’d write a query to get the number of results for each query in each bucket. We want to look at the distribution of results that are less than a rating threshold, which will help us see the relationship between search rating and CTR.

This CTE aggregates the number of results that are less than a certain rating threshold. Later, we can use this to see the percentage that are in each bucket. If we re-join to the search_events table, we can calculate the CTR by then grouping by each bucket.

Step 3: Use Analysis to Back Up Your Solution

Hint: Be prepared to justify your solution. Interviewers will follow up with questions about your reasoning, and ask why you make certain assumptions.

Answer. By using the CASE WHEN statement, I calculated each ratings bucket by checking to see if all the search results were less than 1, 2, or 3 by subtracting the total from the number within the bucket and seeing if it equates to 0.

I did that to get away from averages in our bucketing system. Outliers would make it more difficult to measure the effect of bad ratings. For example, if a query had a 1 rating and another had a 5 rating, that would equate to an average of 3. Whereas in my solution, a query with all of the results under 1, 2, or 3 lets us know that it actually has bad ratings.
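
The query this answer walks through is not reproduced here, so the following is only a sketch of the bucketing idea under stated assumptions. The table schemas, the sample rows, and the use of `<=` thresholds are hypothetical. It runs the SQL through Python's built-in `sqlite3` module so the example is self-contained.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Assumed schemas and sample rows; the original tables are not shown.
    CREATE TABLE search_results (query TEXT, position INTEGER, rating INTEGER);
    CREATE TABLE search_events (search_id INTEGER, query TEXT, has_clicked INTEGER);

    INSERT INTO search_results VALUES
        ('dog', 1, 1), ('dog', 2, 1),
        ('cat', 1, 2), ('cat', 2, 1),
        ('bird', 1, 5), ('bird', 2, 4);
    INSERT INTO search_events VALUES
        (1, 'dog', 0), (2, 'dog', 0),
        (3, 'cat', 1), (4, 'cat', 0),
        (5, 'bird', 1), (6, 'bird', 1);
""")

QUERY = """
WITH ratings AS (
    -- Per query: how many results fall at or under each rating threshold.
    SELECT
        query,
        COUNT(*) AS total,
        SUM(CASE WHEN rating <= 1 THEN 1 ELSE 0 END) AS rated_1,
        SUM(CASE WHEN rating <= 2 THEN 1 ELSE 0 END) AS rated_2,
        SUM(CASE WHEN rating <= 3 THEN 1 ELSE 0 END) AS rated_3
    FROM search_results
    GROUP BY query
),
buckets AS (
    -- A query lands in a bucket only if ALL of its results are under the
    -- threshold, i.e. total minus the count under the threshold is 0.
    SELECT
        query,
        CASE
            WHEN total - rated_1 = 0 THEN 'all <= 1'
            WHEN total - rated_2 = 0 THEN 'all <= 2'
            WHEN total - rated_3 = 0 THEN 'all <= 3'
            ELSE 'some >= 4'
        END AS bucket
    FROM ratings
)
SELECT b.bucket, AVG(e.has_clicked) AS ctr, COUNT(*) AS searches
FROM search_events AS e
JOIN buckets AS b ON b.query = e.query
GROUP BY b.bucket
ORDER BY b.bucket
"""

rows = conn.execute(QUERY).fetchall()
for bucket, ctr, searches in rows:
    print(f"{bucket}: CTR={ctr:.2f} over {searches} searches")
```

If CTR rises from the lowest bucket to the highest, as it does in this toy data, that supports the hypothesis; a flat or reversed pattern would not.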

Product Data Case Question: Sample Solution

In product metrics interviews, you’ll likely be asked about analytics, but the discussion will be more theoretical. You’ll propose a solution to a problem, and supply the metrics you’ll use to investigate or solve it. You may or may not be required to write a SQL query to get those metrics.

We’ll start with an example product metrics case study question:

Let’s say you work for a social media company that has just done a launch in a new city. Looking at weekly metrics, you see a slow decrease in the average number of comments per user from January to March in this city.

The company has been consistently growing new users in the city from January to March.

What are some reasons why the average number of comments per user would be decreasing and what metrics would you look into?

Step 1: Ask Clarifying Questions Specific to the Case

Hint: This question is very vague. It’s all hypothetical, so we don’t know very much about users, what the product is, and how people might be interacting. Be sure you ask questions upfront about the product.

Answer: Before I jump into an answer, I’d like to ask a few questions:

- Who uses this social network? How do they interact with each other?

- Have there been any performance issues that might be causing the problem?

- What are the goals of this particular launch?

- Have there been any changes to the comment features in recent weeks?

For the sake of this example, let’s say we learn that it’s a social network similar to Facebook with a young audience, and the goals of the launch are to grow the user base. Also, there have been no performance issues and the commenting feature hasn’t been changed since launch.

Step 2: Use the Case Question to Make Assumptions

Hint: Look for clues in the question. For example, this case gives you a metric, “average number of comments per user.” Consider if the clue might be helpful in your solution. But be careful, sometimes questions are designed to throw you off track.

Answer: From the question, we can hypothesize a little bit. For example, we know that user count is increasing linearly. That means two things:

- The decreasing comments issue isn’t a result of a declining user base.

- The cause isn’t users abandoning the platform.

We can also model out the data to help us get a better picture of the average number of comments per user metric:

- January: 10000 users, 30000 comments, 3 comments/user

- February: 20000 users, 50000 comments, 2.5 comments/user

- March: 30000 users, 60000 comments, 2 comments/user

One thing to note: Although this is an interesting metric, I’m not sure if it will help us solve this question. For one, average comments per user doesn’t account for churn. We might assume that during the three-month period users are churning off the platform. Let’s say the churn rate is 25% in January, 20% in February and 15% in March.
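
To make the modelled figures concrete, here is a small sketch that recomputes the average from the user and comment counts listed above. The variable and function names are illustrative only.

```python
# Modelled figures from the example: (month, users, comments).
MONTHS = [
    ("January", 10_000, 30_000),
    ("February", 20_000, 50_000),
    ("March", 30_000, 60_000),
]


def comments_per_user(users, comments):
    """Average number of comments per user for one month."""
    return comments / users


averages = {month: comments_per_user(users, comments)
            for month, users, comments in MONTHS}

for month, avg in averages.items():
    print(f"{month}: {avg:.1f} comments/user")
```

Total comments grow every month, yet the average falls because users are growing faster than comments.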

Step 3: Make a Hypothesis About the Data

Hint: Don’t worry too much about making a correct hypothesis. Instead, interviewers want to get a sense of your product intuition and that you’re on the right track. Also, be prepared to measure your hypothesis.

Answer. I would say that average comments per user isn’t a great metric to use, because it doesn’t reveal insights into what’s really causing this issue.

That’s because it doesn’t account for active users, which are the users who are actually commenting. A better metric to investigate would be retained users and monthly active users.

What I suspect is causing the issue is that active users are commenting frequently and are responsible for the increase in comments month-to-month. New users, on the other hand, aren’t as engaged and aren’t commenting as often.

Step 4: Provide Metrics and Data Analysis

Hint: Within your solution, include key metrics that you’d like to investigate that will help you measure success.

Answer: I’d say there are a few ways we could investigate the cause of this problem, but the one I’d be most interested in would be the engagement of monthly active users.

If the growth in comments is coming from active users, that would help us understand how we’re doing at retaining users. Plus, it will also show if new users are less engaged and commenting less frequently.

One way that we could dig into this would be to segment users by their onboarding date, which would help us to visualize engagement and see how engaged some of our longest-retained users are.

If engagement of new users is the issue, that will give us some options in terms of strategies for addressing the problem. For example, we could test new onboarding or commenting features designed to generate engagement.
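
A minimal sketch of the segmentation idea, assuming a hypothetical export with one row per user (onboarding month and comments made in March). The records and the `comments_by_cohort` helper are made up for illustration.

```python
from collections import defaultdict

# Hypothetical records: (user_id, onboarding_month, comments_in_march).
USERS = [
    ("u1", "January", 9),
    ("u2", "January", 7),
    ("u3", "February", 3),
    ("u4", "February", 1),
    ("u5", "March", 0),
    ("u6", "March", 1),
]


def comments_by_cohort(users):
    """Average comments per user, grouped by onboarding month."""
    totals = defaultdict(lambda: [0, 0])  # cohort -> [comment sum, user count]
    for _user_id, cohort, comments in users:
        totals[cohort][0] += comments
        totals[cohort][1] += 1
    return {cohort: total / count for cohort, (total, count) in totals.items()}


cohort_averages = comments_by_cohort(USERS)
for cohort, avg in cohort_averages.items():
    print(f"{cohort} cohort: {avg:.1f} comments/user")
```

In this toy data the earliest cohort comments far more than the newest one, which is the pattern the hypothesis predicts.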

Step 5: Propose a Solution for the Case Question

Hint: In the majority of cases, your initial assumptions might be incorrect, or the interviewer might throw you a curveball. Be prepared to make new hypotheses or discuss the pitfalls of your analysis.

Answer. If the cause wasn’t due to a lack of engagement among new users, then I’d want to investigate active users. One potential cause would be active users commenting less. In that case, we’d know that our earliest users were churning out, and that engagement among new users was potentially growing.

Again, I think we’d want to focus on user engagement since the onboarding date. That would help us understand if we were seeing higher levels of churn among active users, and we could start to identify some solutions there.

Tip: Use a Framework to Solve Data Analytics Case Questions

Analytics case questions can be challenging, but they’re much more challenging if you don’t use a framework. Without a framework, it’s easier to get lost in your answer, to get stuck, and really lose the confidence of your interviewer. Find helpful frameworks for data analytics questions in our data analytics learning path and our product metrics learning path.

Once you have the framework down, what’s the best way to practice? Mock interviews with our coaches are very effective, as you’ll get feedback and helpful tips as you answer. You can also learn a lot by practicing P2P mock interviews with other Interview Query students. No data analytics background? Check out how to become a data analyst without a degree.

Finally, if you’re looking for sample data analytics case questions and other types of interview questions, see our guide on the top data analyst interview questions.

Data Analytics Case Studies that will inspire you.

- June 16, 2023 July 21, 2023

As an explorer in the world of big data analytics, I’ve witnessed firsthand how the strategic, and sometimes even tactical, application of data analytics can shape industries, transform businesses, and revolutionize the way we work. Raw data on its own can be dry, however, which is why case studies, real-world narratives of businesses from diverse industries (manufacturing, retail, finance, logistics, telecom, and insurance), are so much more interesting.

All data analytics case studies are a testament to the transformative power of data. For example, Siemens, a global industrial giant, has leveraged data analytics to increase production efficiency, reducing production time by 20%. Retail behemoth Amazon has harnessed data analytics to personalize customer shopping experiences, while Bank of America uses it to identify fraudulent transactions, cutting its fraud losses by half.

You will agree that data analytics is a transformative force reshaping the way businesses operate, make decisions, and interact with their customers. In our company, we often liken it to a goldmine. The raw data, much like the rough ore, may not appear valuable at first glance. However, with the right tools, techniques, and a touch of analytical magic, we can extract precious insights hidden within, just as miners draw valuable gold from the earth.

It takes a huge amount of effort to unravel the immense potential of data analytics to foster innovation, enhance decision-making, and improve customer experiences. In the data realm, the possibilities are as vast as the data itself.

How Data Analytics is Revolutionizing the Manufacturing Industry

Around 80% of companies believe that data analytics will be key to their success in the near future, and 65% of them are already seeing positive changes from using it. One of the most substantial benefits is cost savings: manufacturers who use data analytics save about $1.2 million each year. It is also helping improve product quality by 20% and reduce production costs by up to 15%. Some inspiration can be drawn from how these big companies are using data analytics to transform manufacturing.

- Siemens: Siemens is using data analytics to improve the efficiency of its production lines. The company has installed sensors on its equipment to collect data on production processes. This data is then analyzed to identify areas where efficiency can be improved. As a result of these efforts, Siemens has been able to reduce the time it takes to produce a product by 20%.

- General Electric: General Electric is using data analytics to improve the quality of its products. The company has developed a system that uses data analytics to identify potential defects in products before they are shipped to customers. This system has helped General Electric to reduce the number of defects in its products by 50%.

- Nike: Nike is using data analytics to improve the performance of its athletes. The company has developed a system that uses data analytics to track the performance of athletes during training. This data is then used to provide athletes with personalized training plans that help them to improve their performance.

These are just a few examples of how data analytics is being used in the manufacturing industry. As technology develops and the amount of data generated by manufacturing operations continues to grow, the potential benefits of data analytics are expected to rise with it. We can expect to see even more innovative and creative ways to use data analytics to improve manufacturing operations.

How Retailers Are Using Data Analytics to Personalize the Shopping Experience

The retail industry is big. According to Statista, the global retail market generated 27 trillion U.S. dollars in 2022, and this is expected to grow to 30 trillion U.S. dollars by 2024. The industry is also a major employment generator: according to the World Bank, it employed 627 million people in 2020, a number expected to grow to 715 million by 2030.

The retail industry is also a major source of innovation, as businesses are constantly finding new ways to reach customers and sell products. One of the key initiatives all major retailers are pursuing is personalization, which is impossible without effective data analytics.

Retailers have recognized that personalization not only enhances customer engagement but also amplifies customer lifetime value, with 67% of retailers asserting that it can heighten this value by up to 10%. Churn, a critical metric for retailers, can be substantially reduced through personalization. A few case studies illustrate this:

- Amazon: Amazon is using data analytics to personalize the shopping experience for its customers. The company collects data on customer purchase history, browsing behavior, and search history. This data is then used to recommend products that the customer is likely to be interested in. Amazon also uses data analytics to target customers with personalized advertising.

- Target: Target is using data analytics to predict customer behavior. The company collects data on customer purchase history, browsing behavior, and social media activity. This data is then used to predict when a customer is likely to make a purchase. Target can then send targeted marketing messages to these customers.

As retailers continue to accumulate more data on customer preferences, behaviors, and purchasing patterns, the potential benefits of personalization are expected to grow.

How Data Analytics is Helping Financial Institutions to Combat Fraud

Everyone is looking for easy money and that is the key reason why financial institutions are constantly under attack from fraudsters. In 2021, the global cost of fraud was estimated to be $5.8 trillion. Data analytics is helping financial institutions to combat fraud in a number of ways.

- Bank of America: Bank of America is using data analytics to combat fraud. The company collects data on customer transactions, account balances, and credit scores. This data is then used to identify fraudulent transactions. Bank of America has been able to reduce its fraud losses by 50% as a result of these efforts.

- Capital One: Capital One is using data analytics to personalize the lending experience for its customers. The company collects data on customer income, employment history, and credit scores. This data is then used to determine which customers are most likely to repay a loan. Capital One has been able to reduce its loan defaults by 20% as a result of these efforts.

- Wells Fargo: Wells Fargo is using data analytics to improve customer service. The company collects data on customer calls, emails, and social media interactions. This data is then used to identify areas where customer service can be improved. Wells Fargo has been able to reduce the number of customer complaints by 10% as a result of these efforts.

These are just a few examples of how data analytics is being used by financial institutions to combat fraud and improve their services. As technology continues to develop, we can expect to see even more innovative and creative ways to use data analytics to combat fraud. One example could be the use of generative adversarial networks (GANs), a type of machine learning model that can be trained to help combat fraud.

How Data Analytics Helps to Reduce Delivery Time and Improve the Supply Chain

Data analytics plays a crucial role in optimizing supply chains and reducing delivery times. Many companies have created a separate center of excellence for this work, known as supply chain analytics. By collecting and analyzing vast amounts of data from various touchpoints in the supply chain, companies can make more informed decisions, anticipate problems, and create efficiencies that save both time and money. A study by McKinsey found that data analytics can help to improve supply chain efficiency by up to 30%.

- Walmart: Walmart is using data analytics to improve the efficiency of its supply chain. The company collects data on sales, inventory levels, and transportation costs. This data is then used to identify areas where efficiency can be improved. Walmart has been able to reduce its transportation costs by 10% as a result of these efforts.

- UPS: UPS is using data analytics to improve the efficiency of its delivery operations. The company collects data on weather conditions, traffic patterns, and customer behavior. This data is then used to optimize delivery routes and times. UPS has been able to reduce its delivery times by 5% as a result of these efforts.

- Amazon: Amazon is using data analytics to improve the efficiency of its fulfillment centers. The company collects data on product demand, inventory levels, and worker productivity. This data is then used to optimize the layout of fulfillment centers and the allocation of workers. Amazon has been able to increase the productivity of its fulfillment centers by 20% as a result of these efforts.

Supply chain departments now use real-time analytics and a supply chain control tower, which provides constant visibility into the organization's various KPIs.

How Telecom Companies Are Leveraging Data Analytics to Improve Various KPIs

Telecommunication companies are increasingly turning to data analytics to boost their performance across various Key Performance Indicators (KPIs). By analyzing vast amounts of data generated from call records, network traffic, customer service interactions, and more, telecom companies are unlocking new avenues for growth and efficiency. A study by McKinsey found that data analytics can help telecom companies to reduce churn by up to 15%. Some examples:

- AT&T: AT&T is using data analytics to improve the customer experience. The company collects data on customer usage patterns, service requests, and satisfaction ratings. This data is then used to improve customer service, identify areas for improvement, and develop new products and services. AT&T has been able to improve its customer satisfaction ratings by 10% as a result of these efforts.

- Verizon: Verizon is using data analytics to improve the performance of its network. The company collects data on network usage, traffic patterns, and outages. This data is then used to identify and address any bottlenecks or disruptions in the network. Verizon has been able to reduce the number of network outages by 50% as a result of these efforts.

- T-Mobile: T-Mobile is using data analytics to target marketing campaigns. The company collects data on customer demographics, interests, and purchase history. This data is then used to create targeted marketing campaigns that are more likely to be successful. T-Mobile has been able to increase its marketing ROI by 20% as a result of these efforts.

SCIKIQ has recently launched its Telecom Analytics Centre of Excellence and offers analytics for enhancing customer service, optimizing network performance, detecting and preventing fraud, predicting maintenance needs, and ensuring revenue assurance.

How Insurance companies are using Data Analytics to Improve various processes

In an industry where precision and risk management are key, insurance companies are finding data analytics to be a game-changer. According to a study by Capgemini, 70% of insurance companies are using data analytics to improve customer experience. A few data analytics case studies in this industry are:

- State Farm: State Farm is using data analytics to improve the accuracy of its claims processing. The company collects data on customer claims, vehicle history, and weather conditions. This data is then used to automate the claims process and identify any potential fraud. State Farm has been able to reduce the time it takes to process claims by 50% as a result of these efforts.

- Progressive: Progressive is using data analytics to improve the underwriting process. The company collects data on customer driving history, credit scores, and demographics. This data is then used to price policies and identify high-risk customers. Progressive has been able to reduce its losses by 10% as a result of these efforts.

- Geico: Geico is using data analytics to personalize the customer experience. The company collects data on customer purchase history, browsing behavior, and social media activity. This data is then used to recommend products and services that the customer is likely to be interested in. Geico has been able to increase its customer retention rate by 5% as a result of these efforts.

Data analytics is key to cyber insurance as well. To see how data analytics is helping fight cybercrime with cyber insurance, download the guide by SCIKIQ.

These Data Analytics case studies provide valuable insights into how organizations leverage data analytics to gain a competitive edge, make data-driven decisions, and achieve remarkable outcomes in their respective fields.

Explore what Data Analytics use cases can be applied to Manufacturing, Finance, Marketing, Telecom, and Banking.

By examining Data Analytics case studies & examples, we can gain inspiration, learn from best practices, and understand the transformative impact of data analytics on businesses of all sizes. Whether it’s optimizing supply chains, improving product quality, or predicting customer behavior, data analytics case studies highlight the immense potential of data-driven approaches to reshape industries and drive growth.

Explore the top strategic goals of the modern CXO and how to achieve them with effective data management.

Chandan Mishra


The Convergence Blog

The Convergence - an online community space that's dedicated to empowering operators in the data industry by providing news and education about evergreen strategies, late-breaking data & AI developments, and free or low-cost upskilling resources that you need to thrive as a leader in the data & AI space.

Data Analysis Case Study: Learn From Humana’s Automated Data Analysis Project

Lillian Pierson, P.E.


Got data? Great! Looking for that perfect data analysis case study to help you get started using it? You’re in the right place.

If you’ve ever struggled to decide what to do next with your data projects, to actually find meaning in the data, or even to decide what kind of data to collect, then KEEP READING…

Deep down, you know what needs to happen. You need to initiate and execute a data strategy that really moves the needle for your organization. One that produces seriously awesome business results.

But how? You’re in the right place to find out.

As a data strategist who has worked with 10 percent of Fortune 100 companies, today I’m sharing with you a case study that demonstrates just how real businesses are making real wins with data analysis.

In the post below, we’ll look at:

- A shining data success story;

- What went on ‘under-the-hood’ to support that successful data project; and

- The exact data technologies used by the vendor, to take this project from pure strategy to pure success

If you prefer to watch this information rather than read it, it’s captured in the video below:

Here’s the url too: https://youtu.be/xMwZObIqvLQ

3 Action Items You Need To Take

To actually use the data analysis case study you’re about to get – you need to take 3 main steps. Those are:

- Reflect upon your organization as it is today (I left you some prompts below – to help you get started)

- Review winning data case collections (starting with the one I’m sharing here) and identify 5 that seem the most promising for your organization given its current set-up

- Assess your organization AND those 5 winning case collections. Based on that assessment, select the “QUICK WIN” data use case that offers your organization the most bang for its buck

Step 1: Reflect Upon Your Organization

Whenever you evaluate data case collections to decide if they’re a good fit for your organization, the first thing you need to do is organize your thoughts with respect to your organization as it is today.

Before moving into the data analysis case study, STOP and ANSWER THE FOLLOWING QUESTIONS – just to remind yourself:

- What is the business vision for our organization?

- What industries do we primarily support?

- What data technologies do we already have up and running, that we could use to generate even more value?

- What team members do we have to support a new data project? And what are their data skillsets like?

- What type of data are we mostly looking to generate value from? Structured? Semi-Structured? Un-structured? Real-time data? Huge data sets? What are our data resources like?

Jot down some notes while you’re here. Then keep them in mind as you read on to find out how one company, Humana, used its data to achieve a 28 percent increase in customer satisfaction and a 63 percent increase in employee engagement! (That’s such a seriously impressive outcome, right?!)

Step 2: Review Data Case Studies

Here we are, already at step 2. It’s time for you to start reviewing data analysis case studies (starting with the one I’m sharing below). Identify 5 that seem the most promising for your organization given its current set-up.

Humana’s Automated Data Analysis Case Study

The key thing to note here is that the approach to creating a successful data program varies from industry to industry.

Let’s start with one to demonstrate the kind of value you can glean from these kinds of success stories.

Humana has provided health insurance to Americans for over 50 years. It is a service company focused on fulfilling the needs of its customers. A great deal of Humana’s success as a company rides on customer satisfaction, and the frontline of that battle for customers’ hearts and minds is Humana’s customer service center.

Call centers are hard to get right. A lot of emotions can arise during a customer service call, especially one relating to health and health insurance. Sometimes people are frustrated. At times, they’re upset. Also, there are times the customer service representative becomes aggravated, and the overall tone and progression of the phone call goes downhill. This is of course very bad for customer satisfaction.


Humana wanted to find a way to use artificial intelligence to monitor their phone calls and help their agents do a better job connecting with their customers in order to improve customer satisfaction (and thus, customer retention rates & profits per customer).

In light of their business need, Humana worked with a company called Cogito, which specializes in voice analytics technology.

Cogito offers a piece of AI technology called Cogito Dialogue. It’s been trained to identify certain conversational cues as a way of helping call center representatives and supervisors stay actively engaged in a call with a customer.

The AI listens to cues like the customer’s voice pitch.

If it’s rising, or if the call representative and the customer talk over each other, then the dialogue tool will send out electronic alerts to the agent during the call.

Humana fed the dialogue tool customer service data from 10,000 calls and allowed it to analyze cues such as keywords, interruptions, and pauses. These cues were then linked with specific outcomes. For example, if the representative is receiving a particular type of cue, they are likely to get a specific customer satisfaction result.
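To make the idea of linking cues to outcomes a bit more concrete, here is a minimal Python sketch. It is not Cogito’s or Humana’s method. The call records, the field names (`interruptions`, `long_pauses`, `satisfaction`), and the threshold are all hypothetical, made up purely for illustration. It simply compares average satisfaction for calls with many interruptions against calls with few.

```python
from statistics import mean

# Hypothetical call records: cue counts plus a post-call satisfaction score (1-5).
# These values are invented for illustration, not taken from Humana's data.
calls = [
    {"interruptions": 0, "long_pauses": 1, "satisfaction": 5},
    {"interruptions": 1, "long_pauses": 0, "satisfaction": 4},
    {"interruptions": 4, "long_pauses": 2, "satisfaction": 2},
    {"interruptions": 5, "long_pauses": 3, "satisfaction": 1},
    {"interruptions": 3, "long_pauses": 4, "satisfaction": 2},
    {"interruptions": 0, "long_pauses": 0, "satisfaction": 5},
]


def satisfaction_by_cue(records, cue, threshold):
    """Average satisfaction for calls at/above vs. below a cue-count threshold."""
    high = [r["satisfaction"] for r in records if r[cue] >= threshold]
    low = [r["satisfaction"] for r in records if r[cue] < threshold]
    return {
        "high": mean(high) if high else None,
        "low": mean(low) if low else None,
    }


# Assumed threshold: 3 or more interruptions counts as a "high interruption" call.
summary = satisfaction_by_cue(calls, "interruptions", threshold=3)
print(summary)
```

A real system would work on far more calls and far richer audio features, but the basic pattern is the same: group calls by the cues observed, then look at how the outcome differs between groups.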

The Outcome

Customers were happier, and customer service representatives were more engaged.

This automated solution for data analysis has now been deployed in 200 Humana call centers and the company plans to roll it out to 100 percent of its centers in the future.

The initiative was so successful, Humana has been able to focus on next steps in its data program. The company now plans to begin predicting the type of calls that are likely to go unresolved, so they can send those calls over to management before they become frustrating to the customer and customer service representative alike.

What does this mean for you and your business?

Well, if you’re looking for new ways to generate value by improving the quantity and quality of the decision support that you’re providing to your customer service personnel, then this may be a perfect example of how you can do so.

Humana’s Business Use Cases

Humana’s data analysis case study includes two key business use cases:

- Analyzing customer sentiment; and

- Suggesting actions to customer service representatives.

Analyzing Customer Sentiment

First things first, before you go ahead and collect data, you need to ask yourself who and what is involved in making things happen within the business.

In the case of Humana, the actors were:

- The health insurance system itself

- The customer, and

- The customer service representative

As you can see in the use case diagram above, the relational aspect is pretty simple. You have a customer service representative and a customer. They are both producing audio data, and that audio data is being fed into the system.

Humana focused on collecting the key data points, shown in the image below, from their customer service operations.

By collecting data about speech style, pitch, silence, stress in customers’ voices, length of call, speed of customers’ speech, intonation, articulation, and representatives’ manner of speaking, Humana was able to analyze customer sentiment and introduce techniques for improved customer satisfaction.

Having strategically defined these data points, the Cogito technology was able to generate reports about customer sentiment during the calls.

Suggesting Actions to Customer Service Representatives

The second use case for the Humana data program follows on from the data gathered in the first case.

In Humana’s case, Cogito generated a host of call analyses and reports about key call issues.

In the second business use case, Cogito was able to suggest actions to customer service representatives, in real-time, to make use of incoming data and help improve customer satisfaction on the spot.

The technology Humana used provided suggestions via text message to the customer service representative, offering the following types of feedback:

- The tone of voice is too tense

- The speed of speaking is high

- The customer representative and customer are speaking at the same time

These alerts allowed the Humana customer service representatives to alter their approach immediately, improving the quality of the interaction and, subsequently, the customer satisfaction.
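If you’re wondering what real-time alerts like these might look like in code, here is a simplified, rule-based Python sketch. To be clear, this is an assumption-laden illustration, not how Cogito actually works (it uses trained AI models). The `CallWindow` class, its fields, and every threshold below are hypothetical. Only the three alert messages come from the list above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CallWindow:
    """Hypothetical features summarizing the last few seconds of a call."""
    agent_tension: float        # assumed scale: 0.0 (relaxed) to 1.0 (very tense)
    agent_words_per_min: float  # speaking rate of the representative
    overlap_seconds: float      # time both parties spoke at the same time


# Illustrative thresholds only; not values used by Cogito or Humana.
TENSION_LIMIT = 0.7
SPEED_LIMIT_WPM = 180.0
OVERLAP_LIMIT_S = 1.5


def alerts_for(window: CallWindow) -> List[str]:
    """Return the feedback messages triggered by one window of call features."""
    alerts = []
    if window.agent_tension > TENSION_LIMIT:
        alerts.append("The tone of voice is too tense")
    if window.agent_words_per_min > SPEED_LIMIT_WPM:
        alerts.append("The speed of speaking is high")
    if window.overlap_seconds > OVERLAP_LIMIT_S:
        alerts.append(
            "The customer representative and customer are speaking at the same time"
        )
    return alerts


# Example: a tense, fast-talking stretch with a lot of cross-talk.
print(alerts_for(CallWindow(agent_tension=0.9, agent_words_per_min=200, overlap_seconds=2.0)))
```

Fixed thresholds are the simplest possible stand-in here. The point is the shape of the loop: features come in during the call, get checked, and any resulting message goes straight to the representative.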

The preconditions for success in this use case were:

- The call-related data must be collected and stored

- The AI models must be in place to generate analysis on the data points that are recorded during the calls

Evidence of success can subsequently be found in a system that offers real-time suggestions for courses of action that the customer service representative can take to improve customer satisfaction.

Thanks to this data-intensive business use case, Humana was able to increase customer satisfaction, improve customer retention rates, and drive profits per customer.

The Technology That Supports This Data Analysis Case Study

I promised to dip into the tech side of things. This is especially for those of you who are interested in the ins and outs of how projects like this one are actually rolled out.

Here’s a little rundown of the main technologies we discovered when we investigated how Cogito runs in support of its clients like Humana.