Hypothesis Testing: Null Hypothesis and Alternative Hypothesis


Figuring out exactly what the null hypothesis and the alternative hypotheses are is not a walk in the park. Hypothesis testing is based on the knowledge that you can acquire by going over what we have previously covered about statistics in our blog.

So, if you don’t want to have a hard time keeping up, make sure you have read all the tutorials about confidence intervals, distributions, z-tables, and t-tables.


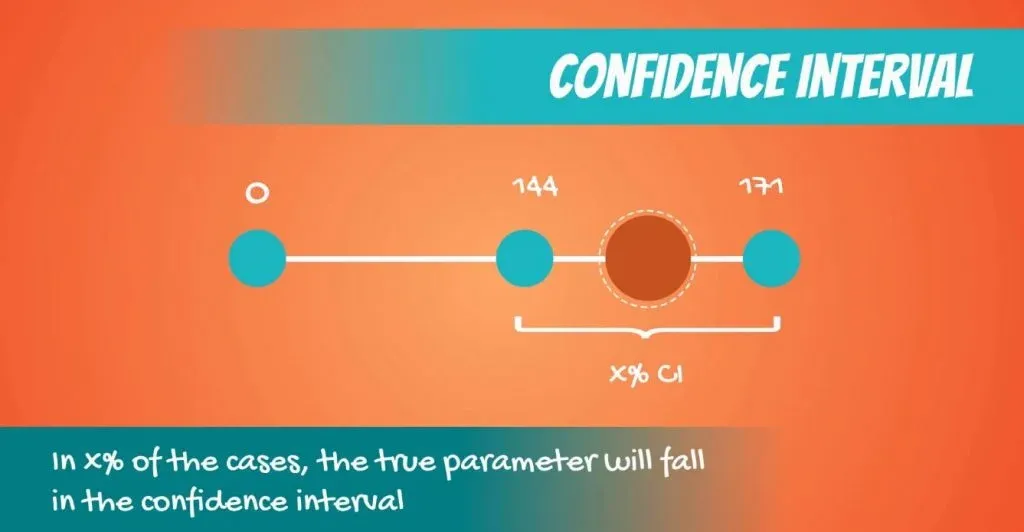

Confidence intervals provide us with an estimation of where the parameters are located. You can obtain them with our confidence interval calculator and learn more about them in the related article.

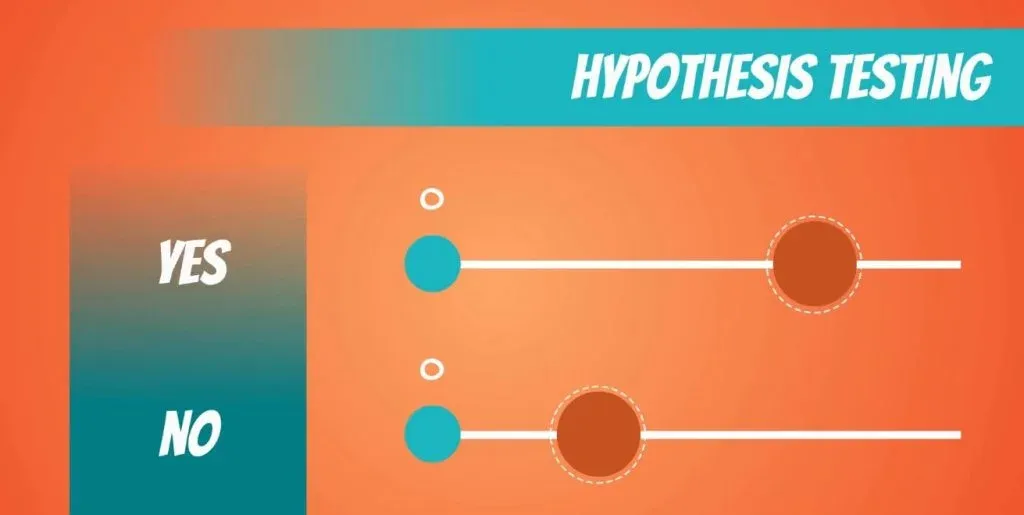

However, when we are making a decision, we need a yes or no answer. The correct approach, in this case, is to use a test.

Here we will start learning about one of the fundamental tasks in statistics: hypothesis testing!

The Hypothesis Testing Process

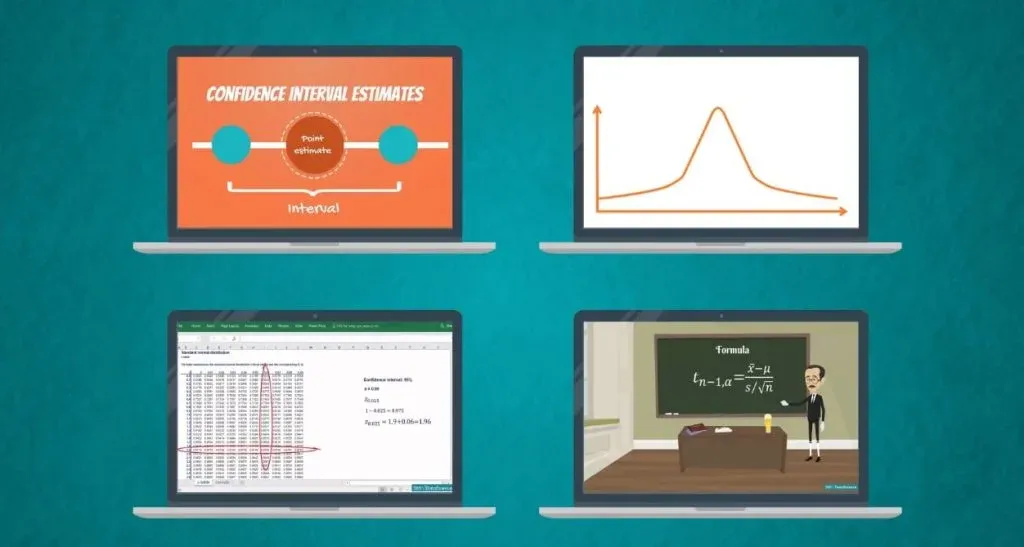

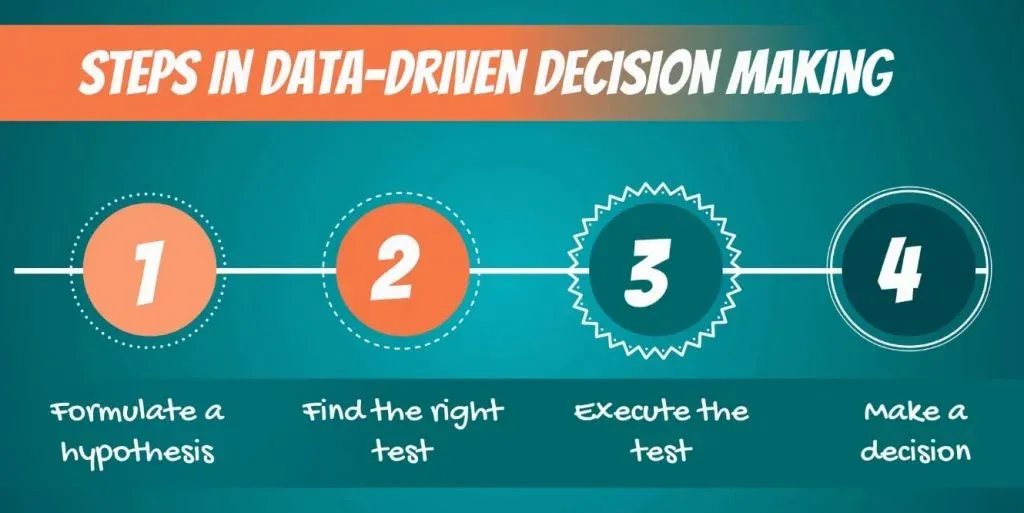

First off, let’s talk about data-driven decision-making. It consists of the following steps:

- First, we must formulate a hypothesis.

- After doing that, we have to find the right test for our hypothesis.

- Then, we execute the test.

- Finally, we make a decision based on the result.

Let’s start from the beginning.

What is a Hypothesis?

Though there are many ways to define it, the most intuitive must be:

“A hypothesis is an idea that can be tested.”

This is not the formal definition, but it explains the point very well.

So, if we say that apples in New York are expensive, this is an idea or a statement. However, it is not testable until we have something to compare it with.

For instance, if we define expensive as any price higher than $1.75 per pound, then it immediately becomes a hypothesis.

What Cannot Be a Hypothesis?

An example may be: would the USA do better or worse under a Clinton administration, compared to a Trump administration? Statistically speaking, this is an idea, but there is no data to test it. Therefore, it cannot be a hypothesis of a statistical test.

Actually, it is more likely to be a topic of another discipline.

Conversely, in statistics, we may compare different US presidencies that have already been completed. For example, the Obama administration and the Bush administration, as we have data on both.

A Two-Sided Test

Alright, let’s get out of politics and get into hypotheses. Here’s a simple topic that CAN be tested.

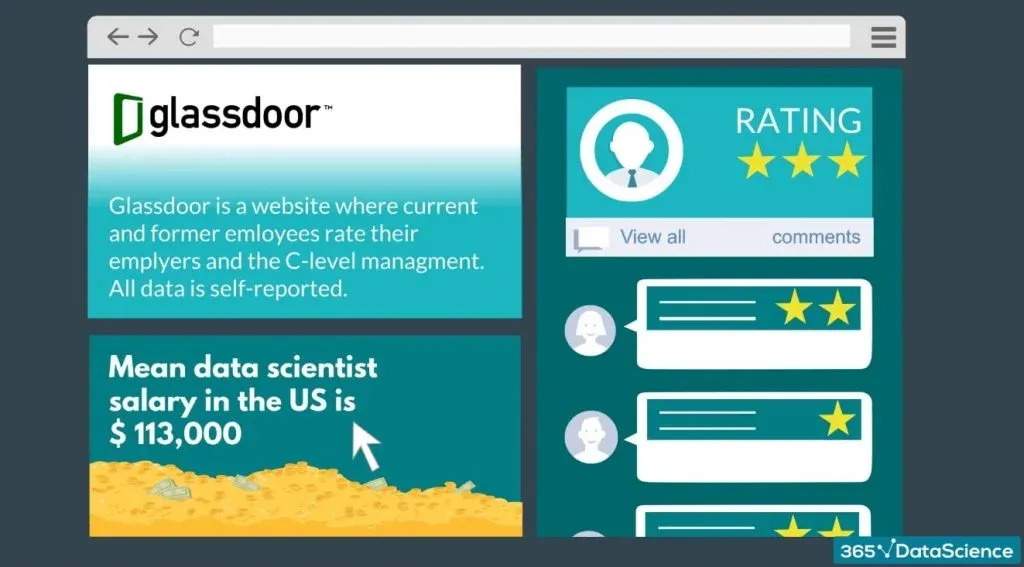

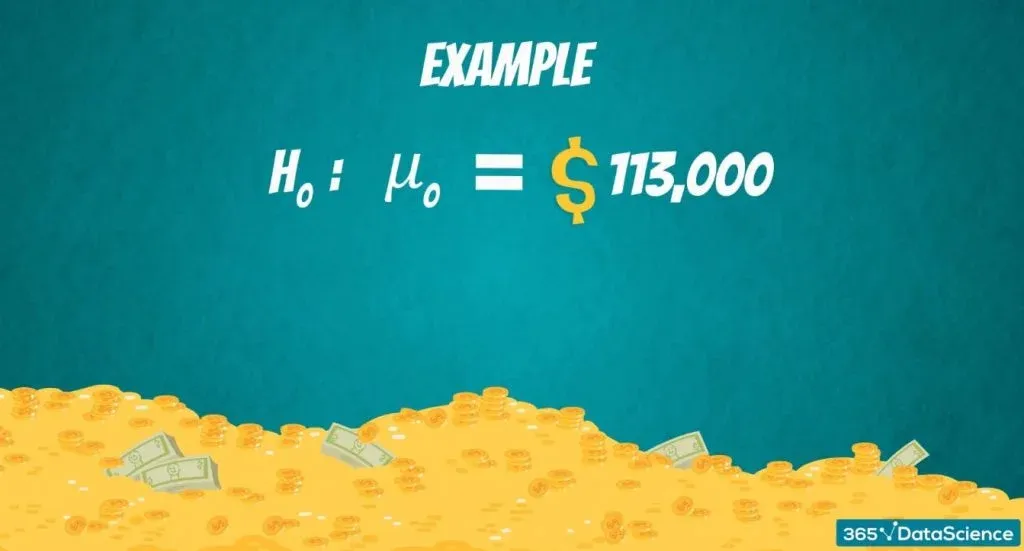

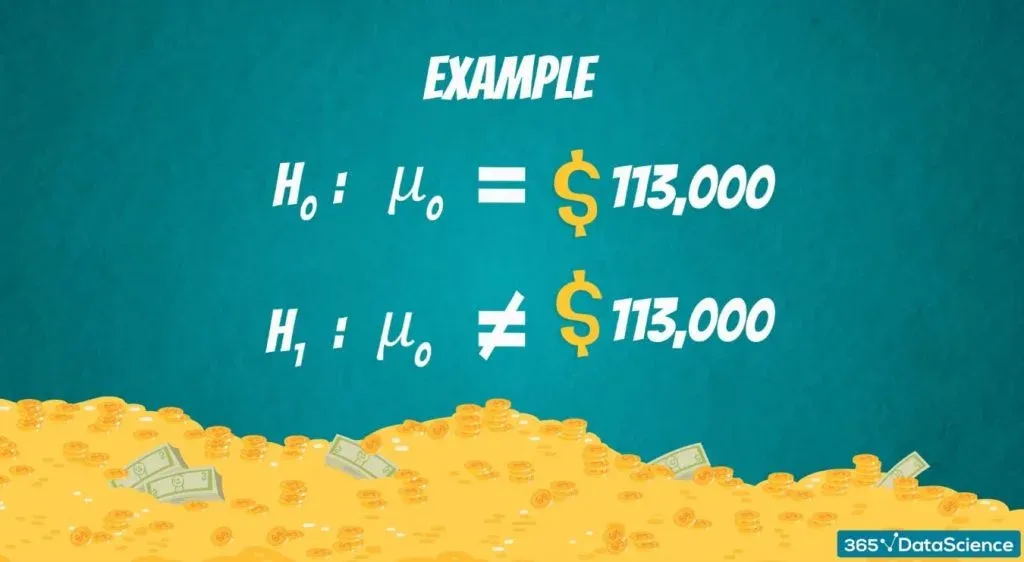

According to Glassdoor (the popular salary information website), the mean data scientist salary in the US is 113,000 dollars.

So, we want to test if their estimate is correct.

The Null and Alternative Hypotheses

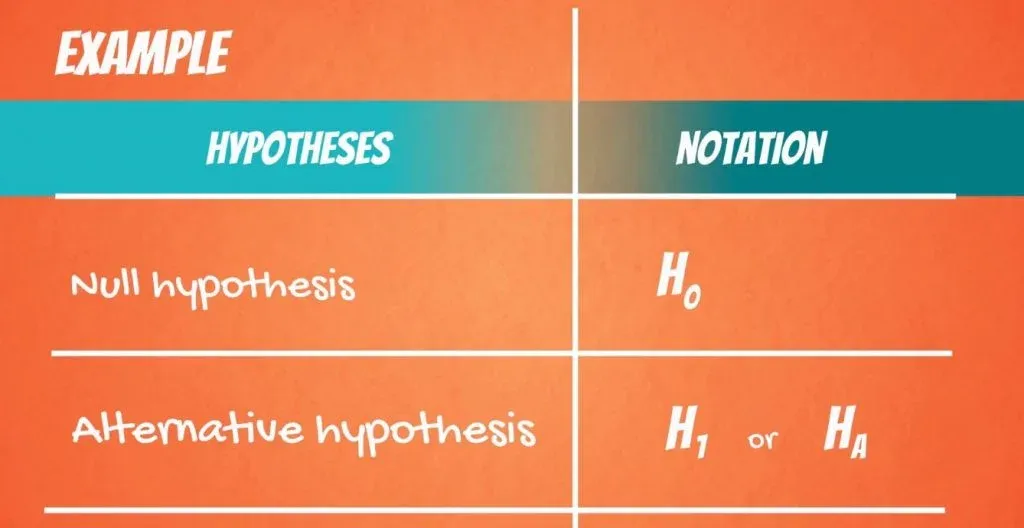

There are two hypotheses that are made: the null hypothesis, denoted H0, and the alternative hypothesis, denoted H1 or HA.

The null hypothesis is the one to be tested and the alternative is everything else. In our example:

The null hypothesis would be: The mean data scientist salary is 113,000 dollars.

While the alternative would be: The mean data scientist salary is not 113,000 dollars.
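
To make the two-sided setup concrete, here is a minimal sketch of a z-test for the hypotheses above. The sample mean, the population standard deviation, and the sample size are hypothetical numbers chosen for illustration, and the function name `two_sided_z_test` is our own. It also assumes the population standard deviation is known, which is rarely true in practice.

```python
from math import sqrt
from statistics import NormalDist


def two_sided_z_test(sample_mean, mu0, sigma, n):
    """Return (z, p_value) for H0: mu == mu0 against H1: mu != mu0."""
    standard_error = sigma / sqrt(n)
    z = (sample_mean - mu0) / standard_error
    # Two-sided test: deviations in either direction count against H0.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical figures, not real salary data.
z, p = two_sided_z_test(sample_mean=110_500, mu0=113_000, sigma=15_000, n=100)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A small p-value would count as evidence against the claim that the mean salary is 113,000 dollars. A large one would mean the sample is compatible with it.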


An Example of a One-Sided Test

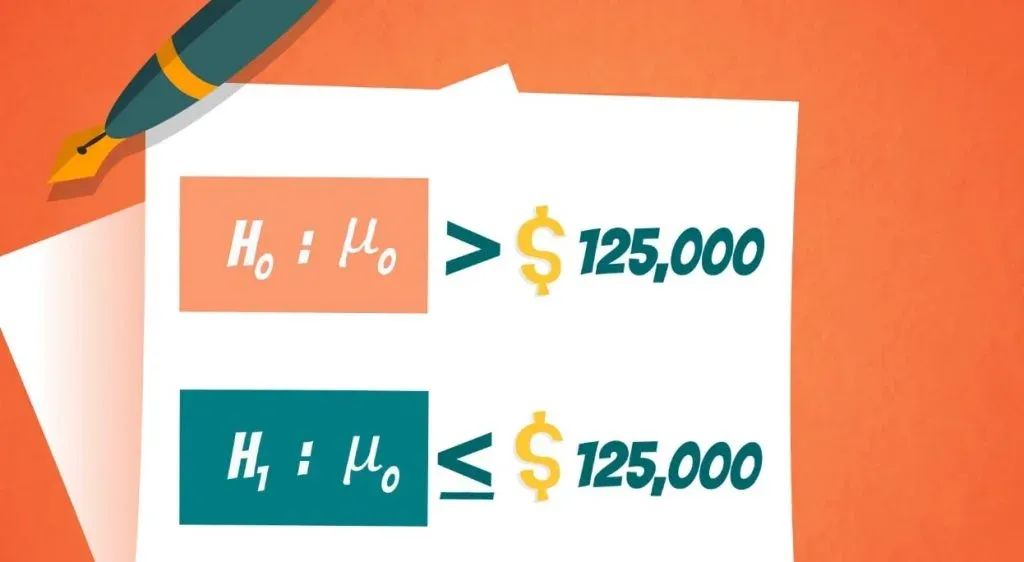

You can also form one-sided or one-tailed tests.

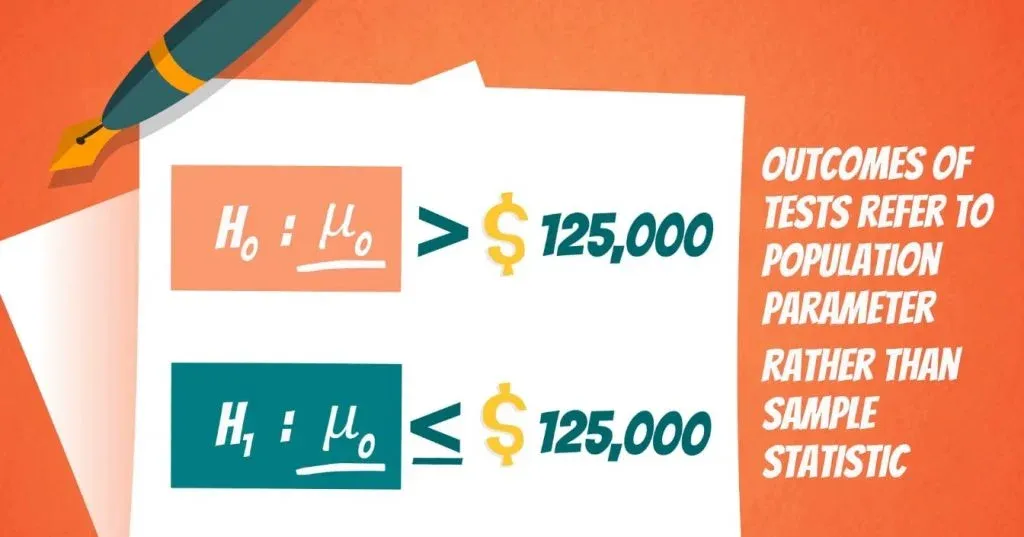

Say your friend, Paul, told you that he thinks data scientists earn more than 125,000 dollars per year. You doubt him, so you design a test to see who’s right.

The null hypothesis of this test would be: The mean data scientist salary is at least 125,000 dollars. By convention, the null hypothesis includes the equality, so the boundary value of 125,000 dollars belongs to it.

The alternative will cover everything else, thus: The mean data scientist salary is less than 125,000 dollars.
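
A common way to check a claim such as Paul's is a left-tailed test: the claim is kept unless the sample mean falls far enough below 125,000 dollars. The sketch below assumes a known population standard deviation, and all the figures are hypothetical. The function name `left_tailed_z_test` is ours.

```python
from math import sqrt
from statistics import NormalDist


def left_tailed_z_test(sample_mean, mu0, sigma, n):
    """Return (z, p_value) where small sample means count against the null."""
    z = (sample_mean - mu0) / (sigma / sqrt(n))
    # One-sided test: only the lower tail counts as evidence.
    p_value = NormalDist().cdf(z)
    return z, p_value


# Hypothetical figures, not real salary data.
z, p = left_tailed_z_test(sample_mean=121_000, mu0=125_000, sigma=20_000, n=64)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

Note that a sample mean above 125,000 dollars can never lead to rejection here, because only one tail is examined.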

Important: The outcomes of tests refer to the population parameter rather than the sample statistic! So, the result that we get is for the population.

Important: Another crucial consideration is that, generally, the researcher is trying to reject the null hypothesis. Think about the null hypothesis as the status quo and the alternative as the change or innovation that challenges that status quo. In our example, Paul was representing the status quo, which we were challenging.

Let’s go over it once more. In statistics, the null hypothesis is the statement we are trying to reject. Therefore, the null hypothesis is the present state of affairs, while the alternative is our personal opinion.

Why Hypothesis Testing Works

Right now, you may be feeling a little puzzled. This is normal because this whole concept is counter-intuitive at the beginning. However, there is an extremely easy way to continue your journey of exploring it. By diving into the linked tutorial, you will find out why hypothesis testing actually works.


Iliya Valchanov

Co-founder of 365 Data Science

Iliya is a finance graduate with a strong quantitative background who chose the exciting path of a startup entrepreneur. He demonstrated a formidable affinity for numbers during his childhood, winning more than 90 national and international awards and competitions through the years. Iliya started teaching at university, helping other students learn statistics and econometrics. Inspired by his first happy students, he co-founded 365 Data Science to continue spreading knowledge. He authored several of the program’s online courses in mathematics, statistics, machine learning, and deep learning.


Hypothesis Testing in Data Science: Its Usage and Types

Hypothesis Testing in Data Science is a crucial method for making informed decisions from data. This blog explores its essential usage in analysing trends and patterns, and the different types such as null, alternative, one-tailed, and two-tailed tests, providing a comprehensive understanding for both beginners and advanced practitioners.


Table of Contents

1) What is Hypothesis Testing in Data Science?

2) Importance of Hypothesis Testing in Data Science

3) Types of Hypothesis Testing

4) Basic steps in Hypothesis Testing

5) Real-world use cases of Hypothesis Testing

6) Conclusion

What is Hypothesis Testing in Data Science?

Hypothesis Testing in Data Science is a statistical method used to assess the validity of assumptions or claims about a population based on sample data. It involves formulating two Hypotheses, the null Hypothesis (H0) and the alternative Hypothesis (Ha or H1), and then using statistical tests to find out if there is enough evidence to support the alternative Hypothesis.

Hypothesis Testing is a critical tool for making data-driven decisions, evaluating the significance of observed effects or differences, and drawing meaningful conclusions from data. It allows Data Scientists to uncover patterns, relationships, and insights that inform various domains, from medicine to business and beyond.


Importance of Hypothesis Testing in Data Science

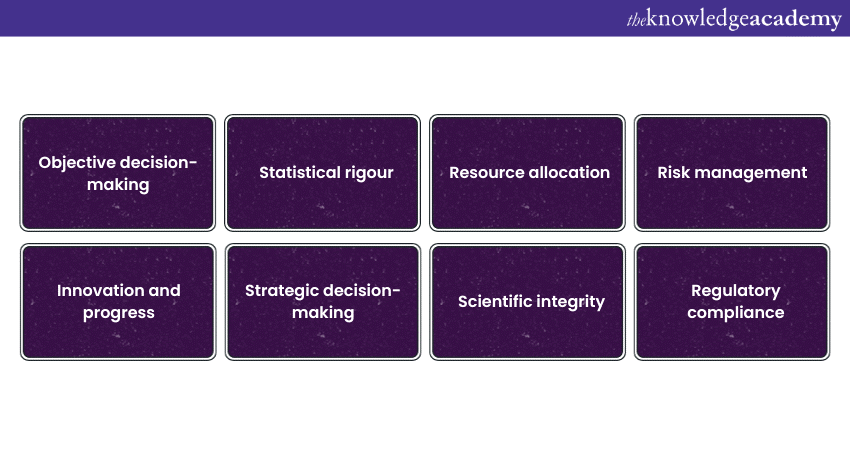

The significance of Hypothesis Testing in Data Science cannot be overstated. It serves as the cornerstone of data-driven decision-making. Systematically testing Hypotheses supports Data Scientists in the following areas:

Objective decision-making

Hypothesis Testing provides a structured and impartial method for making decisions based on data. In a world where biases can skew perceptions, Data Scientists rely on this method to ensure that their conclusions are grounded in empirical evidence, making their decisions more objective and trustworthy.

Statistical rigour

Data Scientists deal with large amounts of data, and Hypothesis Testing helps them make sense of it. It quantifies the significance of observed patterns, differences, or relationships. This statistical rigour is essential in distinguishing between mere coincidences and meaningful findings, reducing the likelihood of making decisions based on random chance.

Resource allocation

Resources, whether they are financial, human, or time-related, are often limited. Hypothesis Testing enables efficient resource allocation by guiding Data Scientists towards strategies or interventions that are statistically significant. This ensures that efforts are directed where they are most likely to yield valuable results.

Risk management

In domains like healthcare and finance, where lives and livelihoods are at stake, Hypothesis Testing is a critical tool for risk assessment. For instance, in drug development, Hypothesis Testing is used to determine the safety and efficacy of new treatments, helping mitigate potential risks to patients.

Innovation and progress

Hypothesis Testing fosters innovation by providing a systematic framework to evaluate new ideas, products, or strategies. It encourages a cycle of experimentation, feedback, and improvement, driving continuous progress and innovation.

Strategic decision-making

Organisations base their strategies on data-driven insights. Hypothesis Testing enables them to make informed decisions about market trends, customer behaviour, and product development. These decisions are grounded in empirical evidence, increasing the likelihood of success.

Scientific integrity

In scientific research, Hypothesis Testing is integral to maintaining the integrity of research findings. It ensures that conclusions are drawn from rigorous statistical analysis rather than conjecture. This is essential for advancing knowledge and building upon existing research.

Regulatory compliance

Many industries, such as pharmaceuticals and aviation, operate under strict regulatory frameworks. Hypothesis Testing is essential for demonstrating compliance with safety and quality standards. It provides the statistical evidence required to meet regulatory requirements.


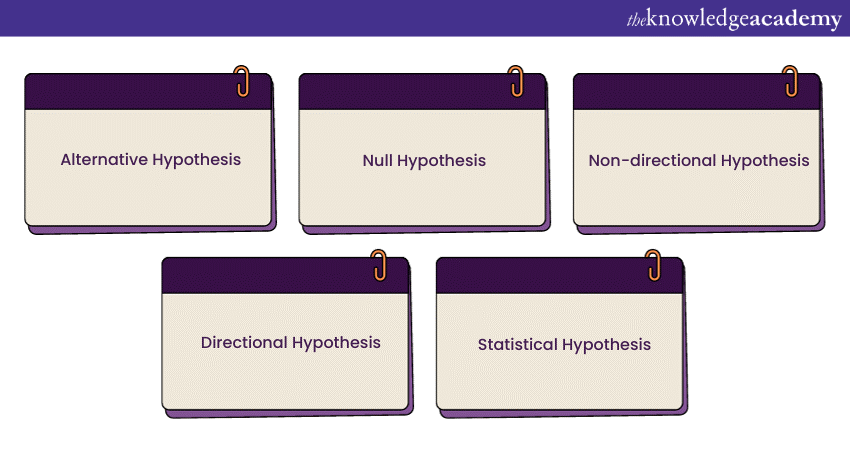

Types of Hypothesis Testing

Hypothesis Testing involves several kinds of Hypotheses. The five types are described below:

Alternative Hypothesis

The Alternative Hypothesis, denoted as Ha or H1, is the assertion or claim that researchers aim to support with their data analysis. It represents the opposite of the null Hypothesis (H0) and suggests that there is a significant effect, relationship, or difference in the population. In simpler terms, it's the statement that researchers hope to find evidence for during their analysis. For example, if you are testing a new drug's efficacy, the alternative Hypothesis might state that the drug has a measurable positive effect on patients' health.

Null Hypothesis

The Null Hypothesis, denoted as H0, is the default assumption in Hypothesis Testing. It posits that there is no significant effect, relationship, or difference in the population being studied. In other words, it represents the status quo or the absence of an effect. Researchers typically set out to challenge or disprove the Null Hypothesis by collecting and analysing data. Using the drug efficacy example again, the Null Hypothesis might state that the new drug has no effect on patients' health.

Non-directional Hypothesis

A Non-directional Hypothesis, also known as a two-tailed Hypothesis, is used when researchers are interested in whether there is any significant difference, effect, or relationship in either direction (positive or negative). This type of Hypothesis allows for the possibility of finding effects in both directions. For instance, in a study comparing the performance of two groups, a Non-directional Hypothesis would suggest that there is a significant difference between the groups, without specifying which group performs better.

Directional Hypothesis

A Directional Hypothesis, also called a one-tailed Hypothesis, is employed when researchers have a specific expectation about the direction of the effect, relationship, or difference they are investigating. In this case, the Hypothesis predicts an outcome in a particular direction—either positive or negative. For example, if you expect that a new teaching method will improve student test scores, a directional Hypothesis would state that the new method leads to higher test scores.

Statistical Hypothesis

A Statistical Hypothesis is a Hypothesis formulated in a way that it can be tested using statistical methods. It involves specific numerical values or parameters that can be measured or compared. Statistical Hypotheses are crucial for quantitative research and often involve means, proportions, variances, correlations, or other measurable quantities. These Hypotheses provide a precise framework for conducting statistical tests and drawing conclusions based on data analysis.


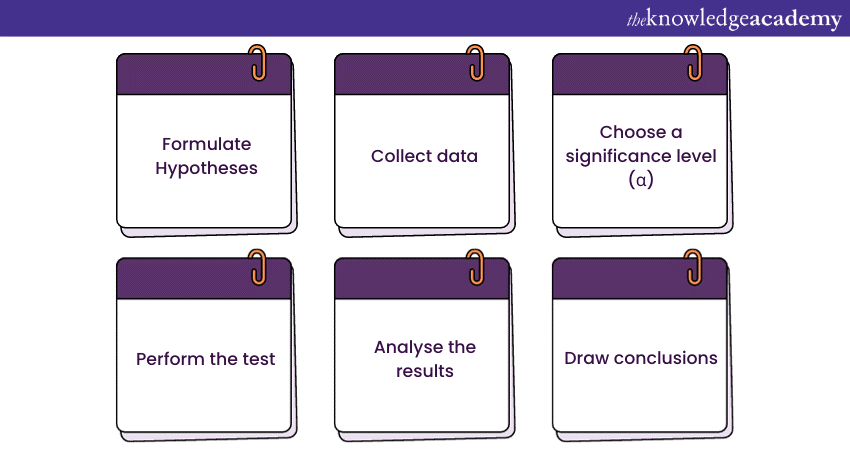

Basic steps in Hypothesis Testing

Hypothesis Testing is a systematic approach used in statistics to make informed decisions based on data. It is a critical tool in Data Science, research, and many other fields where data analysis is employed. The following are the basic steps involved in Hypothesis Testing:

1) Formulate Hypotheses

The first step in Hypothesis Testing is to clearly define your research question and translate it into two mutually exclusive Hypotheses:

a) Null Hypothesis (H0): This is the default assumption, often representing the status quo or the absence of an effect. It states that there is no significant difference, relationship, or effect in the population.

b) Alternative Hypothesis (Ha or H1): This is the statement that contradicts the null Hypothesis. It suggests that there is a significant difference, relationship, or effect in the population.

The formulation of these Hypotheses is crucial, as they serve as the foundation for your entire Hypothesis Testing process.

2) Collect data

With your Hypotheses in place, the next step is to gather relevant data through surveys, experiments, observations, or any other suitable method. The data collected should be representative of the population you are studying. The quality and quantity of data are essential factors in the success of your Hypothesis Testing.

3) Choose a significance level (α)

Before conducting the statistical test, you need to decide on the level of significance, denoted as α. The significance level is the threshold for statistical significance: it is the probability of a Type I error (rejecting the null Hypothesis when it is true) that you are willing to accept. A common choice is α = 0.05, which means a 5% chance of rejecting a true null Hypothesis. You can choose a different α value based on the specific requirements of your analysis.
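
As a small illustration of what choosing α means in practice, the sketch below converts a few significance levels into critical values of the standard normal distribution. It assumes a z-test; other tests use other reference distributions. The helper name `z_critical` is ours.

```python
from statistics import NormalDist


def z_critical(alpha, two_sided=True):
    """Critical value of the standard normal for a given significance level."""
    # A two-sided test splits alpha equally between the two tails.
    tail = alpha / 2 if two_sided else alpha
    return NormalDist().inv_cdf(1 - tail)


for alpha in (0.10, 0.05, 0.01):
    print(
        f"alpha = {alpha:.2f}: "
        f"two-sided {z_critical(alpha):.3f}, "
        f"one-sided {z_critical(alpha, two_sided=False):.3f}"
    )
```

A smaller α pushes the critical value further from zero, so stronger evidence is needed before the null Hypothesis is rejected.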

4) Perform the test

Based on the nature of your data and the Hypotheses you've formulated, select the appropriate statistical test. There are various tests available, including t-tests, chi-squared tests, ANOVA, regression analysis, and more. The chosen test should align with the type of data (e.g., continuous or categorical) and the research question (e.g., comparing means or testing for independence).

Execute the selected statistical test on your data to obtain the test statistic and the p-value. The test statistic quantifies the difference or effect you are investigating, while the p-value is the probability of obtaining results at least as extreme as those observed if the null Hypothesis were true.

5) Analyse the results

Once you have the test statistics and p-value, it's time to interpret the results. The primary focus is on the p-value:

a) If the p-value is less than or equal to your chosen significance level (α), typically 0.05, you have evidence to reject the null Hypothesis. This suggests that there is a significant difference, relationship, or effect in the population.

b) If the p-value is more than α, you fail to reject the null Hypothesis, showing that there is insufficient evidence to support the alternative Hypothesis.
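
The decision rule in a) and b) can be written as a tiny helper. This is only a sketch of the rule as stated here. The function name `decide` and the wording of the returned strings are our own.

```python
def decide(p_value, alpha=0.05):
    """Apply the p-value decision rule at significance level alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie between 0 and 1")
    if p_value <= alpha:
        return "reject H0"
    # A large p-value is not proof of H0, only a lack of evidence against it.
    return "fail to reject H0"


print(decide(0.03))              # below the default alpha of 0.05
print(decide(0.03, alpha=0.01))  # same p-value, stricter threshold
```

The same p-value can lead to different decisions under different significance levels, which is why α should be fixed before the data are analysed.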

6) Draw conclusions

Based on the analysis of the p-value and the comparison to the significance level, you can draw conclusions about your research question:

a) In case you reject the null Hypothesis, you can accept the alternative Hypothesis and make inferences based on the evidence provided by your data.

b) In case you fail to reject the null Hypothesis, you do not accept the alternative Hypothesis, and you acknowledge that there is insufficient evidence to support your claim. This does not prove that the null Hypothesis is true.

It's important to communicate your findings clearly, including the implications and limitations of your analysis.

Real-world use cases of Hypothesis Testing

The following are some of the real-world use cases of Hypothesis Testing.

a) Medical research: Hypothesis Testing is crucial in determining the efficacy of new medications or treatments. For instance, in a clinical trial, researchers use Hypothesis Testing to assess whether a new drug is significantly more effective than a placebo in treating a particular condition.

b) Marketing and advertising: Businesses employ Hypothesis Testing to evaluate the impact of marketing campaigns. A company may test whether a new advertising strategy leads to a significant increase in sales compared to the previous approach.

c) Manufacturing and quality control: Manufacturing industries use Hypothesis Testing to ensure product quality. For example, in the automotive industry, Hypothesis Testing can be applied to test whether a new manufacturing process results in a significant reduction in defects.

d) Education: In the field of education, Hypothesis Testing can be used to assess the effectiveness of teaching methods. Researchers may test whether a new teaching approach leads to statistically significant improvements in student performance.

e) Finance and investment: Investment strategies are often evaluated using Hypothesis Testing. Investors may test whether a new investment strategy outperforms a benchmark index over a specified period.
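
To illustrate the marketing use case, here is a hedged sketch of a two-proportion z-test comparing the conversion rates of an old and a new campaign. The visitor and purchase counts are invented for the example, and the function name `two_proportion_z_test` is ours. The test relies on a normal approximation that is reasonable only for fairly large samples.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, p_value) for H0: both groups share the same proportion."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Under H0 the two groups are pooled to estimate the common proportion.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical campaign data: 200 of 2,000 visitors bought before,
# 250 of 2,000 bought under the new campaign.
z, p = two_proportion_z_test(200, 2000, 250, 2000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```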

Conclusion

To sum it up, Hypothesis Testing in Data Science is a powerful tool that enables Data Scientists to make evidence-based decisions and draw meaningful conclusions from data. Understanding the types, methods, and steps involved in Hypothesis Testing is essential for any Data Scientist. By rigorously applying Hypothesis Testing techniques, you can gain valuable insights and drive informed decision-making in various domains.


9 Hypothesis testing

In scientific studies, you’ll often see phrases like “the results are statistically significant”. This points to a technique called hypothesis testing, where we use p-values, a type of probability, to test our initial assumption or hypothesis.

In hypothesis testing, rather than providing an estimate of the parameter we’re studying, we provide a probability that serves as evidence supporting or contradicting a specific hypothesis. The hypothesis usually involves whether a parameter is different from a predetermined value (often 0).

Hypothesis testing is used when you can phrase your research question in terms of whether a parameter differs from this predetermined value. It’s applied in various fields, asking questions such as: Does a medication extend the lives of cancer patients? Does an increase in gun sales correlate with more gun violence? Does class size affect test scores?

Take, for instance, the previously used example with colored beads. We might not be concerned about the exact proportion of blue beads, but instead ask: Are there more blue beads than red ones? This could be rephrased as asking if the proportion of blue beads is more than 0.5.

The initial hypothesis that the parameter equals the predetermined value is called the “null hypothesis”. It’s popular because it allows us to focus on the data’s properties under this null scenario. Once data is collected, we estimate the parameter and calculate the p-value, which is the probability of obtaining an estimate at least as extreme as the one observed if the null hypothesis is true. A small p-value means the observed data would be unlikely under the null hypothesis, which provides evidence against it.

We will see more examples of hypothesis testing in Chapter 17.

9.1 p-values

Suppose we take a random sample of \(N=100\) and we observe \(52\) blue beads, which gives us \(\bar{X} = 0.52\). This seems to be pointing to the existence of more blue than red beads since 0.52 is larger than 0.5. However, we know there is chance involved in this process and we could get a 52 even when the actual \(p=0.5\). We call the assumption that \(p = 0.5\) a null hypothesis. The null hypothesis is the skeptic’s hypothesis.

We have observed a random variable \(\bar{X} = 0.52\), and the p-value is the answer to the question: How likely is it to see a value this large, when the null hypothesis is true? If the p-value is small enough, we reject the null hypothesis and say that the results are statistically significant.

A p-value threshold of 0.05 for statistical significance is conventionally used in many areas of research. A cutoff of 0.01 is also used to define high significance. The choice of 0.05 is somewhat arbitrary and was popularized by the British statistician Ronald Fisher in the 1920s. We do not recommend using these cutoffs without justification and recommend avoiding the phrase statistically significant.

To obtain a p-value for our example, we write:

\[\mbox{Pr}(\mid \bar{X} - 0.5 \mid > 0.02 ) \]

assuming \(p=0.5\). Under the null hypothesis we know that:

\[ \sqrt{N}\frac{\bar{X} - 0.5}{\sqrt{0.5(1-0.5)}} \]

is standard normal. We, therefore, can compute the probability above, which is the p-value.

\[\mbox{Pr}\left(\sqrt{N}\frac{\mid \bar{X} - 0.5\mid}{\sqrt{0.5(1-0.5)}} > \sqrt{N} \frac{0.02}{ \sqrt{0.5(1-0.5)}}\right)\]

In this case, there is actually a large chance of seeing 52 or larger under the null hypothesis.
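
The probability above can be evaluated numerically. The short sketch below uses Python's standard library and the normal approximation from the formula above. The variable names are ours.

```python
from math import sqrt
from statistics import NormalDist

N = 100        # sample size
x_bar = 0.52   # observed proportion of blue beads
p0 = 0.5       # proportion under the null hypothesis

# Standardize the observed distance from p0 using the null standard error.
z = sqrt(N) * abs(x_bar - p0) / sqrt(p0 * (1 - p0))

# Two-sided p-value: probability of a distance at least this large.
p_value = 2 * (1 - NormalDist().cdf(z))
print(f"z = {z:.2f}, p-value = {p_value:.3f}")  # z = 0.40, p-value = 0.689
```

A p-value of about 0.69 means that a deviation of 0.02 or more from 0.5 would be seen in roughly two out of three samples of this size even if the urn were perfectly balanced.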

Keep in mind that there is a close connection between p-values and confidence intervals. If a 95% confidence interval of the spread does not include 0, we know that the p-value must be smaller than 0.05.

To learn more about p-values, you can consult any statistics textbook. However, in general, we prefer reporting confidence intervals over p-values because it gives us an idea of the size of the estimate. If we just report the p-value, we provide no information about the significance of the finding in the context of the problem.

We can show mathematically that if a \((1-\alpha)\times 100\)% confidence interval does not contain the null hypothesis value, the null hypothesis is rejected with a p-value as small as or smaller than \(\alpha\). So statistical significance can be determined from confidence intervals. However, unlike the confidence interval, the p-value does not provide an estimate of the magnitude of the effect. For this reason, we recommend avoiding p-values whenever you can compute a confidence interval.
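
The link between confidence intervals and p-values can be checked numerically. The sketch below works with the proportion \(p\) and the null value 0.5, which is equivalent to working with the spread and the null value 0. It assumes, for illustration only, that both the interval and the test use the same standard error estimated from \(\bar{X}\), which is the condition under which the two agree exactly. The function name is ours.

```python
from math import sqrt
from statistics import NormalDist


def wald_interval_and_p_value(x_bar, n, null_value=0.5, alpha=0.05):
    """Return ((low, high), p_value) built from the same standard error."""
    se = sqrt(x_bar * (1 - x_bar) / n)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    interval = (x_bar - z_crit * se, x_bar + z_crit * se)
    z = abs(x_bar - null_value) / se
    p_value = 2 * (1 - NormalDist().cdf(z))
    return interval, p_value


for n in (100, 2500, 10_000):
    (low, high), p = wald_interval_and_p_value(0.52, n)
    excludes_null = not (low <= 0.5 <= high)
    print(f"N = {n}: interval excludes 0.5: {excludes_null}, p < 0.05: {p < 0.05}")
```

In each row the two answers coincide, but only the interval also shows how far the estimate is from 0.5.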

9.2 Power

Pollsters are judged successful not for providing correct confidence intervals, but for predicting who will win. When we took a sample of 25 beads, the confidence interval for the spread included 0. If this were a poll and we were forced to make a declaration, we would have to say it was a “toss-up”.

One problem with our poll results is that, given the sample size and the value of \(p\), we would have to accept a higher probability of an incorrect call to create an interval that does not include 0.

This does not mean that the election is close. It only means that we have a small sample size. In statistical textbooks, this is called lack of power. In the context of polls, power is the probability of detecting spreads different from 0.

By increasing our sample size, we lower our standard error, and thus, have a much better chance of detecting the direction of the spread.
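
To see how sample size drives power, the sketch below computes the approximate power of a two-sided test of \(p = 0.5\) when the true proportion is 0.52. It relies on the normal approximation rather than on simulation, and the function name `power_two_sided` is ours.

```python
from math import sqrt
from statistics import NormalDist


def power_two_sided(p_true, n, p0=0.5, alpha=0.05):
    """Approximate probability of rejecting H0: p == p0 when p equals p_true."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # H0 is rejected when the sample proportion is further than `margin` from p0.
    margin = z_crit * sqrt(p0 * (1 - p0) / n)
    se_true = sqrt(p_true * (1 - p_true) / n)
    upper_tail = 1 - std_normal.cdf((p0 + margin - p_true) / se_true)
    lower_tail = std_normal.cdf((p0 - margin - p_true) / se_true)
    return upper_tail + lower_tail


for n in (25, 100, 1000, 10_000):
    print(f"N = {n}: power = {power_two_sided(0.52, n):.3f}")
```

With only 25 beads the test almost never detects a true proportion of 0.52, while with 10,000 it almost always does.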

9.3 Exercises

1. Generate a sample of size \(N=1000\) from an urn model with 50% blue beads, then compute a p-value to test if \(p=0.5\). Repeat this 10,000 times and report how often the p-value is lower than 0.05. How often is it lower than 0.01?

2. Make a histogram of the p-values you generated in exercise 1. Which of the following seems to be true?

- The p-values are all 0.05.

- The p-values are normally distributed; CLT seems to hold.

- The p-values are uniformly distributed.

- The p-values are all less than 0.05.

3. Demonstrate, mathematically, why we see the histogram we see in exercise 2.

4. Generate a sample of size \(N=1000\) from an urn model with 52% blue beads, then compute a p-value to test if \(p=0.5\). Repeat this 10,000 times and report how often the p-value is larger than 0.05. Note that you are computing 1 - power.

5. Repeat exercise 4 for the following values:

Plot power as a function of \(N\) with a different color curve for each value of \(p\).

What Is a Null Hypothesis?


A null hypothesis is a type of hypothesis used in statistics that proposes that there is no difference between certain characteristics of a population (or data-generating process).

For example, a gambler may be interested in whether a game of chance is fair. If it is fair, then the expected earnings per play are 0 for both players. If the game is not fair, then the expected earnings are positive for one player and negative for the other. To test whether the game is fair, the gambler collects earnings data from many repetitions of the game, calculates the average earnings from these data, then tests the null hypothesis that the expected earnings are not different from zero.

If the average earnings from the sample data are sufficiently far from zero, then the gambler will reject the null hypothesis and conclude the alternative hypothesis; namely, that the expected earnings per play are different from zero. If the average earnings from the sample data are close to zero, then the gambler will not reject the null hypothesis, concluding instead that the difference between the average from the data and 0 is explainable by chance alone.
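
The gambler's procedure can be sketched in a few lines. The example below simulates a game that pays +1 or -1 per play, which is our own simplifying assumption, and tests whether the mean earnings differ from zero. Because the number of plays is large, it uses a normal approximation in place of a t-test. The helper names are ours.

```python
import random
from math import sqrt
from statistics import NormalDist, mean, stdev


def z_test_mean_zero(earnings):
    """Return (z, p_value) for H0: expected earnings per play are zero."""
    n = len(earnings)
    z = mean(earnings) / (stdev(earnings) / sqrt(n))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def play(win_probability, n_plays, rng):
    """Simulate earnings of +1 (win) or -1 (loss) for each play."""
    return [1 if rng.random() < win_probability else -1 for _ in range(n_plays)]


rng = random.Random(0)  # fixed seed so the run is repeatable
fair_game = play(0.5, 10_000, rng)
rigged_game = play(0.6, 10_000, rng)

z_fair, p_fair = z_test_mean_zero(fair_game)
z_rigged, p_rigged = z_test_mean_zero(rigged_game)
print(f"fair game:   z = {z_fair:.2f}, p-value = {p_fair:.4f}")
print(f"rigged game: z = {z_rigged:.2f}, p-value = {p_rigged:.4f}")
```

For the rigged game the average earnings sit far from zero relative to their standard error, so the null hypothesis of a fair game is rejected.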

KEY TAKEAWAYS

A null hypothesis is a type of conjecture used in statistics that proposes that there is no difference between certain characteristics of a population or data-generating process.

The alternative hypothesis proposes that there’s a difference.

Hypothesis testing provides a way to reject a null hypothesis within a particular confidence level. (Null hypotheses can’t be proven, though.)

How a Null Hypothesis Works

The null hypothesis, also known as the conjecture, assumes that any kind of difference between the chosen characteristics that you see in a set of data is due to chance. For instance, if the expected earnings for the game of chance are truly equal to 0, then any difference between the average earnings in the data and 0 is due to chance.

Statistical hypotheses are tested using a four-step process. The first step is for the analyst to state the two hypotheses so that only one can be right. The next step is to formulate an analysis plan, which outlines how the data will be evaluated. The third step is to carry out the plan and analyze the sample data. The fourth and final step is to analyze the results and either reject the null hypothesis or state that the observed differences are explainable by chance alone.

Analysts look to reject the null hypothesis because doing so is a strong conclusion. The alternative conclusion, that the results are “explainable by chance alone,” is a weak conclusion because it allows that factors other than chance may be at work.

Analysts look to reject the null hypothesis to rule out some variable(s) as explaining the phenomena of interest.

Null Hypothesis Example

Here is a simple example: a school principal reports that students in her school score an average of 7 out of 10 in exams. The null hypothesis is that the population mean is 7.0. To test this null hypothesis, we record the marks of, say, 30 students (the sample) from the entire student population of the school (say 300) and calculate the mean of that sample. We can then compare the (calculated) sample mean to the (claimed) population mean of 7.0 and attempt to reject the null hypothesis. (The null hypothesis that the population mean is 7.0 can’t be proven using the sample data; it can only be rejected.)

Take another example: the annual return of a particular open-end fund is claimed to be 8%. Assume that the open-end fund has been in existence for 20 years. The null hypothesis is that the mean return is 8% for the open-end fund. We take a random sample of annual returns of the open-end fund for, say, five years (the sample) and calculate the sample mean. We then compare the (calculated) sample mean to the (claimed) population mean (8%) to test the null hypothesis.

For the above examples, null hypotheses are:

Example A: Students in the school score an average of 7 out of 10 in exams.

Example B: The mean annual return of the open-end fund is 8% per annum.

For the purposes of determining whether to reject the null hypothesis, the null hypothesis (abbreviated H0) is assumed, for the sake of argument, to be true. Then the likely range of possible values of the calculated statistic (e.g., the average score on 30 students’ tests) is determined under this presumption (e.g., the range of plausible averages might be 6.2 to 7.8 if the population mean is 7.0). Then, if the sample average is outside of this range, the null hypothesis is rejected. Otherwise, the difference is said to be “explainable by chance alone,” being within the range that is determined by chance alone.
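To make the "range of plausible averages" concrete, here is a small sketch. It assumes a population standard deviation of 2.2 marks, a value chosen purely for illustration (the text does not give one), and a normal model for the sample mean; `plausible_range` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def plausible_range(mu0, sigma, n, alpha=0.05):
    """Range in which the sample mean falls with probability 1 - alpha if H0 is true."""
    z = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    half_width = z * sigma / math.sqrt(n)
    return mu0 - half_width, mu0 + half_width


# H0: population mean is 7.0; sample of 30 students; sigma = 2.2 is an assumption.
low, high = plausible_range(mu0=7.0, sigma=2.2, n=30)
print(f"plausible averages under H0: {low:.1f} to {high:.1f}")

sample_mean = 7.9                              # hypothetical observed average
reject = not (low <= sample_mean <= high)
print("reject H0" if reject else "explainable by chance alone")
```

Under these assumptions the range comes out close to the 6.2 to 7.8 used in the text, and a sample average of 7.9 would fall outside it.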

An important point to note is that we are testing the null hypothesis because there is an element of doubt about its validity. Whatever information is against the stated null hypothesis is captured in the alternative hypothesis (H1). For the above examples, the alternative hypotheses would be:

Students score an average that is not equal to 7.

The mean annual return of the open-end fund is not equal to 8% per annum.

In other words, the alternative hypothesis is a direct contradiction of the null hypothesis.

Hypothesis Testing for Investments

As an example related to financial markets, assume Alice sees that her investment strategy produces higher average returns than simply buying and holding a stock. The null hypothesis states that there is no difference between the two average returns, and Alice is inclined to believe this until she can show otherwise. Refuting the null hypothesis would require showing statistical significance, which can be assessed using a variety of tests. The alternative hypothesis would state that the investment strategy has a higher average return than a traditional buy-and-hold strategy.

The p-value is used to determine the statistical significance of the results. A p-value that is less than or equal to 0.05 is often used to indicate that there is evidence against the null hypothesis. If Alice conducts one of these tests, such as a test using the normal model, and finds that the difference between her returns and the buy-and-hold returns is significant (p-value less than or equal to 0.05), she can then reject the null hypothesis and conclude the alternative hypothesis.
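Alice's comparison could look roughly like the following. The monthly return differences are invented, the function name is hypothetical, and the one-sided test uses the normal model mentioned above; a real analysis would also need to check that assumption.

```python
import math
import statistics
from statistics import NormalDist


def one_sided_z_test(differences):
    """Test H0: mean difference == 0 against H1: mean difference > 0."""
    n = len(differences)
    mean = statistics.fmean(differences)
    se = statistics.stdev(differences) / math.sqrt(n)
    z = mean / se
    # Upper-tail probability only, because H1 is directional.
    p_value = 1 - NormalDist().cdf(z)
    return mean, z, p_value


# Hypothetical monthly differences (strategy return minus buy-and-hold), in percent.
differences = [0.5, 1.2, -0.3, 0.8, 0.1, 0.9, -0.2, 0.6, 0.4, 0.7, -0.1, 0.3] * 3

mean, z, p_value = one_sided_z_test(differences)
significant = p_value <= 0.05
print(f"mean difference = {mean:.3f}, z = {z:.2f}, significant: {significant}")
```

Because the alternative hypothesis is directional (the strategy does better), only the upper tail of the null distribution counts toward the p-value.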


- PMC5635437.1 ; 2015 Aug 25

- PMC5635437.2 ; 2016 Jul 13

- ➤ PMC5635437.3; 2016 Oct 10

Null hypothesis significance testing: a short tutorial

Cyril Pernet

1 Centre for Clinical Brain Sciences (CCBS), Neuroimaging Sciences, The University of Edinburgh, Edinburgh, UK

Version Changes

Revised. Amendments from version 2.

This v3 includes minor changes that reflect the 3rd reviewers' comments - in particular the theoretical vs. practical difference between Fisher and Neyman-Pearson. Additional information and reference is also included regarding the interpretation of p-value for low powered studies.

Abstract

Although thoroughly criticized, null hypothesis significance testing (NHST) remains the statistical method of choice used to provide evidence for an effect in the biological, biomedical and social sciences. In this short tutorial, I first summarize the concepts behind the method, distinguishing the test of significance (Fisher) from the test of acceptance (Neyman-Pearson), and point to common interpretation errors regarding the p-value. I then present the related concept of confidence intervals and again point to common interpretation errors. Finally, I discuss what should be reported in which context. The goal is to clarify concepts to avoid interpretation errors and propose reporting practices.

The Null Hypothesis Significance Testing framework

NHST is a method of statistical inference by which an experimental factor is tested against a hypothesis of no effect or no relationship based on a given observation. The method is a combination of the concepts of significance testing developed by Fisher in 1925 and of acceptance based on critical rejection regions developed by Neyman & Pearson in 1928. In the following, I first present each approach, highlighting the key differences and common misconceptions that result from their combination into the NHST framework (for a more mathematical comparison, along with the Bayesian method, see Christensen, 2005). I next present the related concept of confidence intervals. I finish by discussing practical aspects of using NHST and reporting practice.

Fisher, significance testing, and the p-value

The method developed by Fisher (Fisher, 1934; Fisher, 1955; Fisher, 1959) allows one to compute the probability of observing a result at least as extreme as a test statistic (e.g. t value), assuming the null hypothesis of no effect is true. This probability or p-value (1) reflects the conditional probability of achieving the observed outcome or larger, p(Obs≥t|H0), and (2) is therefore a cumulative probability rather than a point estimate. It is equal to the area under the null probability distribution curve from the observed test statistic to the tail of the null distribution (Turkheimer et al., 2004). The approach proposed is one of ‘proof by contradiction’ (Christensen, 2005): we pose the null model and test whether the data conform to it.

In practice, it is recommended to set a level of significance (a theoretical p-value) that acts as a reference point to identify significant results, that is, to identify results that differ from the null hypothesis of no effect. Fisher recommended using p=0.05 to judge whether an effect is significant or not, as it is roughly two standard deviations away from the mean for the normal distribution (Fisher, 1934 page 45: ‘The value for which p=.05, or 1 in 20, is 1.96 or nearly 2; it is convenient to take this point as a limit in judging whether a deviation is to be considered significant or not’). A key aspect of Fisher’s theory is that only the null hypothesis is tested, and therefore p-values are meant to be used in a graded manner to decide whether the evidence is worth additional investigation and/or replication (Fisher, 1971 page 13: ‘it is open to the experimenter to be more or less exacting in respect of the smallness of the probability he would require […]’ and ‘no isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon’). How small the level of significance should be is thus left to researchers.
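As a quick numerical check of the p-value as a tail area, the sketch below computes p(Obs≥t|H0) for a standard normal null distribution and confirms Fisher's remark that p=.05 corresponds to a deviation of about 1.96. The standard normal null and the function names are assumptions made for illustration only.

```python
from statistics import NormalDist

null = NormalDist(mu=0.0, sigma=1.0)   # assumed null distribution of the test statistic


def upper_tail_p(t_obs):
    """Area under the null curve from the observed statistic to the upper tail."""
    return 1 - null.cdf(t_obs)


def two_sided_p(t_obs):
    """Area in both tails beyond |t_obs|."""
    return 2 * (1 - null.cdf(abs(t_obs)))


print(f"one-sided p at 1.96: {upper_tail_p(1.96):.4f}")
print(f"two-sided p at 1.96: {two_sided_p(1.96):.4f}")
```

The two-sided area beyond 1.96 is the 1 in 20 that Fisher refers to; the one-sided area is half of that.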

What is not a p-value? Common mistakes

The p-value is not an indication of the strength or magnitude of an effect. Any interpretation of the p-value in relation to the effect under study (strength, reliability, probability) is wrong, since p-values are conditioned on H0. In addition, while p-values are randomly distributed (if all the assumptions of the test are met) when there is no effect, their distribution depends on both the population effect size and the number of participants, making it impossible to infer the strength of an effect from them.
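A small simulation can illustrate this point. It is a sketch under simplifying assumptions: normally distributed data with a known standard deviation of 1, a two-sided z-test, and arbitrary values for the effect size, sample sizes and number of repetitions.

```python
import math
import random
from statistics import NormalDist, fmean


def simulate_p_values(effect, n, reps, seed=0):
    """p-values of a two-sided z-test (known sigma = 1) over repeated samples."""
    rng = random.Random(seed)
    null = NormalDist()
    p_values = []
    for _ in range(reps):
        sample = [rng.gauss(effect, 1.0) for _ in range(n)]
        z = fmean(sample) * math.sqrt(n)
        p_values.append(2 * (1 - null.cdf(abs(z))))
    return p_values


def share_significant(p_values, alpha=0.05):
    return sum(p <= alpha for p in p_values) / len(p_values)


no_effect = share_significant(simulate_p_values(effect=0.0, n=20, reps=2000))
small_n = share_significant(simulate_p_values(effect=0.3, n=20, reps=2000))
large_n = share_significant(simulate_p_values(effect=0.3, n=100, reps=2000))
print(no_effect, small_n, large_n)
```

With no effect, roughly 5% of p-values fall below 0.05, as expected when p-values are spread evenly between 0 and 1. With the same true effect of 0.3, the share of small p-values changes markedly with the sample size, which is why a single p-value says little about the size of the effect.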

Similarly, 1-p is not the probability of replicating an effect. Often, a small value of p is considered to mean a strong likelihood of getting the same results on another try, but again this cannot be obtained because the p-value is not informative about the effect itself (Miller, 2009). Because the p-value depends on the number of subjects, it can only be used in high powered studies to interpret results. In low powered studies (typically with a small number of subjects), the p-value has a large variance across repeated samples, making it unreliable for estimating replication (Halsey et al., 2015).

A (small) p-value is not an indication favouring a given hypothesis . Because a low p-value only indicates a misfit of the null hypothesis to the data, it cannot be taken as evidence in favour of a specific alternative hypothesis more than any other possible alternatives such as measurement error and selection bias ( Gelman, 2013 ). Some authors have even argued that the more (a priori) implausible the alternative hypothesis, the greater the chance that a finding is a false alarm ( Krzywinski & Altman, 2013 ; Nuzzo, 2014 ).

The p-value is not the probability of the null hypothesis being true, p(H0) (Krzywinski & Altman, 2013). This common misconception arises from a confusion between the probability of an observation given the null, p(Obs≥t|H0), and the probability of the null given an observation, p(H0|Obs≥t), that is then taken as an indication for p(H0) (see Nickerson, 2000).

Neyman-Pearson, hypothesis testing, and the α-value

Neyman & Pearson (1933) proposed a framework of statistical inference for applied decision making and quality control. In such a framework, two hypotheses are proposed: the null hypothesis of no effect and the alternative hypothesis of an effect, along with a control of the long run probabilities of making errors. The first key concept in this approach is the establishment of an alternative hypothesis along with an a priori effect size. This differs markedly from Fisher, who proposed a general approach for scientific inference conditioned on the null hypothesis only. The second key concept is the control of error rates. Neyman & Pearson (1928) introduced the notion of critical intervals, therefore dichotomizing the space of possible observations into correct vs. incorrect zones. This dichotomization allows one to distinguish correct results (rejecting H0 when there is an effect and not rejecting H0 when there is no effect) from errors (rejecting H0 when there is no effect, the type I error, and not rejecting H0 when there is an effect, the type II error). In this context, alpha is the probability of committing a Type I error in the long run. Beta is the probability of committing a Type II error in the long run.

The (theoretical) difference in terms of hypothesis testing between Fisher and Neyman-Pearson is illustrated in Figure 1. In the first case, we choose a level of significance for observed data of 5%, and compute the p-value. If the p-value is below the level of significance, it is used to reject H0. In the second case, we set a critical interval based on the a priori effect size and error rates. If an observed statistic value is below or above the critical values (the bounds of the confidence region), it is deemed significantly different from H0. In the NHST framework, the level of significance is (in practice) assimilated to the alpha level, which appears as a simple decision rule: if the p-value is less than or equal to alpha, the null is rejected. It is however a common mistake to assimilate these two concepts. The level of significance set for a given sample is not the same as the frequency of acceptance alpha found on repeated sampling because alpha (a point estimate) is meant to reflect the long run probability whilst the p-value (a cumulative estimate) reflects the current probability (Fisher, 1955; Hubbard & Bayarri, 2003).

The figure was prepared with G-power for a one-sided one-sample t-test, with a sample size of 32 subjects, an effect size of 0.45, and error rates alpha=0.049 and beta=0.80. In Fisher’s procedure, only the nil-hypothesis is posed, and the observed p-value is compared to an a priori level of significance. If the observed p-value is below this level (here p=0.05), one rejects H0. In Neyman-Pearson’s procedure, the null and alternative hypotheses are specified along with an a priori level of acceptance. If the observed statistical value is outside the critical region (here [-∞ +1.69]), one rejects H0.
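The relation between alpha, beta and the critical value can be sketched numerically. The example below reuses the sample size and effect size from the caption, but it swaps the one-sample t-test for a normal (z) approximation, so the critical value differs slightly from the 1.69 quoted there; the function name is hypothetical.

```python
import math
from statistics import NormalDist


def one_sided_power(effect_size, n, alpha):
    """Critical value and power of a one-sided z-test (normal approximation)."""
    null = NormalDist()
    z_crit = null.inv_cdf(1 - alpha)         # rejection region: z > z_crit
    shift = effect_size * math.sqrt(n)       # mean of z when the effect is real
    power = 1 - null.cdf(z_crit - shift)     # P(reject H0 | effect present)
    return z_crit, power


z_crit, power = one_sided_power(effect_size=0.45, n=32, alpha=0.05)
beta = 1 - power                             # long-run Type II error rate
print(f"critical value = {z_crit:.2f}, power = {power:.2f}, beta = {beta:.2f}")
```

Under this approximation the probability of rejecting H0 when the effect is present comes out at a little over 0.8, and the long-run Type II error rate is its complement.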

Acceptance or rejection of H0?

The acceptance level α can also be viewed as the maximum probability that a test statistic falls into the rejection region when the null hypothesis is true (Johnson, 2013). Therefore, one can only reject the null hypothesis if the test statistic falls into the critical region(s), or fail to reject this hypothesis. In the latter case, all we can say is that no significant effect was observed, but one cannot conclude that the null hypothesis is true. This is another common mistake in using NHST: there is a profound difference between accepting the null hypothesis and simply failing to reject it (Killeen, 2005). By failing to reject, we simply continue to assume that H0 is true, which implies that one cannot argue against a theory from a non-significant result (absence of evidence is not evidence of absence). To accept the null hypothesis, tests of equivalence (Walker & Nowacki, 2011) or Bayesian approaches (Dienes, 2014; Kruschke, 2011) must be used.
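As a rough illustration of a test of equivalence, the sketch below implements the "two one-sided tests" (TOST) idea with a normal approximation. The data, the equivalence bounds and the function name are all invented for the example; it is not taken from the cited papers.

```python
import math
import statistics
from statistics import NormalDist


def tost_equivalence(sample, low, high, alpha=0.05):
    """Two one-sided z-tests: is the mean credibly inside (low, high)?"""
    n = len(sample)
    mean = statistics.fmean(sample)
    se = statistics.stdev(sample) / math.sqrt(n)
    null = NormalDist()
    p_lower = 1 - null.cdf((mean - low) / se)   # tests H0: mean <= low
    p_upper = null.cdf((mean - high) / se)      # tests H0: mean >= high
    p_value = max(p_lower, p_upper)             # both one-sided tests must reject
    return mean, p_value, p_value <= alpha


# Hypothetical differences between two conditions, all close to zero.
diffs = [0.1, -0.2, 0.05, 0.0, -0.1, 0.15, -0.05, 0.1, -0.15, 0.05] * 4

mean, p_value, equivalent = tost_equivalence(diffs, low=-0.1, high=0.1)
_, _, narrow_equivalent = tost_equivalence(diffs, low=-0.01, high=0.01)
print(equivalent, narrow_equivalent)
```

Here the null hypothesis is that the effect lies outside the chosen bounds, so rejecting it supports equivalence. The conclusion depends on how the bounds are chosen: the same data support equivalence within ±0.1 but not within ±0.01.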

Confidence intervals

Confidence intervals (CI) are constructs that fail to cover the true value at a rate of alpha, the Type I error rate (Morey & Rouder, 2011), and therefore indicate whether observed values can be rejected by a (two tailed) test with a given alpha. CI have been advocated as alternatives to p-values because (i) they allow judging the statistical significance and (ii) provide estimates of effect size. Assuming the CI (a)symmetry and width are correct (but see Wilcox, 2012), they also give some indication about the likelihood that a similar value can be observed in future studies. For future studies of the same sample size, 95% CI give about 83% chance of replication success (Cumming & Maillardet, 2006). If sample sizes differ between studies, however, CI do not guarantee any a priori coverage.

Although CI provide more information, they are not less subject to interpretation errors (see Savalei & Dunn, 2015 for a review). The most common mistake is to interpret CI as the probability that a parameter (e.g. the population mean) will fall in that interval X% of the time. The correct interpretation is that, for repeated measurements with the same sample sizes, taken from the same population, X% of the time the CI obtained will contain the true parameter value (Tan & Tan, 2010). The alpha value has the same interpretation as when testing against H0, i.e. we accept that 1-alpha CI are wrong alpha percent of the time in the long run. This implies that CI do not allow one to make strong statements about the parameter of interest (e.g. the mean difference) or about H1 (Hoekstra et al., 2014). To make a statement about the probability of a parameter of interest (e.g. the probability of the mean), Bayesian intervals must be used.
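The long-run interpretation can be checked with a short simulation. This is a sketch under simplifying assumptions (normal data, known standard deviation, arbitrary illustrative values for the true mean, sample size and number of repetitions), not a general recipe.

```python
import math
import random
from statistics import NormalDist, fmean


def ci_coverage(true_mean, sigma, n, reps, level=0.95, seed=1):
    """Share of repeated samples whose CI contains the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * sigma / math.sqrt(n)      # known-sigma interval
    hits = 0
    for _ in range(reps):
        sample_mean = fmean([rng.gauss(true_mean, sigma) for _ in range(n)])
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / reps


coverage = ci_coverage(true_mean=7.0, sigma=2.2, n=30, reps=4000)
print(f"share of intervals containing the true mean: {coverage:.3f}")
```

Each individual interval either contains the true mean or it does not; the 95% describes how often the procedure succeeds over many repetitions, which is what the simulated share approximates.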

The (correct) use of NHST

NHST has always been criticized, and yet is still used every day in scientific reports (Nickerson, 2000). One question to ask oneself is: what is the goal of the scientific experiment at hand? If the goal is to establish a discrepancy with the null hypothesis and/or establish a pattern of order, because both require ruling out equivalence, then NHST is a good tool (Frick, 1996; Walker & Nowacki, 2011). If the goal is to test the presence of an effect and/or establish some quantitative values related to an effect, then NHST is not the method of choice since testing is conditioned on H0.

While a Bayesian analysis is suited to estimate the probability that a hypothesis is correct, like NHST, it does not prove a theory by itself, but adds to its plausibility (Lindley, 2000). No matter what testing procedure is used and how strong results are, Fisher (1959, p13) reminds us that ‘[…] no isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon’. Similarly, the recent statement of the American Statistical Association (Wasserstein & Lazar, 2016) makes it clear that conclusions should be based on the researchers’ understanding of the problem in context, along with all summary data and tests, and that no single value (be it a p-value, a Bayes factor or anything else) can be used to support or invalidate a theory.

What to report and how?

Considering that quantitative reports will always have more information content than binary (significant or not) reports, we can always argue that raw and/or normalized effect size, confidence intervals, or Bayes factor must be reported. Reporting everything can however hinder the communication of the main result(s), and we should aim at giving only the information needed, at least in the core of a manuscript. Here I propose to adopt optimal reporting in the result section to keep the message clear, but have detailed supplementary material. When the hypothesis is about the presence/absence or order of an effect, and provided that a study has sufficient power, NHST is appropriate and it is sufficient to report in the text the actual p-value, since it conveys the information needed to rule out equivalence. When the hypothesis and/or the discussion involve some quantitative value, and because p-values do not inform on the effect, it is essential to report on effect sizes (Lakens, 2013), preferably accompanied by confidence or credible intervals. The reasoning is simply that one cannot predict and/or discuss quantities without accounting for variability. For the reader to understand and fully appreciate the results, nothing else is needed.
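A minimal example of reporting an effect size together with an interval might look like this. The marks are invented, the helper name is hypothetical, Cohen's d is computed in its simple one-sample form, and the interval uses a normal approximation that is only rough for a sample this small.

```python
import math
import statistics
from statistics import NormalDist


def effect_size_report(sample, mu0, level=0.95):
    """Mean, standard deviation, one-sample Cohen's d and an approximate CI for the mean."""
    n = len(sample)
    mean = statistics.fmean(sample)
    sd = statistics.stdev(sample)
    d = (mean - mu0) / sd                         # standardized effect size
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * sd / math.sqrt(n)            # normal approximation
    return {"n": n, "mean": mean, "sd": sd, "d": d,
            "ci": (mean - half_width, mean + half_width)}


marks = [6, 7, 8, 7, 9, 8, 7, 6, 8, 9, 7, 8]      # hypothetical exam marks
report = effect_size_report(marks, mu0=7.0)
print(report)
```

Reporting the mean, its variability and the standardized effect lets readers judge the size of the effect and reuse the numbers, which a bare "significant" or "not significant" does not allow.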

Because scientific progress is obtained by cumulating evidence (Rosenthal, 1991), scientists should also consider the secondary use of the data. With today’s electronic articles, there are no reasons for not including all of the derived data: mean, standard deviations, effect size, CI, Bayes factor should always be included as supplementary tables (or even better, also share raw data). It is also essential to report the context in which tests were performed, that is, to report all of the tests performed (all t, F, p values), because of the increased Type I error rate due to selective reporting (multiple comparisons and p-hacking problems; Ioannidis, 2005). Providing all of this information allows other researchers (i) to directly and effectively compare their results in quantitative terms (replication of effects beyond significance, Open Science Collaboration, 2015), (ii) to compute power for future studies (Lakens & Evers, 2014), and (iii) to aggregate results for meta-analyses whilst minimizing publication bias (van Assen et al., 2014).

[version 3; referees: 1 approved

Funding Statement

The author(s) declared that no grants were involved in supporting this work.

- Christensen R: Testing Fisher, Neyman, Pearson, and Bayes. The American Statistician. 2005; 59(2):121–126. 10.1198/000313005X20871

- Cumming G, Maillardet R: Confidence intervals and replication: Where will the next mean fall? Psychological Methods. 2006; 11(3):217–227. 10.1037/1082-989X.11.3.217

- Dienes Z: Using Bayes to get the most out of non-significant results. Front Psychol. 2014; 5:781. 10.3389/fpsyg.2014.00781

- Fisher RA: Statistical Methods for Research Workers. (5th ed.). Edinburgh, UK: Oliver and Boyd. 1934.

- Fisher RA: Statistical Methods and Scientific Induction. Journal of the Royal Statistical Society, Series B. 1955; 17(1):69–78.

- Fisher RA: Statistical methods and scientific inference. (2nd ed.). New York: Hafner Publishing. 1959.

- Fisher RA: The Design of Experiments. New York: Hafner Publishing Company. 1971.

- Frick RW: The appropriate use of null hypothesis testing. Psychol Methods. 1996; 1(4):379–390. 10.1037/1082-989X.1.4.379

- Gelman A: P values and statistical practice. Epidemiology. 2013; 24(1):69–72. 10.1097/EDE.0b013e31827886f7

- Halsey LG, Curran-Everett D, Vowler SL, et al.: The fickle P value generates irreproducible results. Nat Methods. 2015; 12(3):179–85. 10.1038/nmeth.3288

- Hoekstra R, Morey RD, Rouder JN, et al.: Robust misinterpretation of confidence intervals. Psychon Bull Rev. 2014; 21(5):1157–1164. 10.3758/s13423-013-0572-3

- Hubbard R, Bayarri MJ: Confusion over measures of evidence (p’s) versus errors ([alpha]’s) in classical statistical testing. The American Statistician. 2003; 57(3):171–182. 10.1198/0003130031856

- Ioannidis JP: Why most published research findings are false. PLoS Med. 2005; 2(8):e124. 10.1371/journal.pmed.0020124

- Johnson VE: Revised standards for statistical evidence. Proc Natl Acad Sci U S A. 2013; 110(48):19313–19317. 10.1073/pnas.1313476110

- Killeen PR: An alternative to null-hypothesis significance tests. Psychol Sci. 2005; 16(5):345–353. 10.1111/j.0956-7976.2005.01538.x

- Kruschke JK: Bayesian Assessment of Null Values Via Parameter Estimation and Model Comparison. Perspect Psychol Sci. 2011; 6(3):299–312. 10.1177/1745691611406925

- Krzywinski M, Altman N: Points of significance: Significance, P values and t-tests. Nat Methods. 2013; 10(11):1041–1042. 10.1038/nmeth.2698

- Lakens D: Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Front Psychol. 2013; 4:863. 10.3389/fpsyg.2013.00863

- Lakens D, Evers ER: Sailing From the Seas of Chaos Into the Corridor of Stability: Practical Recommendations to Increase the Informational Value of Studies. Perspect Psychol Sci. 2014; 9(3):278–292. 10.1177/1745691614528520

- Lindley D: The philosophy of statistics. Journal of the Royal Statistical Society. 2000; 49(3):293–337. 10.1111/1467-9884.00238

- Miller J: What is the probability of replicating a statistically significant effect? Psychon Bull Rev. 2009; 16(4):617–640. 10.3758/PBR.16.4.617

- Morey RD, Rouder JN: Bayes factor approaches for testing interval null hypotheses. Psychol Methods. 2011; 16(4):406–419. 10.1037/a0024377

- Neyman J, Pearson ES: On the Use and Interpretation of Certain Test Criteria for Purposes of Statistical Inference: Part I. Biometrika. 1928; 20A(1/2):175–240.

- Neyman J, Pearson ES: On the problem of the most efficient tests of statistical hypotheses. Philos Trans R Soc Lond Ser A. 1933; 231(694–706):289–337. 10.1098/rsta.1933.0009

- Nickerson RS: Null hypothesis significance testing: a review of an old and continuing controversy. Psychol Methods. 2000; 5(2):241–301. 10.1037/1082-989X.5.2.241

- Nuzzo R: Scientific method: statistical errors. Nature. 2014; 506(7487):150–152. 10.1038/506150a

- Open Science Collaboration: Estimating the reproducibility of psychological science. Science. 2015; 349(6251):aac4716. 10.1126/science.aac4716

- Rosenthal R: Cumulating psychology: an appreciation of Donald T. Campbell. Psychol Sci. 1991; 2(4):213–221. 10.1111/j.1467-9280.1991.tb00138.x

- Savalei V, Dunn E: Is the call to abandon p-values the red herring of the replicability crisis? Front Psychol. 2015; 6:245. 10.3389/fpsyg.2015.00245

- Tan SH, Tan SB: The Correct Interpretation of Confidence Intervals. Proceedings of Singapore Healthcare. 2010; 19(3):276–278. 10.1177/201010581001900316

- Turkheimer FE, Aston JA, Cunningham VJ: On the logic of hypothesis testing in functional imaging. Eur J Nucl Med Mol Imaging. 2004; 31(5):725–732. 10.1007/s00259-003-1387-7

- van Assen MA, van Aert RC, Nuijten MB, et al.: Why Publishing Everything Is More Effective than Selective Publishing of Statistically Significant Results. PLoS One. 2014; 9(1):e84896. 10.1371/journal.pone.0084896

- Walker E, Nowacki AS: Understanding equivalence and noninferiority testing. J Gen Intern Med. 2011; 26(2):192–196. 10.1007/s11606-010-1513-8

- Wasserstein RL, Lazar NA: The ASA’s Statement on p-Values: Context, Process, and Purpose. The American Statistician. 2016; 70(2):129–133. 10.1080/00031305.2016.1154108

- Wilcox R: Introduction to Robust Estimation and Hypothesis Testing. Edition 3, Academic Press, Elsevier: Oxford, UK. 2012. ISBN: 978-0-12-386983-8.

Referee response for version 3

Dorothy Vera Margaret Bishop

1 Department of Experimental Psychology, University of Oxford, Oxford, UK

I can see from the history of this paper that the author has already been very responsive to reviewer comments, and that the process of revising has now been quite protracted.

That makes me reluctant to suggest much more, but I do see potential here for making the paper more impactful. So my overall view is that, once a few typos are fixed (see below), this could be published as is, but I think there is an issue with the potential readership and that further revision could overcome this.

I suspect my take on this is rather different from other reviewers, as I do not regard myself as a statistics expert, though I am on the more quantitative end of the continuum of psychologists and I try to keep up to date. I think I am quite close to the target readership , insofar as I am someone who was taught about statistics ages ago and uses stats a lot, but never got adequate training in the kinds of topic covered by this paper. The fact that I am aware of controversies around the interpretation of confidence intervals etc is simply because I follow some discussions of this on social media. I am therefore very interested to have a clear account of these issues.

This paper contains helpful information for someone in this position, but it is not always clear, and I felt the relevance of some of the content was uncertain. So here are some recommendations:

- As one previous reviewer noted, it’s questionable that there is a need for a tutorial introduction, and the limited length of this article does not lend itself to a full explanation. So it might be better to just focus on explaining as clearly as possible the problems people have had in interpreting key concepts. I think a title that made it clear this was the content would be more appealing than the current one.

- P 3, col 1, para 3, last sentence. Although statisticians always emphasise the arbitrary nature of p < .05, we all know that in practice authors who use other values are likely to have their analyses queried. I wondered whether it would be useful here to note that in some disciplines different cutoffs are traditional, e.g. particle physics. Or you could cite David Colquhoun’s paper in which he recommends using p < .001 ( http://rsos.royalsocietypublishing.org/content/1/3/140216) - just to be clear that the traditional p < .05 has been challenged.

What I can’t work out is how you would explain the alpha from Neyman-Pearson in the same way (though I can see from Figure 1 that with N-P you could test an alternative hypothesis, such as the idea that the coin would be heads 75% of the time).

Rather than ‘By failing to reject, we simply continue to assume that H0 is true, which implies that one cannot….’, I suggest ‘In failing to reject, we do not assume that H0 is true; one cannot argue against a theory from a non-significant result.’

I felt most readers would be interested to read about tests of equivalence and Bayesian approaches, but many would be unfamiliar with these and might like to see an example of how they work in practice – if space permitted.

- Confidence intervals: I simply could not understand the first sentence – I wondered what was meant by ‘builds’ here. I understand about difficulties in comparing CI across studies when sample sizes differ, but I did not find the last sentence on p 4 easy to understand.

- P 5: The sentence starting: ‘The alpha value has the same interpretation’ was also hard to understand, especially the term ‘1-alpha CI’. Here too I felt some concrete illustration might be helpful to the reader. And again, I also found the reference to Bayesian intervals tantalising – I think many readers won’t know how to compute these and something like a figure comparing a traditional CI with a Bayesian interval and giving a source for those who want to read on would be very helpful. The reference to ‘credible intervals’ in the penultimate paragraph is very unclear and needs a supporting reference – most readers will not be familiar with this concept.

P 3, col 1, para 2, line 2; “allows us to compute”

P 3, col 2, para 2, ‘probability of replicating’

P 3, col 2, para 2, line 4 ‘informative about’

P 3, col 2, para 4, line 2 delete ‘of’

P 3, col 2, para 5, line 9 – ‘conditioned’ is either wrong or too technical here: would ‘based’ be acceptable as alternative wording

P 3, col 2, para 5, line 13 ‘This dichotomisation allows one to distinguish’

P 3, col 2, para 5, last sentence, delete ‘Alternatively’.

P 3, col 2, last para line 2 ‘first’

P 4, col 2, para 2, last sentence is hard to understand; not sure if this is better: ‘If sample sizes differ between studies, the distribution of CIs cannot be specified a priori’

P 5, col 1, para 2, ‘a pattern of order’ – I did not understand what was meant by this

P 5, col 1, para 2, last sentence unclear: possible rewording: ‘If the goal is to test the size of an effect then NHST is not the method of choice, since testing can only reject the null hypothesis.’ (??)

P 5, col 1, para 3, line 1 delete ‘that’

P 5, col 1, para 3, line 3 ‘on’ -> ‘by’

P 5, col 2, para 1, line 4 , rather than ‘Here I propose to adopt’ I suggest ‘I recommend adopting’

P 5, col 2, para 1, line 13 ‘with’ -> ‘by’

P 5, col 2, para 1 – recommend deleting last sentence

P 5, col 2, para 2, line 2 ‘consider’ -> ‘anticipate’

P 5, col 2, para 2, delete ‘should always be included’

P 5, col 2, para 2, ‘type one’ -> ‘Type I’

I have read this submission. I believe that I have an appropriate level of expertise to confirm that it is of an acceptable scientific standard, however I have significant reservations, as outlined above.

The University of Edinburgh, UK

I wondered about changing the focus slightly and modifying the title to reflect this to say something like: Null hypothesis significance testing: a guide to commonly misunderstood concepts and recommendations for good practice

Thank you for the suggestion – you indeed saw the intention behind the ‘tutorial’ style of the paper.

- P 3, col 1, para 3, last sentence. Although statisticians always emphasise the arbitrary nature of p < .05, we all know that in practice authors who use other values are likely to have their analyses queried. I wondered whether it would be useful here to note that in some disciplines different cutoffs are traditional, e.g. particle physics. Or you could cite David Colquhoun’s paper in which he recommends using p < .001 ( http://rsos.royalsocietypublishing.org/content/1/3/140216) - just to be clear that the traditional p < .05 has been challenged.

I have added a sentence on this citing Colquhoun 2014 and the new Benjamin 2017 on using .005.
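To show how much the conclusion can depend on the chosen cutoff, here is a minimal sketch comparing one p-value against the thresholds mentioned in this exchange. The "5 sigma" entry is an assumption added for illustration: it treats the particle physics convention as a one-sided tail area of a standard normal. The dictionary labels and function names are hypothetical.

```python
from math import erfc, sqrt

def one_sided_p_from_sigma(z):
    """Upper-tail probability of a standard normal beyond z standard deviations."""
    return 0.5 * erfc(z / sqrt(2))

# Cutoffs discussed above; the 5 sigma value is computed, not quoted from a source.
THRESHOLDS = {
    "p < .05 (traditional)": 0.05,
    "p < .005": 0.005,
    "p < .001": 0.001,
    "5 sigma (assumed one-sided)": one_sided_p_from_sigma(5.0),
}

def passes(p_value):
    """Map each threshold label to whether p_value falls below it."""
    return {label: p_value < cutoff for label, cutoff in THRESHOLDS.items()}

for label, ok in passes(0.01).items():
    print(f"{label:30s} {'significant' if ok else 'not significant'}")
```

The same result (here p = .01) is "significant" under the traditional cutoff and not under any of the stricter ones.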

I agree that this point is always hard to appreciate, especially because it seems like in practice it makes little difference. I added a paragraph but using reaction times rather than a coin toss – thanks for the suggestion.

Added an example based on new table 1, following figure 1 – giving CI, equivalence tests and Bayes Factor (with refs to easy to use tools)

Changed ‘builds’ to ‘constructs’ (this simply means they are something we build) and added the implication: because probability coverage is not guaranteed when sample sizes change, we cannot compare CIs.

I changed ‘i.e. we accept that 1-alpha CI are wrong in alpha percent of the times in the long run’ to ‘e.g. a 95% CI is wrong in 5% of the times in the long run (i.e. if we repeat the experiment many times).’ For Bayesian intervals I simply re-cited Morey & Rouder, 2011.
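The long-run reading of a 95% CI can be checked by simulation. The sketch below is an illustration under simplifying assumptions that are not in the manuscript: normally distributed data, a known standard deviation (so a z-interval is used), and arbitrary values for the mean, standard deviation and sample size.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def ci_miss_rate(true_mean=100.0, sd=15.0, n=30, level=0.95, reps=10_000, seed=0):
    """Share of repeated experiments whose CI fails to contain the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z * sd / sqrt(n)              # known-sd (z) interval, an assumption
    misses = 0
    for _ in range(reps):
        sample_mean = fmean(rng.gauss(true_mean, sd) for _ in range(n))
        # The interval sample_mean +/- half_width misses when the true mean lies outside it
        if abs(sample_mean - true_mean) > half_width:
            misses += 1
    return misses / reps

miss_rate = ci_miss_rate()
print(f"Share of 95% CIs that miss the true mean: {miss_rate:.3f}")
```

Across many repetitions the share of intervals that miss should sit close to 5%, which is the sense in which "a 95% CI is wrong in 5% of the times in the long run".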

It is not that the CI cannot be specified; it is that the interval is not predictive of anything anymore! I changed it to ‘If sample sizes, however, differ between studies, there is no warranty that a CI from one study will be true at the rate alpha in a different study, which implies that CI cannot be compared across studies at this is rarely the same sample sizes’

I added (i.e. establish that A > B) – we test that conditions are ordered, but without further specification of the probability of that effect nor its size

Yes it works – thx

P 5, col 2, para 2, ‘type one’ -> ‘Type I’

Typos fixed, and suggestions accepted – thanks for that.

Stephen J. Senn

Luxembourg Institute of Health, Strassen, L-1445, Luxembourg

The revisions are OK for me, and I have changed my status to Approved.

I have read this submission. I believe that I have an appropriate level of expertise to confirm that it is of an acceptable scientific standard.

Referee response for version 2

On the whole I think that this article is reasonable, my main reservation being that I have my doubts on whether the literature needs yet another tutorial on this subject.

A further reservation I have is that the author, following others, stresses what in my mind is a relatively unimportant distinction between the Fisherian and Neyman-Pearson (NP) approaches. The distinction stressed by many is that the NP approach leads to a dichotomy accept/reject based on probabilities established in advance, whereas the Fisherian approach uses tail area probabilities calculated from the observed statistic. I see this as being unimportant and not even true. Unless one considers that the person carrying out a hypothesis test (original tester) is mandated to come to a conclusion on behalf of all scientific posterity, then one must accept that any remote scientist can come to his or her conclusion depending on the personal type I error favoured. To operate the results of an NP test carried out by the original tester, the remote scientist then needs to know the p-value. The type I error rate is then compared to this to come to a personal accept or reject decision (1). In fact Lehmann (2), who was an important developer of and proponent of the NP system, describes exactly this approach as being good practice. (See Testing Statistical Hypotheses, 2nd edition P70). Thus using tail-area probabilities calculated from the observed statistics does not constitute an operational difference between the two systems.
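The operational point here, that a reported p-value lets each reader apply a personal type I error rate, can be written in a few lines. This is a minimal sketch; the function name, the reported p-value and the readers' alpha levels are all hypothetical.

```python
def np_decision(p_value, personal_alpha):
    """Reject H0 exactly when the reported p-value does not exceed the reader's alpha."""
    return "reject H0" if p_value <= personal_alpha else "do not reject H0"

reported_p = 0.02  # hypothetical p-value published by the original tester

# Hypothetical remote scientists, each with a preferred type I error rate
for reader, alpha in [("reader A", 0.05), ("reader B", 0.01)]:
    print(f"{reader} (alpha = {alpha}): {np_decision(reported_p, alpha)}")
```

Both readers use the same published number and reach different accept/reject decisions, without the original tester deciding on their behalf.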

A more important distinction between the Fisherian and NP systems is that the former does not use alternative hypotheses(3). Fisher's opinion was that the null hypothesis was more primitive than the test statistic but that the test statistic was more primitive than the alternative hypothesis. Thus, alternative hypotheses could not be used to justify choice of test statistic. Only experience could do that.

Further distinctions between the NP and Fisherian approach are to do with conditioning and whether a null hypothesis can ever be accepted.

I have one minor quibble about terminology. As far as I can see, the author uses the usual term 'null hypothesis' and the eccentric term 'nil hypothesis' interchangeably. It would be simpler if the latter were abandoned.

Referee response for version 1

Marcel A. L. M. van Assen

Department of Methodology and Statistics, Tilburg University, Tilburg, Netherlands

Null hypothesis significance testing (NHST) is a difficult topic, with misunderstandings arising easily. Many texts, including basic statistics books, deal with the topic, and attempt to explain it to students and anyone else interested. I would refer to a good basic textbook for a detailed explanation of NHST, or to a specialized article for an explanation of the background of NHST. So, what is the added value of a new text on NHST? In any case, the added value should be described at the start of this text. Moreover, the topic is so delicate and difficult that errors, misinterpretations, and disagreements are easy. I attempted to show this by commenting on many sentences in the text.

Abstract: “null hypothesis significance testing is the statistical method of choice in biological, biomedical and social sciences to investigate if an effect is likely”. No, NHST is the method to test the hypothesis of no effect.

Intro: “Null hypothesis significance testing (NHST) is a method of statistical inference by which an observation is tested against a hypothesis of no effect or no relationship.” What is an ‘observation’? NHST is difficult to describe in one sentence, particularly here. I would skip this sentence entirely, here.

Section on Fisher; also explain the one-tailed test.

Section on Fisher; p(Obs|H0) does not reflect the verbal definition (the ‘or more extreme’ part).
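The gap between p(Obs|H0) and the "or more extreme" definition, and between one-tailed and two-tailed tests, shows up clearly in a small exact calculation. The numbers below (8 heads in 10 tosses of a fair coin) are an assumed example, not one taken from the manuscript.

```python
from math import comb

def binom_pmf(n, k, p=0.5):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n, observed = 10, 8  # assumed example: 8 heads in 10 tosses, H0: fair coin

# Probability of exactly the observed result under H0: this is NOT the p-value
point_probability = binom_pmf(n, observed)

# One-tailed p-value: observed result or anything more extreme in one direction
one_tailed_p = sum(binom_pmf(n, k) for k in range(observed, n + 1))

# Two-tailed p-value; doubling is valid here because the null distribution is symmetric
two_tailed_p = min(1.0, 2 * one_tailed_p)

print(f"P(X = 8 | H0)         = {point_probability:.4f}")
print(f"one-tailed  P(X >= 8) = {one_tailed_p:.4f}")
print(f"two-tailed p-value    = {two_tailed_p:.4f}")
```

In this example the three quantities are 45/1024, 56/1024 and 112/1024, so the point probability falls below .05 while both p-values do not.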

Section on Fisher; use a reference and citation to Fisher’s interpretation of the p-value

Section on Fisher; “This was however only intended to be used as an indication that there is something in the data that deserves further investigation. The reason for this is that only H0 is tested whilst the effect under study is not itself being investigated.” First sentence, can you give a reference? Many people say a lot about Fisher’s intentions, but the good man is dead and cannot reply… Second sentence is a bit awkward, because the effect is investigated in a way, by testing the H0.

Section on p-value; Layout and structure can be improved greatly, by first again stating what the p-value is, and then statement by statement, what it is not, using separate lines for each statement. Consider adding that the p-value is randomly distributed under H0 (if all the assumptions of the test are met), and that under H1 the p-value is a function of population effect size and N; the larger each is, the smaller the p-value generally is.
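The behaviour of the p-value under H0 and H1 can be illustrated by simulation. The sketch below reads "randomly distributed under H0" as the standard result that p-values are uniformly distributed when H0 is true and the test statistic is continuous. It assumes a two-sided z-test with known standard deviation 1; the effect size of 0.5 and the sample sizes are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist, median

STD_NORMAL = NormalDist()

def simulated_p_values(effect, n, reps, rng):
    """Two-sided z-test p-values for H0: mean = 0, with known sd = 1."""
    p_values = []
    for _ in range(reps):
        # Draw the sample mean directly from its sampling distribution N(effect, 1/sqrt(n))
        sample_mean = rng.gauss(effect, 1 / sqrt(n))
        z = sample_mean * sqrt(n)
        p_values.append(2 * (1 - STD_NORMAL.cdf(abs(z))))
    return p_values

rng = random.Random(1)

# Under H0, the share of p-values below any cutoff q should be close to q
null_p = simulated_p_values(effect=0.0, n=20, reps=10_000, rng=rng)
share_below = {q: sum(p <= q for p in null_p) / len(null_p) for q in (0.05, 0.25, 0.5, 0.75)}
print("Under H0, share of p-values below each cutoff:", share_below)

# Under H1, the same effect gives smaller p-values as N grows
median_small_n = median(simulated_p_values(effect=0.5, n=10, reps=10_000, rng=rng))
median_large_n = median(simulated_p_values(effect=0.5, n=40, reps=10_000, rng=rng))
print(f"Under H1 (effect 0.5): median p at N=10 is {median_small_n:.4f}, at N=40 is {median_large_n:.4f}")
```

Repeating the H1 runs with a larger effect at fixed N shows the other half of the statement: the p-value also shrinks as the population effect size grows.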

Skip the sentence “If there is no effect, we should replicate the absence of effect with a probability equal to 1-p”. Not insightful, and you did not discuss the concept ‘replicate’ (and do not need to).

Skip the sentence “The total probability of false positives can also be obtained by aggregating results ( Ioannidis, 2005 ).” Not strongly related to p-values, and introduces unnecessary concepts ‘false positives’ (perhaps later useful) and ‘aggregation’.

Consider deleting; “If there is an effect however, the probability to replicate is a function of the (unknown) population effect size with no good way to know this from a single experiment ( Killeen, 2005 ).”

The following sentence; “ Finally, a (small) p-value is not an indication favouring a hypothesis . A low p-value indicates a misfit of the null hypothesis to the data and cannot be taken as evidence in favour of a specific alternative hypothesis more than any other possible alternatives such as measurement error and selection bias ( Gelman, 2013 ).” is surely not mainstream thinking about NHST; I would surely delete that sentence. In NHST, a p-value is used for testing the H0. Why did you not yet discuss significance level? Yes, before discussing what is not a p-value, I would explain NHST (i.e., what it is and how it is used).

Also the next sentence “The more (a priori) implausible the alternative hypothesis, the greater the chance that a finding is a false alarm ( Krzywinski & Altman, 2013 ; Nuzzo, 2014 ).“ is not fully clear to me. This is a Bayesian statement. In NHST, no likelihoods are attributed to hypotheses; the reasoning is “IF H0 is true, then…”.

Last sentence: “As Nickerson (2000) puts it ‘theory corroboration requires the testing of multiple predictions because the chance of getting statistically significant results for the wrong reasons in any given case is high’.” What is the relation of this sentence to the contents of this section, precisely?

Next section: “For instance, we can estimate that the probability of a given F value to be in the critical interval [+2 +∞] is less than 5%” This depends on the degrees of freedom.
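The dependence on degrees of freedom is easy to demonstrate. The sketch below restricts itself to an F distribution with 2 numerator degrees of freedom, an assumption made only because that case has a simple closed-form tail probability, P(F > x) = (1 + 2x/df2)^(-df2/2). Other numerator degrees of freedom would need a statistics library. The function names are hypothetical.

```python
def f_upper_tail_df1_2(x, df2):
    """P(F > x) for an F distribution with 2 numerator and df2 denominator df."""
    return (1 + 2 * x / df2) ** (-df2 / 2)

def f_critical_df1_2(alpha, df2):
    """Value x with P(F > x) = alpha, obtained by inverting the closed form above."""
    return (df2 / 2) * (alpha ** (-2 / df2) - 1)

for df2 in (2, 10, 100):
    tail = f_upper_tail_df1_2(2.0, df2)
    critical = f_critical_df1_2(0.05, df2)
    print(f"df = (2, {df2:3d}): P(F > 2) = {tail:.3f}, 5% critical value = {critical:.2f}")
```

With 2 numerator degrees of freedom, P(F > 2) stays above 5% for every denominator df shown, so the interval [+2, +∞] is not a 5% critical region in this case.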

“When there is no effect (H0 is true), the erroneous rejection of H0 is known as type I error and is equal to the p-value.” Strange sentence. The Type I error is the probability of erroneously rejecting the H0 (so, when it is true). The p-value is … well, you explained it before; it surely does not equal the Type I error.

Consider adding a figure explaining the distinction between Fisher’s logic and that of Neyman and Pearson.

“When the test statistics falls outside the critical region(s)” What is outside?

“There is a profound difference between accepting the null hypothesis and simply failing to reject it ( Killeen, 2005 )” I agree with you, but perhaps you may add that some statisticians simply define ‘accept H0’ as obtaining a p-value larger than the significance level. Did you already discuss the significance level, and its most commonly used values?

“To accept or reject equally the null hypothesis, Bayesian approaches ( Dienes, 2014 ; Kruschke, 2011 ) or confidence intervals must be used.” Is ‘reject equally’ appropriate English? Also, using CIs, one cannot accept the H0.

Do you start discussing alpha only in the context of CIs?

“CI also indicates the precision of the estimate of effect size, but unless using a percentile bootstrap approach, they require assumptions about distributions which can lead to serious biases in particular regarding the symmetry and width of the intervals ( Wilcox, 2012 ).” Too difficult, using new concepts. Consider deleting.