
Updated Aug 09 2023

8 Data Quality Issues and How to Solve Them

Tim is a content creator at Monte Carlo who writes about data quality, technology, and snacks—occasionally in that order.


Your data will never be perfect. But it could be a whole heck of a lot better. From the hundreds of hours we’ve spent talking to customers, it’s clear that data quality issues are some of the most pernicious challenges facing modern data teams. In fact, according to Gartner, data quality issues cost organizations an average of $12.9 million per year.

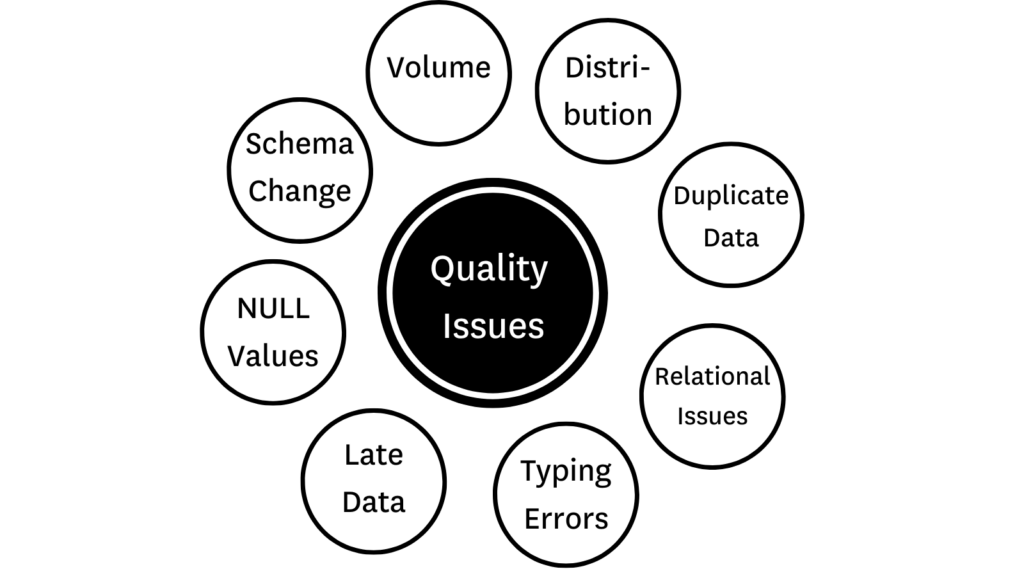

From business intelligence to machine learning, reliable data is the lifeblood of your data products. (That goes double for generative AI.) Bad data in—bad data products out. And that puts data quality at the top of every CTO’s priority list. In this post, we’ll look at 8 of the most common data quality issues affecting data pipelines, how they happen, and what you can do to find and resolve them. We’ll look at:

- NULL values

- Schema changes

- Volume issues

- Distribution errors

- Duplicate data

- Relational issues

- Typing errors

- Late data

- How to prioritize data quality issues

Ready? Let’s dive in!

So, what are data quality issues?

Whether by human error or entropy (or the implacable forces of nature), data quality issues happen. Like all software and data applications, ETL/ELT systems are prone to failure from time to time.

As your data moves through your production pipelines, it meets near-countless opportunities for its quality to be compromised. Data quality issues arise any time data is missing, broken, or otherwise erroneous relative to the normal function of a given pipeline, and they can arise at ingestion, in the warehouse, at transformation, and everywhere in between. Among other factors, data is considered low quality if:

- The data is stale, inaccurate, duplicative, or incomplete.

- The model fails to reflect reality.

- The transformed data is impacted by anomalies at any point during production.

As we alluded to previously, there’s no one single culprit of data quality issues. Data quality can be impacted by everything from software changes at the source all the way down to how an SDR inputs a country code. And just as important as discovering what the issue might be is discovering where it started. (Check out this article on data lineage to understand how relational maps can help you root cause and remediate data issues faster.)

So, with the basics out of the way, let’s look at some of those data quality issues in a bit more detail.

NULL Values

One of the most common data quality issues arises from missing data, also known as NULL values. (Oh, those pesky NULL values.)

NULL values occur when a field is left blank either intentionally or through a pipeline error owing to something like an API outage.

Let’s say you were querying the impact of a marketing program on sales lift by region, but the ‘region’ field was left blank on multiple records. Any rows where ‘region’ was missing would necessarily be excluded from your report, leading to inefficient future spend on regional marketing programs. Not cool.

The most direct way to catch NULL values is with a NULL value test, like dbt’s not_null test, which validates whether values within a specified column of a model are missing after the model runs.

This generic test takes a model and column name, and fails whenever values from that column are NULL after the model runs. Source: dbt documentation.
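To show what a check like this does, here is a minimal sketch in Python using the standard-library sqlite3 module. It is not dbt’s implementation, only the same idea: count the NULLs in one column after a model runs and fail if there are any. The `sales` table and the `not_null_failures` helper are hypothetical, and identifiers are interpolated into the SQL, so only pass trusted table and column names.

```python
import sqlite3

def not_null_failures(conn: sqlite3.Connection, table: str, column: str) -> int:
    """Count rows in `table` where `column` is NULL; a passing check returns 0.

    Identifiers are interpolated into the SQL, so only pass trusted table/column names.
    """
    query = f'SELECT COUNT(*) FROM "{table}" WHERE "{column}" IS NULL'
    (count,) = conn.execute(query).fetchone()
    return count

# Hypothetical sales table in which one record is missing its region.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id INTEGER, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "EMEA", 120.0), (2, None, 80.0), (3, "APAC", 45.5)],
)

failures = not_null_failures(conn, "sales", "region")
status = "PASS" if failures == 0 else f"FAIL: {failures} row(s) with NULL"
print(f"not_null(sales.region): {status}")
# → not_null(sales.region): FAIL: 1 row(s) with NULL
```

In dbt itself, you declare the test on a column in a YAML properties file instead of writing the query by hand.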

Schema Changes

Probably the second most common data quality issue after NULL values is a broken pipeline caused by an upstream schema change.

Look, schema changes happen all the time. New features are developed. Software is updated. Data structures get re-evaluated. Sometimes a schema change is no big deal. The data team will know about the change ahead of time, and they’ll be ready to monitor it and adjust course if necessary.

However, sometimes the data team won’t know about it. And sometimes those schema changes are actually a very big deal.

Imagine your finance team is working on a revenue report for your board of directors. A couple of days before they pull their report, one of your engineers pushes a code change to production that deletes a critical revenue column from a key table. That change might cause finance to under-report on revenue, impacting budgets, hiring, and everything in between.

It’s times like these that a schema change can wreak all kinds of havoc on your pipelines.

Detecting data quality issues caused by schema changes isn’t quite as cut-and-dried as a NULL value test. And resolution and prevention are another story entirely. The right mix of data quality checks, lineage mapping, and internal process is the key to detecting, resolving, and preventing the downstream impact of bad schema changes.
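One piece of that mix is easy to sketch: keep a snapshot of the columns a table is expected to have, then diff it against the live schema on every run. The example below is a minimal illustration in Python using SQLite’s `PRAGMA table_info`; the `revenue` table, its columns, and the helper names are hypothetical, and a real warehouse would read from its own catalog (for example, an information_schema view) instead.

```python
import sqlite3

def current_schema(conn: sqlite3.Connection, table: str) -> dict[str, str]:
    """Return {column_name: declared_type} for `table` via SQLite's PRAGMA table_info."""
    rows = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
    # Each row is (cid, name, type, notnull, dflt_value, pk).
    return {row[1]: row[2] for row in rows}

def diff_schema(expected: dict[str, str], actual: dict[str, str]) -> dict[str, list[str]]:
    """Compare a stored schema snapshot with the live schema."""
    return {
        "removed": sorted(set(expected) - set(actual)),
        "added": sorted(set(actual) - set(expected)),
        "type_changed": sorted(
            col for col in set(expected) & set(actual) if expected[col] != actual[col]
        ),
    }

# Snapshot taken when the (hypothetical) revenue table was last known to be good.
expected = {"account_id": "INTEGER", "revenue": "REAL", "booked_at": "TEXT"}

conn = sqlite3.connect(":memory:")
# Simulate an upstream change that dropped `revenue` and added `notes`.
conn.execute("CREATE TABLE revenue (account_id INTEGER, booked_at TEXT, notes TEXT)")

changes = diff_schema(expected, current_schema(conn, "revenue"))
print(changes)
# → {'removed': ['revenue'], 'added': ['notes'], 'type_changed': []}
```

A removed column is usually the change worth paging someone about; added columns are often harmless but still worth logging.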

Check out this piece on overcoming schema changes to learn more.

Volume Issues

How much data is entering your pipelines? Is it more than expected? Less? When these numbers fall outside your expected range, it’s considered a volume issue. And incorrect data volumes can do all kinds of funky things to your data products. Let’s look at some of the ways your volume can be incorrect.

Too little data

When data goes missing from your pipelines, it can quickly skew—or even break—a data model. Let’s say your data platform processes data from temperature sensors, and one of those sensors fails. What happens? You may get a bunch of crazy temperature values—or you may get nothing at all.

Too much data

Too much data might not sound like a problem (it is called big data, after all), but when rows populate out of proportion, they can slow model performance and increase compute costs. Monitoring data volume increases can help reduce costs and maintain the integrity of models by ensuring they use only clean, high-quality data that will drive impact for downstream users.

Volume tests

It’s important to identify data volume changes as quickly as possible. Volume tests flag when a table has received too much or too little data, which helps uncover compromised models and validate the accuracy of your data.

In basic terms, volume tests validate the number of rows contained in critical tables. To get the most out of your volume tests, it’s important to tie your tests to a volume SLA (service-level agreement).

SLIs (service-level indicators) are the metrics we use to measure performance against a given SLA. Volume tests can be used to measure SLIs by monitoring either table size or table growth relative to previous measurements.

For example, if you were measuring absolute table size, you could trigger an event when:

- The current total size (bytes or rows) falls below a specific threshold

- The current total size remains the same for a specific amount of time
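The two absolute-size rules above can be sketched as a small Python function over a history of row counts. The thresholds, the scheduled-check cadence, and the `volume_alerts` name are illustrative assumptions; in practice, the counts would come from something like `SELECT COUNT(*)` on each run, and the thresholds from your volume SLA.

```python
def volume_alerts(row_counts: list[int], min_rows: int, max_unchanged: int) -> list[str]:
    """Check a table's row-count history against two absolute-size rules.

    row_counts: measurements ordered oldest to newest (for example, one per scheduled check).
    min_rows: alert when the latest count falls below this threshold.
    max_unchanged: alert when the latest count has repeated this many consecutive checks.
    """
    alerts = []
    latest = row_counts[-1]
    if latest < min_rows:
        alerts.append(f"row count {latest} is below threshold {min_rows}")

    # Count how many trailing measurements equal the latest one.
    unchanged = 0
    for count in reversed(row_counts):
        if count != latest:
            break
        unchanged += 1
    if unchanged >= max_unchanged:
        alerts.append(f"row count unchanged for {unchanged} consecutive checks")
    return alerts

history = [10_000, 10_250, 10_400, 10_400, 10_400, 10_400]
print(volume_alerts(history, min_rows=5_000, max_unchanged=4))
# → ['row count unchanged for 4 consecutive checks']
```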

Distribution Errors

The distribution of our data tells us whether our data reflects reality. So, whenever data skews outside an acceptable range, it’s considered to be a distribution error.

For example, if you’re a credit company that collects and processes credit scores between 0 and 850 and you get a score of 2000, you know you have a distribution error. And a pretty serious one at that.

A data quality issue like this could simply be a sign of a few anomalous data points or it could indicate a more serious underlying issue at the data source.

Here are a couple of distribution errors to look out for:

Inaccurate data

When data is incorrectly represented, inaccurate data is injected into production pipelines. Inaccurate data could be as simple as an SDR adding an extra zero to a revenue number or a doctor incorrectly typing a patient’s weight.

Industries with strict regulatory needs, like healthcare and finance, should pay particular attention to these data quality issues.

Data variety

Just because a data point falls outside a normal distribution doesn’t necessarily mean it’s anomalous. It could be an early indicator of a new trend that hasn’t fully manifested in the data. However, it’s more likely to be an anomalous data point that will spell bigger issues down the road. Either way, data variety is a data quality issue worth keeping an eye on.

Distribution tests

Writing a distribution test in SQL that defines minimums and maximums for a given column will help you determine whether that column’s values fall within the range you expect.

dbt’s accepted_values test is an out-of-the-box test that lets you define a list of acceptable values for a given column.

Great Expectations’ “unit tests” (which it calls expectations) can also be adapted to monitor minimums and maximums, such as a range check on a zip_code column.
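Here is a minimal sketch of the same min/max idea in plain Python, applied to the credit-score example above (valid scores between 0 and 850). The `range_violations` helper is hypothetical and is not dbt or Great Expectations code; it skips NULLs on the assumption that a separate not_null check handles them.

```python
from typing import Iterable, Optional

def range_violations(
    values: Iterable[Optional[float]], minimum: float, maximum: float
) -> list[tuple[int, float]]:
    """Return (row_index, value) for every non-NULL value outside [minimum, maximum].

    NULLs are skipped on purpose; they are the job of a separate not_null check.
    """
    return [
        (i, v)
        for i, v in enumerate(values)
        if v is not None and not (minimum <= v <= maximum)
    ]

# Credit scores from the example above are expected to fall between 0 and 850.
scores = [712, 655, 2000, None, 801, -15]
print(range_violations(scores, 0, 850))
# → [(2, 2000), (5, -15)]
```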

Duplicate Data

Another common data quality issue haunting your data pipelines is the specter of duplicate data. Duplicate data is any record that has been copied into another record in your database.

From spamming leads to needlessly driving up database costs, duplicate data has the power to damage everything from your reputation to your bottom line.

Duplication errors occur most often during data transfers from ingestion to storage, and can occur for a variety of reasons—from loose data aggregation processes to human typing errors.

The best way to monitor and discover duplicate data is with a uniqueness test. Uniqueness tests enable data teams to programmatically identify duplicate records during the normalization process, before the data enters your production pipelines. If you’re using dbt, you can take advantage of its unique test to identify duplicates, but uniqueness tests are also available out of the box in a variety of other tools, depending on what you’ve integrated.
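At its core, a uniqueness test is a `GROUP BY ... HAVING COUNT(*) > 1` query. The sketch below runs one with Python’s built-in sqlite3 against a hypothetical `leads` table. Like dbt’s unique test, it skips NULL values, but the `duplicate_keys` helper itself is only an illustration.

```python
import sqlite3

def duplicate_keys(conn: sqlite3.Connection, table: str, column: str) -> list[tuple]:
    """Return (value, occurrences) for each non-NULL value of `column` seen more than once."""
    query = (
        f'SELECT "{column}", COUNT(*) FROM "{table}" '
        f'WHERE "{column}" IS NOT NULL '
        f'GROUP BY "{column}" HAVING COUNT(*) > 1 ORDER BY "{column}"'
    )
    return conn.execute(query).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE leads (lead_id INTEGER, email TEXT)")
conn.executemany(
    "INSERT INTO leads VALUES (?, ?)",
    [(1, "ana@example.com"), (2, "raj@example.com"), (2, "raj@example.com"), (3, "li@example.com")],
)
print(duplicate_keys(conn, "leads", "lead_id"))
# → [(2, 2)]
```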

Relational Issues

No, not that kind of relational issues. Relational, or referential, integrity refers to the relationships between tables in a database. A parent-child relationship, defined by a primary key in the parent table and a foreign key in the child table, connects the root data that’s joined across tables to create a given model. So, what happens if that parent data gets changed or deleted? Well, now you’ve got yourself a relational data quality issue.

Orphaned data models can’t produce functional data products. In the same way that removing the foundations of a building would necessarily cause that building to collapse, your data models will fall apart without access to the correct data from their primary keys.

Maybe your marketing team is pulling a list of customer IDs together for a new nurture campaign. If those customer IDs don’t map back to the names and email addresses in your database, that nurture campaign is going to be over pretty quickly.

Referential integrity tests (called the relationships test in dbt) are a great way to monitor for altered or deleted parent data. Referential integrity ensures that every key in a child table has a corresponding row in its parent table, and that no changes can be made to a parent or primary key without sharing those same changes across dependent tables.
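A referential integrity check boils down to finding child rows whose foreign key has no match in the parent table, which a `LEFT JOIN` expresses neatly. This sketch uses Python’s sqlite3 with hypothetical `customers` and `campaign_members` tables mirroring the nurture-campaign example; the `orphaned_rows` helper is illustrative, not dbt’s relationships test.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, email TEXT);
    CREATE TABLE campaign_members (member_id INTEGER, customer_id INTEGER);
    INSERT INTO customers VALUES (1, 'ana@example.com'), (2, 'raj@example.com');
    -- customer 3 was deleted from the parent table, leaving member 12 orphaned
    INSERT INTO campaign_members VALUES (10, 1), (11, 2), (12, 3);
""")

def orphaned_rows(conn, child, child_key, parent, parent_key):
    """Return child rows whose foreign key has no matching parent row (NULL keys are ignored)."""
    query = f'''
        SELECT c.* FROM "{child}" AS c
        LEFT JOIN "{parent}" AS p ON c."{child_key}" = p."{parent_key}"
        WHERE c."{child_key}" IS NOT NULL AND p."{parent_key}" IS NULL
    '''
    return conn.execute(query).fetchall()

print(orphaned_rows(conn, "campaign_members", "customer_id", "customers", "customer_id"))
# → [(12, 3)]
```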

Typing Errors

The most human of data quality issues: typing errors. In a distributed professional landscape, the opportunity for little typing errors to make their way into your pipelines is virtually unlimited.

Maybe a prospect forgot a character in their email address or an analyst changed a row without realizing it. Maybe someone dropped a cheeseburger on their keyboard and added a few extra digits to a social security number. Who knows! These little inconsistencies may be fairly common, but that makes them all the more important to monitor. It’s important that those records are reconciled early and often to ensure you’re delivering the best possible data to your stakeholders.

Regular expressions (regex) can validate each string in a column against an expected pattern, covering everything from UUIDs, phone numbers, emails, numbers, escape characters, and dates to blood types.
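As a sketch of pattern validation, the Python snippet below uses the standard `re` module with a few illustrative patterns. The patterns are simplified assumptions (real email and phone validation is messier), and `invalid_values` is a hypothetical helper that returns every value that fails to fully match.

```python
import re

# Simplified, illustrative patterns; production rules usually need more care.
PATTERNS = {
    "uuid": re.compile(r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"),
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "us_phone": re.compile(r"\(?\d{3}\)?[-. ]?\d{3}[-. ]?\d{4}"),
    "blood_type": re.compile(r"(A|B|AB|O)[+-]"),
}

def invalid_values(values: list, pattern_name: str) -> list:
    """Return the values that are missing or do not fully match the named pattern."""
    pattern = PATTERNS[pattern_name]
    return [v for v in values if v is None or pattern.fullmatch(v) is None]

emails = ["ana@example.com", "raj@examplecom", "li@example.org"]
print(invalid_values(emails, "email"))
# → ['raj@examplecom']
```

Using `fullmatch` rather than `search` matters here: it rejects values that merely contain a valid-looking substring.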

Late Data

Whether you’re working with batch or streaming data, late data is a problem.

Fresh data paints an accurate real-time picture of its data source. But, when that data stops refreshing on time, it ceases to be useful for downstream users.

And in the great wide world of data quality issues, you can always count on downstream users to notice when their data is late.

In the same way you would write a SQL rule to verify volume SLIs, you can create a SQL rule to verify the freshness of your data based on a normal cadence. In this case, the SLI would be something like “hours since dataset refreshed.”

Monitoring how frequently your data is updated against predefined latency rules is critical for any production pipeline. In addition to writing SQL rules by hand, you can use freshness checks built into some tools, like dbt’s source freshness command.
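The “hours since dataset refreshed” SLI is simple arithmetic once you know when the table last loaded. In this Python sketch, the 24-hour threshold and the fixed timestamps are assumptions for illustration; in a real pipeline, `last_loaded_at` would typically come from a query such as `MAX(loaded_at)` on the table or from warehouse metadata.

```python
from datetime import datetime, timedelta, timezone

def hours_since_refresh(last_loaded_at: datetime, now: datetime) -> float:
    """The freshness SLI: hours elapsed since the dataset last refreshed."""
    return (now - last_loaded_at).total_seconds() / 3600

def is_stale(last_loaded_at: datetime, now: datetime, max_hours: float) -> bool:
    """True when the SLI exceeds the agreed latency threshold."""
    return hours_since_refresh(last_loaded_at, now) > max_hours

# Hypothetical daily table that is expected to refresh at least every 24 hours.
now = datetime(2023, 8, 9, 12, 0, tzinfo=timezone.utc)
last_loaded = now - timedelta(hours=30)
print(hours_since_refresh(last_loaded, now), is_stale(last_loaded, now, max_hours=24))
# → 30.0 True
```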

How to Prioritize Data Quality Issues

When it rains data quality issues, it pours. And when the day inevitably comes that you have more data quality issues than time to fire-fight, prioritization becomes the name of the game. So, how do you prioritize data quality issues? As in all things, it depends. Prioritizing data quality issues requires a deep understanding of the value of your data pipelines and how your data is actually being used across your platform. Some of the factors that can inform your triage practices include:

- Which tables were impacted

- How many downstream users were impacted

- How critical the pipeline or table in question is to a given stakeholder

- When the impacted tables will be needed next

One of the elements required to prioritize data quality issues well is an understanding of the lineage of your data. Knowing where the data came from and where it’s going is critical to understanding not just the potential damage of a data quality issue, but how to root-cause and solve it.
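Lineage is what turns “a table broke” into “these reports are affected.” As a minimal sketch, the Python below walks a hypothetical lineage graph breadth-first to list every downstream asset of a failing table. The table names and the dictionary representation are assumptions, not how any particular tool stores lineage.

```python
from collections import deque

# Hypothetical lineage graph: each table maps to the assets built directly from it.
LINEAGE = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customer_ltv"],
    "mart.revenue": ["bi.board_report"],
    "mart.customer_ltv": [],
    "bi.board_report": [],
}

def downstream_of(table: str, lineage: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk of the lineage graph, returning every asset affected by `table`."""
    seen = set()
    queue = deque([table])
    affected = []
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:  # guard against visiting shared descendants twice
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected

print(downstream_of("stg.orders", LINEAGE))
# → ['mart.revenue', 'mart.customer_ltv', 'bi.board_report']
```

The length of that list, and who owns each asset on it, feeds directly into the triage factors listed above.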

Which brings us neatly to our final point: the value of data observability for detecting, resolving, and preventing data quality issues at scale.

How data observability powers data quality at scale

Now that you’ve got a handle on some of the most common data quality issues, you might be saying to yourself, “that’s a lot of issues to monitor.” And you’d be right. Data reliability is a journey, and data testing is a much shorter leg of that journey than you might think. Monitoring data quality by hand is easy enough when you’re only writing tests for an organization of 30 with a handful of tables. But imagine how many typing errors, volume issues, and bad schema changes you’ll have when you’re a team of 300 with several hundred tables.

A whole lot more.

Remember, detecting data quality issues is only half the battle. Once you find them, you still need to root-cause and resolve them. In fact, Monte Carlo’s own research found that data engineers spend as much as 40% of their workday firefighting bad data.

As your data grows, your manual testing program will struggle to keep up. That’s where data observability comes in. Unlike manual query tests, data observability gives data teams comprehensive end-to-end coverage across their entire pipeline—from ingestion right down to their BI tooling.

What’s more, Monte Carlo offers automated ML quality checks for some of the most common data quality issues, like freshness, volume, and schema changes, right out of the box. Delivering high-quality data is about more than making sure tables are updated or that your distributions fall into an acceptable range. It’s about building data trust by delivering the most accurate and accessible data possible, whenever and however your stakeholders need it.

Interested in learning more about how Monte Carlo provides end-to-end coverage for the most common data quality issues? Let’s talk!


10 Common Data Quality Issues (And How to Solve Them)

Data quality is essential for any data-driven organization. Poor data quality results in unreliable analysis. High data quality enables actionable insights for both short-term operations and long-term planning. Identifying and correcting data quality issues can mean the difference between a successful business and a failing one.

What data quality issues is your organization likely to encounter? Read on to discover the ten most common data quality problems—and how to solve them.

Quick Takeaways

- Data quality issues can come from cross-system inconsistencies and human error

- The most common data quality issues include inaccurate data, incomplete data, duplicate data, and aging data

- Robust data quality monitoring can solve many data quality issues

1. Inaccurate Data

Gartner says that poor data quality, including inaccurate data, costs organizations $12.9 million a year, on average. Inaccurate data is data that is wrong: customer addresses with the wrong ZIP codes, misspelled customer names, or entries marred by simple human errors. Whatever the cause, whatever the issue, inaccurate data is unusable data. If you try to use it, it can throw off your entire analysis.

How can you solve the problem of inaccurate data? The first place to start is by automating data entry. The more you can automate, the fewer human errors you’ll find.

Next, you need a robust data quality monitoring solution, such as FirstEigen’s DataBuck, to identify and isolate inaccurate data. You can then try to fix the flawed fields by comparing the inaccurate data with a known accurate dataset. If the data is still inaccurate, you’ll have to delete it to keep it from contaminating your data analysis.

2. Incomplete Data

Another common data quality issue is incomplete data. These are records with missing information in key fields, such as addresses with no ZIP codes, phone numbers without area codes, and demographic information without age or gender entered.

Incomplete data can result in flawed analysis. It can also make daily operations more problematic, as staff scurries to determine what data is missing and what it was supposed to be.

You can minimize this issue on the data entry front by requiring key fields to be completed before submission. Use systems that automatically flag and reject incomplete records when importing data from external sources. You can then try to complete any missing fields by comparing your data with another similar (and hopefully more complete) data source.
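Flagging and rejecting incomplete records on import can be as simple as checking a list of required fields. This Python sketch assumes a hypothetical set of required customer fields and treats empty or whitespace-only strings as missing; rejected records keep a note of what’s missing so they can be completed later from another source.

```python
REQUIRED_FIELDS = ("name", "address", "zip_code", "phone")  # hypothetical required fields

def split_complete(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Partition imported records into (accepted, rejected) based on required fields.

    A field counts as missing if it is absent, None, or only whitespace.
    Each rejected entry carries the list of missing fields.
    """
    accepted, rejected = [], []
    for record in records:
        missing = [
            field for field in REQUIRED_FIELDS
            if record.get(field) is None or str(record.get(field)).strip() == ""
        ]
        if missing:
            rejected.append((record, missing))
        else:
            accepted.append(record)
    return accepted, rejected

records = [
    {"name": "Ana Ruiz", "address": "12 Elm St", "zip_code": "30301", "phone": "404-555-0101"},
    {"name": "Raj Patel", "address": "9 Oak Ave", "zip_code": "", "phone": "555-0199"},
]
accepted, rejected = split_complete(records)
print(len(accepted), [missing for _, missing in rejected])
# → 1 [['zip_code']]
```

Note that this only checks presence; a phone number without an area code would still need a format check like the regex patterns used for typing errors.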

3. Duplicate Data

When importing data from multiple sources, it’s not uncommon to end up with duplicate data. For example, if you’re importing customer lists from two retailers, you may find several people who were customers of both. You only want to count each customer once, which makes duplicative records a major issue.

Identifying duplicate records involves a process called deduplication, which uses various techniques to detect records with similar data. You can then delete all but one of the duplicate records, ideally keeping the one that better matches your internal schema. Even better, you may be able to merge the duplicate records, which can result in richer analysis, since the two records might contain slightly different details that complement each other.
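Deduplication techniques range from exact matching to fuzzy matching. The sketch below shows the simplest version plus the merge step described above: it assumes a normalized (trimmed, lowercased) email address identifies a customer and lets non-empty fields from one record fill gaps in the other. The field names and the `dedupe_customers` helper are hypothetical.

```python
def dedupe_customers(records: list[dict]) -> list[dict]:
    """Merge records that share a normalized email address.

    Assumption for this sketch: the trimmed, lowercased email identifies a customer.
    When two records collide, non-empty fields from the later record fill empty fields
    in the earlier one, so complementary details are kept rather than discarded.
    """
    merged: dict[str, dict] = {}
    for record in records:
        key = record["email"].strip().lower()
        if key not in merged:
            merged[key] = dict(record, email=key)
            continue
        existing = merged[key]
        for field, value in record.items():
            if field != "email" and value and not existing.get(field):
                existing[field] = value
    return list(merged.values())

source_a = [{"email": "Ana@Example.com", "name": "Ana Ruiz", "phone": ""}]
source_b = [{"email": "ana@example.com ", "name": "Ana Ruiz", "phone": "404-555-0101"}]
print(dedupe_customers(source_a + source_b))
# → [{'email': 'ana@example.com', 'name': 'Ana Ruiz', 'phone': '404-555-0101'}]
```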

4. Inconsistent Formatting

Many kinds of data can be formatted in a multitude of ways. Consider, for example, the many ways you can express a date: June 5, 2023; 6/5/2023; 6-5-23; or, in a less-structured format, the fifth of June, 2023. Different sources often use different formatting, so these inconsistencies can result in major data quality issues.

Working with different forms of measurement can cause similar issues. If one source uses metric measurements and another feet and inches, you must settle on an internal standard and ensure that all imported data correctly converts. Using the wrong measurements can be catastrophic—as when NASA lost a $125 million Mars Climate Orbiter because the Jet Propulsion Laboratory used metric measurements and contractor Lockheed Martin Astronautics worked with the English system of feet and pounds.
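One way to enforce a single internal standard is to require every measurement to carry its unit and convert it on ingestion, failing loudly when a unit is unknown. This Python sketch assumes SI as the internal standard. The conversion factors (1 ft = 0.3048 m, 1 in = 0.0254 m, 1 lbf·s = 4.4482216152605 N·s) are exact by definition, while the lookup-table design and the `to_si` name are illustrative.

```python
# Internal standard for this sketch: SI units. Each factor converts one unit into SI.
TO_SI = {
    ("length", "m"): 1.0,
    ("length", "ft"): 0.3048,                 # exact by definition
    ("length", "in"): 0.0254,                 # exact by definition
    ("impulse", "N*s"): 1.0,
    ("impulse", "lbf*s"): 4.4482216152605,    # pound-force seconds to newton-seconds
}

def to_si(value: float, quantity: str, unit: str) -> float:
    """Convert a unit-tagged measurement to SI, refusing units with no known conversion."""
    try:
        factor = TO_SI[(quantity, unit)]
    except KeyError:
        raise ValueError(f"no conversion defined for {unit!r} ({quantity})") from None
    return value * factor

print(to_si(10, "length", "ft"))        # feet to meters
print(to_si(2.5, "impulse", "lbf*s"))   # pound-force seconds to newton-seconds
```

Raising on an unknown unit is the deliberate design choice here: a silent pass-through is exactly how mixed measurement systems slip into a pipeline.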

Solving this issue requires a data quality monitoring solution that profiles individual datasets and identifies these formatting issues. Once identified, it should be a simple matter of converting data from one format to another.
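For dates, converting from one format to another can be sketched with Python’s `datetime.strptime`, trying each known source format in turn and emitting ISO 8601. The format list is an assumption built from the examples above. Note that `6/5/2023` is ambiguous; this sketch assumes US month-first order, which you would need to confirm for each source.

```python
from datetime import datetime

# Formats taken from the examples above. Assumption: slash and dash dates are month-first.
KNOWN_FORMATS = ("%B %d, %Y", "%m/%d/%Y", "%m-%d-%y", "%Y-%m-%d")

def to_iso_date(raw: str) -> str:
    """Parse a date string in any known format and return it as ISO 8601 (YYYY-MM-DD)."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # not this format; try the next one
    raise ValueError(f"unrecognized date format: {raw!r}")

for raw in ["June 5, 2023", "6/5/2023", "6-5-23"]:
    print(raw, "->", to_iso_date(raw))
# Each line ends in 2023-06-05.
```

Free-text values like “the fifth of June, 2023” raise a ValueError here, which is intentional: they need a human or a more capable parser rather than a silent guess.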

5. Cross-System Inconsistencies

Inconsistent formatting is often the result of combining data from two different systems. It’s common for two otherwise-similar systems to format data differently. These cross-system inconsistencies can cause major data quality issues if not identified and rectified.

You need to decide on one standard data format when working with data from multiple sources. All incoming data must then convert to that format, which can require using artificial intelligence (AI) and machine learning (ML) technologies that can automate the matching and conversion process.

6. Unstructured Data

While much of the third-party data you ingest will not conform to your standardized formatting, that might not be the worst problem you encounter. Some of the data you ingest may not be formatted at all.


This unstructured data can contain valuable insights but doesn’t easily fit into most established systems. To convert unstructured data into structured records, use a data integration tool to identify and extract data from an unstructured dataset and convert it into a standardized format for use with your internal systems.

7. Dark Data

Hidden data, sometimes known as dark data, is data that an organization collects and stores but does not actively use. IBM estimates that 80% of all data today is dark data. In many cases, it’s a wasted resource that many organizations don’t even know exists, even though it can account for more than half of an average organization’s data storage costs.

Dark data should either be used or deleted. Doing either requires identifying this hidden data, evaluating its usability and usefulness, and making it visible to key stakeholders in your organization.

8. Orphaned Data

Orphaned data isn’t hidden. It’s simply not readily usable. In most instances, data is orphaned when it’s not fully compatible with an existing system or not easily converted into a usable format. For example, a customer record that exists in one database but not in another could be classified as an orphan.

Data quality management software should be able to identify orphaned data. Thus identified, the cause of the inconsistency can be determined and, in many instances, rectified for full utilization of the orphaned data.

9. Stale Data

Data does not always age well. Old data becomes stale data that is more likely to be inaccurate. Consider customer addresses, for example. People today are increasingly mobile, meaning that addresses collected more than a few years ago are likely to reflect where customers used to live, not where they currently reside.

Older data needs constant culling from your system to mitigate this issue. It’s often easier and cheaper to delete data past a certain expiration date than to deal with the data quality issues of using that stale data.
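Culling records past an expiration date can be sketched as a cutoff comparison. In this Python example, the `last_verified` field, the three-year window, and the fixed “today” date are all assumptions for illustration; choose the timestamp and retention period that fit your data and any regulatory requirements.

```python
from datetime import date, timedelta

def cull_stale(records: list[dict], today: date, max_age_days: int) -> tuple[list[dict], list[dict]]:
    """Split records into (kept, expired) by the date each was last verified.

    `last_verified` is a hypothetical field; use whatever timestamp your system trusts.
    """
    cutoff = today - timedelta(days=max_age_days)
    kept = [r for r in records if r["last_verified"] >= cutoff]
    expired = [r for r in records if r["last_verified"] < cutoff]
    return kept, expired

records = [
    {"customer_id": 1, "last_verified": date(2023, 5, 1)},
    {"customer_id": 2, "last_verified": date(2019, 2, 14)},
]
kept, expired = cull_stale(records, today=date(2023, 8, 9), max_age_days=3 * 365)
print([r["customer_id"] for r in kept], [r["customer_id"] for r in expired])
# → [1] [2]
```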

10. Irrelevant Data

Many companies capture reams of data about each customer and every transaction. Not all of this data is immediately useful. Some of this data is ultimately irrelevant to the company’s business.

Capturing and storing irrelevant data increases an organization’s security and privacy risks. It’s better to keep only the data of immediate use to your company and either delete data you have little or no use for or avoid collecting it in the first place.

Use DataBuck to Solve Your Data Quality Issues

When you want to solve your organization’s data quality issues, turn to FirstEigen. Our DataBuck solution uses AI and ML technologies to automate more than 70% of the data monitoring process. DataBuck identifies and fixes inaccurate, incomplete, duplicate, and inconsistent data, which improves your data quality and usability.

Contact FirstEigen today to learn more about solving data quality issues.


Common data quality issues & how to solve them (2024).

Key takeaways:

- Common data quality issues include inconsistency, inaccuracy, incompleteness, and duplication, which can severely impact decision-making processes.

- Solving these issues involves proactive measures such as implementing data validation rules and using data cleansing tools.

- Establishing a comprehensive data governance strategy ensures consistency, accuracy, and completeness in data over time.

Business intelligence (BI) software is a valuable tool that helps BI users make data-driven decisions. Because the quality of the data determines the quality of the BI results those decisions rely on, the data loaded into BI software must be accurate.

This article includes some best-practice recommendations for ensuring data quality.


What are the most common data quality issues?

Duplicated data (which can be counted more than once) and incomplete data are common data quality issues. Inconsistent formats or patterns, and data missing relationship dependencies, can significantly impact the BI results used to make informed decisions. Inaccurate data can also lead to bad decisions. Poor data quality can financially suffocate a business through lost profits, missed opportunities, compliance violations, and misinformed decisions.

What is data validation?

The two types of data that need to be validated are structured and unstructured data. Structured data is typically stored in a relational database management system (RDBMS), where a set of rules checks for format, consistency, uniqueness, and the presence of data values. Unstructured data can be text, a Word document, clickstream records from a user browsing a business website, sensor data, or system logs from a network device or an application, all of which complicate the validation process.

Structured data is easier to validate, using built-in RDBMS features or a basic artificial intelligence (AI) tool that can scan comment fields. Unstructured data, on the other hand, requires more sophisticated AI techniques such as natural language processing (NLP), which can interpret the meaning of a sentence based on its context.

How to do data validation testing

Initial data validation can be configured into a data field when a programmer creates the fields in a business application. Whether you are developing an application or an Excel spreadsheet with data fields, validation requirements can be built into each field to ensure only specific data values are saved. For example, a field that only accepts numbers will not save a value that contains letters or special characters.

If an area code or ZIP code field contains only digits but does not meet the length requirement, an error message will appear stating that the value must have three digits (area code) or five digits (ZIP code) before it can be saved.

There are several ways to check data values, and here are the most common data validation checks:

- Data type check: Ensures a field accepts only numbers or only letters as valid entries

- Code check: Restricts input to a finite list of options, such as a drop-down list of mobile phone brands

- Range check: Ensures a value falls within a defined range, such as 1 to 10 for a customer service evaluation

- Format check: Ensures the value is entered in a predefined format, like a social security number or a specific number of characters required in a field

- Uniqueness check: Ensures no two records share the same value in a field, such as a social security number

- Consistency check: Confirms that related values are logically consistent, such as step one occurring before step two
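To show how these checks fit together, here is a minimal Python sketch that validates a single record. The field names, the allowed phone brands, and the SSN pattern are hypothetical; in an application, most of these rules would usually live in the form definition or in database constraints rather than in a standalone function.

```python
import re
from datetime import date

ALLOWED_BRANDS = {"Apple", "Samsung", "Google", "Motorola"}  # hypothetical drop-down options
SSN_FORMAT = re.compile(r"[0-9]{3}-[0-9]{2}-[0-9]{4}")

def validate(record: dict, seen_ssns: set) -> list[str]:
    """Apply the six checks listed above to one record and return any errors.

    The caller is responsible for adding accepted SSNs to `seen_ssns`.
    """
    errors = []

    rating = record.get("service_rating")
    if not isinstance(rating, int) or isinstance(rating, bool):
        errors.append("service_rating must be a whole number")        # data type check
    elif not 1 <= rating <= 10:
        errors.append("service_rating must be between 1 and 10")      # range check

    if record.get("phone_brand") not in ALLOWED_BRANDS:
        errors.append("phone_brand is not one of the allowed options")  # code check

    ssn = record.get("ssn") or ""
    if not SSN_FORMAT.fullmatch(ssn):
        errors.append("ssn must use the format 123-45-6789")           # format check
    elif ssn in seen_ssns:
        errors.append("ssn already exists in another record")          # uniqueness check

    if record["step_two_date"] < record["step_one_date"]:
        errors.append("step two cannot happen before step one")        # consistency check

    return errors

record = {
    "service_rating": 11,
    "phone_brand": "Nokla",
    "ssn": "123-45-6789",
    "step_one_date": date(2024, 3, 2),
    "step_two_date": date(2024, 3, 1),
}
for error in validate(record, seen_ssns={"123-45-6789"}):
    print(error)
```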

Natural language processing is used to examine unstructured data using grammatical and semantic tools to determine the meaning of a sentence. In addition, advanced textual analysis tools can access social media sites and emails to help discover any popular trends a business can leverage. With unstructured data being 80% of businesses’ data today, successful companies must exploit this data source to help identify customers’ purchasing preferences and patterns.

What are the benefits of data validation testing?

Validating data from different sources up front reduces wasted time, manpower, and money, and ensures the data is compatible with established standards. After the different data sources are validated and saved in the required field format, they are easy to integrate into a standard database in a data warehouse. Clean data increases business revenue, promotes cost-effectiveness, and supports better decision-making that helps businesses exceed their marketing goals.

What are some challenges in data validation?

Unstructured data lacks a predefined data model, so it’s more difficult to process and analyze. Although both types have challenges, validating unstructured data is far more difficult than validating structured data. Unstructured data can be very unreliable, especially when it comes from people who exaggerate their information, so filtering out distorted information can be time-consuming. Sorting, managing, and organizing unstructured data in its native format is also very difficult, although a schema-on-read approach lets it be stored in a database without first fitting it to a fixed schema.

Extracting data from a database whose contents were not validated before being saved can be extremely time-consuming if the work is manual. Even validated data still needs to be checked again after it’s extracted. Any time large databases are extracted from multiple sources, validating them can be time-consuming, and the process is compounded when unstructured data is involved. Still, AI tools can make the validation process easier.


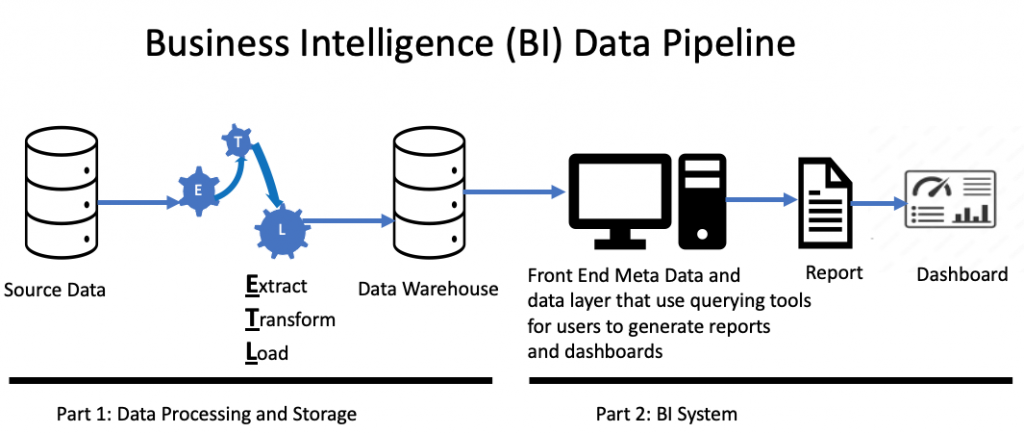

Testing BI data using the Extract, Transform, and Load (ETL) process

The ETL process ensures that structured and unstructured data from multiple systems is verified as valid before it's moved into a database or data warehouse used by BI analytics software. Data cleaning removes values that will not be transformed or associated with the database in the data warehouse. The transformation step converts the data into a format or schema the BI software can use to derive deeper insights that help management make informed decisions. See Figure 1 for a pictorial representation of a BI data pipeline.

Check the data at the source

The first part of the BI data pipeline, the source, is where all the validation checks occur. Checking structured data at the source is not complicated unless there is a large volume of it. Once unwanted data is removed, RDBMS validation features check data values before they're extracted. For example, using SQL or Excel Power Query, you can easily remove duplicate records and find missing values by creating a filter that checks for blank fields. AI tools can help check the accuracy and relevance of structured data, but people still need to verify the results. Hevo is one of several data cleaning tools on the market that can perform this step in the ETL process.
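The duplicate-and-blank check described above can be sketched in SQL. This is a minimal illustration, assuming a hypothetical `customers` table with `email` and `phone` columns; it runs the SQL through Python's built-in `sqlite3` module so it is self-contained, and the exact syntax will vary slightly between RDBMSs.

```python
import sqlite3

def clean_customers(rows):
    """Return rows with blank fields filtered out and exact duplicates removed.

    `rows` is a list of (email, phone) tuples; the table and column names
    are hypothetical and used only for illustration.
    """
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (email TEXT, phone TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", rows)
    # DISTINCT drops exact duplicate records; the WHERE clause is the
    # "blank field" filter, excluding NULLs and empty or whitespace-only strings.
    query = """
        SELECT DISTINCT email, phone
        FROM customers
        WHERE email IS NOT NULL AND TRIM(email) <> ''
          AND phone IS NOT NULL AND TRIM(phone) <> ''
        ORDER BY email
    """
    result = conn.execute(query).fetchall()
    conn.close()
    return result

raw = [
    ("ana@example.com", "555-0100"),
    ("ana@example.com", "555-0100"),   # exact duplicate
    ("ben@example.com", ""),           # blank phone
    (None, "555-0199"),                # missing email
    ("cy@example.com", "555-0142"),
]
print(clean_customers(raw))  # [('ana@example.com', '555-0100'), ('cy@example.com', '555-0142')]
```

In Excel Power Query the same idea is usually expressed with its "Remove Duplicates" and filter steps rather than SQL.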

Cleaning unstructured data involves pulling data from multiple sources, which can introduce duplicates when it is added to existing structured or unstructured data. For example, an email address, a unique username, or a home address found in unstructured data can be used to identify and remove records that duplicate data already in the source.
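Using an email address as the identifying key, a deduplication pass might look like the minimal sketch below. The record layout (dicts with `email` and `source` keys) is a hypothetical assumption, and normalizing by trimming and lowercasing is a simplification; real matching rules are often stricter.

```python
def dedupe_by_email(records):
    """Keep the first record seen for each normalized email address.

    Records without a usable email are kept as-is, since there is no key
    to match them on.
    """
    seen = set()
    unique = []
    for rec in records:
        email = (rec.get("email") or "").strip().lower()
        if not email:
            unique.append(rec)   # cannot deduplicate without a key
            continue
        if email in seen:
            continue             # duplicate of a record already kept
        seen.add(email)
        unique.append(rec)
    return unique

records = [
    {"email": "Ana@Example.com", "source": "crm"},
    {"email": " ana@example.com", "source": "support_email"},
    {"email": "ben@example.com", "source": "social"},
    {"email": None, "source": "social"},
]
print([r["source"] for r in dedupe_by_email(records)])  # ['crm', 'social', 'social']
```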

Check the data after the transformation

The transformation process converts unstructured data into a usable storage format and merges it with similar data in the data warehouse. Structured data can either keep its own database in the warehouse or be merged with another database there. Transforming unstructured data is more complicated because it must first be converted into a machine-readable format. Data parsing does this: it converts unstructured data into a format computers can read and saves it in that new format.
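As a toy illustration of parsing, the sketch below uses a regular expression to turn free-text order notes into structured fields. The note format and field names are hypothetical, and this is not how any particular parsing tool works; lines that don't match are returned separately for review rather than silently dropped.

```python
import re

# Hypothetical format for free-text order notes, e.g.
# "Order 1042 from ana@example.com: 3 units"
NOTE_PATTERN = re.compile(
    r"Order\s+(?P<order_id>\d+)\s+from\s+(?P<email>\S+@\S+?):\s+(?P<units>\d+)\s+units?",
    re.IGNORECASE,
)

def parse_notes(lines):
    """Split free-text lines into structured records and unparseable leftovers."""
    parsed, rejected = [], []
    for line in lines:
        match = NOTE_PATTERN.search(line)
        if match:
            parsed.append({
                "order_id": int(match["order_id"]),
                "email": match["email"].lower(),
                "units": int(match["units"]),
            })
        else:
            rejected.append(line)   # keep for manual review instead of dropping
    return parsed, rejected

notes = [
    "Order 1042 from Ana@Example.com: 3 units",
    "order 77 from ben@example.com: 1 unit",
    "call me back about my order!!",
]
records, leftovers = parse_notes(notes)
print(records)
print(leftovers)
```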

Grammar-driven or data-driven parsing techniques in a data parsing tool such as ProxyScape can be applied to unstructured data from sources such as scripting languages, communication protocols, and social media sites. After parsing, you may still need to remove duplicate data, repair structural errors, filter out unwanted outliers, and handle missing data. Businesses can then use the BI results from this type of unstructured data to improve their network management processes or the products and services they sell to customers.
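For the outlier-filtering step, one common approach (not necessarily what any specific tool uses) is Tukey's interquartile-range (IQR) rule. The sketch below relies on Python's standard `statistics.quantiles`; the 1.5 multiplier is the conventional default, not a universal setting, and the order totals are made up.

```python
import statistics

def filter_outliers_iqr(values, k=1.5):
    """Split values into (kept, outliers) using Tukey's IQR fences.

    Values outside [Q1 - k*IQR, Q3 + k*IQR] are treated as outliers.
    statistics.quantiles needs at least two data points.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    kept = [v for v in values if low <= v <= high]
    outliers = [v for v in values if v < low or v > high]
    return kept, outliers

order_totals = [120, 135, 128, 140, 131, 9_999, 125, 138]
kept, outliers = filter_outliers_iqr(order_totals)
print(outliers)  # [9999]
```

Flagged values are best reviewed rather than deleted automatically, since a genuine large order looks the same as a typo to this rule.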

Verify the data load

The clean, extracted, and transformed data is loaded into target tables in the data warehouse. The cleaning and extraction steps ensure the data is consistent, accurate, and error-free before it is loaded. You can verify that the data loaded correctly by testing a small sample, such as 50 records from each source, as long as you know the expected results before running the sample test. When four or five samples return the results you expect, that's a good indication the data loaded correctly and is accurate.
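The sample check can be automated. A minimal sketch, assuming source and warehouse rows can both be looked up by a shared primary key (represented here as plain dicts): it draws a random sample of keys, 50 by default to match the text, and reports any key that is missing or whose values differ after the load.

```python
import random

def verify_load_sample(source, target, sample_size=50, seed=None):
    """Compare a random sample of source records against the loaded target.

    `source` and `target` map primary key -> record. Returns a list of
    (key, problem) tuples; an empty list means the sample matched.
    """
    rng = random.Random(seed)
    keys = list(source)
    sample = rng.sample(keys, min(sample_size, len(keys)))
    problems = []
    for key in sample:
        if key not in target:
            problems.append((key, "missing in target"))
        elif target[key] != source[key]:
            problems.append((key, "value mismatch"))
    return problems

# Hypothetical data: 200 source rows, one corrupted and one dropped during the load.
source = {i: {"amount": i * 10} for i in range(200)}
target = dict(source)
target[5] = {"amount": -1}   # corrupted during load
del target[7]                # dropped during load

# Repeat the check with several different samples, as the text suggests.
for run in range(5):
    print(run, verify_load_sample(source, target, seed=run))
```

Because each run sees only a sample, a clean result is evidence rather than proof; repeating the check with different seeds raises the chance of catching isolated errors.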

Review the BI test results

Business intelligence test results are the basis for smarter business decisions, and an error can enter anywhere along the BI data pipeline. The pipeline starts with the source data, which goes through the ETL process that places validated data in the data warehouse. Users then build BI reports and dashboards on top of this data layer. For example, a user can generate a sales revenue report in the BI tool and compare it against a known sales revenue report saved in an Excel spreadsheet.
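Scripting that comparison makes it repeatable. The sketch below is a minimal illustration, assuming both the BI report and the known-good spreadsheet have been exported as per-month revenue totals (the month keys and figures are made up); a small absolute tolerance keeps rounding differences from being reported as errors.

```python
import math

def reconcile(bi_totals, known_totals, abs_tol=0.01):
    """Return {period: (bi_value, known_value)} for every period that disagrees.

    A period present in only one of the two inputs is also reported,
    with None for the missing side.
    """
    mismatches = {}
    for period in sorted(set(bi_totals) | set(known_totals)):
        bi, known = bi_totals.get(period), known_totals.get(period)
        if bi is None or known is None or not math.isclose(bi, known, abs_tol=abs_tol):
            mismatches[period] = (bi, known)
    return mismatches

bi_report = {"2023-01": 10500.00, "2023-02": 9800.004, "2023-03": 11250.00}
spreadsheet = {"2023-01": 10500.00, "2023-02": 9800.00, "2023-03": 11520.00}
print(reconcile(bi_report, spreadsheet))  # {'2023-03': (11250.0, 11520.0)}
```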

A BI testing strategy should include the following items:

⦁ A test plan that covers different data scenarios such as no data, valid data, and invalid data

⦁ A method for testing

⦁ Specific tests that are query intensive with a variety of BI results to compare against known results saved in a spreadsheet, flat file, or database.
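A test plan covering the three scenarios in the first bullet might look like this minimal sketch. `monthly_revenue` is a hypothetical stand-in for a BI query, and the expected values play the role of the known results; in practice they would be read from a spreadsheet, flat file, or database rather than hard-coded.

```python
def monthly_revenue(rows):
    """Hypothetical BI query: total revenue per month from (month, amount) rows.

    Rows with a non-numeric or negative amount are treated as invalid and
    skipped so they cannot silently distort the totals.
    """
    totals = {}
    for month, amount in rows:
        if not isinstance(amount, (int, float)) or amount < 0:
            continue
        totals[month] = totals.get(month, 0) + amount
    return totals

# Each scenario pairs an input with the known result it must produce.
SCENARIOS = {
    "no data": ([], {}),
    "valid data": ([("Jan", 100), ("Jan", 50), ("Feb", 75)], {"Jan": 150, "Feb": 75}),
    "invalid data": ([("Jan", 100), ("Jan", "n/a"), ("Feb", -20)], {"Jan": 100}),
}

def run_test_plan():
    """Return the names of scenarios whose output differs from the known result."""
    return [name for name, (rows, expected) in SCENARIOS.items()
            if monthly_revenue(rows) != expected]

print(run_test_plan())  # [] when every scenario passes
```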

How should you address data quality issues?

The recommended way to address data quality issues is at the source. An RDBMS can reject any value that isn't entered into a field in the prescribed format, such as numeric, alphanumeric, or alphabetic values of an assigned length. Free-text fields and unstructured data are more challenging, but AI tools are available to help validate these character fields.
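Rejecting badly formatted values at the database layer is usually done with column constraints. The sketch below uses SQLite (through Python's `sqlite3`) because it needs no server; the table, columns, and lengths are hypothetical, and other RDBMSs express the same rules with their own syntax, for example regular-expression checks.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE products (
        -- numeric: digits only, 1 to 6 characters
        sku TEXT NOT NULL
            CHECK (length(sku) BETWEEN 1 AND 6 AND sku NOT GLOB '*[^0-9]*'),
        -- alphanumeric: letters and digits only, 1 to 10 characters
        code TEXT NOT NULL
            CHECK (length(code) BETWEEN 1 AND 10 AND code NOT GLOB '*[^A-Za-z0-9]*'),
        -- alphabetic: letters only, 1 to 20 characters
        category TEXT NOT NULL
            CHECK (length(category) BETWEEN 1 AND 20 AND category NOT GLOB '*[^A-Za-z]*')
    )
""")

def try_insert(sku, code, category):
    """Attempt an insert; return True if accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO products VALUES (?, ?, ?)", (sku, code, category))
        return True
    except sqlite3.IntegrityError:
        return False

print(try_insert("1042", "AB12", "Snacks"))     # True
print(try_insert("10-42", "AB12", "Snacks"))    # False: non-digit in sku
print(try_insert("1042", "AB12", "Snacks 2"))   # False: space and digit in category
print(try_insert("1042", "AB12", None))         # False: NOT NULL violated
```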

How important is data quality in Business Intelligence (BI) software?

Your business decisions are only as good as the business data behind them. Making decisions based on flawed data can lead to lost customers and, eventually, lost revenue, especially if bad data is never identified as the cause of the downward spiral. Like the foundation of a house, accurate data is foundational to a successful business because business decisions are built on it.

Because data is foundational to a business, organizations need established data quality policies enforced through data quality measurements.

There are five attributes of data quality to consider before data can be defined as good data:

⦁ Accuracy

⦁ Completeness

⦁ Reliability

⦁ Relevance

⦁ Timeliness

The importance of continual data verification

Verification checks confirm that the validation process actually succeeded. Verification happens after data is migrated or merged into the data warehouse: you run a scripted sample test and review the BI results. It's necessary because it confirms that every data field was validated, and sample BI tests that reproduce known results show the data is sound. Verification should therefore be a continual process throughout the BI data pipeline, repeated whenever a data source is added or modified.


What are some common data quality issues?

Answer: Common data quality issues include inconsistency (varying data formats), inaccuracy (incorrect data), incompleteness (missing data), and duplication (repetitive data).

How can data quality issues be resolved?

Answer: Addressing data quality involves implementing data validation rules, using data cleansing tools, ensuring consistent data entry procedures, and establishing a comprehensive data governance strategy.


10 Common Data Quality Issues in Reporting and Best Practices to Overcome Them


Data is only as useful as its accuracy. A small error, say a miscalculation, can make a big difference – impacting your decision-making.

That's why data quality issues shouldn't be swept under the rug. Instead, you need to resolve them proactively to make better, more data-informed decisions and grow your business.

So, in this soup-to-nuts guide to data quality issues, we'll cover the top problems to watch for and how experts are solving them. At the end, we'll also share the best solution for resolving data quality issues.

Ready to learn? Here’s the starter, followed by the details:

- Why is data quality an issue?

- Most common data quality issues in reporting

Why Is Data Quality an Issue?

Essentially, data quality refers to the accuracy, completeness, consistency, and validity of data.

If the data at hand doesn't meet this definition, you have a data quality issue. For example, if the data sample is incorrect, you have a quality issue. Similarly, if the data source isn't reliable, you can't base your decisions on it.

Identifying and correcting data quality issues gives you data that is fit for use. Without that work, you're left with poor-quality data that does more harm than good, leading to:

- Uninformed decision making

- Inaccurate problem analysis

- Poor customer relationships

- Poor performing business campaigns

The million-dollar question, however, is: are data quality issues common enough to have such dire impacts?

The answer: yes. 40.7% of our expert respondents confirm this, saying they find data quality issues very often. Another 44.4% occasionally find quality issues, and only 14.8% say they rarely find issues with their data's quality.

This makes it clear: you need to identify quality issues in your data reporting and take preventative and corrective measures.

Most Common Data Quality Issues in Reporting

Our experts say the top two data quality issues they encounter are duplicate data and human error, each cited by a whopping 60%.

Around 55% say they struggle with inconsistent formats, and 32% deal with incomplete fields. About 22% also face issues with different languages and units of measurement.

With that, let's dig into the details. Here's the list of reporting data quality issues we'll cover:

- The person responsible doesn’t understand your system

- Human error

- Data overload

- Incorrect data attribution

- Missing or inaccurate data

- Data duplication

- Hidden data

- Outdated data

- Incomplete data

- Ambiguous data

1. The person responsible doesn’t understand your system

“The most common issue is that the person who created the report made an error because they did not fully understand your system or missed an important filter,” points out Bridget Chebo of We Are Working.

Consequently, you are left with report data that is inconsistent with your needs. Additionally, “the data you see isn’t telling you what you think it is,” Chebo says.

As a solution, Chebo advises: “ensure that each field, each automation is documented: what is its purpose/function, when it is used, what does it mean. Use help text so that users can see what a field is for when they hover over it. This will save time so they don’t have to dig around looking for field definitions.”

To this end, reporting templates are a useful way to help the people who put reports together. This kind of documentation also saves you from explaining your report requirements to every new person.
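One lightweight way to act on Chebo's advice is to keep a data dictionary alongside the reporting schema and check it automatically. This is a minimal sketch with hypothetical field names; the `purpose`, `when_used`, and `meaning` keys mirror the questions in her quote and are not a standard format.

```python
REQUIRED_DOC_KEYS = ("purpose", "when_used", "meaning")

# Hypothetical data dictionary: one entry per field used in reports.
DATA_DICTIONARY = {
    "deal_stage": {
        "purpose": "Tracks where an opportunity sits in the sales pipeline",
        "when_used": "Updated by sales reps after each customer touchpoint",
        "meaning": "One of: lead, qualified, proposal, won, lost",
    },
    "close_date": {
        "purpose": "Expected or actual date the deal closes",
        "when_used": "",
        "meaning": "Date in YYYY-MM-DD format",
    },
}

def undocumented_fields(report_fields, dictionary=DATA_DICTIONARY):
    """Return {field: [missing keys]} for fields lacking complete documentation."""
    gaps = {}
    for field in report_fields:
        entry = dictionary.get(field, {})
        missing = [key for key in REQUIRED_DOC_KEYS if not entry.get(key)]
        if missing:
            gaps[field] = missing
    return gaps

def help_text(field, dictionary=DATA_DICTIONARY):
    """Text a reporting tool could show when a user hovers over a field."""
    entry = dictionary.get(field, {})
    return entry.get("meaning") or "No description yet. Please document this field."

print(undocumented_fields(["deal_stage", "close_date", "region"]))
# {'close_date': ['when_used'], 'region': ['purpose', 'when_used', 'meaning']}
```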


2. Human error

Another common data quality issue in reports is human error.

To elaborate, “this is when employees or agents make typos, leading to data quality issues, errors, and incorrect data sets,” Stephen Curry from CocoSign highlights.

The solution? Curry recommends automating the reporting process. “Automation helped me overcome this because it minimizes the use of human effort and can be done by using AI to fill in expense reports instead of giving those tasks to employees.”

Speaking of the potential of automation, Curry writes: “AI can automatically log expenses transactions and direct purchases right away. I also use the right data strategy when analyzing because it minimizes the chances of getting an error from data capture.”

“Having the right data helps manage costs and optimize duty care while having data quality issues make your data less credible, so it’s best to manage them,” Curry concludes.


3. Data overload

“Our most common data quality issue is having too much data,” comments DebtHammer’s Jake Hill.

Too much data buries the key insights and renders the data useless. It also “can make it extremely difficult to sort through, organize, and prepare the data,” notes Hill.

“The longer it takes, the less effective our changing methods are because it takes longer to implement them. It can even be harder to identify trends or patterns, and it makes us more unlikely to get rid of outliers because they are harder to recognize.”

As a solution, the DebtHammer team has “implemented automation. All of our departments that provide data for our reports double-check their data first, and then our automated system cleans and organizes it for us. Not only is it more accurate, but it is way faster and can even identify trends for us.”


4. Incorrect data attribution

“As someone with experience in the SaaS space, the biggest data quality issue I see with products is attributing data to the wrong user or customer cohort,” outlines Kalo Yankulov from Encharge.

“For instance, I’ve seen several businesses that attribute the wrong conversion rates, as they fail to use cohorts. We’ve made that mistake as well.

When looking at our new customers in May, we had 22 new subscriptions out of 128 trials. This is a 17% trial to paid conversion, right? Wrong. Out of these 22 subscribers, only 14 have started a trial in May and are part of the May cohort. Which makes the trial conversion rate for this month slightly below 11%, not 17% as we initially thought,” Yankulov explains in detail.

Pixoul’s Devon Fata struggles similarly. “In my line of work, the issue tends to show up the most in marketing engagement metrics, since different platforms measure these things differently. It’s a struggle when I’m trying to measure the overall success of a campaign across multiple platforms when they all have different definitions of a look or a click.”

To resolve incorrect data attribution and keep it from skewing future analysis, Yankulov shares, “we have been doing our best to implement cohorts across all of our analytics. It’s a challenging but critical part of data quality.”
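Yankulov's May numbers show why cohorts matter. The sketch below recomputes both rates from hypothetical trial and subscription records shaped like his example (128 May trials; 22 May subscriptions, 14 of them from May trials). The naive rate divides every May subscription by May trials, while the cohort rate only counts subscribers whose trial started in May.

```python
def naive_conversion(trials, subscriptions, month):
    """New subscriptions in `month` divided by trials started in `month`.

    This mixes cohorts: a subscriber whose trial began earlier still counts.
    """
    started = sum(1 for t in trials if t["start"] == month)
    subscribed = sum(1 for s in subscriptions if s["month"] == month)
    return subscribed / started

def cohort_conversion(trials, subscriptions, month):
    """Share of users whose trial started in `month` who have subscribed."""
    cohort = {t["user"] for t in trials if t["start"] == month}
    converted = {s["user"] for s in subscriptions} & cohort
    return len(converted) / len(cohort)

# Hypothetical records: 128 May trials and 40 April trials;
# 22 subscriptions in May, 14 from May trials and 8 from April trials.
trials = [{"user": f"may{i}", "start": "May"} for i in range(128)]
trials += [{"user": f"apr{i}", "start": "Apr"} for i in range(40)]
subscriptions = [{"user": f"may{i}", "month": "May"} for i in range(14)]
subscriptions += [{"user": f"apr{i}", "month": "May"} for i in range(8)]

print(f"naive:  {naive_conversion(trials, subscriptions, 'May'):.1%}")   # naive:  17.2%
print(f"cohort: {cohort_conversion(trials, subscriptions, 'May'):.1%}")  # cohort: 10.9%
```

A full cohort analysis would keep counting the May cohort's conversions in later months too, which is why `cohort_conversion` does not filter subscriptions by month.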


5. Missing or inaccurate data

Data inaccuracy can seriously impact decision-making: without accurate data, you can't plan a campaign properly or correctly estimate its results.

Andra Maraciuc from Data Resident shares an experience with missing data. “While I was working as a Business Intelligence Analyst, the most common data quality issues we had were: inaccurate data [and] missing data.”

“The cause for both issues was human error. More specifically, coming from manual data entry errors. We tried to put extra effort into cleaning the data, but that was not enough.

The reports were always leading to incorrect conclusions.”

“The problem was deeply rooted in our data collection method,” Maraciuc elaborates. “We collected important financial data via free-form fields. This allowed users to type in basically anything they like or to leave fields blank. Users were inputting the same information in 6+ different formats, which from a data perspective is catastrophic.”

Maraciuc adds: “Here’s a specific example we encountered when collecting logistics costs. How we wanted the data to look like: $1000. The data we got instead: 1,000 or $1000 or 1000 USD or USD 1000 or 1000.00 or one thousand dollars, etc.”

So how did they solve it? “We asked our developers to remove ‘free-form fields’ and set the following rules:

- Allow users to only type digits

- Exclude special characters ($,%,^,*, etc)

- Exclude text characters

- Add field dedicated to currency (dropdown menu style)

For the missing data, rules were set to force users to not leave blank fields.”
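Rules like these can be enforced wherever the form is submitted. A minimal sketch, assuming the form arrives as two values, a free-text amount and a currency picked from a dropdown; the allowed currency list is hypothetical. Note that "digits only" also means no decimal point, which matches the rule as quoted.

```python
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # hypothetical dropdown options

def validate_cost_entry(amount, currency):
    """Return a list of validation errors for a logistics cost entry.

    Mirrors the quoted rules: no blank fields, digits only (which also
    excludes special and text characters), and a currency from a fixed list.
    """
    errors = []
    amount = (amount or "").strip()
    if not amount:
        errors.append("amount is required")
    elif not (amount.isascii() and amount.isdigit()):
        errors.append("amount must contain digits only")
    if not currency:
        errors.append("currency is required")
    elif currency not in ALLOWED_CURRENCIES:
        errors.append("currency must be selected from the list")
    return errors

print(validate_cost_entry("1000", "USD"))    # []
print(validate_cost_entry("$1,000", "USD"))  # ['amount must contain digits only']
print(validate_cost_entry("", None))         # ['amount is required', 'currency is required']
```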

The takeaway? “Any data quality issue needs to be addressed early on. If you can fix the issue from the roots, that’s the most efficient thing long term, especially when you have to deal with big data,” in Maraciuc’s words.


6. Data duplication

At Cocodoc, Alina Clark writes, “Duplication of data has been the most common quality concern when it comes to data analysis and reporting for our business.”

“Simply put, duplication of data is impossible to avoid when you have multiple data collection channels. Any data collection systems that are siloed will result in duplicated data. That’s a reality that businesses like ours have to deal with.”

At Edoxi, Sharafudhin Mangalad shares they see the same issue. “Data inconsistency is one of the most common data quality issues in reporting when dealing with multiple data sources.

Many times, the same records might appear in multiple databases. Duplicate data create different problems that data-driven businesses face, and it can lead to revenue loss faster than any other issue with data.”

The solution? “Investing in a data duplication tool is the only antidote to data duplication,” Clark advises. “If anything, trying to manually eradicate duplicated data is too much of a task, especially given the enormous amounts of data collected these days.

Using a third-party data analytics company can also be a solution. Third-party data analytics takes care of duplicated data before it lands on your desk, but it may be a costly alternative compared to using a tool on your own.”

So while a deduplication tool might be costly, it saves you time and work. It also reduces the room for human error and, by eliminating a leading data quality issue, saves you money in the long run.
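Deduplication tools usually catch near-duplicates, not just exact copies. As a rough illustration of the idea (not how any specific product works), the sketch below flags near-duplicate customer names collected through different channels using the standard library's `difflib.SequenceMatcher`; the 0.85 threshold is an arbitrary assumption that would need tuning on real data.

```python
from difflib import SequenceMatcher
from itertools import combinations

def normalize(name):
    """Lowercase and collapse whitespace so trivial differences don't matter."""
    return " ".join(name.lower().split())

def near_duplicates(records, threshold=0.85):
    """Return pairs of record ids whose names are at least `threshold` similar."""
    pairs = []
    for a, b in combinations(records, 2):
        ratio = SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()
        if ratio >= threshold:
            pairs.append((a["id"], b["id"]))
    return pairs

# Hypothetical records from three collection channels.
customers = [
    {"id": "web-1", "name": "Jonathan Smith"},
    {"id": "store-7", "name": "jonathan  smith"},
    {"id": "app-3", "name": "Jonathon Smith"},
    {"id": "web-2", "name": "Maria Garcia"},
]
print(near_duplicates(customers))
```

Comparing every pair is quadratic, so at scale tools typically group candidates first (for example by postcode or email domain) before fuzzy matching.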

7. Hidden data

Hidden data refers to valuable information that is not being harnessed by the organization. This information often holds the potential to optimize processes and provide insights, yet remains unexploited, typically due to a lack of coherent and centralized data collection strategy within the company.

The accumulation of hidden data isn’t just a missed opportunity – it also reflects a lack of efficiency and alignment in a company’s data strategy. It can lead to inaccurate analytics, misguided decisions, and the underutilization of resources, impacting the overall data quality and integrity.

The best way to fix this problem is to centralize data collection and management. By bringing all data together in one place, a company can fully use its data, improving its quality and usefulness and helping to make better decisions.

8. Outdated data

Data, much like perishable goods, has a shelf-life and can quickly become obsolete, resulting in what is known as ‘data decay’.

Having data out of sync with reality can compromise the quality of the data and lead to misguided decisions or inaccurate predictions.

The problem of outdated data isn’t just an accuracy concern – it also reflects a lack of routine maintenance in a company’s data management strategy. The consequences can extend to poor strategic planning, inefficient operations, and compromised customer relationships, all of which can negatively impact business outcomes.

The most effective solution to prevent data decay is regularly reviewing and updating your data. By setting reminders to perform routine data checks, you can ensure your data remains fresh and relevant, enhancing its reliability and usefulness in decision-making processes. This proactive approach to data management can significantly improve data quality, leading to more accurate insights and better operational efficiency.
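Routine checks like these can be partly automated. The sketch below assumes each record carries a `last_verified` timestamp (a hypothetical field) and uses an illustrative 180-day threshold to list the records due for review.

```python
from datetime import datetime, timedelta, timezone

def stale_records(records, max_age_days=180, now=None):
    """Return ids of records not verified within `max_age_days`.

    Records with no timestamp at all are treated as stale.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [r["id"] for r in records
            if r.get("last_verified") is None or r["last_verified"] < cutoff]

now = datetime(2024, 3, 1, tzinfo=timezone.utc)
contacts = [
    {"id": 1, "last_verified": datetime(2024, 1, 15, tzinfo=timezone.utc)},
    {"id": 2, "last_verified": datetime(2023, 5, 2, tzinfo=timezone.utc)},
    {"id": 3, "last_verified": None},
]
print(stale_records(contacts, now=now))  # [2, 3]
```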

9. Incomplete data

Incomplete data refers to data sets that lack specific attributes, details, or records, creating an incomplete picture of the subject matter.

For a company’s data to provide genuine value, it must be accurate, relevant, and most importantly, complete. Ensuring data completeness requires a robust data management strategy that includes meticulous data collection, regular validation, and constant upkeep.

By implementing these practices, you can maintain the quality of your data, resulting in more reliable insights and effective decision-making.
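Regular validation can include a completeness score per attribute. A minimal sketch, assuming records are dicts and that `None` or an empty or whitespace-only string counts as missing; the field names and the 0.9 threshold are hypothetical.

```python
def completeness(records, fields):
    """Return {field: share of records with a non-empty value}."""
    def present(value):
        return value is not None and not (isinstance(value, str) and not value.strip())
    total = len(records)
    return {
        f: (sum(present(r.get(f)) for r in records) / total if total else 0.0)
        for f in fields
    }

def incomplete_fields(records, fields, threshold=0.9):
    """Fields whose completeness falls below `threshold`, sorted by name."""
    scores = completeness(records, fields)
    return sorted(f for f, score in scores.items() if score < threshold)

leads = [
    {"name": "Ana", "email": "ana@example.com", "company": "Acme"},
    {"name": "Ben", "email": "", "company": "Globex"},
    {"name": "Cy", "email": "cy@example.com", "company": None},
    {"name": "Di", "email": "di@example.com", "company": "Initech"},
]
print(completeness(leads, ["name", "email", "company"]))
# {'name': 1.0, 'email': 0.75, 'company': 0.75}
print(incomplete_fields(leads, ["name", "email", "company"]))
# ['company', 'email']
```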

10. Ambiguous data

Ambiguity in data refers to situations where information can be interpreted in multiple ways, leading to confusion and misunderstanding. This often occurs when data is improperly labeled, unstandardized, or lacks context, causing it to be unclear or open to various interpretations.

Companies should strive for clarity and consistency in data collection and management practices. This involves ensuring data is accurately labeled, properly contextualized, and adheres to standardized formats. Regular data audits can also be helpful in identifying and rectifying instances of ambiguity.
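A standardized format can be enforced with a mapping from known variants to one canonical label, routing anything unrecognized to a review list instead of guessing. The variants and labels below are hypothetical examples.

```python
# Hypothetical mapping of observed variants to one canonical label.
CANONICAL_COUNTRY = {
    "us": "United States",
    "u.s.": "United States",
    "usa": "United States",
    "united states": "United States",
    "uk": "United Kingdom",
    "u.k.": "United Kingdom",
    "united kingdom": "United Kingdom",
}

def standardize(values, mapping=CANONICAL_COUNTRY):
    """Map each value to its canonical label; collect unknowns for a data audit."""
    cleaned, needs_review = [], []
    for value in values:
        key = (value or "").strip().lower()
        label = mapping.get(key)
        if label is None:
            needs_review.append(value)
        cleaned.append(label)   # None marks a value that still needs a decision
    return cleaned, needs_review

cleaned, review = standardize(["USA", "u.s.", " UK ", "Britain"])
print(cleaned)  # ['United States', 'United States', 'United Kingdom', None]
print(review)   # ['Britain']
```

Reviewing the `needs_review` list during regular audits is also how the mapping grows over time.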

Get Rid of Data Quality Issues Today

In short, data inconsistency, inaccuracy, overload, and duplication are some of the leading problems that negatively impact the quality of data reporting. Not to mention, human error can lead to bigger issues down the line.

Want an all-in-one solution that solves these issues without requiring work from your end? Manage reporting via our reporting software.

All you have to do is plug in your data sources. From there, Databox takes on automatic uploading and updating of data from the various sources you’ve linked it to. At the end of the day, you get fresh data in an organized fashion on visually engaging screens.

So what are you waiting for? Gather, organize, and use data seamlessly – sign up for Databox today for free.


To solve this problem, you need a data quality management tool that automatically profiles the datasets, flagging quality concerns. Some may include adaptive rules that continue to learn from data, ensuring that discrepancies are resolved at the source and that data pipelines only supply trustworthy data.


For example, a sudden drop in customer engagement could be due to data quality problems. 2. Data profiling. Frequency distribution: Check the frequency distribution of your variables to detect outliers or anomalies. Summary statistics: Basic statistical measures like mean, median, and standard deviation can offer insights into data quality.
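Both profiling checks can be run with the Python standard library alone. The sketch below uses made-up values: `statistics` supplies the summary measures, and `collections.Counter` gives the frequency distribution of a categorical column.

```python
import statistics
from collections import Counter

def profile_numeric(values):
    """Summary statistics for a numeric column (needs at least two values)."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }

def frequency_distribution(values):
    """Counts per distinct value, most common first."""
    return Counter(values).most_common()

order_values = [20, 22, 19, 21, 20, 500]
print(profile_numeric(order_values))
print(frequency_distribution(["US", "US", "CA", "us", "US", None]))
```

Here the mean (about 100) sits far above the median (20.5), a gap that points to an outlier worth inspecting, and the frequency table lists `US` and `us` separately alongside a `None`, hinting at inconsistent formatting and missing values.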

Robust data quality monitoring can solve many data quality issues. 1. Inaccurate Data. Gartner says that inaccurate data costs organizations $12.9 million a year, on average. Inaccurate data is data that is wrong—customer addresses with the wrong ZIP codes, misspelled customer names, or entries marred by simple human errors. Whatever the ...


No. 4: Use data profiling early and often. Data quality profiling is the process of examining data from an existing source and summarizing information about the data. It helps identify corrective actions to be taken and provides valuable insights that can be presented to the business to drive ideation on improvement plans.


Data quality issues, if not treated early, will cascade and affect downstream; The only solution to these problems is to ensure that cleaning data is easy, quick, and natural. We need tools and technologies that help us, the data scientists, quickly identify and resolve data quality issues to use our valuable time in analytics and AI — the ...

Imagine having clean, good-quality data for all your analytics, machine learning and decision-making. Yes — I can’t imagine it either! The inherent characteristic of data is its quality, which will deteriorate even with the most robust controls. 100% accuracy and completeness don’t exist, which is also not the point.

2. Enlist data quality champions and data stewards. In connection with the first step, internal champions for a data quality program can help to evangelize its benefits. Data quality champions should come from all levels of the organization, from the C-suite to operational workers.