NN/g UX Podcast

By Nielsen Norman Group

Bonus Episode: Design’s Role as AI Expands (Feat. Don Norman and Sarah Gibbons, VP at NN/g)

NN/g UX Podcast, Mar 28, 2024

AI is changing faster than we can sometimes process and will likely do so for a while. The recent tech layoffs have also not spared UX professionals, adding to the uncertainty about our future roles in this rapidly changing environment. While there is still much we don't know about AI, Don Norman, co-founder of NN/g, and Sarah Gibbons, VP at NN/g, share their insights on the future roles of designers, encouraging professionals to think big in the wake of AI's advancements.

Related NN/g Articles & Training Courses

- Generative UI and Outcome-Oriented Design

- AI as a UX Assistant

- The 4 Degrees of Anthropomorphism of Generative AI

- AI Chat Is Not (Always) the Answer

- AI-Powered Tools for UX Research: Issues and Limitations

- Prompt Structure in Conversations with Generative AI

- Sycophancy in Generative-AI Chatbots

- New Course: Practical AI for UX Professionals

Other Related Content

- Don Norman’s Website

0:00-1:12 - Intro

1:13-18:18 - What will design’s role be as AI expands?

18:18-23:15 - What is going to stay the same as AI expands?

23:16-end - Rapid Fire Questions

37. XR: Improving Learning Outcomes

Extended reality (XR) experiences hold the potential to transform the way we learn, work, and collaborate. Specifically, they can make educational experiences more interactive and engaging, and ultimately drive higher learning outcomes. In this episode, we feature Jan Plass, who discusses the affordances of XR technology, provides examples of XR learning experiences, and shares his expectations for its impact on the education landscape. Jan Plass is a Professor at New York University, Paulette Goddard Chair, and Director of CREATE.

Learn more about Jan Plass:

- CREATE Lab

- Looking Inside Cells

- On the Morning You Wake

- Verizon AR/VR Learning Apps (e.g., Visceral Science, Mapper's Delight, UNSUNG, Looking Inside Cells)

NN/g Articles & Training Courses

- Augmented/Virtual Reality vs. Computer Screens

- 10 Usability Heuristics Applied to Virtual Reality

- Virtual Reality and User Experience

- Emerging Patterns in Interface Design (full-day/2 half-day UXC course)

The Design of Everyday Things

Chapters:

- 0:00-3:13 - Intro

- 3:13-5:35 - What is XR?

- 5:35-8:54 - XR as a Learning Opportunity

- 8:54-12:19 - Examples of XR for Learning

- 12:19-15:30 - Evidence of Learning with VR Technology

- 15:30-18:51 - Can VR Features Induce Emotions and Result in Better Learning Outcomes?

- 18:51-23:45 - Comparing UX in 2D and 3D Spaces

- 23:45-27:41 - Accessibility and Inclusivity in XR

- 27:41 - XR Development: The Role of Affordances

36. AI & UX: Innovations, Challenges, and Impact

The surge of AI is currently changing the way we work and live. To avoid feeling left behind, it is important to engage with and understand these tools. This is as true for UX designers as for almost everybody else. In this episode, we feature Henry Modisett from Perplexity AI and Kate Moran to get two insightful perspectives on the current state of AI and its connection to UX.

- Learn more about Henry Modisett

- Learn more about Kate Moran

- Perplexity.AI

NN/g Articles & Training Courses

- AI for UX: Getting Started (free article)

- AI & Machine Learning Will Change UX Research & Design (free video)

- AI Improves Employee Productivity by 66% (free article)

- AI-Powered Tools for UX Research: Issues and Limitations (free article)

- AI: First New UI Paradigm in 60 Years (free article)

- Sycophancy in Generative-AI Chatbots (free article)

- Information Foraging with Generative AI: A Study of 3 Chatbots (free article)

- The ELIZA Effect: Why We Love AI (free article)

- Practical AI for UX Professionals (full-day/2 half-day UXC course)

Chapters:

- 0:00 - Intro

- 1:43 - Perplexity AI: History & Value Proposition

- 10:30 - Perplexity AI: Next Steps & Future Developments

- 15:04 - Making AI User Friendly: Generative Interfaces

- 24:33 - Understanding AI as a Consumer Product

- 28:28 - Mental Models for AI

- 30:41 - An Outlook for AI

- 35:51 - NN/g’s Role in the AI Movement

- 37:34 - Anthropomorphism of AI

- 40:07 - Dealing with the Fear of AI

35. Wireframing & Prototyping (feat. Leon Barnard, Content Manager, Balsamiq)

Wireframing is an incredibly useful design technique to flesh out conceptual ideas and communicate them to others. However, with the advent of powerful prototyping tools, some designers may consider it an irrelevant or outdated practice. Leon Barnard, Content Manager at Balsamiq and co-author of the book Wireframing for Everyone, discusses why he thinks wireframing is not only still relevant, but essential for maximizing creativity and innovative thinking.

- Connect with Leon Barnard

- Balsamiq Wireframing Academy

- Wireframing for Everyone (book) by Michael Angeles, Leon Barnard, & Billy Carlson

How to Draw a Wireframe (Even if You Can’t Draw) (free article)

UX Basics: Study Guide (collection of free articles)

UX Basic Training (UX certification course)

UX Deliverables (UX certification course)

Visual Design Fundamentals (UX certification course)

34. Data-Driven Decision-Making and Intranet Design (feat. Christian Knoebel and Charlie Kreitzberg, Princeton University)

We often talk about measuring progress, but it can sometimes be harder to do in practice than in theory, especially when you’re combining mixed research methods. However, some teams have managed to not only make sense of the data, but use it to make award-winning designs. In this episode, we feature two members of Princeton University’s award-winning intranet team: Charlie Kreitzberg, Senior UX Advisor, and Christian Knoebel, Director of Digital Strategy. They explain how they combined two frameworks, the 5D Rubric and pairLab, to bridge the gap between UX and product design.

Learn about the 5-D Rubric

Learn about pairLab

Related NN/g resources:

- 10 Best Intranets of 2022 (the year Princeton University won) (free article)

- Intranet & Enterprise Design Study Guide (free article)

- Past Intranet Design Annual: 2022 (the year Princeton University won) (534-page report)

- Intranet Design Annual: 2023 (most recent awards) (483-page report)

33. Tracking UX Progress with Metrics (feat. Dr. John Pagonis, UXMC, Qualitative and Quantitative Researcher)

Measuring a user experience can be intimidating at first, but it's an essential part of determining whether your work is moving in the right direction (and by how much). UX Master Certified Dr. John Pagonis shares his experiences from working with other organizations on measuring UX improvements and interpreting quantitative data.

Learn more about John Pagonis:

- Connect on LinkedIn

- Watch a past keynote talk: Usefulness measurement: A practical guide for all UXers (37 min video)

NN/g Resources about Usability and UX Metrics:

- Usefulness, Utility, and Usability by Jakob Nielsen, PhD (2-min video)

- The UX Unicorn Myth (decathlon analogy, explained, 2-min video)

- Usability 101 by Jakob Nielsen, PhD (free article)

- Measuring UX and ROI (full-day course for UX certification)

- UX Metrics and ROI by Kate Moran (297-page report)

NN/g Articles about Microsoft's Desirability Toolkit and Adjectives List

- Using the Microsoft Desirability Toolkit to Test Visual Appeal (free article)

- Microsoft Desirability Toolkit Product Reaction Words (free article)

Jeff Sauro, PhD on UX-Lite, UMUX-Lite, SUS, SEQ

(an aside: his website, MeasuringU.com, is an excellent resource)

- 10 Things To Know About The Single Ease Question (SEQ)

- Measuring Usability with the System Usability Scale (SUS)

- 5 Ways to Interpret a SUS Score

- Measuring Usability: From the SUS to the UMUX-Lite

- Evolution of the UX-Lite

Quick Update: Episodes Now On YouTube!

(But don't worry, we will still continue publishing future episodes on Spotify, Apple Podcasts, and many other platforms!)

Check out our YouTube Channel here

Go to our YouTube Podcast playlist

32. Conducting Research with Employees (feat. Angie Li, UXMC, Senior Manager of Product Design at Asurion)

Research with employees might sound easy in theory: you work in the same company as the people you need to observe or interview, and you might not even need to pay them for input. However, there's a bit more to it than meets the eye. In this episode, we feature UX Master Certified Angie Li, and she discusses what has helped her organize and run successful research with internal team members.

Learn more about Angie Li

NN/g Articles & Training Courses

- Employees as Usability-Test Participants (free article)

- Structuring Intranet Discovery & Design Research (free article)

- Ethnographic User Research (full-day/2 half-day UXC course)

- User Interviews (full-day/2 half-day UXC course)

- Usability Testing (full-day/2 half-day UXC course)

31. Service Design 101 (feat. Thomas Wilson, UXMC, Senior Principal Service Designer & Strategist)

Members of our UX Master Certified community are applying UX principles to their work in a range of different ways. In this episode, we interview Thomas Wilson, Senior Principal Service Designer at United Healthcare, and discuss what service design is and what makes it challenging and rewarding.

Learn more about Thomas Wilson: LinkedIn

NN/g Service Design Resources:

- Service Blueprinting (full-day or half-day formats)

- Service Design Study Guide (free article with links to many more)

- Learn more about UX Master Certification

Some of the (many) people and organizations Thomas mentioned:

- Service Design Network

- Adam St. John Lawrence

- Marc Stickdorn

- Anthony Ulwick

30. Stakeholder Relationships (feat. Sarah Gibbons, Vice President at NN/g)

Building stakeholder relationships is no easy task but it is crucial for getting buy-in, aligning expectations and ultimately building trust. But, what even is a "stakeholder" and how do you know if you have good relationships built? In this episode, we feature a conversation between Samhita Tankala and Sarah Gibbons who discuss the challenges that come with stakeholder management and how to build successful relationships.

Learn more about Sarah Gibbons

Related NN/g articles, videos, and courses:

- Successful Stakeholder Relationships (UX Certification course)

- How to Sell UX: Translating UX to Business Value (Video)

- How to Collaborate with Stakeholders in UX Research (Article)

- UX Stories Communicate Designs (Article)

- Making a Case for UX in 3 Steps (Video)

- Stakeholder Analysis for UX Projects (Article)

- UX Stakeholders: Study Guide

Other sources Sarah mentioned:

- The Five Dysfunctions of a Team by Patrick Lencioni

29. UX Mentorship (feat. Tim Neusesser, UX Specialist at NN/g, and Travis Grawey, Director of Product Design at OfficeSpace)

The importance of mentorship for personal and professional development is an often-discussed topic, yet it can be challenging to find a mentor or become a mentor yourself. In this episode, we feature a conversation between a mentee, NN/g’s Tim Neusesser, and his mentor, Travis Grawey, who discuss how mentorship has impacted both of their careers.

Learn more about Tim Neusesser

Learn more about Travis Grawey (who mentors along with many others at ADPList)

Related NN/g articles & videos:

- Why You Need UX Mentoring (video)

- The C's of Great UX Mentorship (video)

- Finding UX Mentors (video)

- UX Careers Report (free report)

28. Games User Research (feat. Steve Bromley, author and games user researcher)

Have you ever wondered what it's like to usability test a video game? Or what goes on behind the scenes of gaming studios as they prepare for big release dates? In this episode, games user researcher Steve Bromley shares how he got into the field, and what makes games different from traditional user experiences.

Steve's Website: gamesuserresearch.com; Steve's Book: How To Be A Games User Researcher

Other websites Steve Mentioned

- A Theory Of Fun For Game Design (Raph Koster)

- A Playful Production Process

- Game Developer Conference Videos

NN/g's Free Articles and Videos on Games & Gamification:

- 10 Usability Heuristics Applied to Video Games (article)

- Games User Research (article)

- Video Game Design and UX (video)

- Gamification in the User Experience (video)

- Psychology & UX Study Guide (free study guide)

NN/g UX Certification Courses (full-day or half-day formats)

- Persuasive & Emotional Design

- The Human Mind and Usability

27. Customer Journey Management (feat. Kim Salazar, Sr. UX Specialist at NN/g and Jochem van der Veer, CEO/Co-Founder of TheyDo)

If you ask experienced UX practitioners how to stay user-centric, you’ll inevitably hear something about the importance of customer journeys. However, as teams become more mature in their UX practices, the number of journeys being tracked and analyzed has been growing, sometimes faster than teams can manage, leading to scattered and uncoordinated redesign efforts. In this episode, we hear some tips about customer journey management from Kim Salazar of NN/g and Jochem van der Veer of TheyDo, a customer journey management platform.

Learn more about the episode guests:

Kim Salazar, Senior UX Specialist at NN/g

Jochem van der Veer, Co-Founder & CEO, TheyDo / TheyDo.com

Related NN/g courses & reports:

- Customer-Journey Management (full-day / 2 half-days course)

- Operationalizing CX: Organizational Strategies for Delivering Superior Omnichannel Experiences (142-page report)

- The Practice of Customer-Journey Management (free article)

- What is Journey Management? (3 min video)

26. The Evolution of UX (feat. Dr. Jakob Nielsen, Co-Founder & Principal, NN/g)

The UX industry has recently seen what some call "unprecedented" shifts, with layoffs and generative artificial intelligence rapidly changing how UX work is done. However, there have been similarly turbulent periods in tech in decades past. Dr. Jakob Nielsen reflects on the changes that have taken place over the nearly 25 years Nielsen Norman Group has been around, and discusses whether generative AI is just a phase or truly the next chapter of UX work.

Learn more about Dr. Jakob Nielsen

Past keynote speeches by Dr. Jakob Nielsen

Topics Covered:

- 1:45 - Dr. Jakob Nielsen's "origin story"

- 3:46 - What UX was like during the "dot-com boom"

- 6:30 - How the UX profession has changed since the "dot-com boom"

- 8:21 - Generative AI - a phase or the next chapter of UX work?

- 13:26 - How AI will shift the labor of UX away from pixel-pushing and toward orchestration/editorializing

- 16:58 - The future of UX: what keeps Dr. Jakob Nielsen inspired

- 19:34 - Balancing the pressure to build new features vs. fixing existing infrastructures (UX debt)

- 23:00 - Foundational UX ideologies: who is responsible for a good experience; matching how people actually behave

- 25:17 - Advice for people new to UX

- 27:40 - Advice for experienced UX professionals in preparing for a future with AI

25. Discount Usability: Expert Reviews and Heuristic Evaluations (feat. Evan Sunwall, UX Specialist, NN/g)

With the recent surge in tech layoffs, a smaller UX workforce means UX research is harder to do, so research needs to be prioritized intentionally. Discount inspection methods like expert reviews and heuristic evaluations can help identify high-priority design issues that need further research and design effort. In this episode, Evan Sunwall offers some insight into how to facilitate and communicate the results of these inspection methods.

Connect with Evan Sunwall on LinkedIn - https://www.linkedin.com/in/esunwall/

Evan's Recommended Further Reading on NN/g:

- Jakob Nielsen's 10 Usability Heuristics (article) The classic heuristics for effective interaction design used by many UX professionals to evaluate digital experiences.

- Heuristic Evaluation of User Interface (video)

- How to Conduct a Heuristic Evaluation (article)

- How to Conduct an Expert Review (article)

Other Books:

- Don't Make Me Think (book) - a very short and easy-to-read primer on usability and its role in creating successful products.

24. Artificial Intelligence: What Is It? What Is It Not? (feat. Susan Farrell, Principal UX Researcher at mmhmm.app)

The term artificial intelligence (AI) is having a bit of a boom, with the explosion in popularity of tools like ChatGPT, Lensa, DALL•E 2, and many others. The praises of AI have been equally met with skepticism and criticism, with cautionary tales about AI information quality, plagiarism, and other risks. Susan Farrell, the Principal UX Researcher at mmhmm, shares a bit about her experiences in researching chatbots and AI-driven tools, and defines what AI is, what it isn’t, and what teams should consider when implementing AI systems.

Susan Farrell's social media: LinkedIn; Mastodon

What Susan is working on: mmhmm.app

NN/g courses referenced in this episode:

- Design Tradeoffs & UX Decision Making (full-day and 2-day course)

- Emerging Patterns in Interface Design (full-day and 2-day course)

Recommended Reading to deep dive into artificial intelligence & machine learning:

- Age of Invisible Machines - Robb Wilson

- The Promise and Terror of Artificial Intelligence - Os Keyes

- Becoming a chatbot: my life as a real estate AI’s human backup

- The Invisible Workforce that Makes AI Possible

- For Humans Learning Machine Learning

- What are large language models (LLMs), why have they become controversial?

- On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?

Interested in working for us? Check out our job posting and apply by Jan. 30, 2023.

23. Building Better Products with a Better Mindset (feat. Ryan Hudson-Peralta, Principal Experience Designer at Rocket Homes)

Building exceptional experiences usable by all is no easy feat. Focusing on what can be influenced and accepting what cannot be changed are two critical steps to crafting better products... and careers. We spoke with Ryan Hudson-Peralta, a principal experience designer at Rocket Homes, who often introduces himself as a "father, husband, international speaker, and designer... who just happens to be born without hands." Ryan shares a bit about his journey into the UX field, and how his mindset of curiosity and positivity allows him to create meaningful experiences with his designs and with his career.

Learn more about Ryan Hudson-Peralta: lookmomnohands.com

If you would like to learn about UX design, we have thousands of articles and videos at our website www.nngroup.com.

Full-Day Design Courses (also available in 2 half-day formats)

- UX Basic Training

- Web Page UX Design

- Application Design

- Mobile User Experience

- Designing Complex Apps for Specialized Domains

22. UX, Big Data, and Artificial Intelligence (feat. Kenya Oduor, Ph.D., founder of Lean Geeks)

How has the UX field changed over the years? What does the future of UX work look like if scope is wildly expanding? Will we be automated out of a job? What should teams do to ensure they’re not misinterpreting data? Kenya Oduor shares her thoughts on these questions and more, offering suggestions for UX professionals wishing to set themselves up for success in a future of coexistence with artificial intelligence systems and automation. (Surprisingly, these tips are quite helpful for planning and analyzing quantitative user research as well.)

Learn more about Kenya Oduor, Ph.D. and Lean Geeks: Bio, LinkedIn, Twitter, YouTube, LeanGeeks.net

Related NN/g Articles & Videos:

The Relationship Between Artificial Intelligence and User Experience (4 min video)

AI & Machine Learning Will Change UX Research & Design (11 min video)

10 Usability Heuristics in Interface Design (article)

Visibility of System Status (Usability Heuristic #1) (article)

Hierarchy of Trust: The 5 Experiential Levels of Commitment (article)

Related NN/g Course: Emerging Patterns in Interface Design (UXC course - full day or 2 half-days)

21. Making Your Visual Designs Work Harder For You (feat. Kelley Gordon, Digital Design Lead at NN/g)

Visual design takes a lot more work than just making things look pretty, and is a fundamental part of many users' experiences. Our digital design lead, Kelley Gordon, shares some practical tips for both designers and "non-designers" alike to strengthen visual designs and improve working relationships.

Read more about Kelley Gordon here

Follow us on Instagram @nngux

Full-day courses and 1-Hour Talks referenced in this episode:

- Design Critiques: What, How, and When (1-Hour Talk)

- Web Page UX Design (UXC Course)

- Visual Design Fundamentals (UXC Course)

20. What Makes a "Good" UX Professional?

19. Designing a UX Career (feat. Sarah Doody, Founder of the Career Strategy Lab)

Have you thought about your career lately? Chances are you have, given the buzz around the record-high voluntary resignation rates during this “Great Resignation.” In this episode, Sarah Doody, UX Researcher, Experience Designer, and Founder of the Career Strategy Lab, shares her thoughts on how UX professionals can design their ideal careers, whether they’re in the market for a new role or not.

Read more about Sarah Doody:

- Website (sarahdoody.com)

- Twitter (@sarahdoody)

- LinkedIn (/in/sarahdoody)

- Instagram (@sarahdoodyux)

NN/g Resources on UX Careers:

- Jakob Nielsen: How to Start A New Career in UX (2-min video)

- UX Careers (free 90-page report)

- Growing in your UX Career: A Study Guide (article)

- Portfolios for UX Researchers (article)

Interested in working with us? Apply to this posting by April 4th

18. Presenting UX Work in a Compelling Way (feat. David Glazier, Senior Staff UX Designer at Illumina)

UX professionals often find themselves saddled with the burden of convincing others to value UX work. David Glazier, Sr. Staff UX Lead of Digital Experience at Illumina, tells his story about how effective communication helped him get buy-in from stakeholders and key decision-makers.

Read more about David Glazier on LinkedIn

Courses & Talks mentioned in this episode:

- Storytelling to Present UX Work (live, full-day UX Certification course)

- VR & User Research (1-hour recorded talk by David Glazier)

- Presenting to Stakeholders (1-hour recorded talk, related

More articles, videos, and upcoming training can be found at www.nngroup.com

17. User Research Trends: What's Changed and What Hasn't (feat. Erin May and JH Forster, hosts of Awkward Silences)

There is no question that user research has changed over the last two years, but how significant are those changes? The answer is complex, but User Interviews' Erin May (VP Growth & Marketing) and JH Forster (VP Product) demystify these trends and share their observations and hopes for the years to come.

Check out the Awkward Silences podcast by User Interviews

Erin May: Twitter (@erinhmay), LinkedIn

John-Henry (JH) Forster: Twitter (@jhforster), LinkedIn

User Interviews: Twitter, LinkedIn, Facebook

Recruiting and Screening Candidates for User Research Studies (article)

Qualitative Research Study Guide (article)

Quantitative Research Study Guide (article)

Related 1-Hour Talks:

Remote Research Trends (1-Hour Talk)

How Inclusive Design Expands Business Value (1-Hour Talk)

Upcoming Training Events:

Virtual UX Conference January 8-21 (2 half-day format)

Qualitative Research Series April 4-8 (5-day training event)

User Interviews Links:

The State of User Research 2021 Report

User Research Field Guides

Recruit 3 research participants for free on UserInterviews.com

16. What's up with DesignOps? (feat. Kate Kaplan, Insights Architect at NN/g)

As design teams mature in size and scope, the importance of intentionally designing and systematizing processes, approaches, and tools becomes increasingly difficult to ignore. Kate Kaplan shares insights from her research studying design teams and offers tips for those seeking to initiate and lead DesignOps efforts as a way to make design more impactful at their organizations.

Learn more about Kate Kaplan on LinkedIn and nngroup.com

NN/g Resources Mentioned:

- DesignOps 101 (free article)

- DesignOps Maturity (free article)

- 6 Levels of UX Maturity (free article)

- UX Maturity Stage 3: Emergent (free article)

- DesignOps: Scaling UX Design and User Research (UXC course)

Other Links Mentioned: Rosenfeld Media’s DesignOps Summit: https://rosenfeldmedia.com/designopssummit2021/

A note on companies to follow, from Kate: "There are so many teams at different organizations publicly sharing and publishing their DesignOps journeys and experiments, and I think that willingness to share is amazing and points to the greater community being built around DesignOps. Some that come top of mind to follow are Salesforce, Cisco, IBM, Airbnb, Pinterest, Athena Health, Atlassian. Most of these companies have internal design blogs or Medium channels where they share their approaches."

15. The Metaverse, Blockchain, and UX (feat. Geoff Robertson, Founder & UX Specialist at Chockablock)

A two-part podcast episode discussing the implications of decentralized computing and mixed reality on the future of UX work.

Geoff's Website: geoffrobertson.me

1-Hour Talks discussed in the episode:

- Blockchain 101

- Blockchain & UX

Related courses:

- Emerging Patterns in Interface Design (UX Certification eligible course)

Upcoming online events:

- Intranet and Employee Experience Symposium: Nov 9-10

- Qualitative Research Series (5-Days): Nov 15-19

14. Keeping Product Visions User-Centered (feat. Anna Kaley, UX Specialist at NN/g)

Product visions can align a team and inspire a better future state. When visions stem from real user needs, the ideas that follow have the greatest potential for success. NN/g UX Specialist Anna Kaley discusses keeping users at the center of product development, and shares insights from next week's UX Vision and Strategy Series, presented alongside Chief Designer Sarah Gibbons.

Read more about Anna Kaley (NN/g bio)

Referenced courses and training series:

- UX Vision and Strategy Series (Oct 18-22, 2021) with Anna Kaley and Sarah Gibbons

- Product and UX: Building Partnerships for Better Outcomes

- Being a UX Leader: Essential Skills for Any UX Practitioner

- Lean UX and Agile

Related (free) videos & articles:

- UX Vision (3-min video by Anna Kaley) Create an aspirational view of the experience users will have with your product, service, or organization in the future. This isn't fluff, but will guide a unified design strategy. Here are 5 steps to creating a UX vision.

- UX Roadmaps: Definition and Components (article by Sarah Gibbons)

13. Special Edition: What's the "UX hill" you would die on?

To kick off Season 2, we're releasing a special edition episode, inspired by a tweet posted by @AllisonGrayce in Feb 2021, where she asks followers, "what's a #ux hill you regularly die on?" Host Therese Fessenden asks both NN/g team members and members of the UXC and UXMC community what issues, topics, and principles they fiercely stand by, and shares their answers.

Guests and submissions featured in the episode (in order):

Chris Callaghan (UXMC) - UX and Optimisation Director (Manchester, UK) Twitter: @CallaghanDesign

Kara Pernice - Sr. VP at Nielsen Norman Group Bio: nngroup.com/people/kara-pernice

Mary Formanek (UXMC) - Senior User Experience + Product Lead Engineer (Arizona, US) Mary's Article: "Label Your Icons: No, we can’t read your mind. Please label your icons." TikTok: @UXwithMary

Ben Shih - UX Consultant and Product Designer (Stockholm, Sweden) Portfolio: benshih.design

Rachel Krause - UX Specialist at Nielsen Norman Group Bio: nngroup.com/people/rachel-krause/

Anna Kaley - UX Specialist at Nielsen Norman Group Bio: nngroup.com/people/anna-kaley

You can also find information about the upcoming UX Vision and Strategy Series with Anna Kaley and Sarah Gibbons here.

12. ResearchOps (feat. Kara Pernice, Sr. VP at NN/g)

One might think user research gets easier when there are more people available to do it, but managing research initiatives at scale can be a difficult task in itself. In this episode, Kara Pernice, Senior VP at NN/g, shares her experience and insights about managing UX research operations.

Kara Pernice's Articles and Videos (NN/g bio)

NN/g courses and articles referenced in this episode:

- ResearchOps (UX certification course)

- ResearchOps (article)

- Research Repositories for Tracking UX Research and Growing Your ResearchOps (article)

- Design Systems 101 (article)

- DesignOps (UX certification course)

Research repository tools mentioned:

- Consider.ly

Other ResearchOps pioneers and communities:

- Kate Towsey's Work (articles on Medium)

- Leveling up your Ops and Research — a strategic look at scaling research and Ops (by Brigette Metzler)

- ResearchOps Community (and Slack channel)

...and if you were curious what research papers launched Kara into her UX career, here are two of them:

- Nielsen, J. (1990). Big paybacks from 'discount' usability engineering. IEEE Software 7, 3 (May), 107-108.

- Nielsen, J. (1992). Finding usability problems through heuristic evaluation. Proc. ACM CHI'92 (Monterey, CA, 3-7 May), 373-380.

11. Solo UX: How to Be a One-Person UX Team (feat. Garrett Goldfield, UX Specialist at NN/g)

Advocating for UX work is hard. It's even harder when you're the only UX professional on your team. That said, there is still hope for one-person UX teams, and Garrett Goldfield shares his recommendations on how to make the most out of limited time and resources, and how to lead the charge in shifting corporate culture toward a more human-centered future.

Read more about Garrett Goldfield (NN/g bio)

Resources & courses cited in this episode:

- Episode 1. What is UX, anyway? (feat. Dr. Jakob Nielsen, the usability guru) (previous NN/g UX Podcast episode)

- The One-Person UX Team (UX Certification course)

- The Human Mind and Usability (UX Certification course)

10. On Delight, Emotion, and UX - Flipping the Script with UX Specialists Therese Fessenden & Rachel Krause

To celebrate our first podcast milestone, we flipped the script. NN/g UX Specialist Rachel Krause guest-hosts this episode, and interviews host Therese Fessenden about the concept of "delight" in user experience: what it is, why the pursuit of delight can often be a short-sighted and misunderstood endeavor, and how a more holistic approach to interpreting and anticipating user needs can more reliably lead to an experience that delights beyond a single interaction.

Read more about the hosts:

- Therese Fessenden's Articles & Videos (NN/g bio)

- Rachel Krause's Articles & Videos (NN/g bio)

Free resources cited in this episode:

- A Theory of User Delight: Why Usability Is the Foundation for Delightful Experiences (free article)

- Design for Emotion (by Daniel Ruston, UX Lead at Google Design)

- Principles of Emotional Design (Intuit case study by Garron Engstrom)

- How Delightful! 4 Principles for Designing Experience-Centric Products (Autodesk MLP case study by Maria Giudice)

- Research: Perspective-Taking Doesn’t Help You Understand What Others Want (HBR article by Tal Eyal, Mary Steffel, Nicholas Epley)

- Harvard Psychiatrist Identifies 7 Skills to Help You Get Along With Anybody (Inc. article by Carmine Gallo about Helen Riess' work)

Other resources cited in this episode:

- Emerging Patterns in Interface Design (UX Certification course)

- Persuasive and Emotional Design (UX Certification course)

- DesignOps: Scaling UX Design and User Research (UX Certification course)

- Designing for Emotion by Aarron Walter (book)

9. You Are Not the User: How the False Consensus Effect Can Lead Good Design Astray (feat. Alita Joyce, UX Specialist at NN/g)

Does having more experience in the UX industry enable you to make better design decisions by intuition? Does user research ever become a waste of time if some research already exists in academic papers? The answer, it seems, is not that simple. In this episode, UX Specialists Alita Joyce and Therese Fessenden discuss why, after all these years doing independent user research, you should still test your interfaces and research with your own customers.

Read more: Alita Joyce's Articles & Videos (NN/g bio)

- Viral video (by @tired_actor) "The Square Hole" (TikTok video)

- The False-Consensus Effect (free article)

- The “False Consensus Effect”: An Egocentric Bias in Social Perception and Attribution Processes (PDF of full study by Ross, Greene, and House)

- 10 Usability Heuristics for User Interface Design (free article)

- How to Conduct a Heuristic Evaluation (free article)

- 10 Usability Heuristics Applied to Video Games (free article)

- Don Norman - Changing Role of the Designer Part 2: Community Based Design (4 min video)

- Adam Grant - The "I’m Not Biased" Bias (Tweet about NBC Sunday Spotlight feature)

- The Human Mind and Usability (UX Certification course)

- Persuasive and Emotional Design (UX Certification course)

- Democratizing Innovation by Eric Von Hippel (book)

- Thinking, Fast and Slow by Daniel Kahneman (book)

8. Thinking Beyond Interactions: Omnichannel Experiences and CX (feat. Kim Salazar, Sr. UX Specialist at NN/g)

What does it take to create a great customer experience? As it turns out: a lot more than just a series of great interactions. Kim Salazar, Sr. UX Specialist, shares her expertise on what omnichannel experiences are, why they matter for CX, and how having a mature CX means fundamentally changing how we view and handle UX work.

Resources cited in this episode

- Ep. 1 - What is UX, anyway? (feat. Dr. Jakob Nielsen, the usability guru) (our inaugural podcast episode)

- CX Transformation (full-day course)

- Journey Mapping to Understand Customer Needs (full-day course)

- Kim's Articles & Videos (NN/g bio)

Other related articles & videos

- What is Omnichannel UX? (2-min video)

- User Experience vs. Customer Experience: What’s The Difference? (free article + 4 min video)

- Good Customer Experience Demands Organizational Fluidity (free article)

7. Lessen Digital Misery with Complex Apps (feat. Page Laubheimer, Sr. UX Specialist at NN/g)

"Keep it simple," is one of many great UX mantras... but how exactly does someone "keep it simple" when working with complex applications? In this episode, Page Laubheimer, Senior UX Specialist with NN/g, shares his expertise in information architecture (IA) and complex app design, recommends a few ideas to "lessen digital misery" on business-to-business (B2B) and enterprise applications, and offers advice for when you have to redesign a legacy application that is part of a user's everyday life.

Resources cited in this episode:

- Designing Complex Apps for Specialized Domains (full-day course)

- Data Visualizations for Dashboards (4-min video)

- Dashboards: Making Charts and Graphs Easier to Understand (NN/g article)

- Why I Now Use “Four-Threshold” Flags On Dashboards (Nick Desbarats' article)

- Tesler's Law (Wikipedia article)

- Page's NN/g Articles and Videos (bio page)

6. Ethics in UX (feat. Maria Rosala, UX Specialist at NN/g)

Aren't all "user-centered" designs ethical by default if we're giving people what they want? Not exactly. Maria Rosala, UX Specialist at NN/g, shares her thoughts about how we can be better researchers and designers by asking critical questions about our research and design decisions, evaluating important tradeoffs, and ensuring we include the right people in our research and design process.

Resources Cited in this Episode:

- User Interviews (UX Certification course)

- Design Tradeoffs and UX Decision Frameworks (UX Certification course)

- Ethics in User Research (1-hr online seminar)

- You Are Not The User: The False-Consensus Effect (free article)

- TED Talk: How I'm fighting bias in algorithms | Joy Buolamwini (9 min YouTube video)

Also related: How Inclusive Design Expands Business Value (1-hr online seminar)

5. ROI: The Business Value of UX (feat. Kate Moran, Sr. UX Specialist at NN/g)

How do you evaluate the impact of UX work? As the world faces another bout of pandemic lockdowns, UX teams are finding themselves in a tough position of justifying their work to business decision-makers. Kate Moran, Senior UX Specialist at NN/g, shares insights from her recently published report on UX Metrics and ROI (return on investment) and offers advice on how to prove your worth. In this episode, we cover: what ROI is and why it matters, avoiding common pitfalls when calculating ROI, and how to stay growth-oriented without harming long-term business strategy.

- UX Metrics and ROI report (297-page report)

- Measuring UX and ROI (full-day course)

- Myths of Calculating ROI (free article)

- Don't Shame Your Users Into Converting (4-min video)

- Stop Shaming Your Users for Micro Conversions (free article)

- Kate's NN/g Articles and Videos (bio page)

4. Creativity During a Crisis (feat. Aurora Harley, Sr. UX Specialist at NN/g)

"Diamonds are made under pressure," but what does that mean for creativity and productivity? Aurora Harley, Senior UX Specialist, shares some advice on staying creative and productive during times of stress, thoughts on reframing constraints as opportunities, and tips for nurturing an environment that fosters innovative thought regardless of distance. Resources Cited in this Episode

- Effective Ideation Techniques for UX Design (full-day course)

- Changing Role of the Designer (Don Norman) (5 min video)

- Ideation Techniques for a One-Person UX Team (2 min video)

- Remote Ideation - Synchronous or Asynchronous Techniques (4 min video)

- Troubleshooting Group Ideation: 10 Fixes for More and Better UX Ideas (free article)

3. UX Careers: Growing in (and out of) Your Current Role (feat. Rachel Krause, UX Specialist at NN/g)

What does a typical UX career path look like? Are there even typical career paths in UX? How can you become a UX leader? What should new and seasoned UX professionals keep in mind as they move through their careers? Rachel Krause, a UX Specialist at Nielsen Norman Group, shares her insights from her research on the UX profession, and perspectives from her own journey into the field of user experience.

- What a UX Career Looks Like Today (free article)

- User Experience Careers (90-page report)

- Management vs. Specialization as UX Career Growth (3 min video)

- The State of UX Job Descriptions (3 min video)

- Being a UX Leader: Essential Skills for Any UX Practitioner (full-day course)

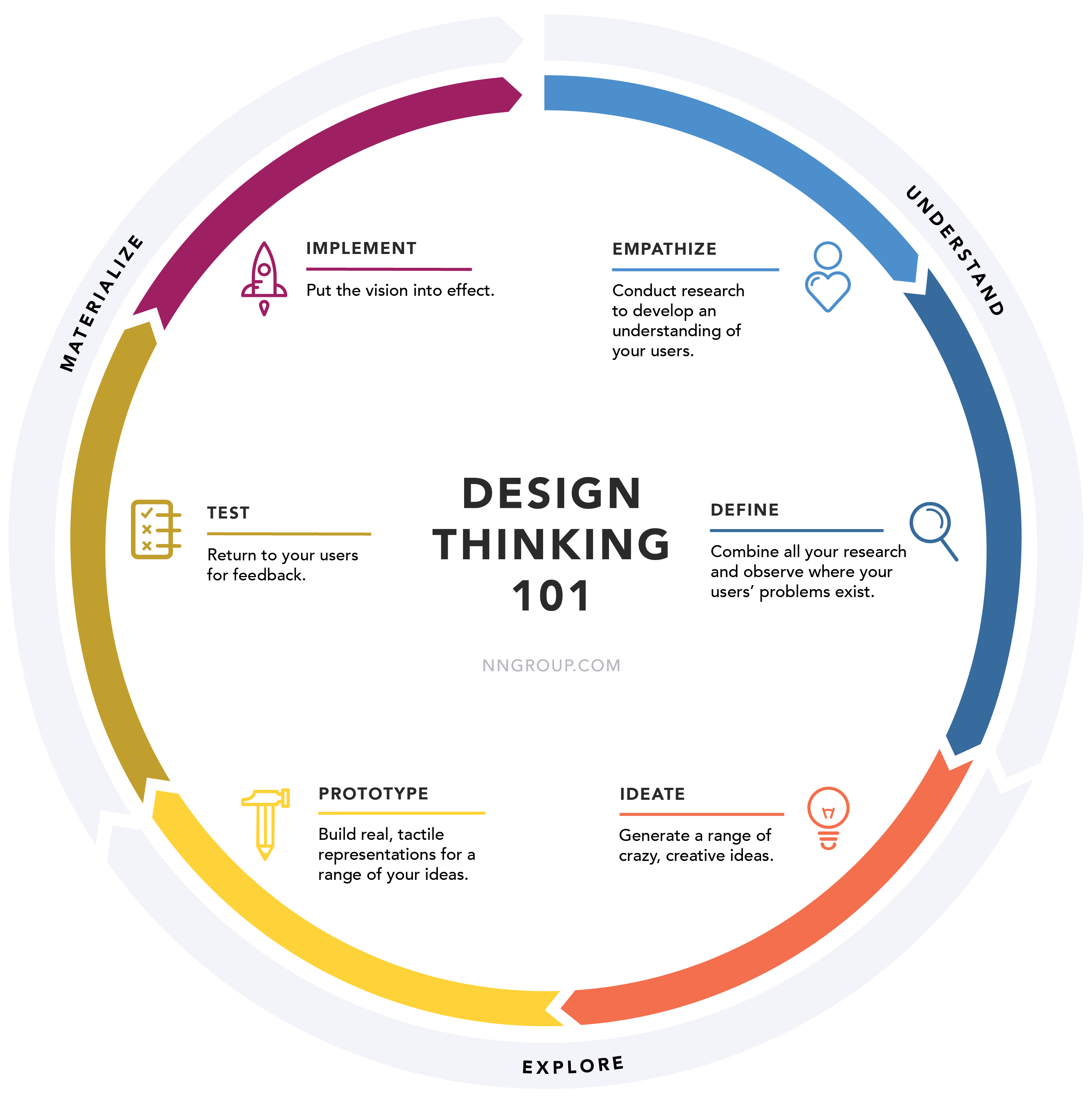

2. Empathy, Adaptability, and Design (feat. Sarah Gibbons, Chief Designer at NN/g)

Sarah Gibbons & Therese Fessenden discuss empathy and adaptability as critical design skills (perhaps now more than ever) and share thoughts on how design plays a bigger role than meets the eye.

- NN/g Instagram: @nngux

- Service Blueprinting (full-day course)

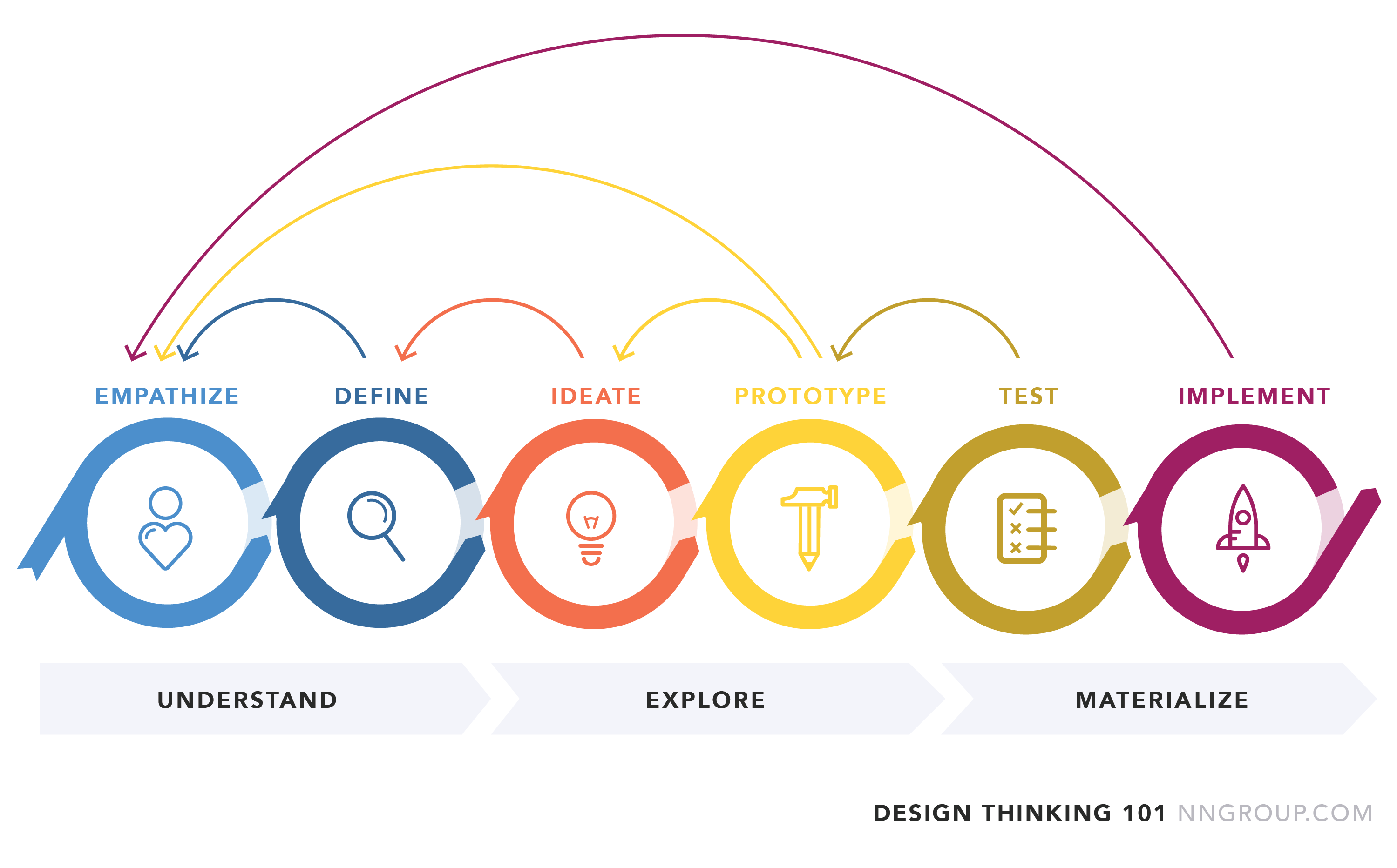

- Design Thinking is Like Cooking (2 min video)

- UX Roadmaps (full-day course)

- UX Roadmaps: Definition and Components (free article)

- Sarah's NN/g Articles and Videos (bio page)

Also related: Brené on Comparative Suffering, the 50/50 Myth, and Settling the Ball (Unlocking Us with Brené Brown podcast episode)

1. What is UX, anyway? (feat. Dr. Jakob Nielsen, the usability guru)

Nielsen Norman Group is celebrating its 22nd anniversary by launching our very own NN/g UX Podcast. Join host Therese Fessenden as she interviews NN/g co-founder Dr. Jakob Nielsen on the most important questions about our industry: What really is "user experience," anyway? And what is the state of UX now, compared to when NN/g started 22 years ago?

- The Definition of User Experience (article)

- WAP Mobile Phones Field Study Findings (article)

- Describing UX to Family and Friends (3-min video)

- Usability 101: Introduction to Usability (article)

- User Experience vs. Customer Experience (article)

- Jakob Nielsen's Profile, Articles, and Videos

- Artificial Intelligence

- Product Management

- UX Research

Unlocking the Power of Research Repositories for Product Managers

Zita Gombár

Bence Mózer

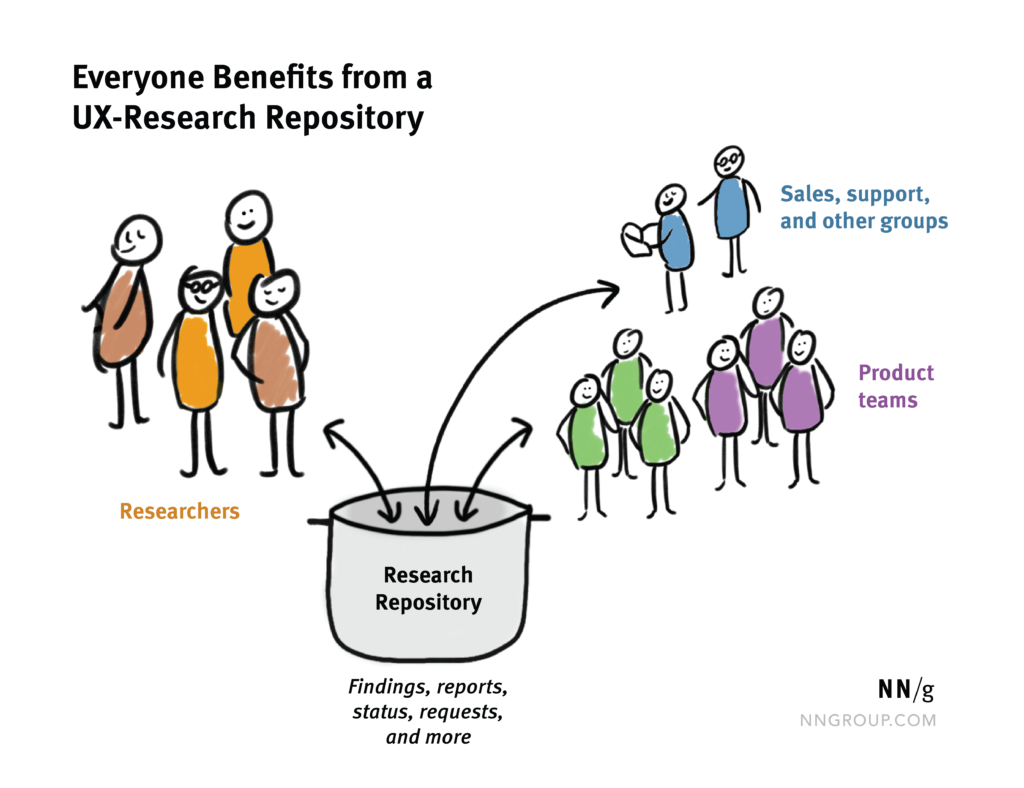

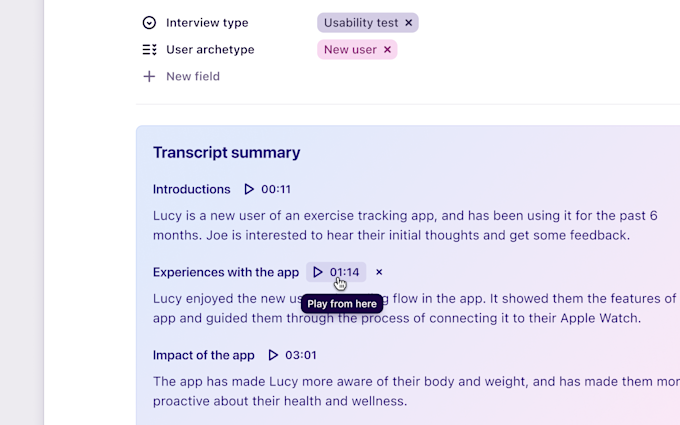

A centralized system is required to develop a good understanding of your users across teams and across your firm. This is where research repositories come in handy. They bring together various points of user input and feedback. So, whether you're a UX researcher, designer, or product manager, this post is for you.

Have you ever wished for faster answers to your research questions? If your team has already conducted a few studies, you likely have a solid understanding of your users and may already have the answers you need.

However, various other departments within your organization are likely also receiving feedback from customers. How do you connect your data with insights from other teams? And how can you ensure that people have access to research data when they need it? Discover the solution in a research repository.

Based on our extensive experience working with diverse clients, including large enterprises and NGOs, UX Studio has developed a comprehensive ebook on research repositories for UX research. This resource covers all the essential aspects, starting with the definition and key principles of research repositories, along with insights on building and maintaining them for long-term success. Below is a sneak peek from the book on why a research repository is crucial for your organization.

What is a research repository?

A research repository (or research library) is any system that stores your research data, notes, and documentation (such as research plans, interview guides, scripts, personas, and competitor analyses) and lets the entire team quickly search, retrieve, and use them.

Let’s take a closer look at the elements of this definition:

Storage system.

A system of this type is any tool you use to store and organize your research data. This can take various forms and structures. It could be an all-in-one application, a file-sharing system, a database, or a wiki.

Research data.

Any information that helps you understand your users can be considered research data. It makes no difference what format is used. Text, images, videos, or recordings can all be used to collect research data. Notes, transcripts, or snippets of customer feedback can also be used.

Ease of use.

If the repository is simple to use, anyone on your team can access, search, explore, and combine research data. That includes developers, designers, customer success representatives, and product managers. Any of them can use the research repository to learn more about users. The researcher is no longer the gatekeeper when it comes to understanding users.

How can a research repository help your company?

As a company does more and more user research, it accumulates more studies, more reports, and a great deal of information that you cannot really access unless you know who worked on what.

If you work in a company without a research repository, you probably rely a lot on file sharing software like SharePoint or Google Drive. This means you spend a lot of time navigating through folders and files to find what you’re looking for (if you can find it at all), as well as sharing file links to distribute your work and findings.

How often do you wish for a simpler way of organizing all this data?

Let’s explore how research repositories can elevate your research work!

The go-to place to learn about users

Since it’s a massive collection of research, the research repository is the team’s go-to place for learning about users and their pain points. Instead of searching three different locations for reports, all research information is centralized in one single place.

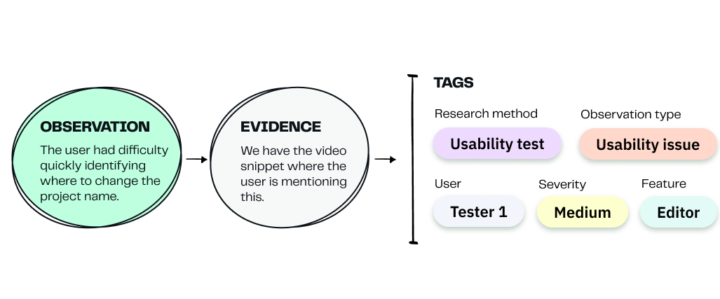

Speeding up research.

Whenever you have a new research question, you can start by reviewing existing data. Since it’s all organized according to tags, you don’t have to go through multiple reports to find it. This way, if there’s relevant information, you get the answers faster.

Get more value from original research.

If research observations are no longer tied to report findings, they can be reused to answer other questions, provided they are relevant. This builds on the previous point of speeding up research and allows you to get more value from original studies.

No more repeated research.

As reports get buried and lost in file-sharing systems, so does the information they contain. We briefly mentioned this before. But you’re probably familiar with the situation. Someone performed a study on a feature at some point. Let’s say that another person joins the team and wants to learn about that feature. Without any knowledge of existing research, researchers start a new study for the same question. If, on the other hand, all the research data is centralized, you can see what questions have already been asked.

Enable evidence-based decisions.

This is probably one of the biggest wins of a research repository. It allows teams to see the big issues that need to be solved and to see for themselves how these issues come up. On top of that, they can use that data to prioritize projects and resources, rather than relying on gut feelings or personal opinions.

Anyone can learn about users – to increase UX research maturity.

By default, the researcher is the person who knows everything about users and their problems. A research repository opens up this knowledge to anyone who is interested. With a bit of time and patience, everybody can get to know users.

You can prioritize your roadmap.

Putting all the data together will give you an overall view of the user experience. This, in turn, will help you see what areas you need to prioritize on your roadmap.

Yes, it takes time and resources to set up and maintain a research repository. But the benefits are clearly worth that investment, even more so since information and access to it are essential for high-performing teams. Besides research, it is about building trust and transparency across your team and giving them what they need to make the right decisions.

When to use a research repository?

Whether you are thinking about setting up a research system for an ongoing project or you would like to organize your existing insights, there are a few things to consider.

Long term, ongoing research.

This is common for in-house research. It may also occur if the user research is outsourced to a third party. Data will begin to pile up at some point in long-term, ongoing research. It will become more difficult to locate information as it accumulates. We’ve also discussed the issues that may arise if you only rely on reports. In this case, you will undoubtedly require a solution to organize and structure all of the research findings.

This is the first major scenario in which you should strongly consider establishing a research repository. Even if you’re a one-person team, and you’re the only one doing the research, it’s a good idea to start promoting research repositories. Explain your situation to your manager or team.

Multiple researchers are working on the same project.

It doesn’t really matter whether this is a short-term or long-term project. When multiple researchers are working on the same project, they require a solution that will assist them in compiling all of the data. You can collect all of the observations using a research repository. Even if the two researchers discuss their findings, using a research repository increases the likelihood that important data will not be overlooked.

Good products are developed from great insights. However, teams require access to these insights in order to integrate them into the product. This is where research repositories can be helpful.

Setting up a research repository may take some time, but it is a great investment for scaling research operations in the long run and increasing UX research maturity. Despite the initial effort required, a research repository can take your entire research practice to the next level. For this, you can use the tools you have at hand, such as Notion or Google Sheets, or you can try out dedicated tools such as Dovetail or Condens.

At UX Studio, we have assisted numerous companies in setting up their research processes, enabling them to conduct in-house research and enhance their product development with a user-centered approach.

For comprehensive guidance on research repositories, we invite you to download our complete book here.

Do you want to build your in-house research team or create your own repository?

As a top UI/UX design agency, UX Studio has successfully handled over 250 collaborations with clients worldwide.

Should you want to improve the design and performance of your digital product, message us to book a consultation. Our experts would be happy to assist with UX strategy, product and user research, or UX/UI design.

- Open access

- Published: 29 August 2023

re3data – Indexing the Global Research Data Repository Landscape Since 2012

- Heinz Pampel ORCID: orcid.org/0000-0003-3334-2771 1 , 2 ,

- Nina Leonie Weisweiler ORCID: orcid.org/0000-0001-6967-9443 2 ,

- Dorothea Strecker ORCID: orcid.org/0000-0002-9754-3807 1 ,

- Michael Witt 3 ,

- Paul Vierkant 4 ,

- Kirsten Elger 5 ,

- Roland Bertelmann 2 ,

- Matthew Buys 4 ,

- Lea Maria Ferguson 2 ,

- Maxi Kindling 6 ,

- Rachael Kotarski ORCID: orcid.org/0000-0001-6843-7960 7 &

- Vivien Petras 1

Scientific Data volume 10, Article number: 571 (2023)

For more than ten years, re3data, a global registry of research data repositories (RDRs), has been helping scientists, funding agencies, libraries, and data centers with finding, identifying, and referencing RDRs. As the world’s largest directory of RDRs, re3data currently describes over 3,000 RDRs on the basis of a comprehensive metadata schema. The service allows searching for RDRs of any type and from all disciplines, and users can filter results based on a wide range of characteristics. The re3data RDR descriptions are available as Open Data accessible through an API and are utilized by numerous Open Science services. re3data is engaged in various initiatives and projects concerning data management and is mentioned in the policies of many scientific institutions, funding organizations, and publishers. This article reflects on the ten-year experience of running re3data and discusses ten key issues related to the management of an Open Science service that caters to RDRs worldwide.

Introduction

In the 2010s, making research data publicly accessible gained importance: Terms such as e-science 1 and cyberscience 2 were shaping discourses about scientific work in the digital age. Various discussions within the scientific community 3 , 4 , 5 , 6 , 7 , 8 resulted in an increased awareness of the value of permanent access to research data. Policy recommendations of the Organization for Economic Co-operation and Development (OECD) 9 or the European Commission 10 reflected this shift.

The need for professional data management was increasingly emphasized with the publication of the now widely recognized FAIR Data Principles 11 . Researchers, academic institutions, and funders started to address this issue in policies 12 , initiatives and networks 13 , 14 , 15 , and infrastructures 16 , 17 , 18 , 19 . For example, the National Science Foundation (NSF) in the United States published a Data Sharing Policy in 2011, in which the funding agency required beneficiaries to provide information about data handling in a Data Management Plan 20 . In Germany, the German Research Foundation (DFG) published a similar statement regarding access to research data in the 2010s 21 , 22 .

The handling of research data was also discussed in library and computing center communities: In 2009, the German Initiative for Networked Information (DINI), a network of information infrastructure providers, published a position paper on the need for research data management (RDM) at higher education institutions 23 . Through the discussions within DINI, the need for a registry of RDRs became evident. At the time, the Directory of Open Access Repositories (OpenDOAR) 24 had already established itself as a directory of subject and institutional Open Access repositories. However, there was no comparable directory for RDRs, and it remained unclear how many repositories dedicated to research data existed.

In 2011, a consortium of research institutions in Germany submitted a proposal to the German Research Foundation (DFG), asking for funding to develop ‘re3data – Registry of Research Data Repositories’ 25 . Members of the consortium were the Karlsruhe Institute of Technology (KIT), the Humboldt-Universität zu Berlin, and the Helmholtz Open Science Office at the GFZ German Research Centre for Geosciences. The DFG approved the proposal in the same year. The project aimed to develop a service that would help researchers identify suitable RDRs to store their research data. re3data went online in 2012, and already listed 400 RDRs one year later 26 .

While working on the registry, the project team in Germany became aware of a similar initiative in the USA. With support from the Institute of Museum and Library Services, Purdue and Pennsylvania State University libraries developed Databib, a ‘curated, global, online catalog of research data repositories’ 27 . Databib went online in the same year 28 . At the time, RDRs were indexed and curated by library staff at re3data partner institutions, whereas Databib had established an international editorial board to curate RDR descriptions 27 . Databib and re3data signed a Memorandum of Understanding in 2012, and, following excellent cooperation, the two services merged in 2014 29 . The merger brought together successful ideas from each service: The metadata schemas were combined, resulting in version 2.2 of the re3data metadata schema 30 , and the sets of RDR descriptions were merged. The international editorial board of Databib was expanded to include re3data editors. Development of the IT infrastructure of re3data continued, combining the expertise both services had built. For operating the service, a management duo was installed, comprising a member each from institutions representing re3data and Databib.

Both services have always worked closely with DataCite, an international not-for-profit organization that aims to ensure that research outputs and resources are openly available and connected so that their reuse can advance knowledge across and between disciplines, now and in the future 31 . In this process, the main objective was to cover the interests of the global community of operators more comprehensively. In 2015, the DataCite Executive Board and the General Assembly decided to enter into an agreement with re3data, making re3data a DataCite partner service 29 . In 2017, re3data won the Oberly Award for Bibliography in the Agricultural or Natural Sciences from the American Library Association 32 .

Today, re3data is the largest directory of RDRs worldwide, indexing over 3,000 RDRs as of March 2023. re3data is widely used by academic institutions, funding organizations, publishers, journals, and various other stakeholders, such as the European Open Science Cloud (EOSC) and the National Research Data Infrastructure in Germany (NFDI). re3data metadata is also used to monitor and study the landscape of RDRs, and it is reused by numerous tools and services. Third-party-funded projects support the continuous development of the service. Currently, the DFG is funding the development of the service within the project re3data COREF 33 , 34 . In addition, the project partners DataCite and KIT bring the re3data perspective into EOSC projects such as FAIRsFAIR (completed) 35 and FAIR-IMPACT 29 .

This article outlines the decade-long experience of managing a widely used registry that supports a diverse and global community of stakeholders. The article is clustered around ten key issues that have emerged over time. For each of the ten issues, we first present a brief definition from the perspective of re3data. We then describe our approach to addressing the issue, and finally, we offer a reflection on our work.

This section outlines ten key issues that have emerged over the last ten years of operating re3data.

Open Science

For re3data, Open Science means providing unrestricted access to the re3data metadata and schema, transparency of the indexing process, as well as open communication with the community of global RDRs.

At all times, re3data has been committed to Open Science by striving to be transparent and by sharing metadata. The openness of re3data pertains not only to the handling of its metadata and the associated infrastructure, but also to collaborative engagements with the community of research data stewards and other stakeholders in the field of research data management.

An example of this is the development of the re3data metadata schema: The initial version of the schema integrated a request for comments that allowed stakeholders to offer suggestions and improvements 26 . This participatory approach, accompanied by a public relations campaign, has yielded positive outcomes. Numerous experts engaged in the request for comments and contributed their perspective and expertise. Based on the positive feedback, we subsequently integrated a participatory phase in further updates of the metadata schema 30 , 36 .

In addition to this general commitment to openness, re3data has made its metadata available under the Creative Commons deed CC0. Due to adopting this highly permissive license, re3data metadata is strongly utilized by other parties, thereby enabling the development of new and innovative services and tools. Moreover, adaptable Jupyter Notebooks 37 have been published to facilitate the use of the re3data metadata. Additionally, workshops 38 have been arranged to support individuals in working with the notebooks and re3data data in general.

As a registry of RDRs, re3data also promotes Open Science by helping researchers find suitable repositories for publishing their data. For researchers who are looking for a repository that supports Open Science practices, re3data offers concise information on repository openness via its icon system. A recent analysis showed that most repositories indexed in re3data are considered ‘open’ 39 .

Lessons learned

The extensive reuse of re3data metadata increases its overall value, and participatory phases allow for incorporating different perspectives and experiences.

Quality assurance

For re3data, quality assurance encompasses all processes to ensure a service that meets the needs of a global community, as well as verifiably high-quality information.

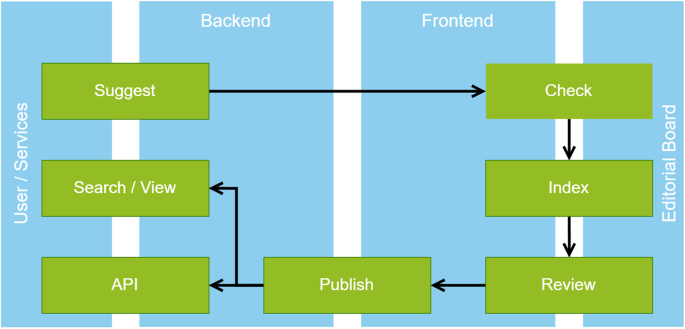

High-quality RDR descriptions are at the core of re3data. Therefore, continuous efforts ensure that re3data metadata describes RDRs appropriately and correctly. Figure 1 shows the editorial process in re3data. Anyone, for example an RDR operator, can submit a repository to be indexed in re3data by providing the repository name, URL, and some other core properties via a web form 40 . The re3data editorial board analyzes whether the suggested RDR conforms with the re3data registration policy 40 . The policy requires that the RDR is operated by a legal entity, such as a library or university, and that the terms of use are clearly communicated. Additionally, the RDR must have a focus on storing and providing access to research data. If an RDR meets these requirements, it is indexed based on the re3data metadata schema. A member of the editorial board creates an initial RDR description, which is then reviewed by another editor. This approach has proven effective in resolving any inconsistencies in interpreting RDR characteristics. An indexing manual explains how the schema is to be applied and helps to ensure consistency between RDR descriptions. Once this review is complete, the RDR description is made publicly visible.

Figure 1: Schematic overview of the editorial process in re3data.
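The editorial process described above can be read as a small workflow: policy check, initial description, review by a second editor, publication. The following is a minimal sketch of that reading, not re3data's actual implementation; the class, field, and function names, and the editor identifiers, are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    SUBMITTED = "submitted"
    REJECTED = "rejected"
    IN_REVIEW = "in review"
    PUBLISHED = "published"


@dataclass
class Submission:
    # Hypothetical fields mirroring the registration policy described in the text.
    name: str
    url: str
    operated_by_legal_entity: bool
    terms_of_use_communicated: bool
    focus_on_research_data: bool
    status: Status = Status.SUBMITTED
    log: List[str] = field(default_factory=list)


def meets_registration_policy(sub: Submission) -> bool:
    """All three policy requirements named in the text must hold."""
    return (sub.operated_by_legal_entity
            and sub.terms_of_use_communicated
            and sub.focus_on_research_data)


def run_editorial_process(sub: Submission, indexer: str, reviewer: str) -> Submission:
    """Walk one submission through policy check, indexing, review, and publication."""
    if not meets_registration_policy(sub):
        sub.status = Status.REJECTED
        sub.log.append("rejected: registration policy not met")
        return sub
    if indexer == reviewer:
        # The text states that the description is reviewed by another editor.
        raise ValueError("the review must be done by a second editor")
    sub.status = Status.IN_REVIEW
    sub.log.append(f"description drafted by {indexer}")
    sub.log.append(f"description reviewed by {reviewer}")
    sub.status = Status.PUBLISHED
    return sub


accepted = run_editorial_process(
    Submission("Example Repository", "https://repository.example.org", True, True, True),
    indexer="editor_a",
    reviewer="editor_b",
)
rejected = run_editorial_process(
    Submission("Unclear Terms Repository", "https://other.example.org", True, False, True),
    indexer="editor_a",
    reviewer="editor_b",
)
print(accepted.status.value, rejected.status.value)
```

Requiring two distinct editors in code reflects the point made in the text that a second review resolves inconsistencies in interpreting RDR characteristics.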

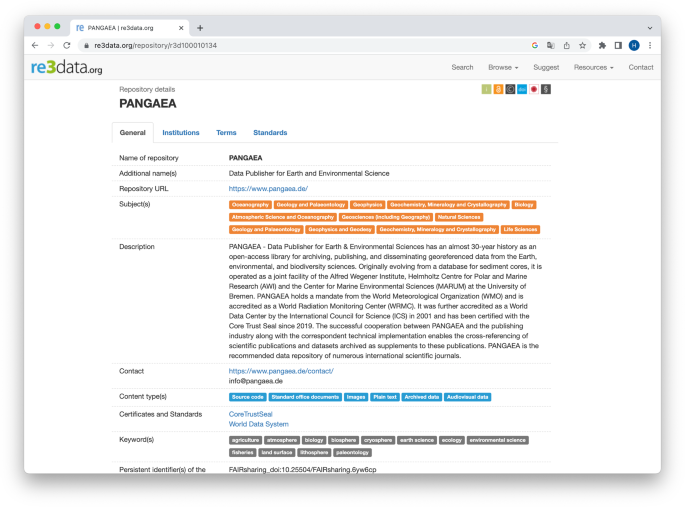

re3data applies a number of measures to ensure the long-term quality and consistency of RDR descriptions, including automated quality checks. For example, it periodically checks whether the URLs of an RDR still resolve; if they do not, the entry of that RDR is reexamined. Figure 2 shows a screenshot of a re3data RDR description.

Figure 2: Screenshot of the re3data description of the research data repository PANGAEA 97 .
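A periodic URL check of the kind mentioned above could look roughly like the sketch below. It is an illustration under stated assumptions rather than the check re3data runs: the function names, the identifiers in the example, and the rule that any HTTP status below 400 counts as "resolves" are all hypothetical choices.

```python
import urllib.request
from typing import Callable, Dict, List


def url_resolves(url: str, timeout: float = 10.0) -> bool:
    """Return True if a HEAD request to the URL succeeds with a status below 400."""
    try:
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status < 400
    except (OSError, ValueError):
        # OSError covers URLError, HTTPError, and timeouts; ValueError covers malformed URLs.
        return False


def entries_to_reexamine(
    entries: Dict[str, str],
    check: Callable[[str], bool] = url_resolves,
) -> List[str]:
    """Return the identifiers of entries whose URL no longer resolves."""
    return sorted(rid for rid, url in entries.items() if not check(url))


# Usage with a stand-in checker, so the example runs without network access.
known_dead = {"https://gone.example.org"}
flagged = entries_to_reexamine(
    {
        "example-0001": "https://alive.example.org",
        "example-0002": "https://gone.example.org",
    },
    check=lambda url: url not in known_dead,
)
print(flagged)
```

Passing the checker in as a parameter keeps the flagging logic testable offline; a scheduled job would call `entries_to_reexamine` with the default `url_resolves` and hand the flagged entries to an editor.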

The re3data metadata schema on which RDR descriptions are based is reviewed and updated regularly to ensure that users’ changing information needs are met. Operators of an RDR, as well as any other person, can suggest changes to RDR descriptions by submitting a change request. A link for filing a change request can be found at the bottom of each RDR description in re3data. Once a change request has been submitted, a member of the editorial board will review the proposed changes and verify them against information on the RDR website. If the change request is deemed valid, the RDR description will be adapted accordingly.

As part of the project re3data COREF, quality assurance practices at RDRs were systematically investigated. The aim was to understand how RDRs ensure high-quality data, and to better reflect these measures in the metadata schema. The results of the study 41 , which were based on a survey among RDR operators, show that approaches to quality assurance are diverse and depend on the mission and scope of the RDR. However, RDRs are key actors in enabling quality assurance. Furthermore, there is a path dependence of data review on the review process of textual publications. In addition to the study, a workshop 42 , 43 was held with CoreTrustSeal that focused on quality assurance measures RDRs have implemented. CoreTrustSeal is an RDR certification organization launched in 2017 that defines requirements for base-level certification for RDRs 44 .

Lessons learned

Combining manual and automated verification was shown to be most effective in ensuring that RDR descriptions remain consistent while meeting users’ diverse information needs.

Community engagement

For re3data, community engagement encompasses all activities that ensure interaction with the global RDR community in a participatory process.

Collaboration has always been a central principle for re3data. This is reflected in the fact that research communities, RDR providers, and other relevant stakeholders contribute significantly to the completeness and accuracy of the re3data metadata as well as its further technical and conceptual development. Examples include the participatory phase during the revision of the metadata schema, the involvement of important stakeholders in the development of the re3data Conceptual Model for User Stories 45 , 46 , or the activities that investigate data quality assurance at RDRs.

re3data engages in collaborations in various forms with diverse stakeholders, for example:

In collaboration with the Canadian Data Repositories Inventory Project and later with the Digital Research Alliance of Canada, both initiatives aiming at describing the Canadian landscape of RDRs comprehensively, descriptions of Canadian RDRs in re3data were improved, and additional RDRs were indexed 47 , 48 .

A collaboration was initiated in Germany with the Helmholtz Metadata Collaboration (HMC). In this initiative, the descriptions of research data infrastructures within the Helmholtz Association are being reviewed and enhanced 49 .

re3data also engages in international networks, particularly within the Research Data Alliance (RDA). Activities focus on several RDA working and interest groups 50 , 51 , 52 that touch on topics relevant to RDR registries.

Combining strategies of engagement connects the service to its stakeholders and creates opportunities for collaboration and innovation.

Interoperability

For re3data, interoperability means facilitating interactions and metadata exchange with the global RDR community by relying on established standards.

Interoperability is a necessary condition to integrate a service into a global network of diverse stakeholders. International standards must be implemented to achieve this, for example with the re3data API 53 . The API can be used to query various parameters of an RDR as expressed in the metadata schema. The API enables the machine readability and integration of re3data metadata into other services. The re3data API is based on the RESTful API concept and is well-documented. Applying the HATEOAS principles 54 enables the decoupling of clients and servers, and thus allows for independent development of server functionality. This results in a robust interface that promotes interoperability and reduces barriers to future use. Also, re3data supports OpenSearch, a standard that enables interaction with search results in a format suitable for syndication and aggregation.
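The HATEOAS idea described above can be illustrated with a minimal sketch: a client reads a list response and follows the links the server advertises, rather than constructing resource URLs itself. The XML shape, the element names, and the `registry.example.org` URLs below are assumptions made for illustration and are not taken from the re3data API documentation.

```python
import xml.etree.ElementTree as ET

# Hypothetical list response; the element names and URLs are assumed
# for illustration and do not reproduce the actual re3data API output.
SAMPLE_LIST_XML = """<list>
  <repository>
    <id>example-0001</id>
    <name>Example Repository A</name>
    <link href="https://registry.example.org/api/repository/example-0001" rel="self"/>
  </repository>
  <repository>
    <id>example-0002</id>
    <name>Example Repository B</name>
    <link href="https://registry.example.org/api/repository/example-0002" rel="self"/>
  </repository>
</list>"""


def extract_self_links(list_xml: str) -> dict:
    """Map each repository name to the URL advertised by its rel="self" link."""
    root = ET.fromstring(list_xml)
    links = {}
    for repo in root.findall("repository"):
        name = repo.findtext("name", default="")
        for link in repo.findall("link"):
            # The client trusts the advertised link instead of building a URL.
            if link.get("rel") == "self":
                links[name] = link.get("href")
    return links


self_links = extract_self_links(SAMPLE_LIST_XML)
```

Because the client only depends on the `rel` attribute, the server could change its URL layout without breaking this code, which is the decoupling the HATEOAS principles aim for.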

Interoperability also guides the development of the metadata schema: Established vocabularies and standards are used to describe RDRs wherever possible. Examples of standards used in the metadata schema include:

ISO 639-3 for language information, for example the language of an RDR name

ISO 8601 for date information on an RDR

DFG Classification of Subject Areas for subject information on an RDR

In addition, re3data pursues interoperability by jointly working on a mapping between the DFG Classification of Subject Areas used by re3data and the OECD Fields of Science classification used by DataCite 55 .
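To make the benefit of such standards concrete, the following sketch checks two fields of a hypothetical RDR record against ISO 639-3 and ISO 8601. The field names and the record are invented for illustration, the language check only tests the three-letter shape of a code (not membership in the ISO 639-3 code list), and the date check covers only the full calendar-date form `YYYY-MM-DD`.

```python
import re
from datetime import date

# ISO 639-3 identifiers are three lowercase letters; this checks the
# shape only, not whether the code is actually assigned.
ISO_639_3_SHAPE = re.compile(r"[a-z]{3}")


def is_iso_639_3_shaped(code: str) -> bool:
    """Return True if the code has the three-letter ISO 639-3 shape."""
    return ISO_639_3_SHAPE.fullmatch(code) is not None


def parse_iso_8601_date(value: str) -> date:
    """Parse a YYYY-MM-DD calendar date; raises ValueError if malformed."""
    return date.fromisoformat(value)


# Hypothetical record; field names are assumptions, not the re3data schema.
record = {
    "name": "Example Repository",
    "name_language": "eng",
    "start_date": "2012-05-28",
}

language_ok = is_iso_639_3_shaped(record["name_language"])
start_date = parse_iso_8601_date(record["start_date"])
```

Any consumer that knows the two standards can validate and interpret these fields without registry-specific parsing rules.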

re3data records whether an RDR has obtained formal certification, for example by World Data System (WDS) or CoreTrustSeal. The certification status, along with other properties, is visualized by the re3data icon system that makes the core properties of RDRs easily accessible visually. The icon system provides information about the openness of the RDR and its data collection, the use of PID systems, as well as the certification status. The icon system can also be integrated into RDR websites via badges 56 . Figure 3 shows an example of a re3data badge.

Figure 3: The re3data badge integrated in the research data repository Health Atlas.

re3data captures information that might be relevant to metadata aggregator services, including API URLs, as well as the metadata standard(s) used. In offering this information in a standardized form, re3data fosters the development of services that span multiple collections, such as data portals. For example, as part of the FAIRsFAIR project work, re3data metadata has been integrated into DataCite Commons 57 to embed repository information in the DataCite PID Graph. This step not only improves the discoverability of repositories that support research data management in accordance with the FAIR principles but also serves as a basis for the development of new services such as the FAIR assessment tool F-UJI 35 , 58 .

The adherence to established standards facilitates the reuse of re3data metadata and increases the integration of the service into the broader Open Science landscape.

Development

For re3data, continuous development ensures that the service is able to respond dynamically to evolving requirements of the global RDR community.

Maintaining a registry for an international community poses a significant challenge, particularly the continued provision of reliable technical operations and a governance structure capable of responding adequately to user demands. re3data has found suitable solutions to these challenges, which have enabled the service to be in operation for more than ten years. The long-standing collaboration with DataCite has contributed to this success. Participation in third-party-funded projects has facilitated the collaborative development of core service elements together with partners. Participation in committees such as those surrounding EOSC and RDA, together with active engagement with the RDR community, has motivated discussions about changing requirements and led to the continuous evolution of the registry.

Responsibilities for specific tasks are divided among several entities, such as a working group responsible for guiding future directions of the service and the editorial board responsible for maintaining re3data metadata. In addition, there are teams responsible for technology as well as for outreach and communication. The working group includes experts from DataCite and other stakeholders, who discuss current requirements, prioritize developments, and ensure coordination with RDR operators worldwide. In addition to these entities, coordination with third-party-funded projects involving re3data is ongoing.

Continuous and agile development addresses the users’ constantly evolving needs. Operating a registry that meets those needs in the long term requires flexibility.

Sustainability

For re3data, sustainability means ensuring a long-term and reliable service to the global RDR community.

Maintaining the sustainable operation of a service like re3data beyond an initial project phase is a challenge. For re3data, the consortium model has proven effective, as the service is supported by a wide range of scientific institutions. This model, which is embedded in the governance of re3data, allows the operation of the service to be sustained through self-funding while also enabling important developments to be undertaken within the scope of third-party projects. Thanks to funding received from the DFG (re3data COREF project) and the European Union’s Horizon 2020 program (FAIRsFAIR project), significant investments have been made in the IT infrastructure and overall advancement of the service in recent years.

A strategy based on diverse revenue streams contributes to securing funding for the service long-term.

Policies

For re3data, being mentioned in policies comes with a responsibility for operating a reliable service and maintaining high-quality metadata for the global RDR community.

During the development of the re3data service, the partners engaged in dialogues with various stakeholders that were interested in using the registry to refer to RDRs in their policies. They might do this, for example, to recommend or mandate the use of RDRs in general for publishing research data, or the use of a specific RDR. Today, re3data is mentioned in the policies of several funding agencies, scientific institutions, and journals. These actors use re3data to identify RDRs that are operated by specific academic institutions, that were developed using funding from a funding organization, or that store data that are the basis of a journal article. Examples of policies and policy guidance documents that refer to re3data:

Academic institutions:

Brandon University, Canada 59

Technische Universität Berlin, Germany 60

University of Edinburgh, United Kingdom 61

University of Eastern Finland 62

Western Norway University of Applied Sciences 63

Funders:

Bill & Melinda Gates Foundation, USA 64

European Commission 65 and ERC, EU 66

National Science Foundation (NSF), USA 67

NIH, USA 68

Journals and Publishers:

Taylor & Francis, United Kingdom 69

Springer Nature, United Kingdom 70

Sage, United Kingdom 71

Wiley, Germany 72

Regular searches are conducted to track mentions of re3data in policies. On the re3data website, a list of policies referring to re3data is maintained and regularly updated 73 .

As a result of being mentioned in policies so frequently, re3data receives inquiries from researchers for information on listed RDRs almost daily. These inquiries are usually forwarded to the RDR directly.

Policies represent firm support for research data management by academic institutions, funders, and journals and publishers. By facilitating the search for and referencing of RDRs in policies, re3data further promotes Open Science practices.

Data reuse

For re3data, data reuse is one of the main objectives, ensuring that third parties can rely on re3data metadata to build services that support the global RDR community.

Because re3data metadata are published as open data, third parties are free to integrate them into their systems. Several service operators have already taken advantage of this opportunity. In general, there are three types of services that work with re3data metadata:

Services for finding and describing RDRs: These services usually work with a subset of re3data metadata. Sometimes, the data is manually curated, and then integrated into external services based on specific parameters. Examples include:

DARIAH-EU has developed its Data Deposit Recommendation Service based on a subset of re3data metadata, which helps humanities researchers find suitable RDRs 74 , 75 .

The American Geophysical Union (AGU) has utilized re3data metadata to create a dedicated gateway for RDRs in the geosciences with its Repository Finder tool 76 , 77 , which was later incorporated into the DataCite Commons web search interface.

Services for monitoring the landscape of RDRs: These services analyze re3data metadata using specific parameters and visualize the results. Examples include:

OpenAIRE has integrated re3data metadata into its Open Science Observatory to provide information on RDRs that are part of OpenAIRE 78 .

The European Commission operates the Open Science Monitor, a dashboard that analyzes re3data metadata. The following metrics are displayed: number of RDRs by subject, number of RDRs by access type, and number of RDRs by country 79 , 80 .

Services for assessing RDRs: These services use re3data metadata and other data sources to evaluate RDRs more comprehensively. Examples include:

The F-UJI Automated FAIR Data Assessment Tool is a web-based service that assesses the degree to which individual datasets conform to the FAIR Data principles. The tool utilizes re3data metadata to evaluate characteristics of the RDRs that store the datasets 81 .

Charité Metrics Dashboard, a dashboard on responsible research practices from the Berlin Institute of Health at Charité in Berlin, Germany, builds on F-UJI data and combines this information with additional re3data metadata 82 .
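The monitoring metrics named above (number of RDRs by subject, by access type, and by country) amount to grouped counts over RDR descriptions. A minimal sketch follows; the records and field names are invented for illustration and do not reflect actual re3data metadata or the implementation of any of the services mentioned.

```python
from collections import Counter

# Hypothetical RDR descriptions; values and field names are made up.
records = [
    {"name": "Example Repository A", "subject": "Life Sciences",
     "access_type": "open", "country": "DEU"},
    {"name": "Example Repository B", "subject": "Life Sciences",
     "access_type": "restricted", "country": "USA"},
    {"name": "Example Repository C", "subject": "Natural Sciences",
     "access_type": "open", "country": "DEU"},
]


def count_by(items, field):
    """Count how many records share each value of the given field."""
    return Counter(item[field] for item in items)


by_subject = count_by(records, "subject")
by_access_type = count_by(records, "access_type")
by_country = count_by(records, "country")
```

A dashboard would then visualize these counters; the aggregation itself needs nothing beyond openly available, consistently structured metadata.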

These examples underscore the value Open Science tools like re3data generate by making their data openly available without restrictions. As a result of the permissive licensing, re3data metadata can be used for new and innovative applications, establishing re3data as a vital data provider for the global Open Science community.

Permissive licensing and extensive collaboration have turned re3data into a key data provider in the Open Science ecosystem.

Metadata for research

For re3data, providing RDR descriptions also means offering metadata that enables analyses of the global RDR community.

In research disciplines studying data infrastructures, for example library and information science or science and technology studies, re3data is regularly used for information on the state of research infrastructures. As re3data has been mapping the landscape of data infrastructures for ten years, it has evolved into a tool that is used for monitoring Open Science activities, research data management, and other topics. Studies reusing re3data metadata include analyses of the overall RDR landscape, the landscape of RDRs in a specific domain, or the RDR landscape of a region or country. Some examples of studies reusing re3data metadata for research are:

Overall studies: Boyd 83 examined the extent to which RDRs exhibit properties of infrastructures. Khan & Ahangar 84 and Hansson & Dahlgren 85 focused on the openness of RDRs from a global perspective.