21 Great Examples of Discourse Analysis

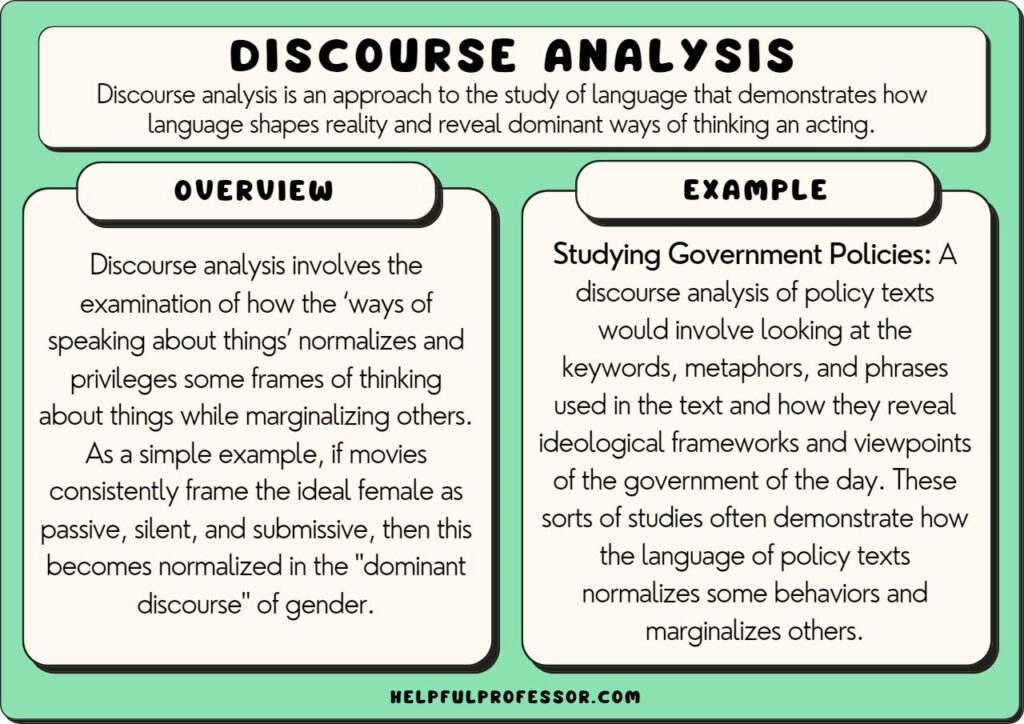

Discourse analysis is an approach to the study of language that demonstrates how language shapes reality. It usually takes the form of a textual or content analysis.

Discourse is understood as a way of perceiving, framing, and viewing the world.

For example:

- A dominant discourse of gender often positions women as gentle and men as active heroes.

- A dominant discourse of race often positions whiteness as the norm and colored bodies as ‘others’ (see: social construction of race).

Through discourse analysis, scholars look at texts and examine how those texts shape discourse.

In other words, it involves examining how ‘ways of speaking about things’ normalize and privilege some frames of thinking while marginalizing others.

As a simple example, if movies consistently frame the ideal woman as passive, silent, and submissive, society comes to treat this behavior as normal, and women who don’t fit the mold come to be seen as abnormal.

Instead of seeing this as just the way things are, discourse analysts know that norms are produced in language and are not necessarily as natural as we may have assumed.

Examples of Discourse Analysis

1. Language Choice in Policy Texts

A study of policy texts can reveal the ideological frameworks and viewpoints of the policy’s writers. These sorts of studies often demonstrate how policy texts categorize people in ways that construct social hierarchies and restrict people’s agency.

Examples include:

2. Newspaper Bias

Conducting a critical discourse analysis of newspapers involves gathering a corpus of newspaper articles based on a pre-defined range and scope (e.g. newspapers from a particular set of publishers within a set date range).

Then, the researcher conducts a close examination of the texts to examine how they frame subjects (i.e. people, groups of people, etc.) from a particular ideological, political, or cultural perspective.

3. Language in Interviews

Discourse analysis can also be utilized to analyze interview transcripts. While coding methods to identify themes are the most common methods for analyzing interviews, discourse analysis is a valuable approach when looking at power relations and the framing of subjects through speech.

4. Television Analysis

Discourse analysis is commonly used to explore ideologies and framing devices in television shows and advertisements.

Because advertising is not just textual but multimodal, scholars often combine a discourse analytic methodology (i.e. exploring how television constructs dominant ways of thinking) with semiotic methods (i.e. exploring how color, movement, font choice, and so on create meaning).

I did this, for example, in my PhD (listed below).

5. Film Critique

Scholars can explore discourse in film in much the same way as they study discourse in television shows. This can include the framing of sexuality, gender, race, nationalism, and social class in films.

A common example is the study of Disney films and how they construct idealized feminine and masculine identities that children should aspire toward.

6. Analysis of Political Speech

Political speeches have also been subject to a significant amount of discourse analysis. These studies generally explore how influential politicians indicate a shift in policy and frame those policy shifts in the context of underlying ideological assumptions.

9. Examining Marketing Texts

Advertising is more present than ever in the context of neoliberal capitalism. As a result, it has an outsized role in shaping public discourse. Critical discourse analyses of advertising texts tend to explore how advertisements, and the capitalist context that underpins their proliferation, normalize gendered, racialized, and class-based discourses.

11. Analyzing Lesson Plans

As written texts, lesson plans can be analyzed for how they construct discourses around education as well as student and teacher identities. These studies tend to examine how teachers and governing bodies in education prioritize certain ideologies around what and how to learn. They can feed into debates around the ‘history wars’ (what and whose history should be taught) as well as ideological approaches to religious and language learning.

12. Looking at Graffiti

One of my favorite creative uses of discourse analysis is in the study of graffiti. By looking at graffiti, researchers can identify how youth countercultures and counterdiscourses are spread through subversive means. These counterdiscourses offer ruptures where dominant discourses can be unsettled and displaced.


The Origins of Discourse Analysis

1. Foucault

French philosopher Michel Foucault is a central thinker who shaped discourse analysis. His work in studies like Madness and Civilization and The History of Sexuality demonstrates how our ideas about insanity and sexuality have been shaped through language.

The ways the church speaks about sex, for example, shape people’s thoughts and feelings about it.

The church didn’t simply make sex a silent taboo. Rather, it actively worked to teach people that desire was a thing of evil, forcing them to suppress their desires.

Over time, society at large developed a repressive norm around sex. This norm is not inherently natural; it seems so because the church keeps reiterating that it is the only acceptable way of thinking about the topic.

Similarly, Madness and Civilization examines the discourse around insanity. Medical discourse pathologized behaviors that were ‘abnormal’ as signs of insanity. Were the dominant medical discourse to change, it’s possible that abnormal people would no longer be seen as insane.

One clear example of this is homosexuality. Up until the 1990s, being gay was seen in medical discourse as an illness. Today, most of Western society sees that this way of looking at homosexuality was extremely damaging and exclusionary, and yet at the time, because it was the dominant discourse, people didn’t question it.

2. Norman Fairclough

Fairclough (2013), inspired by Foucault, created some key methodological frameworks for conducting discourse analysis.

Fairclough was one of the first scholars to articulate some frameworks around exploring ‘text as discourse’ and provided key tools for scholars to conduct analyses of newspaper and policy texts.

Today, most methodology chapters in dissertations that use discourse analysis will have extensive discussions of Fairclough’s methods.

Discourse analysis is a popular primary research method in media studies, cultural studies, education studies, and communication studies. It helps scholars to show how texts and language have the power to shape people’s perceptions of reality and, over time, shift dominant ways of framing thought. It also helps us to see how power flows through texts, creating ‘in-groups’ and ‘out-groups’ in society.

Key examples of discourse analysis include the study of television, film, newspaper, advertising, political speeches, and interviews.

Al Kharusi, R. (2017). Ideologies of Arab media and politics: a CDA of Al Jazeera debates on the Yemeni revolution. PhD Dissertation: University of Hertfordshire.

Alaazi, D. A., Ahola, A. N., Okeke-Ihejirika, P., Yohani, S., Vallianatos, H., & Salami, B. (2021). Immigrants and the Western media: a CDA of newspaper framings of African immigrant parenting in Canada. Journal of Ethnic and Migration Studies, 47(19), 4478-4496. Doi: https://doi.org/10.1080/1369183X.2020.1798746

Al-Khawaldeh, N. N., Khawaldeh, I., Bani-Khair, B., & Al-Khawaldeh, A. (2017). An exploration of graffiti on university’s walls: A corpus-based discourse analysis study. Indonesian Journal of Applied Linguistics, 7(1), 29-42. Doi: https://doi.org/10.17509/ijal.v7i1.6856

Alsaraireh, M. Y., Singh, M. K. S., & Hajimia, H. (2020). Critical DA of gender representation of male and female characters in the animation movie, Frozen. Linguistica Antverpiensia, 104-121.

Baig, F. Z., Khan, K., & Aslam, M. J. (2021). Child Rearing and Gender Socialisation: A Feminist CDA of Kids’ Popular Fictional Movies. Journal of Educational Research and Social Sciences Review (JERSSR), 1(3), 36-46.

Barker, M. E. (2021). Exploring Canadian Integration through CDA of English Language Lesson Plans for Immigrant Learners. Canadian Journal of Applied Linguistics/Revue canadienne de linguistique appliquée, 24(1), 75-91. Doi: https://doi.org/10.37213/cjal.2021.28959

Coleman, B. (2017). An Ideological Unveiling: Using Critical Narrative and Discourse Analysis to Examine Discursive White Teacher Identity. AERA Online Paper Repository.

Drew, C. (2013). Soak up the goodness: Discourses of Australian childhoods on television advertisements, 2006-2012. PhD Dissertation: Australian Catholic University. Doi: https://doi.org/10.4226/66/5a9780223babd

Fairclough, N. (2013). Critical discourse analysis: The critical study of language. London: Routledge.

Foucault, M. (1990). The history of sexuality: An introduction. London: Vintage.

Foucault, M. (2003). Madness and civilization. New York: Routledge.

Hahn, A. D. (2018). Uncovering the ideologies of internationalization in lesson plans through CDA. The New English Teacher, 12(1), 121-121.

Isti’anah, A. (2018). Rohingya in media: CDA of Myanmar and Bangladesh newspaper headlines. Language in the Online and Offline World, 6, 18-23. Doi: http://repository.usd.ac.id/id/eprint/25962

Khan, M. H., Adnan, H. M., Kaur, S., Qazalbash, F., & Ismail, I. N. (2020). A CDA of anti-Muslim rhetoric in Donald Trump’s historic 2016 AIPAC policy speech. Journal of Muslim Minority Affairs, 40(4), 543-558. Doi: https://doi.org/10.1080/13602004.2020.1828507

Louise Cooper, K., Luck, L., Chang, E., & Dixon, K. (2021). What is the practice of spiritual care? A CDA of registered nurses’ understanding of spirituality. Nursing Inquiry, 28(2), e12385. Doi: https://doi.org/10.1111/nin.12385

Mohammadi, D., Momeni, S., & Labafi, S. (2021). Representation of Iranians family’s life style in TV advertising (Case study: food ads). Religion & Communication, 27(58), 333-379.

Munro, M. (2018). House price inflation in the news: a CDA of newspaper coverage in the UK. Housing Studies, 33(7), 1085-1105. Doi: https://doi.org/10.1080/02673037.2017.1421911

Ravn, I. M., Frederiksen, K., & Beedholm, K. (2016). The chronic responsibility: a CDA of Danish chronic care policies. Qualitative Health Research, 26(4), 545-554. Doi: https://doi.org/10.1177/1049732315570133

Sengul, K. (2019). Critical discourse analysis in political communication research: a case study of right-wing populist discourse in Australia. Communication Research and Practice, 5(4), 376-392. Doi: https://doi.org/10.1080/22041451.2019.1695082

Serafis, D., Kitis, E. D., & Archakis, A. (2018). Graffiti slogans and the construction of collective identity: evidence from the anti-austerity protests in Greece. Text & Talk, 38(6), 775-797. Doi: https://doi.org/10.1515/text-2018-0023

Suphaborwornrat, W., & Punkasirikul, P. (2022). A Multimodal CDA of Online Soft Drink Advertisements. LEARN Journal: Language Education and Acquisition Research Network, 15(1), 627-653.

Symes, C., & Drew, C. (2017). Education on the rails: a textual ethnography of university advertising in mobile contexts. Critical Studies in Education, 58(2), 205-223. Doi: https://doi.org/10.1080/17508487.2016.1252783

Thomas, S. (2005). The construction of teacher identities in educational policy documents: A critical discourse analysis. Critical Studies in Education, 46(2), 25-44. Doi: https://doi.org/10.1080/17508480509556423

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.


Content Analysis | Guide, Methods & Examples

Published on July 18, 2019 by Amy Luo. Revised on June 22, 2023.

Content analysis is a research method used to identify patterns in recorded communication. To conduct content analysis, you systematically collect data from a set of texts, which can be written, oral, or visual:

- Books, newspapers and magazines

- Speeches and interviews

- Web content and social media posts

- Photographs and films

Content analysis can be both quantitative (focused on counting and measuring) and qualitative (focused on interpreting and understanding). In both types, you categorize or “code” words, themes, and concepts within the texts and then analyze the results.


What is content analysis used for?

Researchers use content analysis to find out about the purposes, messages, and effects of communication content. They can also make inferences about the producers and audience of the texts they analyze.

Content analysis can be used to quantify the occurrence of certain words, phrases, subjects or concepts in a set of historical or contemporary texts.

Quantitative content analysis example

To research the importance of employment issues in political campaigns, you could analyze campaign speeches for the frequency of terms such as unemployment, jobs, and work and use statistical analysis to find differences over time or between candidates.
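The counting step in this example can be sketched in a few lines of Python. This is only an illustration: the speech snippets and candidate names are invented, and the simple tokenizer ignores word forms such as "working" that a real study might want to count.

```python
import re
from collections import Counter

# Terms taken from the example above.
TERMS = {"unemployment", "jobs", "work"}

def term_frequencies(text, terms=TERMS):
    """Count how often each tracked term occurs in a text (case-insensitive)."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(tok for tok in tokens if tok in terms)
    # Report every tracked term, including those that never occur.
    return {term: counts[term] for term in sorted(terms)}

# Hypothetical speech excerpts, invented for illustration only.
speeches = {
    "candidate_a": "Jobs, jobs, jobs. We will end unemployment and reward hard work.",
    "candidate_b": "Our plan puts safety first. Work matters, but so does health.",
}

for name, text in speeches.items():
    print(name, term_frequencies(text))
```

The resulting counts per candidate (or per year) are the kind of data you would then pass to a statistical test.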

In addition, content analysis can be used to make qualitative inferences by analyzing the meaning and semantic relationship of words and concepts.

Qualitative content analysis example

To gain a more qualitative understanding of employment issues in political campaigns, you could locate the word unemployment in speeches, identify what other words or phrases appear next to it (such as economy, inequality or laziness), and analyze the meanings of these relationships to better understand the intentions and targets of different campaigns.
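Finding the words that appear next to a target word can be partly automated before the interpretive work begins. The sketch below collects neighbors within a fixed window; the window size of three tokens and the sample text are assumptions made for illustration, and interpreting what the neighbors mean remains a qualitative task.

```python
import re
from collections import Counter

def collocates(text, target, window=3):
    """Count words appearing within `window` tokens of each occurrence of `target`.

    Note: this simple version ignores sentence boundaries.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    neighbors = Counter()
    for i, tok in enumerate(tokens):
        if tok == target:
            left = tokens[max(0, i - window):i]
            right = tokens[i + 1:i + 1 + window]
            neighbors.update(left + right)
    return neighbors

# Hypothetical excerpt, invented for illustration only.
speech = "Unemployment is a symptom of inequality. They blame unemployment on laziness."
print(collocates(speech, "unemployment").most_common())
```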

Because content analysis can be applied to a broad range of texts, it is used in a variety of fields, including marketing, media studies, anthropology, cognitive science, psychology, and many social science disciplines. It has various possible goals:

- Finding correlations and patterns in how concepts are communicated

- Understanding the intentions of an individual, group or institution

- Identifying propaganda and bias in communication

- Revealing differences in communication in different contexts

- Analyzing the consequences of communication content, such as the flow of information or audience responses


Advantages of content analysis

- Unobtrusive data collection

You can analyze communication and social interaction without the direct involvement of participants, so your presence as a researcher doesn’t influence the results.

- Transparent and replicable

When done well, content analysis follows a systematic procedure that can easily be replicated by other researchers, yielding results with high reliability.

- Highly flexible

You can conduct content analysis at any time, in any location, and at low cost – all you need is access to the appropriate sources.

Disadvantages of content analysis

- Reductive

Focusing on words or phrases in isolation can sometimes be overly reductive, disregarding context, nuance, and ambiguous meanings.

- Subjective

Content analysis almost always involves some level of subjective interpretation, which can affect the reliability and validity of the results and conclusions, leading to various types of research bias and cognitive bias.

- Time intensive

Manually coding large volumes of text is extremely time-consuming, and it can be difficult to automate effectively.

How to conduct content analysis

If you want to use content analysis in your research, you need to start with a clear, direct research question.

Example research question for content analysis

Is there a difference in how the US media represents younger politicians compared to older ones in terms of trustworthiness?

Next, you follow these five steps.

1. Select the content you will analyze

Based on your research question, choose the texts that you will analyze. You need to decide:

- The medium (e.g. newspapers, speeches or websites) and genre (e.g. opinion pieces, political campaign speeches, or marketing copy)

- The inclusion and exclusion criteria (e.g. newspaper articles that mention a particular event, speeches by a certain politician, or websites selling a specific type of product)

- The parameters in terms of date range, location, etc.

If only a small number of texts meet your criteria, you might analyze all of them. If there is a large volume of texts, you can select a sample.
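As a rough sketch, the selection step amounts to filtering by your criteria and then sampling if too many texts remain. The records, field names, and date range below are hypothetical, and a simple random sample is only one of several possible sampling strategies.

```python
import random
from datetime import date

# Hypothetical article records; in practice these would come from a news archive.
ARTICLES = [
    {"id": 1, "medium": "newspaper", "genre": "opinion", "published": date(2023, 1, 5)},
    {"id": 2, "medium": "newspaper", "genre": "news", "published": date(2023, 2, 11)},
    {"id": 3, "medium": "website", "genre": "opinion", "published": date(2023, 2, 20)},
    {"id": 4, "medium": "newspaper", "genre": "opinion", "published": date(2022, 12, 30)},
    {"id": 5, "medium": "newspaper", "genre": "opinion", "published": date(2023, 3, 2)},
]

def select_texts(records, medium, genre, start, end):
    """Keep records that match the medium, genre, and date range."""
    return [
        r for r in records
        if r["medium"] == medium
        and r["genre"] == genre
        and start <= r["published"] <= end
    ]

def draw_sample(records, k, seed=0):
    """Analyze everything if the set is small; otherwise draw a simple random sample."""
    if len(records) <= k:
        return list(records)
    return random.Random(seed).sample(records, k)

eligible = select_texts(ARTICLES, "newspaper", "opinion", date(2023, 1, 1), date(2023, 12, 31))
sample = draw_sample(eligible, k=1)
print(len(eligible), "eligible;", len(sample), "sampled")
```

Fixing the seed makes the sample reproducible, which supports the transparency that content analysis aims for.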

2. Define the units and categories of analysis

Next, you need to determine the level at which you will analyze your chosen texts. This means defining:

- The unit(s) of meaning that will be coded. For example, are you going to record the frequency of individual words and phrases, the characteristics of people who produced or appear in the texts, the presence and positioning of images, or the treatment of themes and concepts?

- The set of categories that you will use for coding. Categories can be objective characteristics (e.g. aged 30-40, lawyer, parent) or more conceptual (e.g. trustworthy, corrupt, conservative, family oriented).

Your units of analysis are the politicians who appear in each article and the words and phrases that are used to describe them. Based on your research question, you have to categorize based on age and the concept of trustworthiness. To get more detailed data, you also code for other categories such as their political party and the marital status of each politician mentioned.

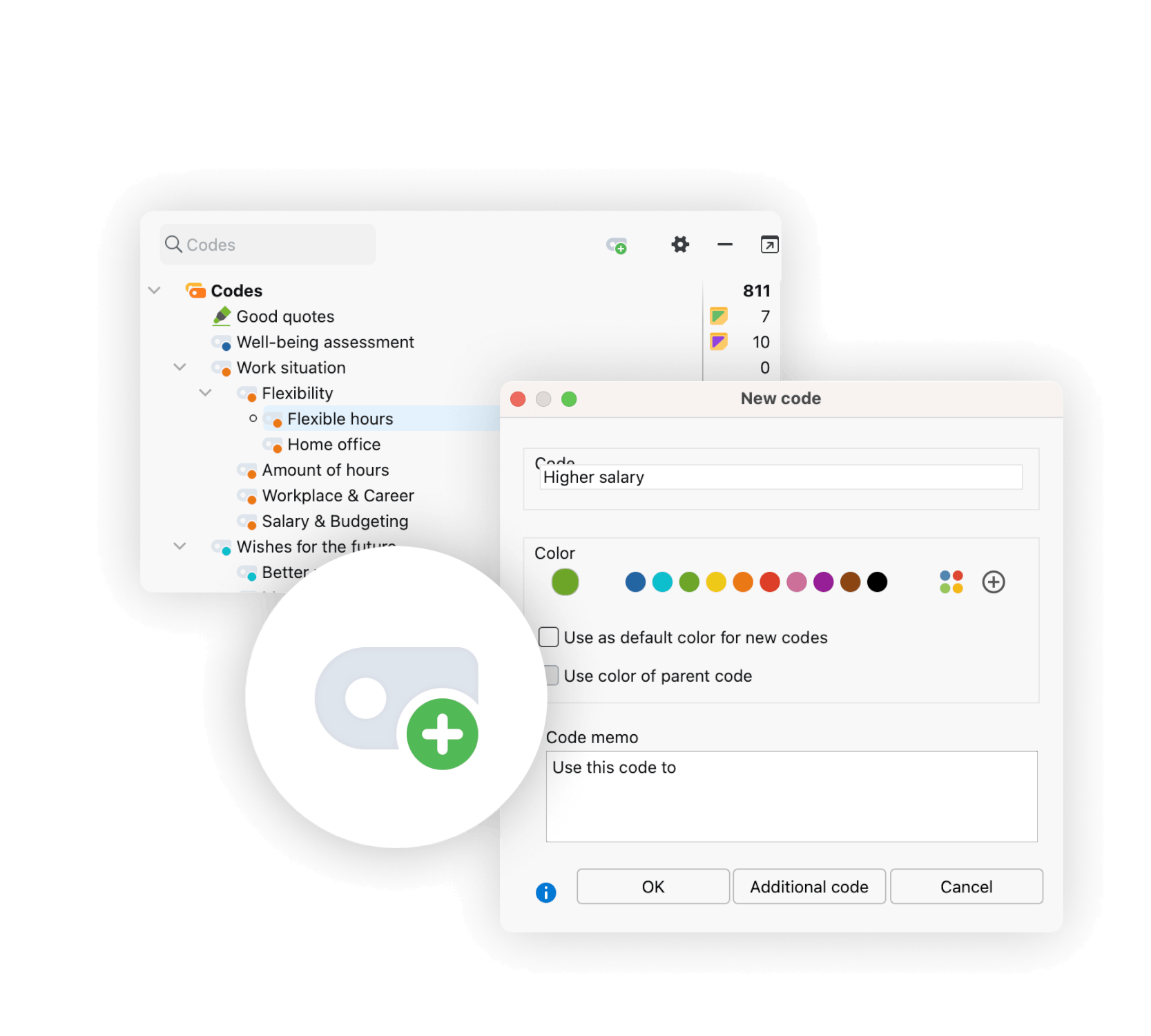

3. Develop a set of rules for coding

Coding involves organizing the units of meaning into the previously defined categories. Especially with more conceptual categories, it’s important to clearly define the rules for what will and won’t be included to ensure that all texts are coded consistently.

Coding rules are especially important if multiple researchers are involved, but even if you’re coding all of the text by yourself, recording the rules makes your method more transparent and reliable.

In considering the category “younger politician,” you decide which titles will be coded with this category (senator, governor, counselor, mayor). With “trustworthy,” you decide which specific words or phrases related to trustworthiness (e.g. honest and reliable) will be coded in this category.
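When multiple researchers code the same texts, you can check how consistently the rules are being applied by comparing their codes. The sketch below uses Cohen's kappa, an agreement statistic that this guide does not itself mention; it is brought in here only as one common option, and the two coders' labels are invented.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Agreement between two coders, corrected for agreement expected by chance."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of units.")
    n = len(coder_a)
    # Share of units on which the coders chose the same category.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, from how often each coder used each category.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # Both coders used a single identical category throughout.
    return (observed - expected) / (1 - expected)

# Hypothetical codes assigned by two researchers to the same four phrases.
coder_1 = ["trustworthy", "trustworthy", "other", "other"]
coder_2 = ["trustworthy", "other", "other", "other"]
print(cohens_kappa(coder_1, coder_2))
```

A low value suggests the coding rules are ambiguous and need to be tightened before coding the full sample.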

4. Code the text according to the rules

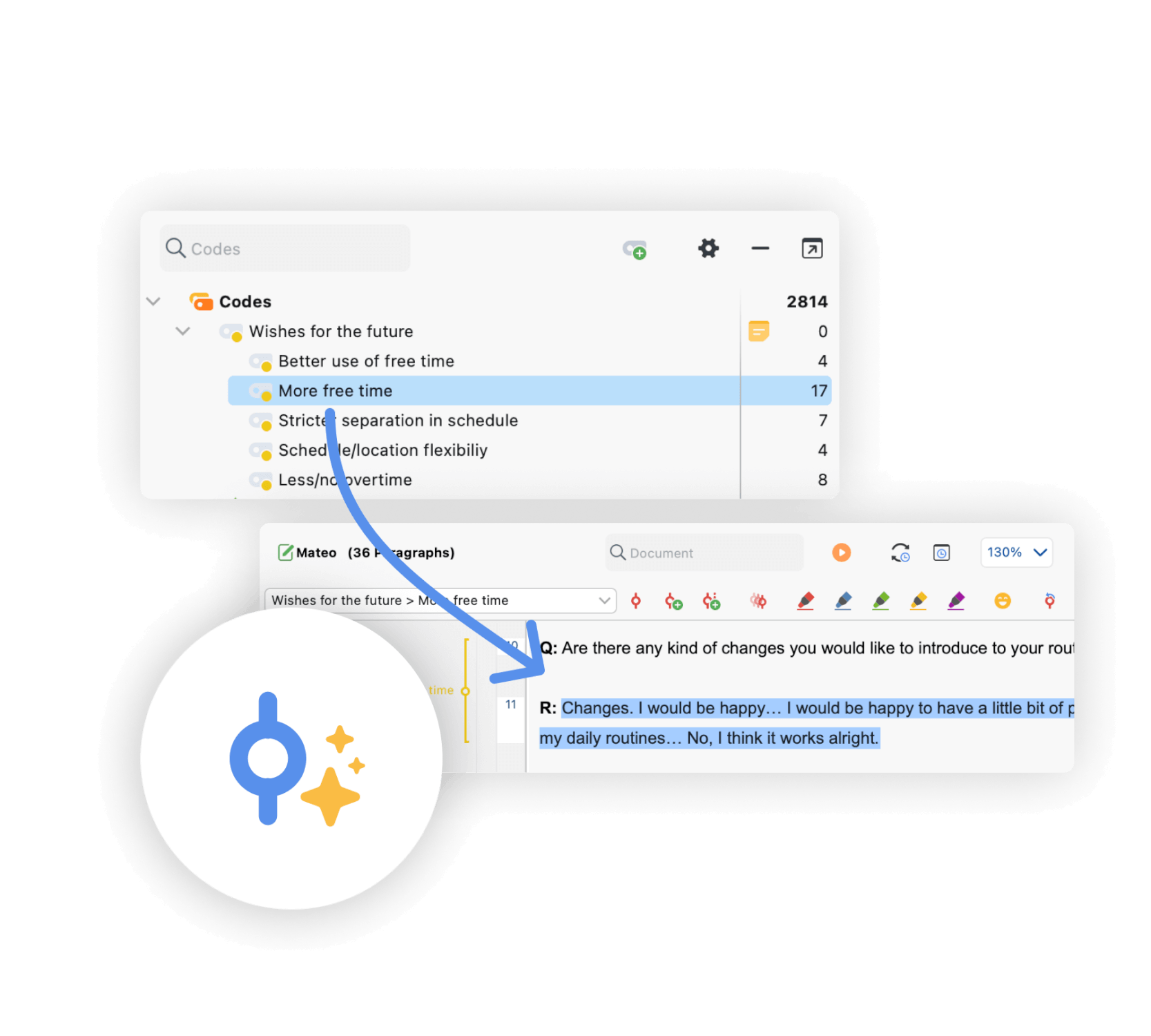

You go through each text and record all relevant data in the appropriate categories. This can be done manually or aided with computer programs, such as QSR NVivo, Atlas.ti and Diction, which can help speed up the process of counting and categorizing words and phrases.

Following your coding rules, you examine each newspaper article in your sample. You record the characteristics of each politician mentioned, along with all words and phrases related to trustworthiness that are used to describe them.
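If you code with a script rather than a dedicated program, the recording step might look something like the sketch below. The politician names, ages, and the age cut-off of 40 are assumptions made for illustration; only the trust terms (honest, reliable) come from the example above.

```python
import re

TRUST_TERMS = {"honest", "reliable"}  # words from the coding-rule example
YOUNGER_MAX_AGE = 40                  # assumed cut-off, not defined in the guide

def code_sentence(sentence, politicians):
    """Return one coded row per politician named in the sentence.

    `politicians` maps a (hypothetical) surname to an age.
    """
    lowered = sentence.lower()
    words = set(re.findall(r"[a-z']+", lowered))
    trust_hits = sorted(words & TRUST_TERMS)
    rows = []
    for name, age in politicians.items():
        if name.lower() in lowered:
            rows.append({
                "politician": name,
                "age_group": "younger" if age <= YOUNGER_MAX_AGE else "older",
                "trust_terms": trust_hits,
            })
    return rows

# Hypothetical politicians and sentence, invented for illustration only.
politicians = {"Rivera": 34, "Holt": 67}
print(code_sentence("Voters described Holt as honest and reliable.", politicians))
```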

5. Analyze the results and draw conclusions

Once coding is complete, the collected data is examined to find patterns and draw conclusions in response to your research question. You might use statistical analysis to find correlations or trends, discuss your interpretations of what the results mean, and make inferences about the creators, context and audience of the texts.

Let’s say the results reveal that words and phrases related to trustworthiness appeared in the same sentence as an older politician more frequently than they did in the same sentence as a younger politician. From these results, you conclude that national newspapers present older politicians as more trustworthy than younger politicians, and infer that this might have an effect on readers’ perceptions of younger people in politics.
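A comparison like this one can be sketched as a rate per age group: the share of coded sentences that contain a trust-related term. The coded rows below are invented, and with real data you would follow this with a significance test rather than compare raw rates alone.

```python
from collections import defaultdict

# Hypothetical coded sentences: (age group of the politician, trust term present?)
coded = [
    ("older", True), ("older", True), ("older", False),
    ("younger", True), ("younger", False), ("younger", False), ("younger", False),
]

def trust_rate_by_group(rows):
    """Share of sentences per age group that contain a trust-related term."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, has_trust in rows:
        totals[group] += 1
        hits[group] += int(has_trust)
    return {group: hits[group] / totals[group] for group in totals}

rates = trust_rate_by_group(coded)
print(rates)
```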


Cite this Scribbr article


Luo, A. (2023, June 22). Content Analysis | Guide, Methods & Examples. Scribbr. Retrieved April 2, 2024, from https://www.scribbr.com/methodology/content-analysis/


Discourse Analysis – Methods, Types and Examples


Discourse Analysis

Definition:

Discourse Analysis is a method of studying how people use language in different situations to understand what they really mean and what messages they are sending. It helps us understand how language is used to create social relationships and cultural norms.

It examines language use in various forms of communication such as spoken, written, visual or multi-modal texts, and focuses on how language is used to construct social meaning and relationships, and how it reflects and reinforces power dynamics, ideologies, and cultural norms.

Types of Discourse Analysis

Some of the most common types of discourse analysis are:

Conversation Analysis

This type of discourse analysis focuses on analyzing the structure of talk and how participants in a conversation make meaning through their interaction. It is often used to study face-to-face interactions, such as interviews or everyday conversations.

Critical Discourse Analysis

This approach focuses on the ways in which language use reflects and reinforces power relations, social hierarchies, and ideologies. It is often used to analyze media texts or political speeches, with the aim of uncovering the hidden meanings and assumptions that are embedded in these texts.

Discursive Psychology

This type of discourse analysis focuses on the ways in which language use is related to psychological processes such as identity construction and attribution of motives. It is often used to study narratives or personal accounts, with the aim of understanding how individuals make sense of their experiences.

Multimodal Discourse Analysis

This approach focuses on analyzing not only language use, but also other modes of communication, such as images, gestures, and layout. It is often used to study digital or visual media, with the aim of understanding how different modes of communication work together to create meaning.

Corpus-based Discourse Analysis

This type of discourse analysis uses large collections of texts, or corpora, to analyze patterns of language use across different genres or contexts. It is often used to study language use in specific domains, such as academic writing or legal discourse.

Descriptive Discourse

This type of discourse analysis aims to describe the features and characteristics of language use, without making any value judgments or interpretations. It is often used in linguistic studies to describe grammatical structures or phonetic features of language.

Narrative Discourse

This approach focuses on analyzing the structure and content of stories or narratives, with the aim of understanding how they are constructed and how they shape our understanding of the world. It is often used to study personal narratives or cultural myths.

Expository Discourse

This type of discourse analysis is used to study texts that explain or describe a concept, process, or idea. It aims to understand how information is organized and presented in such texts and how it influences the reader’s understanding of the topic.

Argumentative Discourse

This approach focuses on analyzing texts that present an argument or attempt to persuade the reader or listener. It aims to understand how the argument is constructed, what strategies are used to persuade, and how the audience is likely to respond to the argument.

Guide to Conducting Discourse Analysis

Here is a step-by-step guide for conducting discourse analysis:

- Define the research question: What are you trying to understand about the language use in a particular context? What are the key concepts or themes that you want to explore?

- Select the data: Decide on the type of data that you will analyze, such as written texts, spoken conversations, or media content. Consider the source of the data, such as news articles, interviews, or social media posts, and how this might affect your analysis.

- Transcribe or collect the data: If you are analyzing spoken language, you will need to transcribe the data into written form. If you are using written texts, make sure that you have access to the full text and that it is in a format that can be easily analyzed.

- Read and re-read the data: Read through the data carefully, paying attention to key themes, patterns, and discursive features. Take notes on what stands out to you and make preliminary observations about the language use.

- Develop a coding scheme: Develop a coding scheme that will allow you to categorize and organize different types of language use. This might include categories such as metaphors, narratives, or persuasive strategies, depending on your research question.

- Code the data: Use your coding scheme to analyze the data, coding different sections of text or spoken language according to the categories that you have developed. This can be a time-consuming process, so consider using software tools to assist with coding and analysis.

- Analyze the data: Once you have coded the data, analyze it to identify patterns and themes that emerge. Look for similarities and differences across different parts of the data, and consider how different categories of language use are related to your research question.

- Interpret the findings: Draw conclusions from your analysis and interpret the findings in relation to your research question. Consider how the language use in your data sheds light on broader cultural or social issues, and what implications it might have for understanding language use in other contexts.

- Write up the results: Write up your findings in a clear and concise way, using examples from the data to support your arguments. Consider how your research contributes to the broader field of discourse analysis and what implications it might have for future research.
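The coding and analysis steps above can be supported by a very small data structure. In the sketch below, a researcher assigns the codes by hand (the interpretive work is not automated), and the script only validates them against the coding scheme and tallies them per source. The category names come from the coding-scheme step; the segments and source labels are invented.

```python
from collections import Counter
from dataclasses import dataclass

# Categories named in the coding-scheme step; a real scheme depends on the research question.
CODING_SCHEME = {"metaphor", "narrative", "persuasive_strategy"}

@dataclass(frozen=True)
class CodedSegment:
    source: str  # e.g. speaker or publication (hypothetical labels)
    text: str    # the stretch of language being coded
    code: str    # category assigned by the researcher

def tally_codes(segments):
    """Count how often each code was applied, grouped by source."""
    counts = {}
    for seg in segments:
        if seg.code not in CODING_SCHEME:
            raise ValueError(f"Unknown code: {seg.code}")
        counts.setdefault(seg.source, Counter())[seg.code] += 1
    return counts

# Hypothetical hand-coded segments, invented for illustration only.
segments = [
    CodedSegment("speech_1", "a rising tide lifts all boats", "metaphor"),
    CodedSegment("speech_1", "when I was a child, my father lost his job", "narrative"),
    CodedSegment("speech_2", "the economy is a sinking ship", "metaphor"),
    CodedSegment("speech_2", "a house built on sand", "metaphor"),
]

for source, counts in tally_codes(segments).items():
    print(source, dict(counts))
```

Tallies like these only point to patterns; the interpretation step still requires returning to the coded passages themselves.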

Applications of Discourse Analysis

Here are some of the key areas where discourse analysis is commonly used:

- Political discourse: Discourse analysis can be used to analyze political speeches, debates, and media coverage of political events. By examining the language used in these contexts, researchers can gain insight into the political ideologies, values, and agendas that underpin different political positions.

- Media analysis: Discourse analysis is frequently used to analyze media content, including news reports, television shows, and social media posts. By examining the language used in media content, researchers can understand how media narratives are constructed and how they influence public opinion.

- Education: Discourse analysis can be used to examine classroom discourse, student-teacher interactions, and educational policies. By analyzing the language used in these contexts, researchers can gain insight into the social and cultural factors that shape educational outcomes.

- Healthcare: Discourse analysis is used in healthcare to examine the language used by healthcare professionals and patients in medical consultations. This can help to identify communication barriers, cultural differences, and other factors that may impact the quality of healthcare.

- Marketing and advertising: Discourse analysis can be used to analyze marketing and advertising messages, including the language used in product descriptions, slogans, and commercials. By examining these messages, researchers can gain insight into the cultural values and beliefs that underpin consumer behavior.

When to use Discourse Analysis

Discourse analysis is a valuable research methodology that can be used in a variety of contexts. Here are some situations where discourse analysis may be particularly useful:

- When studying language use in a particular context: Discourse analysis can be used to examine how language is used in a specific context, such as political speeches, media coverage, or healthcare interactions. By analyzing language use in these contexts, researchers can gain insight into the social and cultural factors that shape communication.

- When exploring the meaning of language: Discourse analysis can be used to examine how language is used to construct meaning and shape social reality. This can be particularly useful in fields such as sociology, anthropology, and cultural studies.

- When examining power relations: Discourse analysis can be used to examine how language is used to reinforce or challenge power relations in society. By analyzing language use in contexts such as political discourse, media coverage, or workplace interactions, researchers can gain insight into how power is negotiated and maintained.

- When conducting qualitative research: Discourse analysis can be used as a qualitative research method, allowing researchers to explore complex social phenomena in depth. By analyzing language use in a particular context, researchers can gain rich and nuanced insights into the social and cultural factors that shape communication.

Examples of Discourse Analysis

Here are some examples of discourse analysis in action:

- A study of media coverage of climate change: This study analyzed media coverage of climate change to examine how language was used to construct the issue. The researchers found that media coverage tended to frame climate change as a matter of scientific debate rather than a pressing environmental issue, thereby undermining public support for action on climate change.

- A study of political speeches: This study analyzed political speeches to examine how language was used to construct political identity. The researchers found that politicians used language strategically to construct themselves as trustworthy and competent leaders, while painting their opponents as untrustworthy and incompetent.

- A study of medical consultations: This study analyzed medical consultations to examine how language was used to negotiate power and authority between doctors and patients. The researchers found that doctors used language to assert their authority and control over medical decisions, while patients used language to negotiate their own preferences and concerns.

- A study of workplace interactions: This study analyzed workplace interactions to examine how language was used to construct social identity and maintain power relations. The researchers found that language was used to construct a hierarchy of power and status within the workplace, with those in positions of authority using language to assert their dominance over subordinates.

Purpose of Discourse Analysis

The purpose of discourse analysis is to examine the ways in which language is used to construct social meaning, relationships, and power relations. By analyzing language use in a systematic and rigorous way, discourse analysis can provide valuable insights into the social and cultural factors that shape communication and interaction.

The specific purposes of discourse analysis may vary depending on the research context, but some common goals include:

- To understand how language constructs social reality: Discourse analysis can help researchers understand how language is used to construct meaning and shape social reality. By analyzing language use in a particular context, researchers can gain insight into the cultural and social factors that shape communication.

- To identify power relations: Discourse analysis can be used to examine how language use reinforces or challenges power relations in society. By analyzing language use in contexts such as political discourse, media coverage, or workplace interactions, researchers can gain insight into how power is negotiated and maintained.

- To explore social and cultural norms: Discourse analysis can help researchers understand how social and cultural norms are constructed and maintained through language use. By analyzing language use in different contexts, researchers can gain insight into how social and cultural norms are reproduced and challenged.

- To provide insights for social change: By identifying problematic language use or power imbalances, discourse analysis can give researchers a basis for challenging social norms and promoting more equitable and inclusive communication.

Characteristics of Discourse Analysis

Here are some key characteristics of discourse analysis:

- Focus on language use: Discourse analysis is centered on language use and how it constructs social meaning, relationships, and power relations.

- Multidisciplinary approach: Discourse analysis draws on theories and methodologies from a range of disciplines, including linguistics, anthropology, sociology, and psychology.

- Systematic and rigorous methodology: Discourse analysis employs a systematic and rigorous methodology, often involving transcription and coding of language data, in order to identify patterns and themes in language use.

- Contextual analysis: Discourse analysis emphasizes the importance of context in shaping language use, and takes into account the social and cultural factors that shape communication.

- Focus on power relations: Discourse analysis often examines power relations and how language use reinforces or challenges power imbalances in society.

- Interpretive approach: Discourse analysis is an interpretive approach, meaning that it seeks to understand the meaning and significance of language use from the perspective of the participants in a particular discourse.

- Emphasis on reflexivity: Discourse analysis emphasizes the importance of reflexivity, or self-awareness, in the research process. Researchers are encouraged to reflect on their own positionality and how it may shape their interpretation of language use.
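
The "transcription and coding" step mentioned above can be sketched in a few lines of Python. This is only a rough illustration: the codebook, its indicator terms, and the transcript are invented for the example, and keyword matching is a crude first pass that does not replace a researcher's close, interpretive reading.

```python
import re
from collections import Counter

# Hypothetical codebook: each code maps to indicator terms chosen by the researcher.
CODEBOOK = {
    "authority": ["must", "should", "need to"],
    "hedging": ["maybe", "perhaps", "i think"],
}

def code_utterance(utterance, codebook=CODEBOOK):
    """Return the sorted list of codes whose indicator terms appear in the utterance."""
    text = utterance.lower()
    codes = []
    for code, terms in codebook.items():
        # Word boundaries stop "must" from matching inside "mustard".
        if any(re.search(r"\b" + re.escape(term) + r"\b", text) for term in terms):
            codes.append(code)
    return sorted(codes)

def tally_codes(transcript, codebook=CODEBOOK):
    """Count how often each code is applied, per speaker, across a transcript."""
    counts = {}
    for speaker, utterance in transcript:
        counts.setdefault(speaker, Counter()).update(code_utterance(utterance, codebook))
    return counts

# Invented transcript, for illustration only.
transcript = [
    ("doctor", "You must take this twice a day."),
    ("patient", "Maybe I could try a lower dose first?"),
    ("doctor", "I think you should follow the plan."),
]

for speaker, counter in tally_codes(transcript).items():
    print(speaker, dict(counter))
```

A tally like this only points to where patterns might lie. Each coded utterance still has to be read in its context before any claim about power or identity is made.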

Advantages of Discourse Analysis

Discourse analysis has several advantages as a methodological approach. Here are some of the main advantages:

- Provides a detailed understanding of language use: Discourse analysis allows for a detailed and nuanced understanding of language use in specific social contexts. It enables researchers to identify patterns and themes in language use, and to understand how language constructs social reality.

- Emphasizes the importance of context: Discourse analysis emphasizes the importance of context in shaping language use. By taking into account the social and cultural factors that shape communication, it provides a more complete understanding of language use than approaches that examine language in isolation.

- Allows for an examination of power relations: Discourse analysis enables researchers to examine power relations and how language use reinforces or challenges power imbalances in society. By identifying problematic language use, discourse analysis can contribute to efforts to promote social justice and equality.

- Provides insights for social change: By identifying problematic language use or power imbalances, discourse analysis can inform efforts to challenge social norms and promote more equitable and inclusive communication.

- Multidisciplinary approach: Discourse analysis draws on theories and methodologies from a range of disciplines, including linguistics, anthropology, sociology, and psychology. This multidisciplinary approach allows for a more holistic understanding of language use in social contexts.

Limitations of Discourse Analysis

Some limitations of discourse analysis are as follows:

- Time-consuming and resource-intensive: Collecting and transcribing language data takes considerable time, and analyzing the data requires careful attention to detail and a significant investment of resources.

- Limited generalizability: Discourse analysis is often focused on a particular social context or community, and therefore the findings may not be easily generalized to other contexts or populations. This means that the insights gained from discourse analysis may have limited applicability beyond the specific context being studied.

- Interpretive nature: Discourse analysis is an interpretive approach, meaning that it relies on the interpretation of the researcher to identify patterns and themes in language use. This subjectivity can be a limitation, as different researchers may interpret language data differently.

- Limited quantitative analysis: Discourse analysis tends to focus on qualitative analysis of language data, which can limit the ability to draw statistical conclusions or make quantitative comparisons across different language uses or contexts.

- Ethical considerations: Discourse analysis may involve the collection and analysis of sensitive language data, such as language related to trauma or marginalization. Researchers must carefully consider the ethical implications of collecting and analyzing this type of data, and ensure that the privacy and confidentiality of participants are protected.
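
The concern that different researchers may interpret the same data differently is sometimes checked by having two people code the same segments and measuring how far they agree. The sketch below computes Cohen's kappa, one common agreement statistic. The text above does not prescribe this check, and the code labels and segments are invented for illustration.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one code per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of segments.")
    n = len(coder_a)
    # Observed agreement: share of segments given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement, from each coder's own code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used a single identical code throughout
    return (observed - expected) / (1 - expected)

# Invented labels for six segments, for illustration only.
coder_a = ["power", "power", "identity", "identity", "power", "identity"]
coder_b = ["power", "identity", "identity", "identity", "power", "identity"]

print(round(cohens_kappa(coder_a, coder_b), 3))
```

A value near 1 suggests the two coders applied the codes in much the same way, while a value near 0 suggests agreement no better than chance. In either case, disagreements are usually worth discussing rather than simply averaging away.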

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Critical Discourse Analysis | Definition, Guide & Examples

Published on 5 May 2022 by Amy Luo. Revised on 5 December 2022.

Discourse analysis is a research method for studying written or spoken language in relation to its social context. It aims to understand how language is used in real-life situations.

When you do discourse analysis, you might focus on:

- The purposes and effects of different types of language

- Cultural rules and conventions in communication

- How values, beliefs, and assumptions are communicated

- How language use relates to its social, political, and historical context

Discourse analysis is a common qualitative research method in many humanities and social science disciplines, including linguistics, sociology, anthropology, psychology, and cultural studies. It is sometimes also called critical discourse analysis, although that term usually refers to approaches that focus on power and ideology.

What is discourse analysis used for?

Conducting discourse analysis means examining how language functions and how meaning is created in different social contexts. It can be applied to any instance of written or oral language, as well as non-verbal aspects of communication, such as tone and gestures.

Materials that are suitable for discourse analysis include:

- Books, newspapers, and periodicals

- Marketing material, such as brochures and advertisements

- Business and government documents

- Websites, forums, social media posts, and comments

- Interviews and conversations

By analysing these types of discourse, researchers aim to gain an understanding of social groups and how they communicate.

How is discourse analysis different from other methods?

Unlike linguistic approaches that focus only on the rules of language use, discourse analysis emphasises the contextual meaning of language.

It focuses on the social aspects of communication and the ways people use language to achieve specific effects (e.g., to build trust, to create doubt, to evoke emotions, or to manage conflict).

Instead of focusing on smaller units of language, such as sounds, words, or phrases, discourse analysis is used to study larger chunks of language, such as entire conversations, texts, or collections of texts. The selected sources can be analysed on multiple levels.

Discourse analysis is a qualitative and interpretive method of analysing texts (in contrast to more systematic methods like content analysis). You make interpretations based on both the details of the material itself and on contextual knowledge.

How to conduct discourse analysis

There are many different approaches and techniques you can use to conduct discourse analysis, but the steps below outline the basic structure you need to follow.

Step 1: Define the research question and select the content of analysis

To do discourse analysis, you begin with a clearly defined research question. Once you have developed your question, select a range of material that is appropriate to answer it.

Discourse analysis is a method that can be applied both to large volumes of material and to smaller samples, depending on the aims and timescale of your research.

Step 2: Gather information and theory on the context

Next, you must establish the social and historical context in which the material was produced and intended to be received. Gather factual details of when and where the content was created, who the author is, who published it, and whom it was disseminated to.

As well as understanding the real-life context of the discourse, you can also conduct a literature review on the topic and construct a theoretical framework to guide your analysis.

Step 3: Analyse the content for themes and patterns

This step involves closely examining various elements of the material – such as words, sentences, paragraphs, and overall structure – and relating them to attributes, themes, and patterns relevant to your research question.
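
One simple aid for this step is a keyword-in-context listing, which shows every occurrence of a word together with the words around it so that recurring framings are easier to spot. The sketch below is a minimal illustration: the function name, the `window` parameter, and the sample text are assumptions made for the example, not part of any particular method.

```python
import re

def keyword_in_context(text, keyword, window=4):
    """Return (left, match, right) tuples with `window` words of context per hit."""
    # Split into word tokens, keeping apostrophes and hyphens inside words.
    tokens = re.findall(r"[\w'-]+", text)
    hits = []
    for i, token in enumerate(tokens):
        if token.lower() == keyword.lower():
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits

# Invented sample text, for illustration only.
sample = (
    "Scientists debate climate change, the paper said. "
    "Residents called climate change an emergency."
)

for left, match, right in keyword_in_context(sample, "climate", window=2):
    print(f"{left:>20} [{match}] {right}")
```

Reading the lines side by side can suggest which attributes to assign, for example whether an issue is framed as a debate or as an emergency. The interpretation itself still depends on the context gathered in Step 2.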

Step 4: Review your results and draw conclusions

Once you have assigned particular attributes to elements of the material, reflect on your results to examine the function and meaning of the language used. Here, you will consider your analysis in relation to the broader context that you established earlier to draw conclusions that answer your research question.

Cite this Scribbr article

Luo, A. (2022, December 05). Critical Discourse Analysis | Definition, Guide & Examples. Scribbr. Retrieved 2 April 2024, from https://www.scribbr.co.uk/research-methods/discourse-analysis-explained/

19 Content Analysis

Lindsay Prior, School of Sociology, Social Policy, and Social Work, Queen's University

- Published: 02 September 2020

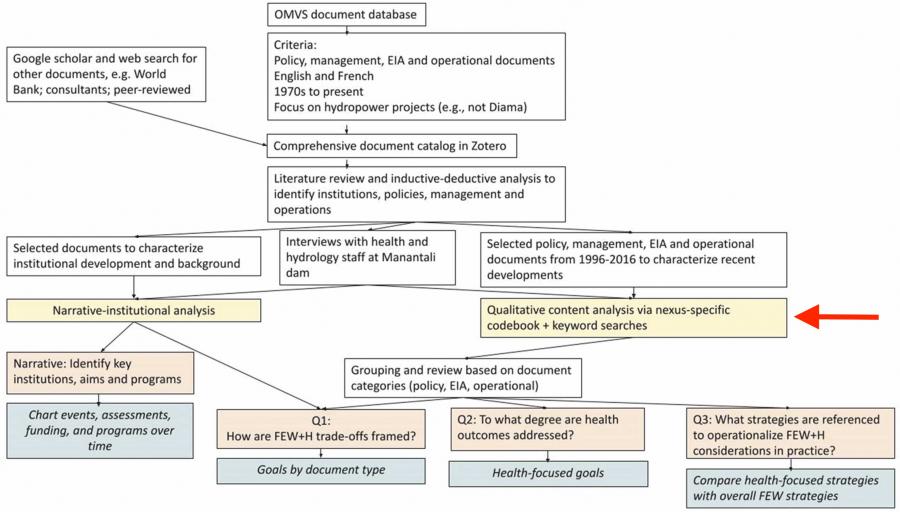

In this chapter, the focus is on ways in which content analysis can be used to investigate and describe interview and textual data. The chapter opens with a contextualization of the method and then proceeds to an examination of the role of content analysis in relation to both quantitative and qualitative modes of social research. Following the introductory sections, four kinds of data are subjected to content analysis. These include data derived from a sample of qualitative interviews (N = 54), textual data derived from a sample of health policy documents (N = 6), data derived from a single interview relating to a “case” of traumatic brain injury, and data gathered from fifty-four abstracts of academic papers on the topic of “well-being.” Using a distinctive and somewhat novel style of content analysis that calls on the notion of semantic networks, the chapter shows how the method can be used either independently or in conjunction with other forms of inquiry (including various styles of discourse analysis) to analyze data and also how it can be used to verify and underpin claims that arise from analysis. The chapter ends with an overview of the different ways in which the study of “content” (especially the study of document content) can be positioned in social scientific research projects.

What Is Content Analysis?

In his 1952 text on the subject of content analysis, Bernard Berelson traced the origins of the method to communication research and then listed what he called six distinguishing features of the approach. As one might expect, the six defining features reflect the concerns of social science as taught in the 1950s, an age in which the calls for an “objective,” “systematic,” and “quantitative” approach to the study of communication data were first heard. The reference to the field of “communication” was nothing less than a reflection of a substantive social scientific interest over the previous decades in what was called public opinion and specifically attempts to understand why and how a potential source of critical, rational judgment on political leaders (i.e., the views of the public) could be turned into something to be manipulated by dictators and demagogues. In such a context, it is perhaps not so surprising that in one of the more popular research methods texts of the decade, the terms content analysis and communication analysis are used interchangeably (see Goode & Hatt, 1952, p. 325).

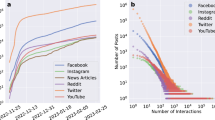

Academic fashions and interests naturally change with available technology, and these days we are more likely to focus on the individualization of communications through Twitter and the like, rather than on mass newspaper readership or mass radio audiences, yet the prevailing discourse on content analysis has remained much the same as it was in Berelson’s day. Thus, Neuendorf (2002), for example, continued to define content analysis as “the systematic, objective, quantitative analysis of message characteristics” (p. 1). Clearly, the centrality of communication as a basis for understanding and using content analysis continues to hold, but in this chapter I will try to show that, rather than locate the use of content analysis in disembodied “messages” and distantiated “media,” we would do better to focus on the fact that communication is a building block of social life itself and not merely a system of messages that are transmitted, in whatever form, from sender to receiver. To put that statement in another guise, we must note that communicative action (to use the phraseology of Habermas, 1987) rests at the very base of the lifeworld, and one very important way of coming to grips with that world is to study the content of what people say and write in the course of their everyday lives.

My aim is to demonstrate various ways in which content analysis (henceforth CTA) can be used and developed to analyze social scientific data as derived from interviews and documents. It is not my intention to cover the history of CTA or to venture into forms of literary analysis or to demonstrate each and every technique that has ever been deployed by content analysts. (Many of the standard textbooks deal with those kinds of issues much more fully than is possible here. See, for example, Babbie, 2013; Berelson, 1952; Bryman, 2008; Krippendorff, 2004; Neuendorf, 2002; and Weber, 1990.) Instead, I seek to recontextualize the use of the method in a framework of network thinking and to link the use of CTA to specific problems of data analysis. As will become evident, my exposition of the method is grounded in real-world problems. Those problems are drawn from my own research projects and tend to reflect my academic interests, which are almost entirely related to the analysis of the ways in which people talk and write about aspects of health, illness, and disease. However, lest the reader be deterred from going any further, I should emphasize that the substantive issues that I elect to examine are secondary if not tertiary to my main objective, which is to demonstrate how CTA can be integrated into a range of research designs and add depth and rigor to the analysis of interview and inscription data. To that end, in the next section I aim to clear our path to analysis by dealing with some issues that touch on the general position of CTA in the research armory, especially its location in the schism that has developed between quantitative and qualitative modes of inquiry.

The Methodological Context of Content Analysis

Content analysis is usually associated with the study of inscription contained in published reports, newspapers, adverts, books, web pages, journals, and other forms of documentation. Hence, nearly all of Berelson’s (1952) illustrations and references to the method relate to the analysis of written records of some kind, and where speech is mentioned, it is almost always in the form of broadcast and published political speeches (such as State of the Union addresses). This association of content analysis with text and documentation is further underlined in modern textbook discussions of the method. Thus, Bryman (2008), for example, defined CTA as “an approach to the analysis of documents and texts, that seek to quantify content in terms of pre-determined categories” (2008, p. 274, emphasis in original), while Babbie (2013) stated that CTA is “the study of recorded human communications” (2013, p. 295), and Weber referred to it as a method to make “valid inferences from text” (1990, p. 9). It is clear then that CTA is viewed as a text-based method of analysis, though extensions of the method to other forms of inscriptional material are also referred to in some discussions. Thus, Neuendorf (2002), for example, rightly referred to analyses of film and television images as legitimate fields for the deployment of CTA and by implication analyses of still (as well as moving) images such as photographs and billboard adverts. Oddly, in the traditional or standard paradigm of CTA, the method is solely used to capture the “message” of a text or speech; it is not used for the analysis of a recipient’s response to or understanding of the message (which is normally accessed via interview data and analyzed in other and often less rigorous ways; see, e.g., Merton, 1968). So, in this chapter I suggest that we can take things at least one small step further by using CTA to analyze speech (especially interview data) as well as text.
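The textbook idea of quantifying content “in terms of pre-determined categories” can be illustrated with a minimal sketch. The coding scheme, the category names, the indicator words, and the function name `code_text` below are all hypothetical and chosen only for illustration; they are not taken from the chapter or from any of the cited texts, and a real coding frame would be developed and tested far more carefully.

```python
import re

# Hypothetical coding scheme: each category is defined in advance
# by a small set of indicator words (illustrative only).
CATEGORIES = {
    "illness": {"illness", "disease", "pain", "symptom", "symptoms"},
    "care": {"doctor", "nurse", "hospital", "treatment"},
}


def code_text(text, categories=CATEGORIES):
    """Count how many word tokens in `text` fall into each category."""
    # Lowercase the text and split it into simple word tokens.
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = {name: 0 for name in categories}
    for token in tokens:
        for name, words in categories.items():
            if token in words:
                counts[name] += 1
    return counts


sample = (
    "The doctor said the pain was a symptom of the disease, "
    "so I went to hospital."
)
print(code_text(sample))
```

The same function could be applied to an interview transcript as readily as to a published document, which is the extension to speech suggested above.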

Standard textbook discussions of CTA usually refer to it as a “nonreactive” or “unobtrusive” method of investigation (see, e.g., Babbie, 2013, p. 294), and a large part of the reason for that designation is its focus on already existing text (i.e., text gathered without intrusion into a research setting). More important, however (and to underline the obvious), CTA is primarily a method of analysis rather than of data collection. Its use, therefore, must be integrated into wider frames of research design that embrace systematic forms of data collection as well as forms of data analysis. Thus, routine strategies for sampling data are often required in designs that call on CTA as a method of analysis. These strategies can be built around random sampling methods or even techniques of “theoretical sampling” (Glaser & Strauss, 1967) so as to identify a suitable range of materials for CTA. Content analysis can also be linked to styles of ethnographic inquiry and to the use of various purposive or nonrandom sampling techniques. For an example, see Altheide (1987).
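As a minimal sketch of the random sampling option mentioned above, the following draws a simple random sample of documents from a sampling frame. The document identifiers, the sample size, the seed, and the function name `draw_sample` are hypothetical and serve only to illustrate the step; theoretical or purposive sampling would follow a different, analyst-driven logic that is not shown here.

```python
import random


def draw_sample(frame, k, seed=0):
    """Return a reproducible simple random sample of k items from `frame`."""
    # A dedicated generator with a fixed seed makes the draw repeatable.
    rng = random.Random(seed)
    return rng.sample(list(frame), k)


# Hypothetical sampling frame of 200 document identifiers.
frame = [f"doc_{i:03d}" for i in range(1, 201)]
selected = draw_sample(frame, k=20, seed=42)
print(len(selected), "documents selected for content analysis")
```

Recording the seed alongside the sampling frame allows the same selection of materials to be reconstructed later.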

The use of CTA in a research design does not preclude the use of other forms of analysis in the same study, because it is a technique that can be deployed in parallel with other methods or in sequence with them. For example, and as I will demonstrate in the following sections, one might use CTA as a preliminary analytical strategy to get a grip on the available data before moving into specific forms of discourse analysis. In this respect, it can be useful to think of using CTA in, say, the frame of a priority/sequence model of research design as described by Morgan (1998).