Front Med (Lausanne)

Rethinking clinical decision-making to improve clinical reasoning

Salvatore Corrao

1 Department of Internal Medicine, National Relevance and High Specialization Hospital Trust ARNAS Civico, Palermo, Italy

2 Dipartimento di Promozione della Salute Materno Infantile, Medicina Interna e Specialistica di Eccellenza “G. D’Alessandro” (PROMISE), University of Palermo, Palermo, Italy

Christiano Argano

Associated data

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Improving clinical reasoning techniques is the right way to facilitate decision-making from prognostic, diagnostic, and therapeutic points of view. Doing so, however, requires filling knowledge gaps through study and growing experience, and understanding some cognitive aspects of reasoning, so as to raise awareness of thinking mechanisms and avoid cognitive errors through appropriate educational training. This article examines clinical approaches and educational gaps in the training of medical students and young doctors. The authors explore the core elements of clinical reasoning, including metacognition, reasoning errors and cognitive biases, reasoning strategies, and ways to improve decision-making. The article addresses the dual-process theory of thought and the more recent Default Mode Network (DMN) theory. The reader may consider the article a first-level guide to learning how to think, not what to think, bearing in mind that this synthesis results from years of study and reasoning in clinical practice and educational settings.

Introduction

Clinical reasoning is based on complex and multifaceted cognitive processes, and the level of cognition is perhaps the most relevant factor affecting the physician's clinical reasoning. These topics have attracted considerable interest in recent years ( 1 , 2 ). According to Croskerry ( 3 ) and Croskerry and Norman ( 4 ), over 40 affective and cognitive biases may affect clinical reasoning. Moreover, both the processes and the subject matter are complex.

In medicine, there are thousands of known diagnoses, each with a different degree of complexity. Moreover, in line with Hammond's view, decisions made under irreducible uncertainty will inevitably fail at times ( 5 ). Any mistake or failure in the diagnostic process leads to a delayed diagnosis, a misdiagnosis, or a missed diagnosis. The context in which a medical decision is made is highly relevant to both the reasoning process and its outcome ( 6 ).

More recently, there has been renewed interest in diagnostic reasoning, particularly in diagnostic errors. Many researchers have delved into the processes underpinning cognition, developing a new universal model of reasoning and decision-making: the dual-process theory.

This theory applies readily to medical decision-making and provides a comprehensive framework for understanding the range of theoretical approaches considered previously. The model has critical practical applications for medical decision-making and may be used as a model for teaching decision reasoning. Against this background, this manuscript should be considered a first-level guide to understanding how to think, not what to think, deepening the understanding of clinical decision-making and providing tools for improving clinical reasoning.

Too much attention to the tip of the iceberg

The New England Journal of Medicine recently published a thought-provoking article ( 7 ) in its "Perspective" section, on which we should all reflect. The title is "At baseline" (the basic condition). Dr. Bergl, from the Department of Medicine of the Medical College of Wisconsin (Milwaukee), observed that his trainees no longer wonder about the underlying pathology but focus solely on solving the acute problem. He wrote that, for many internal medicine teams, the question is not whether but to what extent we should juggle the treatment of patients' critical health problems with care for their coexisting chronic conditions. Doctors are under heavy pressure to discharge, so they move patients to the next stage of treatment without asking what decompensated the clinical condition. If the chronic condition, or baseline, is not the fundamental goal of our work, our juggling is highly inconsistent, because we are working toward an intermediate outcome, curing only the decompensation phase of a disease. Dr. Bergl raises another essential matter: perhaps equally disturbing, by adopting a collective "at baseline" mentality, we unintentionally create a group of doctors who prioritize productivity over developing critical skills and curiosity. We agree, and we would add that empathy and patience are two other crucial elements in the training of future internists. Yet how much do we stimulate all these qualities? Are they not all part of the cultural background necessary for a correct approach to the patient, proper clinical reasoning, and balanced communication skills?

Conversely, a chronic baseline condition is not always the real reason for an acute hospitalization. This superficiality stems from the lack of a careful approach to the baseline and of clinical reasoning focused on the patient. We focus too much on our students' practical skills and on the amount of knowledge they must learn, whereas we do not teach how to think or the cognitive mechanisms of clinical reasoning.

Time to rethink the way of thinking and teaching courses

Back in 1910, John Dewey wrote in his book “How We Think” ( 8 ), “The aim of education should be to teach us rather how to think than what to think—rather improve our minds to enable us to think for ourselves than to load the memory with the thoughts of other men.”

Clinical reasoning concerns how to think and how to reach the best decisions in clinical practice ( 9 ). The core elements of clinical reasoning ( 10 ) can be summarized as follows:

1. Evidence-based skills,
2. Interpretation and use of diagnostic tests,
3. Understanding cognitive biases,
4. Human factors,
5. Metacognition (thinking about thinking), and
6. Patient-centered evidence-based medicine.

All these core elements are crucial for sound clinical reasoning. Each requires an appropriate learning path and should be used in combination with the best thinking strategies ( Table 1 ). Reasoning strategies allow us to combine and synthesize diverse data into one or more diagnostic hypotheses, weigh the complex trade-off between the benefits and risks of tests and treatments, and formulate plans for patient management ( 10 ).

Table 1. Set of reasoning strategies (see the text for explanations).

However, of the core elements of clinical reasoning listed above, two are often missing from the learning paths of students and trainees: metacognition and understanding cognitive biases.

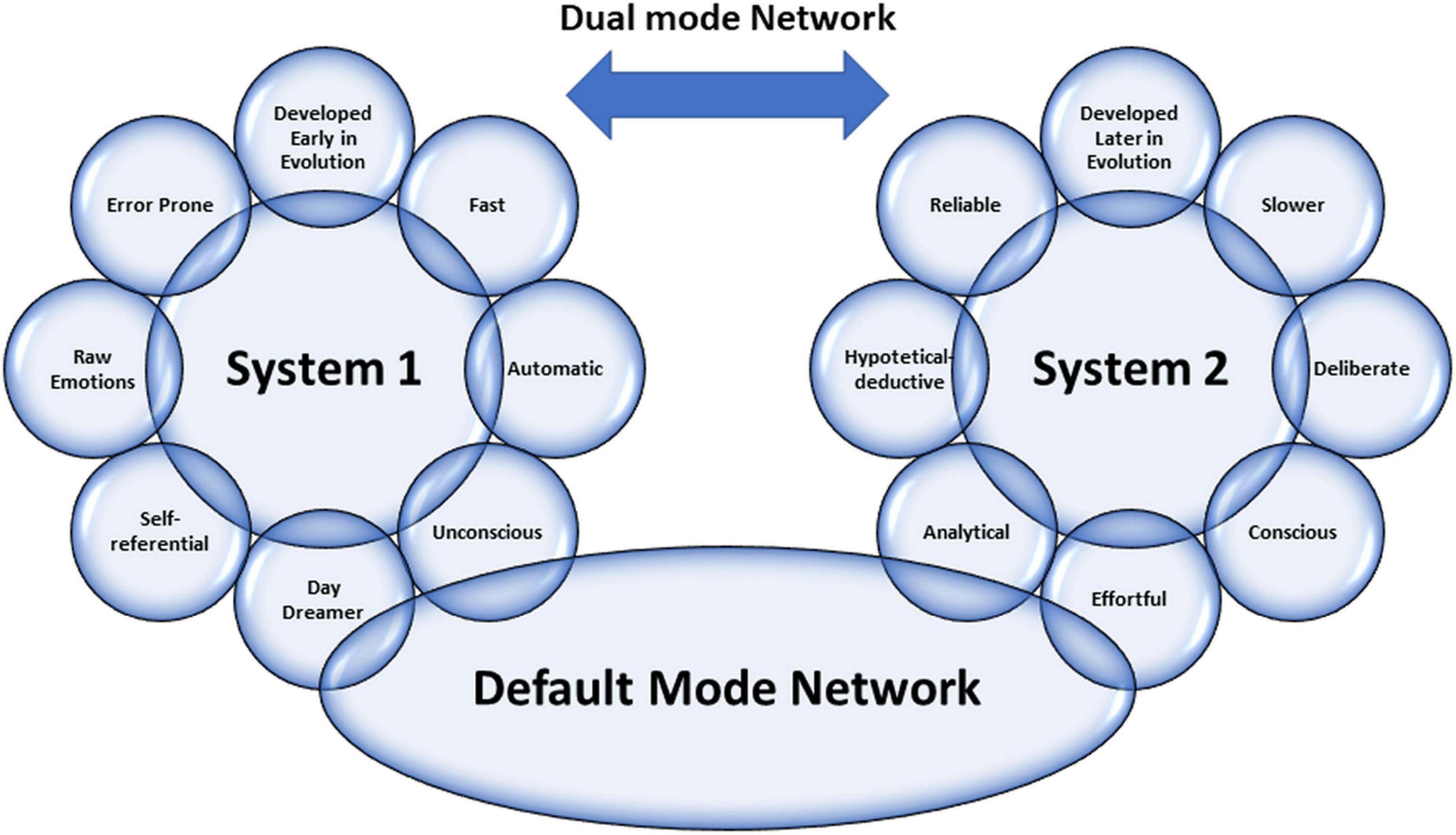

Metacognition

Cognitive psychology, which investigates human thinking, describes two distinct mental processes in the human brain that influence reasoning and decision-making. The first is an ancient mechanism of thought, shared with other animals, in which speed matters more than accuracy; this kind of thinking is fast and intuitive and relies on pattern recognition and automated processes. The second is a product of evolution, particularly in human beings: an analytical, hypothetico-deductive way of thinking that is slow and controlled but highly demanding. Today, the psychology of thinking calls this idea "the dual-process theory of thought" ( 11 – 14 ). Daniel Kahneman, awarded the Nobel Prize in Economic Sciences, extensively studied the dichotomy between the two modes of thought, calling them fast and slow thinking. "System 1" is fast, instinctive, and emotional; "System 2" is slower, more deliberative, and more logical ( 15 ). Different cerebral areas are involved: "System 1" includes the dorsomedial prefrontal cortex, the pregenual medial prefrontal cortex, and the ventromedial prefrontal cortex; "System 2" encompasses the dorsolateral prefrontal cortex. Glucose utilization is very high when System 2 is engaged ( 16 ). System 1 is the predominant mode of thought: no one could live permanently in a deliberate, slow, effortful way. Over time, driving a car, eating, and many other activities become automatic and subconscious.

A recent, insightful review by Gronchi and Giovannelli ( 17 ) explores these issues. Typically, when a task requires sustained attention and mental effort, every individual is subject to a phenomenon called "ego-depletion": after such forced effort, fewer cognitive resources are available to activate slow thinking, so the person is less able to exert self-control ( 18 , 19 ). In the same way, much clinical decision-making becomes intuitive rather than analytical, a phenomenon strongly affected by individual differences ( 20 , 21 ). Experimental evidence from functional magnetic resonance imaging and positron emission tomography studies shows that the brain is spontaneously active in a "resting state" during periods of "passivity" ( 22 – 25 ). The brain regions involved include the medial prefrontal cortex, the posterior cingulate cortex, the inferior parietal lobule, the lateral temporal cortex, the dorsal medial prefrontal cortex, and the hippocampal formation ( 26 ). Findings of high metabolic activity in these regions at rest ( 27 ) constituted the first clear evidence of a cohesive default mode in the brain ( 28 ), leading to the now widely accepted concept of the Default Mode Network (DMN), which comprises these same regions. The DMN shows lower activity during goal-directed cognition and higher activity when an individual is awake and engaged in mental processes requiring little externally directed attention. This is the neural basis of spontaneous cognition ( 26 ), which is responsible for thinking with internal representations. This paradigm has fostered the idea of stimulus-independent thoughts (SITs), defined by Buckner et al. ( 26 ) as "thoughts about something other than events originating from the environment," which are covert and not directed toward the performance of a specific task. Very recently, the role of the DMN was highlighted in automatic behavior (the rapid selection of a response to a particular and predictable context) ( 29 ), as opposed to controlled decision-making, suggesting that the DMN plays a role in the autopilot mode of brain functioning.

In light of these premises, anyone can pause to analyze what they are doing, improving self-control to avoid "ego-depletion." Thus, one can actively switch between one type of thinking and the other, and the ability to make this switch improves the physician's performance. In addition, a physician can be trained to understand the ways of thinking and which type is engaged in various situations. In this way, experience and methodological knowledge can strengthen Systems 1 and 2 and the way they interact, helping to avoid cognitive errors. Figure 1 summarizes the concepts above concerning the Dual Mode Network and its relationship with the DMN.

Figure 1. Graphical representation of the characteristics of the Dual Mode Network, including the relationship between the two systems through the Default Mode Network (see the text for explanations).

Emotional intelligence is another crucial factor in strengthening clinical reasoning toward the best decision for the individual patient. Emotional intelligence is the ability to recognize one's own and others' emotions, label different feelings appropriately, and use emotional information to guide thinking and behavior, adjust emotions, create empathy, adapt to environments, and achieve goals ( 30 ). According to Fuchs' phenomenological account, bodily perception (proprioception) plays a crucial role in understanding others ( 31 ). In this sense, a physician's proprioceptive skills can support the empathic understanding that is elementary for empathy and communication with the patient. In line with Fuchs' view, empathic understanding encompasses a bodily resonance and mediates contextual knowledge about the patient. In medical education, empathy should help to relativize one's singular experience, preventing one's own position from becoming exclusive and moving oneself out of the center of one's own perspective.

Reasoning errors and cognitive biases

Errors in reasoning play a significant role in diagnostic errors and may compromise patient safety and quality of care. A recent review by Norman et al. ( 32 ) examined clinical reasoning errors and how to avoid them. To simplify this complex issue, at least five types of diagnostic errors can be recognized: no-fault errors, system errors, errors due to knowledge gaps, errors due to misinterpretation, and cognitive biases ( 9 ). Setting aside the first type, which comprises errors that are unavoidable for various reasons, we focus here on cognitive biases. They may occur at any stage of the reasoning process and may be linked to both the intuitive and the analytical systems. The most frequent cognitive biases in medicine are anchoring, confirmation bias, premature closure, search satisficing, posterior probability error, outcome bias, and commission bias ( 33 ). Anchoring is latching onto a particular aspect at the initial consultation and then refusing to change one's mind about its importance in the later stages of reasoning. Confirmation bias ignores the evidence against an initial diagnosis. Premature closure leads to a misleading diagnosis by stopping the diagnostic process before all the information has been gathered or verified. Search satisficing blinds the clinician to additional diagnoses once a first diagnosis has been made. Posterior probability error takes a shortcut to the patient's usual diagnosis when a previously recognized clinical presentation recurs. Outcome bias occurs when our desire for a particular outcome alters our judgment (e.g., a surgeon blaming sepsis on pneumonia rather than an anastomotic leak). Finally, commission bias is the tendency toward action rather than inaction, assuming that only good can come from doing something (rather than "watching and waiting"). These biases are merely representative of many others, and biases often work together. For example, in overconfidence bias (the tendency to believe we know more than we do), too much faith is placed in opinion instead of gathered evidence. This bias can be amplified by the anchoring effect, by availability bias (when things are at the forefront of your mind because you have seen several cases recently or have been studying that condition in particular), and finally by commission bias, with disastrous results.

Novice vs. expert approaches

The reasoning strategies used by novices differ from those used by experts ( 34 ). Experts can usually gather useful information with highly effective problem-solving strategies. Heuristics are commonly, and most often successfully, used. Experts have a stored bank of illness scripts against which to compare and contrast the current case, more often using type 1 thinking, with much better results than novices. Novices have little experience with the problems they face, have not yet had time to build a bank of illness scripts, and have no memories of previous similar cases or of the actions taken in them. Therefore, their search strategies are weak, slow, and ponderous, and their heuristics are poor and more often unsuccessful. They consider a broader range of diagnostic possibilities and take longer to select approaches to discriminate among them. A novice needs specific knowledge and specific experience to become an expert; in our opinion, they also need special training in the different ways of thinking. Patterns can also be studied per se, so it should be possible to guide the growth of knowledge for both fast and slow thinking.

Learning by osmosis, gradually gaining experience while observing experts' reasoning, has traditionally been the way to move the novice toward expert capabilities. However, explicit teaching of clinical reasoning could likely make this process quicker and more effective. In this sense, there is an increased need for training and clinical knowledge, along with the skill to apply the acquired knowledge. Students should learn disease pathophysiology, treatment concepts, and interdisciplinary team communication, developing clinical decision-making through case-series-derived knowledge that combines associative and procedural learning processes, as in the "Vienna Summer School on Oncology" ( 35 ).

Moreover, communication skills training needs refinement. Improving communication skills training for medical students and physicians should be a primary goal of universities. Indeed, adequate communication leads to a correct diagnosis with 76% accuracy ( 36 ). The main challenge for students and physicians is responding to patients' individual needs in an empathic and appreciated way. In this regard, qualitative approaches could help, such as semi-structured or structured face-to-face in-depth interviews, together with e-learning platforms that foster interdisciplinary learning by developing and integrating expertise in clinical reasoning and decision-making in each area. These could be effective tools for developing clinical reasoning and decision-making competencies and for acquiring the communication skills needed to manage the relationship with the patient ( 37 – 40 ).

Clinical reasoning ways

Clinical reasoning is complex: it often requires different mental processes operating simultaneously during the same clinical encounter, and different procedures for different situations. The dual-process theory describes how humans have two distinct approaches to decision-making ( 41 ). When one uses heuristics, fast thinking (System 1) is engaged ( 42 ). However, complex cases need slow analytical thinking or the involvement of both systems ( 15 , 43 , 44 ). Slow thinking can use different modes of reasoning: deductive, hypothetico-deductive, inductive, abductive, probabilistic, rule-based/categorical/deterministic, and causal reasoning ( 9 ). We think that abductive and causal reasoning need further explanation. Abductive reasoning is necessary when neither a deductive argument (from a general assumption to a particular conclusion) nor an inductive one (the opposite of deduction) can be made.

In the real world, we often have information and must work backward to its likely cause. We ask ourselves: what is the most plausible answer? What theory best explains this information? Abduction is precisely the process of choosing the hypothesis that would best explain the available evidence. Causal reasoning, on the other hand, uses knowledge of the medical sciences to provide additional diagnostic information. For example, in a patient with dyspnea, if heart failure is considered as the cause, a raised BNP would be expected, as would a dilated vena cava. In the absence of these confirmatory findings, other diagnostic possibilities must be considered (e.g., pneumonia). Causal reasoning does not produce hypotheses but is typically used to confirm or refute hypotheses generated by other reasoning strategies.
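The difference between abduction (choosing the best explanation) and causal reasoning (checking a hypothesis's expected consequences) can be illustrated with a toy Python sketch. It assumes, purely for illustration, that each hypothesis is reduced to a set of expected findings; the scoring rule (+1 for each expected finding that is present, -1 for each expected finding looked for and found absent) and the findings listed for pneumonia are hypothetical simplifications, not a validated clinical method. Only the heart failure findings (dyspnea, raised BNP, dilated vena cava) come from the example above.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set


@dataclass(frozen=True)
class Hypothesis:
    name: str
    expected: FrozenSet[str]  # findings that should be present if the hypothesis is true


def explanatory_score(h: Hypothesis, present: Set[str], absent: Set[str]) -> int:
    # Hypothetical rule: reward expected findings that are present,
    # penalize expected findings that were looked for and found absent.
    return len(h.expected & present) - len(h.expected & absent)


def best_explanation(hypotheses: Iterable[Hypothesis], present: Set[str], absent: Set[str]) -> Hypothesis:
    # Abduction: choose the hypothesis that best explains the available evidence.
    return max(hypotheses, key=lambda h: explanatory_score(h, present, absent))


def refuting_findings(h: Hypothesis, absent: Set[str]) -> Set[str]:
    # Causal check: expected consequences of h that are missing count against it.
    return set(h.expected & absent)


HEART_FAILURE = Hypothesis("heart failure", frozenset({"dyspnea", "raised BNP", "dilated vena cava"}))
# Illustrative findings only; not a clinical definition of pneumonia.
PNEUMONIA = Hypothesis("pneumonia", frozenset({"dyspnea", "fever", "consolidation"}))

present = {"dyspnea", "fever"}
absent = {"raised BNP", "dilated vena cava"}

if __name__ == "__main__":
    best = best_explanation([HEART_FAILURE, PNEUMONIA], present, absent)
    print(best.name)
    print(sorted(refuting_findings(HEART_FAILURE, absent)))
```

With these inputs the sketch selects pneumonia, because both findings that heart failure predicts are absent; the causal check returns exactly those missing findings, which is the information that argues against the first hypothesis.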

Hypothesis generation and modification, using deduction, induction/abduction, rule-based or causal reasoning, or mental shortcuts (heuristics and rules of thumb), is the cognitive process for making a diagnosis ( 9 ). Clinicians develop a hypothesis, which may be specific or general, relating a particular situation to their knowledge and experience. This process is referred to as generating a differential diagnosis. How we produce a differential diagnosis from memory is unclear. The hypotheses chosen may be based on likelihood but might also reflect the need to rule out the worst-case scenario, although probability should always be considered.

Given the complexity of the processes involved, there are numerous causes of failure in clinical reasoning. Failures can occur in any type of reasoning and at any stage of the process ( 33 ). We must be aware of subconscious errors in our thinking. Cognitive biases are subconscious deviations in judgment leading to perceptual distortion, inaccurate assessment, and misleading interpretation. From an evolutionary point of view, they developed because speed is often more important than accuracy. Biases arise from information-processing heuristics, the brain's limited capacity to process information, social influence, and emotional and moral motivations.

Heuristics are mental shortcuts, and they are not all bad. They are experience-based techniques for decision-making. Sometimes they lead to cognitive biases (see above), but they are also essential mental processes, expressed by the expert intuition that plays a vital role in clinical practice. Intuition is a heuristic that derives naturally and directly from experiences unconsciously linked to form patterns. Pattern recognition is precisely such a quick shortcut, commonly used by experts. Alternatively, patterns can be created through deliberate, structured study that accumulates knowledge. The heuristic of ruling out the worst-case scenario is a forcing function that commits the clinician to consider the worst possible illness that might explain a particular clinical presentation and to take steps to ensure it has been effectively excluded. The heuristic of considering the least probable diagnoses is a helpful approach to uncommon clinical pictures, prompting the clinician to think about and search for a rare, unrecognized condition. Clinical guidelines, scores, and decision rules function as externally constructed heuristics, usually intended to ensure the best evidence for the diagnosis and treatment of patients.
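A minimal Python sketch of how the worst-case heuristic can act as a forcing function on a differential ranked by likelihood. The function name `working_differential`, the cut-off `k`, the placeholder diagnosis labels, and the probabilities are all hypothetical, chosen only to show the mechanism; they are not clinical estimates.

```python
from typing import Dict, List, Set


def working_differential(estimates: Dict[str, float], k: int, must_not_miss: Set[str]) -> List[str]:
    """Keep the k most likely diagnoses, then force in any must-not-miss
    diagnosis that likelihood alone would have dropped."""
    ranked = sorted(estimates, key=estimates.get, reverse=True)
    shortlist = ranked[:k]
    # Forcing function: worst-case diagnoses stay on the list until excluded.
    shortlist += [dx for dx in ranked[k:] if dx in must_not_miss]
    return shortlist


# Illustrative placeholder labels and numbers (not clinical probabilities).
estimates = {
    "common condition A": 0.60,
    "common condition B": 0.25,
    "common condition C": 0.10,
    "dangerous condition D": 0.05,
}

if __name__ == "__main__":
    print(working_differential(estimates, k=2, must_not_miss=set()))
    print(working_differential(estimates, k=2, must_not_miss={"dangerous condition D"}))
```

Ranking by likelihood alone keeps only A and B; flagging D as must-not-miss keeps it on the list despite its low estimate, while the ranking itself still reflects probability.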

Hence, heuristics are helpful mental shortcuts, but the same mechanisms may lead to errors. The fast-and-frugal tree and the take-the-best heuristic are two formal models for decision-making under uncertainty ( 45 ).
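Both formal models can be written in a few lines. The Python sketch below follows their usual textbook description: take-the-best compares two options cue by cue, in order of cue validity, and stops at the first cue that discriminates; a fast-and-frugal tree checks cues one at a time, each with an immediate exit. The cue names, cue order, and the "escalate"/"routine" decisions are hypothetical placeholders, not a validated clinical rule.

```python
from typing import Dict, Optional, Sequence, Tuple

Case = Dict[str, bool]  # cue name -> whether the cue is present


def take_the_best(a: Case, b: Case, cues_by_validity: Sequence[str]) -> Optional[str]:
    """Return 'a' or 'b' according to the first cue that discriminates
    between them; None means no cue discriminates and one must guess."""
    for cue in cues_by_validity:
        if a[cue] != b[cue]:
            return "a" if a[cue] else "b"
    return None


# A node is (cue, value that triggers an exit, decision taken on exit).
Node = Tuple[str, bool, str]


def fast_and_frugal_tree(case: Case, nodes: Sequence[Node], default: str) -> str:
    """Check cues in order and exit at the first one that matches its exit
    value; if none does, the last cue's other branch gives the default."""
    for cue, exit_value, decision in nodes:
        if case[cue] == exit_value:
            return decision
    return default


# Hypothetical three-cue tree.
TREE = [
    ("red_flag_sign", True, "escalate"),
    ("key_symptom", False, "routine"),
    ("other_risk_factor", True, "escalate"),
]

if __name__ == "__main__":
    case = {"red_flag_sign": False, "key_symptom": True, "other_risk_factor": False}
    print(fast_and_frugal_tree(case, TREE, default="routine"))
    print(take_the_best({"c1": True, "c2": False}, {"c1": True, "c2": True}, ["c1", "c2"]))
```

Both procedures deliberately ignore most of the available information once a cue decides, which is what makes them fast and frugal, and also why they can fail when the cue order does not suit the case at hand.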

In recent times, clinicians have faced dramatic changes in the pattern of patients acutely admitted to hospital wards. Patients are increasingly older and have more comorbidities, rare diseases are frequent when taken as a whole ( 46 ), new technologies are growing rapidly, and the sustainability of the healthcare system is an increasingly important problem. In addition, uncommon clinical pictures represent a challenge for clinicians ( 47 – 50 ). In our opinion, it is time to reassert clinical reasoning as a crucial way to deal with all these complex matters. First, we must ask ourselves whether we have lost the teachings of the ancient masters. Second, we have to rethink medical school and training courses. In this context, cognitive debiasing is needed to become a well-calibrated clinician. Fundamental tools are a comprehensive knowledge of the nature and extent of biases, together with the study of cognitive processes, including the interaction between fast and slow thinking. Cognitive debiasing requires the development of good mindware and the awareness that no single debiasing strategy will work for all biases. Finally, debiasing is generally a complicated process that requires lifelong maintenance.

We must remember that medicine is an art that operates in the field of science and must cope with uncertainty. Managing uncertainty is the skill we have to develop to counter the excess of confidence that can lead to error. Sound clinical reasoning is directly linked to patient safety and quality of care.

Data availability statement

Author contributions

SC and CA drafted the work and revised it critically. Both authors have approved the submission of the manuscript.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Clinical problem solving and diagnostic decision making: selective review of the cognitive literature

This article has a correction. Please see:

- Clinical problem solving and diagnostic decision making: selective review of the cognitive literature - November 02, 2006

- Arthur S Elstein, professor (aelstein{at}uic.edu)

- Alan Schwarz, assistant professor of clinical decision making

- Department of Medical Education, University of Illinois College of Medicine, Chicago, IL 60612-7309, USA

- Correspondence to: A S Elstein

This is the fourth in a series of five articles

This article reviews our current understanding of the cognitive processes involved in diagnostic reasoning in clinical medicine. It describes and analyses the psychological processes employed in identifying and solving diagnostic problems and reviews errors and pitfalls in diagnostic reasoning in the light of two particularly influential approaches: problem solving 1 , 2 , 3 and decision making. 4 , 5 , 6 , 7 , 8 Problem solving research was initially aimed at describing reasoning by expert physicians, to improve instruction of medical students and house officers. Psychological decision research has been influenced from the start by statistical models of reasoning under uncertainty, and has concentrated on identifying departures from these standards.

Summary points

Problem solving and decision making are two paradigms for psychological research on clinical reasoning, each with its own assumptions and methods

The choice of strategy for diagnostic problem solving depends on the perceived difficulty of the case and on knowledge of content as well as strategy

Final conclusions should depend both on prior belief and strength of the evidence

Conclusions reached by Bayes's theorem and clinical intuition may conflict

Because of cognitive limitations, systematic biases and errors result from employing simpler rather than more complex cognitive strategies

Evidence based medicine applies decision theory to clinical diagnosis

Problem solving

Diagnosis as selecting a hypothesis.

The earliest psychological formulation viewed diagnostic reasoning as a process of testing hypotheses. Solutions to difficult diagnostic problems were found by generating a limited number of hypotheses early in the diagnostic process and using them to guide subsequent collection of data. 1 Each hypothesis can be used to predict what additional findings ought to be present if it were true, and the diagnostic process is a guided search for these findings. Experienced physicians form hypotheses and their diagnostic plan rapidly, and the quality of their hypotheses is higher than that of novices. Novices struggle to develop a plan and some have difficulty moving beyond collection of data to considering possibilities.
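The idea that a few early hypotheses guide subsequent data collection can be sketched in Python under a strong simplifying assumption: each hypothesis is reduced to the set of findings it predicts, and an observation simply discards the hypotheses it contradicts. The helper names, the hypotheses `A` to `D`, and the findings `x`, `y`, `z` are hypothetical; real diagnostic reasoning is probabilistic rather than all-or-none.

```python
from typing import Dict, Optional, Set

Hypotheses = Dict[str, Set[str]]  # hypothesis -> findings it predicts


def next_finding(hyps: Hypotheses, checked: Set[str]) -> Optional[str]:
    """Pick the unchecked finding that splits the active hypotheses most evenly."""
    n = len(hyps)

    def split(finding: str) -> int:
        k = sum(finding in predicted for predicted in hyps.values())
        return min(k, n - k)

    candidates = sorted(set().union(*hyps.values()) - checked)
    best = max(candidates, key=split, default=None)
    # A finding predicted by all or none of the hypotheses cannot discriminate.
    if best is None or split(best) == 0:
        return None
    return best


def update(hyps: Hypotheses, finding: str, present: bool) -> Hypotheses:
    """Keep only the hypotheses whose prediction matches the observation."""
    return {h: p for h, p in hyps.items() if (finding in p) == present}


HYPS = {"A": {"x", "y"}, "B": {"x"}, "C": {"y"}, "D": {"z"}}

if __name__ == "__main__":
    active, checked = HYPS, set()
    observations = {"x": True, "y": False, "z": False}  # hypothetical patient
    while (f := next_finding(active, checked)) is not None:
        checked.add(f)
        active = update(active, f, observations[f])
        print(f, "->", sorted(active))
```

Each step asks for the finding that best separates the hypotheses still in play, so the search is guided by the hypotheses rather than by exhaustive data collection.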

It is possible to collect data thoroughly but nevertheless to ignore, to misunderstand, or to misinterpret some findings, but also possible for a clinician to be too economical in collecting data and yet to interpret accurately what is available. Accuracy and thoroughness are analytically separable.

Pattern recognition or categorisation

Expertise in problem solving varies greatly between individual clinicians and is highly dependent on the clinician's mastery of the particular domain. 9 This finding challenges the hypothetico-deductive model of clinical reasoning, since both successful and unsuccessful diagnosticians use hypothesis testing. It appears that diagnostic accuracy does not depend as much on strategy as on mastery of content. Further, the clinical reasoning of experts in familiar situations frequently does not involve explicit testing of hypotheses. 3 10 , 11 , 12 Their speed, efficiency, and accuracy suggest that they may not even use the same reasoning processes as novices. 11 It is likely that experienced physicians use a hypothetico-deductive strategy only with difficult cases and that clinical reasoning is more a matter of pattern recognition or direct automatic retrieval. What are the patterns? What is retrieved? These questions signal a shift from the study of judgment to the study of the organisation and retrieval of memories.

Problem solving strategies

- Hypothesis testing
- Pattern recognition (categorisation)
  - By specific instances
  - By general prototypes

Viewing diagnosis as the process of assigning a case to a category brings some other issues into clearer view. How is a new case categorised? Two competing answers to this question have been put forward and research evidence supports both. Category assignment can be based on matching the case to a specific instance ("instance based" or "exemplar based" recognition) or to a more abstract prototype. In the former, a new case is categorised by its resemblance to memories of instances previously seen. 3 11 This model is supported by the fact that clinical diagnosis is strongly affected by context (for example, the location of a skin rash on the body) even when the context ought to be irrelevant. 12

The prototype model holds that clinical experience facilitates the construction of mental models, abstractions, or prototypes. 2 13 Several characteristics of experts support this view—for instance, they can better identify the additional findings needed to complete a clinical picture and relate the findings to an overall concept of the case. These features suggest that better diagnosticians have constructed more diversified and abstract sets of semantic relations, a network of links between clinical features and diagnostic categories. 14
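The two accounts of categorisation can be contrasted in a short Python sketch. It assumes, for illustration only, that a case can be represented as a point in a feature space and that similarity is Euclidean distance; the categories `X` and `Y` and their coordinates are invented. The exemplar model assigns a new case to the category of the nearest remembered instance, while the prototype model compares it with the average of each category.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]
Exemplar = Tuple[Vector, str]  # (feature vector, category label)


def classify_by_exemplar(case: Vector, memory: Sequence[Exemplar]) -> str:
    """Instance-based: copy the label of the most similar remembered case."""
    _, label = min(memory, key=lambda ex: math.dist(case, ex[0]))
    return label


def build_prototypes(memory: Sequence[Exemplar]) -> Dict[str, Vector]:
    """Abstract each category into a prototype (mean of its instances)."""
    groups: Dict[str, List[Vector]] = {}
    for vec, label in memory:
        groups.setdefault(label, []).append(vec)
    return {
        label: tuple(sum(coord) / len(coord) for coord in zip(*vecs))
        for label, vecs in groups.items()
    }


def classify_by_prototype(case: Vector, prototypes: Dict[str, Vector]) -> str:
    """Prototype-based: choose the category whose prototype is closest."""
    return min(prototypes, key=lambda label: math.dist(case, prototypes[label]))


# Hypothetical memory: category X includes one atypical instance at (6, 0).
MEMORY = [((0.0, 0.0), "X"), ((6.0, 0.0), "X"), ((9.0, 0.0), "Y"), ((10.0, 0.0), "Y")]

if __name__ == "__main__":
    new_case = (7.0, 0.0)
    print(classify_by_exemplar(new_case, MEMORY))
    print(classify_by_prototype(new_case, build_prototypes(MEMORY)))
```

The two models disagree on this case: the single remembered instance at (6, 0) pulls the exemplar model towards X, whereas the averaged prototypes favour Y. This mirrors the point that instance-based recognition depends on the particular cases a clinician happens to remember.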

The controversy about the methods used in diagnostic reasoning can be resolved by recognising that clinicians approach problems flexibly; the method they select depends upon the perceived characteristics of the problem. Easy cases can be solved by pattern recognition: difficult cases need systematic generation and testing of hypotheses. Whether a diagnostic problem is easy or difficult is a function of the knowledge and experience of the clinician.
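The flexible choice of method can be sketched as a simple rule in Python, under hypothetical assumptions: a case counts as "easy" for a given clinician if some remembered case lies within a similarity `threshold`, in which case pattern recognition is used; otherwise the clinician falls back on generating and testing hypotheses. The distance measure, threshold, and both memories are invented; the point is only that the same case can be easy for one clinician and difficult for another.

```python
import math
from typing import Optional, Sequence, Tuple

Vector = Tuple[float, ...]
Memory = Sequence[Tuple[Vector, str]]  # remembered cases with their diagnoses


def choose_strategy(case: Vector, memory: Memory, threshold: float) -> Tuple[str, Optional[str]]:
    """Return (strategy, diagnosis suggested by pattern recognition, if any)."""
    if memory:
        vec, label = min(memory, key=lambda m: math.dist(case, m[0]))
        if math.dist(case, vec) <= threshold:
            return "pattern recognition", label
    return "hypothesis testing", None


EXPERT_MEMORY = [((1.0, 1.2), "X"), ((4.0, 4.0), "Y")]
NOVICE_MEMORY = [((5.0, 5.0), "Y")]

if __name__ == "__main__":
    case = (1.0, 1.0)
    print(choose_strategy(case, EXPERT_MEMORY, threshold=0.5))
    print(choose_strategy(case, NOVICE_MEMORY, threshold=0.5))
```

The expert's memory contains a close match, so the case is solved by recognition; the novice, facing the same case with no similar memory, has to fall back on systematic hypothesis testing.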

The strategies reviewed are neither proof against error nor always consistent with statistical rules of inference. Errors that can occur in difficult cases in internal medicine include failure to generate the correct hypothesis; misperception or misreading of the evidence, especially visual cues; and misinterpretation of the evidence. 15 16 Many diagnostic problems are so complex that the correct solution is not contained in the initial set of hypotheses. Restructuring and reformulating should occur as data are obtained and the clinical picture evolves. However, a clinician may quickly become psychologically committed to a particular hypothesis, making it more difficult to restructure the problem.

Decision making

Diagnosis as opinion revision

From the point of view of decision theory, reaching a diagnosis means updating opinion with imperfect information (the clinical evidence). 8 17 The standard rule for this task is Bayes's theorem. The pretest probability is either the known prevalence of the disease or the clinician's subjective impression of the probability of disease before new information is acquired. The post-test probability, the probability of disease given new information, is a function of two variables, pretest probability and the strength of the evidence, measured by a “likelihood ratio.”
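In practice the update is usually carried out on the odds scale: post-test odds equal pretest odds multiplied by the likelihood ratio. The Python sketch below shows this; the function names and the example figures (a 20% pretest probability and a likelihood ratio of 6) are illustrative assumptions rather than values from the text.

```python
def prob_to_odds(p: float) -> float:
    """Convert a probability in [0, 1) to odds in favour."""
    return p / (1.0 - p)


def odds_to_prob(odds: float) -> float:
    """Convert odds in favour back to a probability."""
    return odds / (1.0 + odds)


def post_test_probability(pretest: float, likelihood_ratio: float) -> float:
    """Bayes's theorem in odds form: post-test odds = pretest odds * likelihood ratio."""
    if not 0.0 <= pretest < 1.0:
        raise ValueError("pretest probability must lie in [0, 1)")
    if likelihood_ratio < 0.0:
        raise ValueError("likelihood ratio must be non-negative")
    return odds_to_prob(prob_to_odds(pretest) * likelihood_ratio)


# Hypothetical example: 20% pretest probability and a finding with LR = 6.
print(round(post_test_probability(0.20, 6.0), 3))  # 0.6
```

With these hypothetical figures the pretest odds of 0.25 become post-test odds of 1.5, a post-test probability of 0.6. A likelihood ratio of 1 leaves the probability unchanged, which is one way to see why an uninformative finding should not move opinion.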

Bayes's theorem tells us how we should reason, but it does not claim to describe how opinions are revised. In our experience, clinicians trained in methods of evidence based medicine are more likely than untrained clinicians to use a Bayesian approach to interpreting findings. 18 Nevertheless, probably only a minority of clinicians use it in daily practice and informal methods of opinion revision still predominate. Bayes's theorem directs attention to two major classes of errors in clinical reasoning: in the assessment of either pretest probability or the strength of the evidence. The psychological study of diagnostic reasoning from this viewpoint has focused on errors in both components, and on the simplifying rules or heuristics that replace more complex procedures. Consequently, this approach has become widely known as “heuristics and biases.” 4 19

Errors in estimation of probability

Availability: People are apt to overestimate the frequency of vivid or easily recalled events and to underestimate the frequency of events that are either very ordinary or difficult to recall. Diseases or injuries that receive considerable media attention are often thought of as occurring more commonly than they actually do. This psychological principle is exemplified clinically in the overemphasis of rare conditions, because unusual cases are more memorable than routine problems.

Representativeness: This heuristic refers to estimating the probability of disease by judging how similar a case is to a diagnostic category or prototype. It can lead to overestimation of probability either by causing confusion of post-test probability with test sensitivity or by leading to neglect of base rates and implicitly considering all hypotheses equally likely. This is an error, because if a case resembles disease A and disease B equally, and A is much more common than B, then the case is more likely to be an instance of A. Representativeness is associated with the “conjunction fallacy”: incorrectly concluding that the probability of a joint event (such as the combination of findings to form a typical clinical picture) is greater than the probability of any one of these events alone.
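The base-rate argument above can be checked numerically. In this minimal sketch, assuming the case must be one of two diseases A and B, both diseases explain the findings equally well (equal likelihoods), but A is assumed to be nine times as common as B; all numbers and helper names are hypothetical. A second helper shows why a conjunction of findings can never be more probable than either finding alone.

```python
def posterior(priors: dict[str, float], likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes's theorem over mutually exclusive, exhaustive hypotheses."""
    joint = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(joint.values())
    return {h: value / total for h, value in joint.items()}


def conjunction(p_x: float, p_y_given_x: float) -> float:
    """P(X and Y) = P(X) * P(Y | X); since P(Y | X) <= 1, this never exceeds P(X)."""
    return p_x * p_y_given_x


# Hypothetical: the case resembles A and B equally, but A is far more common.
priors = {"A": 0.9, "B": 0.1}
likelihoods = {"A": 0.7, "B": 0.7}
post = posterior(priors, likelihoods)
# Judging by resemblance alone implies 50:50; Bayes's theorem keeps A at about 0.9.
print({h: round(p, 3) for h, p in post.items()})

# Hypothetical: P(finding X) = 0.6 and P(finding Y | X) = 0.5.
print(conjunction(0.6, 0.5))  # 0.3, smaller than P(X) alone
```

Because the likelihoods are equal they cancel, so the posterior simply reproduces the base rates; resemblance on its own cannot justify treating A and B as equally likely.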

Heuristics and biases

- Availability

- Representativeness

- Probability transformations

- Effect of description detail

- Conservatism

- Anchoring and adjustment

- Order effects

Decision theory assumes that probabilities are processed psychologically on the ordinary probability scale, without transformation. Prospect theory was formulated as a descriptive account of choices involving gambles with two outcomes, 20 and cumulative prospect theory extends the theory to cases with multiple outcomes. 21 Both prospect theory and cumulative prospect theory propose that, in decision making, small probabilities are overweighted and large probabilities underweighted, contrary to the assumption of standard decision theory. This “compression” of the probability scale explains why the difference between 99% and 100% is psychologically much greater than the difference between, say, 60% and 61%. 22
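The overweighting of small probabilities and underweighting of large ones can be illustrated with a probability weighting function. The sketch below assumes the one-parameter form proposed by Tversky and Kahneman for cumulative prospect theory, w(p) = p^γ / (p^γ + (1 - p)^γ)^(1/γ), with γ = 0.61, their reported estimate for gains; both the form and the parameter value are assumptions for illustration and are not taken from this article.

```python
def tk_weight(p: float, gamma: float = 0.61) -> float:
    """One-parameter probability weighting function (Tversky-Kahneman form).

    gamma < 1 gives an inverse-S curve: small probabilities are overweighted
    and large ones underweighted. gamma = 1 returns p unchanged.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    numerator = p ** gamma
    denominator = (p ** gamma + (1.0 - p) ** gamma) ** (1.0 / gamma)
    return numerator / denominator


top_gap = tk_weight(1.00) - tk_weight(0.99)     # roughly 0.09
middle_gap = tk_weight(0.61) - tk_weight(0.60)  # roughly 0.006
print(round(top_gap, 3), round(middle_gap, 3))
```

Under these assumptions the step from 99% to 100% moves the decision weight more than ten times as much as the step from 60% to 61%, which is the compression described above. Passing gamma=1.0 recovers the untransformed scale that standard decision theory assumes.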

Support theory

Support theory proposes that the subjective probability of an event is inappropriately influenced by how detailed the description is. More explicit descriptions yield higher probability estimates than compact, condensed descriptions, even when the two refer to exactly the same events. Clinically, support theory predicts that a longer, more detailed case description will be assigned a higher subjective probability of the index disease than a brief abstract of the same case, even if they contain the same information about that disease. Thus, subjective assessments of events, while often necessary in clinical practice, can be affected by factors unrelated to true prevalence. 23
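Support theory is usually stated as follows (the notation follows Tversky and Koehler's formulation and is supplied here as background; it does not appear in the article). The judged probability that hypothesis A rather than B holds depends on the support s(·) evoked by each description, and unpacking a description into explicit components is assumed never to lower its support:

```latex
P(A, B) = \frac{s(A)}{s(A) + s(B)}, \qquad
P(A, B) + P(B, A) = 1, \qquad
s(A) \le s(A_1 \lor A_2),
```

where $A_1 \lor A_2$ is an explicit description of the same event as $A$. The more detailed case description in the clinical prediction above can be read as an analogue of the unpacked description: more explicit detail evokes more support and hence a higher judged probability.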

Errors in revision of probability

In clinical case discussions, data are presented sequentially, and diagnostic probabilities are not revised as much as is implied by Bayes's theorem 8; this phenomenon is called conservatism. One explanation is that diagnostic opinions are revised up or down from an initial anchor, which is either given in the problem or subjectively formed. Final opinions are sensitive to the starting point (the “anchor”), and the shift (“adjustment”) from it is typically insufficient. 4 Both biases will lead to collecting more information than is necessary to reach a desired level of diagnostic certainty.
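The link between under-revision and over-collection of data can be made concrete by comparing a fully Bayesian reviser with one that applies only a fraction of each finding's log likelihood ratio. The fraction model, the weight of 0.5, the likelihood ratio of 3 per finding, and the 95% target below are all hypothetical assumptions chosen for illustration, not values from the article.

```python
import math


def revise(prob: float, likelihood_ratio: float, weight: float = 1.0) -> float:
    """Revise a probability on the log-odds scale.

    weight = 1.0 reproduces Bayes's theorem; weight < 1.0 is a hypothetical
    model of a conservative reviser who adjusts only part of the way.
    """
    log_odds = math.log(prob / (1.0 - prob)) + weight * math.log(likelihood_ratio)
    return 1.0 / (1.0 + math.exp(-log_odds))


def findings_needed(prior: float, likelihood_ratio: float, target: float,
                    weight: float = 1.0, max_findings: int = 100) -> int:
    """Count how many supportive findings are needed before prob >= target."""
    prob, count = prior, 0
    while prob < target and count < max_findings:
        prob = revise(prob, likelihood_ratio, weight)
        count += 1
    return count


# Hypothetical case: 20% starting probability, each finding has LR = 3,
# and the clinician wants 95% certainty before acting.
bayesian = findings_needed(0.20, 3.0, 0.95)
conservative = findings_needed(0.20, 3.0, 0.95, weight=0.5)
print(bayesian, conservative)  # 4 8
```

Under these assumptions the conservative reviser needs twice as many supportive findings to reach the same level of certainty, which is the excess information gathering described above.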

It is difficult for everyday judgment to keep separate accounts of the probability of a disease and the benefits that accrue from detecting it. Probability revision errors that are systematically linked to the perceived cost of mistakes show the difficulties experienced in separating assessments of probability from values, as required by standard decision theory. There is a tendency to overestimate the probability of more serious but treatable diseases, because a clinician would hate to miss one. 24

Bayes's theorem implies that clinicians given identical information should reach the same diagnostic opinion, regardless of the order in which information is presented. However, final opinions are also affected by the order of presentation of information. Information presented later in a case is given more weight than information presented earlier. 25
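Order invariance follows because Bayes's theorem multiplies the prior odds by each finding's likelihood ratio, and multiplication does not depend on order (assuming the findings are conditionally independent given the disease). The sketch below checks this across every presentation order and contrasts it with a hypothetical recency-weighted reviser, in which later findings count more; that reviser, its decay parameter, and the likelihood ratios are illustrative assumptions, not a model from the article.

```python
import math
from itertools import permutations


def bayes_in_order(prior: float, likelihood_ratios: list[float]) -> float:
    """Apply likelihood ratios one at a time on the odds scale (Bayes's theorem)."""
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


def recency_weighted(prior: float, likelihood_ratios: list[float], decay: float = 0.5) -> float:
    """Hypothetical order-sensitive reviser in which later findings count more.

    The last finding gets weight 1, the one before it `decay`, the one before
    that `decay ** 2`, and so on, applied to each log likelihood ratio.
    """
    log_odds = math.log(prior / (1.0 - prior))
    n = len(likelihood_ratios)
    for i, lr in enumerate(likelihood_ratios):
        log_odds += decay ** (n - 1 - i) * math.log(lr)
    return 1.0 / (1.0 + math.exp(-log_odds))


findings = [4.0, 0.5, 2.0]  # hypothetical likelihood ratios for three findings
orders = [list(p) for p in permutations(findings)]

bayes_results = [bayes_in_order(0.3, order) for order in orders]
recency_results = [recency_weighted(0.3, order) for order in orders]

# Bayes: every order gives the same answer (up to floating-point rounding).
all_equal = all(math.isclose(r, bayes_results[0]) for r in bayes_results)
print(all_equal)  # True
# The recency-weighted reviser's answer depends on presentation order.
print(round(min(recency_results), 2), round(max(recency_results), 2))
```

Using math.isclose rather than exact equality avoids flagging differences that come only from floating-point rounding in the products.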

Other errors identified in data interpretation include simplifying a diagnostic problem by interpreting findings as consistent with a single hypothesis, forgetting facts inconsistent with a favoured hypothesis, overemphasising positive findings, and discounting negative findings. From a Bayesian standpoint, these are all errors in assessing the diagnostic value of clinical evidence—that is, errors in implicit likelihood ratios.

Educational implications

Two recent innovations in medical education, problem based learning and evidence based medicine, are consistent with the educational implications of this research. Problem based learning can be understood as an effort to introduce the formulation and testing of clinical hypotheses into the preclinical curriculum. 26 The theory of cognition and instruction underlying this reform is that since experienced physicians use this strategy with difficult problems, and since practically any clinical situation selected for instructional purposes will be difficult for students, it makes sense to provide opportunities for students to practise problem solving with cases graded in difficulty. The finding of case specificity showed the limits of teaching a general problem solving strategy. Expertise in problem solving can be separated from content analytically, but not in practice. This realisation shifted the emphasis towards helping students acquire a functional organisation of content with clinically usable schemas. This goal became the new rationale for problem based learning. 27

Evidence based medicine is the most recent, and by most standards the most successful, effort to date to apply statistical decision theory in clinical medicine. 18 It teaches Bayes's theorem, and residents and medical students quickly learn how to interpret diagnostic studies and how to use a computer based nomogram to compute post-test probabilities and to understand the output. 28

We have selectively reviewed 30 years of psychological research on clinical diagnostic reasoning. The problem solving approach has focused on diagnosis as hypothesis testing, pattern matching, or categorisation. The errors in reasoning identified from this perspective include failure to generate the correct hypothesis; misperceiving or misreading the evidence, especially visual cues; and misinterpreting the evidence. The decision making approach views diagnosis as opinion revision with imperfect information. Heuristics and biases in estimation and revision of probability have been the subject of intense scrutiny within this research tradition. Both research paradigms understand judgment errors as a natural consequence of limitations in our cognitive capacities and of the human tendency to adopt short cuts in reasoning.

Both approaches have focused more on the mistakes made by both experts and novices than on what they get right, possibly leading to overestimation of the frequency of the mistakes catalogued in this article. The reason for this focus seems clear enough: from the standpoint of basic research, errors tell us a great deal about fundamental cognitive processes, just as optical illusions teach us about the functioning of the visual system. From the educational standpoint, clinical instruction and training should focus more on what needs improvement than on what learners do correctly; to improve performance requires identifying errors. But, in conclusion, we emphasise, firstly, that the prevalence of these errors has not been established; secondly, we believe that expert clinical reasoning is very likely to be right in the majority of cases; and, thirdly, despite the expansion of statistically grounded decision supports, expert judgment will still be needed to apply general principles to specific cases.

Series editor J A Knottnerus

Preparation of this review was supported in part by grant RO1 LM5630 from the National Library of Medicine.

Competing interests None declared.

“The Evidence Base of Clinical Diagnosis,” edited by J A Knottnerus, can be purchased through the BMJ Bookshop ( http://www.bmjbookshop.com/ )

- Elstein AS

- Shulman LS

- Bordage G

- Schmidt HG

- Norman GR

- Boshuizen HPA

- Kahneman D

- Sox HC Jr

- Higgins MC

- Mellers BA

- Schwartz A

- Chapman GB

- Sonnenberg F

- Glasziou P

- Pliskin J

- Brooks LR

- Coblentz CL

- Lemieux M

- Kassirer JP

- Kopelman RI

- Sackett DL

- Haynes RB

- Guyatt GH

- Richardson WS

- Rosenberg W

- Tversky A

- Fischhoff B

- Bostrom A

- Quadrell MJ

- Redelmeier DA

- Koehler DJ

- Liberman V

- Wallsten TS

- Bergus GR


Clinical Reasoning and Critical Thinking

There are a number of ways to evaluate problems we face on a daily basis, but in the medical field no two are more important than clinical reasoning and critical thinking. Here’s everything you need to know about the two, why they are used, and how they are interconnected.

Clinical reasoning is a cognitive process applied by nurses, clinicians, and other health professionals to analyze a clinical case, evaluate data, and prescribe a treatment plan. Critical thinking is the capability to analyze data and form a judgement on the basis of that information.

As you might recognize, clinical reasoning and critical thinking go hand in hand when evaluating information and creating treatment plans based on the available data. The depth of these two thinking methods goes far beyond mere analysis, however, and the rest of this article will define the two and dive into how clinical reasoning and critical thinking are used.

What Is Clinical Reasoning?

Believe it or not, there’s no agreed-upon definition for the term clinical reasoning, yet it’s widely used and well understood in the medical field. This is likely because clinical reasoning is an offshoot of critical thinking that is applied to the treatment of patients.

The role of nurses, doctors, clinicians, and health professionals is often multifaceted, taking into account numerous sources of information in order to evaluate the patient’s condition, develop a course of treatment, and monitor that course of treatment for success.

Of course, clinical reasoning also relies heavily on prior cases, which themselves probably relied on some degree of clinical reasoning. The current patient’s data is evaluated against that experience, and the patient’s health care professionals will assess whether more data needs to be gathered before making a decision about the treatment plan.

This process can be broken up into three general steps, although it’s important to reiterate that “clinical reasoning” doesn’t have a definite process that clinicians must follow; rather, it’s a mindset that is part and parcel of a patient-centric mentality.

Evaluating Data

Applying clinical reasoning starts with a fairly simple premise. If the patient comes in complaining of a headache, then some of the most logical causes a nurse or clinician might consider would be stress, a migraine, or certain infections.

This prompts them to ask more questions to evaluate the data further. Where is the headache located? Does the headache occur in relation to a particular event? Are there any other symptoms the patient is experiencing?

The answers to these questions can help narrow down the patient’s condition, drawing on historical precedent and research on headaches and their underlying causes. At the very least, evaluating the patient’s data in light of prior cases helps rule out certain conditions.

Gathering More Data

Using the initial information provided by the patient and gathered with diagnostic tools, the physician forms a representation of the problem that guides the rest of the process.

In some cases, that initial information, combined with a wealth of prior knowledge, gives the physician enough confidence to prescribe a course of treatment.

In other cases, however, more data needs to be gathered, and the physician will use clinical reasoning to repeat the information gathering process until they obtain a level of confidence that allows them to move forward with treatment.

Once enough information has been gathered, the physician might issue a final diagnosis or suggest management actions to help the patient recover.

Taking Action

After the clinician has evaluated the information to the degree that they are confident making a diagnosis of the patient’s ailment, they can then evaluate the right course of treatment.

Is this an illness that can be treated with rest and an over-the-counter (OTC) medicine? Will surgery be required? Does the intensity of the illness warrant a higher dosage?

These questions, broad as they may be, are informed by the information-gathering process, which creates an accurate picture of the problem and helps develop a solution. That solution can be behavior modification, treatment, or a combination of both.

At this point, a physician should also manage the recovery process. Is the treatment working as expected? Are there any complications? How might we manage any complications that do arise?

If clinical reasoning as a whole seems particularly broad, it’s probably because it is. At its core, clinical reasoning is just the judgement of a healthcare professional to make accurate diagnoses, initiate treatment, and improve the patient’s condition.

How Are Clinical Reasoning and Critical Thinking Linked?

Clinical reasoning is a form of critical thinking that is applied to the medical field. Critical thinking skills help physicians evaluate credible information and assess alternative solutions, all of which is geared towards the question: how can I help the patient recover?

Critical thinking is an important skill for making judgements based on a rational, objective, and impartial thought process. It’s important in research, academic pursuits, and, obviously, clinical reasoning.

Physicians and clinicians, for example, apply their clinical reasoning skills to evaluate what treatments have the best success rate, how different factors of the patient’s condition can affect their recovery, and how alternative treatment methods might be used to improve the patient’s recovery process.

In particular, these health care professionals are evaluating the relevance of information, the source of that information, and how that information can be used to better the treatment of patients in the future.

A systematic approach to clinical reasoning is a core aspect of critical thinking and crucial to the success of clinical judgement. A lapse in judgement or poor clinical reasoning can be harmful, costly, and extremely impactful on the patient’s wellbeing, which is likely why solid clinical reasoning is such a valuable skill in the world of medicine.

Final Thoughts

Clinical reasoning is a skill that you will develop in your years of schooling when entering the medical field, and the process of gathering information, evaluating information, and developing a course of treatment is strongly linked to the skill of critical thinking.


Thinking Critically About the Quality of Critical Thinking Definitions and Measures

- First Online: 20 April 2016


- Lily Fountain

Part of the book series: Innovation and Change in Professional Education (ICPE, volume 13)


Leading policy and professional organizations agree that critical thinking is a key competency for health professionals working in our complex health care environment. The professions education literature is far less clear about what critical thinking really means or how best to measure it in providers and students. To clarify terminology and describe the context, definitions, and measures used in critical thinking research, this chapter presents a systematic review of 43 studies identified with the keywords “critical thinking” or the associated term “clinical reasoning,” crossed with the keywords nurse and physician. Results indicated that 43% of the studies did not provide an explicit definition, 70% treated the terms critical thinking, clinical reasoning, clinical judgment, problem-solving, and decision-making as equivalent, and 40% used researcher-made, study-specific measures. Full alignment of definition and measure was found in 47% of studies, 42% of studies reported validity for obtained scores, and 54% documented reliability. Keyword analysis identified six common constructs in nursing studies of critical thinking: individual interest, knowledge, relational reasoning, prioritization, inference, and evaluation, along with three contextual factors: patient assessment, caring, and environmental resource assessment. Recommendations for future research include greater use of purposeful samples spanning multiple levels of provider experience; documentation of effect sizes, means, SD, and n; adherence to official standards for reliability and validity, documenting both previous and current datasets as appropriate; and greater use of factor analysis to validate scales or regression to test models.
Finally, critical thinking research needs to use explicit definitions that are part and parcel of the measures that operationalize the construct, and alternative measures need to be developed that line up the attributes of the definition with the attributes measured in the instrument. Once we can accurately describe and measure critical thinking, we can better ensure that rising members of the professions can apply this vital competency to patient care.



Abrami, P. C., Bernard, R. M., Borokhovski, E., Wade, A., Surkes, M. A., Tamim, R., & Zhang, D. (2008). Instructional interventions affecting critical thinking skills and dispositions: A stage 1 meta-analysis. Review of Educational Research, 78 , 1102–1134. doi: 10.3102/0034654308326084

Article Google Scholar

Ajjawi, R., & Higgs, J. (2008). Learning to reason: A journey of professional socialization. Advances in Health Sciences Education, 13 , 133–150. doi: 10.1007/s10459-006-9032-4

Alexander, P. A., & Murphy, P. K. (2000). The research base for APA’s learner-centered psychological principles. In N. Lambert & B. McCombs (Eds.), How students learn (pp. 25–60). Washington, DC: American Psychological Association.

Google Scholar

American Association of Colleges of Nursing. (2008). Essentials of baccalaureate education for professional nursing practice . Washington, DC: American Association of Colleges of Nursing.

American Educational Research Association, American Psychological Association, National Council on Measurement in Education. (1999). Standards for educational and psychological testing . Washington, DC: American Educational Research Association.

American Educational Research Association, American Psychological Association, National Council on Measurement in Education. (2014). Standards for educational and psychological testing . Washington, DC: American Educational Research Association.

Bashir, M., Afzal, M. T., & Azeem, M. (2008). Reliability and validity of qualitative research. Pakistani Journal of Statistics and Operation Research, IV (1), 35–45.

Benner, P. E., Hughes, R. G., & Sutphen, M. (2008). Clinical reasoning, decision-making, and action: Thinking critically and clinically. In R. G. Hughes (Ed.), Patient safety and quality: An evidence-based handbook for nurses. AHRQ Publication No. 08-0043 . Rockville, MD: Agency for Healthcare Research and Quality.

Blondy, L. C. (2011). Measurement and comparison of nursing faculty members’ critical thinking skills. Western Journal of Nursing Research, 33 , 180–195. doi: 10.1177/0193945910381596

Brunt, B. A. (2005). Critical thinking in nursing: An integrated review. Journal of Continuing Education in Nursing, 36 , 60–67.

Carpenter, C. B., & Doig, J. C. (1988). Assessing critical thinking across the curriculum. New Directions for Teaching and Learning, 34 , 33–46. doi: 10.1002/tl.37219883405

Chan, Z. C. Y. (2013). A systematic review of critical thinking in nursing education. Nurse Education Today, 33 , 236–240.

Cook, D. A., & Beckman, T. J. (2006). Current concepts in validity and reliability for psychometric instruments: Theory and application. The American Journal of Medicine, 119 , 166.e7–166.e16.

Cooke, M., Irby, D. M., & O’Brien, B. C. (2010). Educating physicians: A call for reform of medical school and residency . San Francisco: Jossey-Bass.

Cooper, H., Hedges, L. V., & Valentine, J. C. (2009). The handbook of research synthesis and meta-analysis (2nd ed.). New York: Russell Sage Foundation.

Creswell, J. W. (1994). Research design: Qualitative and quantitative approaches . Thousand Oaks, CA: Sage Publications.

Creswell, J. W. (2014). Research design: Qualitative, quantitative, and mixed methods approaches . Thousand Oaks, CA: Sage Publications.

Crocker, L. M., & Algina, J. (2006). Introduction to classical and modern test theory . Mason, OH: Wadsworth Group/Thomas Learning.

Cruz, D. M., Pimenta, C. M., & Lunney, M. (2009). Improving critical thinking and clinical reasoning with a continuing education course. The Journal of Continuing Education in Nursing, 40 , 121–127. doi: 10.3928/00220124-20090301-05

Dinsmore, D. H., Alexander, P. A., & Loughlin, S. M. (2008). The impact of new learning environments in an engineering design course. Instructional Science, 36 , 375–393. doi: 10.1007/s11251-008-9061-x

Drennan, J. (2010). Critical thinking as an outcome of a master’s degree in nursing programme. Journal of Advanced Nursing, 66 , 422–431. doi: 10.1111/j.1365-2648.2009.05170.x

Ennis, R. H. (1989). Critical thinking and subject specificity: Clarification and needed research. Educational Researcher, 18 , 4–10. doi: 10.3102/0013189X018003004

Ennis, R. H. (1991). Critical thinking: A streamlined conception. Teaching Philosophy, 14 , 5–24.

Evidence for Policy and Practice Information (EPPI). (N.D.). Different types of review . Retrieved from http://eppi.ioe.ac.uk/cms/default.aspx?tabid=1915&language=en-US

Facione, P. A. (1990). Critical thinking: A statement of expert consensus for purposes of educational assessment and instruction . Millbrae, CA: The California Academic Press.

Forneris, S. G., & Peden-McAlpine, C. (2007). Evaluation of a reflective learning intervention to improve critical thinking in novice nurses. Journal of Advanced Nursing, 57 , 410–421. doi: 10.1111/j.1365-2648.2006.04120.x

Funkesson, K. H., Anbäckena, E.-M., & Ek, A.-C. (2007). Nurses’ reasoning process during care planning taking pressure ulcer prevention as an example: A think-aloud study. International Journal of Nursing Studies, 44 , 1109–1119. doi: 10.1016/j.ijnurstu.2006.04.016

Göransson, K. E., Ehnfors, M., Fonteyn, M. E., & Ehrenberg, A. (2007). Thinking strategies used by registered nurses during emergency department triage. Journal of Advanced Nursing, 61 , 163–172. doi: 10.1111/j.1365-2648.2007.04473.x

Harden, R. M., Grant, J., Buckley, G., & Hart, I. R. (2000). Best evidence medical education. Advances in Health Sciences Education, 5 , 71–90. doi: 10.1023/A:1009896431203

Higgs, J., & Jones, M. (Eds.). (2000). Clinical reasoning for health professionals . Boston: Butterworth Heinemann.

Huang, G. C., Newman, L. R., & Schwartzstein, R. M. (2014). Critical thinking in health professions education: Summary and consensus statements of the millennium conference 2011. Teaching and Learning in Medicine, 26 (1), 95–102.

Institute of Medicine. (2010). The future of nursing: Leading change, advancing health . Washington, D.C.: National Academies Press.

Interprofessional Education Collaborative Expert Panel. (2011). Core competencies for interprofessional collaborative practice: Report of an expert panel . Washington, D.C.: Interprofessional Education Collaborative.

Johansson, M. E., Pilhammar, E., & Willman, A. (2009). Nurses’ clinical reasoning concerning management of peripheral venous cannulae. Journal of Clinical Nursing, 18 , 3366–3375. doi: 10.1111/j.1365-2702.2009.02973.x

Johnson, M. (2004). What’s wrong with nursing education research? Nurse Education Today, 24 , 585–588. doi: 10.1016/j.nedt.2004.09.005

Johnson, D., Flagg, A., & Dremsa, T. L. (2008). Effects of using human patient simulator (HPS™) versus a CD-ROM on cognition and critical thinking. Medical Education Online, 13 (1), 1–9. doi: 10.3885/meo.2008.T0000118

Kane, M. T. (1992). An argument-based approach to validity. Psychological Bulletin, 112 , 527–535. doi: 10.1037/0033-2909.112.3.527

Kataoka-Yahiro, M., & Saylor, C. (1994). A critical thinking model for nursing judgment. Journal of Nursing Education, 33 , 351–356.

Kimberlin, C. L., & Winterstein, A. G. (2008). Validity and reliability of measurement instruments used in research. American Journal of Health-System Pharmacy, 65 , 2276–2284.

Krupat, E., Sprague, J. M., Wolpaw, D., Haidet, P., Hatem, D., & O’Brien, B. (2011). Thinking critically about critical thinking: Ability, disposition or both? Medical Education, 45 (6), 625–635.

Kuiper, R. A., Heinrich, C., Matthias, A., Graham, M. J., & Bell-Kotwall, L. (2008). Debriefing with the OPT model of clinical reasoning during high fidelity patient simulation. International Journal of Nursing Education Scholarship, 5 (1), Article 17. doi: 10.2202/1548-923X.1466

Mamede, S., Schmidt, H. G., Rikers, R. M., Penaforte, J. C., & Coelho-Filho, J. M. (2007). Breaking down automaticity: Case ambiguity and the shift to reflective approaches in clinical reasoning. Medical Education, 41 , 1185–1192. doi: 10.1111/j.1365-2923.2007.02921.x

McAllister, M., Billett, S., Moyle, W., & Zimmer-Gembeck, M. (2009). Use of a think-aloud procedure to explore the relationship between clinical reasoning and solution-focused training in self-harm for emergency nurses. Journal of Psychiatric and Mental Health Nursing, 16 , 121–128. doi: 10.1111/j.1365-2850.2008.01339.x

McCartney, K., Burchinal, M. R., & Bub, K. L. (2006). Best practices in quantitative methods for developmentalists. Monographs of the Society for Research in Child Development, 71, 285. Chapter II, Measurement issues. doi: 10.1111/j.1540-5834.2006.00403.x



Author information

Authors and affiliations

Faculty of Nursing, University of Maryland School of Nursing, Baltimore, MD, USA

Lily Fountain


Corresponding author

Correspondence to Lily Fountain.

Editor information

Editors and affiliations

University of California, Los Angeles, California, USA

Paul F. Wimmers

Alverno College, Milwaukee, Wisconsin, USA

Marcia Mentkowski


Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Fountain, L. (2016). Thinking Critically About the Quality of Critical Thinking Definitions and Measures. In: Wimmers, P., Mentkowski, M. (eds) Assessing Competence in Professional Performance across Disciplines and Professions. Innovation and Change in Professional Education, vol 13. Springer, Cham. https://doi.org/10.1007/978-3-319-30064-1_10


DOI: https://doi.org/10.1007/978-3-319-30064-1_10

Published: 20 April 2016

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-30062-7

Online ISBN: 978-3-319-30064-1

eBook Packages: Education Education (R0)


Critical Thinking and Decision-Making - What is Critical Thinking?


Lesson 1: What is Critical Thinking?

Critical thinking is a term that gets thrown around a lot. You've probably heard it used often over the years, whether in school, at work, or in everyday conversation. But when you stop to think about it, what exactly is critical thinking, and how do you do it?


Simply put, critical thinking is the act of deliberately analyzing information so that you can make better judgements and decisions. It involves using things like logic, reasoning, and creativity to draw conclusions and generally understand things better.

This may sound like a pretty broad definition, and that's because critical thinking is a broad skill that can be applied to so many different situations. You can use it to prepare for a job interview, manage your time better, make decisions about purchasing things, and so much more.

The process

As humans, we are constantly thinking. It's something we can't turn off. But not all of it is critical thinking. No one thinks critically 100% of the time; that would be exhausting! Instead, critical thinking is an intentional process, something we consciously use when we're presented with difficult problems or important decisions.

Improving your critical thinking

To become a better critical thinker, it's important to ask questions when you're presented with a problem or decision, before jumping to any conclusions. You can start with simple ones like "What do I currently know?" and "How do I know this?" These can give you a better idea of what you're working with and, in some cases, simplify more complex issues.

Real-world applications

Let's look at how we can use critical thinking to evaluate online information. Say a friend of yours posts a news article on social media and you're drawn to its headline. If you relied on your everyday automatic thinking, you might accept it as fact and move on. But if you were thinking critically, you would first analyze the available information and ask some questions:

- What's the source of this article?

- Is the headline potentially misleading?

- What are my friend's general beliefs?

- Do their beliefs inform why they might have shared this?

After analyzing all of this information, you can draw a conclusion about whether or not you think the article is trustworthy.

Critical thinking has a wide range of real-world applications. It can help you make better decisions, become more hireable, and generally better understand the world around you.


Perspective article: Rethinking clinical decision-making to improve clinical reasoning


Introduction

Clinical reasoning is based on complex and multifaceted cognitive processes, and the level of cognition is perhaps the most relevant factor affecting a physician’s clinical reasoning. These topics have attracted considerable interest in recent years (1, 2). According to Croskerry (3) and Croskerry and Norman (4), more than 40 affective and cognitive biases may affect clinical reasoning. It should also not be forgotten that both the processes and the subject matter are complex.

In medicine, there are thousands of known diagnoses, each with its own degree of complexity. Moreover, in line with Hammond’s view, fundamental (irreducible) uncertainty means that some failures are inevitable (5). Any mistake or failure in the diagnostic process can lead to a delayed diagnosis, a misdiagnosis, or a missed diagnosis. The particular context in which a medical decision is made is highly relevant to both the reasoning process and its outcome (6).

More recently, there has been renewed interest in diagnostic reasoning, particularly in diagnostic errors. Many researchers have explored the processes underpinning cognition and have developed a new universal model of reasoning and decision-making: the dual-process theory.

This theory can be readily applied to medical decision-making and provides a comprehensive framework for understanding the range of theoretical approaches considered previously. The model has important practical applications for medical decision-making and may be used as a framework for teaching decision reasoning. Given this background, this manuscript should be read as a first-level guide to understanding how to think, not what to think: it examines clinical decision-making in depth and provides tools for improving clinical reasoning.

Too much attention to the tip of the iceberg

The New England Journal of Medicine recently published a thought-provoking article (7) in its “Perspective” section, one on which we should all reflect. Its title is “At baseline” (that is, the patient’s basic condition). Dr. Bergl, from the Department of Medicine of the Medical College of Wisconsin (Milwaukee), observed that his trainees no longer ask about the underlying pathology and focus solely on solving the acute problem. He wrote that, for many internal medicine teams, the question is not whether but to what extent we should juggle the treatment of patients’ acute health problems with care for their coexisting chronic conditions. Doctors are under heavy pressure to discharge patients, so they move them on to the next stage of care without asking what caused the clinical condition to decompensate. If the chronic condition, or baseline, is not the fundamental goal of our work, our juggling is highly inconsistent, because we are pursuing an intermediate outcome: curing only the decompensation phase of a disease. Dr. Bergl raises another essential point, perhaps equally disturbing: by adopting a collective “base” mentality, we unintentionally create a generation of doctors who prioritize productivity over developing critical skills and curiosity. We agree, and we would add that empathy and patience are two other crucial elements in the training of future internists. Yet how much do we actually cultivate these qualities? Are they not all part of the cultural background needed for a correct approach to the patient, proper clinical reasoning, and balanced communication skills?

That said, a chronic baseline condition is not always the real reason for an acute hospitalization. This superficiality stems from the lack of a careful approach to the baseline and of patient-focused clinical reasoning. We focus too much on our students’ practical skills and on the amount of knowledge they must learn, while we do not teach them how to think or the cognitive mechanisms of clinical reasoning.

Time to rethink the way of thinking and teaching courses

Back in 1910, John Dewey wrote in his book “How We Think” (8): “The aim of education should be to teach us rather how to think than what to think—rather improve our minds to enable us to think for ourselves than to load the memory with the thoughts of other men.”

Clinical reasoning concerns how to think and how to reach the best decisions in clinical practice (9). The core elements of clinical reasoning (10) can be summarized as follows:

1. Evidence-based skills,

2. Interpretation and use of diagnostic tests,

3. Understanding cognitive biases,

4. Human factors,

5. Metacognition (thinking about thinking), and

6. Patient-centered evidence-based medicine.
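Item 2, the interpretation and use of diagnostic tests, is where reasoning becomes quantitative. The article does not prescribe a method here; as an illustration, the Python sketch below applies the standard odds form of Bayes' theorem (post-test odds = pre-test odds × likelihood ratio). The function names `likelihood_ratios` and `post_test_probability`, and the example figures (10% pre-test probability, sensitivity 0.90, specificity 0.80), are hypothetical and chosen only to show the arithmetic.

```python
"""Post-test probability from a test's sensitivity and specificity.

Illustrative sketch only: function names and example numbers are hypothetical.
"""


def likelihood_ratios(sensitivity: float, specificity: float) -> tuple[float, float]:
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    if not (0.0 < sensitivity < 1.0 and 0.0 < specificity < 1.0):
        raise ValueError("sensitivity and specificity must lie strictly between 0 and 1")
    lr_pos = sensitivity / (1.0 - specificity)  # factor by which a positive result multiplies the odds
    lr_neg = (1.0 - sensitivity) / specificity  # factor by which a negative result multiplies the odds
    return lr_pos, lr_neg


def post_test_probability(pre_test: float, lr: float) -> float:
    """Bayes' theorem in odds form: post-test odds = pre-test odds * LR."""
    if not 0.0 < pre_test < 1.0:
        raise ValueError("pre-test probability must lie strictly between 0 and 1")
    pre_odds = pre_test / (1.0 - pre_test)  # convert probability to odds
    post_odds = pre_odds * lr               # update the odds with the test result
    return post_odds / (1.0 + post_odds)    # convert odds back to probability


if __name__ == "__main__":
    # Hypothetical case: 10% pre-test probability, sensitivity 0.90, specificity 0.80.
    lr_pos, lr_neg = likelihood_ratios(0.90, 0.80)
    print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.3f}")
    print(f"P(disease | positive) = {post_test_probability(0.10, lr_pos):.3f}")
    print(f"P(disease | negative) = {post_test_probability(0.10, lr_neg):.3f}")
```

With these hypothetical inputs, a positive result raises the probability of disease from 10% to about 33%, and a negative result lowers it to about 1.4%. Working in odds keeps the update to a single multiplication, which makes it easy to see how the same test result shifts the probability differently depending on the pre-test estimate.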