Robots and Artificial Intelligence Report (Assessment)

Introduction, impact on organizations, impact on employees, how society is influenced, recommendations.

The world is approaching an era with a new technological structure, where robots and devices powered by artificial intelligence will be used extensively both in production and in personal life. Currently, manufacturers of such devices and machinery often label their products intelligent. However, at the current stage of development, this is merely marketing. Substantial research is needed to make contemporary machines intelligent. Although the technology does not yet exist in its final form, many are already pondering the possible positive and negative impacts of robots and artificial intelligence.

On the one hand, with artificial intelligence and fully autonomous robots, organizations will be able to optimize their spending and increase the speed of development and production of their commodities. On the other hand, employees are concerned that they will be laid off because their responsibilities might be taken over by machinery. Outside of the organizational context, artificial intelligence and robots are likely to provide additional comfort and convenience to people in their personal lives. This paper explores the benefits and disadvantages of robots and AI in the context of business, the job market, and society.

Artificial intelligence and robots can bring many benefits to organizations, mainly due to the capacity for extensive automation. However, automation is a vague term, and it is necessary to clearly outline which aspects of organizational processes can be automated. On the other hand, there are concerns about security and ethics. Furthermore, AI development, due to its novelty, remains one of the most expensive areas of research.

Positive Effects

Customer relations is one of the most critical areas for every organization. Currently, replying to emails, answering chat messages and phone calls, and resolving client issues require trained personnel. At the same time, companies collect enormous amounts of customer data that are of no use unless applied to solve problems. Artificial intelligence and robots may solve this issue by analyzing the vast array of data and learning to respond to customer inquiries (Ransbotham, Kiron, Gerbert, & Reeves, 2017). Not only may this lead to a reduction in the number of customer service agents, but it may also lead to a more pleasant client experience. That is because, while one human specialist can handle only one person at a time, a software program can handle thousands of requests simultaneously.

To extract any meaning from terabytes of semi-structured and unstructured information, companies’ data specialists need to work tirelessly and for considerable amounts of time. Artificial intelligence can automate these data mining tasks: new data is analyzed immediately after being added to databases, and the autonomous program automatically scans for patterns and anomalies (von Krogh, 2018). The technology may be used to discover insights and gain a competitive advantage in the market.
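As a rough illustration of what scanning newly added data for anomalies can mean in practice, the sketch below flags incoming values that lie far from the historical average. It is a minimal example under stated assumptions, not a method described in the cited source: the function name `flag_anomalies`, the z-score rule, the threshold of 3, and the sample order counts are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_values, threshold=3.0):
    """Return the new values whose z-score against the history exceeds the threshold.

    Hypothetical helper for illustration only; production systems typically
    use far richer models than a single z-score rule.
    """
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        # A constant history: anything different from it counts as unusual.
        return [v for v in new_values if v != mu]
    return [v for v in new_values if abs(v - mu) / sigma > threshold]


# Made-up daily order counts already stored in the database.
daily_orders = [102, 98, 101, 97, 103, 99, 100, 100]
# Made-up values that have just been added.
incoming = [101, 250, 96]

print(flag_anomalies(daily_orders, incoming))  # prints [250]
```

Here the history has a mean of 100 and a sample standard deviation of 2, so only the value 250 is flagged. The point of the sketch is that the check runs on each new batch as it arrives, without a specialist inspecting the data by hand.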

AI-powered robots may replace humans in some areas of a company’s operations. For instance, some hotels are using such robots to automate check-ins and check-outs and to provide a more convenient customer experience through a 24/7 support service (Wirtz, 2019). Operational automation is also possible in manufacturing facilities where strict temperature levels must be maintained (Wirtz, 2019). Stock refilling is a potential use case for stores and restaurants. Although not everything can be automated, a substantial portion of companies’ activities can be run through the use of intelligent robot systems.

Administrative tasks can also be eased with the help of artificial intelligence. For instance, current use cases include aiding the recruitment department (Hughes, Robert, Frady, & Arroyos, 2019). An intelligent software system can automatically analyze thousands of resumes and filter out those that are not suitable (Hughes et al., 2019). There are several benefits of an automated recruitment process: substantial financial resources are saved because there is no need to hire a recruitment agency, and all applications will be considered objectively, without bias or discrimination.

The recruitment process is not the only human resources function an intelligent software system may help with. Organizations are often challenged by the need to schedule workers according to workload (Hughes et al., 2019). HR managers also need to consider which workers work well together and which task needs which employee. Artificial intelligence may automate many of these responsibilities: it can assign more workers to a particular shift when more customers are expected and choose employees who work together more effectively than others (Hughes et al., 2019). Both organizations and employees benefit from such functions, because companies will have optimized scheduling and workers will be more satisfied because of more productive relationships.
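The idea of assigning more workers to a shift when more customers are expected can be sketched in a few lines. This is only an illustrative assumption about how such a rule might look, not the approach of any system discussed in the cited source: the function `workers_needed`, the capacity of 25 customers per worker, and the forecast figures are hypothetical.

```python
import math


def workers_needed(expected_customers, customers_per_worker, minimum_staff=1):
    """Estimate staffing for one shift from a customer forecast.

    Hypothetical rule: round up so that no worker is expected to serve
    more than customers_per_worker people, and never go below minimum_staff.
    """
    return max(minimum_staff, math.ceil(expected_customers / customers_per_worker))


# Made-up forecast of customers per shift.
forecast = {"morning": 40, "lunch": 130, "evening": 75}

schedule = {
    shift: workers_needed(customers, customers_per_worker=25)
    for shift, customers in forecast.items()
}
print(schedule)  # prints {'morning': 2, 'lunch': 6, 'evening': 3}
```

A real scheduling system would also have to weigh which employees work well together, availability, and labor rules; the sketch covers only the workload part of the problem.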

Adverse Impacts

Despite many benefits, there are also limitations of artificial intelligence and robotics. The technology relies on the availability of data, and often such information is unstructured, of poor quality, and inconsistent (Webber, Detjen, MacLean, & Thomas, 2019). Therefore, it is challenging for a company with no access to a large pool of data to develop an intelligent system. Currently, only companies such as Google, Facebook, Uber, and Apple, which gather terabytes of data each minute, have the capacity to build sophisticated and useful AI-powered systems.

Any company that is planning to adopt AI and robotics to achieve new business objectives should be ready for high expenditures. Because of a shortage of skilled professionals who are able to develop and operate reliable AI solutions, the cost of producing the required software system is high. Such a situation makes AI a prerogative of rich companies and puts it virtually out of reach for those that only want to try the technology to see whether it is suitable at the moment.

For the majority of workers, their managers and supervisors are the sources of mentorship and advice. A recent study suggests that robots can also serve as a source of guidance because the majority of employees trust robots more than their managers (Brougham & Haar, 2018). The primary advantage of robot managers over their human counterparts is that they provide unbiased and objective advice. Besides, robots are able to work around the clock, which allows employees to get answers to their questions much sooner than they do now.

As stated earlier in the paper, artificial intelligence and robots can contribute significantly to the recruitment process with unbiased assistance. This is beneficial not only to enterprises but also to employees because they will have an equal opportunity to get the job (Hughes et al., 2019). Also, recommendation systems may allow people with little or no experience to be recognized by companies (Hughes et al., 2019). Traditional barriers will cease to exist if hiring managers start to depend heavily on intelligent systems.

One significant benefit of robots over humans is that they are never physically tired. This attribute can prove especially beneficial if robots are used to aid people with tedious and repetitive tasks (Cesta, Cortellessa, Orlandini, & Umbrico, 2018). However, for this approach to work, companies need to consider robots not as an eventual replacement for human employees but as their colleagues. In such a scenario, human workers deal with unpredictable and non-trivial tasks, while robots relieve them of repetitive tasks and duties that may cause physical harm.

Robots powered by artificial intelligence have the potential to become effective team builders. There are efforts to build a system that accepts responses and commentaries from team members and gives targeted feedback, which may be used to enhance the relationship between team members (Webber et al., 2019). The system can also be used at a different stage: when forming new teams, it may carefully inspect the available data and recommend which employees will be most effective together considering their skill sets (Webber et al., 2019). While AI cannot become a replacement for human involvement in team building activities, it can positively influence groups through systematic interventions.

Despite many positive effects, artificial intelligence and robots may prove highly detrimental to human employment. Because so many tasks can be automated, robots and AI may replace humans in many areas of activity. For instance, with the emergence of autonomous vehicles, drivers may lose their jobs. The list of jobs at risk of being eliminated by robots is long. It includes support specialists, proofreaders, receptionists, machinery operators, factory workers, taxi and bus drivers, soldiers, and farmers (Brougham & Haar, 2018).

Some claim that, while taking away many opportunities from people, artificial intelligence and robots will create other jobs that humans will need to occupy (Brougham & Haar, 2018). However, skeptics state that artificial intelligence will harm the middle class and increase the gap between highly skilled employees and regular workers (Brougham & Haar, 2018). AI is only an emerging technology, but employees and companies will need to be ready for its adverse influences.

Society has been significantly influenced by technology, and this trend will continue as artificial intelligence and robots get more sophisticated. As progress is made in the field of AI and robotics, the technology will blend into people’s lives, and it will become challenging to distinguish between what is a technology and what is not (Helbing, 2019). This uniform integration has many benefits, such as convenience and comfort. However, because technology is power, some critics claim that people will need to view these advancements from the standpoint of citizens, not consumers (Helbing, 2019).

Artificial intelligence relies heavily on the data people generate in order to train and provide better results (Helbing, 2019). As the sole owners of their personal data, people will need to be able to control how this data is used and for what purposes. In the wrong hands or under a corrupt system, this information may be used to influence citizens (Helbing, 2019). Therefore, it is reasonable to claim that, as artificial intelligence and robots get more advanced, members of society will strive for more transparency in how their personal data is used.

There are three recommendations worth making, and each one of them relates to one potential effect of artificial intelligence and robots. There is a widespread belief that intelligent systems will eventually replace human beings in many industries and jobs (Brougham & Haar, 2018). Not only will this have a detrimental effect on those who lose their jobs, but it will also harm society’s current structure. One way of mitigating these consequences is to design robots and AI not to replace human employees but to assist them in their work and increase their productivity.

In the contemporary world, people produce enormous amounts of data, which is collected both by the government and by private companies. Current laws require enterprises to use the personal data of their customers in such a way that their private information is not exposed to third parties (Helbing, 2019).

As artificial intelligence becomes more developed, current laws may become obsolete. The government should require companies to be much more transparent about how the data is used. Furthermore, the government should require companies to undertake security measures so that personal information is not used by an intelligent system to cause harm to people. The relatively recent case of Cambridge Analytica shows how the public can be manipulated if personal data ends up in the wrong hands. Public awareness of the implications of AI and robots should also be increased.

It is already known that artificial intelligence and robotics are the next chapters in the history of digital technology. Present versions of artificial intelligence have had partial success in identifying and treating cancer, predicting the weather, analyzing images from cameras and other sensors to drive a car autonomously, and much more. Organizations and businesses are the first to utilize the technology to maximize their profits and minimize their expenditure while keeping the quality of products and services at the highest levels. There are many benefits of the technology, including significant automation in many areas of organizational activity and employee assistance.

People, however, should also remember the downsides – many people are likely to lose their jobs, and companies need to make substantial investments before artificial intelligence and robots are entirely usable. To mitigate some of the adverse consequences, companies will need to think about using AI and robots to assist employees and not to replace them. The government should also be involved – it must ensure that personal data of customers is safe. Efforts should also be made to increase public awareness about the implications of artificial intelligence and robots.

Brougham, D., & Haar, J. (2018). Smart technology, artificial intelligence, robotics, and algorithms (STARA): Employees’ perceptions of our future workplace. Journal of Management & Organization, 24(2), 239-257.

Cesta, A., Cortellessa, G., Orlandini, A., & Umbrico, A. (2018). Towards flexible assistive robots using artificial intelligence. Web.

Helbing, D. (2019). Towards digital enlightenment. Cham, Switzerland: Springer International Publishing.

Hughes, C., Robert, L., Frady, K., & Arroyos, A. (2019). Managing technology and middle- and low-skilled employees. Bingley, UK: Emerald Group Publishing.

Ransbotham, S., Kiron, D., Gerbert, P., & Reeves, M. (2017). Reshaping business with artificial intelligence: Closing the gap between ambition and action. MIT Sloan Management Review, 59(1).

von Krogh, G. (2018). Artificial intelligence in organizations: New opportunities for phenomenon-based theorizing. Academy of Management Discoveries, 4(4), 404-409.

Webber, S. S., Detjen, J., MacLean, T. L., & Thomas, D. (2019). Team challenges: Is artificial intelligence the solution? Business Horizons, 62(6), 741-750.

Wirtz, J. (2019). Organizational ambidexterity: Cost-effective service excellence, service robots, and artificial intelligence. Web.

IvyPanda. (2024, March 24). Robots and Artificial Intelligence. https://ivypanda.com/essays/robots-and-artificial-intelligence/

These 5 robots could soon become part of our everyday lives

Recent advances in artificial intelligence (AI) are leading to the emergence of a new class of robot. Image: Quartz

Pieter Abbeel

- Recent advances in artificial intelligence (AI) are leading to the emergence of a new class of robot.

- In the next five years, our households and workplaces will become dependent upon the role of robots, says Pieter Abbeel, the founder of UC Berkeley Robot Learning Lab.

- Here he outlines a few standout examples.

People often ask me about the real-life potential for inhumane, merciless systems like HAL 9000 or the Terminator to destroy our society.

Growing up in Belgium and away from Hollywood, my initial impressions of robots were not so violent. In retrospect, my early positive associations with robots likely fueled my drive to build machines to make our everyday lives more enjoyable. Robots working alongside humans to manage day-to-day mundane tasks was a world I wanted to help create.

Now, many years later, after emigrating to the United States, finishing my PhD under Andrew Ng, starting the Berkeley Robot Learning Lab, and co-founding Covariant, I’m convinced that robots are becoming sophisticated enough to be the allies and helpful teammates that I hoped for as a child.

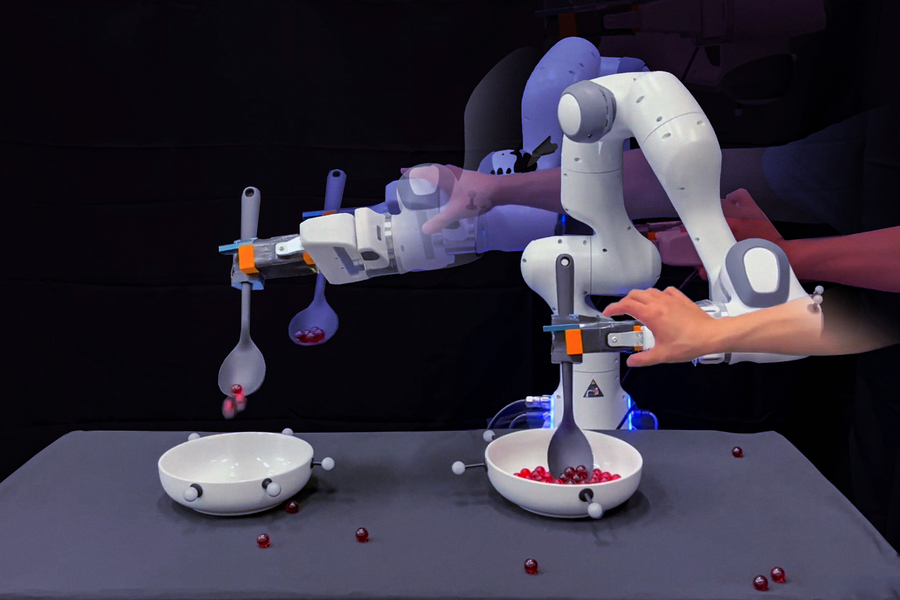

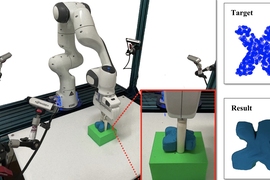

Recent advances in artificial intelligence (AI) are leading to the emergence of a new class of robot. These are machines that go beyond the traditional bots running preprogrammed motions; these are robots that can see, learn, think, and react to their surroundings.

While we may not personally witness or interact with robots directly in our daily lives, there will be a day over the next five years in which our households and workplaces are dependent upon the role of robots to run smoothly. Here are a few standout examples, drawn from some of my guests on The Robot Brains Podcast.

Robots that deliver medical supplies to extremely remote places

After spending months in Africa and South America talking to medical and disaster relief providers, Keenan Wyrobek foresaw how AI-powered drone technology could make a positive impact. He started Zipline, which provides drones to handle important and dangerous deliveries. Now shipping one ton of products a day, the company is helping communities in need by using robots to accomplish critical deliveries (they’re even delivering in parts of the US).

Robots that automate recycling

Recycling is one of the most important activities we can do for a healthier planet. However, it’s a massive undertaking. Consider that each human being produces almost 5 lbs of waste a day and there are 7.8 billion of us. The real challenge comes in with second sorting—the separation process applied once the easy-to-sort materials have been filtered. Matanya Horowitz sat down with me to explain how AMP Robotics helps facilities across the globe save and reuse valuable materials that are worth billions of dollars but were traditionally lost to landfills.

Robots that handle dangerous, repetitive warehouse tasks

Marc Segura of ABB, a robotics firm started in 1988, shared real stories from warehouses across the globe in which robots are managing jobs that have high accident rates or long-term health consequences for humans. With robots that are strong enough to lift one-ton cars with just one arm, and other robots that can build delicate computer chips (a task that can cause long-term vision impairments for a person), there is a whole range of machines handling tasks not fit for humans.

Robots to help nurses on the frontlines

Long before COVID-19 started calling our attention to how overworked healthcare workers are, Andrea Thomaz of Diligent Robotics noticed the issue. She spoke with me about the inspiration for designing Moxi, a nurse helper. Now being used in Dallas hospitals, the robots help clinical staff with tasks that don’t involve interacting with patients. Nurses have reported lowered stress levels as mundane errands like supply stocking are automatically handled. Moxi is even adding a bit of cheer to patients’ days.

Robots that run indoor farms

Picking and sorting the harvest is the most time-sensitive and time-consuming task on a farm. Getting it right can make a massive difference to the crop’s return. I got the chance to speak with AppHarvest’s Josh Lessing, who built the world’s first “cross-crop” AI, Virgo, that learned how to pick all different types of produce. Virgo can switch between vastly different shapes, densities, and growth scenarios, meaning one day it can pick tomatoes, the next cucumbers, and after that, strawberries. Virgo currently operates at the AppHarvest greenhouses in Kentucky to grow non-GMO, chemical-free produce.

The robot future has already begun

Collaborating with software-driven co-workers is no longer the future; it’s now. Perhaps you’ve already seen some examples. You’ll be seeing a lot more in the decade to come.

Pieter Abbeel is the director of the Berkeley Robot Learning Lab and a co-founder of Covariant, an AI robotics firm. Subscribe to his podcast wherever you like to listen.

License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.

The present and future of AI

Finale Doshi-Velez on how AI is shaping our lives and how we can shape AI

Finale Doshi-Velez, the John L. Loeb Professor of Engineering and Applied Sciences. (Photo courtesy of Eliza Grinnell/Harvard SEAS)

How has artificial intelligence changed and shaped our world over the last five years? How will AI continue to impact our lives in the coming years? Those were the questions addressed in the most recent report from the One Hundred Year Study on Artificial Intelligence (AI100), an ongoing project hosted at Stanford University, that will study the status of AI technology and its impacts on the world over the next 100 years.

The 2021 report is the second in a series that will be released every five years until 2116. Titled “Gathering Strength, Gathering Storms,” the report explores the various ways AI is increasingly touching people’s lives in settings that range from movie recommendations and voice assistants to autonomous driving and automated medical diagnoses.

Barbara Grosz, the Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is a member of the standing committee overseeing the AI100 project, and Finale Doshi-Velez, Gordon McKay Professor of Computer Science, is part of the panel of interdisciplinary researchers who wrote this year’s report.

We spoke with Doshi-Velez about the report, what it says about the role AI is currently playing in our lives, and how it will change in the future.

Q: Let's start with a snapshot: What is the current state of AI and its potential?

Doshi-Velez: Some of the biggest changes in the last five years have been how well AIs now perform in large data regimes on specific types of tasks. We've seen [DeepMind’s] AlphaZero become the best Go player entirely through self-play, and everyday uses of AI such as grammar checks and autocomplete, automatic personal photo organization and search, and speech recognition become commonplace for large numbers of people.

In terms of potential, I'm most excited about AIs that might augment and assist people. They can be used to drive insights in drug discovery, help with decision making such as identifying a menu of likely treatment options for patients, and provide basic assistance, such as lane keeping while driving or text-to-speech based on images from a phone for the visually impaired. In many situations, people and AIs have complementary strengths. I think we're getting closer to unlocking the potential of people and AI teams.

Q: Over the course of 100 years, these reports will tell the story of AI and its evolving role in society. Even though there have only been two reports, what's the story so far?

There's actually a lot of change even in five years. The first report is fairly rosy. For example, it mentions how algorithmic risk assessments may mitigate the human biases of judges. The second has a much more mixed view. I think this comes from the fact that as AI tools have come into the mainstream — both in higher stakes and everyday settings — we are appropriately much less willing to tolerate flaws, especially discriminatory ones. There's also been questions of information and disinformation control as people get their news, social media, and entertainment via searches and rankings personalized to them. So, there's a much greater recognition that we should not be waiting for AI tools to become mainstream before making sure they are ethical.

Q: What is the responsibility of institutes of higher education in preparing students and the next generation of computer scientists for the future of AI and its impact on society?

First, I'll say that the need to understand the basics of AI and data science starts much earlier than higher education! Children are being exposed to AIs as soon as they click on videos on YouTube or browse photo albums. They need to understand aspects of AI such as how their actions affect future recommendations.

But for computer science students in college, I think a key thing that future engineers need to realize is when to demand input and how to talk across disciplinary boundaries to get at often difficult-to-quantify notions of safety, equity, fairness, etc. I'm really excited that Harvard has the Embedded EthiCS program to provide some of this education. Of course, this is in addition to standard good engineering practices like building robust models, validating them, and so forth, which is all a bit harder with AI.

Q: Your work focuses on machine learning with applications to healthcare, which is also an area of focus of this report. What is the state of AI in healthcare?

A lot of AI in healthcare has been on the business end, used for optimizing billing, scheduling surgeries, that sort of thing. When it comes to AI for better patient care, which is what we usually think about, there are few legal, regulatory, and financial incentives to do so, and many disincentives. Still, there's been slow but steady integration of AI-based tools, often in the form of risk scoring and alert systems.

In the near future, two applications that I'm really excited about are triage in low-resource settings — having AIs do initial reads of pathology slides, for example, if there are not enough pathologists, or get an initial check of whether a mole looks suspicious — and ways in which AIs can help identify promising treatment options for discussion with a clinician team and patient.

Q: Any predictions for the next report?

I'll be keen to see where currently nascent AI regulation initiatives have gotten to. Accountability is such a difficult question in AI, it's tricky to nurture both innovation and basic protections. Perhaps the most important innovation will be in approaches for AI accountability.

Robotics and artificial intelligence

Intelligent machines could shape the future of science and society.

Updated 27 March 2024


At the end of the twentieth century, computing was transformed from the preserve of laboratories and industry to a ubiquitous part of everyday life. We are now living through the early stages of a similarly rapid revolution in robotics and artificial intelligence — and the effect on society could be just as enormous.

This collection will be updated throughout 2024, with stories from journalists and research from across the Nature Portfolio journals. Check back throughout the year for the latest additions, or sign up to Nature Briefing: AI and Robotics to receive weekly email updates on this collection and other goings-on in AI and robotics.

Original journalism from Nature.

Robot, repair thyself: laying the foundations for self-healing machines

Advances in materials science and sensing could deliver robots that can mend themselves and feel pain. By Simon Makin

29 February 2024

This cyborg cockroach could be the future of earthquake search and rescue

From drivable bionic animals to machines made from muscle, biohybrid robots are on their way to a variety of uses. By Liam Drew

7 December 2023

How robots can learn to follow a moral code

Ethical artificial intelligence aims to impart human values on machine-learning systems. By Neil Savage

26 October 2023

A test of artificial intelligence

With debate raging over the abilities of modern AI systems, scientists are struggling to effectively assess machine intelligence. By Michael Eisenstein

14 September 2023

Robots need better batteries

As mobile machines travel further from the grid, they'll need lightweight and efficient power sources. By Jeff Hecht

29 June 2023

Synthetic data could be better than real data

Machine-generated data sets have the potential to improve privacy and representation in artificial intelligence, if researchers can find the right balance between accuracy and fakery. By Neil Savage

27 April 2023

Why artificial intelligence needs to understand consequences

A machine with a grasp of cause and effect could learn more like a human, through imagination and regret. By Neil Savage

24 February 2023

Abandoned: The human cost of neurotechnology failure

When the makers of electronic implants abandon their projects, people who rely on the devices have everything to lose. By Liam Drew

6 December 2022

Bioinspired robots walk, swim, slither and fly

Engineers look to nature for ideas on how to make robots move through the world. By Neil Savage

29 September 2022

Learning over a lifetime

Artificial-intelligence researchers turn to lifelong learning in the hopes of making machine intelligence more adaptable. By Neil Savage

20 July 2022

Teaching robots to touch

Robots have become increasingly adept at interacting with the world around them. But to fulfil their potential, they also need a sense of touch. By Marcus Woo

26 May 2022

Miniature medical robots step out from sci-fi

Tiny machines that deliver therapeutic payloads to precise locations in the body are the stuff of science fiction. But some researchers are trying to turn them into a clinical reality. By Anthony King

29 March 2022

Breaking into the black box of artificial intelligence

Scientists are finding ways to explain the inner workings of complex machine-learning models. By Neil Savage


Nature is pleased to acknowledge financial support from FII Institute in producing this Outlook supplement. Nature maintains full independence in all editorial decisions related to the content. About this content.




Ethics of Artificial Intelligence and Robotics

Artificial intelligence (AI) and robotics are digital technologies that will have significant impact on the development of humanity in the near future. They have raised fundamental questions about what we should do with these systems, what the systems themselves should do, what risks they involve, and how we can control these.

After the Introduction to the field (§1), the main themes (§2) of this article are: Ethical issues that arise with AI systems as objects, i.e., tools made and used by humans. This includes issues of privacy (§2.1) and manipulation (§2.2), opacity (§2.3) and bias (§2.4), human-robot interaction (§2.5), employment (§2.6), and the effects of autonomy (§2.7). Then AI systems as subjects, i.e., ethics for the AI systems themselves in machine ethics (§2.8) and artificial moral agency (§2.9). Finally, the problem of a possible future AI superintelligence leading to a “singularity” (§2.10). We close with a remark on the vision of AI (§3).

For each section within these themes, we provide a general explanation of the ethical issues, outline existing positions and arguments, then analyse how these play out with current technologies and finally, what policy consequences may be drawn.

1. Introduction

1.1 Background of the Field

The ethics of AI and robotics is often focused on “concerns” of various sorts, which is a typical response to new technologies. Many such concerns turn out to be rather quaint (trains are too fast for souls); some are predictably wrong when they suggest that the technology will fundamentally change humans (telephones will destroy personal communication, writing will destroy memory, video cassettes will make going out redundant); some are broadly correct but moderately relevant (digital technology will destroy industries that make photographic film, cassette tapes, or vinyl records); but some are broadly correct and deeply relevant (cars will kill children and fundamentally change the landscape). The task of an article such as this is to analyse the issues and to deflate the non-issues.

Some technologies, like nuclear power, cars, or plastics, have caused ethical and political discussion and significant policy efforts to control the trajectory of these technologies, usually only once some damage is done. In addition to such “ethical concerns”, new technologies challenge current norms and conceptual systems, which is of particular interest to philosophy. Finally, once we have understood a technology in its context, we need to shape our societal response, including regulation and law. All these features also exist in the case of new AI and Robotics technologies, plus the more fundamental fear that they may end the era of human control on Earth.

The ethics of AI and robotics has seen significant press coverage in recent years, which supports related research, but also may end up undermining it: the press often talks as if the issues under discussion were just predictions of what future technology will bring, and as though we already know what would be most ethical and how to achieve that. Press coverage thus focuses on risk, security (Brundage et al. 2018, in the Other Internet Resources section below, hereafter [OIR]), and prediction of impact (e.g., on the job market). The result is a discussion of essentially technical problems that focus on how to achieve a desired outcome. Current discussions in policy and industry are also motivated by image and public relations, where the label “ethical” is really not much more than the new “green”, perhaps used for “ethics washing”. For a problem to qualify as a problem for AI ethics would require that we do not readily know what the right thing to do is. In this sense, job loss, theft, or killing with AI is not a problem in ethics, but whether these are permissible under certain circumstances is a problem. This article focuses on the genuine problems of ethics where we do not readily know what the answers are.

A last caveat: The ethics of AI and robotics is a very young field within applied ethics, with significant dynamics, but few well-established issues and no authoritative overviews—though there is a promising outline (European Group on Ethics in Science and New Technologies 2018) and there are beginnings on societal impact (Floridi et al. 2018; Taddeo and Floridi 2018; S. Taylor et al. 2018; Walsh 2018; Bryson 2019; Gibert 2019; Whittlestone et al. 2019), and policy recommendations (AI HLEG 2019 [OIR]; IEEE 2019). So this article cannot merely reproduce what the community has achieved thus far, but must propose an ordering where little order exists.

1.2 AI & Robotics

The notion of “artificial intelligence” (AI) is understood broadly as any kind of artificial computational system that shows intelligent behaviour, i.e., complex behaviour that is conducive to reaching goals. In particular, we do not wish to restrict “intelligence” to what would require intelligence if done by humans, as Minsky had suggested (1985). This means we incorporate a range of machines, including those in “technical AI”, that show only limited abilities in learning or reasoning but excel at the automation of particular tasks, as well as machines in “general AI” that aim to create a generally intelligent agent.

AI somehow gets closer to our skin than other technologies—thus the field of “philosophy of AI”. Perhaps this is because the project of AI is to create machines that have a feature central to how we humans see ourselves, namely as feeling, thinking, intelligent beings. The main purposes of an artificially intelligent agent probably involve sensing, modelling, planning and action, but current AI applications also include perception, text analysis, natural language processing (NLP), logical reasoning, game-playing, decision support systems, data analytics, predictive analytics, as well as autonomous vehicles and other forms of robotics (P. Stone et al. 2016). AI may involve any number of computational techniques to achieve these aims, be that classical symbol-manipulating AI, inspired by natural cognition, or machine learning via neural networks (Goodfellow, Bengio, and Courville 2016; Silver et al. 2018).

Historically, it is worth noting that the term “AI” was used as above ca. 1950–1975, then came into disrepute during the “AI winter”, ca. 1975–1995, and narrowed. As a result, areas such as “machine learning”, “natural language processing” and “data science” were often not labelled as “AI”. Since ca. 2010, the use has broadened again, and at times almost all of computer science and even high-tech is lumped under “AI”. Now it is a name to be proud of, a booming industry with massive capital investment (Shoham et al. 2018), and on the edge of hype again. As Erik Brynjolfsson noted, it may allow us to

virtually eliminate global poverty, massively reduce disease and provide better education to almost everyone on the planet. (quoted in Anderson, Rainie, and Luchsinger 2018)

While AI can be entirely software, robots are physical machines that move. Robots are subject to physical impact, typically through “sensors”, and they exert physical force onto the world, typically through “actuators”, like a gripper or a turning wheel. Accordingly, autonomous cars or planes are robots, and only a minuscule portion of robots is “humanoid” (human-shaped), like in the movies. Some robots use AI, and some do not: Typical industrial robots blindly follow completely defined scripts with minimal sensory input and no learning or reasoning (around 500,000 such new industrial robots are installed each year (IFR 2019 [OIR])). It is probably fair to say that while robotics systems cause more concerns in the general public, AI systems are more likely to have a greater impact on humanity. Also, AI or robotics systems for a narrow set of tasks are less likely to cause new issues than systems that are more flexible and autonomous.

Robotics and AI can thus be seen as covering two overlapping sets of systems: systems that are only AI, systems that are only robotics, and systems that are both. We are interested in all three; the scope of this article is thus not only the intersection, but the union, of both sets.

1.3 A Note on Policy

Policy is only one of the concerns of this article. There is significant public discussion about AI ethics, and there are frequent pronouncements from politicians that the matter requires new policy, which is easier said than done: Actual technology policy is difficult to plan and enforce. It can take many forms, from incentives and funding, infrastructure, taxation, or good-will statements, to regulation by various actors, and the law. Policy for AI will possibly come into conflict with other aims of technology policy or general policy. Governments, parliaments, associations, and industry circles in industrialised countries have produced reports and white papers in recent years, and some have generated good-will slogans (“trusted/responsible/humane/human-centred/good/beneficial AI”), but is that what is needed? For a survey, see Jobin, Ienca, and Vayena (2019) and V. Müller’s list of PT-AI Policy Documents and Institutions.

For people who work in ethics and policy, there might be a tendency to overestimate the impact and threats from a new technology, and to underestimate how far current regulation can reach (e.g., for product liability). On the other hand, there is a tendency for businesses, the military, and some public administrations to “just talk” and do some “ethics washing” in order to preserve a good public image and continue as before. Actually implementing legally binding regulation would challenge existing business models and practices. Actual policy is not just an implementation of ethical theory, but subject to societal power structures, and the agents that do have the power will push against anything that restricts them. There is thus a significant risk that regulation will remain toothless in the face of economic and political power.

Though very little actual policy has been produced, there are some notable beginnings: The latest EU policy document suggests “trustworthy AI” should be lawful, ethical, and technically robust, and then spells this out as seven requirements: human oversight, technical robustness, privacy and data governance, transparency, fairness, well-being, and accountability (AI HLEG 2019 [OIR]). Much European research now runs under the slogan of “responsible research and innovation” (RRI), and “technology assessment” has been a standard field since the advent of nuclear power. Professional ethics is also a standard field in information technology, and this includes issues that are relevant in this article. Perhaps a “code of ethics” for AI engineers, analogous to the codes of ethics for medical doctors, is an option here (Véliz 2019). What data science itself should do is addressed in (L. Taylor and Purtova 2019). We also expect that much policy will eventually cover specific uses or technologies of AI and robotics, rather than the field as a whole. A useful summary of an ethical framework for AI is given in (European Group on Ethics in Science and New Technologies 2018: 13ff). On general AI policy, see Calo (2018) as well as Crawford and Calo (2016); Stahl, Timmermans, and Mittelstadt (2016); Johnson and Verdicchio (2017); and Giubilini and Savulescu (2018). A more political angle of technology is often discussed in the field of “Science and Technology Studies” (STS). As books like The Ethics of Invention (Jasanoff 2016) show, concerns in STS are often quite similar to those in ethics (Jacobs et al. 2019 [OIR]). In this article, we discuss the policy for each type of issue separately rather than for AI or robotics in general.

2. Main Debates

In this section we outline the ethical issues of human use of AI and robotics systems that can be more or less autonomous—which means we look at issues that arise with certain uses of the technologies which would not arise with others. It must be kept in mind, however, that technologies will always cause some uses to be easier, and thus more frequent, and hinder other uses. The design of technical artefacts thus has ethical relevance for their use (Houkes and Vermaas 2010; Verbeek 2011), so beyond “responsible use”, we also need “responsible design” in this field. The focus on use does not presuppose which ethical approaches are best suited for tackling these issues; they might well be virtue ethics (Vallor 2017) rather than consequentialist or value-based (Floridi et al. 2018). This section is also neutral with respect to the question whether AI systems truly have “intelligence” or other mental properties: It would apply equally well if AI and robotics are merely seen as the current face of automation (cf. Müller forthcoming-b).

2.1 Privacy & Surveillance

There is a general discussion about privacy and surveillance in information technology (e.g., Macnish 2017; Roessler 2017), which mainly concerns the access to private data and data that is personally identifiable. Privacy has several well recognised aspects, e.g., “the right to be let alone”, information privacy, privacy as an aspect of personhood, control over information about oneself, and the right to secrecy (Bennett and Raab 2006). Privacy studies have historically focused on state surveillance by secret services but now include surveillance by other state agents, businesses, and even individuals. The technology has changed significantly in the last decades while regulation has been slow to respond (though there is the Regulation (EU) 2016/679)—the result is a certain anarchy that is exploited by the most powerful players, sometimes in plain sight, sometimes in hiding.

The digital sphere has widened greatly: All data collection and storage is now digital, our lives are increasingly digital, most digital data is connected to a single Internet, and there is more and more sensor technology in use that generates data about non-digital aspects of our lives. AI increases both the possibilities of intelligent data collection and the possibilities for data analysis. This applies to blanket surveillance of whole populations as well as to classic targeted surveillance. In addition, much of the data is traded between agents, usually for a fee.

At the same time, controlling who collects which data, and who has access, is much harder in the digital world than it was in the analogue world of paper and telephone calls. Many new AI technologies amplify the known issues. For example, face recognition in photos and videos allows identification and thus profiling and searching for individuals (Whittaker et al. 2018: 15ff). This continues using other techniques for identification, e.g., “device fingerprinting”, which are commonplace on the Internet (sometimes revealed in the “privacy policy”). The result is that “In this vast ocean of data, there is a frighteningly complete picture of us” (Smolan 2016: 1:01). The result is arguably a scandal that still has not received due public attention.

The data trail we leave behind is how our “free” services are paid for—but we are not told about that data collection and the value of this new raw material, and we are manipulated into leaving ever more such data. For the “big 5” companies (Amazon, Google/Alphabet, Microsoft, Apple, Facebook), the main data-collection part of their business appears to be based on deception, exploiting human weaknesses, furthering procrastination, generating addiction, and manipulation (Harris 2016 [OIR]). The primary focus of social media, gaming, and most of the Internet in this “surveillance economy” is to gain, maintain, and direct attention—and thus data supply. “Surveillance is the business model of the Internet” (Schneier 2015). This surveillance and attention economy is sometimes called “surveillance capitalism” (Zuboff 2019). It has caused many attempts to escape from the grasp of these corporations, e.g., in exercises of “minimalism” (Newport 2019), sometimes through the open source movement, but it appears that present-day citizens have lost the degree of autonomy needed to escape while fully continuing with their life and work. We have lost ownership of our data, if “ownership” is the right relation here. Arguably, we have lost control of our data.

These systems will often reveal facts about us that we ourselves wish to suppress or are not aware of: they know more about us than we know ourselves. Even just observing online behaviour allows insights into our mental states (Burr and Cristianini 2019) and manipulation (see section 2.2 below). This has led to calls for the protection of “derived data” (Wachter and Mittelstadt 2019). With the last sentence of his bestselling book, Homo Deus, Harari asks about the long-term consequences of AI:

What will happen to society, politics and daily life when non-conscious but highly intelligent algorithms know us better than we know ourselves? (2016: 462)

Robotic devices have not yet played a major role in this area, except for security patrolling, but this will change once they are more common outside of industry environments. Together with the “Internet of things”, the so-called “smart” systems (phone, TV, oven, lamp, virtual assistant, home,…), “smart city” (Sennett 2018), and “smart governance”, they are set to become part of the data-gathering machinery that offers more detailed data, of different types, in real time, with ever more information.

Privacy-preserving techniques that can largely conceal the identity of persons or groups are now a standard staple in data science; they include (relative) anonymisation, access control (plus encryption), and other models where computation is carried out with fully or partially encrypted input data (Stahl and Wright 2018); in the case of “differential privacy”, this is done by adding calibrated noise to obscure the output of queries (Dwork et al. 2006; Abowd 2017). While requiring more effort and cost, such techniques can avoid many of the privacy issues. Some companies have also seen better privacy as a competitive advantage that can be leveraged and sold at a price.
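To make the idea of calibrated noise concrete, the following is a minimal sketch of one common way to answer a counting query under differential privacy, the Laplace mechanism. It is an illustration only and is not taken from the works cited above; the function names, the example ages, and the privacy parameter `epsilon` are all assumptions chosen for this sketch.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two independent exponential variables with mean
    # `scale` follows a Laplace distribution centred on zero.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`."""
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1,
    # so the sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 52, 67, 70, 19, 44]  # made-up example data
    rng = random.Random(0)
    # Each call returns a different noisy answer around the true count.
    print(private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger protection for any single person in the data, at the cost of less accurate answers; this is one form of the effort and cost mentioned above.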

One of the major practical difficulties is to actually enforce regulation, both on the level of the state and on the level of the individual who has a claim. They must identify the responsible legal entity, prove the action, perhaps prove intent, find a court that declares itself competent … and eventually get the court to actually enforce its decision. Well-established legal protection of rights such as consumer rights, product liability, and other civil liability or protection of intellectual property rights is often missing in digital products, or hard to enforce. This means that companies with a “digital” background are used to testing their products on the consumers without fear of liability while heavily defending their intellectual property rights. This “Internet Libertarianism” is sometimes taken to assume that technical solutions will take care of societal problems by themselves (Morozov 2013).

2.2 Manipulation of Behaviour

The ethical issues of AI in surveillance go beyond the mere accumulation of data and direction of attention: They include the use of information to manipulate behaviour, online and offline, in a way that undermines autonomous rational choice. Of course, efforts to manipulate behaviour are ancient, but they may gain a new quality when they use AI systems. Given users’ intense interaction with data systems and the deep knowledge about individuals this provides, they are vulnerable to “nudges”, manipulation, and deception. With sufficient prior data, algorithms can be used to target individuals or small groups with just the kind of input that is likely to influence these particular individuals. A “nudge” changes the environment such that it influences behaviour in a predictable way that is positive for the individual, but easy and cheap to avoid (Thaler and Sunstein 2008). There is a slippery slope from here to paternalism and manipulation.

Many advertisers, marketers, and online sellers will use any legal means at their disposal to maximise profit, including exploitation of behavioural biases, deception, and addiction generation (Costa and Halpern 2019 [OIR]). Such manipulation is the business model in much of the gambling and gaming industries, but it is spreading, e.g., to low-cost airlines. In interface design on web pages or in games, this manipulation uses what is called “dark patterns” (Mathur et al. 2019). At this moment, gambling and the sale of addictive substances are highly regulated, but online manipulation and addiction are not—even though manipulation of online behaviour is becoming a core business model of the Internet.

Furthermore, social media is now the prime location for political propaganda. This influence can be used to steer voting behaviour, as in the Facebook-Cambridge Analytica “scandal” (Woolley and Howard 2017; Bradshaw, Neudert, and Howard 2019) and—if successful—it may harm the autonomy of individuals (Susser, Roessler, and Nissenbaum 2019).

Improved AI “faking” technologies make what once was reliable evidence into unreliable evidence—this has already happened to digital photos, sound recordings, and video. It will soon be quite easy to create (rather than alter) “deep fake” text, photos, and video material with any desired content. Soon, sophisticated real-time interaction with persons over text, phone, or video will be faked, too. So we cannot trust digital interactions while we are at the same time increasingly dependent on such interactions.

One more specific issue is that machine learning techniques in AI rely on training with vast amounts of data. This means there will often be a trade-off between privacy and rights to data vs. technical quality of the product. This influences the consequentialist evaluation of privacy-violating practices.

The policy in this field has its ups and downs: Civil liberties and the protection of individual rights are under intense pressure from businesses’ lobbying, secret services, and other state agencies that depend on surveillance. Privacy protection has diminished massively compared to the pre-digital age when communication was based on letters, analogue telephone communications, and personal conversation and when surveillance operated under significant legal constraints.

While the EU General Data Protection Regulation (Regulation (EU) 2016/679) has strengthened privacy protection, the US and China prefer growth with less regulation (Thompson and Bremmer 2018), likely in the hope that this provides a competitive advantage. It is clear that state and business actors have increased their ability to invade privacy and manipulate people with the help of AI technology and will continue to do so to further their particular interests—unless reined in by policy in the interest of general society.

2.3 Opacity of AI Systems

Opacity and bias are central issues in what is now sometimes called “data ethics” or “big data ethics” (Floridi and Taddeo 2016; Mittelstadt and Floridi 2016). AI systems for automated decision support and “predictive analytics” raise “significant concerns about lack of due process, accountability, community engagement, and auditing” (Whittaker et al. 2018: 18ff). They are part of a power structure in which “we are creating decision-making processes that constrain and limit opportunities for human participation” (Danaher 2016b: 245). At the same time, it will often be impossible for the affected person to know how the system came to this output, i.e., the system is “opaque” to that person. If the system involves machine learning, it will typically be opaque even to the expert, who will not know how a particular pattern was identified, or even what the pattern is. Bias in decision systems and data sets is exacerbated by this opacity. So, at least in cases where there is a desire to remove bias, the analysis of opacity and bias go hand in hand, and political response has to tackle both issues together.

Many AI systems rely on machine learning techniques in (simulated) neural networks that will extract patterns from a given dataset, with or without “correct” solutions provided; i.e., supervised, semi-supervised or unsupervised. With these techniques, the “learning” captures patterns in the data and these are labelled in a way that appears useful to the decision the system makes, while the programmer does not really know which patterns in the data the system has used. In fact, the programs are evolving, so when new data comes in, or new feedback is given (“this was correct”, “this was incorrect”), the patterns used by the learning system change. What this means is that the outcome is not transparent to the user or programmers: it is opaque. Furthermore, the quality of the program depends heavily on the quality of the data provided, following the old slogan “garbage in, garbage out”. So, if the data already involved a bias (e.g., police data about the skin colour of suspects), then the program will reproduce that bias. There are proposals for a standard description of datasets in a “datasheet” that would make the identification of such bias more feasible (Gebru et al. 2018 [OIR]). There is also significant recent literature about the limitations of machine learning systems that are essentially sophisticated data filters (Marcus 2018 [OIR]). Some have argued that the ethical problems of today are the result of technical “shortcuts” AI has taken (Cristianini forthcoming).
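The point that a learning system reproduces bias already present in its training data can be illustrated with a deliberately tiny sketch. This is not a neural network and not a method from the literature cited above: it is a hypothetical frequency-based "model" trained on synthetic, invented records, and the group labels, counts, and function names are assumptions made for illustration.

```python
from collections import defaultdict


def train(records):
    """Learn the majority decision for each (group, qualified) pattern."""
    counts = defaultdict(lambda: [0, 0])  # [rejections, approvals]
    for group, qualified, approved in records:
        counts[(group, qualified)][int(approved)] += 1
    return {pattern: c[1] > c[0] for pattern, c in counts.items()}


def predict(model, group, qualified):
    """Apply the learned pattern; unseen patterns default to rejection."""
    return model.get((group, qualified), False)


# Synthetic history (invented for this sketch): equally qualified
# applicants from group B were approved far less often than group A.
history = (
    [("A", True, True)] * 90 + [("A", True, False)] * 10
    + [("B", True, True)] * 40 + [("B", True, False)] * 60
    + [("A", False, False)] * 50 + [("B", False, False)] * 50
)

model = train(history)

# The model simply mirrors the skew in the historical decisions.
print(predict(model, "A", True))
print(predict(model, "B", True))
```

Nothing in the training step mentions fairness or group membership as a goal; the disparity enters only through the data, which is the sense of "garbage in, garbage out" used above. In a real system with many features the learned patterns would also be far harder to inspect than this small lookup table.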

There are several technical activities that aim at “explainable AI”, starting with (Van Lent, Fisher, and Mancuso 1999; Lomas et al. 2012) and, more recently, a DARPA programme (Gunning 2017 [OIR]). More broadly, the demand for

a mechanism for elucidating and articulating the power structures, biases, and influences that computational artefacts exercise in society (Diakopoulos 2015: 398)

is sometimes called “algorithmic accountability reporting”. This does not mean that we expect an AI to “explain its reasoning”; doing so would require far more serious moral autonomy than we currently attribute to AI systems (see below §2.10).

The politician Henry Kissinger pointed out that there is a fundamental problem for democratic decision-making if we rely on a system that is supposedly superior to humans, but cannot explain its decisions. He says we may have “generated a potentially dominating technology in search of a guiding philosophy” (Kissinger 2018). Danaher (2016b) calls this problem “the threat of algocracy” (adopting the previous use of ‘algocracy’ from Aneesh 2002 [OIR], 2006). In a similar vein, Cave (2019) stresses that we need a broader societal move towards more “democratic” decision-making to avoid AI being a force that leads to a Kafka-style impenetrable suppression system in public administration and elsewhere. The political angle of this discussion has been stressed by O’Neil in her influential book Weapons of Math Destruction (2016), and by Yeung and Lodge (2019).

In the EU, some of these issues have been taken into account with the General Data Protection Regulation (Regulation (EU) 2016/679), which foresees that consumers, when faced with a decision based on data processing, will have a legal “right to explanation”; how far this goes and to what extent it can be enforced is disputed (Goodman and Flaxman 2017; Wachter, Mittelstadt, and Floridi 2016; Wachter, Mittelstadt, and Russell 2017). Zerilli et al. (2019) argue that there may be a double standard here, where we demand a high level of explanation for machine-based decisions despite humans sometimes not reaching that standard themselves.

Automated AI decision support systems and “predictive analytics” operate on data and produce a decision as “output”. This output may range from the relatively trivial to the highly significant: “this restaurant matches your preferences”, “the patient in this X-ray has completed bone growth”, “application to credit card declined”, “donor organ will be given to another patient”, “bail is denied”, or “target identified and engaged”. Data analysis is often used in “predictive analytics” in business, healthcare, and other fields, to foresee future developments; since prediction is easier, it will also become a cheaper commodity. One use of prediction is in “predictive policing” (NIJ 2014 [OIR]), which many fear might lead to an erosion of public liberties (Ferguson 2017) because it can take away power from the people whose behaviour is predicted. It appears, however, that many of the worries about policing depend on futuristic scenarios where law enforcement foresees and punishes planned actions, rather than waiting until a crime has been committed (as in the 2002 film “Minority Report”). One concern is that these systems might perpetuate bias that was already in the data used to set up the system, e.g., by increasing police patrols in an area and discovering more crime in that area. Actual “predictive policing” or “intelligence led policing” techniques mainly concern the question of where and when police forces will be needed most. Also, police officers can be provided with more data, offering them more control and facilitating better decisions, in workflow support software (e.g., “ArcGIS”). Whether this is problematic depends on the appropriate level of trust in the technical quality of these systems, and on the evaluation of the aims of the police work itself. Perhaps a recent paper title points in the right direction here: “AI ethics in predictive policing: From models of threat to an ethics of care” (Asaro 2019).
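The concern that more patrols in an area lead to more recorded crime in that area, which in turn justifies more patrols, can be illustrated with a toy simulation. Everything in it is an assumption made for the sake of the sketch: two districts with identical actual crime, a single patrol per day, and the rule that crime is only recorded where the patrol is present. It does not model any real policing system.

```python
def simulate(recorded, true_rate, days):
    """Send the one available patrol to the district with most recorded crime.

    Crime is only recorded where the patrol is present, so the records
    reflect patrol placement as well as actual crime.
    """
    recorded = list(recorded)
    for _ in range(days):
        # Pick the district that currently looks worst on paper.
        target = max(range(len(recorded)), key=lambda i: recorded[i])
        recorded[target] += true_rate[target]
    return recorded


start = [11, 10]     # hypothetical initial records, nearly identical
true_rate = [1, 1]   # both districts have the same actual crime per day

print(simulate(start, true_rate, days=100))  # [111, 10]
```

Although both districts have the same underlying crime rate, a small initial difference in the records is never corrected: the first district receives every patrol, and the data appears to confirm that allocation.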

Bias typically surfaces when unfair judgments are made because the individual making the judgment is influenced by a characteristic that is actually irrelevant to the matter at hand, typically a discriminatory preconception about members of a group. So, one form of bias is a learned cognitive feature of a person, often not made explicit. The person concerned may not be aware of having that bias—they may even be honestly and explicitly opposed to a bias they are found to have (e.g., through priming, cf. Graham and Lowery 2004). On fairness vs. bias in machine learning, see Binns (2018).

Apart from the social phenomenon of learned bias, the human cognitive system is generally prone to have various kinds of “cognitive biases”, e.g., the “confirmation bias”: humans tend to interpret information as confirming what they already believe. This second form of bias is often said to impede performance in rational judgment (Kahneman 2011), though at least some cognitive biases generate an evolutionary advantage, e.g., economical use of resources for intuitive judgment. There is a question whether AI systems could or should have such cognitive bias.

A third form of bias is present in data when it exhibits systematic error, e.g., “statistical bias”. Strictly, any given dataset will only be unbiased for a single kind of issue, so the mere creation of a dataset involves the danger that it may be used for a different kind of issue, and then turn out to be biased for that kind. Machine learning on the basis of such data would then not only fail to recognise the bias, but codify and automate the “historical bias”. Such historical bias was discovered in an automated recruitment screening system at Amazon (discontinued in early 2017) that discriminated against women, presumably because the company had a history of discriminating against women in the hiring process. The “Correctional Offender Management Profiling for Alternative Sanctions” (COMPAS), a system to predict whether a defendant would re-offend, was found to be as successful (65.2% accuracy) as a group of random humans (Dressel and Farid 2018) and to produce more false positives and fewer false negatives for black defendants. The problem with such systems is thus bias plus humans placing excessive trust in the systems. The political dimensions of such automated systems in the USA are investigated in Eubanks (2018).
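How a predictor can have the same overall accuracy for two groups and still err in different directions for each can be seen from a small calculation. The confusion-matrix counts below are invented for illustration and are not the COMPAS figures; the group names are placeholders.

```python
def error_rates(tp, fp, tn, fn):
    """Compute accuracy and the two error rates from confusion-matrix counts."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        # Share of people who did not re-offend but were flagged as high risk.
        "false_positive_rate": fp / (fp + tn),
        # Share of people who did re-offend but were flagged as low risk.
        "false_negative_rate": fn / (fn + tp),
    }


# Hypothetical counts per group (100 people each).
groups = {
    "group_1": dict(tp=30, fp=25, tn=35, fn=10),
    "group_2": dict(tp=20, fp=10, tn=45, fn=25),
}

rates = {name: error_rates(**counts) for name, counts in groups.items()}
for name, r in rates.items():
    print(name, {k: round(v, 2) for k, v in r.items()})
# group_1: accuracy 0.65, false positives 0.42, false negatives 0.25
# group_2: accuracy 0.65, false positives 0.18, false negatives 0.56
```

In this made-up case the predictor is equally accurate for both groups, yet members of the first group are wrongly flagged as high risk more than twice as often, while members of the second group are more often wrongly flagged as low risk. Accuracy alone therefore does not settle whether a system treats groups alike.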

There are significant technical efforts to detect and remove bias from AI systems, but it is fair to say that these are in early stages: see UK Institute for Ethical AI & Machine Learning (Brownsword, Scotford, and Yeung 2017; Yeung and Lodge 2019). It appears that technological fixes have their limits in that they need a mathematical notion of fairness, which is hard to come by (Whittaker et al. 2018: 24ff; Selbst et al. 2019), as is a formal notion of “race” (see Benthall and Haynes 2019). An institutional proposal is in (Veale and Binns 2017).

Human-robot interaction (HRI) is an academic field in its own right, which now pays significant attention to ethical matters, the dynamics of perception from both sides, and both the different interests present in and the intricacy of the social context, including co-working (e.g., Arnold and Scheutz 2017). Useful surveys for the ethics of robotics include Calo, Froomkin, and Kerr (2016); Royakkers and van Est (2016); Tzafestas (2016); a standard collection of papers is Lin, Abney, and Jenkins (2017).

While AI can be used to manipulate humans into believing and doing things (see section 2.2), it can also be used to drive robots that are problematic if their processes or appearance involve deception, threaten human dignity, or violate the Kantian requirement of “respect for humanity”. Humans very easily attribute mental properties to objects, and empathise with them, especially when the outer appearance of these objects is similar to that of living beings. This can be used to deceive humans (or animals) into attributing more intellectual or even emotional significance to robots or AI systems than they deserve. Some parts of humanoid robotics are problematic in this regard (e.g., Hiroshi Ishiguro’s remote-controlled Geminoids), and there are cases that have been clearly deceptive for public-relations purposes (e.g., on the abilities of Hanson Robotics’ “Sophia”). Of course, some fairly basic constraints of business ethics and law apply to robots, too: product safety and liability, or non-deception in advertisement. It appears that these existing constraints take care of many concerns that are raised. There are cases, however, where human-human interaction has aspects that appear specifically human in ways that can perhaps not be replaced by robots: care, love, and sex.

2.5.1 Example (a) Care Robots

The use of robots in health care for humans is currently at the level of concept studies in real environments, but it may become a usable technology in a few years, and has raised a number of concerns for a dystopian future of de-humanised care (A. Sharkey and N. Sharkey 2011; Robert Sparrow 2016). Current systems include robots that support human carers/caregivers (e.g., in lifting patients, or transporting material), robots that enable patients to do certain things by themselves (e.g., eat with a robotic arm), but also robots that are given to patients as company and comfort (e.g., the “Paro” robot seal). For an overview, see van Wynsberghe (2016); Nørskov (2017); Fosch-Villaronga and Albo-Canals (2019); for a survey of users, see Draper et al. (2014).

One reason why the issue of care has come to the fore is that people have argued that we will need robots in ageing societies. This argument makes problematic assumptions, namely that with longer lifespan people will need more care, and that it will not be possible to attract more humans to caring professions. It may also show a bias about age (Jecker forthcoming). Most importantly, it ignores the nature of automation, which is not simply about replacing humans, but about allowing humans to work more efficiently. It is not very clear that there really is an issue here since the discussion mostly focuses on the fear of robots de-humanising care, but the actual and foreseeable robots in care are assistive robots for classic automation of technical tasks. They are thus “care robots” only in a behavioural sense of performing tasks in care environments, not in the sense that a human “cares” for the patients. It appears that the success of “being cared for” relies on this intentional sense of “care”, which foreseeable robots cannot provide. If anything, the risk of robots in care is the absence of such intentional care, because fewer human carers may be needed. Interestingly, caring for something, even a virtual agent, can be good for the carer themselves (Lee et al. 2019). A system that pretends to care would be deceptive and thus problematic, unless the deception is countered by a sufficiently large utility gain (Coeckelbergh 2016). Some robots that pretend to “care” on a basic level are available (Paro seal) and others are in the making. Perhaps feeling cared for by a machine, to some extent, is progress for some patients.

2.5.2 Example (b) Sex Robots

It has been argued by several tech optimists that humans will likely be interested in sex and companionship with robots and be comfortable with the idea (Levy 2007). Given the variation of human sexual preferences, including sex toys and sex dolls, this seems very likely: The question is whether such devices should be manufactured and promoted, and whether there should be limits in this touchy area. The topic seems to have moved into the mainstream of “robot philosophy” in recent times (Sullins 2012; Danaher and McArthur 2017; N. Sharkey et al. 2017 [OIR]; Bendel 2018; Devlin 2018).

Humans have long had deep emotional attachments to objects, so perhaps companionship or even love with a predictable android is attractive, especially to people who struggle with actual humans, and already prefer dogs, cats, birds, a computer or a tamagotchi. Danaher (2019b) argues, against Nyholm and Frank (2017), that these can be true friendships, and are thus a valuable goal. It certainly looks like such friendship might increase overall utility, even if lacking in depth. In these discussions there is an issue of deception, since a robot cannot (at present) mean what it says, or have feelings for a human. It is well known that humans are prone to attribute feelings and thoughts to entities that behave as if they had sentience, even to clearly inanimate objects that show no behaviour at all. Also, paying for deception seems to be an elementary part of the traditional sex industry.

Finally, there are concerns that have often accompanied matters of sex, namely consent (Frank and Nyholm 2017), aesthetic concerns, and the worry that humans may be “corrupted” by certain experiences. Old-fashioned though this may seem, human behaviour is influenced by experience, and it is likely that pornography or sex robots support the perception of other humans as mere objects of desire, or even recipients of abuse, and thus ruin a deeper sexual and erotic experience. In this vein, the “Campaign Against Sex Robots” argues that these devices are a continuation of slavery and prostitution (Richardson 2016).