How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to note them as you go to minimize the chances that research bias will affect your results.

For example, in a hypothesis that more exposure to the sun leads to higher levels of happiness, the independent variable is exposure to the sun (the assumed cause) and the dependent variable is the level of happiness (the assumed effect).

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

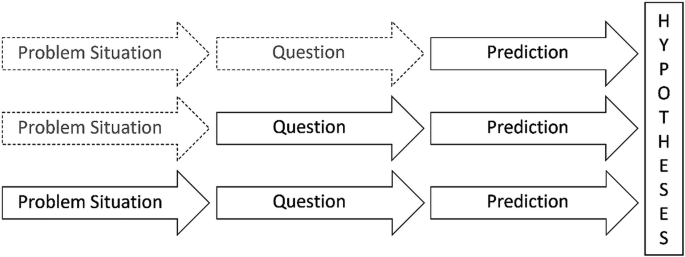

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.
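One way such a pair of hypotheses could be tested is with a permutation test, sketched below. The data are invented for illustration, and the function names `pearson_r` and `permutation_p_value` are hypothetical helpers, not part of any library. The sketch shuffles the exam scores many times to see how often a correlation at least as large as the observed one arises when there is no real association.

```python
import random
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def permutation_p_value(x, y, n_permutations=5000, seed=0):
    """One-sided p-value for H1: x and y are positively associated."""
    rng = random.Random(seed)
    observed = pearson_r(x, y)
    shuffled = list(y)
    at_least_as_large = 0
    for _ in range(n_permutations):
        # Shuffling y breaks any real link to x, which mimics H0.
        rng.shuffle(shuffled)
        if pearson_r(x, shuffled) >= observed:
            at_least_as_large += 1
    # Add 1 to both counts so the estimate is never exactly zero.
    return (at_least_as_large + 1) / (n_permutations + 1)

# Hypothetical data: lectures attended and final exam score for ten students.
lectures = [2, 4, 5, 7, 8, 10, 11, 12, 14, 15]
scores = [48, 55, 51, 60, 58, 67, 70, 66, 75, 80]

p_value = permutation_p_value(lectures, scores)
print(f"r = {pearson_r(lectures, scores):.2f}, one-sided p = {p_value:.4f}")
```

A small p-value here would count as evidence against H0; it would not by itself show that attending lectures causes higher scores.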

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved April 1, 2024, from https://www.scribbr.com/methodology/hypothesis/

A Beginner’s Guide to Hypothesis Testing in Business

- 30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.

What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

Data-driven decision-making carries a risk of being misled. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. If major strategic decisions are made based on flawed insights, the result can be wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.

Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the prediction you want to find evidence for is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.

2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which indicates how compatible your results are with the null hypothesis.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis, and the greater the statistical significance of your results. The p-value is not the probability that either hypothesis is true.
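The idea of a p-value as a tail probability under the null hypothesis can be sketched with a small simulation. The sketch assumes, purely for illustration, that monthly revenue under the null hypothesis is normally distributed around $12 million with a standard deviation of $0.5 million, and that a pilot month brought in $12.9 million. The standard deviation and the pilot result are invented figures, and `simulated_p_value` is a hypothetical helper.

```python
import random

def simulated_p_value(observed, null_mean, null_sd, n_simulations=100_000, seed=42):
    """Estimate P(result >= observed) when the null hypothesis is true."""
    rng = random.Random(seed)
    at_least_as_extreme = sum(
        rng.gauss(null_mean, null_sd) >= observed for _ in range(n_simulations)
    )
    return at_least_as_extreme / n_simulations

# Hypothetical figures in millions of dollars (assumptions for illustration).
p_value = simulated_p_value(observed=12.9, null_mean=12.0, null_sd=0.5)
print(f"Estimated p-value: {p_value:.3f}")
```

The estimate is simply the share of simulated "null" months that did at least as well as the observed one, which mirrors the definition above.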

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, also called one-tailed and two-tailed tests.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.
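The practical difference between the two can be seen by computing both p-values for the same standardized test statistic. The sketch below assumes the statistic follows a standard normal distribution under the null hypothesis (a z-test); the value z = 1.8 is an arbitrary illustration, and `z_test_p_values` is a hypothetical helper.

```python
from statistics import NormalDist

def z_test_p_values(z):
    """One-sided and two-sided p-values for a standard normal test statistic."""
    std_normal = NormalDist()
    upper = 1 - std_normal.cdf(z)   # Ha: the true value is larger
    lower = std_normal.cdf(z)       # Ha: the true value is smaller
    two_sided = 2 * min(upper, lower)  # Ha: the true value differs either way
    return {"upper": upper, "lower": lower, "two_sided": two_sided}

p = z_test_p_values(1.8)
alpha = 0.05
print(f"one-sided (upper): {p['upper']:.4f}, reject H0: {p['upper'] < alpha}")
print(f"two-sided:         {p['two_sided']:.4f}, reject H0: {p['two_sided'] < alpha}")
```

With these numbers the one-sided test rejects the null hypothesis at the 5% level while the two-sided test does not, which is why the direction should be chosen before looking at the data.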

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.

Guide: Hypothesis Testing

Daniel Croft

Daniel Croft is an experienced continuous improvement manager with a Lean Six Sigma Black Belt and a Bachelor's degree in Business Management. With more than ten years of experience applying his skills across various industries, Daniel specializes in optimizing processes and improving efficiency. His approach combines practical experience with a deep understanding of business fundamentals to drive meaningful change.

- Last Updated: September 8, 2023

- Learn Lean Sigma

In the world of data-driven decision-making, Hypothesis Testing stands as a cornerstone methodology. It serves as the statistical backbone for a multitude of sectors, from manufacturing and logistics to healthcare and finance. But what exactly is Hypothesis Testing, and why is it so indispensable? Simply put, it’s a technique that allows you to validate or invalidate claims about a population based on sample data. Whether you’re looking to streamline a manufacturing process, optimize logistics, or improve customer satisfaction, Hypothesis Testing offers a structured approach to reach conclusive, data-supported decisions.

The graphical example of a hypothesis test shows a bell curve divided into regions by critical values. In this graph:

- The curve represents a standard normal distribution, often encountered in hypothesis tests.

- The green-shaded area signifies the “Acceptance Region,” where you would fail to reject the null hypothesis ( H 0).

- The red-shaded areas are the “Rejection Regions,” where you would reject H 0 in favor of the alternative hypothesis ( Ha ).

- The blue dashed lines indicate the “Critical Values” (±1.96), which are the thresholds for rejecting H 0.
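The ±1.96 thresholds in the list above follow from the standard normal distribution at a 5% significance level split across two tails. A minimal sketch using Python's standard library is shown here; the function names are hypothetical.

```python
from statistics import NormalDist

def two_sided_critical_value(alpha):
    """Return z* such that P(|Z| > z*) = alpha for a standard normal Z."""
    return NormalDist().inv_cdf(1 - alpha / 2)

def in_rejection_region(z, alpha=0.05):
    """True if the standardized test statistic falls in either tail."""
    return abs(z) > two_sided_critical_value(alpha)

print(round(two_sided_critical_value(0.05), 2))
print(in_rejection_region(2.3), in_rejection_region(-0.7))
```

Changing `alpha` moves the thresholds: a smaller significance level pushes the critical values further from zero and shrinks the rejection regions.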

This graphical representation serves as a conceptual foundation for understanding the mechanics of hypothesis testing. It visually illustrates what it means to reject or fail to reject the null hypothesis based on a predefined level of significance.

What is Hypothesis Testing?

Hypothesis testing is a structured procedure in statistics used for drawing conclusions about a larger population based on a subset of that population, known as a sample. The method is widely used across different industries and sectors for a variety of purposes. Below, we’ll dissect the key components of hypothesis testing to provide a more in-depth understanding.

The Hypotheses: H0 and Ha

In every hypothesis test, there are two competing statements:

- Null Hypothesis (H0): This is the “status quo” hypothesis that you are trying to test against. It is a statement that asserts that there is no effect or difference. For example, in a manufacturing setting, the null hypothesis might state that a new production process does not improve the average output quality.

- Alternative Hypothesis (Ha or H1): This is what you aim to prove by conducting the hypothesis test. It is the statement that there is an effect or difference. Using the same manufacturing example, the alternative hypothesis might state that the new process does improve the average output quality.

Significance Level (α)

Before conducting the test, you decide on a “Significance Level” (α), typically set at 0.05 or 5%. This level represents the probability of rejecting the null hypothesis when it is actually true. Lower α values make the test more stringent, reducing the chances of a ‘false positive’.

Data Collection

You then proceed to gather data, which is usually a sample from a larger population. The quality of your test heavily relies on how well this sample represents the population. The data can be collected through various means such as surveys, observations, or experiments.

Statistical Test

Depending on the nature of the data and what you’re trying to prove, different statistical tests can be applied (e.g., t-test, chi-square test, ANOVA, etc.). These tests will compute a test statistic (e.g., t, χ², F, etc.) based on your sample data.
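As a concrete instance of a test statistic, the sketch below computes a one-sample t statistic, t = (sample mean − hypothesized mean) / (s / √n), where s is the sample standard deviation. The measurements are invented for illustration and `one_sample_t_statistic` is a hypothetical helper; turning the statistic into a p-value would additionally require the t-distribution, which is not shown.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t_statistic(sample, hypothesized_mean):
    """t = (sample mean - hypothesized mean) / (s / sqrt(n))."""
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)  # stdev uses the n - 1 denominator
    return (mean(sample) - hypothesized_mean) / standard_error

# Hypothetical measurements, invented for illustration.
measurements = [5.1, 4.9, 5.3, 5.2, 5.0]
t_stat = one_sample_t_statistic(measurements, hypothesized_mean=5.0)
print(f"t = {t_stat:.3f} with {len(measurements) - 1} degrees of freedom")
```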

Here are graphical examples of the distributions commonly used in three different types of statistical tests: t-test, Chi-square test, and ANOVA (Analysis of Variance), displayed side by side for comparison.

T-test (t-distribution)

- Graph 1 (Leftmost): This graph represents a t-distribution, often used in t-tests. The t-distribution is similar to the normal distribution but tends to have heavier tails. It is commonly used when the sample size is small or the population variance is unknown.

Chi-square Test

- Graph 2 (Middle): The Chi-square distribution is used in Chi-square tests, often for testing independence or goodness-of-fit. Unlike the t-distribution, the Chi-square distribution is not symmetrical and only takes on positive values.

ANOVA (F-distribution)

- Graph 3 (Rightmost): The F-distribution is used in Analysis of Variance (ANOVA), a statistical test used to analyze the differences between group means. Like the Chi-square distribution, the F-distribution is also not symmetrical and takes only positive values.

These visual representations provide an intuitive understanding of the different statistical tests and their underlying distributions. Knowing which test to use and when is crucial for conducting accurate and meaningful hypothesis tests.

Decision Making

The test statistic is then compared to a critical value determined by the significance level (α) and the sampling distribution of the statistic (which, for tests such as the t-test, depends on the sample size). Equivalently, you can convert the test statistic into a p-value and compare it with α. If the p-value is less than α, you reject the null hypothesis in favor of the alternative hypothesis. Otherwise, you fail to reject the null hypothesis.
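The decision can be reached either through the critical value or through the p-value, and the two routes agree. The sketch below illustrates this for an upper-tailed z-test, assuming the test statistic is standard normal under the null hypothesis; `decide_upper_tailed` is a hypothetical helper.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()

def decide_upper_tailed(z, alpha=0.05):
    """Apply both decision rules to an upper-tailed z-test."""
    critical_value = STD_NORMAL.inv_cdf(1 - alpha)
    p_value = 1 - STD_NORMAL.cdf(z)
    return {
        "critical_value": critical_value,
        "p_value": p_value,
        "reject_by_critical_value": z > critical_value,
        "reject_by_p_value": p_value < alpha,
    }

for z in (0.3, 1.2, 1.7, 2.4):
    result = decide_upper_tailed(z)
    print(z, round(result["p_value"], 4), result["reject_by_p_value"])
```

Reporting the p-value alongside the decision is often more informative, since it shows how far the result was from the threshold.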

Interpretation

Finally, you interpret the results in the context of what you were investigating. Rejecting the null hypothesis might mean implementing a new process or strategy, while failing to reject it might lead to a continuation of current practices.

To sum it up, hypothesis testing is not just a set of formulas but a methodical approach to problem-solving and decision-making based on data. It’s a crucial tool for anyone interested in deriving meaningful insights from data to make informed decisions.

Why is Hypothesis Testing Important?

Hypothesis testing is a cornerstone of statistical and empirical research, serving multiple functions in various fields. Let’s delve into each of the key areas where hypothesis testing holds significant importance:

Data-Driven Decisions

In today’s complex business environment, making decisions based on gut feeling or intuition is not enough; you need data to back up your choices. Hypothesis testing serves as a rigorous methodology for making decisions based on data. By setting up a null hypothesis and an alternative hypothesis, you can use statistical methods to assess whether a data sample provides enough evidence to reject the null hypothesis. This structured approach reduces guesswork and adds empirical weight to your decisions, thereby increasing their credibility and effectiveness.

Risk Management

Hypothesis testing allows you to assign a ‘p-value’ to your findings, which is the probability of observing sample data at least as extreme as yours if the null hypothesis is true. Together with the significance level, it can be used to quantify risk. For instance, rejecting the null hypothesis only when the p-value is below α = 0.05 means accepting a 5% risk of rejecting the null hypothesis when it’s actually true. This is invaluable in scenarios like product launches or changes in operational processes, where understanding the risk involved can be as crucial as the decision itself.

Here’s an example to help you understand the concept better.

The graph above serves as a graphical representation to help explain the concept of a ‘p-value’ and its role in quantifying risk in hypothesis testing. Here’s how to interpret the graph:

Elements of the Graph

- The curve represents a Standard Normal Distribution, which is often used to represent z-scores in hypothesis testing.

- The red-shaded area on the right represents the Rejection Region. It corresponds to a 5% risk (α = 0.05) of rejecting the null hypothesis when it is actually true. This is the area where, if your test statistic falls, you would reject the null hypothesis.

- The green-shaded area represents the Acceptance Region, with a 95% level of confidence. If your test statistic falls in this region, you would fail to reject the null hypothesis.

- The blue dashed line is the Critical Value (approximately 1.645 in this example). If your standardized test statistic (z-value) exceeds this point, you enter the rejection region, and your p-value becomes less than 0.05, leading you to reject the null hypothesis.

Relating to Risk Management

The p-value can be related to risk management. For example, if you’re considering implementing a new manufacturing process, the null hypothesis is that the new process makes no difference. A low p-value (less than α) means that results like yours would be unlikely if that were true. By rejecting the null hypothesis (i.e., going ahead with the new process) only when the p-value is below α, you cap the chance of adopting a change that has no real effect at α.

Quality Control

In sectors like manufacturing, automotive, and logistics, maintaining a high level of quality is not just an option but a necessity. Hypothesis testing is often employed in quality assurance and control processes to test whether a certain process or product conforms to standards. For example, if a car manufacturing line claims its error rate is below 5%, a hypothesis test on a sample of products can show whether the data support this claim. This helps ensure that quality is not compromised and that stakeholders can trust the end product.
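A claim like "the error rate is below 5%" could be checked with an exact binomial test, sketched here under stated assumptions. The null hypothesis is taken to be that the error rate is 5% or higher, and the p-value is the probability of seeing as few defects as observed, or fewer, if the rate were exactly 5%. The inspection counts (4 defective units out of 200) are invented, and `binomial_lower_tail` is a hypothetical helper.

```python
from math import comb

def binomial_lower_tail(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

# Hypothetical inspection results (assumptions for illustration).
n_inspected = 200
n_defective = 4
claimed_limit = 0.05

# H0: error rate >= 5%   versus   Ha: error rate < 5%
p_value = binomial_lower_tail(n_defective, n_inspected, claimed_limit)
alpha = 0.05
print(f"p-value = {p_value:.4f}, reject H0: {p_value < alpha}")
```

A p-value below α here would support the claim that the error rate is under 5%; a larger p-value would mean the sample is too small or too defect-heavy to back it up.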

Resource Optimization

Resource allocation is a significant challenge for any organization. Hypothesis testing can be a valuable tool in determining where resources will be most effectively utilized. For instance, in a manufacturing setting, you might want to test whether a new piece of machinery significantly increases production speed. A hypothesis test could provide the statistical evidence needed to decide whether investing in more of such machinery would be a wise use of resources.

In the realm of research and development, hypothesis testing can be a game-changer. When developing a new product or process, you’ll likely have various theories or hypotheses. Hypothesis testing allows you to systematically test these, filtering out the less likely options and focusing on the most promising ones. This not only speeds up the innovation process but also makes it more cost-effective by reducing the likelihood of investing in ideas that are statistically unlikely to be successful.

In summary, hypothesis testing is a versatile tool that adds rigor, reduces risk, and enhances the decision-making and innovation processes across various sectors and functions.

This graphical representation makes it easier to grasp how the p-value is used to quantify the risk involved in making a decision based on a hypothesis test.

Step-by-Step Guide to Hypothesis Testing

To make this guide practical, especially if you are new to the concept, we will explain each step of the process and follow it with an example of the method applied to a manufacturing line, where you want to test whether a new process reduces the average time it takes to assemble a product.

Step 1: State the Hypotheses

The first and foremost step in hypothesis testing is to clearly define your hypotheses. This sets the stage for your entire test and guides the subsequent steps, from data collection to decision-making. At this stage, you formulate two competing hypotheses:

Null Hypothesis (H0)

The null hypothesis is a statement that there is no effect or no difference, and it serves as the hypothesis that you are trying to test against. It’s the default assumption that any kind of effect or difference you suspect is not real, and is due to chance. Formulating a clear null hypothesis is crucial, as your statistical tests will be aimed at challenging this hypothesis.

In a manufacturing context, if you’re testing whether a new assembly line process has reduced the time it takes to produce an item, your null hypothesis (H0) could be:

H0: “The new process does not reduce the average assembly time.”

Alternative Hypothesis (Ha or H1)

The alternative hypothesis is what you want to prove. It is a statement that there is an effect or difference. This hypothesis is considered only after you find enough evidence against the null hypothesis.

Continuing with the manufacturing example, the alternative hypothesis (Ha) could be:

Ha: “The new process reduces the average assembly time.”

Types of Alternative Hypothesis

Depending on what exactly you are trying to prove, the alternative hypothesis can be:

- Two-Sided: You’re interested in deviations in either direction (greater or smaller).

- One-Sided: You’re interested in deviations only in one direction (either greater or smaller).

Scenario: Reducing Assembly Time in a Car Manufacturing Plant

You are a continuous improvement manager at a car manufacturing plant. One of the assembly lines has been struggling with longer assembly times, affecting the overall production schedule. A new assembly process has been proposed, promising to reduce the assembly time per car. Before rolling it out on the entire line, you decide to conduct a hypothesis test to see if the new process actually makes a difference.

Null Hypothesis (H0)

In this context, the null hypothesis would be the status quo, asserting that the new assembly process doesn’t reduce the assembly time per car. Mathematically, you could state it as:

H0: The average assembly time per car with the new process ≥ the average assembly time per car with the old process.

Or simply:

H0: “The new process does not reduce the average assembly time per car.”

Alternative Hypothesis (Ha or H1)

The alternative hypothesis is what you aim to prove: that the new process is more efficient. Mathematically, it could be stated as:

Ha: The average assembly time per car with the new process < the average assembly time per car with the old process.

Or simply:

Ha: “The new process reduces the average assembly time per car.”

Types of Alternative Hypothesis

In this example, you’re only interested in knowing if the new process reduces the time, making it a One-Sided Alternative Hypothesis.
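The scenario's hypotheses can also be written compactly in symbols. The notation below is introduced here for illustration: μ_new and μ_old stand for the true average assembly time per car under the new and old processes.

```latex
H_0:\ \mu_{\text{new}} \ge \mu_{\text{old}}
\qquad\text{versus}\qquad
H_a:\ \mu_{\text{new}} < \mu_{\text{old}}
```

Because the alternative points in one direction only, the rejection region lies entirely in one tail of the test statistic's distribution.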

Step 2: Determine the Significance Level (α)

Once you’ve clearly stated your null and alternative hypotheses, the next step is to decide on the significance level, often denoted by α. The significance level is a threshold for the p-value: if the p-value falls below it, the null hypothesis will be rejected. It quantifies the level of risk you’re willing to accept when making a decision based on the hypothesis test.

What is a Significance Level?

The significance level, usually expressed as a percentage, represents the probability of rejecting the null hypothesis when it is actually true. Common choices for α are 0.05, 0.01, and 0.10, representing 5%, 1%, and 10% levels of significance, respectively.

- 5% Significance Level (α = 0.05): This is the most commonly used level and implies that you are willing to accept a 5% chance of rejecting the null hypothesis when it is true.

- 1% Significance Level (α = 0.01): This is a more stringent level, used when you want to be more sure of your decision. The risk of falsely rejecting the null hypothesis is reduced to 1%.

- 10% Significance Level (α = 0.10): This is a more lenient level, used when you are willing to take a higher risk. Here, the chance of falsely rejecting the null hypothesis is 10%.

Continuing with the manufacturing example, let’s say you decide to set α = 0.05, meaning you’re willing to take a 5% risk of concluding that the new process is effective when it actually is not.
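What "a 5% risk" means can be checked with a simulation in which the null hypothesis is true by construction. The sketch below repeatedly draws samples from a population with mean 0 and known standard deviation 1, runs an upper-tailed z-test each time, and counts how often the null hypothesis is wrongly rejected. The sample size, number of trials, and the helper name `false_positive_rate` are arbitrary choices for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def false_positive_rate(alpha, n_trials=10_000, sample_size=20, seed=1):
    """Share of tests that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    critical_value = NormalDist().inv_cdf(1 - alpha)
    rejections = 0
    for _ in range(n_trials):
        # H0 holds here: the true mean is 0 and the standard deviation is 1.
        sample = [rng.gauss(0, 1) for _ in range(sample_size)]
        z = fmean(sample) * sqrt(sample_size)  # (mean - 0) / (1 / sqrt(n))
        if z > critical_value:
            rejections += 1
    return rejections / n_trials

for alpha in (0.01, 0.05, 0.10):
    print(f"alpha = {alpha:.2f}: false positive rate = {false_positive_rate(alpha):.3f}")
```

The observed rates should land close to the chosen α in each case, which is the sense in which α controls the risk of a false positive.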

How to Choose the Right Significance Level?

Choosing the right significance level depends on the context and the consequences of making a wrong decision. Here are some factors to consider:

- Criticality of Decision: For highly critical decisions with severe consequences if wrong, a lower α like 0.01 may be appropriate.

- Resource Constraints: If the cost of collecting more data is high, you may choose a higher α to make a decision based on a smaller sample size.

- Industry Standards: Sometimes, the choice of α may be dictated by industry norms or regulatory guidelines.

By the end of Step 2, you should have a well-defined significance level that will guide the rest of your hypothesis testing process. This level serves as the cut-off for determining whether the observed effect or difference in your sample is statistically significant or not.

Continuing the Scenario: Reducing Assembly Time in a Car Manufacturing Plant

After formulating the hypotheses, the next step is to set the significance level ( α ) that will be used to interpret the results of the hypothesis test. This is a critical decision as it quantifies the level of risk you’re willing to accept when making a conclusion based on the test.

Setting the Significance Level

Given that assembly time is a critical factor affecting the production schedule, and ultimately, the company’s bottom line, you decide to be fairly stringent in your test. You opt for a commonly used significance level: α = 0.05.

This means you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true. In practical terms, if you find that the p-value of the test is less than 0.05, you will conclude that the new process significantly reduces assembly time and consider implementing it across the entire line.

Why α = 0.05 ?

- Industry Standard : A 5% significance level is widely accepted in many industries, including manufacturing, for hypothesis testing.

- Risk Management : By setting α = 0.05 , you’re limiting the risk of concluding that the new process is effective when it may not be to just 5%.

- Balanced Approach : This level offers a balance between being too lenient (e.g., α = 0.10) and too stringent (e.g., α = 0.01), making it a reasonable choice for this scenario.

Step 3: Collect and Prepare the Data

After stating your hypotheses and setting the significance level, the next vital step is data collection. The data you collect serves as the basis for your hypothesis test, so it’s essential to gather accurate and relevant data.

Types of Data

Depending on your hypothesis, you’ll need to collect either:

- Quantitative Data : Numerical data that can be measured. Examples include height, weight, and temperature.

- Qualitative Data : Categorical data that represent characteristics. Examples include colors, gender, and material types.

Data Collection Methods

Various methods can be used to collect data, such as:

- Surveys and Questionnaires : Useful for collecting qualitative data and opinions.

- Observation : Collecting data through direct or participant observation.

- Experiments : Especially useful in scientific research where control over variables is possible.

- Existing Data : Utilizing databases, records, or any other data previously collected.

Sample Size

The sample size ( n ) is another crucial factor. A larger sample size generally gives more accurate results, but it’s often constrained by resources like time and money. The choice of sample size might also depend on the statistical test you plan to use.

Continuing with the manufacturing example, suppose you decide to collect data on the assembly time of 30 randomly chosen products, 15 made using the old process and 15 made using the new process. Here, your sample size n =30.

Data Preparation

Once data is collected, it often needs to be cleaned and prepared for analysis. This could involve:

- Removing Outliers : Outliers can skew the results and provide an inaccurate picture.

- Data Transformation : Converting data into a format suitable for statistical analysis.

- Data Coding : Categorizing or labeling data, necessary for qualitative data.

By the end of Step 3, you should have a dataset that is ready for statistical analysis. This dataset should be representative of the population you’re interested in and prepared in a way that makes it suitable for hypothesis testing.

With the hypotheses stated and the significance level set, you’re now ready to collect the data that will serve as the foundation for your hypothesis test. Given that you’re testing a change in a manufacturing process, the data will most likely be quantitative, representing the assembly time of cars produced on the line.

Data Collection Plan

You decide to use a Random Sampling Method for your data collection. For two weeks, assembly times for randomly selected cars will be recorded: one week using the old process and another week using the new process. Your aim is to collect data for 40 cars from each process, giving you a sample size ( n ) of 80 cars in total.

Types of Data

- Quantitative Data : In this case, you’re collecting numerical data representing the assembly time in minutes for each car.

Data Preparation

- Data Cleaning : Once the data is collected, you’ll need to inspect it for any anomalies or outliers that could skew your results. For example, if a significant machine breakdown happened during one of the weeks, you may need to adjust your data or collect more.

- Data Transformation : Given that you’re dealing with time, you may not need to transform your data, but it’s something to consider, depending on the statistical test you plan to use.

- Data Coding : Since you’re dealing with quantitative data in this scenario, coding is likely unnecessary unless you’re planning to categorize assembly times into bins (e.g., ‘fast’, ‘medium’, ‘slow’) for some reason.

Example Data Points:

| Car_ID | Process_Type | Assembly_Time_Minutes |
| --- | --- | --- |
| 1 | Old | 38.53 |
| 2 | Old | 35.80 |
| 3 | Old | 36.96 |
| 4 | Old | 39.48 |
| 5 | Old | 38.74 |
| 6 | Old | 33.05 |
| 7 | Old | 36.90 |
| 8 | Old | 34.70 |
| 9 | Old | 34.79 |
| … | … | … |

The complete dataset would contain 80 rows: 40 for the old process and 40 for the new process.
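
As a quick data-cleaning check, the nine old-process times listed in the example data points can be summarised and screened for outliers. The sketch below assumes the common 1.5 × IQR rule for flagging outliers, which is one convention among several and is not prescribed by the scenario. Only the nine values shown are used, since the rest of the dataset is not listed.

```python
from statistics import mean, stdev, quantiles

# The nine old-process assembly times (minutes) listed in the example data points
old_times = [38.53, 35.80, 36.96, 39.48, 38.74, 33.05, 36.90, 34.70, 34.79]

q1, _, q3 = quantiles(old_times, n=4)   # quartiles (default "exclusive" method)
iqr = q3 - q1
low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Assumption: a value is flagged if it lies outside the 1.5 * IQR fences
outliers = [t for t in old_times if t < low_fence or t > high_fence]

print(f"mean = {mean(old_times):.2f} min, sd = {stdev(old_times):.2f} min")
print(f"fences = ({low_fence:.2f}, {high_fence:.2f}), outliers = {outliers}")
```

A flagged value is a prompt to investigate (for example, a machine breakdown that week), not an automatic reason to delete the observation.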

Step 4: Conduct the Statistical Test

After you have your hypotheses, significance level, and collected data, the next step is to actually perform the statistical test. This step involves calculations that will lead to a test statistic, which you’ll then use to make your decision regarding the null hypothesis.

Choose the Right Test

The first task is to decide which statistical test to use. The choice depends on several factors:

- Type of Data : Quantitative or Qualitative

- Sample Size : Large or Small

- Number of Groups or Categories : One-sample, Two-sample, or Multiple groups

For instance, you might choose a t-test for comparing means of two groups when you have a small sample size. Chi-square tests are often used for categorical data, and ANOVA is used for comparing means across more than two groups.

Calculation of Test Statistic

Once you’ve chosen the appropriate statistical test, the next step is to calculate the test statistic. This involves using the sample data in a specific formula for the chosen test.
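
As an illustration, one common form of the test statistic for comparing two independent means is Welch's t statistic, which does not assume equal variances. The symbols below are introduced here for the example and are not defined elsewhere in this guide:

$$
t = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}
$$

Here $\bar{x}_1$ and $\bar{x}_2$ are the two sample means, $s_1^2$ and $s_2^2$ the sample variances, and $n_1$ and $n_2$ the sample sizes. A value of $t$ far from zero means the difference in means is large relative to the variability you would expect from sampling alone.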

Obtain the p-value

After calculating the test statistic, the next step is to find the p-value associated with it. The p-value represents the probability of observing a test statistic at least as extreme as the one you calculated, assuming the null hypothesis is true.

- A small p-value (< α ) indicates strong evidence against the null hypothesis, so you reject the null hypothesis.

- A large p-value (> α ) indicates weak evidence against the null hypothesis, so you fail to reject the null hypothesis.

Make the Decision

You now compare the p-value to the predetermined significance level ( α ):

- If p < α , you reject the null hypothesis in favor of the alternative hypothesis.

- If p > α , you fail to reject the null hypothesis.

In the manufacturing case, if your calculated p-value is 0.03 and your α is 0.05, you would reject the null hypothesis, concluding that the new process effectively reduces the average assembly time.

By the end of Step 4, you will have either rejected or failed to reject the null hypothesis, providing a statistical basis for your decision-making process.

Now that you have collected and prepared your data, the next step is to conduct the actual statistical test to evaluate the null and alternative hypotheses. In this case, you’ll be comparing the mean assembly times between cars produced using the old and new processes to determine if the new process is statistically significantly faster.

Choosing the Right Test

Given that you have two sets of independent samples (old process and new process), a Two-sample t-test for Equality of Means seems appropriate for comparing the average assembly times.

Preparing Data for Minitab

First, prepare your data in an Excel sheet or CSV file with one column for the assembly times using the old process and another column for the assembly times using the new process. Import this file into Minitab.

Steps to Perform the Two-sample t-test in Minitab

- Open Minitab : Launch the Minitab software on your computer.

- Import Data : Navigate to File > Open and import your data file.

- Navigate to the t-test Menu : Go to Stat > Basic Statistics > 2-Sample t... .

- Select Columns : In the dialog box, specify the columns corresponding to the old and new process assembly times under “Sample 1” and “Sample 2.”

- Options : Click on Options and make sure that you set the confidence level to 95% (which corresponds to α = 0.05 ).

- Run the Test : Click OK to run the test.

In this example output, the p-value is 0.0012, which is less than the significance level α = 0.05 . Hence, you would reject the null hypothesis. The t-statistic is -3.45, indicating that the mean of the new process is statistically significantly less than the mean of the old process, which aligns with your alternative hypothesis. A box plot of the two samples makes the same difference visible, although the formal conclusion rests on the test rather than on the plot.
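
If Minitab is not available, a comparable two-sample t-test can be sketched in Python with `scipy.stats.ttest_ind`. The data below are simulated stand-ins, because the full 80-row dataset is not listed. The means, spread, and random seed are hypothetical, so the resulting numbers will not match the 0.0012 and -3.45 reported above.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(0)

# Hypothetical stand-in data: 40 assembly times (minutes) per process
old_times = rng.normal(loc=36.5, scale=2.0, size=40)
new_times = rng.normal(loc=34.0, scale=2.0, size=40)

# Welch's two-sample t-test; alternative hypothesis: mean(new) < mean(old)
result = stats.ttest_ind(new_times, old_times, equal_var=False, alternative="less")

alpha = 0.05
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.4f}")
print("Reject H0" if result.pvalue < alpha else "Fail to reject H0")
```

Passing `equal_var=False` avoids assuming the two processes have the same variance, and `alternative="less"` matches a one-sided hypothesis that the new process is faster.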

Why do a Hypothesis test?

You might ask why you should run a hypothesis test at all instead of simply comparing the averages. It is a good question. While looking at average times might give you a general idea of which process is faster, hypothesis testing provides several advantages that a simple comparison of averages doesn’t offer:

Statistical Significance

Account for Random Variability : Hypothesis testing considers not just the averages, but also the variability within each group. This allows you to make more robust conclusions that account for random chance.

Quantify the Evidence : With hypothesis testing, you obtain a p-value that quantifies the strength of the evidence against the null hypothesis. A simple comparison of averages doesn’t provide this level of detail.

Control Type I Error : Hypothesis testing allows you to control the probability of making a Type I error (i.e., rejecting a true null hypothesis). This is particularly useful in settings where the consequences of such an error could be costly or risky.

Quantify Risk : Hypothesis testing provides a structured way to make decisions based on a predefined level of risk (the significance level, α ).

Decision-making Confidence

Objective Decision Making : The formal structure of hypothesis testing provides an objective framework for decision-making. This is especially useful in a business setting where decisions often have to be justified to stakeholders.

Replicability : A formal test follows a fixed, documented procedure. Another team could perform the same test on comparable data and expect to get similar results, which is not necessarily the case when comparing only averages.

Additional Insights

Understanding of Variability : Hypothesis testing often involves looking at measures of spread and distribution, not just the mean. This can offer additional insights into the processes you’re comparing.

Basis for Further Analysis : Once you’ve performed a hypothesis test, you can often follow it up with other analyses (like confidence intervals for the difference in means, or effect size calculations) that offer more detailed information.
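
One such follow-up, an effect size, can be sketched as follows. The example computes Cohen's d with a pooled standard deviation. The assembly times are hypothetical values made up for the illustration, and `cohens_d` is a helper introduced here.

```python
from statistics import mean, variance

def cohens_d(a, b):
    """Standardised mean difference using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / pooled_var ** 0.5

# Hypothetical assembly times in minutes
new_times = [34.1, 33.8, 35.0, 34.6, 33.5]
old_times = [36.2, 35.9, 37.1, 36.8, 35.5]

d = cohens_d(new_times, old_times)
print(f"Cohen's d = {d:.2f}")  # negative: the new process is faster on average
```

Unlike a p-value, the effect size does not shrink or grow with the sample size, so it helps judge whether a statistically significant difference is also large enough to matter in practice.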

In summary, while comparing averages is quicker and simpler, hypothesis testing provides a more reliable, nuanced, and objective basis for making data-driven decisions.

Step 5: Interpret the Results and Make Conclusions

Having conducted the statistical test and obtained the p-value, you’re now at a stage where you can interpret these results in the context of the problem you’re investigating. This step is crucial for transforming the statistical findings into actionable insights.

Interpret the p-value

The p-value you obtained tells you the significance of your results:

- Low p-value ( p < α ) : Indicates that the results are statistically significant: a result this extreme would be unlikely if only random chance were at work. In this case, you generally reject the null hypothesis.

- High p-value ( p > α ) : Indicates that the results are not statistically significant, and the observed effects could well be due to random chance. Here, you generally fail to reject the null hypothesis.

Relate to Real-world Context

You should then relate these statistical conclusions to the real-world context of your problem. This is where your expertise in your specific field comes into play.

In our manufacturing example, if you’ve found a statistically significant reduction in assembly time with a p-value of 0.03 (which is less than the α level of 0.05), you can confidently conclude that the new manufacturing process is more efficient. You might then consider implementing this new process across the entire assembly line.

Make Recommendations

Based on your conclusions, you can make recommendations for action or further study. For example:

- Implement Changes : If the test results are significant, consider making the changes on a larger scale.

- Further Research : If the test results are not clear or not significant, you may recommend further studies or data collection.

- Review Methodology : If you find that the results are not as expected, it might be useful to review the methodology and see if the test was conducted under the right conditions and with the right test parameters.

Document the Findings

Lastly, it’s essential to document all the steps taken, the methodology used, the data collected, and the conclusions drawn. This documentation is not only useful for any further studies but also for auditing purposes or for stakeholders who may need to understand the process and the findings.

By the end of Step 5, you’ll have turned the raw statistical findings into meaningful conclusions and actionable insights. This is the final step in the hypothesis testing process, making it a complete, robust method for informed decision-making.

You’ve successfully conducted the hypothesis test and found strong evidence to reject the null hypothesis in favor of the alternative: The new assembly process is statistically significantly faster than the old one. It’s now time to interpret these results in the context of your business operations and make actionable recommendations.

Interpretation of Results

- Statistical Significance : The p-value of 0.0012 is well below the significance level of α = 0.05 , indicating that the results are statistically significant.

- Practical Significance : The boxplot and t-statistic (-3.45) suggest not just statistical, but also practical significance. The new process appears to be both consistently and substantially faster.

- Risk Assessment : The low p-value allows you to reject the null hypothesis with a high degree of confidence, meaning the risk of making a Type I error is minimal.

Business Implications

- Increased Productivity : Implementing the new process could lead to an increase in the number of cars produced, thereby enhancing productivity.

- Cost Savings : Faster assembly time likely translates to lower labor costs.

- Quality Control : Consider monitoring the quality of cars produced under the new process closely to ensure that the speedier assembly does not compromise quality.

Recommendations

- Implement New Process : Given the statistical and practical significance of the findings, recommend implementing the new process across the entire assembly line.

- Monitor and Adjust : Implement a control phase where the new process is monitored for both speed and quality. This could involve additional hypothesis tests or control charts.

- Communicate Findings : Share the results and recommendations with stakeholders through a formal presentation or report, emphasizing both the statistical rigor and the potential business benefits.

- Review Resource Allocation : Given the likely increase in productivity, assess if resources like labor and parts need to be reallocated to optimize the workflow further.

By following this step-by-step guide, you’ve journeyed through the rigorous yet enlightening process of hypothesis testing. From stating clear hypotheses to interpreting the results, each step has paved the way for making informed, data-driven decisions that can significantly impact your projects, business, or research.

Hypothesis testing is more than just a set of formulas or calculations; it’s a holistic approach to problem-solving that incorporates context, statistics, and strategic decision-making. While the process may seem daunting at first, each step serves a crucial role in ensuring that your conclusions are both statistically sound and practically relevant.


Q: What is hypothesis testing in the context of Lean Six Sigma?

A: Hypothesis testing is a statistical method used in Lean Six Sigma to determine whether there is enough evidence in a sample of data to infer that a certain condition holds true for the entire population. In the Lean Six Sigma process, it’s commonly used to validate the effectiveness of process improvements by comparing performance metrics before and after changes are implemented. A null hypothesis ( H 0 ) usually represents no change or effect, while the alternative hypothesis ( H 1 ) indicates a significant change or effect.

Q: How do I determine which statistical test to use for my hypothesis?

A: The choice of statistical test for hypothesis testing depends on several factors, including the type of data (nominal, ordinal, interval, or ratio), the sample size, the number of samples (one sample, two samples, paired), and whether the data distribution is normal. For example, a t-test is used for comparing the means of two groups when the data is normally distributed, while a Chi-square test is suitable for categorical data to test the relationship between two variables. It’s important to choose the right test to ensure the validity of your hypothesis testing results.

Q: What is a p-value, and how does it relate to hypothesis testing?

A: A p-value is a probability value that helps you determine the significance of your results in hypothesis testing. It represents the likelihood of obtaining a result at least as extreme as the one observed during the test, assuming that the null hypothesis is true. In hypothesis testing, if the p-value is lower than the predetermined significance level (commonly α = 0.05 ), you reject the null hypothesis, suggesting that the observed effect is statistically significant. If the p-value is higher, you fail to reject the null hypothesis, indicating that there is not enough evidence to support the alternative hypothesis.

Q: Can you explain Type I and Type II errors in hypothesis testing?

A: Type I and Type II errors are potential errors that can occur in hypothesis testing. A Type I error, also known as a “false positive,” occurs when the null hypothesis is true, but it is incorrectly rejected. It is equivalent to a false alarm. On the other hand, a Type II error, or a “false negative,” happens when the null hypothesis is false, but the test fails to reject it. This means a real effect or difference was missed. The risk of a Type I error is represented by the significance level ( α ), while the risk of a Type II error is denoted by β . Minimizing these errors is crucial for the reliability of hypothesis tests in continuous improvement projects.

Daniel Croft is a seasoned continuous improvement manager with a Black Belt in Lean Six Sigma. With over 10 years of real-world application experience across diverse sectors, Daniel has a passion for optimizing processes and fostering a culture of efficiency. He's not just a practitioner but also an avid learner, constantly seeking to expand his knowledge. Outside of his professional life, Daniel has a keen interest in investing, statistics and knowledge-sharing, which led him to create the website learnleansigma.com, a platform dedicated to Lean Six Sigma and process improvement insights.


Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this Blog post we will learn:

- What is Hypothesis Testing?

- Steps in Hypothesis Testing: 2.1. Set up Hypotheses: Null and Alternative; 2.2. Choose a Significance Level (α); 2.3. Calculate a test statistic and P-Value; 2.4. Make a Decision

- Example : Testing a new drug.

- Example in python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a dice and asked if it’s biased. By rolling it a few times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses : Begin with a null hypothesis (H0) and an alternative hypothesis (H1).

- Choose a Significance Level (α) : Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of accusing an innocent person.

- Calculate Test statistic and P-Value : Gather evidence (data) and calculate a test statistic.

- p-value : This is the probability of observing data at least as extreme as yours, given that the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data is inconsistent with the null hypothesis.

- Decision Rule : If the p-value is less than or equal to α, you reject the null hypothesis in favor of the alternative.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0 : “The new drug is no better than the existing one,” H1 : “The new drug is superior .”

2.2. Choose a Significance Level (α)

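You collect and analyze data to test the hypotheses H0 and H1. Based on your analysis, you decide whether to reject the null hypothesis in favor of the alternative or fail to reject it. Failing to reject is not the same as accepting the null hypothesis: it only means the data did not provide enough evidence against it.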

The significance level, often denoted by $α$, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive) :

- Symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis . In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error is set by the significance level of a test. Commonly, tests are conducted at the 0.05 significance level , which means there’s a 5% chance of making a Type I error when the null hypothesis is actually true.

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example : If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.

Type II Error (False Negative) :

- Symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis . This means you find no evidence of an effect or difference when, in reality, there is one.

- The probability of making a Type II error is denoted by β. The power of a test (1 – β) represents the probability of correctly rejecting a false null hypothesis.

Example : If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors :

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.
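
The trade-off can be made concrete with a small power calculation. The sketch below assumes a one-sided, one-sample z-test with known standard deviation. The true shift `delta`, the spread `sigma`, and the sample sizes are hypothetical values chosen for illustration.

```python
from statistics import NormalDist

def power_one_sided_z(alpha, n, delta, sigma):
    """Power (1 - beta) of a one-sided one-sample z-test for a true shift delta."""
    z_crit = NormalDist().inv_cdf(1 - alpha)
    return 1 - NormalDist().cdf(z_crit - delta * n ** 0.5 / sigma)

for alpha, n in [(0.05, 20), (0.01, 20), (0.01, 40)]:
    power = power_one_sided_z(alpha, n, delta=0.5, sigma=1.0)
    print(f"alpha={alpha:.2f}, n={n}: power={power:.2f}, beta={1 - power:.2f}")
```

Lowering α from 0.05 to 0.01 at the same sample size raises β, and doubling the sample size brings the power back up. That is the compensation by collecting more data described above.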

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.

2.3. Calculate a test statistic and P-Value

Test statistic : A test statistic is a single number that helps us understand how far our sample data is from what we’d expect under a null hypothesis (a basic assumption we’re trying to test against). Generally, the larger the test statistic in absolute value, the more evidence we have against our null hypothesis. It helps us decide whether the differences we observe in our data are due to random chance or if there’s an actual effect.

P-value : The P-value tells us how likely we would get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.
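
How a test statistic turns into a P-value can be sketched for the simplest case, a z statistic that follows a standard normal distribution under the null hypothesis. This is an assumption made for illustration, since other tests use other reference distributions, and `two_sided_p_from_z` is a helper introduced here.

```python
from statistics import NormalDist

def two_sided_p_from_z(z):
    """Two-sided p-value for a z statistic under a standard normal null."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

for z in (0.5, 1.96, 3.0):
    print(f"z = {z:>4}: p = {two_sided_p_from_z(z):.4f}")
```

The further the statistic is from zero, the smaller the P-value. A z of about 1.96 corresponds to the familiar two-sided threshold of 0.05.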

2.4. Make a Decision

Relationship between $α$ and P-Value

When conducting a hypothesis test:

We first choose the significance level $α$ before looking at the data.

We then calculate the p-value from our sample data and the test statistic.

Finally, we compare the p-value to our chosen $α$:

- If $p\text{-value} \le \alpha$: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If $p\text{-value} > \alpha$: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.

3. Example : Testing a new drug.

Imagine we are investigating whether a new drug treats headaches faster than a placebo.

Setting Up the Experiment : You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half are given a sugar pill, which doesn’t contain any medication (the ‘Placebo Group’).

- Set up Hypotheses : Before starting, you make a prediction:

- Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

- Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups is significant and not just by chance.

Calculate Test statistic and P-Value : After the experiment, you analyze the data. The “test statistic” is a number that helps you understand the difference between the two groups in terms of standard units.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.
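
The effect of spread on the test statistic can be sketched with two made-up datasets that share the same 1-hour difference in means. The healing times are hypothetical, and `welch_t` is a helper introduced here that computes a two-sample t statistic without assuming equal variances.

```python
from statistics import mean, variance

def welch_t(a, b):
    """Two-sample t statistic without assuming equal variances."""
    se = (variance(a) / len(a) + variance(b) / len(b)) ** 0.5
    return (mean(a) - mean(b)) / se

# Hypothetical healing times in hours; both pairs differ by 1 hour on average
drug_tight, placebo_tight = [1.9, 2.0, 2.1, 2.0, 2.0], [2.9, 3.0, 3.1, 3.0, 3.0]
drug_wide, placebo_wide = [0.5, 3.5, 2.0, 1.0, 3.0], [1.5, 4.5, 3.0, 2.0, 4.0]

t_tight = welch_t(drug_tight, placebo_tight)
t_wide = welch_t(drug_wide, placebo_wide)
print(f"small spread: t = {t_tight:.2f}")
print(f"large spread: t = {t_wide:.2f}")
```

With little variation inside each group, the statistic is far from zero. With large variation, the same 1-hour gap produces a statistic much closer to zero.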

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- P-value of 0.5 means there’s a 50% chance you’d see this difference just by chance. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than 0.05 (our $α$): the results are “statistically significant,” and you would reject the null hypothesis , concluding that the new drug has an effect.

- If the P-value is greater than 0.05 (our $α$): the results are not statistically significant, and you would not reject the null hypothesis , remaining unsure if the drug has a genuine effect.

4. Example in python

For simplicity, let’s say we’re using a t-test (common for comparing means). Let’s dive into Python:
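
A minimal sketch of such a t-test, assuming simulated healing times for the two groups. The sample values, spread, random seed, and variable names are hypothetical and do not come from a real trial. It uses `scipy.stats.ttest_ind`, which performs an independent two-sample t-test:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)

# Simulated healing times in hours (hypothetical data, 50 people per group)
drug_group = rng.normal(loc=2.0, scale=0.5, size=50)
placebo_group = rng.normal(loc=3.0, scale=0.5, size=50)

# Independent two-sample t-test (two-sided by default)
t_stat, p_value = stats.ttest_ind(drug_group, placebo_group)

alpha = 0.05
print(f"t = {t_stat:.2f}, p-value = {p_value:.4f}")
if p_value <= alpha:
    print("Statistically significant: reject the null hypothesis.")
else:
    print("Not statistically significant: fail to reject the null hypothesis.")
```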

Making a Decision : If the p-value is below 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.


A Comprehensive Guide to Hypotheses Tests in Statistics

You will learn the essentials of hypothesis tests, from fundamental concepts to practical applications in statistics.

- Null and alternative hypotheses guide hypothesis tests.

- Significance level and p-value aid decision-making.

- Parametric tests assume specific probability distributions.

- Non-parametric tests offer flexible assumptions.

- Confidence intervals provide estimate precision.

Introduction to Hypotheses Tests

Hypothesis testing is a statistical tool used to make decisions based on data.

It involves making an assumption about a population parameter and testing its validity using a sample drawn from that population.

Hypothesis tests help us draw conclusions and make informed decisions in various fields like business, research, and science.

Null and Alternative Hypotheses

The null hypothesis (H0) is an initial claim about a population parameter, typically representing no effect or no difference.

The alternative hypothesis (H1) opposes the null hypothesis, suggesting an effect or difference.

Hypothesis tests aim to determine whether there is enough evidence to reject the null hypothesis in favor of the alternative hypothesis.

Significance Levels and P-values

The significance level (α), often set at 0.05 or 5%, serves as a threshold for determining if we should reject the null hypothesis.

A p-value, calculated during hypothesis testing, is the probability of observing a test statistic at least as extreme as the one obtained, assuming the null hypothesis is true.

If the p-value is less than the significance level, we reject the null hypothesis and conclude that the data provide evidence in favor of the alternative hypothesis. A small p-value is not the probability that the null hypothesis is true.
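As a rough illustration of the decision rule, the sketch below computes a two-sided p-value for a test statistic that is assumed to follow a standard normal distribution under the null hypothesis. The function names and the observed value 2.5 are hypothetical and chosen only for the example.

```python
from statistics import NormalDist


def two_sided_p_value(z: float) -> float:
    """Probability, under H0, of a standard-normal statistic at least as extreme as |z|."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def decide(p_value: float, alpha: float = 0.05) -> str:
    """Reject H0 only when the p-value falls below the significance level."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


z_observed = 2.5  # hypothetical test statistic, not taken from the article
p = two_sided_p_value(z_observed)
print(f"p = {p:.4f} -> {decide(p)}")
```

With these assumed inputs the p-value is roughly 0.012, so the null hypothesis would be rejected at α = 0.05 but not at α = 0.01.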

Parametric and Non-Parametric Tests

Parametric tests assume the data follows a specific probability distribution, usually the normal distribution. Examples include the Student’s t-test.

Non-parametric tests do not require such assumptions and are helpful when dealing with data that do not meet the assumptions of parametric tests. Examples include the Mann-Whitney U test.
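To see why a test such as Mann-Whitney needs no distributional assumption, it may help to compute its U statistic by hand. The sketch below is a simplified illustration (statistic only, no p-value); the function name and the sample values are made up for the example.

```python
import math


def mann_whitney_u(x, y):
    """U statistic for x: number of (x_i, y_j) pairs with x_i > y_j; ties count 0.5."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


# Hypothetical samples, for illustration only
x = [1.2, 3.4, 2.2]
y = [0.5, 2.9, 4.1, 1.0]

u_raw = mann_whitney_u(x, y)
# Any strictly increasing transformation keeps the ordering, so U is unchanged
u_transformed = mann_whitney_u([math.exp(v) for v in x], [math.exp(v) for v in y])
print(u_raw, u_transformed)
```

Because U depends only on how the observations are ordered, not on their actual values, the statistic is the same before and after the transformation. A t-statistic computed on the same data would change.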


Commonly Used Hypotheses Tests

Independent samples t-test: This analysis compares the means of two independent groups.

Paired samples t-test: Compares the means of two related groups (e.g., before and after treatment).

Chi-squared test: Determines if there is a significant association, in a contingency table, between two categorical variables.

Analysis of Variance (ANOVA): Compares the means of three or more independent groups to determine whether significant differences exist.

Pearson’s Correlation Coefficient (Pearson’s r): Quantifies the strength and direction of a linear association between two continuous variables.

Simple Linear Regression: Evaluates whether a significant linear relationship exists between a predictor variable (X) and a continuous outcome variable (Y).

Logistic Regression: Determines the relationship between one or more predictor variables (continuous or categorical) and a binary outcome variable (e.g., success or failure).

Levene’s Test: Tests the equality of variances between two or more groups, often used as an assumption check for ANOVA.

Shapiro-Wilk Test: Assesses the null hypothesis that a data sample is drawn from a population with a normal distribution.
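As one worked case from the list above, here is a minimal sketch of the chi-squared test of association for a 2×2 contingency table, using only the standard library. It assumes no continuity correction and relies on the fact that, with one degree of freedom, the upper-tail probability equals erfc(√(x/2)). The function name and the counts are hypothetical.

```python
import math


def chi2_test_2x2(table):
    """Chi-squared statistic and p-value (1 degree of freedom, no continuity correction)."""
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    n = sum(row_totals)

    stat = 0.0
    for i in range(2):
        for j in range(2):
            # Expected count if the two variables were independent
            expected = row_totals[i] * col_totals[j] / n
            stat += (table[i][j] - expected) ** 2 / expected

    # Survival function of the chi-squared distribution with 1 df
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Hypothetical counts: rows are groups, columns are outcomes
observed = [[20, 10], [10, 20]]
stat, p_value = chi2_test_2x2(observed)
print(f"chi2 = {stat:.3f}, p = {p_value:.4f}")
```

In practice a statistics library would normally be used for this, and larger tables need the general chi-squared distribution rather than this one-degree-of-freedom shortcut.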

Interpreting the Results of Hypotheses Tests

To interpret the hypothesis test results, compare the p-value to the chosen significance level.

If the p-value falls below the significance level, reject the null hypothesis and infer that a statistically significant effect or difference exists.

Otherwise, fail to reject the null hypothesis, meaning there is insufficient evidence to support the alternative hypothesis.

Other Relevant Information

In addition to understanding the basics of hypothesis tests, it’s crucial to consider other relevant information when interpreting the results.

For example, factors such as effect size, statistical power, and confidence intervals can provide valuable insights and help you make more informed decisions.

Effect size

The effect size represents a quantitative measurement of the strength or magnitude of the observed relationship or effect between variables. It aids in evaluating the practical significance of the results. A statistically significant outcome may not necessarily imply practical relevance. At the same time, a substantial effect size can suggest meaningful findings, even when statistical significance appears marginal.
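One widely used effect-size measure for comparing two group means is Cohen's d, the difference in means divided by the pooled standard deviation. The article does not name a specific measure, so treat the sketch below as one possible choice; the data are invented.

```python
import math
from statistics import mean, variance


def cohens_d(a, b):
    """Standardized mean difference using the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


# Hypothetical measurements for two groups
treated = [2, 4, 6]
control = [1, 3, 5]
d = cohens_d(treated, control)
print(f"Cohen's d = {d:.2f}")  # 0.50 for these values
```

Unlike a p-value, d does not shrink or grow with sample size alone, which is why it speaks to practical rather than statistical significance.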

Statistical power

The power of a test is the probability of correctly rejecting the null hypothesis when it is false. In other words, it’s the likelihood that the test will detect an effect when one exists. Factors affecting the power of a test include the sample size, effect size, and significance level. Higher power reduces the likelihood of making a Type II error, that is, failing to reject the null hypothesis when it is false.
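The dependence of power on sample size, effect size, and significance level can be made concrete for a simple case. The sketch below assumes an upper-tailed one-sample z-test with known standard deviation, where the effect size is the true mean shift in standard-deviation units. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power of an upper-tailed one-sample z-test with known standard deviation."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1.0 - alpha)  # rejection threshold under H0
    # Under the alternative, the z statistic is centred at effect_size * sqrt(n)
    return 1.0 - std_normal.cdf(z_crit - effect_size * math.sqrt(n))


for n in (10, 30, 50):
    print(n, round(z_test_power(0.5, n), 3))
```

For a fixed effect size, power rises as n grows. With an effect size of zero, the "power" collapses to α, which is just the Type I error rate.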

Confidence intervals

A confidence interval is a range of values, computed from the sample, that is expected to contain the true population parameter at a specified confidence level (e.g., 95%). Confidence intervals provide additional context to hypothesis testing, helping to assess the estimate’s precision and offering a better understanding of the uncertainty surrounding the results.
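A minimal sketch of a confidence interval for a mean follows, using the normal approximation. This is an assumption that works reasonably for large samples; for small samples a t-quantile would usually replace the normal quantile. The function name and data are illustrative only.

```python
import math
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence: float = 0.95):
    """Normal-approximation confidence interval for the population mean."""
    n = len(sample)
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)  # about 1.96 for 95%
    margin = z * stdev(sample) / math.sqrt(n)
    center = mean(sample)
    return center - margin, center + margin


# Hypothetical measurements
data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0, 5.1, 4.9]
low, high = mean_confidence_interval(data)
print(f"95% CI: ({low:.3f}, {high:.3f})")
```

A narrower interval indicates a more precise estimate. Raising the confidence level or shrinking the sample widens it.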

By considering these additional aspects when interpreting the results of hypothesis tests, you can gain a more comprehensive understanding of the data and make more informed conclusions.

Hypothesis testing is an indispensable statistical tool for drawing meaningful inferences and making informed data-based decisions.

By comprehending the essential concepts such as null and alternative hypotheses, significance levels, p-values, and the distinction between parametric and non-parametric tests, you can proficiently apply hypothesis testing to a wide range of real-world situations.

Additionally, understanding the importance of effect sizes, statistical power, and confidence intervals will enhance your ability to interpret the results and make better decisions.

With many applications across various fields, including medicine, psychology, business, and environmental sciences, hypothesis testing is a versatile and valuable method for research and data analysis.

A comprehensive grasp of hypothesis testing techniques will enable professionals and researchers to strengthen their decision-making processes, optimize strategies, and deepen their understanding of the relationships between variables, leading to more impactful results and discoveries.


S.3 Hypothesis Testing

In reviewing hypothesis tests, we start first with the general idea. Then, we keep returning to the basic procedures of hypothesis testing, each time adding a little more detail.

The general idea of hypothesis testing involves:

- Making an initial assumption.

- Collecting evidence (data).

- Based on the available evidence (data), deciding whether to reject or not reject the initial assumption.

Every hypothesis test — regardless of the population parameter involved — requires the above three steps.

Example S.3.1

Is Normal Body Temperature Really 98.6 Degrees F?

Consider the population of many, many adults. A researcher hypothesizes that the average adult body temperature is lower than the often-advertised 98.6 degrees F. That is, the researcher wants an answer to the question: "Is the average adult body temperature 98.6 degrees? Or is it lower?" To answer this research question, the researcher starts by assuming that the average adult body temperature is 98.6 degrees F.

Then, the researcher goes out and tries to find evidence that refutes this initial assumption. In doing so, he selects a random sample of 130 adults. The average body temperature of the 130 sampled adults is 98.25 degrees.

Then, the researcher uses the data he collected to make a decision about his initial assumption. It is either likely or unlikely that the researcher would collect the evidence he did given his initial assumption that the average adult body temperature is 98.6 degrees:

- If it is likely, then the researcher does not reject his initial assumption that the average adult body temperature is 98.6 degrees. There is not enough evidence to do otherwise.

- If it is unlikely, then:

  - either the researcher's initial assumption is correct and he experienced a very unusual event;

  - or the researcher's initial assumption is incorrect.

In statistics, we generally don't make claims that require us to believe that a very unusual event happened. That is, in the practice of statistics, if the evidence (data) we collected is unlikely in light of the initial assumption, then we reject our initial assumption.
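To put a number on "likely or unlikely" for the body temperature example, one could compute how probable a sample mean of 98.25 or lower would be if the true mean were 98.6. The example gives the sample size and sample mean but not the spread of the data, so the standard deviation below (0.73 degrees) is an assumed value used only for illustration, and a normal approximation is used for the test statistic.

```python
import math
from statistics import NormalDist

mu_0 = 98.6           # initial assumption (null value), from the example
sample_mean = 98.25   # from the example
n = 130               # from the example
assumed_sd = 0.73     # ASSUMPTION: not stated in the example

# Standardize the observed mean under the initial assumption
standard_error = assumed_sd / math.sqrt(n)
z = (sample_mean - mu_0) / standard_error

# Lower-tailed p-value, because the researcher suspects the mean is lower
p_value = NormalDist().cdf(z)
print(f"z = {z:.2f}, p-value = {p_value:.2e}")
```

Under this assumed spread, the observed mean lies more than five standard errors below 98.6, so the evidence would be very unlikely if the initial assumption were true.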

Example S.3.2

Criminal Trial Analogy

One place where you can consistently see the general idea of hypothesis testing in action is in criminal trials held in the United States. Our criminal justice system assumes "the defendant is innocent until proven guilty." That is, our initial assumption is that the defendant is innocent.

In the practice of statistics, we make our initial assumption when we state our two competing hypotheses: the null hypothesis (H0) and the alternative hypothesis (HA). Here, our hypotheses are:

- H0: Defendant is not guilty (innocent)

- HA: Defendant is guilty

In statistics, we always assume the null hypothesis is true. That is, the null hypothesis is always our initial assumption.

The prosecution team then collects evidence (such as fingerprints, blood spots, hair samples, carpet fibers, shoe prints, ransom notes, and handwriting samples) in the hope of finding "sufficient evidence" to refute the assumption of innocence.

In statistics, the data are the evidence.

The jury then makes a decision based on the available evidence:

- If the jury finds sufficient evidence — beyond a reasonable doubt — to make the assumption of innocence refutable, the jury rejects the null hypothesis and deems the defendant guilty. We behave as if the defendant is guilty.

- If there is insufficient evidence, then the jury does not reject the null hypothesis. We behave as if the defendant is innocent.

In statistics, we always make one of two decisions. We either "reject the null hypothesis" or we "fail to reject the null hypothesis."

Errors in Hypothesis Testing

Did you notice the use of the phrase "behave as if" in the previous discussion? We "behave as if" the defendant is guilty; we do not "prove" that the defendant is guilty. And, we "behave as if" the defendant is innocent; we do not "prove" that the defendant is innocent.

This is a very important distinction! We make our decision based on evidence, not on 100% guaranteed proof. Again:

- If we reject the null hypothesis, we do not prove that the alternative hypothesis is true.

- If we do not reject the null hypothesis, we do not prove that the null hypothesis is true.

We merely state that there is enough evidence to behave one way or the other. This is always true in statistics! Because of this, whatever the decision, there is always a chance that we made an error.

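Let's review the two types of errors that can be made in criminal trials:

- The jury finds the defendant guilty when the defendant is actually innocent.

- The jury finds the defendant not guilty when the defendant is actually guilty.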

Table S.3.2 shows how this corresponds to the two types of errors in hypothesis testing.

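Note that, in statistics, we call the two types of errors by two different names: one is called a "Type I error," and the other is called a "Type II error." Here are the formal definitions of the two types of errors:

- Type I error: The null hypothesis is rejected when it is true.

- Type II error: The null hypothesis is not rejected when it is false.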

There is always a chance of making one of these errors. But, a good scientific study will minimize the chance of doing so!
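The claim that an error is always possible can be checked by simulation. The sketch below repeatedly draws samples from a population where the null hypothesis really is true (mean 0, known standard deviation 1), runs a two-sided z-test each time, and counts how often the null is wrongly rejected. The function name, sample size, trial count, and seed are all arbitrary choices for the illustration.

```python
import math
import random
from statistics import NormalDist


def simulated_type_i_rate(trials=4000, n=25, alpha=0.05, seed=0):
    """Fraction of tests that reject H0 even though H0 is true in every trial."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        # Data generated with H0 true: population mean is exactly 0
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = (sum(sample) / n) * math.sqrt(n)  # known sigma = 1
        p_value = 2.0 * (1.0 - std_normal.cdf(abs(z)))
        if p_value < alpha:
            rejections += 1  # a Type I error
    return rejections / trials


rate = simulated_type_i_rate()
print(f"Observed Type I error rate: {rate:.3f}")
```

The observed rate should land close to the chosen significance level. This reflects that α is the long-run probability of a Type I error when the null hypothesis is true.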

Making the Decision

Recall that it is either likely or unlikely that we would observe the evidence we did given our initial assumption. If it is likely, we do not reject the null hypothesis. If it is unlikely, then we reject the null hypothesis in favor of the alternative hypothesis. Effectively, then, making the decision reduces to determining "likely" or "unlikely."

In statistics, there are two ways to determine whether the evidence is likely or unlikely given the initial assumption:

- We could take the "critical value approach" (favored in many of the older textbooks).