
Systematic Review | Definition, Example & Guide

Published on June 15, 2022 by Shaun Turney . Revised on November 20, 2023.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesize all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

In the example used throughout this guide, Boyle and colleagues answered the question “What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?”

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

Table of contents

- What is a systematic review?

- Systematic review vs. meta-analysis

- Systematic review vs. literature review

- Systematic review vs. scoping review

- When to conduct a systematic review

- Pros and cons of systematic reviews

- Step-by-step example of a systematic review

- Other interesting articles

- Frequently asked questions about systematic reviews

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce bias. The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesize the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesizing all available evidence and evaluating the quality of the evidence. Synthesizing means bringing together different information to tell a single, cohesive story. The synthesis can be narrative (qualitative), quantitative, or both.


Systematic reviews often quantitatively synthesize the evidence using a meta-analysis. A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesize results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size.
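To make the idea of combining results concrete, here is a minimal sketch of one common pooling method, the inverse-variance (fixed-effect) estimate, in which more precise studies get more weight. The guide doesn't prescribe a particular method, so treat the choice of method, the function name `pool_fixed_effect`, and the numbers below as illustrative assumptions rather than data from a real review.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Return the inverse-variance pooled effect and its standard error."""
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("need effects and standard errors for at least two studies")
    # Each study is weighted by the inverse of its variance (1 / SE^2).
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical effect sizes and standard errors from two studies.
effects = [0.2, 0.4]
std_errors = [0.1, 0.1]

pooled, pooled_se = pool_fixed_effect(effects, std_errors)
ci_low = pooled - 1.96 * pooled_se
ci_high = pooled + 1.96 * pooled_se
print(f"pooled effect = {pooled:.3f}, 95% CI = ({ci_low:.3f}, {ci_high:.3f})")
```

In practice you would usually run this kind of analysis in dedicated statistical software, and a random-effects model may be more appropriate when the studies differ from one another.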

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarize and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Similar to a systematic review, a scoping review is a type of review that tries to minimize bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.


A systematic review is a good choice of review if you want to answer a question about the effectiveness of an intervention, such as a medical treatment.

To conduct a systematic review, you’ll need the following:

- A precise question, usually about the effectiveness of an intervention. The question needs to be about a topic that’s previously been studied by multiple researchers. If there’s no previous research, there’s nothing to review.

- If you’re doing a systematic review on your own (e.g., for a research paper or thesis), you should take appropriate measures to ensure the validity and reliability of your research.

- Access to databases and journal archives. Often, your educational institution provides you with access.

- Time. A professional systematic review is a time-consuming process: it will take the lead author about six months of full-time work. If you’re a student, you should narrow the scope of your systematic review and stick to a tight schedule.

- Bibliographic, word-processing, spreadsheet, and statistical software. For example, you could use EndNote, Microsoft Word, Excel, and SPSS.

Systematic reviews have many pros.

- They minimize research bias by considering all available evidence and evaluating each study for bias.

- Their methods are transparent, so they can be scrutinized by others.

- They’re thorough: they summarize all available evidence.

- They can be replicated and updated by others.

Systematic reviews also have a few cons.

- They’re time-consuming.

- They’re narrow in scope: they only answer the precise research question.

The 7 steps for conducting a systematic review are explained with an example.

Step 1: Formulate a research question

Formulating the research question is probably the most important step of a systematic review. A clear research question will:

- Allow you to more effectively communicate your research to other researchers and practitioners

- Guide your decisions as you plan and conduct your systematic review

A good research question for a systematic review has four components, which you can remember with the acronym PICO:

- Population(s) or problem(s)

- Intervention(s)

- Comparison(s)

- Outcome(s)

You can rearrange these four components to write your research question:

- What is the effectiveness of I versus C for O in P?

Sometimes, you may want to include a fifth component, the type of study design. In this case, the acronym is PICOT.

- Type of study design(s)

In the eczema example, Boyle and colleagues’ research question had the following components:

- The population of patients with eczema

- The intervention of probiotics

- In comparison to no treatment, placebo, or non-probiotic treatment

- The outcome of changes in participant-, parent-, and doctor-rated symptoms of eczema and quality of life

- Randomized controlled trials, a type of study design

Their research question was:

- What is the effectiveness of probiotics versus no treatment, a placebo, or a non-probiotic treatment for reducing eczema symptoms and improving quality of life in patients with eczema?
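If you keep your PICO components in a structured form, you can generate the question from the template above and reuse the same components later in your protocol. The sketch below is one hypothetical way to do that; the class name `PICO` and its field names are assumptions made for illustration, and the values are taken from the eczema example.

```python
from dataclasses import dataclass


@dataclass
class PICO:
    """The four components of a PICO research question."""
    population: str
    intervention: str
    comparison: str
    outcome: str

    def question(self) -> str:
        # Template: "What is the effectiveness of I versus C for O in P?"
        return (
            f"What is the effectiveness of {self.intervention} versus "
            f"{self.comparison} for {self.outcome} in {self.population}?"
        )


eczema = PICO(
    population="patients with eczema",
    intervention="probiotics",
    comparison="no treatment, a placebo, or a non-probiotic treatment",
    outcome="reducing eczema symptoms and improving quality of life",
)
print(eczema.question())
```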

Step 2: Develop a protocol

A protocol is a document that contains your research plan for the systematic review. This is an important step because having a plan allows you to work more efficiently and reduces bias.

Your protocol should include the following components:

- Background information: Provide the context of the research question, including why it’s important.

- Research objective(s): Rephrase your research question as an objective.

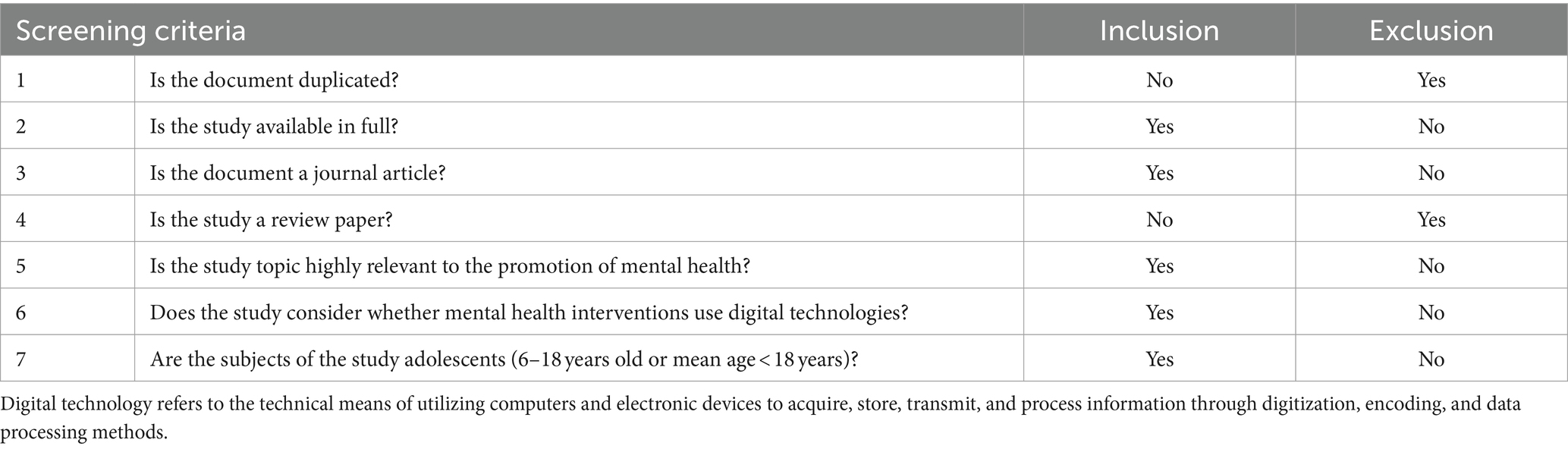

- Selection criteria: State how you’ll decide which studies to include or exclude from your review.

- Search strategy: Discuss your plan for finding studies.

- Analysis: Explain what information you’ll collect from the studies and how you’ll synthesize the data.

If you’re a professional seeking to publish your review, it’s a good idea to bring together an advisory committee. This is a group of about six people who have experience in the topic you’re researching. They can help you make decisions about your protocol.

It’s highly recommended to register your protocol. Registering your protocol means submitting it to a database such as PROSPERO or ClinicalTrials.gov.

Step 3: Search for all relevant studies

Searching for relevant studies is the most time-consuming step of a systematic review.

To reduce bias, it’s important to search for relevant studies very thoroughly. Your strategy will depend on your field and your research question, but sources generally fall into these four categories:

- Databases: Search multiple databases of peer-reviewed literature, such as PubMed or Scopus. Think carefully about how to phrase your search terms and include multiple synonyms of each word. Use Boolean operators if relevant.

- Handsearching: In addition to searching the primary sources using databases, you’ll also need to search manually. One strategy is to scan relevant journals or conference proceedings. Another strategy is to scan the reference lists of relevant studies.

- Gray literature: Gray literature includes documents produced by governments, universities, and other institutions that aren’t published by traditional publishers. Graduate student theses are an important type of gray literature, which you can search using the Networked Digital Library of Theses and Dissertations (NDLTD). In medicine, clinical trial registries are another important type of gray literature.

- Experts: Contact experts in the field to ask if they have unpublished studies that should be included in your review.

At this stage of your review, you won’t read the articles yet. Simply save any potentially relevant citations using bibliographic software, such as Scribbr’s APA or MLA Generator.
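For the database searches, one way to combine synonyms with Boolean operators is to join the synonyms for each concept with OR and then join the concepts with AND. The sketch below assumes a hypothetical helper named `build_query`. The search terms are illustrative only and are not the terms used in the example review, and the exact query syntax differs between databases, so check each database’s help pages before reusing a query.

```python
def build_query(concepts):
    """OR the synonyms within each concept, then AND the concepts together."""
    groups = []
    for synonyms in concepts:
        # Put multi-word terms in quotes so they are searched as phrases.
        quoted = [f'"{term}"' if " " in term else term for term in synonyms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


# Illustrative synonyms for two concepts: the condition and the intervention.
concepts = [
    ["eczema", "atopic dermatitis"],
    ["probiotic", "probiotics", "lactobacillus"],
]
query = build_query(concepts)
print(query)
```

Saving the exact query string for each database also makes it easier to report your search strategy later.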

In the eczema example, Boyle and colleagues searched the following sources:

- Databases: EMBASE, PsycINFO, AMED, LILACS, and ISI Web of Science

- Handsearch: Conference proceedings and reference lists of articles

- Gray literature: The Cochrane Library, the metaRegister of Controlled Trials, and the Ongoing Skin Trials Register

- Experts: Authors of unpublished registered trials, pharmaceutical companies, and manufacturers of probiotics

Step 4: Apply the selection criteria

Applying the selection criteria is a three-person job. Two of you will independently read the studies and decide which to include in your review based on the selection criteria you established in your protocol. The third person’s job is to break any ties.

To increase inter-rater reliability, ensure that everyone thoroughly understands the selection criteria before you begin.
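If you want to put a number on how well the two reviewers agree, one widely used statistic is Cohen’s kappa, which corrects the raw agreement rate for the agreement you would expect by chance. The guide doesn’t name a specific statistic, so the choice of kappa, the function name `cohens_kappa`, and the screening decisions below are assumptions for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: based on how often each rater uses each label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / n ** 2
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical screening decisions for ten abstracts.
reviewer_1 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "include", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "exclude",
              "exclude", "include", "include", "exclude", "exclude"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(f"kappa = {kappa:.2f}")
```

A low value on a small pilot batch is a sign that the selection criteria need to be discussed again before you screen the rest.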

If you’re writing a systematic review as a student for an assignment, you might not have a team. In this case, you’ll have to apply the selection criteria on your own; you can mention this as a limitation in your paper’s discussion.

You should apply the selection criteria in two phases:

- Based on the titles and abstracts: Decide whether each article potentially meets the selection criteria based on the information provided in the abstracts.

- Based on the full texts: Download the articles that weren’t excluded during the first phase. If an article isn’t available online or through your library, you may need to contact the authors to ask for a copy. Read the articles and decide which articles meet the selection criteria.

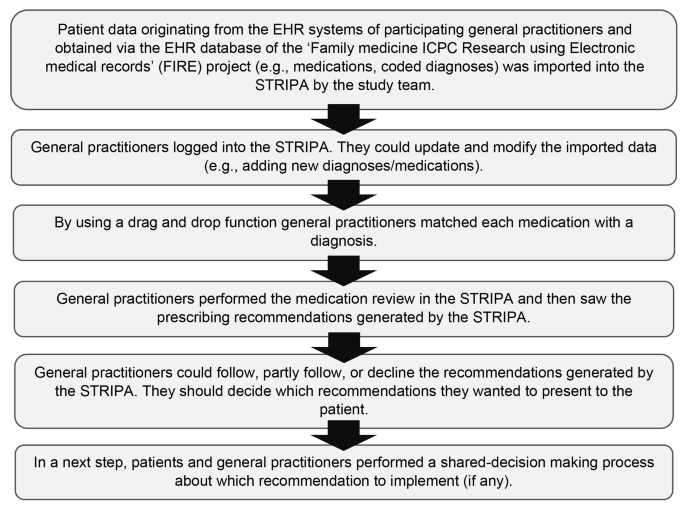

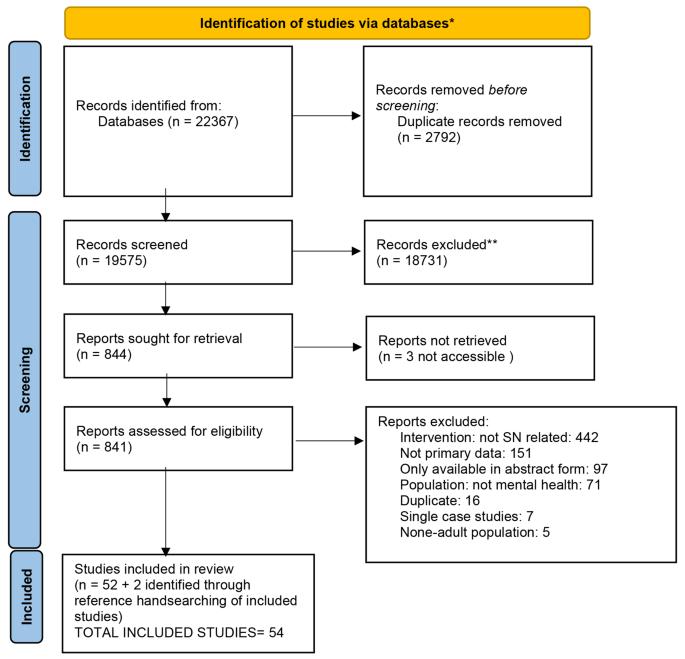

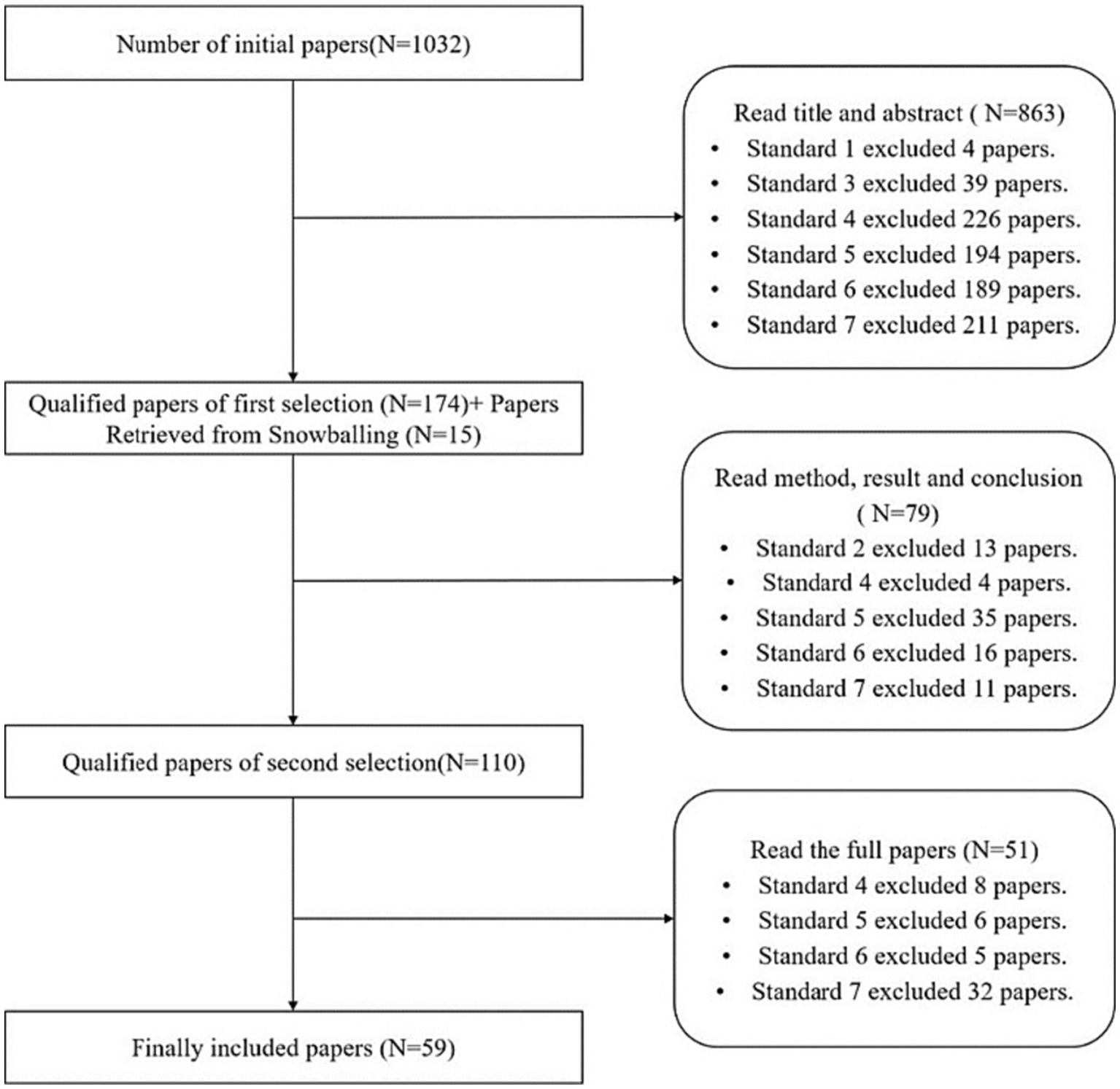

It’s very important to keep a meticulous record of why you included or excluded each article. When the selection process is complete, you can summarize what you did using a PRISMA flow diagram.
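A simple structured log makes this record easy to summarize. The sketch below assumes each screening decision is stored as a small record with a stage, a decision, and a reason; the field names, the function `summarize_screening`, and the entries are all hypothetical. The resulting counts are the kind of numbers a flow diagram reports.

```python
from collections import Counter

# Hypothetical screening log: one record per article and screening stage.
screening_log = [
    {"id": "study-001", "stage": "title_abstract", "decision": "exclude", "reason": "wrong population"},
    {"id": "study-002", "stage": "title_abstract", "decision": "exclude", "reason": "not a randomized trial"},
    {"id": "study-003", "stage": "full_text", "decision": "exclude", "reason": "not a randomized trial"},
    {"id": "study-004", "stage": "full_text", "decision": "include", "reason": ""},
    {"id": "study-005", "stage": "full_text", "decision": "include", "reason": ""},
    {"id": "study-006", "stage": "title_abstract", "decision": "exclude", "reason": "wrong population"},
]


def summarize_screening(log):
    """Count included studies and exclusions per (stage, reason)."""
    included = sum(1 for record in log if record["decision"] == "include")
    excluded = Counter(
        (record["stage"], record["reason"])
        for record in log
        if record["decision"] == "exclude"
    )
    return included, excluded


included, excluded = summarize_screening(screening_log)
print(f"included: {included}")
for (stage, reason), count in sorted(excluded.items()):
    print(f"excluded at {stage}: {reason} ({count})")
```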

Next, Boyle and colleagues found the full texts for each of the remaining studies. Boyle and Tang read through the articles to decide if any more studies needed to be excluded based on the selection criteria.

When Boyle and Tang disagreed about whether a study should be excluded, they discussed it with Varigos until the three researchers came to an agreement.

Step 5: Extract the data

Extracting the data means collecting information from the selected studies in a systematic way. There are two types of information you need to collect from each study:

- Information about the study’s methods and results. The exact information will depend on your research question, but it might include the year, study design, sample size, context, research findings, and conclusions. If any data are missing, you’ll need to contact the study’s authors.

- Your judgment of the quality of the evidence, including risk of bias.

You should collect this information using forms. You can find sample forms in The Registry of Methods and Tools for Evidence-Informed Decision Making and the Grading of Recommendations, Assessment, Development and Evaluations Working Group.

Extracting the data is also a three-person job. Two people should do this step independently, and the third person will resolve any disagreements.

They also collected data about possible sources of bias, such as how the study participants were randomized into the control and treatment groups.

Step 6: Synthesize the data

Synthesizing the data means bringing together the information you collected into a single, cohesive story. There are two main approaches to synthesizing the data:

- Narrative (qualitative): Summarize the information in words. You’ll need to discuss the studies and assess their overall quality.

- Quantitative: Use statistical methods to summarize and compare data from different studies. The most common quantitative approach is a meta-analysis, which allows you to combine results from multiple studies into a summary result.

Generally, you should use both approaches together whenever possible. If you don’t have enough data, or the data from different studies aren’t comparable, then you can take just a narrative approach. However, you should justify why a quantitative approach wasn’t possible.
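One way to check how consistent the study results are before pooling them is to compute a heterogeneity statistic. The sketch below uses Cochran’s Q and the I² statistic, two widely used measures; the guide itself doesn’t name them, so treat this choice, the function name `heterogeneity`, and the numbers as illustrative assumptions. A high I² suggests that much of the variation between studies reflects real differences rather than chance.

```python
def heterogeneity(effects, std_errors):
    """Return Cochran's Q, its degrees of freedom, and I-squared (in percent)."""
    if len(effects) != len(std_errors) or len(effects) < 2:
        raise ValueError("need effects and standard errors for at least two studies")
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    # Q: weighted sum of squared deviations from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    # I-squared: share of variation beyond what chance alone would produce.
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i_squared


# Hypothetical effect sizes and standard errors from three studies.
effects = [0.1, 0.3, 0.8]
std_errors = [0.1, 0.1, 0.1]

q, df, i_squared = heterogeneity(effects, std_errors)
print(f"Q = {q:.1f} on {df} df, I-squared = {i_squared:.1f}%")
```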

Boyle and colleagues also divided the studies into subgroups, such as studies about babies, children, and adults, and analyzed the effect sizes within each group.

Step 7: Write and publish a report

The purpose of writing a systematic review article is to share the answer to your research question and explain how you arrived at this answer.

Your article should include the following sections:

- Abstract: A summary of the review

- Introduction: Including the rationale and objectives

- Methods: Including the selection criteria, search method, data extraction method, and synthesis method

- Results: Including results of the search and selection process, study characteristics, risk of bias in the studies, and synthesis results

- Discussion: Including interpretation of the results and limitations of the review

- Conclusion: The answer to your research question and implications for practice, policy, or research

To verify that your report includes everything it needs, you can use the PRISMA checklist.

Once your report is written, you can publish it in a systematic review database, such as the Cochrane Database of Systematic Reviews, and/or in a peer-reviewed journal.

In their report, Boyle and colleagues concluded that probiotics cannot be recommended for reducing eczema symptoms or improving quality of life in patients with eczema.

Note: Generative AI tools like ChatGPT can be useful at various stages of the writing and research process and can help you to write your systematic review. However, we strongly advise against trying to pass AI-generated text off as your own work.


A literature review is a survey of scholarly sources (such as books, journal articles, and theses) related to a specific topic or research question.

It is often written as part of a thesis, dissertation, or research paper, in order to situate your work in relation to existing knowledge.

A literature review is a survey of credible sources on a topic, often used in dissertations, theses, and research papers. Literature reviews give an overview of knowledge on a subject, helping you identify relevant theories and methods, as well as gaps in existing research. Literature reviews are set up similarly to other academic texts, with an introduction, a main body, and a conclusion.

An annotated bibliography is a list of source references that has a short description (called an annotation) for each of the sources. It is often assigned as part of the research process for a paper.

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.


Turney, S. (2023, November 20). Systematic Review | Definition, Example & Guide. Scribbr. Retrieved March 21, 2024, from https://www.scribbr.com/methodology/systematic-review/


Synthesising quantitative evidence in systematic reviews of complex health interventions

Volume 4, Issue Suppl 1


- Julian P T Higgins 1 ,

- José A López-López 1 ,

- Betsy J Becker 2 ,

- Sarah R Davies 1 ,

- Sarah Dawson 1 ,

- Jeremy M Grimshaw 3 , 4 ,

- Luke A McGuinness 1 ,

- Theresa H M Moore 1 , 5 ,

- Eva A Rehfuess 6 ,

- James Thomas 7 ,

- Deborah M Caldwell 1

- 1 Population Health Sciences , Bristol Medical School, University of Bristol , Bristol , UK

- 2 Department of Educational Psychology and Learning Systems, College of Education , Florida State University , Tallahassee , Florida , USA

- 3 Clinical Epidemiology Program , Ottawa Hospital Research Institute, The Ottawa Hospital , Ottawa , Ontario , Canada

- 4 Department of Medicine , University of Ottawa , Ottawa , Ontario , Canada

- 5 NIHR Collaboration for Leadership in Applied Health Care (CLAHRC) West , University Hospitals Bristol NHS Foundation Trust , Bristol , UK

- 6 Institute for Medical Information Processing , Biometry and Epidemiology, Pettenkofer School of Public Health, LMU Munich , Munich , Germany

- 7 EPPI-Centre, Department of Social Science , University College London , London , UK

- Correspondence to Professor Julian P T Higgins; julian.higgins{at}bristol.ac.uk

Public health and health service interventions are typically complex: they are multifaceted, with impacts at multiple levels and on multiple stakeholders. Systematic reviews evaluating the effects of complex health interventions can be challenging to conduct. This paper is part of a special series of papers considering these challenges particularly in the context of WHO guideline development. We outline established and innovative methods for synthesising quantitative evidence within a systematic review of a complex intervention, including considerations of the complexity of the system into which the intervention is introduced. We describe methods in three broad areas: non-quantitative approaches, including tabulation, narrative and graphical approaches; standard meta-analysis methods, including meta-regression to investigate study-level moderators of effect; and advanced synthesis methods, in which models allow exploration of intervention components, investigation of both moderators and mediators, examination of mechanisms, and exploration of complexities of the system. We offer guidance on the choice of approach that might be taken by people collating evidence in support of guideline development, and emphasise that the appropriate methods will depend on the purpose of the synthesis, the similarity of the studies included in the review, the level of detail available from the studies, the nature of the results reported in the studies, the expertise of the synthesis team and the resources available.

- meta-analysis

- complex interventions

- systematic reviews

- guideline development

Data availability statement

No additional data are available.

This is an open access article distributed under the terms of the Creative Commons Attribution IGO License ( CC BY NC 3.0 IGO ), which permits use, distribution, and reproduction in any medium, provided the original work is properly cited. In any reproduction of this article there should not be any suggestion that WHO or this article endorse any specific organization or products. The use of the WHO logo is not permitted. This notice should be preserved along with the article’s original URL.

Disclaimer: The author is a staff member of the World Health Organization. The author alone is responsible for the views expressed in this publication and they do not necessarily represent the views, decisions or policies of the World Health Organization.

https://doi.org/10.1136/bmjgh-2018-000858


Summary box

Quantitative syntheses of studies on the effects of complex health interventions face high diversity across studies and limitations in the data available.

Statistical and non-statistical approaches are available for tackling intervention complexity in a synthesis of quantitative data in the context of a systematic review.

Appropriate methods will depend on the purpose of the synthesis, the number and similarity of studies included in the review, the level of detail available from the studies, the nature of the results reported in the studies, the expertise of the synthesis team and the resources available.

We offer considerations for selecting methods for synthesis of quantitative data to address important types of questions about the effects of complex interventions.

Public health and health service interventions are typically complex. They are usually multifaceted, with impacts at multiple levels and on multiple stakeholders. Also, the systems within which they are implemented may change and adapt to enhance or dampen their impact. 1 Quantitative syntheses (‘meta-analyses’) of studies of complex interventions seek to integrate quantitative findings across multiple studies to achieve a coherent message greater than the sum of their parts. Interest is growing in how the standard systematic review and meta-analysis toolkit can be enhanced to address complexity of interventions and their impact. 2 A recent report from the Agency for Healthcare Research and Quality and a series of papers in the Journal of Clinical Epidemiology provide useful background on some of the challenges. 3–6

This paper is part of a series to explore the implications of complexity for systematic reviews and guideline development, commissioned by WHO. 7 Clearly, and as covered by other papers in this series, guideline development encompasses the consideration of many different aspects, 8 such as intervention effectiveness, economic considerations, acceptability 9 or certainty of evidence, 10 and requires the integration of different types of quantitative as well as qualitative evidence. 11 12 This paper is specifically concerned with methods available for the synthesis of quantitative results in the context of a systematic review on the effects of a complex intervention. We aim to point those collating evidence in support of guideline development to methodological approaches that will help them integrate the quantitative evidence they identify. A summary of how these methods link to many of the types of complexity encountered is provided in table 1 , based on the examples provided in a table from an earlier paper in the series. 1 An annotated list of the methods we cover is provided in table 2 .

Table 1: Quantitative synthesis possibilities to address aspects of complexity

Table 2: Quantitative graphical and synthesis approaches mentioned in the paper, with their main strengths and weaknesses in the context of complex interventions

We begin by reiterating the importance of starting with meaningful research questions and an awareness of the purpose of the synthesis and any relevant background knowledge. An important issue in systematic reviews of complex interventions is that data available for synthesis are often extremely limited, due to small numbers of relevant studies and limitations in how these studies are conducted and their results are reported. Furthermore, it is uncommon for two studies to evaluate exactly the same intervention, in part because of the interventions’ inherent complexity. Thus, each study may be designed to provide information on a unique context or a novel intervention approach. Outcomes may be measured in different ways and at different time points. We therefore discuss possible approaches when data are highly limited or highly heterogeneous, including the use of graphical approaches to present very basic summary results. We then discuss statistical approaches for combining results and for understanding the implications of various kinds of complexity.

In several places we draw on an example of a review undertaken to inform a recent WHO guideline on protecting, promoting and supporting breast feeding. 13 The review seeks to determine the effects of interventions to promote breast feeding delivered in five types of settings (health services, home, community, workplace, policy context or a combination of settings). 8 The included interventions were predominantly multicomponent, and were implemented in complex systems across multiple contexts. The review included 195 studies, including many from low-income and middle-income countries, and concluded that interventions should be delivered in a combination of settings to achieve high breastfeeding rates.

The importance of the research question

The starting point in any synthesis of quantitative evidence is a clear purpose. The input of stakeholders is critical to ensure that questions are framed appropriately, addressing issues important to those commissioning, delivering and affected by the intervention. Detailed discussion of the development of research questions is provided in an earlier paper in the series, 1 and a subsequent paper explains the importance of taking context into account. 9 The first of these papers describes two possible perspectives. A complex interventions perspective emphasises the complexities involved in conceptualising, specifying and implementing the intervention per se, including the array of possibly interacting components and the behaviours required to implement it. A complex systems perspective emphasises the complexity of the systems into which the intervention is introduced, including possible interactions between the intervention and the system, interactions between individuals within the system and how the whole system responds to the intervention.

The simplest purpose of a systematic review is to determine whether a particular type of complex intervention (or class of interventions) is effective compared with a ‘usual practice’ alternative. The familiar PICO framework is helpful for framing the review: 14 in the PICO framework, a broad research question about effectiveness is uniquely specified by describing the participants (‘P’, including the setting and prevailing conditions) to which the intervention is to be applied; the intervention (‘I’) and comparator (‘C’) of interest, and the outcomes (‘O’, including their time course) that might be impacted by the intervention. In the breastfeeding review, the primary synthesis approach was to combine all available studies, irrespective of setting, and perform separate meta-analyses for different outcomes. 15

More useful than a review that asks ‘does a complex intervention work?’ is one that determines the situations in which a complex intervention has a larger or smaller effect. Indeed, research questions targeted by syntheses in the presence of complexity often dissect one or more of the PICO elements to explore how intervention effects vary both within and across studies (ie, treating the PICO elements as ‘moderators’). For instance, analyses may explore variation across participants, settings and prevailing conditions (including context); or across interventions (including different intervention components that may be present or absent in different studies); or across outcomes (including different outcome measures, at different levels of the system and at different time points) on which effects of the intervention occur. In addition, there may be interest in how aspects of the underlying system or the intervention itself mediate the effects, or in the role of intermediate outcomes on the pathway from intervention to impact. 16 In the breastfeeding review, interest moved from the overall effects across interventions to investigations of how effects varied by such factors as intervention delivery setting, high-income versus low-income country, and urban versus rural setting. 15
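As an illustration of treating a study-level characteristic as a moderator, the following is a minimal sketch of a fixed-effect meta-regression with a single moderator, fitted by inverse-variance weighted least squares. It is not the analysis used in the breastfeeding review; the function name `meta_regression`, the urban versus rural coding and the effect estimates are hypothetical, and the sketch ignores residual between-study heterogeneity, which a random-effects meta-regression would model.

```python
def meta_regression(effects, std_errors, moderator):
    """Weighted least squares fit of effect = intercept + slope * moderator."""
    if not (len(effects) == len(std_errors) == len(moderator)):
        raise ValueError("effects, std_errors and moderator must have equal length")
    # Inverse-variance weights, as in a standard fixed-effect meta-analysis.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_w = sum(weights)
    mean_x = sum(w * x for w, x in zip(weights, moderator)) / total_w
    mean_y = sum(w * y for w, y in zip(weights, effects)) / total_w
    sxx = sum(w * (x - mean_x) ** 2 for w, x in zip(weights, moderator))
    if sxx == 0:
        raise ValueError("the moderator must vary across studies")
    sxy = sum(
        w * (x - mean_x) * (y - mean_y)
        for w, x, y in zip(weights, moderator, effects)
    )
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical study-level data: moderator coded 0 = rural, 1 = urban.
effects = [0.1, 0.3, 0.5, 0.7]
std_errors = [0.1, 0.1, 0.1, 0.1]
urban = [0, 0, 1, 1]

intercept, slope = meta_regression(effects, std_errors, urban)
print(f"rural effect = {intercept:.2f}, urban minus rural = {slope:.2f}")
```

With a binary moderator, the intercept is the pooled effect in the reference group and the slope is the difference in pooled effect between the two groups.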

The role of logic models to inform a synthesis

An earlier paper describes the benefits of using system-based logic models to characterise a priori theories about how the system operates. 1 These provide a useful starting point for most syntheses since they encourage consideration of all aspects of complexity in relation to the intervention or the system (or both). They can help identify important mediators and moderators, and inform decisions about what aspects of the intervention and system need to be addressed in the synthesis. As an example, a protocol for a review of the health effects of environmental interventions to reduce the consumption of sugar-sweetened beverages included a system-based logic model, detailing how the characteristics of the beverages, and the physiological characteristics and psychological characteristics of individuals, are thought to impact on outcomes such as weight gain and cardiovascular disease. 17 The logic model informs the selection of outcomes and the general plans for synthesis of the findings of included studies. However, system-based models do not usually include details of how implementation of an intervention into the system is likely to affect subsequent outcomes. They therefore have a limited role in informing syntheses that seek to explain mechanisms of action.

A quantitative synthesis may draw on a specific proposed framework for how an intervention might work; these are sometimes referred to as process-orientated logic models, and may be strongly driven by qualitative research evidence. 12 They represent causal processes, describing what components or aspects of an intervention are thought to impact on what behaviours and actions, and what the further consequences of these impacts are likely to be. 18 They may encompass mediators of effect and moderators of effect. A synthesis may simply adopt the proposed causal model at face value and attempt to quantify the relationships described therein. Where more than one possible causal model is available, a synthesis may explore which of the models is better supported by the data, for example, by examining the evidence for specific links within the model or by identifying a statistical model that corresponds to the overall causal model. 18 19

A systematic review on community-level interventions for improving access to food in low-income and middle-income countries was based on a logic model that depicts how interventions might lead to improved health status. 20 The model includes direct effects, such as increased financial resources of individuals and decreased food prices; intermediate effects, such as increased quantity of food available and increase in intake; and main outcomes of interest, such as nutritional status and health indicators. The planned statistical synthesis, however, was to tackle these one at a time.

Considering the types of studies available

Studies of the effects of complex interventions may be randomised or non-randomised, and often involve clustering of participants within social or organisational units. Randomised trials, if sufficiently large, provide the most convincing evidence about the effects of interventions because randomisation should result in intervention and comparator groups with similar distributions of both observed and unobserved baseline characteristics. However, randomised trials of complex interventions may be difficult or impossible to undertake, or may be performed only in specific contexts, yielding results that are not generalisable. Non-randomised study designs include so-called ‘quasi-experiments’ and may be longitudinal studies, including interrupted time series and before-after studies, with or without a control group. Non-randomised studies are at greater risk of bias, sometimes substantially so, although they may be undertaken in contexts that are more relevant to decision making. Analyses of non-randomised studies often use statistical controls for confounders to account for differences between intervention groups, and challenges are introduced when different sets of confounders are used in different studies. 21 22

Randomised trials and non-randomised studies might both be included in a review, and analysts may have to decide whether to combine these in one synthesis, and whether to combine results from different types of non-randomised studies in a single analysis. Studies may differ in two ways: by answering different questions, or by answering similar questions with different risks of bias. The research questions must be sufficiently similar and the studies sufficiently free of bias for a synthesis to be meaningful. In the breastfeeding review, randomised, quasi-experimental and observational studies were combined; no evidence suggested that the effects differed across designs. 15 In practice, many methodologists recommend against combining randomised with non-randomised studies. 23

Preparing for a quantitative synthesis

Before undertaking a quantitative synthesis of complex interventions, it can be helpful to begin the synthesis non-quantitatively, looking at patterns and characteristics of the data identified. Systematic tabulation of information is recommended, and this might be informed by a prespecified logic model. The most established framework for non-quantitative synthesis is that proposed by Popay et al. 24 The Cochrane Consumers and Communication group succinctly summarise the process as an ‘investigation of the similarities and the differences between the findings of different studies, as well as exploration of patterns in the data’. 25 Another useful framework was described by Petticrew and Roberts. 26 They identify three stages in the initial narrative synthesis: (1) Organisation of studies into logical categories, the structure of which will depend on the purpose of the synthesis, possibly relating to study design, outcome or intervention types. (2) Within-study analysis, involving the description of findings within each study. (3) Cross-study synthesis, in which variations in study characteristics and potential biases are integrated and the range of effects described. Aspects of this process are likely to be implemented in any systematic review, even when a detailed quantitative synthesis is undertaken.

In some circumstances the available data are too diverse, too non-quantitative or too sparse for a quantitative synthesis to be meaningful even if it is possible. The best that can be achieved in many reviews of complex interventions is a non-quantitative synthesis following the guidance given in the above frameworks.

Options when effect size estimates cannot be obtained or studies are too diverse to combine

Graphical approaches.

Graphical displays can be very valuable to illustrate patterns in results of studies. 27 We illustrate some options in figure 1. Forest plots are the standard illustration of the results of multiple studies (see figure 1, panel A), but require a similar effect size estimate from each study. For studies of complex interventions, the diversity of approaches to the intervention, the context, 1 evaluation approaches and reporting differences can lead to considerable variation across studies in what results are available. Some novel graphical approaches have been proposed for such situations. A recent development is the albatross plot, which plots p values against sample sizes, with approximate effect-size contours superimposed (see figure 1, panel B). 28 The contours are computed from the p values and sample sizes, based on an assumption about the type of analysis that would have given rise to the p values. Although these plots are designed for situations when effect size estimates are not available, the contours can be used to infer approximate effect sizes from studies that are analysed and reported in highly diverse ways. Such an advantage may prove to be a disadvantage, however, if the contours are overinterpreted.

Figure 1: Example graphical displays of data from a review of interventions to promote breast feeding, for the outcome of continued breast feeding up to 23 months. 15 Panel A: Forest plot for relative risk (RR) estimates from each study. Panel B: Albatross plot of p value against sample size (effect contours drawn for risk ratios assuming a baseline risk of 0.15; sample sizes and baseline risks extracted from the original papers by the current authors). Panel C: Harvest plot (heights reflect design: randomised trials (tall), quasi-experimental studies (medium), observational studies (short); bar shading reflects follow-up: longest follow-up (black) to shortest follow-up (light grey) or no information (white)). Panel D: Bubble plot (bubble sizes and colours reflect design: randomised trials (large, green), quasi-experimental studies (medium, red), observational studies (small, blue); precision defined as inverse of the SE of each effect estimate (derived from the CIs); categories are: “Potential Harm”: RR <0.8; “No Effect”: RRs between 0.8 and 1.25; “Potential Benefit”: RR >1.25 and CI includes RR=1; “Benefit”: RR >1.25 and CI excludes RR=1).

Harvest plots have been proposed by Ogilvie et al as a graphical extension of a vote counting approach to synthesis (see figure 1, panel C). 29 However, approaches based on vote counting of statistically significant results have been criticised on the basis of their poor statistical properties, and because statistical significance is an outdated and unhelpful notion. 30 The harvest plot is a matrix of small illustrations, with different outcome domains defining rows and different qualitative conclusions (negative effect, no effect, positive effect) defining columns. Each study is represented by a bar that is positioned according to its measured outcome and qualitative conclusion. Bar heights and shadings can depict features of the study, such as objectivity of the outcome measure, suitability of the study design and study quality. 29 31 A similar idea to the harvest plot is the effect direction plot proposed by Thomson and Thomas. 32

A device to plot the findings from a large and complex collection of evidence is a bubble plot (see figure 1, panel D). A bubble plot illustrates the direction of each finding (or whether the finding was unclear) on a horizontal scale, using a vertical scale to indicate the volume of evidence, and with bubble sizes to indicate some measure of credibility of each finding. Such an approach can also depict findings of collections of studies rather than individual studies, and was used successfully, for example, to summarise findings from a review of systematic reviews of the effects of acupuncture on various indications for pain. 33
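The categories and the precision measure described for the bubble plot in figure 1 (panel D) can be sketched in code. This is a minimal illustration, not the authors' implementation: it assumes 95% CIs that are symmetric on the log scale, and the function names and the treatment of values falling exactly on 0.8 or 1.25 are assumptions made for the example.

```python
import math


def se_from_ci(lower, upper, z=1.96):
    """Approximate SE of log(RR) from a CI (assumes a 95% CI, symmetric on the log scale)."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


def precision(lower, upper):
    """Precision as described in the figure legend: the inverse of the SE."""
    return 1.0 / se_from_ci(lower, upper)


def classify(rr, lower, upper):
    """Assign a finding to one of the four categories listed in the figure legend.

    Boundary values (RR exactly 0.8 or 1.25) are placed in "No Effect";
    that choice is an assumption, as the legend does not specify it.
    """
    if rr < 0.8:
        return "Potential Harm"
    if rr <= 1.25:
        return "No Effect"
    ci_excludes_one = lower > 1.0 or upper < 1.0
    return "Benefit" if ci_excludes_one else "Potential Benefit"
```

Each study then contributes one point, with its category on the horizontal scale and its precision available for the vertical scale or bubble size.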

Statistical methods not based on effect size estimates

We have mentioned that a frequent problem is that standard meta-analysis methods cannot be used because data are not available in a similar format from every study. In general, the core principles of meta-analysis can be applied even in this situation, as is highlighted in the Cochrane Handbook, by addressing the questions: ‘What is the direction of effect?’; ‘What is the size of effect?’; ‘Is the effect consistent across studies?’; and ‘What is the strength of evidence for the effect?’. 34

Alternatives to the estimation of effect sizes could be used more often than they are in practice, allowing some basic statistical inferences despite diversely reported results. The most fundamental analysis is to test the overall null hypothesis of no effect in any of the studies. Such a test can be undertaken using only minimally reported information from each study. At its simplest, a binomial test can be performed using only the direction of effect observed in each study, irrespective of its CI or statistical significance. 35 Where exact p values are available as well as the direction of effect, a more powerful test can be performed by combining these using, for example, Fisher’s combination of p values. 36 It is important that these p values are computed appropriately, however, accounting for clustering or matching of participants within the studies. Rejecting the null hypothesis on the basis of such tests provides no information about the magnitude of the effect; it indicates only that an effect is present in at least one study, and the direction of that effect. 37
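Both tests are simple enough to compute with the Python standard library. The sketch below is illustrative only; it assumes that studies showing no difference in either direction have been set aside before the sign test, and that the p values passed to Fisher's method are one-sided and all refer to the same direction of effect. The function names are hypothetical.

```python
import math


def sign_test_p(n_positive, n_studies):
    """Two-sided exact binomial (sign) test of H0: each direction is equally likely."""
    k = max(n_positive, n_studies - n_positive)
    upper_tail = sum(math.comb(n_studies, i) for i in range(k, n_studies + 1))
    return min(1.0, 2 * upper_tail / 2 ** n_studies)


def fisher_combined_p(p_values):
    """Fisher's method: X = -2 * sum(ln p) follows chi-square with 2k df under H0.

    For even degrees of freedom the chi-square upper tail has a closed form,
    so no statistical library is needed.
    """
    k = len(p_values)
    half_x = -sum(math.log(p) for p in p_values)  # this is X / 2
    return math.exp(-half_x) * sum(half_x ** i / math.factorial(i) for i in range(k))
```

For example, `sign_test_p(8, 10)` tests whether 8 of 10 studies favouring the intervention is more lopsided than chance would suggest, using nothing but the reported directions.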

Standard synthesis methods

Meta-analysis for overall effect.

Probably the most familiar approach to meta-analysis is that of estimating a single summary effect across similar studies. This simple approach lends itself to the use of forest plots to display the results of individual studies as well as syntheses, as illustrated for the breastfeeding studies in figure 1 (panel A). This analysis addresses the broad question of whether evidence from a collection of studies supports an impact of the complex intervention of interest, and requires that every study makes a comparison of a relevant intervention against a similar alternative. In the context of complex interventions, this is described by Caldwell and Welton as the ‘lumping’ approach, 38 and by Guise et al as the ‘holistic’ approach. 5 6 One key limitation of the simple approach is that it requires similar types of data from each study. A second limitation is that the meta-analysis result may have limited relevance when the studies are diverse in their characteristics. Fixed-effect models, for instance, are unlikely to be appropriate for complex interventions because they ignore between-studies variability in underlying effect sizes. Results based on random-effects models will need to be interpreted by acknowledging the spread of effects across studies, for example, using prediction intervals. 39
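The difference between fixed-effect and random-effects summaries, and the role of a prediction interval, can be made concrete with a short sketch. The text does not name a particular estimator of between-study variance; the DerSimonian-Laird moment estimator is used here purely as a familiar example. Effects are assumed to be on an additive scale (eg, log risk ratios) with known within-study variances, and the t critical value (k-2 degrees of freedom for k studies) must be supplied by the caller.

```python
import math


def pooled(effects, variances, tau2=0.0):
    """Inverse-variance weighted mean; tau2 = 0 gives the fixed-effect estimate."""
    weights = [1.0 / (v + tau2) for v in variances]
    estimate = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return estimate, math.sqrt(1.0 / sum(weights))


def dersimonian_laird_tau2(effects, variances):
    """Moment estimate of the between-study variance (truncated at zero)."""
    weights = [1.0 / v for v in variances]
    fixed, _ = pooled(effects, variances)
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)


def prediction_interval(estimate, se, tau2, t_crit):
    """Approximate interval for the effect in a new study; t_crit uses k-2 df."""
    half_width = t_crit * math.sqrt(tau2 + se ** 2)
    return estimate - half_width, estimate + half_width
```

When the estimated between-study variance is large, the prediction interval is much wider than the CI for the mean effect, which is the spread of effects that the interpretation needs to acknowledge.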

A common problem when undertaking a simple meta-analysis is that individual studies may report many effect sizes that are correlated with each other, for example, if multiple outcomes are measured, or the same outcome variable is measured at several time points. Numerous approaches are available for dealing with such multiplicity, including multivariate meta-analysis, multilevel modelling, and strategies for selecting effect sizes. 40 A very simple strategy that has been used in systematic reviews of complex interventions is to take the median effect size within each study, and to summarise these using the median of these effect sizes across studies. 41
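The median-of-medians strategy is straightforward to express in code. The sketch below assumes that all effect sizes are on a common scale and oriented in the same direction; the data structure (a mapping from a study label to its list of effect sizes) is a hypothetical choice for illustration.

```python
from statistics import median


def median_of_medians(effects_by_study):
    """Summarise each study by its median effect, then take the median across studies."""
    within_study = [median(effects) for effects in effects_by_study.values()]
    return median(within_study)
```

This gives each study equal influence regardless of how many effect sizes it reports, but it ignores study precision and provides no CI.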

Exploring heterogeneity

Diversity in the types of participants (and contexts), interventions and outcomes is key to understanding sources of complexity. 9 Many of these important sources of heterogeneity are most usefully examined, to the extent that they can reliably be understood, using standard approaches for understanding variability across studies, such as subgroup analyses and meta-regression.

A simple strategy to explore heterogeneity is to estimate the overall effect separately for different levels of a factor using subgroup analyses (referring to subgrouping studies rather than participants). 42 As an example, McFadden et al conducted a systematic review and meta-analysis of 73 studies of support for healthy breastfeeding mothers with healthy term babies. 43 They calculated separate average effects for interventions delivered by a health professional, a lay supporter or with mixed support, and found that the effect on cessation of exclusive breast feeding at up to 6 months was greater for lay support compared with professionals or mixed support (p=0.02). Guise et al provide several ways of grouping studies according to their interventions, for example, grouping studies by key components, by function or by theory. 5 6

Meta-regression provides a flexible generalisation to subgroup analyses, whereby study-level covariates are included in a regression model using effect size estimates as the dependent variable. 44 45 Both continuous and categorical covariates can be included in such models; with a single categorical covariate, the approach is essentially equivalent to subgroup analyses. Meta-regression with continuous covariates in theory allows the extrapolation of relationships to contexts that were not examined in any of the studies, but this should generally be avoided. For example, if the effect of an interventional approach appears to increase as the size of the group to which it is applied decreases, this does not mean that it will work even better when applied to a single individual. More generally, the mathematical form of the relationship modelled in a meta-regression requires careful selection. Most often a linear relationship is assumed, but a linear relationship does not permit step changes such as might occur if an interventional approach requires a particular level of some feature of the underlying system before it has an effect.
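A meta-regression with a single study-level covariate reduces to weighted least squares, which can be written out directly. The sketch below is a simplified, fixed-effect version: it weights each study by the inverse of its within-study variance and ignores residual between-study heterogeneity, which a random-effects meta-regression would add to each variance. The function name and argument layout are assumptions for illustration.

```python
import math


def meta_regression(effects, variances, covariate):
    """Weighted least squares of effect size on one study-level covariate.

    Returns (intercept, slope, se_slope), treating within-study variances as known.
    """
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    x_bar = sum(w * x for w, x in zip(weights, covariate)) / total
    y_bar = sum(w * y for w, y in zip(weights, effects)) / total
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(weights, covariate))
    sxy = sum(w * (x - x_bar) * (y - y_bar)
              for w, x, y in zip(weights, covariate, effects))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope, math.sqrt(1.0 / sxx)
```

With a 0/1 covariate, the slope equals the difference between the two subgroup summary estimates, which is the equivalence with subgroup analysis noted above. The fitted line should not be read outside the range of covariate values observed in the studies.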

Several texts provide guidance for using subgroup analysis and meta-regression in a general context 45 46 and for complex interventions. 3 4 47 In principle, many aspects of complexity in interventions can be addressed using these strategies, to create an understanding of the ‘response surface’. 48–50 However, in practice, the number of studies is often too small for reliable conclusions to be drawn. In general, subgroup analysis and meta-regression are fraught with dangers associated with having few studies, many sources of variation across study features and confounding of these features with each other as well as with other, often unobserved, variables. It is therefore important to prespecify a small number of plausible sources of diversity so as to reduce the danger of reaching spurious conclusions based on study characteristics that correlate with the effects of the interventions but are not the cause of the variation. The ability of statistical analyses to identify true sources of heterogeneity will depend on the number of studies, the sizes of the studies and the true differences between effects in studies with different characteristics.

Synthesis methods for understanding components of the intervention

When interventions comprise distinct components, it is attractive to separate out the individual effects of these components. 51 Meta-regression can be used for this, using covariates to code the presence of particular features in each intervention implementation. As an example, Blakemore et al analysed 39 intervention comparisons from 33 independent studies aiming to reduce urgent healthcare use in adults with asthma. 52 Effect size estimates were coded according to components used in the interventions, and the authors found that multicomponent interventions including skills training, education and relapse prevention appeared particularly effective. In another example, of interventions to support family caregivers of people with Alzheimer’s disease, 53 the authors used methods for decomposing complex interventions proposed by Czaja et al, 54 and created covariates that reduced the complexity of the interventions to a small number of features about the intensity of the interventions. More sophisticated models for examining components have been described by Welton et al, 55 Ivers et al 56 and Madan et al. 57

A component-level approach may be useful when there is a need to disentangle the ‘active ingredients’ of an intervention, for example, when adapting an existing intervention for a new setting. However, components-based approaches require assumptions, such as whether individual components are additive or interact with each other. Furthermore, the effects of components can be difficult to estimate if they are used only in particular contexts or populations, or are strongly correlated with use of other components. An alternative approach is to treat each combination of components as a separate intervention. These separate interventions might then be compared in a single analysis using network meta-analysis. A network meta-analysis combines results from studies comparing two or more of a larger set of interventions, using indirect comparisons via common comparators to rank-order all interventions. 47 58 59 As an example, Achana et al examined the effectiveness of safety interventions on the uptake of three poisoning prevention practices in households with children. Each distinct combination of intervention components was defined as a separate intervention in the network. 60 Network meta-analysis may also be useful when there is a need to compare multiple interventions to answer an ‘in principle’ question of which intervention is most effective. Consideration of the main goals of the synthesis will help those aiming to prepare guidelines to decide which of these approaches is most appropriate to their needs.
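The building block of a network meta-analysis is the indirect comparison through a common comparator. The sketch below shows the simplest case, often called an adjusted indirect comparison: interventions B and C have each been compared with A, but not with each other. It assumes effects on an additive scale (eg, log odds ratios), independent sets of studies for the two comparisons, and similar study populations; a full network meta-analysis combines many such comparisons in one model and is not reproduced here.

```python
import math


def indirect_comparison(d_ab, se_ab, d_ac, se_ac):
    """Estimate the effect of C versus B from B-versus-A and C-versus-A summaries.

    d_ab and d_ac are the effects of B and of C relative to the common comparator A.
    Variances add because the two summaries are assumed to be independent.
    """
    d_bc = d_ac - d_ab
    se_bc = math.sqrt(se_ab ** 2 + se_ac ** 2)
    return d_bc, se_bc
```

Because the variances add, an indirect estimate is always less precise than either of the direct comparisons on which it is based.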

A case study exploring components is provided in box 1, and an illustration is provided in figure 2. The component-based analysis approach can be likened to a factorial trial, in that it attempts to separate out the effects of individual components of the complex interventions, and the network meta-analysis approach can be likened to a multiarm trial approach, where each complex intervention in the set of studies is a different arm in the trial. 47 Deciding between the two approaches can leave the analyst caught between the need to ‘split’ components to reflect complexity (and minimise heterogeneity) and ‘lump’ to make an analysis feasible. Both approaches can be used to examine other features of interventions, including interventions designed for delivery at different levels. For example, a review of the effects of interventions for children exposed to domestic violence and abuse included studies of interventions targeted at children alone, parents alone, children and parents together, and parents and children separately. 61 A network meta-analysis approach was taken to the synthesis, with the people targeted by the intervention used as a distinguishing feature of the interventions included in the network.

Box 1: Example of understanding components of psychosocial interventions for coronary heart disease

Welton et al reanalysed data from a Cochrane review 89 of randomised controlled trials assessing the effects of psychological interventions on mortality and morbidity reduction for people with coronary heart disease. 55 The Cochrane review focused on the effectiveness of any psychological intervention compared with usual care, and found evidence that psychological interventions reduced non-fatal reinfarctions and depression and anxiety symptoms. The Cochrane review authors highlighted the large heterogeneity among interventions as an important limitation of their review.

Welton et al were interested in the effects of the different intervention components. They classified interventions according to which of five key components were included: educational, behavioural, cognitive, relaxation and psychosocial support (figure 2). Their reanalysis examined the effect of each component in three different ways: (1) An additive model assuming no interactions between components. (2) A two-factor interaction model, allowing for interactions between pairs of components. (3) A network meta-analysis, defining each combination of components as a separate intervention, therefore allowing for full interaction between components. Results suggested that interventions with behavioural components were effective in reducing the odds of all-cause mortality and non-fatal myocardial infarction, and that interventions with behavioural and/or cognitive components were effective for reducing depressive symptoms.
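The difference between the additive model and the network approach lies largely in how each intervention arm is coded. The sketch below illustrates the two codings using the five component abbreviations from figure 2; it is not the model fitted by Welton et al, and the helper names are hypothetical. Any component effects passed to `additive_effect` would be estimated from data in a real analysis.

```python
COMPONENTS = ("EDU", "BEH", "COG", "REL", "SUP")


def indicator_row(components):
    """Additive coding: one 0/1 covariate per component, for use in a meta-regression."""
    return [1 if c in components else 0 for c in COMPONENTS]


def combination_label(components):
    """Network coding: each distinct combination is treated as its own intervention."""
    present = [c for c in COMPONENTS if c in components]
    return "+".join(present) if present else "usual care"


def additive_effect(components, component_effects):
    """Effect of a combination under the additive model (no interactions)."""
    return sum(component_effects[c] for c in COMPONENTS if c in components)
```

Under the additive coding, an arm combining educational and behavioural components shares information with every other arm containing either component; under the network coding it informs only the ‘EDU+BEH’ node.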

Figure 2: Intervention components in the studies integrated by Welton et al (a sample of 18 from 56 active treatment arms). EDU, educational component; BEH, behavioural component; COG, cognitive component; REL, relaxation component; SUP, psychosocial support component.

A common limitation when implementing these quantitative methods in the context of complex interventions is that replication of the same intervention in two or more studies is rare. Qualitative comparative analysis (QCA) might overcome this problem, being designed to address the ‘small N; many variables’ problem. 62 QCA involves: (1) Identifying theoretically driven thresholds for determining intervention success or failure. (2) Creating a ‘truth table’, which takes the form of a matrix, cross-tabulating all possible combinations of conditions (eg, participant and intervention characteristics) against each study and its associated outcomes. (3) Using Boolean algebra to eliminate redundant conditions and to identify configurations of conditions that are necessary and/or sufficient to trigger intervention success or failure. QCA can usefully complement quantitative integration, sometimes in the context of synthesising diverse types of evidence.
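The truth table at the centre of QCA can be sketched briefly. The example below covers only crisp-set (binary) conditions and only the second step: it assumes that success or failure has already been determined for each study using the thresholds from step 1, and it does not implement the Boolean minimisation of step 3. The dictionary layout and the `success` key are hypothetical.

```python
from itertools import product


def truth_table(studies, conditions):
    """Map every possible configuration of binary conditions to the outcomes observed.

    Configurations with an empty list were not observed in any study.
    """
    table = {config: [] for config in product((0, 1), repeat=len(conditions))}
    for study in studies:
        config = tuple(int(bool(study[c])) for c in conditions)
        table[config].append(int(bool(study["success"])))
    return table


def consistently_successful(table):
    """Observed configurations in which every study met the success threshold."""
    return [config for config, outcomes in table.items() if outcomes and all(outcomes)]
```

The configurations returned by `consistently_successful` are the candidates that Boolean minimisation would then reduce; for instance, if (1, 0) and (1, 1) are both consistently successful, the second condition is redundant given the first.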

Synthesis methods for understanding mechanisms of action

An alternative purpose of a synthesis is to gain insight into the mechanisms of action behind an intervention, to inform its generalisability or applicability to a particular context. Such syntheses of quantitative data may complement syntheses of qualitative data, 11 and the two forms might be integrated. 12 Logic models, or theories of action, are important to motivate investigations of mechanism. The synthesis is likely to focus on intermediate outcomes reflecting intervention processes, and on mediators of effect (factors that influence how the intervention affects an outcome measure). Two possibilities for analysis are to use these intermediate measurements as predictors of main outcomes using meta-regression methods, 63 or to use multivariate meta-analysis to model the intermediate and main outcomes simultaneously, exploiting and estimating the correlations between them. 64 65 If the synthesis suggests that hypothesised chains of outcomes hold, this lends weight to the theoretical model underlying the hypothesis.

An approach to synthesis closely identified with this category of interventions is model-driven meta-analysis, in which different sources of evidence are integrated within a causal path model akin to a directed acyclic graph. A model-driven meta-analysis is an explanatory analysis. 66 It attempts to go further than a standard meta-analysis or meta-regression to explore how and why an intervention works, for whom it works, and which aspects of the intervention (factors) are driving overall effect. Such syntheses have been described in frequentist 19 67–70 and Bayesian 71 72 frameworks and are variously known as model-driven meta-analysis, linked meta-analysis, meta-mediation analysis and meta-analysis of structural equation models. In their simplest form, standard meta-analyses estimate a summary correlation independently for each pair of variables in the model. The approach is inherently multivariate, requiring the estimation of multiple correlations (which, if obtained from a single study, are also not independent). 73–75 Each study is likely to contribute fragments of the correlation matrix. A summary correlation matrix, combined either by fixed-effects or random-effects methods, then serves as the input for subsequent analysis via a standardised regression or structural equation model.

An example is provided in box 2. The model in figure 3 postulates that the effect of ‘Dietary adherence’ on ‘Diabetes complications’ is not direct but is mediated by ‘Metabolic control’. 76 The potential for model-driven meta-analysis to incorporate such indirect effects also allows for mediating effects to be explicitly tested and in so doing allows the meta-analyst to identify and explore the mechanisms underpinning a complex intervention. 77
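For a single mediator, the step from a summary correlation matrix to standardised path coefficients can be written in closed form. The sketch below takes three pooled correlations among a predictor X, a mediator M and an outcome Y (in the terms of figure 3, dietary adherence, metabolic control and diabetes complications) and returns the paths of the simple mediation model. It is a simplification for illustration: it ignores the uncertainty and dependence of the pooled correlations, which a full model-driven meta-analysis must handle, and the function name is hypothetical.

```python
def mediation_paths(r_xm, r_my, r_xy):
    """Standardised paths for X -> M -> Y, allowing a direct X -> Y path.

    a:        X -> M (equal to the correlation r_xm)
    b:        M -> Y, adjusted for X
    direct:   X -> Y, adjusted for M
    indirect: a * b, the part of the X-Y association carried through M
    """
    denom = 1.0 - r_xm ** 2
    a = r_xm
    b = (r_my - r_xm * r_xy) / denom
    direct = (r_xy - r_xm * r_my) / denom
    return {"a": a, "b": b, "direct": direct, "indirect": a * b}
```

The direct and indirect paths sum to the total correlation between X and Y, so a direct path close to zero is what the figure's hypothesis of complete mediation would predict.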

Figure 3: Theoretical diabetes care model (adapted from Brown et al 68).

Box 2: Example of a model-driven meta-analysis for type 2 diabetes

Brown et al present a model-driven meta-analysis of correlational research on psychological and motivational predictors of diabetes outcomes, with medication and dietary adherence factors as mediators. 76 In a linked methodological paper, they present the a priori theoretical model on which their analysis is based. 68 The model is simplified in figure 3 , and summarised for the dietary adherence pathway only. The aim of their full analysis was to determine the predictive relationships among psychological factors and motivational factors on metabolic control and body mass index (BMI), and the role of behavioural factors as possible mediators of the associations among the psychological and motivational factors and metabolic control and BMI outcomes.

The analysis is based on a comprehensive systematic review. Due to the number of variables in their full model, 775 individual correlational or predictive studies reported across 739 research papers met eligibility criteria. Correlations between each pair of variables in the model were summarised using an overall average correlation, and homogeneity assessed. Multivariate analyses were used to estimate a combined correlation matrix. These results were used, in turn, to estimate path coefficients for the predictive model and their standard errors. For the simplified model illustrated here, the results suggested that coping and self-efficacy were strongly related to dietary adherence, which was strongly related to improved glycaemic control and, in turn, a reduction in diabetic complications.

Synthesis approaches for understanding complexities of the system

Syntheses may seek to address complexities of the system to understand either the impact of the system on the effects of the intervention or the effects of the intervention on the system. This may start by modelling the salient features of the system’s dynamics, rather than focusing on interventions. Subgroup analysis and meta-regression are useful approaches for investigating the extent to which an intervention’s effects depend on baseline features of the system, including aspects of the context. Sophisticated meta-regression models might investigate multiple baseline features, using similar approaches to the component-based meta-analyses described earlier. Specifically, aspects of context or population characteristics can be regarded as ‘components’ of the system into which the intervention is introduced, and similar statistical modelling strategies used to isolate effects of individual factors, or interactions between them.

When interventions act at multiple levels, it may be important to understand the effects at these different levels. Outcomes may be measured at different levels (eg, at patient, clinician and clinical practice levels) and analysed separately. Qualitative research plays a particularly important role in identifying the outcomes that should be assessed through quantitative synthesis. 12 Care is needed to ensure that the unit of analysis issues are addressed. For example, if clinics are the unit of randomisation, then outcomes measured at the clinic level can be analysed using standard methods, whereas outcomes measured at the level of the patient within the clinic would need to account for clustering. In fact, multiple dependencies may arise in such data, when patients receive care in small groups. Detailed investigations of effect at different levels, including interactions between the levels, would lend themselves to multilevel (hierarchical) models for synthesis. Unfortunately, individual participant data at all levels of the hierarchy are needed for such analyses.
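Where a study has analysed patient-level outcomes without accounting for clustering, one widely used approximate correction inflates the variance of its estimate by a design effect. The text above does not prescribe this method; it is shown here as a common option. The sketch assumes roughly equal cluster sizes and an intracluster correlation coefficient (ICC) obtained from the study itself or borrowed from a similar study.

```python
import math


def design_effect(mean_cluster_size, icc):
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1.0 + (mean_cluster_size - 1.0) * icc


def cluster_adjusted_se(naive_se, mean_cluster_size, icc):
    """Inflate an SE that was calculated as if participants were independent."""
    return naive_se * math.sqrt(design_effect(mean_cluster_size, icc))
```

Even a small ICC matters when clusters are large: with 21 patients per clinic and an ICC of 0.05, the design effect is 2, so the study carries half the weight it would have been given had clustering been ignored.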

Model-based approaches also offer possibilities for addressing complex systems; these include economic models, mathematical models and systems science methods generally. 78–80 Broadly speaking, these provide mathematical representations of logic models, and analyses may involve incorporation of empirical data (eg, from systematic reviews), computer simulation, direct computation or a mixture of these. Multiparameter evidence synthesis methods might be used. 81 82 Approaches include models to represent systems (eg, systems dynamics models) and approaches that simulate individuals within the system (eg, agent-based models). 79 Models can be particularly useful when empirical evidence does not address all important considerations, such as ‘real-world’ contexts, long-term effects, non-linear effects and complexities such as feedback loops and threshold effects. An example of a model-based approach to synthesis is provided in box 3. The challenge when adopting these approaches is often in the identification of system components, and accurately estimating causes and effects (and uncertainties). There are few examples of the use of these analytical tools in systematic reviews, but they may be useful when the focus of analysis is on understanding the causes of complexity in a given system rather than on the impact of an intervention.

Box 3: Example of a mathematical modelling approach for soft drinks industry levy

Briggs et al examined the potential impact of a soft drinks levy in the UK, considering possible different types of response to the levy by industry. 90 Various scenarios were posited, with effects on health outcomes informed by empirical data from randomised trials and cohort studies of association between sugar intake and body weight, diabetes and dental caries. Figure 4 provides a simple characterisation of how the empirical data were fed into the model. Inputs into the model included levels of consumption of various types of drinks (by age and sex), volume of drinks sales, and baseline levels of obesity, diabetes and dental caries (by age and sex). The authors concluded that health gains would be greatest if industry reacted by reformulating their products to include less sugar.

Figure 4: Simplified version of the conceptual model used by Briggs et al (adapted from Briggs et al 90).

Considerations of bias and relevance

It is always important to consider the extent to which: (1) the findings from each study have internal validity, particularly for non-randomised studies, which are typically at higher risk of bias; (2) studies may have been conducted but not reported because of unexciting findings; and (3) each study is applicable to the purposes of the review, that is, has external validity (or ‘directness’, in the language of the Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group). 83 At minimum, internal and external validity should be examined and reported, and the risk of publication bias assessed, and these can be achieved through the GRADE framework. 10 With sufficient studies, information collected might be used in meta-regression analyses to evaluate empirically whether studies with and without specific sources of bias or indirectness differ in their results.

It may be desirable to learn about a specific setting, intervention type or outcome measure more directly than others. For example, to inform a decision for a low-income setting, emphasis should be placed on results of studies performed in low-income countries. One option is to restrict the synthesis to these studies. An alternative is to model the dependence of an intervention’s effect on some feature(s) related to the income setting, and extract predictions from the model that are most relevant to the setting of interest. This latter approach makes fuller use of available data, but relies on stronger assumptions.
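The modelling option can be sketched as a weighted least-squares meta-regression of the effect estimates on a single setting-level moderator, followed by a prediction at the value of interest. This is a simplified, fixed-effect illustration: the moderator `income_index`, the data and the function names are hypothetical, and a real analysis would usually also allow for residual between-study heterogeneity.

```python
import math


def fit_meta_regression(effects, variances, moderator):
    """Weighted least squares of effect on one moderator, weights = 1/variance."""
    weights = [1.0 / v for v in variances]
    sw = sum(weights)
    swx = sum(w * x for w, x in zip(weights, moderator))
    swy = sum(w * y for w, y in zip(weights, effects))
    swxx = sum(w * x * x for w, x in zip(weights, moderator))
    swxy = sum(w * x * y for w, x, y in zip(weights, moderator, effects))
    det = sw * swxx - swx ** 2
    if det <= 0:
        raise ValueError("moderator must take at least two distinct values")
    slope = (sw * swxy - swx * swy) / det
    intercept = (swy - slope * swx) / sw
    return {"intercept": intercept, "slope": slope,
            "sw": sw, "swx": swx, "swxx": swxx, "det": det}


def predict_effect(fit, x0):
    """Predicted mean effect at moderator value x0 and its standard error."""
    estimate = fit["intercept"] + fit["slope"] * x0
    variance = (fit["swxx"] - 2.0 * x0 * fit["swx"] + x0 ** 2 * fit["sw"]) / fit["det"]
    return estimate, math.sqrt(variance)


if __name__ == "__main__":
    # Hypothetical studies: the effect weakens as the income index rises.
    income_index = [1.0, 2.0, 3.0, 4.0]
    effects = [-0.50, -0.40, -0.30, -0.20]
    variances = [0.04, 0.02, 0.02, 0.01]
    fit = fit_meta_regression(effects, variances, income_index)
    estimate, se = predict_effect(fit, 1.0)  # setting of interest: low income
    print(f"predicted effect = {estimate:.3f} (SE {se:.3f})")
```

The prediction borrows strength from all studies through the assumed linear relationship, which is the stronger assumption referred to above; restricting the synthesis to low-income studies avoids that assumption at the cost of precision.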

Often, however, the accumulated studies are too few or too disparate to draw conclusions about the impact of bias or relevance. On rare occasions, syntheses might implement formal adjustments of individual study results for likely biases. Such adjustments may be made by imposing prior distributions to depict the magnitude and direction of any biases believed to exist. 84 85 The choice of a prior distribution may be informed by formal assessments of risk of bias, by expert judgement, or possibly by empirical data from meta-epidemiological studies of biases in randomised and/or non-randomised studies. 86 For example, Wolf et al implemented a prior distribution based on findings of a meta-epidemiological study 87 to adjust for lack of blinding in studies of interventions to improve quality of point-of-use water sources in low-income and middle-income settings. 88 Unfortunately, empirical evidence of bias is mostly limited to clinical trials, is weak for trials of public health and social care interventions, and is largely non-existent for non-randomised studies.
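One simple form of such an adjustment treats the bias in a study as additive, with a prior mean and standard deviation: the prior mean is subtracted from the study's estimate and the prior variance is added to the study's variance. The sketch below shows only this additive form, with made-up numbers; it is not the specific procedure used by Wolf et al, and the names `Study` and `adjust_for_bias` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Study:
    effect: float    # eg, a log odds ratio
    variance: float  # squared standard error of the effect


def adjust_for_bias(study, bias_mean, bias_sd):
    """Additive bias adjustment using a prior with given mean and SD.

    The estimate is shifted by the expected bias, and the variance is
    inflated to reflect uncertainty about the size of the bias.
    """
    return Study(effect=study.effect - bias_mean,
                 variance=study.variance + bias_sd ** 2)


if __name__ == "__main__":
    # Hypothetical unblinded study and a hypothetical prior for the bias
    # due to lack of blinding (bias assumed to exaggerate the benefit).
    unblinded = Study(effect=-0.40, variance=0.04)
    adjusted = adjust_for_bias(unblinded, bias_mean=-0.15, bias_sd=0.10)
    print(f"adjusted effect = {adjusted.effect:.2f}, variance = {adjusted.variance:.3f}")
    # The inverse-variance weight falls, so the study counts for less when pooled.
    print(f"weight before = {1 / unblinded.variance:.1f}, after = {1 / adjusted.variance:.1f}")
```

The adjusted study both moves towards the null and receives less weight in any subsequent inverse-variance synthesis, so the choice of prior should be reported and, ideally, varied in sensitivity analyses.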

Our review of quantitative synthesis methods for evaluating the effects of complex interventions has outlined many possible approaches that might be considered by those collating evidence in support of guideline development. We have described three broad categories: (1) non-quantitative methods, including tabulation, narrative and graphical approaches; (2) standard meta-analysis methods, including meta-regression to investigate study-level moderators of effect; and (3) more advanced synthesis methods, in which models allow exploration of intervention components, investigation of both moderators and mediators, examination of mechanisms, and exploration of complexities of the system.

The choice among these approaches will depend on the purpose of the synthesis, the similarity of the studies included in the review, the level of detail available from the studies, the nature of the results reported in the studies, the expertise of the synthesis team, and the resources available. Clearly the advanced methods require more expertise and resources than the simpler methods. Furthermore, they require a greater level of detail and typically a sizeable evidence base. We therefore expect them to be used seldom; our aim here is largely to articulate what they can achieve so that they can be adopted when they are appropriate. Notably, the choice among these approaches will also depend on the extent to which guideline developers and users at global, national or local levels understand and are willing to base their decisions on different methods. Where possible, it will thus be important to involve concerned stakeholders during the early stages of the systematic review process to ensure the relevance of its findings.

Complexity is common in the evaluation of public health interventions at individual, organisational or community levels. To help systematic review and guideline development teams decide how to address this complexity in syntheses of quantitative evidence, we summarise considerations and methods in tables 1 and 2. We close with the important remark that quantitative synthesis is not always a desirable feature of a systematic review. Whereas some sophisticated methods are available to deal with a variety of complex problems, on many occasions, perhaps even the majority in practice, the studies may be too different from each other, too weak in design or too sparse in their data for statistical methods to provide insight beyond a commentary on what evidence has been identified.

Acknowledgments

The authors thank the following for helpful comments on earlier drafts of the paper: Philippa Easterbrook, Matthias Egger, Anayda Portela, Susan L Norris, Mark Petticrew.

- Petticrew M ,

- Thomas J , et al

- Anderson L ,

- Elder R , et al

- Rehfuess E ,

- Noyes J , et al

- Viswanathan M , et al

- World Health Organization

- Rehfuess EA ,

- Stratil JM ,

- Scheel IB , et al

- Flemming K , et al

- Montgomery P ,

- Movsisyan A ,

- Grant SP , et al

- Flemming K ,

- Garside R , et al

- Moore G , et al

- Richardson WS ,

- Wilson MC ,

- Nishikawa J , et al

- Chowdhury R ,

- Sankar MJ , et al

- Collins D ,

- Johnson K ,

- von Philipsborn P ,

- Burns J , et al

- Schoonees A ,

- Becker BJ ,

- Duvendack M , et al

- Reeves BC ,

- Higgins JPT , et al

- Roberts H ,

- Ryan R , Cochrane Consumers and Communication Review Group

- Anzures-Cabrera J ,

- Harrison S ,

- Martin RM , et al

- Ogilvie D ,

- Petticrew M , et al

- Sterne JA ,

- Davey Smith G

- Crowther M ,

- Avenell A ,

- MacLennan G , et al

- Thomson HJ ,

- Taylor SL ,

- Higgins JPT ,

- Bushman BJ ,

- Caldwell DM ,

- Thompson SG ,

- Spiegelhalter DJ

- López-López JA ,

- Lipsey MW , et al

- Grimshaw JM ,

- Thomas RE ,

- Borenstein M ,

- McFadden A ,

- Renfrew MJ , et al

- van Houwelingen HC ,

- Arends LR ,

- Melendez-Torres GJ ,

- Ioannidis JP ,

- Steckelberg A ,

- Richter B , et al

- Presseau J ,

- Newham JJ , et al

- Blakemore A ,

- Dickens C ,

- Anderson R , et al

- Schulz R , et al

- Lee CC , et al

- Welton NJ ,

- Adamopoulos E , et al

- Tricco AC ,

- Trikalinos TA , et al

- Aveyard P , et al

- Calderbank-Batista T

- Achana FA ,

- Cooper NJ ,

- Bujkiewicz S , et al

- Howarth E ,

- Moore THM ,

- Welton NJ , et al

- O’Mara-Eves A ,

- Thompson SG

- Jackson D ,

- Raudenbush SW ,

- García AA , et al

- Cheung MW ,

- Watson SI ,

- Stewart GB ,

- Mengersen K ,

- García AA ,

- Brown A , et al

- Liu H , et al

- Johnson L ,

- Althaus C , et al

- Stamatakis KA

- Greenwood-Lee J ,

- Nettel-Aguirre A , et al

- Caldwell D , et al

- Colbourn T ,

- Asseburg C ,

- Bojke L , et al

- Guyatt GH ,

- Oxman AD , GRADE Working Group

- Turner RM ,

- Spiegelhalter DJ ,

- Smith GCS , et al

- Carlin JB , et al

- Schulz KF , et al

- Savović J ,

- Altman DG , et al

- Prüss-Ustün A ,

- Cumming O , et al

- Bennett P ,

- West R , et al

- Briggs ADM ,

- Mytton OT ,

- Kehlbacher A , et al

Handling editor Soumyadeep Bhaumik

Contributors JPTH co-led the project, conceived the paper, led discussions and wrote the first draft. JAL-L undertook analyses, contributed to discussions and contributed to writing the manuscript. BJB drafted material on mechanisms, contributed to discussions and contributed extensively to writing the manuscript. SRD screened and categorised the results of the literature searches, collated examples and contributed to discussions. SD undertook searches to identify relevant literature and contributed to discussions. JMG contributed to discussions and commented critically on drafts. LAM undertook analyses, contributed to discussions and commented critically on drafts. THMM contributed examples, contributed to discussions and commented critically on drafts. EAR and JT contributed to discussions and commented critically on drafts. DMC co-led the project, contributed to discussions and drafted extensive parts of the paper. All authors approved the final version of the manuscript.

Funding Funding provided by the World Health Organization Department of Maternal, Newborn, Child and Adolescent Health through grants received from the United States Agency for International Development and the Norwegian Agency for Development Cooperation. JPTH was funded in part by Medical Research Council (MRC) grant MR/M025209/1, by the MRC Integrative Epidemiology Unit at the University of Bristol (MC_UU_12013/9) and by the MRC ConDuCT-II Hub (Collaboration and innovation for Difficult and Complex randomised controlled Trials In Invasive procedures – MR/K025643/1). BJB was funded in part by grant DRL-1252338 from the US National Science Foundation (NSF). JMG holds a Canada Research Chair in Health Knowledge Transfer and Uptake. LAM is funded by a National Institute for Health Research (NIHR) Systematic Review Fellowship (RM-SR-2016-07 26). THMM was funded by the NIHR Collaboration for Leadership in Applied Health Research and Care West (NIHR CLAHRC West). JT is supported by the NIHR Collaboration for Leadership in Applied Health Research and Care North Thames at Bart’s Health NHS Trust. DMC was funded in part by NIHR grant PHR 15/49/08 and by the Centre for the Development and Evaluation of Complex Interventions for Public Health Improvement (DECIPHer –MR/KO232331/1).

Disclaimer The views expressed are those of the authors and not necessarily those of the CRC program, the MRC, the NSF, the NHS, the NIHR or the UK Department of Health.

Competing interests JMG reports personal fees from the Campbell Collaboration. EAR reports being a Methods Editor with Cochrane Public Health.

Patient consent Not required.

Provenance and peer review Not commissioned; externally peer reviewed.

Systematic Review | Definition, Examples & Guide

Published on 15 June 2022 by Shaun Turney. Revised on 17 October 2022.

A systematic review is a type of review that uses repeatable methods to find, select, and synthesise all available evidence. It answers a clearly formulated research question and explicitly states the methods used to arrive at the answer.

They answered the question ‘What is the effectiveness of probiotics in reducing eczema symptoms and improving quality of life in patients with eczema?’

In this context, a probiotic is a health product that contains live microorganisms and is taken by mouth. Eczema is a common skin condition that causes red, itchy skin.

Table of contents

- What is a systematic review?

- Systematic review vs meta-analysis

- Systematic review vs literature review

- Systematic review vs scoping review

- When to conduct a systematic review

- Pros and cons of systematic reviews

- Step-by-step example of a systematic review

- Frequently asked questions about systematic reviews

What is a systematic review?

A review is an overview of the research that’s already been completed on a topic.

What makes a systematic review different from other types of reviews is that the research methods are designed to reduce research bias. The methods are repeatable, and the approach is formal and systematic:

- Formulate a research question

- Develop a protocol

- Search for all relevant studies

- Apply the selection criteria

- Extract the data

- Synthesise the data

- Write and publish a report

Although multiple sets of guidelines exist, the Cochrane Handbook for Systematic Reviews is among the most widely used. It provides detailed guidelines on how to complete each step of the systematic review process.

Systematic reviews are most commonly used in medical and public health research, but they can also be found in other disciplines.

Systematic reviews typically answer their research question by synthesising all available evidence and evaluating the quality of the evidence. Synthesising means bringing together different information to tell a single, cohesive story. The synthesis can be narrative (qualitative), quantitative, or both.

Systematic review vs meta-analysis

Systematic reviews often quantitatively synthesise the evidence using a meta-analysis. A meta-analysis is a statistical analysis, not a type of review.

A meta-analysis is a technique to synthesise results from multiple studies. It’s a statistical analysis that combines the results of two or more studies, usually to estimate an effect size.
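To make the idea of combining results concrete, here is a minimal sketch of one common approach, a fixed-effect inverse-variance meta-analysis, in which each study is weighted by the inverse of its squared standard error. The numbers and the function name are made up for illustration, and the sketch assumes that all studies estimate the same underlying effect.

```python
import math


def fixed_effect_meta_analysis(effects, standard_errors):
    """Pooled effect size with an approximate 95% confidence interval.

    Each study's weight is 1 / SE^2, so more precise studies count for more.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se


if __name__ == "__main__":
    # Hypothetical effect sizes (eg, standardised mean differences) from three studies.
    effects = [0.20, 0.35, 0.10]
    standard_errors = [0.10, 0.15, 0.08]
    pooled, lower, upper = fixed_effect_meta_analysis(effects, standard_errors)
    print(f"pooled effect = {pooled:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

The pooled estimate is more precise than any single study, which is the main statistical advantage of a meta-analysis. When studies are likely to differ in their true effects, a random-effects model is often used instead.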

Systematic review vs literature review

A literature review is a type of review that uses a less systematic and formal approach than a systematic review. Typically, an expert in a topic will qualitatively summarise and evaluate previous work, without using a formal, explicit method.

Although literature reviews are often less time-consuming and can be insightful or helpful, they have a higher risk of bias and are less transparent than systematic reviews.

Systematic review vs scoping review

Similar to a systematic review, a scoping review is a type of review that tries to minimise bias by using transparent and repeatable methods.

However, a scoping review isn’t a type of systematic review. The most important difference is the goal: rather than answering a specific question, a scoping review explores a topic. The researcher tries to identify the main concepts, theories, and evidence, as well as gaps in the current research.

Sometimes scoping reviews are an exploratory preparation step for a systematic review, and sometimes they are a standalone project.