How to Research and Write Using Generative AI Tools

Instructor: Dave Birss

You’ve probably already heard about ChatGPT, but did you know it can make you better at your job? Join instructor Dave Birss for a crash course in generative AI and learn how to get started with prompt engineering for ChatGPT and other AI chatbots to upskill as a researcher and a writer.

Dave shows you how to create effective prompts that deliver high-quality, task-relevant results. Get an overview of key considerations for working with generative AI, along with hands-on, practical strategies to improve your research and writing. Find out how to summarize complex information, view subjects from multiple perspectives, build user personas and strategic models, analyze writing style, outline ideas, and generate new content. By the end of this course, you’ll be ready to leverage the power of ChatGPT and other chatbots to deliver more consistent writing outcomes every time.

Note: This course was created by Dave Birss. We are pleased to host this training in our library.

Research with Generative AI

Resources for scholars and researchers

Generative AI (GenAI) technologies offer new opportunities to advance research and scholarship. This resource page aims to provide Harvard researchers and scholars with basic guidance, information on available resources, and contacts. The content will be regularly updated as these technologies continue to evolve. Your feedback is welcome.

Leading the way

Harvard’s researchers are making strides not only in generative AI but also in the broader world of artificial intelligence and its applications. Learn more about key efforts.

The Kempner Institute

The Kempner Institute is dedicated to revealing the foundations of intelligence in both natural and artificial contexts, and to leveraging these findings to develop groundbreaking technologies.

Harvard Data Science Initiative

The Harvard Data Science Initiative is dedicated to understanding the many dimensions of data science and propelling it forward.

More AI @ Harvard

Generative AI is only part of the fascinating world of artificial intelligence. Explore Harvard’s groundbreaking and cross-disciplinary academic work in AI.

Funding opportunity

GenAI Research Program / Summer Funding for Harvard College Students 2024

The Office of the Vice Provost for Research, in partnership with the Office of Undergraduate Research and Fellowships, is pleased to offer an opportunity for collaborative research projects related to Generative AI between Harvard faculty and undergraduate students over the summer of 2024.

Frequently asked questions

Can I use generative AI to write and/or develop research papers?

Academic publishers have a range of policies on the use of AI in research papers. In some cases, publishers may prohibit the use of AI for certain aspects of paper development. You should review the specific policies of the target publisher to determine what is permitted.

Here is a sampling of policies available online:

- JAMA and the JAMA Network

- Springer Nature

How should AI-generated content be cited in research papers?

Guidance will likely develop as AI systems evolve, but some leading style guides have offered recommendations:

- The Chicago Manual of Style

- MLA Style Guide

Should I disclose the use of generative AI in a research paper?

Yes. Most academic publishers require researchers using AI tools to document this use in the methods or acknowledgements sections of their papers. You should review the specific guidelines of the target publisher to determine what is required.

Can I use AI in writing grant applications?

You should review the specific policies of potential funders to determine if the use of AI is permitted. For its part, the National Institutes of Health (NIH) advises caution: “If you use an AI tool to help write your application, you also do so at your own risk,” as these tools may inadvertently introduce issues associated with research misconduct, such as plagiarism or fabrication.

Can I use AI in the peer review process?

Many funders have not yet published policies on the use of AI in the peer review process. However, the National Institutes of Health (NIH) has prohibited such use “for analyzing and formulating peer review critiques for grant applications and R&D contract proposals.” You should carefully review the specific policies of funders to determine their stance on the use of AI.

Are there AI safety concerns or potential risks I should be aware of?

Yes. Some of the primary safety issues and risks include the following:

- Bias and discrimination: The potential for AI systems to exhibit unfair or discriminatory behavior.

- Misinformation, impersonation, and manipulation: The risk of AI systems disseminating false or misleading information, or being used to deceive or manipulate individuals.

- Research and IP compliance: The necessity for AI systems to adhere to legal and ethical guidelines when utilizing proprietary information or conducting research.

- Security vulnerabilities: The susceptibility of AI systems to hacking or unauthorized access.

- Unpredictability: The difficulty in predicting the behavior or outcomes of AI systems.

- Overreliance: The risk of relying excessively on AI systems without considering their limitations or potential errors.

See Initial guidelines for the use of Generative AI tools at Harvard for more information.

Generative AI tools

- Explore Tools Available to the Harvard Community

- System Prompt Library

- Request API Access

- Request a Vendor Risk Assessment

- Questions? Contact HUIT

Copyright and intellectual property

- Copyright and Fair Use: A Guide for the Harvard Community

- Copyright Advisory Program

- Intellectual Property Policy

- Protecting Intellectual Property

Data security and privacy

- Harvard Information Security and Data Privacy

- Data Security Levels – Research Data Examples

- Privacy Policies and Guidelines

Research support

- University Research Computing and Data (RCD) Services

- Research Administration and Compliance

- Research Computing

- Research Data and Scholarship

- Faculty engaged in AI research

- Centers and initiatives engaged in AI research

- Degree and other education programs in AI

Published: 18 March 2024

Techniques for supercharging academic writing with generative AI

Zhicheng Lin (ORCID: orcid.org/0000-0002-6864-6559)

Nature Biomedical Engineering (2024)

Generalist large language models can elevate the quality and efficiency of academic writing.

Acknowledgements

The writing of this Comment was supported by the National Key R&D Program of China STI2030 Major Projects (2021ZD0204200), the National Natural Science Foundation of China (32071045) and the Shenzhen Fundamental Research Program (JCYJ20210324134603010). The author used GPT-4 (https://chat.openai.com) and Claude (https://claude.ai) alongside prompts from Box 1 to help write earlier versions of the text and to edit it. The text was then developmentally edited by the journal’s Chief Editor with basic-editing and structural-editing assistance from Claude, and checked by the author.

Author information

Authors and affiliations

University of Science and Technology of China, Hefei, China

Zhicheng Lin

Corresponding author

Correspondence to Zhicheng Lin.

Ethics declarations

Competing interests

The author declares no competing interests.

Peer review

Peer review information

Nature Biomedical Engineering thanks Serge Horbach and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

About this article

Cite this article

Lin, Z. Techniques for supercharging academic writing with generative AI. Nat. Biomed. Eng. (2024). https://doi.org/10.1038/s41551-024-01185-8

DOI: https://doi.org/10.1038/s41551-024-01185-8

Using Generative AI for Scientific Research: A Quick User’s Guide

(Last updated: 5/03/2024)

If you’re just getting started with using generative AI (GenAI) in your research, start here.

This guide includes frequently asked questions and shows how GenAI can be used throughout the entire research process, based on published guidelines from journals, funding agencies, professional societies, and our own assessment of GenAI’s benefits and risks.

GenAI is a rapidly evolving technology, and we will update this guide as new information becomes available. Suggestions for improvements or additions? Email [email protected]. We look forward to developing this guide collaboratively with our research community.

Technical Guides for Using Generative AI

GenAI in Coding

This quick-start guide helps researchers with little programming experience learn to code with an AI assistant’s help, once they have chosen a programming language such as Python, JavaScript, or C++.

Using ChatGPT’s ‘Data Analysis’

Explore how to use ChatGPT 4’s ‘Data Analysis’ feature effectively. This guide covers code organization, error checking, data visualization, and language translation, maximizing the likelihood of accurate and efficient results.

Using Custom GPTs in ChatGPT 4

Explore how to use ChatGPT 4’s ‘Custom GPT’ feature effectively, which allows users to create custom versions of ChatGPT for specific tasks.

Using Generative AI for Writing

Can I use generative AI to write research papers?

The default stance on using generative AI for writing research papers should generally be NO, particularly for creative contributions, due to issues around authorship, copyright, and plagiarism. However, generative AI can be beneficial for editorial assistance, provided you are aware of what is acceptable at your target publication venue.

Generating text and images for publications in scientific journals raises issues of authorship, copyright, and plagiarism, many of which are still unresolved. This is therefore a very controversial area, and many journals and research conferences are updating their policies. If you want to do this, read the author guidelines of your target journal very carefully.

Here are a few examples of new authorship guidelines.

- Springer Nature journals prohibit the use of generative AI to generate images for manuscripts; text generated by LLMs should be well documented, and AI is not granted authorship.

- Science journals require full disclosure of the use of generative AI to generate text; AI-generated images and multimedia can be used only with the explicit permission of the editors. AI is not granted authorship.

- JAMA and the JAMA Network journals do not allow generative AI to be listed as an author. However, AI-generated content and AI assistance in writing or editing are allowed in manuscripts but should be reported in the manuscript.

- Elsevier permits the use of AI tools to improve the readability of text, but not to create or alter scientific content. Authors should fully disclose their use of AI. Elsevier prohibits the use of AI to generate or alter images unless this is part of the research method. AI authorship is not allowed.

- IEEE mandates disclosure of all AI-generated content in submissions, except for editing and grammar enhancement.

- The International Conference on Machine Learning prohibits content generated by generative AI, unless it is part of the research study being described.

While direct generation of content by generative AI is problematic, its role in the earlier stages of writing can be advantageous. For instance, non-native English speakers may use generative AI to refine the language of their writing. Generative AI can also serve as a tool for providing feedback on writing, similar to a copy editor’s role, by improving voice, argument, and structure. This utility is distinct from using AI for direct writing. As long as the human author assumes full responsibility for the final content, such editing help from generative AI is increasingly being recognized as acceptable in most disciplines where language is not the primary scholarly contribution. However, conservative editorial policies at some venues may limit the use of such techniques in the short term.

Can I use generative AI to write grants?

This should be undertaken only with an understanding of the risks involved. The bottom line is that the investigator is signing off on the proposal and is promising to do the work if funded, and so has to take responsibility for every part of the proposal content, even if generative AI assisted in some parts.

The reasoning is similar to that for writing papers, as discussed above, except that there usually will not be copyright and plagiarism issues. Also, not many funding agencies have well-developed policies as yet in this regard.

For example, although the National Institutes of Health (NIH) does not specifically prohibit the use of generative AI to write grants (it does prohibit the use of generative AI technology in the peer review process), it states that an author assumes the risk of using an AI tool to help write an application, noting “[…] when we receive a grant application, it is our understanding that it is the original idea proposed by the institution and their affiliated research team.” If AI-generated text includes plagiarism, fabricated citations, or falsified information, the NIH “will take appropriate actions to address the non-compliance.” (Source.)

Similarly, the National Science Foundation (NSF), in its notice dated December 14, 2023, addresses the use of generative AI in grant proposal preparation and the merit review process. While NSF acknowledges the potential benefits of AI in enhancing productivity and creativity, it imposes strict guidelines to safeguard the integrity and confidentiality of proposals.

Reviewers are prohibited from uploading proposal content to non-approved AI tools, and proposers are encouraged to disclose the extent and manner of AI usage in their proposals. The NSF stresses that any breach in confidentiality or authenticity, especially through unauthorized disclosure via AI, could lead to legal liabilities and erosion of trust in the agency. (Source.)

The Department of Energy (DOE) requires authors to verify any citations suggested by generative AI, due to potential inaccuracies, and does not allow AI-based chatbots like ChatGPT to be credited as authors or co-authors.

Can I use generative AI to help me when I write a literature review section for my paper?

Generative AI can offer multiple advantages. Generative AI can help you summarize a particular paper, so this saves you time and enables you to cover a much larger number of publications in the limited time you have. Generative AI can also help you summarize literature around certain research questions by searching through many papers.

However, you should consider a number of factors that may impact how much you can trust such reviews.

- When generative AI encounters a request that it lacks information or knowledge about, it sometimes “makes up” an answer. This “AI hallucination” is well documented, and many of us have probably experienced it. You are responsible for verifying the summaries that generative AI gives you.

- Unlike human researchers, generative AI does not have the ability to evaluate the quality of the published work. Therefore, it will indiscriminately include publications of varying quality, perhaps also many studies that cannot be reproduced.

- A generative AI model has a knowledge cutoff date, so newer publications after the cutoff date will not be included in the responses that it gives you.

- Other types of inaccuracies. Generative AI’s effectiveness is based on the training datasets. Even though enormous amounts of training data are now used for generative AI models, there is still no guarantee that the training is unbiased.

Also, please do keep in mind all the limitations discussed above regarding the use of generative AI to assist in writing research papers. Subject to those limitations, this seems to be a reasonable thing to do.
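One partial safeguard against fabricated references in an AI-written literature summary is to check every DOI it cites against the set of DOIs you actually retrieved and read. The Python sketch below assumes your summary contains DOIs and that you keep a list of verified ones; the function names and the second DOI in the example are hypothetical, and the regular expression is a simplified DOI pattern rather than a complete validator. A DOI that passes this check still has to be read to confirm the summary describes it accurately.

```python
import re

# Simplified pattern for modern DOIs ("10." + registrant code + "/" + suffix).
# This is an approximation, not a full DOI validator.
DOI_PATTERN = re.compile(r"10\.\d{4,9}/[^\s\"<>]+", re.IGNORECASE)


def extract_dois(text):
    """Return the set of lower-cased DOIs mentioned in a block of text."""
    # Strip trailing punctuation picked up from the surrounding sentence.
    # (Caveat: this would also trim a DOI that genuinely ends in ")".)
    return {match.rstrip(".,;)").lower() for match in DOI_PATTERN.findall(text)}


def unverified_dois(ai_summary, verified_dois):
    """List DOIs cited in the AI output that are not in your verified set."""
    verified = {doi.lower() for doi in verified_dois}
    return sorted(extract_dois(ai_summary) - verified)


if __name__ == "__main__":
    summary = (
        "Prior work (doi:10.1038/s41551-024-01185-8) describes prompting "
        "techniques, and a later study (10.9999/example.2023.001) extends them."
    )
    my_library = {"10.1038/s41551-024-01185-8"}
    # Any DOI printed here must be looked up by hand before it is cited.
    print(unverified_dois(summary, my_library))
```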

Can I use generative AI to write non-technical summaries, create presentations, and translate my work?

Generative AI can be beneficial for summarizing or translating your work, especially with its ability to adjust the tone of a text, making it easier to create brief but complete summaries that suit different types of readers. Several advanced generative AI models are designed specifically to transform scientific manuscripts into presentations.

However, when using generative AI to summarize, present, or translate your work, be sure not to input confidential information. You should also always verify that summaries, presentations, and translations created by generative AI accurately represent your work. Verifying a translation can be challenging if you are not proficient in both languages involved; in that case, consult a fluent speaker. Also note that not all generative AI models are explicitly designed for translation tasks, so you should identify the model best suited to your specific translation needs.

Using Generative AI to Improve Productivity

Can I use generative AI to review grant proposals or papers?

No, you should not do this. The National Institutes of Health recently announced that it prohibits the use of generative AI to analyze and formulate critiques of grant proposals. This applies not only to publicly available generative AI systems but also to locally hosted systems (such as a university’s own generative AI), as long as data may be shared with multiple individuals. The main rationale is that such use would breach confidentiality, which is essential in the grant review process. To use generative AI tools to evaluate and summarize grant proposals, or even to let them edit critiques, one would need to feed the AI system “substantial, privileged, and detailed information.” When we don’t know how the AI system will save, share, or use the information it is fed, we should not feed it such information.

Furthermore, expert review relies upon subject matter expertise, which a generative AI system could not be relied upon to have. So, it is unlikely that generative AI will produce a reliable and high-quality review.

For these reasons, we don’t recommend that you use generative AI for reviewing grant proposals or papers, even if the relevant publication venue or funding agency, unlike NIH, has not issued explicit guidance.

Can I use generative AI to write letters of support?

Generative AI can, in some situations, be useful to help you draft a letter, or edit your draft and to help you adopt a certain tone. We are not aware of any explicit rules against this. However, please keep in mind the following:

- You are still fully responsible for everything in the letter because you are still the author.

- You should consider the issue of confidentiality. Is there confidential information in the letter? If so, generative AI should not “know” it, because, again, we do not know for sure what it does with the information that users feed it.

- Text written by GPT models tends to sound very generic. This is a problem for letters of support, whose value may depend on very specific information and recommendations about the subject of the letter. You still need to ensure that you are comfortable sending the letter and that it conveys the same level of support for its subject as a letter you had written yourself.

How can I use generative AI as a brainstorming partner in my research?

Generative AI tools can serve as effective brainstorming partners in research. When used appropriately, these systems can help generate a variety of ideas, perspectives, and potential solutions, which is particularly useful during the initial stages of research planning. For instance, a researcher can enter a basic research concept into the AI system and receive suggestions on experimental approaches, potential methodologies, or alternative research questions. An example prompt might be:

“Analyze recent research on memory consolidation and the influence of emotions on learning and recall. Based on this analysis, generate new hypotheses for potential studies investigating neurobiological mechanisms.”

However, AI-generated ideas must be critically evaluated. While AI can offer diverse insights, these are based on existing data and may not always be novel or contextually appropriate. Researchers should use these suggestions as a starting point for further development rather than as definitive solutions.

Using Generative AI for Data Generation and Analysis

Can I use generative AI to write code?

Yes, provided you can read code! Generative AI can indeed output computer programs. But, just as with text, you may get code that looks good but is erroneous. Because it is often easier to read code than to write it, you may still be better off having generative AI write code that you then review carefully. We provide a guide on generating, editing, and reviewing code using ChatGPT 4.0 here and a coding tutorial using editor-integrated software such as GitHub Copilot here.
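As an illustration of code that looks right but is wrong, the Python sketch below treats a short `median` function as if it had come from a chatbot (a hypothetical example, not output from any particular tool) and checks it against hand-verified cases, using the standard library's `statistics.median` as an independent reference. The plausible-looking version silently mishandles lists with an even number of items; the reviewed version fixes that.

```python
import statistics


def ai_median(values):
    """Hypothetical AI-suggested median: plausible, but wrong for even-length input."""
    ordered = sorted(values)
    return ordered[len(ordered) // 2]


def reviewed_median(values):
    """Corrected version after human review."""
    ordered = sorted(values)
    n = len(ordered)
    if n == 0:
        raise ValueError("median of an empty sequence is undefined")
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]
    # Even length: average the two middle values.
    return (ordered[mid - 1] + ordered[mid]) / 2


# Small inputs whose correct answers were worked out by hand.
KNOWN_CASES = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5), ([5], 5)]


def failing_cases(func, cases):
    """Return (input, expected, actual) for every case the function gets wrong."""
    failures = []
    for inputs, expected in cases:
        actual = func(inputs)
        if actual != expected:
            failures.append((inputs, expected, actual))
    return failures


if __name__ == "__main__":
    # Sanity-check the hand-worked answers against the standard library.
    assert all(statistics.median(inp) == exp for inp, exp in KNOWN_CASES)
    print("AI version failures:", failing_cases(ai_median, KNOWN_CASES))
    print("Reviewed version failures:", failing_cases(reviewed_median, KNOWN_CASES))
```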

This applies not just to computer programs, but also to databases. You can have generative AI write code for you in SQL to manage and to query databases. In fact, in many cases, you could even do some minimal debugging just by running the code/query on known instances and checking to make sure you get the right answers. While basic tests like these can catch many errors, remember that there is no guarantee your program will work on complex examples just because it worked on simple ones.
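The known-instance check described above can be sketched with Python's built-in `sqlite3` module: load a tiny table whose correct answer you have worked out by hand, run the AI-suggested query against it, and compare. The table, column names, and query below are hypothetical examples.

```python
import sqlite3

# Hypothetical AI-suggested query: mean score per group, highest first.
AI_QUERY = """
    SELECT grp, AVG(score) AS mean_score
    FROM results
    GROUP BY grp
    ORDER BY mean_score DESC
"""

# Tiny hand-built data set: group "a" averages (1 + 3) / 2 = 2.0, group "b" is 5.0.
KNOWN_ROWS = [("a", 1.0), ("a", 3.0), ("b", 5.0)]
EXPECTED = [("b", 5.0), ("a", 2.0)]


def run_on_known_instance(query, rows):
    """Run a query against an in-memory table populated with known rows."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE results (grp TEXT, score REAL)")
        conn.executemany("INSERT INTO results VALUES (?, ?)", rows)
        return conn.execute(query).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    result = run_on_known_instance(AI_QUERY, KNOWN_ROWS)
    print("matches expected:", result == EXPECTED)
```

A match on a three-row table says nothing about, for example, how the query handles NULL scores or ties, so add known cases for whichever of those situations matter for your data.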

Can I use generative AI for data analysis and visualization?

Yes. Generative AI models are steadily improving at data analysis and visualization. We provide some examples of data analysis and visualizations using ChatGPT 4.0 here.

Can I use generative AI as a substitute for human participants in surveys?

Using generative AI as a substitute for human participants in surveys is not advisable due to significant concerns regarding construct validity. Generative AI, while adept at processing and generating data, cannot authentically replicate the nuances of human behavior and opinions that are the purpose of surveying humans in research.

However, generative AI can be valuable in the preliminary stages of survey design. It can assist in testing the clarity and structure of survey questions, helping address ambiguity and effectively capture the intended information. This application leverages AI’s capability to process language and simulate varied responses, providing insights into how questions may be interpreted by a diverse audience. In short, while generative AI’s use as a direct replacement for human survey participants is not recommended due to validity concerns, its role in enhancing survey design and testing is a viable and beneficial application.

Can generative AI be used for labeling data?

Generative AI can be employed for labeling, such as categorizing text and images. This application can streamline processes that are traditionally time-consuming and labor-intensive for human judges. However, the reliability of AI in these tasks requires careful consideration and validation on a case-by-case basis.

The key concern with AI-based judgment in labeling is its dependence on the quality and bias of training data. AI systems might replicate any inherent biases present in their training datasets, leading to skewed or inaccurate labeling. Researchers must validate the AI’s performance – comparing output with human-labeled benchmarks to ensure accuracy and impartiality.
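A minimal way to compare AI labels with a human-labeled benchmark is to compute raw percent agreement alongside Cohen's kappa, which discounts the agreement expected by chance, and to collect the disagreements for review. The Python sketch below assumes one label per item from each source, listed in the same order; the labels are made-up examples, and what counts as acceptable agreement depends on your field.

```python
from collections import Counter


def percent_agreement(human, ai):
    """Share of items where the AI label equals the human label."""
    if len(human) != len(ai) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(h == a for h, a in zip(human, ai)) / len(human)


def cohens_kappa(human, ai):
    """Cohen's kappa: agreement corrected for chance, (p_o - p_e) / (1 - p_e)."""
    p_observed = percent_agreement(human, ai)
    n = len(human)
    human_counts, ai_counts = Counter(human), Counter(ai)
    # Chance agreement: probability both sources pick the same label independently.
    p_expected = sum(
        human_counts[label] * ai_counts[label]
        for label in set(human_counts) | set(ai_counts)
    ) / (n * n)
    if p_expected == 1.0:
        # Both sources used one identical label for every item; kappa is undefined.
        return float("nan")
    return (p_observed - p_expected) / (1 - p_expected)


def disagreements(human, ai):
    """Indices of items to send back for manual review."""
    return [i for i, (h, a) in enumerate(zip(human, ai)) if h != a]


if __name__ == "__main__":
    human = ["pos", "neg", "pos", "pos", "neg", "neg"]
    ai = ["pos", "neg", "neg", "pos", "neg", "pos"]
    print(f"agreement = {percent_agreement(human, ai):.2f}")
    print(f"kappa     = {cohens_kappa(human, ai):.2f}")
    print("review items:", disagreements(human, ai))
```

Raw agreement alone can look reassuring when one label dominates the data; kappa is reported next to it because it stays low if the AI is merely guessing the most common label.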

Can I use generative AI to review data for errors and biases?

Yes! Generative AI can serve as a supplementary tool in the process of data quality assurance, assisting in the identification of errors, inconsistencies, or biases in datasets. Its capability to process extensive data rapidly enables it to spot potential issues that might be missed in manual reviews. Researchers should use Generative AI as one component of a broader data review strategy. It’s essential to corroborate AI-detected anomalies with manual checks and expert assessments.
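One way to corroborate AI-detected anomalies is to run an independent, rule-based check and compare the two sets of flagged records: items both methods flag are strong candidates for correction, and items only one method flags go to manual review. In the Python sketch below, the field name, the plausible range, and the AI-flagged IDs are all hypothetical.

```python
def rule_based_flags(records, field, low, high):
    """IDs of records whose field is missing or outside [low, high]."""
    flagged = set()
    for record in records:
        value = record.get(field)
        if value is None or not (low <= value <= high):
            flagged.add(record["id"])
    return flagged


def reconcile(ai_flags, rule_flags):
    """Split flagged IDs by which method found them."""
    return {
        "both": sorted(ai_flags & rule_flags),
        "ai_only": sorted(ai_flags - rule_flags),
        "rule_only": sorted(rule_flags - ai_flags),
    }


if __name__ == "__main__":
    records = [
        {"id": 1, "age": 34},
        {"id": 2, "age": -3},
        {"id": 3, "age": None},
        {"id": 4, "age": 210},
        {"id": 5, "age": 51},
    ]
    # Hypothetical IDs an AI assistant reported as suspicious.
    ai_flags = {2, 4, 5}
    rule_flags = rule_based_flags(records, "age", 0, 120)
    for bucket, ids in reconcile(ai_flags, rule_flags).items():
        print(bucket, ids)
```

Neither list is authoritative: an `ai_only` flag may point to a pattern the rules miss, and a `rule_only` flag shows something the AI overlooked.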

Reporting the Use of Generative AI

How do I cite content created or assisted by generative AI?

You used generative AI in the course of writing a research paper. How do you give it credit? And how do you inform the reader of your paper about its use?

Generative AI should not be listed as a co-author, but its use must be noted in the paper, with appropriate detail, e.g., about specific prompts and responses. The Committee on Publication Ethics has a succinct and incisive analysis.

The use of generative AI should be disclosed in the paper, along with a description of where and how it was used. Typically, such disclosures will be in a “Methods” section of the paper, if it has one. If you rely on generative AI output, you should cite it, just as you would cite a web page lookup or a personal communication. Keep in mind that some conversation identifiers may be local to your account, and hence not useful to your reader. Good citation style recommendations have been suggested by the American Psychological Association (APA) and the Chicago Manual of Style.
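Because conversation links may be private to your account, it can help to keep your own record of prompts and responses as you work, so the details for a disclosure or citation are at hand when you write them up. The Python sketch below appends one JSON line per interaction to any text stream (a file in practice); the field names and the model label are assumptions, not a format required by APA, Chicago, or any publisher.

```python
import io
import json
from datetime import datetime, timezone


def log_ai_use(stream, model, purpose, prompt, response):
    """Append one interaction as a JSON line and return the logged entry."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "purpose": purpose,
        "prompt": prompt,
        "response": response,
    }
    stream.write(json.dumps(entry, ensure_ascii=False) + "\n")
    return entry


def summarize_log(lines):
    """Count logged interactions per (model, purpose), e.g. for a Methods note."""
    counts = {}
    for line in lines:
        if line.strip():
            entry = json.loads(line)
            key = (entry["model"], entry["purpose"])
            counts[key] = counts.get(key, 0) + 1
    return counts


if __name__ == "__main__":
    # In real use, open a file instead, e.g. open("ai_use_log.jsonl", "a", encoding="utf-8").
    log = io.StringIO()
    log_ai_use(log, "example-model", "language editing",
               "Improve the clarity of this paragraph: ...", "Revised paragraph ...")
    log_ai_use(log, "example-model", "language editing",
               "Shorten this abstract: ...", "Shortened abstract ...")
    print(summarize_log(log.getvalue().splitlines()))
```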

How do I report the use of generative AI models in a paper?

We provide recommendations on reporting the use of generative AI in research here.

Considerations for Choosing Generative AI Models

How do I decide which generative AI to use in research?

The most important factor is which generative AI system (what data, what model, what computing requirements) fits well with your research questions. In addition, there are some general considerations.

Open source. “Open source” describes software that is published along with its source code for anyone to use and explore. This matters because most generative AI models are not developed locally by researchers themselves (unlike many conventional machine learning models). Open-source generative AI models, as well as generative AI systems trained on publicly accessible data, can be advantageous for researchers who want to fine-tune models, scrutinize the security and functionality of the system, or improve the explainability and interpretability of the models.

Accuracy and precision. When the outputs of a generative AI system can be verified (for example, if it is used in data analytics), you can gauge its efficacy by its precision and accuracy.
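If the verifiable outputs are yes/no decisions checked against a trusted reference (for example, whether each record meets an inclusion criterion), accuracy and precision can be computed in the usual classification sense from the counts of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN). This is one common reading of the terms; in measurement contexts, "precision" can instead mean how consistent repeated outputs are.

```latex
\text{accuracy} = \frac{TP + TN}{TP + TN + FP + FN},
\qquad
\text{precision} = \frac{TP}{TP + FP}
```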

Cost. Some models require paid subscriptions to APIs (application programming interfaces) for research use. Other models can be deployed locally, but these come with integration costs and potentially ongoing costs for maintenance and updates. Even when a model is otherwise free, you might need to cover the cost of an expert to set up and maintain it.

Can I customize generative AI models?

Yes. Some commercial generative AI developers now provide ways for users to easily customize models, supply their own data and documents to fine-tune them, and specify the style of the model's outputs. See our Custom GPT guide for more details.

What issues specific to generative AI should I consider when I adopt it in my research?

The nature of generative AI raises a number of issues that the entire research community is grappling with. Transparency and accountability about a generative AI’s operations and decision-making processes can be difficult to achieve when you use a closed-source system.

We invite you to think about the following carefully, and be aware that many other issues might arise.

Data privacy concerns. Data privacy is more complicated when generative AI is used through cloud-based services, as users don’t know for certain what happens to their input data and whether it could be retained to train future AI models. One way to avoid these concerns is to use locally deployed generative AI models that run entirely on your own hardware and do not send data back to the AI provider. An example is Nvidia ChatRTX.

Bias in data. Bias in the training data, and consequently in the AI system’s output, could be a major issue: generative AI is trained on large datasets that you usually can’t access or assess, and it may inadvertently learn and reproduce the biases, stereotypes, and majority views present in these data. Moreover, many generative AI models are trained on overwhelmingly English texts, Western images, and other types of data. Non-Western and non-English-speaking cultures, as well as work by minorities, are seriously underrepresented in the training data. As a result, the output of generative AI is very likely to be culturally biased. This should be a major consideration when assessing whether generative AI is suitable for your research.

AI hallucination. Generative AI can produce outputs that are factually inaccurate or entirely incorrect, uncorroborated, nonsensical, or fabricated. These phenomena are dubbed “hallucinations.” It is therefore essential to verify generative AI output against reliable and credible sources.

Plagiarism. Generative AI can only generate new content based on, or drawn from, the data it was trained on. It may therefore produce outputs that are similar to its training data, sometimes similar enough to be regarded as plagiarism. You should confirm (e.g., by using plagiarism detection tools) that generative AI outputs are not plagiarized but instead “learned” from various sources, in the way humans learn without plagiarizing.
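Plagiarism detection tools are far more sophisticated, but the basic idea of measuring textual overlap can be sketched in a few lines of Python. This hypothetical example compares an AI output with a source text by the share of three-word sequences (word 3-grams) they have in common, a common near-duplicate heuristic known as Jaccard similarity. It is a rough first check under that assumption, not a substitute for a real plagiarism checker, and any threshold for "too similar" is your own judgment call.

```python
import re


def shingles(text, n=3):
    """Return the set of lowercase word n-grams ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a, b, n=3):
    """Share of distinct word n-grams the two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


# Invented example: an "output" that only swaps one word of its "source".
source = "Generative AI can only generate new content based on the data it is trained on."
output = "Generative AI can only generate new content based on the data it was trained on."
score = jaccard_similarity(source, output)
print(f"similarity: {score:.3f}")
```

Here the two sentences differ by a single word, yet they share 10 of their 16 distinct 3-grams, so the score is 0.625: a rewording that is only cosmetic still scores high.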

Prompt Engineering. The advent of generative AI has created a new human activity, prompt engineering, because the quality of generative AI responses is heavily influenced by the user input, or ‘prompt’. There are courses dedicated to this skill, but you will still need to experiment with crafting prompts that are clear, specific, and appropriately structured so that generative AI produces output with the desired style, quality, and purpose.

Knowledge Cutoff Date. Many generative AI models are trained on data up to a specific date and are therefore unaware of any events or information after it. For example, a generative AI trained on data up to March 2019 would be unaware of COVID-19 and its impact on humanity, or of who the current British monarch is. You need to know the cutoff date of the model you use in order to assess which research questions it is appropriate for.

Model Continuity. When you use generative AI models developed by external entities or vendors, consider the possibility that the vendor might one day discontinue the model. This could have a big impact on the reproducibility of your research.

Security. As with any computer or online system, a generative AI system is susceptible to security breaches and attacks. We have already mentioned the issues of confidentiality and privacy when you input information or give prompts to the system, but malicious attacks could be a bigger threat. For example, a new type of attack, prompt injection, deliberately feeds harmful or malicious content into the system to manipulate the results it generates for users. Generative AI developers are designing processes and technical solutions against such risks (for example, see OpenAI’s GPT-4 System Card and its disallowed usage policy). But as a user, you also need to be aware of what is at risk, follow the guidelines of your local IT providers, and exercise due diligence with the results that a generative AI creates for you.

Lack of Standardized Evaluations: The AI Index Report 2024 found that leading developers test their models against different responsible AI benchmarks, making it challenging to systematically compare the risks and limitations of AI models. Be wary when a model is promoted on the strength of particular evaluation measures, as those measures may not have been fully tested.

Related Resources

Additional reading.

Many recommendations, guidelines and comments are out there regarding the use of Generative AI in research and in other lines of work. Here are a few examples.

- Best Practices for Using AI When Writing Scientific Manuscripts: Caution, Care, and Consideration: Creative Science Depends on It. Jillian M. Buriak et al. ACS Nano (2023)

- Science journals set new authorship guidelines for AI-generated text. Jennifer Harker. National Institute of Environmental Health Sciences (2023)

- NIH prohibits the use of generative AI in peer review. (2023)

- Fighting reviewer fatigue or amplifying bias? Considerations and recommendations for use of ChatGPT and other large language models in scholarly peer review. Mohammad Hosseini and Serge P. J. M. Horbach. Research Integrity and Peer Review (2023)

- Nonhuman “Authors” and Implications for the Integrity of Scientific Publication and Medical Knowledge. Annette Flanagin et al. JAMA (2023)

- 2024 AI Index Report. Stanford University (2024)

For more content, including manuscripts and the use of generative AI in research, see our generative AI resource page.


Copyright © 2020 The Regents of the University of Michigan


Generative Artificial Intelligence


Accuracy of AI

This image was created with DALL-E 2 using the prompt: "a robot hallucinating"

Generative AI is only as good as the sources it draws from, and it can hallucinate information.

Treat your results from AI with caution.

Using AI for Research

To use AI effectively for research, first ask yourself what you are trying to accomplish, and be intentional about the type of tool you use. You may even want to consider whether AI is the best kind of tool for what you are looking for.

There are lots of different AI tools. See [different page for a list of different AI research assistants and what they do].

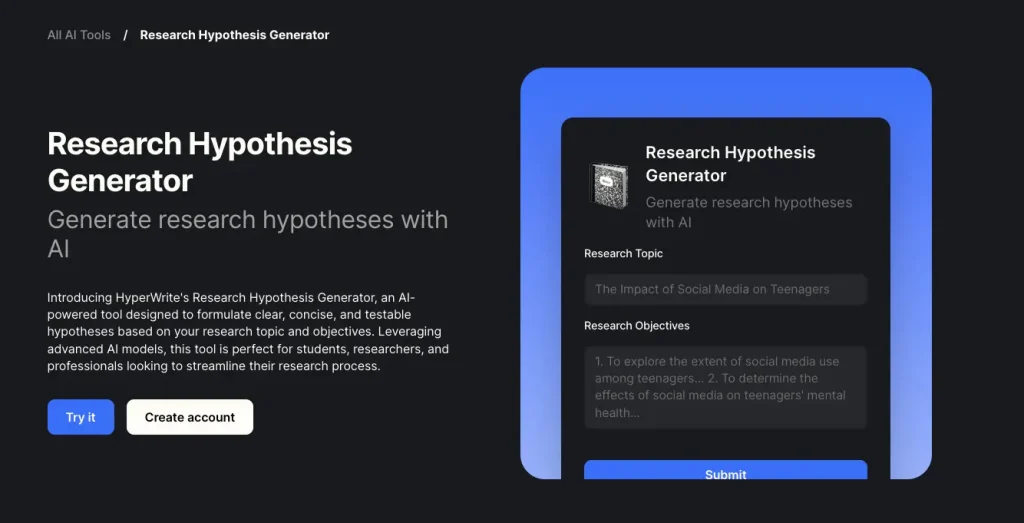

Prompt Engineering for a Research Question

This section focuses on writing prompts for chat-based AI tools like ChatGPT, Google Bard, or Microsoft Bing's chatbot.

Key Terminology

Prompt Engineering is the intentional design of prompts given to artificial intelligence tools so that the responses they give you are helpful.

Prompt Patterns are different structures you can use to engineer AI prompts.

Prompt Pattern: Persona

How to create the prompt.

- Ask the AI to act as a certain persona (e.g., "Imagine you are an anthropologist").

- You can give additional context, such as what the persona is trying to accomplish

Here's an example of how to apply this in your research:

Prompt: Imagine you are an anthropologist. What are keywords and terms you would likely use if you were writing a paper about the formation of social hierarchies in societies.

This prompt should produce a list of search terms you can try in order to find relevant papers on your topic.

See how ChatGPT responded to a persona pattern prompt: https://chat.openai.com/share/8fa6b79a-0a30-4775-bc3f-96f7f8304c8d

Compare this with a similar question asked without specifying a persona: https://chat.openai.com/share/4917eed8-75fc-4e89-81a4-9db328639af1
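If you reuse the persona pattern often, it can help to treat it as a fill-in-the-blanks template. The short Python sketch here only assembles the text of the prompt, which you would then paste into ChatGPT or a similar tool; the function name persona_prompt and its optional goal parameter are hypothetical and are not part of any chatbot's API. Run with the anthropologist example, it reproduces the prompt shown earlier.

```python
def persona_prompt(persona, task, goal=None):
    """Build a prompt following the persona pattern (hypothetical helper).

    persona: who the AI should imagine it is, e.g. "an anthropologist".
    task: the request itself.
    goal: optional context about what the persona is trying to accomplish.
    """
    parts = [f"Imagine you are {persona}."]
    if goal:
        parts.append(goal)
    parts.append(task)
    return " ".join(parts)


prompt = persona_prompt(
    "an anthropologist",
    "What are keywords and terms you would likely use if you were writing "
    "a paper about the formation of social hierarchies in societies.",
)
print(prompt)
```

Passing a goal string adds context about what the persona is trying to accomplish, which is the optional second element of the pattern.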

Prompt Pattern: Simulated Interaction

Instead of asking ChatGPT questions, have it ask you questions.

- Explain to ChatGPT what the interaction will look like (I will prompt you, then I want you to ask me a question based on that prompt).

- You can do this with one-off questions or with a more in-depth exchange.

Prompt: I want to write a psychology paper about memory but I am not sure what direction to go with my specific topic. Help me come up with a starting research question by asking me questions about what aspects of memory I am interested in. When you have enough information to write a research topic, stop asking questions and write the research question. Ask me one question at a time and wait for my response.

This prompt will make ChatGPT act as a conversation partner to help you think through decisions about narrowing down your research topic.

See how ChatGPT responded to a simulated interaction prompt: https://chat.openai.com/share/ffe72f48-799f-427e-b9dd-bce5ecea6996

Prompt Pattern: Schedule Maker

Tell ChatGPT what you are trying to accomplish and ask it to create a schedule to keep you on task.

Prompt: I have three hours tonight I can work on research for my paper on social media's impact on presidential elections. By the end of those three hours I want to have found two peer-reviewed sources and created an annotated bibliography of those sources. Can you create a schedule of what I should do between 7pm and 10 pm to accomplish those things on time?

This prompt will give you a schedule for a set amount of time and break your research down into concrete steps.

See how ChatGPT responded to a prompt to create a schedule: https://chat.openai.com/share/3b867579-d62a-4736-b0d7-de26284cd061


- Last Updated: Apr 5, 2024 10:58 AM

- URL: https://davidson.libguides.com/generative-ai

Mailing Address : Davidson College - E.H. Little Library, 209 Ridge Road, Box 5000, Davidson, NC 28035

Generative AI in Academic Writing

What this handout is about.

You’ve likely heard of AI tools such as ChatGPT, Google Bard, Microsoft Bing, or others by now. These tools fall under a broad, encompassing term called generative AI that describes technology that can create new text, images, sounds, video, etc. based on information and examples drawn from the internet. In this handout, we will focus on potential uses and pitfalls of generative AI tools that generate text.

Before we begin: Stay tuned to your instructor

Instructors’ opinions on the use of AI tools may vary dramatically from one class to the next, so don’t assume that all of your instructors will think alike on this topic. Consult each syllabus for guidance or requirements related to the use of AI tools. If you have questions about whether, how, or when it may be appropriate to use generative AI in your coursework, be sure to seek input from your instructor before you turn something in for a grade. You are always 100% responsible for whatever writing you choose to turn in to an instructor, so it pays to inquire early.

Note that when your instructors authorize the use of generative AI tools, they will likely assume that these tools may help you think and write—not think or write for you. Keep that principle in mind when you are drafting and revising your assignments. You can maintain your academic integrity and employ the tools with the same high ethical standards and source use practices that you use in any piece of academic writing.

What is generative AI, and how does it work?

Generative AI is an artificial intelligence tool that allows users to ask it questions or make requests and receive quick written responses. It uses Large Language Models (LLMs) to analyze vast amounts of textual data to determine patterns in words and phrases. Detecting patterns allows LLMs to predict what words may follow other words and to transform the content of its corpus (the textual data) into new sentences that respond to the questions or requests. Using complex neural network models, LLMs generate writing that mimics human intelligence and varied writing styles.

The textual data used to train the LLM has been scraped from the internet, though it is unclear exactly which sources have been included in the corpus for each AI tool. As you can imagine, the internet has a vast array of content of variable quality and utility, and generative AI does not distinguish between accurate/inaccurate or biased/unbiased information. It can also recombine accurate source information in ways that generate inaccurate statements, so it’s important to be discerning when you use these tools and to carefully digest what’s generated for you. That said, the AI tools may spark ideas, save you time, offer models, and help you improve your writing skills. Just plan to bring your critical thinking skills to bear as you begin to experiment with and explore AI tools.

As you explore the world of generative AI tools, note that there are both free and paid versions. Some require you to create an account, while others don’t. Whatever tools you experiment with, take the time to read the terms before you proceed, especially the terms about how they will use your personal data and prompt history.

In order to generate responses from AI tools, you start by asking a question or making a request, called a “prompt.” Prompting is akin to putting words into a browser’s search bar, but you can make much more sophisticated requests from AI tools with a little practice. Just as you learned to use Google or other search engines by using keywords or strings, you will need to experiment with how you can extract responses from generative AI tools. You can experiment with brief prompts and with prompts that include as much information as possible, like information about the goal, the context, and the constraints.

You could experiment with some fun requests like “Create an itinerary for a trip to a North Carolina beach.” You may then refine your prompt to “Create an itinerary for a relaxing weekend at Topsail Beach and include restaurant recommendations” or “Create an itinerary for a summer weekend at Topsail Beach for teenagers who hate water sports.” You can experiment with style by refining the prompt to “Rephrase the itinerary in the style of a sailor shanty.” Look carefully at the results for each version of the prompt to see how your changes have shaped the answers.

The more you experiment with generative AI for fun, the more knowledgeable and prepared you will be to use the tool responsibly if you have occasion to use it for your academic work. Here are some ways you might experiment with generative AI tools when drafting or exploring a topic for a paper.

Potential uses

Brainstorming/exploring the instructor’s prompt. Generative AI can help spark ideas or categories for brainstorming. You could try taking keywords from your topic and asking questions about these ideas or concepts. As you narrow in on a topic, you can ask more specific or in-depth questions.

Based on the answers that you get from the AI tool, you may identify some topics, ideas, or areas you are interested in researching further. At this point, you can start exploring credible academic sources, visit your instructor’s office hours to discuss topic directions, meet with a research librarian for search strategies, etc.

Generating outlines. AI tools can generate outlines for writing project timelines, slide presentations, and a variety of other writing tasks. You can revise the prompt to generate several versions of an outline that include, exclude, and prioritize different information. Analyze the output to spark your own thinking about how you’d like to structure the draft you’re working on.

Models of genres or types of writing. If you are uncertain how to approach a new format or type of writing, an AI tool can quickly generate an example that may inform how you develop your draft. For example, you may never have written a literature review, a cover letter for an internship, or an abstract for a research project. With good prompting, an AI tool may show you what type of written product you are aiming to develop, including the typical components of that genre and examples. You can analyze the output for the sequence of information to get a sense of the structure of that genre, but be cautious about relying on the actual information (see pitfalls below). You can then use what you learn about the structure to develop drafts with your own content.

Summarizing longer texts. You can put longer texts into the AI tool and ask for a summary of the key points. You can use the summary as a guide to orient you to the text. After reading the summary, you can read the full text to analyze how the author has shaped the argument, to get the important details, and to capture important points that the tool may have omitted from the summary.

Editing/refining. AI tools can help you improve your text at the sentence level. While sometimes simplistic, AI-generated text is generally free of grammatical errors. You can insert text you have written into an AI tool and ask it to check for grammatical errors or offer sentence-level improvements. If this draft will be turned in to your instructor, be sure to check your instructor’s policies on using AI for coursework.

As an extension of editing and revising, you may be curious about what AI can tell you about your own writing. For example, after asking AI tools to fix grammatical and punctuation errors in your text, compare your original and the AI-edited version side-by-side. What do you notice about the changes that were made? Can you identify patterns in these changes? Do you agree with the changes that were made? Did AI make your writing clearer? Did it remove your unique voice? Writing is always a series of choices you make. Just because AI suggests a change doesn’t mean you need to make it, but understanding why it was suggested may help you take a different perspective on your writing.
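If you want a more systematic version of this side-by-side comparison, a few lines of Python can list exactly which words changed. This sketch uses the standard library's difflib.SequenceMatcher on whitespace-separated words; the function name word_changes and the two sentences are invented for illustration, and splitting on whitespace is a simplifying assumption (punctuation stays attached to its word).

```python
import difflib


def word_changes(original, edited):
    """List word-level differences between an original and an AI-edited text."""
    a, b = original.split(), edited.split()
    matcher = difflib.SequenceMatcher(a=a, b=b)
    changes = []
    # get_opcodes() describes how to turn a into b: 'equal', 'replace', 'delete', 'insert'.
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            changes.append((tag, " ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return changes


original = "Their are three reason why memory fail under stress."
edited = "There are three reasons why memory fails under stress."
for tag, before, after in word_changes(original, edited):
    print(f"{tag:8} {before!r} -> {after!r}")
```

Each tuple shows the kind of change and the before and after wording, which makes recurring patterns (in this made-up case, spelling and agreement fixes) easier to spot and question.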

Translation. You can prompt generative AI tools to translate text or audio into different languages for you. But, similar to tools like Google Translate, these translations are not considered completely “fluent.” Generative AI can struggle with things like idiomatic phrases, context, and degree of formality.

Transactional communication. Academic writing often involves transactional communication: messages that move the writing project forward. AI tools can quickly generate drafts of polite emails to professors or classmates, meeting agendas, project timelines, event promotions, etc. Review each result and refine it appropriately for your audiences and purposes.

Potential pitfalls

Information may be false. AI tools derive their responses by reassembling language in their data sets, most of which has been culled from the internet. As you learned long ago, not everything you read on the internet is true, so it follows that not everything culled and reassembled from the internet is true either. Beware of clearly written but factually inaccurate or misleading responses from AI tools. Additionally, while they can appear to be “thinking,” they are literally assembling language without human intelligence. They can produce information that seems plausible but is in fact partly or entirely fabricated or fictional. The tendency of AI tools to invent information is sometimes referred to as “hallucinating.”

Citations and quotes may be invented. AI responses may include citations (especially if you prompt them to do so), but beware. While the citations may seem reasonable and look correctly formatted, they may in fact be incorrect or not exist at all. For example, the tools may invent an author, produce a book title that doesn’t exist, or incorrectly attribute language to an author who didn’t write the quote or wrote something quite different. Your instructors are conversant in the fields you are writing about and may readily identify these errors. Generative AI tools are not authoritative sources.

Responses may contain biases. Again, AI tools draw from vast swaths of language in their data sets, where anything and everything has been said. Accordingly, the tools mimic and repeat distorted ideas on any topic where bias easily creeps in. Consider and look for biases in responses generated by AI tools.

You risk violating academic integrity standards. When you prompt an AI tool, you may often receive a coherent, well-written, and sometimes tempting response. Unless you have received explicit, written guidance from an instructor on the use of AI-generated text, do not assume it is okay to copy and paste or paraphrase that language into your text; it may not be okay at all. See your instructor’s syllabus and consult with them about how they authorize the use of AI tools and how they expect you to cite any content generated by the tool. The AI tools should help you to think and write, not think or write for you. You may find yourself violating the honor code if you are not thoughtful or careful in your use of any AI-generated material.

The tools consume personal or private information (text or images). Do not input anything into an AI generator that you would prefer not to have widely shared. The tools may take whatever you put into a prompt and incorporate it into their systems for others to use.

Your ideas may be changed unacceptably. When asked to paraphrase or polish a piece of writing, the tools can change its meaning. Be discerning and thorough in reviewing any generated responses to ensure that the meaning captures and aligns with your own understanding.

A final note

Would you like to learn more about using AI in academic writing? Take a look at the modules in Carolina AI Literacy. Acquainting yourself with these tools may be important as your thinking and writing skills grow. While these tools are new and still under development, they may be essential tools for you to understand in your current academic life and in your career after you leave the university. Beginning to experiment with and develop an understanding of the tools at this stage may serve you well along the way.

Note: This tip sheet was created in July 2023. Generative AI technology is evolving quickly. We will update the document as the technology and university landscapes change.

You may reproduce it for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill


Generative AI Can Supercharge Your Academic Research


Conducting relevant scholarly research can be a struggle. Educators must employ innovative research methods, carefully analyze complex data, and then master the art of writing clearly, all while keeping the interest of a broad audience in mind.

Generative AI is revolutionizing this sometimes tedious aspect of academia by providing sophisticated tools to help educators navigate and elevate their research. But there are concerns, too. AI’s capabilities are rapidly expanding into areas that were once considered exclusive to humans, like creativity and ingenuity. This could lead to improved productivity, but it also raises questions about originality, data manipulation, and credibility in research. With a simple prompt, AI can easily generate falsified datasets, mimic others’ research, and avoid plagiarism detection.

As someone who uses generative AI in my daily work, both in academia and beyond, I have spent a lot of time thinking about these potential benefits and challenges—from my popular video to the symposium I organized this year, both of which discuss the impact of AI on research careers. While AI can excel in certain tasks, it still cannot replicate the passion and individuality that motivate educators; however, what it can do is help spark our genius.

Below, I offer several ways AI can inspire your research, elevating the way you brainstorm, analyze data, verify findings, and shape your academic papers.

AI’s potential impact on research, while transformative, does heighten ethical and existential concerns about originality and academic credibility. In addition to scrutiny around data manipulation and idea plagiarism, educators using AI may face questions about the style, or even the value, of their research.

However, what truly matters in academic research is not the tools used, but educators’ approach in arriving at their findings. Transparency, integrity, intellectual curiosity, and a willingness to question and challenge one’s previous beliefs and actions should underpin this approach.

Despite potentially compounding these issues, generative AI can also play a pivotal role in addressing them. For instance, a significant problem in research is the reliance on patterns and correlations without understanding the “why” behind them. We can now ask AI to help us identify the most likely causal explanations and mechanisms. For example, one could ask, “What are the causal explanations behind these correlations? What are the primary factors contributing to spurious correlations in this data? How can we design tests to limit spurious correlations?”

AI has the potential to revolutionize research validation, ensuring the reliability of findings and bolstering the scientific community’s credibility. AI’s ability to process massive amounts of data efficiently makes it ideal for generating replication studies. Instructions such as “Suggest a replication study design and provide detailed instructions for independent replication” or “Provide precise guidance for configuring a chatbot to independently replicate these research findings” can guide educators in replicating and verifying study results.

ChatGPT-4, OpenAI’s latest paid version of its large language model (LLM), plays a vital role in enhancing my daily research process; it has the capacity to write, create graphics, analyze data, and browse the internet, seemingly as a human would. Rather than using predefined prompts, I conduct generative AI research in a natural and conversational manner, using prompts that are highly specific to the context.

To use ChatGPT-4 as a valuable resource for brainstorming, I give it prompts such as “I am thinking about [insert topic], but this is not a very novel idea. Can you help me find innovative papers and research from the last 10 years that has discussed [insert topic]?” and “What current topics are being discussed in the business press?” or “Can you create a table of methods that have and have not been used related to [insert topic] in recent management research?”

The goal is not to have a single sufficient prompt, but to hone the AI’s output into robust and reliable results, validating each step along the way as a good scholar would. Perhaps the AI sparks an idea that I can then pursue, or perhaps it does not help me at all. But sometimes just asking the questions furthers my own process of getting “unstuck” with hard research problems.

There is still a lot of work to be done after using these prompts, but having an AI research companion helps me quickly get to a better answer. For example, the prompt “Explore uncharted areas in organizational behavior and strategy research” led to the discovery of promising niches for future research projects. You might think that this will result in redundant projects, but all you have to do is write, “I don’t like that, suggest more novel ideas” or “I like the second point, suggest 10 ideas related to it and make them more unique” to come up with some interesting projects.

2. Use AI to gather and analyze data

Although the AI is far from perfect, iterative feedback can help its output become more robust and valuable. It is like an intelligent sounding board that adds clarity to your own ideas. I do not necessarily have a set of similar prompts that I always use to gather data, but I have been able to leverage ChatGPT-4’s capabilities to assist in programming tasks, including writing and debugging code in various programming languages.

Additionally, I have used ChatGPT-4 to craft programs designed for web scraping and data extraction. The tool generates code snippets that are easy to understand and helps find and fix errors, which makes it useful for these tasks. Prior to AI, I would spend far more time debugging software programs than writing them. Now, I simply ask, “What is the best way to collect data on [insert topic]? What is the best software to use for this? Can you help get that data? How do I build the code to get this data? What is the best way to analyze this data? If you were a skeptical reviewer, what would you also control for with this analysis?”

When the AI generates poor responses, I ask, “That did not work. Here is my code, can you help me find the problem? Can you help me debug this code? Why did that not work?” or “No, that is incorrect. Can you suggest two alternative ways to generate the result?” There have been many occasions when the AI suggests that data will exist; however, like inspiration in the absence of AI, the data is not practically accessible or useful upon further examination. In those situations, I write, “That data is too difficult to get, can you suggest good substitutes?” or “That is not real data, can you suggest more novel data or a data source where I can find the proper data?”

While the initial results may not be on point, starting from scratch without AI is still more difficult. By incorporating AI into this data gathering and analysis process, researchers can gain valuable insights and solve difficult problems that often have ambiguous and equivocal solutions. For instance, learning how to program more succinctly or to think of different data sources can aid discovery. It also makes the process much less frustrating and more effective.

3. Use AI to help verify your findings and enhance transparency

AI tools can document the evolution of research ideas, effectively serving as a digital audit trail. This trail is a detailed record of a research process, including queries, critical decision points, alternative hypotheses, and refinements throughout the entire research study creation process. One of the most significant benefits of maintaining a digital audit trail is the ability to provide clear and traceable evidence of the research process. This transparency adds credibility to research findings by demonstrating the methodical steps taken to reach conclusions.

For example, when I was writing some code to download data from an external server, I asked, “Can you find any bugs or flaws in this software program?” and “What will the software program’s output be?” One of the problems I ran into was that the code was inefficient and required too much memory, taking several days to complete. When I asked, “Could you write it in simpler and more efficient code?” the generated code provided an alternative method for increasing data efficiency, significantly reducing the time it took.

What excites me the most is the possibility of making it easier for other researchers to replicate what I did. Because writing up these iterations takes time, many researchers skip this step. With generative AI, we can ask it to simplify many of these steps so that others can understand them. For example, I might ask the following:

Can you write summarized notations of this program or of the previous steps so that others can understand what I did here?

Can we reproduce these findings using a different statistical technique?

Can you generate a point-by-point summary diary of what I did in the previous month from this calendar?

Can you create a step-by-step representation of the workflow I used in this study?

Can you help generate an appendix of the parameters, tests, and configuration settings for this analysis?

For qualitative data, I might ask, “Can you identify places in this text where this idea was discussed? Please put it in an easy-to-understand table” or “Can you find text that would negate these findings? What conditions do you believe generated these counterfactual examples?”

You could even request that the AI create a database of all the prompts you gave it in order for it to generate the results and data. With the advent of AI-generated images and videos, we may soon be able to ask it to generate simple video instructions for recreating the findings or to highlight key moments in a screen recording of researchers performing their analyses. This not only aids validation but also improves the overall reliability and credibility of the research. Furthermore, because researchers incur little cost in terms of time and resources, such demands for video instructions may eventually be quite reasonable.
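The article does not say how such a prompt database would be built. As a minimal sketch, assuming you record each prompt and response yourself (for example, by pasting them from the chat window), a local SQLite file can serve as the audit trail. The table layout, field names, and function names below are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone
from typing import List, Optional, Tuple


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a SQLite database holding one prompt-log table."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS prompt_log (
               id INTEGER PRIMARY KEY AUTOINCREMENT,
               logged_at TEXT NOT NULL,  -- UTC timestamp, ISO 8601
               model TEXT NOT NULL,      -- which chatbot or model answered
               prompt TEXT NOT NULL,
               response TEXT NOT NULL,
               note TEXT                 -- optional: why this prompt was tried
           )"""
    )
    return conn


def log_exchange(conn: sqlite3.Connection, model: str, prompt: str,
                 response: str, note: Optional[str] = None) -> int:
    """Record one prompt/response pair and return its row id."""
    cur = conn.execute(
        "INSERT INTO prompt_log (logged_at, model, prompt, response, note) "
        "VALUES (?, ?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), model, prompt, response, note),
    )
    conn.commit()
    return cur.lastrowid


def export_trail(conn: sqlite3.Connection) -> List[Tuple]:
    """Return every logged exchange in the order it was recorded."""
    return conn.execute(
        "SELECT id, logged_at, model, prompt, note FROM prompt_log ORDER BY id"
    ).fetchall()
```

Passing a file path such as `"audit_trail.db"` to `open_log` keeps the log on disk, so it can be shared alongside the paper's replication materials.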

4. Use AI to predict and then parse reviewer feedback

I try to anticipate reviewer concerns before submitting research papers by asking the AI, “As a skeptical reviewer who is inclined to reject papers, what potential flaws in my paper do you see? How can I minimize those flaws?” The results help me think through areas where my logic or analysis may be flawed and what I might want to refine before submitting my paper to a skeptical scientific audience. In a competitive scientific arena with intense time pressure, detecting problems early can be both effective and time-saving.

Once I receive reviewer feedback, I also like to use ChatGPT to better understand what reviewers expect of me as an author. I’ll ask, “Help me identify key points in this review, listing them from the easiest and quickest comments to address, up to the most challenging and time-consuming reviewer comments.” It’s surprising how much more enjoyable the review process becomes once I have a more holistic understanding of what the reviewer or editor is asking.

Balancing AI’s strengths and weaknesses to improve academic research

As educators, we must learn to coexist and co-create with these technological tools. LLMs have the potential to accelerate and improve research, resulting in ground-breaking ideas that push the limits of current possibilities.

But we must be careful. When used incorrectly, AI can speed up the process of achieving surface-level learning outcomes at the expense of a deeper understanding. Educators should approach generative AI with skepticism and curiosity, like they would with any other promising tool.

AI can also democratize research by making it accessible to people of all abilities and levels of expertise. This only makes our human essence—passions, interests, and complexities—even more important. After all, AI might be great at certain tasks, but the one thing it can’t take away is what makes you, well, you.

David Maslach is an associate professor at Florida State University specializing in organizational learning and innovation. He holds a PhD from the Ivey School of Business and serves on multiple academic journal boards. Maslach is also the founder of the R3ciprocity Project, a platform that provides solutions and hope to the global research community.


Organizing Your Social Sciences Research Paper

Generative AI and Writing


Research Writing and Generative AI Large Language Models

A rapidly evolving phenomenon impacting higher education is the availability of generative artificial intelligence systems [such as Chat Generative Pre-trained Transformer, or ChatGPT]. These systems were developed by scanning text from millions of books, web sites, and other sources so that algorithms within the system could learn patterns in how words and sentences are constructed. This allows the platforms to respond to a broad range of questions and prompts, generate stories, compose essays, create lists, and more. Generative AI systems do not actually think or understand like a human, but they are good at mimicking written text based on what they have learned from the input data used to build and enhance their artificial intelligence algorithms, protocols, and standards.

As such, generative AI systems [a.k.a., “Large Language Models” or LLMs] have emerged, depending on one’s perspective, as either a threat or an opportunity in how faculty create or modify class assignments and how students approach the task of writing a college-level research paper. We are in the early stages of understanding how LLMs may impact learning outcomes associated with information literacy, i.e., fluency in applying the skills needed to effectively identify, gather, organize, critically evaluate, interpret, and report information. However, before this is fully understood, Large Language Models will continue to improve and become more sophisticated, as will the academic integrity detection programs used to identify AI-generated text in student papers.

When you are assigned a research paper, it is up to your professor whether using ChatGPT is permitted. Some professors embrace these systems as part of an in-class writing exercise to help students understand their limitations, while others warn against their use because of their current defects and biases. That said, the future of information seeking using LLMs means that the intellectual spaces associated with research and writing will likely collapse into a single online environment in which students will be able to perform in-depth searches for information connected to the Libraries' many electronic resources.

As LLMs quickly become more sophisticated, here are some potential ways generative artificial intelligence programs could facilitate organizing and writing your social sciences research paper:

- Explore a Topic – develop a research problem related to the questions you have about a general subject of inquiry.

- Formulate Ideas – obtain background information and explore ways to place the research problem within specific contexts.

- Zero in on Specific Research Questions and Related Sub-questions – create a query-based framework for how to investigate the research problem.

- Locate Sources to Answer those Questions – begin the initial search for sources concerning your research questions.

- Obtain Summaries of Sources – build a synopsis of the sources to help determine their relevance to the research questions underpinning the problem.

- Outline and Structure an Argument – present information that assists in formulating an argument or an explanation for a stated position.

- Draft and Iterate on a Final Essay – create a final essay based on a process of repeating the action of text generation on the results of each prior action [i.e., ask follow up questions to build on or clarify initial results].

Despite their power to create text, generative AI systems are far from perfect, and their ability to “answer” questions can be misleading, deceptive, or outright false. Described below are some current problems adapted from an essay written by Bernard Marr at Forbes Magazine and reiterated by researchers studying LLMs and writing. These issues focus on problems with using ChatGPT, but they apply to any current Large Language Model program.

- Not Connected to the Internet. Although generative AI systems may appear to possess a significant amount of information, most LLMs are currently not mining the Internet for that information [note that this is changing quickly. For example, an AI chatbot feature is now embedded into Microsoft’s Bing search engine, but you'll probably need to pay for this feature in the future]. Without a connection to the Internet, LLMs cannot provide real-time information about a topic. As a result, the scope of research is limited, and any new developments in a particular field of study will not be included in the responses. In addition, LLMs can only accept input in text format. Therefore, other forms of knowledge, such as videos, web sites, audio recordings, or images, are excluded as part of the inquiry prompts.

- The Time-consuming Consequences of AI-Generated Hallucinations. If proofreading AI-generated text reveals nonsensical information or an invalid list of scholarly sources [e.g., the title of a book is not in the library catalog or found anywhere online], you obviously must correct these errors before handing in your paper. The challenge is that you have to replace nonsensical or false statements with accurate information, and you must support any AI-generated declarative statements [e.g., "Integrated reading strategies are widely beneficial for children in middle school"] with citations to valid academic research that supports the argument. This requires reviewing the literature to locate real sources and real information, which is time-consuming and challenging if you didn't actually compose the text. And, of course, if your professor asks you to show the page in a book or journal article where you found the information supporting a generated statement of fact, well, that's a problem. Given this, ChatGPT and other systems should be viewed as a help tool and never as a shortcut to actually doing the work of investigating a research problem.

- Trouble Generating Long-form, Structured Content. ChatGPT and other systems are inadequate at producing long-form content that follows a particular structure, format, or narrative flow. Although the models can create coherent and grammatically correct text, they are currently best suited for generating shorter pieces of content like summaries of topics, bullet point lists, or brief explanations. They are poor at creating a comprehensive, coherent, and well-structured college-level research paper.

- Limitations in Handling Multiple Tasks. Generative AI systems perform best when given a single task or objective to focus on. If you ask LLMs to perform multiple tasks at the same time [e.g., a question that includes multiple sub-questions], the models struggle to prioritize them, which reduces the accuracy and reliability of the results.

- Biased Responses. This is important to understand. While ChatGPT and other systems are trained on a large set of text data, that data has not been widely shared so that it can be reviewed and critically analyzed. You can ask the systems what sources they are using, but their responses cannot be independently verified [i.e., the systems do not cite their sources]. Therefore, it is not possible to identify any hidden biases or prejudices that exist within the data. This means the LLM may generate responses that are biased, discriminatory, or inappropriate in certain contexts.