6.894: Interactive Data Visualization

Assignment 2: Exploratory Data Analysis

In this assignment, you will identify a dataset of interest and perform an exploratory analysis to better understand the shape & structure of the data, investigate initial questions, and develop preliminary insights & hypotheses. Your final submission will take the form of a report consisting of captioned visualizations that convey key insights gained during your analysis.

Part 1: Data Selection

First, you will pick a topic area of interest to you and find a dataset that can provide insights into that topic. To streamline the assignment, we've pre-selected a number of datasets for you to choose from.

However, if you would like to investigate a different topic and dataset, you are free to do so. If you are working with a self-selected dataset, please check with the course staff to make sure it is appropriate for the course. Be advised that data collection and preparation (also known as data wrangling) can be a very tedious and time-consuming process. Leave yourself enough time for exploratory analysis after you have prepared the data.

After selecting a topic and dataset – but prior to analysis – you should write down an initial set of at least three questions you'd like to investigate.

Part 2: Exploratory Visual Analysis

Next, you will perform an exploratory analysis of your dataset using a visualization tool such as Tableau. You should consider two different phases of exploration.

In the first phase, you should seek to gain an overview of the shape & structure of your dataset. What variables does the dataset contain? How are they distributed? Are there any notable data quality issues? Are there any surprising relationships among the variables? Be sure to also perform "sanity checks" for patterns you expect to see!
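You may prefer to do this first pass in Python rather than Tableau. pandas is one of the wrangling tools listed below. A minimal sketch of the overview and data-quality checks might look like this. The small DataFrame, its column names and the -99 "sentinel" value are hypothetical placeholders for your own data.

```python
import pandas as pd

# Hypothetical sample standing in for your dataset
df = pd.DataFrame({
    "station": ["A", "A", "B", "B", "B"],
    "temp_c": [12.5, None, 30.1, 30.1, -99.0],
    "month": [1, 2, 1, 1, 2],
})

def overview(frame: pd.DataFrame) -> pd.DataFrame:
    """Summarize each column: its type, how many values are missing, how many are distinct."""
    return pd.DataFrame({
        "dtype": frame.dtypes.astype(str),
        "missing": frame.isna().sum(),
        "distinct": frame.nunique(),
    })

print(overview(df))
print("duplicate rows:", df.duplicated().sum())
print(df.describe())  # distributions of the numeric columns

# Sanity check: an implausible value such as -99 may be a code for "missing"
suspicious = df[df["temp_c"] < -50]
print(suspicious)
```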

In the second phase, you should investigate your initial questions, as well as any new questions that arise during your exploration. For each question, start by creating a visualization that might provide a useful answer. Then refine the visualization (by adding variables, changing sorting or axis scales, filtering or subsetting data, etc.) to develop better perspectives, explore unexpected observations, or sanity-check your assumptions. Repeat this process for each of your questions, but feel free to revise your questions or branch off to explore new ones if the data warrants it.

Part 3: Final Deliverable

Your final submission should take the form of a Google Docs report – similar to a slide show or comic book – that consists of 10 or more captioned visualizations detailing your most important insights. Your "insights" can include important surprises or issues (such as data quality problems affecting your analysis) as well as responses to your analysis questions. To help you gauge the scope of this assignment, see this example report analyzing data about motion pictures. We've annotated and graded this example to help you calibrate for the breadth and depth of exploration we're looking for.

Each visualization image should be a screenshot exported from a visualization tool. Accompany it with a title and a descriptive caption (1-4 sentences long) describing the insight(s) learned from that view. Give each caption enough detail that anyone could read through your report and understand what you've learned. You are free, but not required, to annotate your images to draw attention to specific features of the data. You may highlight within the visualization tool itself, or draw annotations on the exported image. To export images from Tableau, use the Worksheet > Export > Image... menu item.

The end of your report should include a brief summary of the main lessons learned.

Recommended Data Sources

To get up and running quickly with this assignment, we recommend exploring one of the following provided datasets:

World Bank Indicators, 1960–2017. Since 1960, the World Bank has tracked global human development through indicators such as climate change, economy, education, environment, gender equality, health, and science and technology. The linked repository contains indicators formatted to make them easier to use with Tableau and other data visualization tools. However, you're also welcome to browse and use the original data by indicator or by country. Click on an indicator category or country to download the CSV file.

Chicago Crimes, 2001–present (click Export to download a CSV file). This dataset reflects reported incidents of crime (with the exception of murders where data exists for each victim) that occurred in the City of Chicago from 2001 to present, minus the most recent seven days. Data is extracted from the Chicago Police Department's CLEAR (Citizen Law Enforcement Analysis and Reporting) system.

Daily Weather in the U.S., 2017. This dataset contains daily U.S. weather measurements in 2017, provided by the NOAA Daily Global Historical Climatology Network. This data has been transformed: some weather stations with only sparse measurements have been filtered out. See the accompanying weather.txt for descriptions of each column.

Social mobility in the U.S. Raj Chetty's group at Harvard studies the factors that contribute to (or hinder) upward mobility in the United States (i.e., will our children earn more than we will). Their work has been extensively featured in The New York Times. This page lists data from all of their papers, broken down by geographic level or by topic. We recommend downloading data in the CSV/Excel format, and encourage you to consider joining multiple datasets from the same paper (under the same heading on the page) for a sufficiently rich exploratory process.
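If you join tables in Python, pandas merge handles a shared key. A minimal sketch follows. The column names (region_id, mobility_rate, median_income) and the values are hypothetical placeholders, so check which identifier columns the files you download actually share. Passing indicator=True adds a _merge column, which shows which rows failed to match.

```python
import pandas as pd

# Hypothetical tables from the same paper, sharing a region identifier (placeholder values)
mobility = pd.DataFrame({"region_id": [1, 2, 3], "mobility_rate": [0.12, 0.15, 0.09]})
income = pd.DataFrame({"region_id": [1, 3, 4], "median_income": [65000, 58000, 62000]})

# An outer join keeps every row; _merge records whether a row came from one table or both
joined = mobility.merge(income, on="region_id", how="outer", indicator=True)
print(joined)

# Rows found in only one table deserve a closer look before you plot anything
unmatched = joined[joined["_merge"] != "both"]
print(f"{len(unmatched)} of {len(joined)} rows did not match")
```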

The Yelp Open Dataset provides information about businesses, user reviews, and more from Yelp's database. The data is split into separate files (business, checkin, photos, review, tip, and user), and is available in either JSON or SQL format. You might use this to investigate the distributions of scores on Yelp, look at how many reviews users typically leave, or look for regional trends about restaurants. Note that this is a large, structured dataset and you don't need to look at all of the data to answer interesting questions. In order to download the data you will need to enter your email and agree to Yelp's Dataset License.

Additional Data Sources

If you want to investigate datasets other than those recommended above, here are some possible sources to consider. You are also free to use data from a source different from those included here. If you have any questions on whether your dataset is appropriate, please ask the course staff ASAP!

- data.boston.gov - City of Boston Open Data

- MassData - State of Massachusetts Open Data

- data.gov - U.S. Government Open Datasets

- U.S. Census Bureau - Census Datasets

- IPUMS.org - Integrated Census & Survey Data from around the World

- Federal Election Commission - Campaign Finance & Expenditures

- Federal Aviation Administration - FAA Data & Research

- fivethirtyeight.com - Data and Code behind the Stories and Interactives

- BuzzFeed News

- Socrata Open Data

- 17 places to find datasets for data science projects

Visualization Tools

You are free to use one or more visualization tools in this assignment. However, in the interest of time and for a friendlier learning curve, we strongly encourage you to use Tableau. Tableau provides a graphical interface focused on the task of visual data exploration. You will (with rare exceptions) be able to complete an initial data exploration more quickly and comprehensively than with a programming-based tool.

- Tableau - Desktop visual analysis software. Available for both Windows and MacOS; register for a free student license.

- Data Transforms in Vega-Lite. A tutorial on the various built-in data transformation operators available in Vega-Lite.

- Data Voyager, a research prototype from the UW Interactive Data Lab, combines a Tableau-style interface with visualization recommendations. Use at your own risk!

- R, using the ggplot2 library or with R's built-in plotting functions.

- Jupyter Notebooks (Python), using libraries such as Altair or Matplotlib.

Data Wrangling Tools

The data you choose may require reformatting, transformation or cleaning prior to visualization. Here are tools you can use for data preparation. We recommend first trying to import and process your data in the same tool you intend to use for visualization. If that fails, pick the most appropriate option among the tools below. Contact the course staff if you are unsure what might be the best option for your data!

Graphical Tools

- Tableau Prep - Tableau itself provides basic facilities for data import, transformation & blending; Tableau Prep is a more sophisticated data preparation tool.

- Trifacta Wrangler - Interactive tool for data transformation & visual profiling.

- OpenRefine - A free, open source tool for working with messy data.

Programming Tools

- JavaScript data utilities and/or the Datalib JS library.

- Pandas - Data table and manipulation utilities for Python.

- dplyr - A library for data manipulation in R.

- Or, the programming language and tools of your choice...
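A common preparation step is reshaping a "wide" table into a "long" one. In a wide table each year is its own column, as in many indicator downloads. In a long table there is one row per country and year, which tools such as Tableau and Altair generally handle more easily. Here is a minimal pandas sketch using melt. The column names are hypothetical and the values are placeholders, not real data.

```python
import pandas as pd

# Hypothetical wide table: one column per year (placeholder values)
wide = pd.DataFrame({
    "country": ["Country A", "Country B"],
    "2015": [1.0, 2.0],
    "2016": [1.5, None],
    "2017": [2.0, 3.0],
})

# melt turns the year columns into (year, value) pairs, one row each
tidy = wide.melt(id_vars="country", var_name="year", value_name="value")

# Clean up: store years as integers and drop empty cells
tidy["year"] = tidy["year"].astype(int)
tidy = tidy.dropna(subset=["value"])
print(tidy)
```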

The assignment score is out of a maximum of 10 points. Submissions that squarely meet the requirements will receive a score of 8. We will determine scores by judging the breadth and depth of your analysis, whether visualizations meet the expressiveness and effectiveness principles, and how well-written and synthesized your insights are.

We will use the following rubric to grade your assignment. Note that rubric cells may not map exactly to specific point scores.

Submission Details

This is an individual assignment. You may not work in groups.

Your completed exploratory analysis report is due by noon on Wednesday 2/19. Submit a link to your Google Doc report using this submission form. Please double-check your link to make sure others can view it (e.g., try it in an incognito window).

Resubmissions. Resubmissions will be regraded by teaching staff, and you may earn back up to 50% of the points lost in the original submission. To resubmit this assignment, please use this form and follow the same submission process described above. Include a short, one-paragraph description summarizing the changes from the initial submission. Resubmissions without this summary will not be regraded. Resubmissions will be due by 11:59pm on Saturday, 3/14. Slack days may not be applied to extend the resubmission deadline. The teaching staff will only begin to regrade assignments once the Final Project phase begins, so please be patient.


Python Data Analysis Example: A Step-by-Step Guide for Beginners


Doing real data analysis exercises is a great way to learn. But data analysis is a broad topic, and knowing how to proceed can be half the battle. In this step-by-step guide, we’ll show you a Python data analysis example and demonstrate how to analyze a dataset.

A great way to get practical experience in Python and accelerate your learning is by doing data analysis challenges. This will expose you to several key Python concepts, such as working with different file types, manipulating various data types (e.g. integers and strings), looping, and data visualization. Furthermore, you’ll also learn important data analysis techniques like cleaning data, smoothing noisy data, performing statistical tests and correlation analyses, and more. Along the way, you’ll also learn many built-in functions and Python libraries which make your work easier.

Knowing what steps to take in the data analysis process requires a bit of experience. For those wanting to explore data analysis, this article will show you a step-by-step guide to data analysis using Python. We’ll download a dataset, read it in, and start some exploratory data analysis to understand what we’re working with. Then we’ll be able to choose the best analysis technique to answer some interesting questions about the data.

This article is aimed at budding data analysts who already have a little experience in programming and analysis. If you’re looking for some learning material to get up-to-speed, consider our Introduction to Python for Data Science course, which contains 141 interactive exercises. For more in-depth material, our Python for Data Science track includes 5 interactive courses.

Python for Data Analysis

The process of examining, cleansing, transforming, and modeling data to discover useful information plays a crucial role in business, finance, academia, and other fields. Whether it's understanding customer behavior, optimizing business processes, or making informed decisions, data analysis provides you with the tools to unlock valuable insights from data.

Python has emerged as a preferred tool for data analysis due to its simplicity, versatility, and many open-source libraries. With its intuitive syntax and large online community, Python enables both beginners and experts to perform complex data analysis tasks efficiently. Libraries such as pandas, NumPy, and Matplotlib make this possible by providing essential functionalities for all aspects of the data analysis process.

The pandas library simplifies the process of working with structured data (e.g. tabular data, time series). NumPy, which is used for scientific computing in Python, provides powerful array objects and functions for numerical operations. It is essential for the mathematical computations involved in data analysis. It’s particularly useful for working with Big Data, as it is very efficient. Matplotlib is a comprehensive library for creating visualizations in Python; it facilitates the exploration and communication of data insights.

In the following sections, we’ll leverage these libraries to analyze a real-world dataset and demonstrate the process of going from raw data to useful conclusions.

The Sunspots Dataset

For this Python data analysis example, we’ll be working with the Sunspots dataset, which can be downloaded from Kaggle. The data includes a row number, a date, and an observation of the total number of sunspots for each month from 1749 to 2021.

Sunspots are regions of the sun's photosphere that are temporarily cooler than the surrounding material due to a reduction in convective transport of energy. As such, they appear darker and can be relatively easily observed – which accounts for the impressively long time period of the dataset. Sunspots can last anywhere from a few days to a few months, and have diameters ranging from around 16 km to 160,000 km. They can also be associated with solar flares and coronal mass ejections, which makes understanding them important for life on Earth.

Some interesting questions that could be investigated are:

- What is the period of sunspot activity?

- When can we expect the next peak in solar activity?

Python Data Analysis Example

Step 1: Import Data

Once you have downloaded the Sunspots dataset, the next step is to import the data into Python. There are several ways to do this; the one you choose depends on the format of your data.

If you have data in a text file, you may need to read the data in line-by-line using a for loop. As an example, take a look at how we imported the atmospheric sounding dataset in the article 7 Datasets to Practice Data Analysis in Python.
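Here is a minimal sketch of line-by-line reading. It assumes a hypothetical whitespace-separated text file with a one-line header. The file is simulated with io.StringIO so the example runs on its own, and the column names and values are placeholders.

```python
import io

# Simulated file contents; with a real file, use: with open("data.txt") as raw: ...
raw = io.StringIO(
    "pressure height temperature\n"
    "1000.0 110 15.2\n"
    "925.0 780 10.4\n"
)

header = raw.readline().split()   # the first line holds the column names
rows = []
for line in raw:                  # the loop continues from the second line
    fields = line.split()
    if not fields:                # skip blank lines
        continue
    rows.append([float(value) for value in fields])

print(header)
print(rows)
```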

Alternatively, the data could be in the JSON format. In this case, you can use Python’s json library. This is covered in the How to Read and Write JSON Files in Python course.
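In the standard-library json module, json.load reads from an open file and json.loads parses a string. A minimal sketch, with a hypothetical record layout:

```python
import json

# Hypothetical JSON text; for a file, use: with open("data.json") as f: data = json.load(f)
text = '{"station": "X1", "readings": [{"month": "2017-01", "value": 3.5}, {"month": "2017-02", "value": 4.0}]}'

data = json.loads(text)  # parse into nested dicts and lists
values = [reading["value"] for reading in data["readings"]]
print(data["station"], values)
```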

A common way to store data is in either Excel (.xlsx) or comma-separated-value (.csv) files. In both of these cases, you can read the data directly into a pandas DataFrame. This is a useful way to parse data, since you can directly use many helpful pandas functions to manipulate and process the data. The How to Read and Write CSV Files in Python and How to Read and Write Excel Files in Python courses include interactive exercises to demonstrate this functionality.

Since the Sunspots dataset is in the CSV format, we can read it in using pandas. If you haven’t installed pandas yet, you can do so with a quick command: pip install pandas

Now, you can import the data into a DataFrame:
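Here is a minimal sketch of the import. It assumes the Kaggle file is saved as Sunspots.csv, with an unnamed first column holding the row number followed by the Date and Monthly Mean Total Sunspot Number columns. So the example runs without the download, it reads a few illustrative rows in that layout from a string.

```python
import io
import pandas as pd

# Illustrative rows in the assumed layout of Sunspots.csv
sample_csv = io.StringIO(
    ",Date,Monthly Mean Total Sunspot Number\n"
    "0,1749-01-31,96.7\n"
    "1,1749-02-28,104.3\n"
    "2,1749-03-31,116.7\n"
)

# With the real file: df = pd.read_csv("Sunspots.csv", index_col=0, parse_dates=["Date"])
df = pd.read_csv(sample_csv, index_col=0, parse_dates=["Date"])
print(df)
```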

The read_csv() function automatically parses the data, and it has many arguments for customizing how the data is imported. For example, the index_col argument sets which column to use as the row labels, and the parse_dates argument specifies which columns hold dates. Our DataFrame, called df, stores the monthly sunspot counts in the column Monthly Mean Total Sunspot Number and the observation dates in the column Date.

Step 2: Data Cleaning and Preparation

Cleaning the data involves handling missing values, converting variables into the correct data types, and applying any filters.

If your data has missing values, there are a number of possible ways to handle them. You could simply leave them marked as NaN (not a number). Alternatively, you could do a forward (backward) fill, which copies the previous (next) value into the missing position. Or you could interpolate, using neighboring values to estimate a value for the missing position. The method you choose depends on your use case.
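In pandas, these options correspond to the ffill(), bfill() and interpolate() methods. Here is a small sketch on a hypothetical series with gaps. interpolate() defaults to linear interpolation.

```python
import numpy as np
import pandas as pd

# Hypothetical series with two missing values
s = pd.Series([10.0, np.nan, 14.0, np.nan, 20.0])

filled_forward = s.ffill()        # copy the previous value forward
filled_backward = s.bfill()       # copy the next value backward
interpolated = s.interpolate()    # linear interpolation between neighbours

print(pd.DataFrame({"raw": s, "ffill": filled_forward,
                    "bfill": filled_backward, "interpolate": interpolated}))
```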

You should also check to see that numerical data is stored as a float or integer; if not, you need to convert it to the correct data type. If there are outliers in your data, you may consider removing them so as not to bias your results.
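Here is a sketch of both checks on a hypothetical column that was read in as text. pd.to_numeric with errors="coerce" turns unparseable entries into NaN. The outlier rule used here flags points more than 1.5 interquartile ranges (IQR) beyond the quartiles. That rule is one common convention, not the only one. Inspect flagged values before deleting them, since an extreme value may be genuine.

```python
import pandas as pd

# Hypothetical column read in as strings, including one unparseable entry
raw = pd.Series(["12", "15", "14", "n/a", "13", "90"])

values = pd.to_numeric(raw, errors="coerce")  # "n/a" becomes NaN; the result is numeric
print(values.dtype)

# Flag points more than 1.5 * IQR below the first or above the third quartile
q1, q3 = values.quantile(0.25), values.quantile(0.75)
iqr = q3 - q1
is_outlier = (values < q1 - 1.5 * iqr) | (values > q3 + 1.5 * iqr)
print(values[is_outlier])
```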

Or maybe you’re working with text data and you need to remove punctuation and numbers from your text and convert everything to lowercase. All these considerations fall under the umbrella of data cleaning. For some concrete examples, see our article Python Data Cleaning: A How-to Guide for Beginners.
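Here is a minimal sketch of that kind of text cleaning using only the standard library. Which characters to strip is an assumption, so adapt it to your data.

```python
import string

def clean_text(text: str) -> str:
    """Lowercase the text and remove punctuation and digits."""
    table = str.maketrans("", "", string.punctuation + string.digits)
    cleaned = text.lower().translate(table)
    return " ".join(cleaned.split())  # collapse the gaps left behind into single spaces

print(clean_text("Sunspots peaked in 2014, and again?!"))
```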

Let’s start by getting an overview of our dataset using df.head().

The df.head() function prints the first 5 rows of data. You can see the row number (starting from zero), the date (in yyyy-mm-dd format), and the observation of the number of sunspots for the month. To check the datatypes of the variables, execute the following command:
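Both calls can be tried on a stand-in DataFrame that uses the assumed Sunspots column names and illustrative values. With the real data, df is the DataFrame returned by read_csv.

```python
import pandas as pd

# Stand-in for the real DataFrame, using the assumed column names
df = pd.DataFrame({
    "Date": pd.to_datetime(["1749-01-31", "1749-02-28", "1749-03-31",
                            "1749-04-30", "1749-05-31", "1749-06-30"]),
    "Monthly Mean Total Sunspot Number": [96.7, 104.3, 116.7, 92.8, 141.7, 139.2],
})

print(df.head())   # the first 5 rows by default
print(df.dtypes)   # Date has a datetime64 type; the sunspot column is float64
```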

The date has the datatype datetime64, which is used to store dates in pandas, and the number of sunspots variable is a float.

Next, here's how to check if there are any missing data points in the Monthly Mean Total Sunspot Number variable:
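With the real data, the check is df["Monthly Mean Total Sunspot Number"].isna().any(). The sketch below runs it on a stand-in series of illustrative values, and on a second series that does contain a gap.

```python
import numpy as np
import pandas as pd

# Stand-in for df["Monthly Mean Total Sunspot Number"]
sunspots = pd.Series([96.7, 104.3, 116.7, 92.8], name="Monthly Mean Total Sunspot Number")

# isna() returns a boolean Series; any() is True if at least one value is missing
has_missing = sunspots.isna().any()
print(has_missing)

# The same check on a series that does have a gap
print(pd.Series([1.0, np.nan]).isna().any())
```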

This uses the pandas isna() method, which checks each value to see whether it is missing. It returns a Series of booleans: True if a value is missing, False if not. Then, we use the any() method to check whether any of the booleans are True. This returns False, which indicates there are no missing values in our data. You can find more details about this important step in The Most Helpful Python Data Cleaning Modules.

Step 3: Exploratory Data Analysis

The next stage is to start analyzing your data by calculating summary statistics, plotting histograms and scatter plots, or performing statistical tests. The goal is to gain a better understanding of the variables, and then use this understanding to guide the rest of the analysis. After performing exploratory data analysis, you will have a better understanding of what your data looks like and how to use it to answer questions. Our article Python Exploratory Data Analysis Cheat Sheet contains many more details, examples, and ideas about how to proceed.

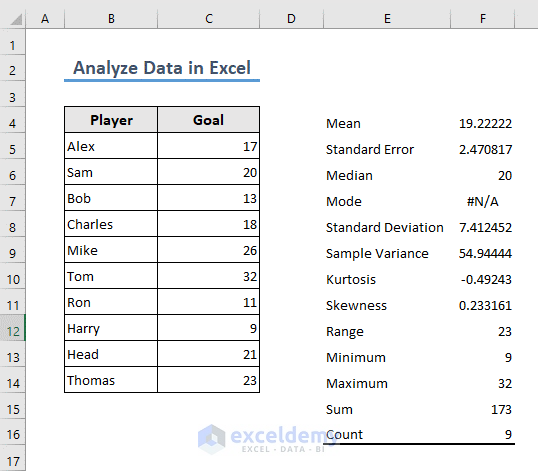

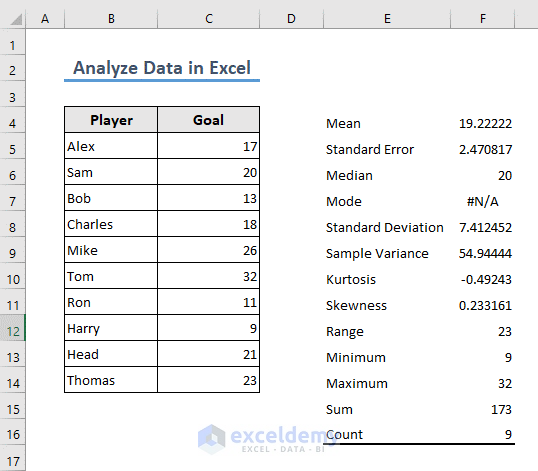

A good starting point is to do a basic statistical analysis to determine the mean, median, standard deviation, etc. This can easily be achieved by using the df.describe() function:
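Here is a sketch of this step on a synthetic stand-in series. The values are right-skewed numbers generated from a gamma distribution with NumPy, not the real sunspot counts. The sketch also compares the mean and median directly and computes the sample skewness.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in: gamma-distributed values are non-negative and right-skewed
rng = np.random.default_rng(seed=0)
sunspots = pd.Series(rng.gamma(shape=1.5, scale=50.0, size=3265),
                     name="Monthly Mean Total Sunspot Number")

summary = sunspots.describe()  # count, mean, std, min, quartiles, max
print(summary)

# A median (the 50% row) below the mean hints at a long right tail
print("median < mean:", summary["50%"] < summary["mean"])
print("skewness:", sunspots.skew())
```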

We have a total of 3,265 observations and a mean of over 81 sunspots per month. The minimum is zero and the maximum is 398. This gives us an idea of the range of typical values. The standard deviation is about 67, which gives us an idea about how much the number of sunspots varies.

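Notice the 50th percentile (the median) is less than the mean. This implies the distribution is right-skewed: most months have relatively few sunspots, while a tail of high-activity months pulls the mean upward. This is very useful information if we want to do more advanced statistics, since some tests assume a normal distribution.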

We can confirm this by plotting a histogram of the number of sunspots per month. Visualization is an important skill in Python data analysis. Check out our article The Top 5 Python Libraries for Data Visualization. For our purposes, we’ll use matplotlib. This too can easily be installed with a quick pip install command. The code to plot a histogram looks like this:
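Here is a minimal Matplotlib sketch. It uses a synthetic right-skewed stand-in rather than the real counts. With the real data, you would pass df["Monthly Mean Total Sunspot Number"] to ax.hist. The bin count of 40 is an arbitrary choice.

```python
import matplotlib.pyplot as plt
import numpy as np

# Synthetic stand-in for the monthly sunspot counts (not real data)
rng = np.random.default_rng(seed=0)
counts = rng.gamma(shape=1.5, scale=50.0, size=3265)

fig, ax = plt.subplots(figsize=(8, 4))
n, bin_edges, patches = ax.hist(counts, bins=40, edgecolor="black")
ax.set_xlabel("Monthly mean total sunspot number")
ax.set_ylabel("Number of months")
ax.set_title("Distribution of monthly sunspot numbers")
plt.show()
```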

Now we can see the most common value is less than 20 sunspots for the month, and numbers above 200 are quite rare. Finally, let’s plot the time series to see the full dataset:
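Here is a sketch of the time series plot. It uses a synthetic stand-in made of an assumed 11-year sine cycle plus noise, sampled monthly. This is not the real data. With the real DataFrame, you would call ax.plot(df["Date"], df["Monthly Mean Total Sunspot Number"]).

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

# Synthetic stand-in: an 11-year cycle plus noise, one value per month (not real data)
n_months = 3265
dates = pd.date_range("1749-01-01", periods=n_months, freq="MS")
rng = np.random.default_rng(seed=1)
months = np.arange(n_months)
values = 80 + 70 * np.sin(2 * np.pi * months / (11 * 12)) + rng.normal(0, 15, size=n_months)
series = pd.Series(np.clip(values, 0, None), index=dates)  # counts cannot be negative

fig, ax = plt.subplots(figsize=(12, 4))
ax.plot(series.index, series.values, linewidth=0.6)
ax.set_xlabel("Date")
ax.set_ylabel("Monthly mean total sunspot number")
ax.set_title("Monthly sunspot number over time")
plt.show()
```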

We can see from the above plot there is a periodic increase and decrease in the number of sunspots. It looks like the maximum occurs roughly every 9 – 12 years. A natural question arises as to exactly how long that period is.

Signal processing is a detailed topic, so we’ll skim over some of the hairy details. To keep it simple, we need to decompose the above signal into a frequency spectrum, then find the dominant frequency. From this we can then compute the period. To compute the frequency spectrum, the Fourier Transform can be used, which is implemented in NumPy:
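Here is a sketch of the transform using np.fft.fft and np.fft.fftfreq. It runs on a clean synthetic 11-year cycle rather than the real series. With the real data, signal would be df["Monthly Mean Total Sunspot Number"].to_numpy(). The sample spacing d=1/12 expresses one month in years, so the frequencies come out in cycles per year. The largest magnitude sits in the zero-frequency bin, which reflects the signal's mean. That bin is one of the unwanted peaks at the start of the array.

```python
import numpy as np

# Synthetic stand-in: a clean 11-year cycle sampled monthly (not real sunspot data)
n_months = 3265
months = np.arange(n_months)
signal = 80 + 60 * np.sin(2 * np.pi * months / (11 * 12))

# Fourier transform of the series and the frequency that each bin represents
fft_result = np.fft.fft(signal)
frequencies = np.fft.fftfreq(n_months, d=1 / 12)  # cycles per year
magnitude = np.abs(fft_result)

print(frequencies[:5])
print(magnitude[:5])
```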

Try plotting the frequency spectrum and you’ll notice many peaks. One of those hairy details of signal processing is the presence of peaks at the start and end of the array np.abs(fft_result). We can see from the time series we plotted above the period should be somewhere between 9 – 12 years, so we can safely exclude these peaks by slicing the magnitude array to filter out unwanted frequencies:
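Here is a sketch of the filtering and peak search, again on the synthetic 11-year stand-in so it runs on its own. Instead of hard-coding slice indices, it uses a boolean mask over the frequency array, which has the same effect. The mask keeps only positive frequencies whose period falls between 5 and 20 years. Those bounds are an assumption chosen to bracket the 9 – 12 year cycle seen in the plot.

```python
import numpy as np

# Synthetic stand-in: a clean 11-year cycle sampled monthly (not real data)
n_months = 3265
months = np.arange(n_months)
signal = 80 + 60 * np.sin(2 * np.pi * months / (11 * 12))

fft_result = np.fft.fft(signal)
frequencies = np.fft.fftfreq(n_months, d=1 / 12)  # cycles per year
magnitude = np.abs(fft_result)

# Keep positive frequencies whose period lies between 5 and 20 years (assumed bounds)
band = (frequencies > 1 / 20) & (frequencies < 1 / 5)
peak_index = np.argmax(magnitude[band])          # strongest bin inside the band
dominant_frequency = frequencies[band][peak_index]
period_years = 1 / dominant_frequency

print(f"Dominant period: {period_years:.1f} years")
```

The recovered period is only approximately 11 years. That is because the spectrum is sampled in steps of 1/(series length in years) cycles per year.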

The output is a dominant period of roughly 11 years.

We used NumPy’s argmax() function to find the index of the largest magnitude in the filtered spectrum, used this index to look up the dominant frequency, and then converted that frequency to a period. Finally, we printed the result as a period in years.

This is a great example of using the understanding gained from exploratory data analysis to inform our data processing so we get a result that makes sense.

Step 4: Drawing Conclusions from Data

We learned that the average number of sunspots per month is around 81, but the distribution is strongly right-skewed. Indeed, the most common number of sunspots per month is less than 20, but in periods of high solar activity (at or above the 75th percentile), there can be over 120.

By plotting the time series, we could see the signal is periodic, with a regular maximum and minimum in the number of sunspots. Through some signal processing, we determined that a maximum occurs about every 11 years. From the time series plot we can see the last maximum was around 2014, meaning the next should be around 2025.

Further Python Data Analysis Examples

Working with the Sunspots dataset presents some unique advantages – e.g. it’s not a common dataset. We discuss this in our article 11 Tips for Building a Strong Data Science Portfolio with Python . This example of Python data analysis can also teach us a lot about programming in Python. We learnt how to read data into a pandas DataFrame and summarize our data using built-in functions. We did some plotting with Matplotlib and got a taste of signal processing with NumPy. We also did a little array slicing to get results that make sense. You’ll learn many of these important topics in the Introduction to Python for Data Science course and the Python for Data Science track.

We just scratched the surface of this analysis of sunspot data in Python. There are many more interesting questions which could be answered. For example, is there a trend in the number of sunspots over the 272 years of data? How long does the maximum last? How many sunspots should there be during our predicted next maximum? These questions can all be answered with Python.

There’s always more to learn on your Python data analysis journey, and books are a great resource. Our article The Best Python Books for Data Science has some great suggestions for your next trip to a bookstore. All the suggestions there will give you the tools to delve deeper into Python and data analysis techniques. Then, it’s a matter of practicing what you learn by starting a new data science project. Here are some Python Data Science Project Ideas . Happy coding!



Introduction to Data Analytics

This course is part of multiple programs.

Taught in English

Some content may not be translated

Instructor: Rav Ahuja

Financial aid available

515,636 already enrolled (14,880 reviews)

Recommended experience

Beginner level

All you need to get started is basic computer literacy, high school level math, and access to a modern web browser such as Chrome or Firefox.

What you'll learn

Explain what Data Analytics is and the key steps in the Data Analytics process

Differentiate between different data roles such as Data Engineer, Data Analyst, Data Scientist, Business Analyst, and Business Intelligence Analyst

Describe the different types of data structures, file formats, and sources of data

Describe the data analysis process involving collecting, wrangling, mining, and visualizing data

Skills you'll gain

- Data Science

- Spreadsheet

- Data Analysis

- Microsoft Excel

- Data Visualization


Build your subject-matter expertise

- Learn new concepts from industry experts

- Gain a foundational understanding of a subject or tool

- Develop job-relevant skills with hands-on projects

- Earn a shareable career certificate

Earn a career certificate

Add this credential to your LinkedIn profile, resume, or CV

Share it on social media and in your performance review

There are 5 modules in this course

Ready to start a career in Data Analysis but don’t know where to begin? This course presents you with a gentle introduction to Data Analysis, the role of a Data Analyst, and the tools used in this job. You will learn about the skills and responsibilities of a data analyst and hear from several data experts sharing their tips & advice to start a career. This course will help you to differentiate between the roles of Data Analysts, Data Scientists, and Data Engineers.

You will familiarize yourself with the data ecosystem, alongside Databases, Data Warehouses, Data Marts, Data Lakes and Data Pipelines. Continue this exciting journey and discover Big Data platforms such as Hadoop, Hive, and Spark. By the end of this course you’ll understand the fundamentals of the data analysis process, including gathering, cleaning, analyzing and sharing data, and communicating your insights using visualizations and dashboard tools. This all comes together in the final project, which tests your knowledge of the course material and provides a real-world scenario of data analysis tasks. This course does not require any prior data analysis, spreadsheet, or computer science experience.

What is Data Analytics

In this module, you will learn about the different types of data analysis and the key steps in a data analysis process. You will gain an understanding of the different components of a modern data ecosystem, and the role Data Engineers, Data Analysts, Data Scientists, Business Analysts, and Business Intelligence Analysts play in this ecosystem. You will also learn about the role, responsibilities, and skillsets required to be a Data Analyst, and what a typical day in the life of a Data Analyst looks like.

What's included

9 videos 3 readings 4 quizzes 1 discussion prompt

9 videos • Total 39 minutes

- Course Introduction • 2 minutes • Preview module

- Modern Data Ecosystem • 4 minutes

- Key Players in the Data Ecosystem • 5 minutes

- Defining Data Analysis • 5 minutes

- Viewpoints: What is Data Analytics? • 3 minutes

- Responsibilities of a Data Analyst • 4 minutes

- Viewpoints: Qualities and Skills to be a Data Analyst • 4 minutes

- A Day in the Life of a Data Analyst • 5 minutes

- Viewpoints: Applications of Data Analytics • 2 minutes

3 readings • Total 22 minutes

- Data Analytics vs. Data Analysis • 2 minutes

- Summary and Highlights • 10 minutes

4 quizzes • Total 45 minutes

- Practice Quiz • 9 minutes

- Practice Quiz • 6 minutes

- Graded Quiz • 15 minutes

1 discussion prompt • Total 5 minutes

- Introduce yourself • 5 minutes

The Data Ecosystem

In this module, you will learn about the different types of data structures, file formats, sources of data, and the languages data professionals use in their day-to-day tasks. You will gain an understanding of various types of data repositories such as Databases, Data Warehouses, Data Marts, Data Lakes, and Data Pipelines. In addition, you will learn about the Extract, Transform, and Load (ETL) process, which extracts data from its sources, transforms it, and loads it into data repositories. You will gain a basic understanding of Big Data and Big Data processing tools such as Hadoop, Hadoop Distributed File System (HDFS), Hive, and Spark.

11 videos 2 readings 4 quizzes

11 videos • Total 67 minutes

- Overview of the Data Analyst Ecosystem • 3 minutes • Preview module

- Types of Data • 4 minutes

- Understanding Different Types of File Formats • 4 minutes

- Sources of Data • 7 minutes

- Languages for Data Professionals • 8 minutes

- Overview of Data Repositories • 4 minutes

- RDBMS • 7 minutes

- NoSQL • 7 minutes

- Data Marts, Data Lakes, ETL, and Data Pipelines • 6 minutes

- Foundations of Big Data • 5 minutes

- Big Data Processing Tools • 6 minutes

2 readings • Total 20 minutes

4 quizzes • Total 66 minutes

- Practice Quiz • 15 minutes

- Practice Quiz • 18 minutes

- Graded Quiz • 18 minutes

Gathering and Wrangling Data

In this module, you will learn about the process and steps involved in identifying, gathering, and importing data from disparate sources. You will learn about the tasks involved in wrangling and cleaning data in order to make it ready for analysis. In addition, you will gain an understanding of the different tools that can be used for gathering, importing, wrangling, and cleaning data, along with some of their characteristics, strengths, limitations, and applications.

7 videos 2 readings 4 quizzes

7 videos • Total 39 minutes

- Identifying Data for Analysis • 5 minutes • Preview module

- Data Sources • 4 minutes

- How to Gather and Import Data • 6 minutes

- What is Data Wrangling? • 6 minutes

- Tools for Data Wrangling • 5 minutes

- Data Cleaning • 6 minutes

- Viewpoints: Data Preparation and Reliability • 4 minutes

4 quizzes • Total 48 minutes

Mining & Visualizing Data and Communicating Results

In this module, you will learn about the role of Statistical Analysis in mining and visualizing data. You will learn about the various statistical and analytical tools and techniques you can use in order to gain a deeper understanding of your data. These tools help you to understand the patterns, trends, and correlations that exist in data. In addition, you will learn about the various types of data visualizations that can help you communicate and tell a compelling story with your data. You will also gain an understanding of the different tools that can be used for mining and visualizing data, along with some of their characteristics, strengths, limitations, and applications.

8 videos 2 readings 4 quizzes

8 videos • Total 44 minutes

- Overview of Statistical Analysis • 8 minutes • Preview module

- What is Data Mining? • 5 minutes

- Tools for Data Mining • 6 minutes

- Overview of Communicating and Sharing Data Analysis Findings • 5 minutes

- Viewpoints: Storytelling in Data Analysis • 3 minutes

- Introduction to Data Visualization • 5 minutes

- Introduction to Visualization and Dashboarding Software • 7 minutes

- Viewpoints: Visualization Tools • 3 minutes

Career Opportunities and Data Analysis in Action

In this module, you will learn about the different career opportunities in the field of Data Analysis and the different paths that you can take for getting skilled as a Data Analyst. At the end of the module, you will demonstrate your understanding of some of the basic tasks involved in gathering, wrangling, mining, analyzing, and visualizing data.

7 videos 4 readings 2 quizzes 1 peer review

7 videos • Total 28 minutes

- Career Opportunities in Data Analysis • 5 minutes • Preview module

- Viewpoints: Get into Data Profession • 3 minutes

- Viewpoints: What do Employers look for in a Data Analyst? • 5 minutes

- The Many Paths to Data Analysis • 4 minutes

- Viewpoints: Career Options for Data Professionals • 3 minutes

- Viewpoints: Advice for aspiring Data Analysts • 3 minutes

- Viewpoints: Women in Data Professions • 3 minutes

4 readings • Total 32 minutes

- Using Data Analysis for Detecting Credit Card Fraud • 10 minutes

- Congratulations and Next Steps • 2 minutes

- Course Credits and Acknowledgements • 10 minutes

2 quizzes • Total 21 minutes

1 peer review • Total 60 minutes

- Peer-Graded Final Assignment • 60 minutes


IBM is the global leader in business transformation through an open hybrid cloud platform and AI, serving clients in more than 170 countries around the world. Today 47 of the Fortune 50 Companies rely on the IBM Cloud to run their business, and IBM Watson enterprise AI is hard at work in more than 30,000 engagements. IBM is also one of the world’s most vital corporate research organizations, with 28 consecutive years of patent leadership. Above all, guided by principles for trust and transparency and support for a more inclusive society, IBM is committed to being a responsible technology innovator and a force for good in the world. For more information about IBM visit: www.ibm.com


Learner reviews


Reviewed on Sep 3, 2022

Good informative course, could be a little more interactive. While each section had a quick test at the end, it would've been nice to have had more engaging questions and activities throughout.

Reviewed on May 11, 2021

I must say as a Coursera learner, this course is unmatched in its rigor, vividness of concepts and precision of demonstration and layout. Thanks to all those who put this piece of art together.

Reviewed on Mar 13, 2021

Great general and broad information on data analytics. Gives good ideas and examples of career paths that can be followed. I especially liked how it ranked the various careers and specializations.


Frequently asked questions

When will I have access to the lectures and assignments?

Access to lectures and assignments depends on your type of enrollment. If you take a course in audit mode, you will be able to see most course materials for free. To access graded assignments and to earn a Certificate, you will need to purchase the Certificate experience, during or after your audit. If you don't see the audit option:

The course may not offer an audit option. You can try a Free Trial instead, or apply for Financial Aid.

The course may offer 'Full Course, No Certificate' instead. This option lets you see all course materials, submit required assessments, and get a final grade. This also means that you will not be able to purchase a Certificate experience.

What will I get if I subscribe to this Certificate?

When you enroll in the course, you get access to all of the courses in the Certificate, and you earn a certificate when you complete the work. Your electronic Certificate will be added to your Accomplishments page - from there, you can print your Certificate or add it to your LinkedIn profile. If you only want to read and view the course content, you can audit the course for free.

What is the refund policy?

If you subscribed, you get a 7-day free trial during which you can cancel at no penalty. After that, we don’t give refunds, but you can cancel your subscription at any time. See our full refund policy.

A Step-by-Step Guide to the Data Analysis Process

Like any scientific discipline, data analysis follows a rigorous step-by-step process. Each stage requires different skills and know-how. To get meaningful insights, though, it’s important to understand the process as a whole. An underlying framework is invaluable for producing results that stand up to scrutiny.

In this post, we’ll explore the main steps in the data analysis process. This will cover how to define your goal, collect data, and carry out an analysis. Where applicable, we’ll also use examples and highlight a few tools to make the journey easier. When you’re done, you’ll have a much better understanding of the basics. This will help you tweak the process to fit your own needs.

Here are the steps we’ll take you through:

- Defining the question

- Collecting the data

- Cleaning the data

- Analyzing the data

- Sharing your results

- Embracing failure


Ready? Let’s get started with step one.

1. Step one: Defining the question

The first step in any data analysis process is to define your objective. In data analytics jargon, this is sometimes called the ‘problem statement’.

Defining your objective means coming up with a hypothesis and figuring out how to test it. Start by asking: What business problem am I trying to solve? While this might sound straightforward, it can be trickier than it seems. For instance, your organization’s senior management might pose an issue, such as: “Why are we losing customers?” It’s possible, though, that this doesn’t get to the core of the problem. A data analyst’s job is to understand the business and its goals in enough depth that they can frame the problem the right way.

Let’s say you work for a fictional company called TopNotch Learning. TopNotch creates custom training software for its clients. While it is excellent at securing new clients, it has much lower repeat business. As such, your question might not be, “Why are we losing customers?” but, “Which factors are negatively impacting the customer experience?” or better yet: “How can we boost customer retention while minimizing costs?”

Now that you’ve defined a problem, you need to determine which sources of data will best help you solve it. This is where your business acumen comes in again. For instance, perhaps you’ve noticed that the sales process for new clients is very slick, but that the production team is inefficient. Knowing this, you could hypothesize that the sales process wins lots of new clients, but the subsequent customer experience is lacking. Could this be why customers don’t come back? Which sources of data will help you answer this question?

Tools to help define your objective

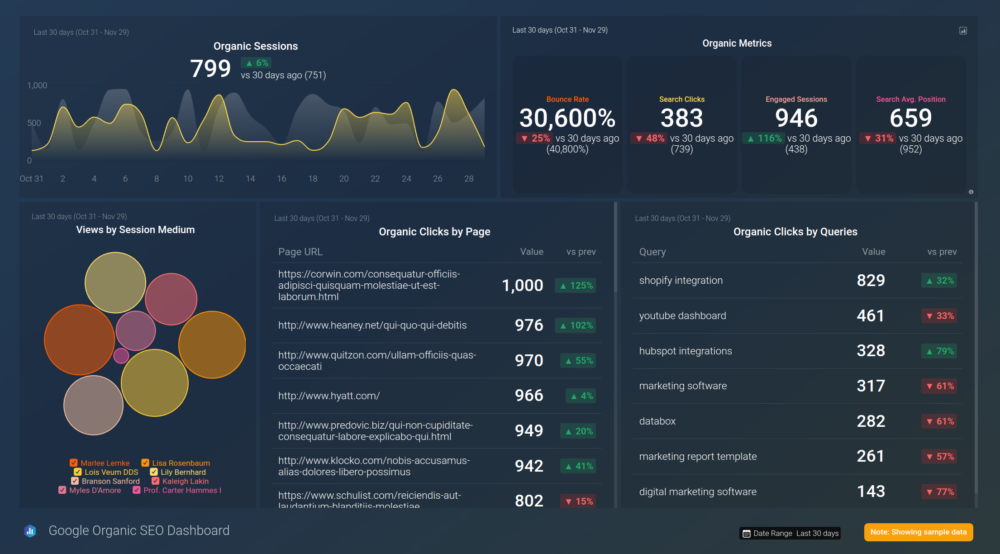

Defining your objective is mostly about soft skills, business knowledge, and lateral thinking. But you’ll also need to keep track of business metrics and key performance indicators (KPIs). Monthly reports can allow you to track problem points in the business. Some KPI dashboards come with a fee, like Databox and DashThis. However, you’ll also find open-source software like Grafana, Freeboard, and Dashbuilder. These are great for producing simple dashboards, both at the beginning and the end of the data analysis process.

2. Step two: Collecting the data

Once you’ve established your objective, you’ll need to create a strategy for collecting and aggregating the appropriate data. A key part of this is determining which data you need. This might be quantitative (numeric) data, e.g. sales figures, or qualitative (descriptive) data, such as customer reviews. All data fit into one of three categories: first-party, second-party, and third-party data. Let’s explore each one.

What is first-party data?

First-party data is data that you, or your company, have collected directly from customers. It might come in the form of transactional tracking data or information from your company’s customer relationship management (CRM) system. Whatever its source, first-party data is usually structured and organized in a clear, defined way. Other sources of first-party data might include customer satisfaction surveys, focus groups, interviews, or direct observation.

What is second-party data?

To enrich your analysis, you might want to secure a secondary data source. Second-party data is the first-party data of other organizations. This might be available directly from the company or through a private marketplace. The main benefit of second-party data is that it is usually structured, and although it will be less relevant than first-party data, it also tends to be quite reliable. Examples of second-party data include website, app, or social media activity, like online purchase histories, or shipping data.

What is third-party data?

Third-party data is data that has been collected and aggregated from numerous sources by a third-party organization. Often (though not always) third-party data contains a vast amount of unstructured data points (big data). Many organizations collect big data to create industry reports or to conduct market research. The research and advisory firm Gartner is a good real-world example of an organization that collects big data and sells it on to other companies. Open data repositories and government portals are also sources of third-party data.

Tools to help you collect data

Once you’ve devised a data strategy (i.e. you’ve identified which data you need, and how best to go about collecting them) there are many tools you can use to help you. One thing you’ll need, regardless of industry or area of expertise, is a data management platform (DMP). A DMP is a piece of software that allows you to identify and aggregate data from numerous sources, before manipulating them, segmenting them, and so on. There are many DMPs available. Some well-known enterprise DMPs include Salesforce DMP, SAS, and the data integration platform, Xplenty. If you want to play around, you can also try some open-source platforms like Pimcore or D:Swarm.


3. Step three: Cleaning the data

Once you’ve collected your data, the next step is to get it ready for analysis. This means cleaning, or ‘scrubbing’ it, and is crucial in making sure that you’re working with high-quality data. Key data cleaning tasks include:

- Removing major errors, duplicates, and outliers: all of which are inevitable problems when aggregating data from numerous sources.

- Removing unwanted data points: stripping out irrelevant observations that have no bearing on your intended analysis.

- Bringing structure to your data: general ‘housekeeping’, i.e. fixing typos or layout issues, which will help you map and manipulate your data more easily.

- Filling in major gaps: as you’re tidying up, you might notice that important data are missing. Once you’ve identified gaps, you can go about filling them.
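The cleaning tasks above can be sketched in pandas. This is a minimal illustration, assuming a small hypothetical table of client records merged from two sources (the column names and values are made up); it covers duplicates, irrelevant columns, text housekeeping, and gap filling, but not outlier handling.

```python
import numpy as np
import pandas as pd

# Hypothetical raw client records merged from two sources (illustrative only).
raw = pd.DataFrame({
    "client": ["Acme ", "acme", "Beta Ltd", "Gamma", "Gamma"],
    "sector": ["Retail", "Retail", "Finance", None, None],
    "fee": [1200.0, 1200.0, np.nan, 800.0, 800.0],
    "internal_note": ["x", "x", "y", "z", "z"],
})

clean = (
    raw
    # Remove a column with no bearing on the intended analysis.
    .drop(columns=["internal_note"])
    # Housekeeping: trim whitespace and normalize case so "Acme " and "acme" match.
    .assign(client=lambda d: d["client"].str.strip().str.title())
    # Remove exact duplicate rows (pandas treats missing values as equal here).
    .drop_duplicates()
    .reset_index(drop=True)
)

# Fill gaps: median for the numeric column, an explicit label for the category.
clean["fee"] = clean["fee"].fillna(clean["fee"].median())
clean["sector"] = clean["sector"].fillna("Unknown")
print(clean)
```

Filling with a median or an "Unknown" label is only one choice; whether a gap should be filled, dropped, or sourced again depends on the analysis.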

Data analysts often spend a large share of their time (by some estimates 70-90%) cleaning data. This might sound excessive. But focusing on the wrong data points (or analyzing erroneous data) will severely impact your results. It might even send you back to square one…so don’t rush it! You may also be interested in an introductory tutorial on data cleaning hosted by Dr. Humera Noor Minhas.

Carrying out an exploratory analysis

Another thing many data analysts do (alongside cleaning data) is to carry out an exploratory analysis. This helps identify initial trends and characteristics, and can even refine your hypothesis. Let’s use our fictional learning company as an example again. Carrying out an exploratory analysis, perhaps you notice a correlation between how much TopNotch Learning’s clients pay and how quickly they move on to new suppliers. This might suggest that a low-quality customer experience (the assumption in your initial hypothesis) is actually less of an issue than cost. You might, therefore, take this into account.
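As a rough sketch of that exploratory check, the snippet below computes a Pearson correlation between what clients pay and how long they stay. The column names and numbers are hypothetical, invented for TopNotch Learning purely for illustration; they are not results.

```python
import pandas as pd

# Hypothetical TopNotch Learning client data (illustrative numbers only).
clients = pd.DataFrame({
    "annual_fee": [10_000, 20_000, 30_000, 40_000, 50_000],
    "months_retained": [24, 20, 15, 10, 6],
})

# Pearson correlation between what clients pay and how long they stay.
r = clients["annual_fee"].corr(clients["months_retained"])
print(f"correlation: {r:.2f}")
```

A strongly negative value here would only suggest a relationship worth investigating; correlation on its own does not show that price causes churn.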

Tools to help you clean your data

Cleaning datasets manually, especially large ones, can be daunting. Luckily, there are many tools available to streamline the process. Open-source tools such as OpenRefine are excellent for basic data cleaning, as well as high-level exploration. However, point-and-click tools like these offer limited functionality for very large datasets. Python libraries (e.g. pandas) and some R packages are better suited for heavy data scrubbing, although you will, of course, need to be familiar with the languages. Alternatively, enterprise tools are also available. For example, Data Ladder is one of the highest-rated data-matching tools in the industry. There are many more. Why not see which free data cleaning tools you can find to play around with?

4. Step four: Analyzing the data

Finally, you’ve cleaned your data. Now comes the fun bit—analyzing it! The type of data analysis you carry out largely depends on what your goal is. But there are many techniques available. Univariate or bivariate analysis, time-series analysis, and regression analysis are just a few you might have heard of. More important than the different types, though, is how you apply them. This depends on what insights you’re hoping to gain. Broadly speaking, all types of data analysis fit into one of the following four categories.

Descriptive analysis

Descriptive analysis identifies what has already happened. It is a common first step that companies carry out before proceeding with deeper explorations. As an example, let’s refer back to our fictional learning provider once more. TopNotch Learning might use descriptive analytics to analyze course completion rates for their customers. Or they might identify how many users access their products during a particular period. Perhaps they’ll use it to measure sales figures over the last five years. While the company might not draw firm conclusions from any of these insights, summarizing and describing the data will help them to determine how to proceed.
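A descriptive summary such as a completion rate per customer can be a one-line aggregation. This sketch assumes a hypothetical enrollment table with a boolean `completed` column; the data is illustrative only.

```python
import pandas as pd

# Hypothetical enrollment records for TopNotch Learning (illustrative only).
enrollments = pd.DataFrame({
    "customer": ["A", "A", "B", "B", "C"],
    "completed": [True, False, True, True, False],
})

# The mean of a boolean column is the share of True values, i.e. the completion rate.
completion_rate = enrollments.groupby("customer")["completed"].mean()
print(completion_rate)
```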


Diagnostic analysis

Diagnostic analytics focuses on understanding why something has happened. It is literally the diagnosis of a problem, just as a doctor uses a patient’s symptoms to diagnose a disease. Remember TopNotch Learning’s business problem? ‘Which factors are negatively impacting the customer experience?’ A diagnostic analysis would help answer this. For instance, it could help the company draw correlations between the issue (struggling to gain repeat business) and factors that might be causing it (e.g. project costs, speed of delivery, customer sector, etc.). Let’s imagine that, using diagnostic analytics, TopNotch realizes its clients in the retail sector are departing at a faster rate than other clients. This might suggest that they’re losing customers because they lack expertise in this sector. And that’s a useful insight!
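One way to sketch the retail-sector check is to compare churn rates across sectors. The table and its `returned` flag are hypothetical, made up for this illustration.

```python
import pandas as pd

# Hypothetical client list: did each client come back for another project? (illustrative)
clients = pd.DataFrame({
    "sector": ["Retail", "Retail", "Retail", "Finance", "Finance", "Health", "Health"],
    "returned": [False, False, True, True, True, True, False],
})

# Churn rate per sector = share of clients who did not return, highest first.
churn_by_sector = (
    (~clients["returned"])
    .groupby(clients["sector"])
    .mean()
    .sort_values(ascending=False)
)
print(churn_by_sector)
```

With real data, small groups can produce extreme rates by chance, so group sizes are worth reporting alongside the rates.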

Predictive analysis

Predictive analysis allows you to identify future trends based on historical data. In business, predictive analysis is commonly used to forecast future growth, for example. But it doesn’t stop there. Predictive analysis has grown increasingly sophisticated in recent years. The speedy evolution of machine learning allows organizations to make surprisingly accurate forecasts. Take the insurance industry. Insurance providers commonly use past data to predict which customer groups are more likely to get into accidents. As a result, they’ll hike up customer insurance premiums for those groups. Likewise, the retail industry often uses transaction data to predict where future trends lie, or to determine seasonal buying habits to inform their strategies. These are just a few simple examples, but the untapped potential of predictive analysis is pretty compelling.

Prescriptive analysis

Prescriptive analysis allows you to make recommendations for the future. This is the final step in the analytics part of the process. It’s also the most complex, because it incorporates aspects of all the other analyses we’ve described. A great example of prescriptive analytics is the algorithms that guide self-driving cars, such as those that began as Google’s self-driving car project (now Waymo). Every second, these algorithms make countless decisions based on past and present data, with the aim of ensuring a smooth, safe ride. Prescriptive analytics also helps companies decide on new products or areas of business to invest in.


5. Step five: Sharing your results

You’ve finished carrying out your analyses. You have your insights. The final step of the data analytics process is to share these insights with the wider world (or at least with your organization’s stakeholders!) This is more complex than simply sharing the raw results of your work—it involves interpreting the outcomes, and presenting them in a manner that’s digestible for all types of audiences. Since you’ll often present information to decision-makers, it’s very important that the insights you present are 100% clear and unambiguous. For this reason, data analysts commonly use reports, dashboards, and interactive visualizations to support their findings.

How you interpret and present results will often influence the direction of a business. Depending on what you share, your organization might decide to restructure, to launch a high-risk product, or even to close an entire division. That’s why it’s very important to provide all the evidence that you’ve gathered, and not to cherry-pick data. Covering everything in a clear, concise way will help show that your conclusions are sound and based on the facts. On the flip side, it’s important to highlight any gaps in the data or to flag any insights that might be open to interpretation. Honest communication is the most important part of the process. It will help the business, while also helping you to excel at your job!

Tools for interpreting and sharing your findings

There are tons of data visualization tools available, suited to different experience levels. Popular tools requiring little or no coding skills include Google Charts, Tableau, Datawrapper, and Infogram. If you’re familiar with Python or R, there are also many data visualization libraries and packages available. For instance, check out the Python libraries Plotly, Seaborn, and Matplotlib. Whichever data visualization tools you use, make sure you polish up your presentation skills, too. Remember: Visualization is great, but communication is key!


6. Step six: Embrace your failures

The last ‘step’ in the data analytics process is to embrace your failures. The path we’ve described above is more of an iterative process than a one-way street. Data analytics is inherently messy, and the process you follow will be different for every project. For instance, while cleaning data, you might spot patterns that spark a whole new set of questions. This could send you back to step one (to redefine your objective). Equally, an exploratory analysis might highlight a set of data points you’d never considered using before. Or maybe you find that the results of your core analyses are misleading or erroneous. This might be caused by mistakes in the data, or human error earlier in the process.

While these pitfalls can feel like failures, don’t be disheartened if they happen. Data analysis is inherently chaotic, and mistakes occur. What’s important is to hone your ability to spot and rectify errors. If data analytics were straightforward, it might be easier, but it certainly wouldn’t be as interesting. Use the steps we’ve outlined as a framework, stay open-minded, and be creative. If you lose your way, you can refer back to the process to keep yourself on track.

In this post, we’ve covered the main steps of the data analytics process. These core steps can be amended, re-ordered and re-used as you deem fit, but they underpin every data analyst’s work:

- Define the question: What business problem are you trying to solve? Frame it as a question to help you focus on finding a clear answer.

- Collect data: Create a strategy for collecting data. Which data sources are most likely to help you solve your business problem?

- Clean the data: Explore, scrub, tidy, de-dupe, and structure your data as needed. Do whatever you have to! But don’t rush…take your time!

- Analyze the data: Carry out various analyses to obtain insights. Focus on the four types of data analysis: descriptive, diagnostic, predictive, and prescriptive.

- Share your results: How best can you share your insights and recommendations? A combination of visualization tools and communication is key.

- Embrace your mistakes: Mistakes happen. Learn from them. This is what transforms a good data analyst into a great one.

What next? From here, we strongly encourage you to explore the topic on your own. Get creative with the steps in the data analysis process, and see what tools you can find. As long as you stick to the core principles we’ve described, you can create a tailored technique that works for you.


DATA 275 Introduction to Data Analytics


Data Analytics Project Assignment


For your research project, you will conduct a data analysis and write a report summarizing your analysis and its findings. You will accomplish this by completing a series of assignments.

Data 275 Research Project Assignment

In this week’s assignment, you are required to accomplish the following tasks:

1. Propose a topic for your project

The topic you select for your capstone depends on your interest and the data problem you want to address. Try to pick a topic that you would enjoy researching and writing about.

Your topic selection will also be influenced by data availability. Because this is a data analytics project, you will need to have access to data. If you have access to your organization’s data, you are free to use it. If you choose to do so, all information presented must be in secure form, because Davenport University does not assume any responsibility for the security of corporate data. Otherwise, you can select a topic that is amenable to publicly available data.

For some useful suggestions, see Project Proposal Suggestions.

2. Find a data set of your interest and download it

There are many publicly available data sets that you can use for your project. The library has compiled a list of many possible sources of data. Click on the link below to explore these sources.

Public Data Sources

The data set you select must have:

- At least 50 observations (50 rows) and at least 4 variables (columns), excluding identification variables

- At least one dependent variable

You must provide:

- A proper citation of the data source using APA style format

- A discussion on how the data was collected and by whom

- The number of variables in the data set

- The number of observations/subjects in the data set

- A description of each variable together with an explanation of how it is measured (e.g. the unit of measurement)
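The numeric minimums (at least 50 rows and at least 4 non-ID variables) and the counts you must report can be checked quickly in pandas. The helper `check_project_dataset` and the demo frame below are hypothetical, written only for illustration; the dependent-variable requirement, the citation, and the units still have to be checked and written up by hand.

```python
import pandas as pd

def check_project_dataset(df: pd.DataFrame, id_columns: list[str]) -> dict:
    """Hypothetical helper: compare a dataset against the project's numeric minimums."""
    variables = [c for c in df.columns if c not in id_columns]
    return {
        "observations": len(df),
        "variables": len(variables),
        "meets_minimums": len(df) >= 50 and len(variables) >= 4,
        # dtypes hint at how each variable is stored; units must still be documented manually.
        "dtypes": df[variables].dtypes.astype(str).to_dict(),
    }

# Illustrative data: 60 rows, one ID column plus four variables.
demo = pd.DataFrame({
    "id": range(60),
    "age": [30] * 60,
    "income": [50_000.0] * 60,
    "region": ["North"] * 60,
    "purchased": [True] * 60,
})
print(check_project_dataset(demo, id_columns=["id"]))
```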

Deliverable

A minimum of one page description of your data analytics project which must include the following:

- A title for your project

- A brief description of the project

- Major stakeholders who would use the information that would be generated from your analysis and how they would use/benefit from that information

- A description of the dataset you will use for your project


- Last Updated: Mar 15, 2024 10:33 AM

- URL: https://davenport.libguides.com/data275


101 Pandas Exercises for Data Analysis

- April 27, 2018

- Selva Prabhakaran

These 101 Python pandas exercises are designed to challenge your logical muscle and to help you internalize data manipulation with pandas, Python’s favorite package for data analysis. The questions come in three difficulty levels, from L1 (easiest) to L3 (hardest).

You might also like to practice the 101 NumPy exercises, as the two libraries are often used together.

1. How to import pandas and check the version?

2. How to create a series from a list, NumPy array, and dict?

Create a pandas series from each of the following: a list, a NumPy array, and a dictionary.
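A possible solution sketch for questions 1 and 2, using small illustrative inputs (the exercise's own inputs are not shown here):

```python
import numpy as np
import pandas as pd

print(pd.__version__)  # Q1: the installed pandas version

mylist = list("abcde")
myarr = np.arange(5)
mydict = dict(zip(mylist, myarr))

ser_from_list = pd.Series(mylist)  # default integer index 0..4
ser_from_arr = pd.Series(myarr)
ser_from_dict = pd.Series(mydict)  # dict keys become the index
print(ser_from_dict.head())
```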

3. How to convert the index of a series into a column of a dataframe?

Difficulty Level: L1

Convert the series ser into a dataframe with its index as another column on the dataframe.

4. How to combine many series to form a dataframe?

Combine ser1 and ser2 to form a dataframe.

5. How to assign a name to a series?

Give a name to the series ser calling it ‘alphabets’.

6. How to get the items of series A not present in series B?

Difficulty Level: L2

From ser1, remove items present in ser2.

7. How to get the items not common to both series A and series B?

Get all items of ser1 and ser2 not common to both.
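One way to solve questions 6 and 7, sketched with illustrative values for ser1 and ser2: a boolean `isin` mask for the difference, and union minus intersection for the items not common to both.

```python
import numpy as np
import pandas as pd

ser1 = pd.Series([1, 2, 3, 4, 5])
ser2 = pd.Series([4, 5, 6, 7, 8])

# Q6: keep the items of ser1 that do not appear in ser2.
only_in_ser1 = ser1[~ser1.isin(ser2)]

# Q7: items not common to both = union minus intersection.
union = pd.Series(np.union1d(ser1, ser2))
intersect = pd.Series(np.intersect1d(ser1, ser2))
not_common = union[~union.isin(intersect)]
print(only_in_ser1.tolist(), not_common.tolist())
```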

8. How to get the minimum, 25th percentile, median, 75th, and max of a numeric series?

Difficulty Level: L2

Compute the minimum, 25th percentile, median, 75th, and maximum of ser.
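A sketch using `Series.quantile` on an illustrative random series (the seed and distribution are assumptions); quantiles 0 and 1 are the minimum and maximum, and 0.5 is the median.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(100)
ser = pd.Series(rng.normal(10, 5, 25))

# Min, 25th percentile, median, 75th percentile and max in one call.
summary = ser.quantile([0, 0.25, 0.5, 0.75, 1.0])
print(summary)
```

`np.percentile(ser, q=[0, 25, 50, 75, 100])` gives the same numbers as a plain array.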

9. How to get frequency counts of unique items of a series?

Calculate the frequency counts of each unique value of ser.

10. How to keep only the top 2 most frequent values as they are and replace everything else with ‘Other’?

From ser, keep the top 2 most frequent items as they are and replace everything else with ‘Other’.
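A sketch of question 10 on an illustrative random integer series. It uses `Series.where`, which returns a new (object-dtype) series instead of assigning strings into an integer series in place.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(100)
ser = pd.Series(rng.integers(1, 5, 12))

# value_counts() is sorted by frequency, so its first two labels are the top 2 values.
top2 = ser.value_counts().index[:2]
result = ser.where(ser.isin(top2), other="Other")
print(result)
```

If two values tie for second place, which one counts as "top 2" depends on the ordering `value_counts` returns.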

11. How to bin a numeric series to 10 groups of equal size?

Bin the series ser into 10 equal deciles and replace the values with the bin name.
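Decile binning maps naturally onto `pd.qcut`, which cuts at sample quantiles so each bin holds about the same number of values. The random input and the ordinal label names are illustrative choices.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(100)
ser = pd.Series(rng.random(20))

# Illustrative bin names: "1st", "2nd", "3rd", "4th", ..., "10th".
labels = ["1st", "2nd", "3rd"] + [f"{i}th" for i in range(4, 11)]
binned = pd.qcut(ser, q=10, labels=labels)
print(binned.value_counts())
```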


12. How to convert a NumPy array to a dataframe of given shape? (L1)

Reshape the series ser into a dataframe with 7 rows and 5 columns.

13. How to find the positions of numbers that are multiples of 3 from a series?

Find the positions of numbers that are multiples of 3 from ser.

14. How to extract items at given positions from a series?

From ser, extract the items at positions in list pos.

15. How to stack two series vertically and horizontally?

Stack ser1 and ser2 vertically and horizontally (to form a dataframe).

16. How to get the positions of items of series A in another series B?

Get the positions of items of ser2 in ser1 as a list.

17. How to compute the mean squared error on a truth and predicted series?

Compute the mean squared error of truth and pred series.
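Mean squared error is the average of the squared element-wise differences. A sketch with illustrative truth and pred series:

```python
import numpy as np
import pandas as pd

truth = pd.Series(range(10))
rng = np.random.default_rng(100)
pred = truth + rng.random(10)

# MSE = mean of (truth - pred)^2
mse = ((truth - pred) ** 2).mean()
print(mse)
```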

18. How to convert the first character of each element in a series to uppercase?

Convert the first character of each word in ser to uppercase.

19. How to calculate the number of characters in each word in a series?

20. How to compute the difference of differences between consecutive numbers of a series?

Compute the difference of differences between consecutive numbers of ser.
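Chaining `Series.diff()` twice gives the difference of differences. The example series is illustrative; the leading values are NaN because the first elements have no predecessor.

```python
import pandas as pd

ser = pd.Series([1, 3, 6, 10, 15, 21, 27, 35])

first_diff = ser.diff().tolist()          # differences between consecutive numbers
second_diff = ser.diff().diff().tolist()  # differences of those differences
print(first_diff)
print(second_diff)
```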

21. How to convert a series of date-strings to a timeseries?

Difficulty Level: L2

22. How to get the day of month, week number, day of year and day of week from a series of date strings?

Get the day of month, week number, day of year and day of week from ser.


23. How to convert year-month string to dates corresponding to the 4th day of the month?

Convert ser to dates corresponding to the 4th day of the respective months.

24. How to filter words that contain at least 2 vowels from a series?

Difficulty Level: L3

From ser, extract words that contain at least 2 vowels.

25. How to filter valid emails from a series?

Extract the valid emails from the series emails. The regex pattern for valid emails is provided as reference.

26. How to get the mean of a series grouped by another series?

Compute the mean of weights of each fruit.

27. How to compute the Euclidean distance between two series?

Compute the Euclidean distance between series (points) p and q, without using a packaged formula.

28. How to find all the local maxima (or peaks) in a numeric series?

Get the positions of peaks (values surrounded by smaller values on both sides) in ser.
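A sketch of question 28: the sign of consecutive differences flips from +1 to -1 at a peak, so a second difference of -2 marks a value strictly larger than both neighbours. The input series is illustrative.

```python
import numpy as np
import pandas as pd

ser = pd.Series([2, 10, 3, 4, 9, 10, 2, 7, 3])

# sign(diff) is +1 going up and -1 going down; diff of that is -2 exactly at a strict peak.
dd = np.diff(np.sign(np.diff(ser.to_numpy())))
peak_positions = np.where(dd == -2)[0] + 1  # +1 shifts back to positions in ser
print(peak_positions)
```

Flat tops (equal neighbouring values) produce a sign of 0 and are not reported by this strict version.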

29. How to replace the spaces in a string with the least frequent character?

Replace the spaces in my_str with the least frequent character.

30. How to create a time series starting ‘2000-01-01’ with the 10 weekends (Saturdays) after that, having random numbers as values?

31. How to fill an intermittent time series so that all missing dates show up with the value of the previous non-missing date?

ser has missing dates and values. Make all missing dates appear and fill up with value from previous date.
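A sketch of question 31 with an illustrative date-indexed series: `asfreq("D")` inserts every missing calendar day as NaN, and `ffill()` carries the last known value forward (including over the NaN value that was already present).

```python
import numpy as np
import pandas as pd

ser = pd.Series(
    [1, 10, 3, np.nan],
    index=pd.to_datetime(["2000-01-01", "2000-01-03", "2000-01-06", "2000-01-08"]),
)

# Make every day appear, then fill gaps with the previous non-missing value.
filled = ser.asfreq("D").ffill()
print(filled)
```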

32. How to compute the autocorrelations of a numeric series?

Compute autocorrelations for the first 10 lags of ser. Find out which lag has the largest correlation.

33. How to import only every nth row from a csv file to create a dataframe?

Import every 50th row of BostonHousing dataset as a dataframe.

34. How to change column values when importing csv to a dataframe?

Import the Boston housing dataset, but while importing, change the 'medv' (median house value) column so that values < 25 become ‘Low’ and values > 25 become ‘High’.

35. How to create a dataframe with rows as strides from a given series?

36. How to import only specified columns from a CSV file?

Import ‘crim’ and ‘medv’ columns of the BostonHousing dataset as a dataframe.

37. How to get the n rows, n columns, datatype, summary stats of each column of a dataframe? Also get the array and list equivalent.

Get the number of rows, columns, datatype and summary statistics of each column of the Cars93 dataset. Also get the numpy array and list equivalent of the dataframe.

38. How to extract the row and column number of a particular cell with given criterion?

Which manufacturer, model and type has the highest Price? What is the row and column number of the cell with the highest Price value?
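A sketch on hypothetical Cars93-like rows. A boolean mask returns the identifying columns of the most expensive car. `argmax` and `Index.get_loc` give the cell's row and column positions. If several cars tie, `argmax` returns only the first.

```python
import pandas as pd

df = pd.DataFrame({
    "Manufacturer": ["Acura", "Audi", "BMW"],
    "Model": ["Integra", "100", "535i"],
    "Type": ["Small", "Midsize", "Midsize"],
    "Price": [15.9, 37.7, 30.0],
})

top = df.loc[df["Price"] == df["Price"].max(), ["Manufacturer", "Model", "Type"]]

row_pos = int(df["Price"].to_numpy().argmax())  # positional row of the max
col_pos = df.columns.get_loc("Price")           # positional column of Price
print(top, row_pos, col_pos)
```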

39. How to rename specific columns in a dataframe?

Rename the column Type as CarType in df and replace the ‘.’ in column names with ‘_’.
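A minimal sketch with hypothetical column names. `regex=False` makes `str.replace` treat '.' literally; as a regular expression, '.' would match any character.

```python
import pandas as pd

df = pd.DataFrame(columns=["Manufacturer", "Type", "Min.Price", "Max.Price"])

df = df.rename(columns={"Type": "CarType"})
df.columns = df.columns.str.replace(".", "_", regex=False)
print(list(df.columns))
```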

40. How to check if a dataframe has any missing values?

Check if df has any missing values.
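A minimal sketch on a hypothetical df. `isna()` marks every missing cell, and `.any()` on the underlying array collapses that to a single boolean.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1, np.nan, 3], "b": ["x", "y", None]})

has_missing = df.isna().to_numpy().any()
print(has_missing)  # True
```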

41. How to count the number of missing values in each column?

Count the number of missing values in each column of df. Which column has the maximum number of missing values?
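A sketch on a hypothetical df. Summing the boolean mask counts missing cells per column, and `idxmax` names the column with the most.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [1, np.nan, 3], "b": [np.nan, np.nan, "z"]})

n_missing = df.isna().sum()
most_missing = n_missing.idxmax()
print(n_missing, most_missing)
```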

42. How to replace missing values of multiple numeric columns with the mean?

Replace missing values in Min.Price and Max.Price columns with their respective mean.
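A sketch on hypothetical values. Passing a Series of column means to `DataFrame.fillna` fills each column with its own mean.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Min.Price": [10.0, np.nan, 20.0], "Max.Price": [np.nan, 30.0, 40.0]})

cols = ["Min.Price", "Max.Price"]
df[cols] = df[cols].fillna(df[cols].mean())  # Series index matches column labels
print(df)
```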

43. How to use apply function on existing columns with global variables as additional arguments?

Difficulty Level: L3

In df, use the apply method to replace the missing values in Min.Price with the column’s mean and those in Max.Price with the column’s median.

Hint: see StackOverflow.
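A sketch assuming a module-level dict that maps each column to its fill statistic. The dict is handed to the function through `DataFrame.apply`'s `args=` tuple. With the default axis=0, each call receives one column, and `col.name` is its label. The data values are hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"Min.Price": [10.0, np.nan, 20.0, 60.0],
                   "Max.Price": [np.nan, 30.0, 40.0, 80.0]})

# "Global" mapping passed in as an extra argument
fill_funcs = {"Min.Price": np.nanmean, "Max.Price": np.nanmedian}

cols = ["Min.Price", "Max.Price"]
df[cols] = df[cols].apply(lambda col, funcs: col.fillna(funcs[col.name](col)),
                          args=(fill_funcs,))
print(df)
```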

44. How to select a specific column from a dataframe as a dataframe instead of a series?

Get the first column (a) in df as a dataframe (rather than as a Series).
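The return type depends on whether you pass a single label or a list. A list of labels or positions returns a DataFrame, and a single label returns a Series. The df below is hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.arange(20).reshape(-1, 5), columns=list("abcde"))

first_as_df = df[["a"]]      # list of labels -> DataFrame
also_df = df.iloc[:, [0]]    # list of positions -> DataFrame
as_series = df["a"]          # single label -> Series
```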

45. How to change the order of columns of a dataframe?

Actually 3 questions.

Create a generic function to interchange two columns, without hardcoding column names.

Sort the columns in reverse alphabetical order, that is, column 'e' first through column 'a' last.
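A sketch covering the two tasks listed. `swap_columns` is a hypothetical helper name. It swaps the two labels' positions in the column list and reindexes, so no column names are hardcoded.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.arange(20).reshape(-1, 5), columns=list("abcde"))

def swap_columns(df, col1, col2):
    """Return df with col1 and col2 interchanged (hypothetical helper)."""
    cols = list(df.columns)
    i, j = cols.index(col1), cols.index(col2)
    cols[i], cols[j] = cols[j], cols[i]
    return df[cols]

swapped = swap_columns(df, "a", "c")
reversed_alpha = df[sorted(df.columns, reverse=True)]
```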

46. How to set the number of rows and columns displayed in the output?

Change the pandas display settings so that printing the dataframe df shows a maximum of 10 rows and 10 columns.
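A minimal sketch with `pd.set_option`. These options are global, so they affect every dataframe printed afterwards.

```python
import pandas as pd

pd.set_option("display.max_rows", 10)
pd.set_option("display.max_columns", 10)
```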

47. How to format or suppress scientific notations in a pandas dataframe?

Suppress scientific notation like ‘e-03’ in df and print up to 4 digits after the decimal point.
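A sketch that sets `display.float_format` inside `pd.option_context`, so the formatting only applies within the `with` block. This changes how values are displayed, not the stored values. The sample numbers are hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"random": [0.0001234, 0.00123, 25.1234567]})

# Every float is rendered with 4 decimals, never in e-notation
with pd.option_context("display.float_format", "{:.4f}".format):
    text = df.to_string()
print(text)
```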

48. How to format all the values in a dataframe as percentages?

Format the values in column 'random' of df as percentages.
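A minimal sketch that maps a percent format string over the column. This produces strings, so use it for display only. If jinja2 is installed, `df.style.format({"random": "{:.2%}"})` formats for display without converting the data. The values are hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"random": [0.1234, 0.5, 0.0789]})

pct = df["random"].map("{:.2%}".format)  # multiplies by 100 and appends '%'
print(pct)
```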

49. How to filter every nth row in a dataframe?

From df, select the 'Manufacturer', 'Model' and 'Type' columns for every 20th row, starting from the first row (row 0).
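A sketch on a hypothetical 93-row frame. `iloc[::20]` steps through rows by position, starting at row 0, so it also works when the index is not a plain range.

```python
import pandas as pd

df = pd.DataFrame({
    "Manufacturer": [f"M{i}" for i in range(93)],
    "Model": [f"Model{i}" for i in range(93)],
    "Type": ["Small"] * 93,
})

subset = df.iloc[::20][["Manufacturer", "Model", "Type"]]
print(subset)
```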

50. How to create a primary key index by combining relevant columns?

In df, replace NaNs with ‘missing’ in columns 'Manufacturer', 'Model' and 'Type', create an index as a combination of these three columns, and check whether the index is a primary key.
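A sketch on hypothetical rows. The index counts as a primary key here if every combined value is unique, which `Index.is_unique` reports. The '_' separator is an arbitrary choice.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    "Manufacturer": ["Acura", np.nan, "Audi"],
    "Model": ["Integra", "Legend", np.nan],
    "Type": ["Small", "Midsize", "Compact"],
})

cols = ["Manufacturer", "Model", "Type"]
df[cols] = df[cols].fillna("missing")
df.index = df["Manufacturer"] + "_" + df["Model"] + "_" + df["Type"]

is_primary_key = df.index.is_unique
print(df.index.tolist(), is_primary_key)
```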

51. How to get the row number of the nth largest value in a column?

Find the row position of the 5th largest value of column 'a' in df.
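A sketch on hypothetical values. `argsort` gives positions in ascending order, reversing it gives descending order, and index 4 of that is the 5th largest.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"a": [7, 3, 9, 1, 8, 5, 2, 6]})

pos = int(np.argsort(df["a"].to_numpy())[::-1][4])
print(pos, df["a"].iloc[pos])  # 5 5
```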

52. How to find the position of the nth largest value greater than a given value?

In ser, find the position of the 2nd largest value greater than the mean.
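This sketch reads the task literally: among the values above the mean, take the 2nd largest and report its position. Another reading is the 2nd position whose value exceeds the mean, which would be `np.flatnonzero(arr > arr.mean())[1]`. The ser values are hypothetical.

```python
import numpy as np
import pandas as pd

ser = pd.Series([1, 9, 3, 8, 10, 2])

arr = ser.to_numpy()
candidates = np.flatnonzero(arr > arr.mean())               # positions above the mean
by_value_desc = candidates[np.argsort(arr[candidates])[::-1]]
pos = int(by_value_desc[1])                                  # 2nd largest among them
print(pos)  # 1
```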

53. How to get the last n rows of a dataframe with row sum > 100?

Get the last two rows of df whose row sum is greater than 100.
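A minimal sketch on hypothetical data. It filters on the row sum and then takes the tail.

```python
import pandas as pd

df = pd.DataFrame([[10, 20], [60, 50], [5, 5], [70, 40], [90, 30]], columns=["x", "y"])

last_two = df[df.sum(axis=1) > 100].tail(2)
print(last_two)
```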

54. How to find and cap outliers from a series or dataframe column?

Replace all values of ser below the 5th percentile with the 5th-percentile value, and all values above the 95th percentile with the 95th-percentile value.
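A sketch using `Series.quantile` for the two cut-offs and `Series.clip` to cap values at them. The 0..100 series is hypothetical and chosen so the percentiles are easy to check.

```python
import numpy as np
import pandas as pd

ser = pd.Series(np.arange(101, dtype=float))

low, high = ser.quantile([0.05, 0.95])
capped = ser.clip(lower=low, upper=high)
print(low, high, capped.min(), capped.max())
```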

55. How to reshape a dataframe to the largest possible square after removing the negative values?

Reshape df to the largest possible square with negative values removed. Drop the smallest values if need be. The order of the positive numbers in the result should remain the same as the original.
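A sketch on a hypothetical 3x3 frame. It treats zero as non-positive, which is an assumption since the task only mentions negative and positive values. It keeps the n*n largest positives and restores their original order before reshaping.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame([[1, -2, 3], [4, 5, -6], [7, 8, 9]])

arr = df.to_numpy().ravel()            # row-major order
pos = arr[arr > 0]                     # assumption: zeros are dropped too
n = int(np.floor(np.sqrt(pos.size)))   # side of the largest square

# Indices of the n*n largest values, re-sorted into original order
keep_idx = np.sort(np.argsort(pos)[::-1][: n * n])
square = pd.DataFrame(pos[keep_idx].reshape(n, n))
print(square)
```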

56. How to swap two rows of a dataframe?

Swap rows 1 and 2 in df.
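A sketch with a hypothetical helper, `swap_rows`. It exchanges the values of two positional rows and keeps the index labels where they are. `.to_numpy()` on the right-hand side stops pandas from realigning the rows by label.

```python
import numpy as np
import pandas as pd

def swap_rows(df, i, j):
    """Return a copy of df with the values of positional rows i and j exchanged."""
    out = df.copy()
    out.iloc[[i, j]] = df.iloc[[j, i]].to_numpy()
    return out

df = pd.DataFrame(np.arange(9).reshape(3, 3))
swapped = swap_rows(df, 1, 2)
print(swapped)
```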

57. How to reverse the rows of a dataframe?

Reverse all the rows of dataframe df .
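A minimal sketch: a negative positional step reverses row order. Add `.reset_index(drop=True)` if you also want fresh 0..n-1 labels.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.arange(9).reshape(3, 3))

reversed_df = df.iloc[::-1]
print(reversed_df)
```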

58. How to create one-hot encodings of a categorical variable (dummy variables)?

Get one-hot encodings for column 'a' in the dataframe df and append it as columns.
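A sketch using `pd.get_dummies` and a column-wise `pd.concat`. The prefix keeps the new column names traceable to 'a'. The dummy columns are boolean in recent pandas versions and integer in older ones.

```python
import pandas as pd

df = pd.DataFrame({"a": ["x", "y", "x"], "b": [1, 2, 3]})

dummies = pd.get_dummies(df["a"], prefix="a")
df_onehot = pd.concat([df, dummies], axis=1)
print(df_onehot)
```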

59. Which column contains the highest number of row-wise maximum values?

Obtain the name of the column with the highest number of row-wise maxima in df.
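A sketch on hypothetical data. `idxmax(axis=1)` names the column holding each row's maximum. `value_counts().idxmax()` then picks the most frequent winner.

```python
import pandas as pd

df = pd.DataFrame({"x": [5, 5, 1], "y": [1, 1, 9], "z": [0, 0, 0]})

top_col = df.idxmax(axis=1).value_counts().idxmax()
print(top_col)  # x
```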

60. How to create a new column that contains the row number of the nearest row by Euclidean distance?

Create a new column such that each row contains the row number of the nearest row-record by Euclidean distance.

61. How to find the maximum possible correlation value of each column against other columns?

Compute maximum possible absolute correlation value of each column against other columns in df .
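A sketch on hypothetical data. It takes the absolute correlation matrix, masks the diagonal (each column's correlation with itself), and takes the column-wise maximum.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"x": [1, 2, 3, 4], "y": [2, 4, 6, 8], "z": [1, -1, 1, -1]})

abs_corr = df.corr().abs().to_numpy(copy=True)
np.fill_diagonal(abs_corr, np.nan)  # ignore self-correlation
max_corr = pd.Series(np.nanmax(abs_corr, axis=0), index=df.columns)
print(max_corr.round(4))
```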

62. How to create a column containing the minimum by maximum of each row?

Compute the minimum-by-maximum for every row of df .
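A minimal sketch on hypothetical rows: divide the row-wise minimum by the row-wise maximum.

```python
import pandas as pd

df = pd.DataFrame([[1, 2, 4], [3, 6, 5]])

min_by_max = df.min(axis=1) / df.max(axis=1)
print(min_by_max)
```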

63. How to create a column that contains the penultimate value in each row?

Create a new column 'penultimate' which has the second largest value of each row of df .
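A sketch on hypothetical rows. Sort each row and take the second-to-last entry. If a row's maximum appears twice, this returns that repeated value rather than the next distinct one.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame([[1, 5, 3], [9, 2, 4]])

# Compute from the original columns before adding the new one
df["penultimate"] = np.sort(df.to_numpy(), axis=1)[:, -2]
print(df)
```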

64. How to normalize all columns in a dataframe?

- Normalize all columns of df by subtracting the column mean and dividing by the standard deviation.

- Rescale all columns of df so that the minimum value in each column is 0 and the maximum is 1.

Don’t use external packages like sklearn.
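Both transforms can be written with column-wise broadcasting, without sklearn. Note that `DataFrame.std()` uses the sample standard deviation (ddof=1) by default. The data is hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"u": [1.0, 2.0, 3.0], "v": [10.0, 20.0, 40.0]})

zscored = (df - df.mean()) / df.std()               # mean 0, std 1 per column
minmax = (df - df.min()) / (df.max() - df.min())    # range [0, 1] per column
print(zscored, minmax, sep="\n")
```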

65. How to compute the correlation of each row with the succeeding row?

Compute the correlation of each row of df with its succeeding row.
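A sketch using `np.corrcoef` on each pair of consecutive rows. Entry [0, 1] of the 2x2 result is the pair's Pearson correlation. The rows are hypothetical.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame([[1, 2, 3], [2, 4, 6], [3, 2, 1]])
arr = df.to_numpy(dtype=float)

row_corrs = [np.corrcoef(arr[i], arr[i + 1])[0, 1] for i in range(len(arr) - 1)]
print(row_corrs)
```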

66. How to replace both the diagonals of dataframe with 0?

Replace the values on both diagonals of df with 0.
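A sketch assuming df is square. It zeroes the main diagonal (i, i) and the anti-diagonal (i, n-1-i) on a NumPy copy, then rebuilds the frame with the original labels.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame(np.ones((4, 4), dtype=int))

arr = df.to_numpy(copy=True)
n = arr.shape[0]               # assumes a square frame
idx = np.arange(n)
arr[idx, idx] = 0              # main diagonal
arr[idx, n - 1 - idx] = 0      # anti-diagonal
df = pd.DataFrame(arr, index=df.index, columns=df.columns)
print(df)
```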

67. How to get the particular group of a groupby dataframe by key?

This question tests your understanding of grouped dataframes. From df_grouped, get the group belonging to 'apple' as a dataframe.
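A minimal sketch with `GroupBy.get_group`, on a hypothetical df grouped by a single column name.

```python
import pandas as pd

df = pd.DataFrame({"fruit": ["apple", "banana", "apple"], "price": [3, 1, 4]})
df_grouped = df.groupby("fruit")

apple_df = df_grouped.get_group("apple")
print(apple_df)
```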

68. How to get the n’th largest value of a column when grouped by another column?

In df, find the second largest value of 'taste' for 'banana'.
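A sketch on hypothetical data. It selects the banana group's 'taste' values and takes the last of the two largest.

```python
import pandas as pd

df = pd.DataFrame({"fruit": ["banana", "apple", "banana", "banana"],
                   "taste": [0.4, 0.9, 0.7, 0.2]})

second = df.groupby("fruit")["taste"].get_group("banana").nlargest(2).iloc[-1]
print(second)  # 0.4
```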

69. How to compute grouped mean on pandas dataframe and keep the grouped column as another column (not index)?

In df, compute the mean price of every fruit, while keeping the fruit as another column instead of an index.
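A minimal sketch: `as_index=False` keeps the grouping key as a regular column. The data is hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"fruit": ["apple", "banana", "apple"], "price": [3.0, 1.0, 5.0]})

mean_price = df.groupby("fruit", as_index=False)["price"].mean()
print(mean_price)
```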

70. How to join two dataframes by 2 columns so they have only the common rows?

Join dataframes df1 and df2 on two column pairs: df1's 'fruit' with df2's 'pazham', and df1's 'weight' with df2's 'kilo'.
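A sketch with `pd.merge`, using `left_on`/`right_on` because the key columns have different names in each frame. `how="inner"` keeps only rows that match on both pairs. The data and suffixes are hypothetical.

```python
import pandas as pd

df1 = pd.DataFrame({"fruit": ["apple", "banana", "orange"],
                    "weight": ["high", "low", "medium"], "price": [1, 2, 3]})
df2 = pd.DataFrame({"pazham": ["apple", "orange", "pine"],
                    "kilo": ["high", "low", "high"], "price": [4, 5, 6]})

merged = pd.merge(df1, df2, how="inner",
                  left_on=["fruit", "weight"], right_on=["pazham", "kilo"],
                  suffixes=("_left", "_right"))
print(merged)
```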

71. How to remove rows from a dataframe that are present in another dataframe?

From df1, remove the rows that are also present in df2. All three columns must match.
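A sketch of an anti-join. A left merge on all shared columns with `indicator=True` flags rows found only in df1. df2 is de-duplicated first so matches cannot multiply rows. The data is hypothetical.

```python
import pandas as pd

df1 = pd.DataFrame({"fruit": ["apple", "banana", "orange"],
                    "weight": ["high", "low", "low"], "price": [1, 2, 3]})
df2 = pd.DataFrame({"fruit": ["apple", "orange"],
                    "weight": ["high", "medium"], "price": [1, 3]})

merged = df1.merge(df2.drop_duplicates(), how="left", indicator=True)
only_df1 = merged[merged["_merge"] == "left_only"].drop(columns="_merge")
print(only_df1)
```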

72. How to get the positions where values of two columns match?
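The task statement for this exercise is missing. The sketch assumes two hypothetical columns, fruit1 and fruit2, and returns the positions where they hold equal values.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"fruit1": ["apple", "pear", "kiwi", "apple"],
                   "fruit2": ["apple", "kiwi", "kiwi", "pear"]})

match_pos = np.where(df["fruit1"] == df["fruit2"])[0]
print(match_pos)  # [0 2]
```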

73. How to create lags and leads of a column in a dataframe?

Create two new columns in df: a lag1 of column ‘a’ (column a shifted down by 1 row) and a lead1 of column ‘b’ (column b shifted up by 1 row).

74. How to get the frequency of unique values in the entire dataframe?

Get the frequency of unique values in the entire dataframe df.
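A sketch that flattens every cell into one Series and counts the values. The df is hypothetical.

```python
import pandas as pd

df = pd.DataFrame({"x": [1, 2, 2], "y": [3, 2, 1]})

freq = pd.Series(df.to_numpy().ravel()).value_counts()
print(freq)
```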

75. How to split a text column into two separate columns?

Split the string column in df to form a dataframe with 3 columns as shown.
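The example data "as shown" is not included here. This sketch assumes a hypothetical column named row whose entries are comma-separated "STD, City, State" strings. It splits them with `str.split(expand=True)` and strips the surrounding whitespace.

```python
import pandas as pd

df = pd.DataFrame({"row": ["33, Kolkata, West Bengal", "44, Chennai, Tamil Nadu"]})

parts = df["row"].str.split(",", expand=True)
parts = parts.apply(lambda col: col.str.strip())
parts.columns = ["STD", "City", "State"]
print(parts)
```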

To be continued...

© Machinelearningplus. All rights reserved.

Assignment 2: Exploratory Data Analysis

In this assignment, you will identify a dataset of interest and perform exploratory analysis to better understand the shape & structure of the data, identify data quality issues, investigate initial questions, and develop preliminary insights & hypotheses. Your final submission will take the form of a report consisting of annotated and/or captioned visualizations that convey key insights gained during your analysis process.

Step 1: Data Selection

First, pick a topic area of interest to you and find a dataset that can provide insights into that topic. To streamline the assignment, we've pre-selected a number of datasets for you to choose from (see the Recommended Data Sources section below).

However, if you would like to investigate a different topic and dataset, you are free to do so. If you are working with a self-selected dataset and are unsure whether it is appropriate for the course, please check with the course staff. Be advised that data collection and preparation (also known as data wrangling) can be a very tedious and time-consuming process. Be sure to leave sufficient time for exploratory analysis after preparing the data.

After selecting a topic and dataset – but prior to analysis – you should write down an initial set of at least three questions you'd like to investigate. These questions should be clearly listed at the top of your final submission report.

Step 2: Exploratory Visual Analysis

Next, you will perform an exploratory analysis of your dataset using a visualization tool such as Vega-Lite or Tableau. You should consider two different phases of exploration.

In the first phase, you should seek to gain an overview of the shape & structure of your dataset. What variables does the dataset contain? How are they distributed? Are there any notable data quality issues? Are there any surprising relationships among the variables? Be sure to perform "sanity checks" for any patterns you expect the data to contain.
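The assignment expects a visualization tool such as Vega-Lite or Tableau for this phase. If you want a quick numeric pass first, the pandas sketch below asks the same overview questions in code. The dataset, its columns (country, year, gdp_per_capita), and the "GDP per capita should not be negative" sanity rule are all hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical miniature dataset standing in for your chosen data
df = pd.DataFrame({
    "country": ["A", "B", "C", "D"],
    "year": [2015, 2015, 2016, 2016],
    "gdp_per_capita": [1200.0, np.nan, 5300.0, -1.0],
})

print(df.dtypes)                    # which variables, and of what type
print(df.describe(include="all"))   # how they are distributed
missing = df.isna().sum()           # data quality: missing values per column

# Sanity check for a pattern we expect: no negative GDP per capita
suspicious = df[df["gdp_per_capita"] < 0]
print(missing, suspicious, sep="\n")
```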

In the second phase, you should investigate your initial questions, as well as any new questions that arise during your exploration. For each question, start by creating a visualization that might provide a useful answer. Then refine the visualization (by adding additional variables, changing sorting or axis scales, filtering or subsetting data, etc.) to develop better perspectives, explore unexpected observations, or sanity check your assumptions. You should repeat this process for each of your questions, but feel free to revise your questions or branch off to explore new questions as the data warrants.

Final Deliverable

Your final submission should take the form of a sequence of images – similar to a comic book – that consists of 8 or more visualizations detailing your most important insights.

Your "insights" can include surprises or issues (such as data quality problems affecting your analysis) as well as responses to your analysis questions. Where appropriate, we encourage you to include annotated visualizations to guide viewers' attention and provide interpretive context. (See this page for some examples of what we mean by "annotated visualizations.")

Each image should be a visualization, including any titles or descriptive annotations highlighting the insight(s) shown in that view. For example, annotations could take the form of guidelines and text labels, differential coloring, and/or fading of non-focal elements. You are also free to include a short caption for each image, though no more than 2 sentences: be concise! You may create annotations using the visualization tools of your choice (see our tool recommendations below), or by adding them using image editing or vector graphics tools.

Provide sufficient detail such that anyone can read your report and understand what you've learned without already being familiar with the dataset. For example, be sure to provide a clear overview of what data is being visualized and what the data variables mean. To help gauge the scope of this assignment, see this example report analyzing motion picture data.

You must write up your report as an Observable notebook, similar to the example above. From a private notebook, click the "..." menu button in the upper right and select "Enable link sharing". Then submit the URL of your notebook on the Canvas A2 submission page.

Be sure to enable link sharing, otherwise the course staff will not be able to view your submission and you may face a late submission penalty! Also note that if you make changes to the page after link sharing is enabled, you must reshare the link from Observable.

- To export a Vega-Lite visualization, be sure you are using the "canvas" renderer, right click the image, and select "Save Image As...".

- To export images from Tableau, use the Worksheet > Export > Image... menu item.

- To add an image to an Observable notebook, first add your image as a notebook file attachment: click the "..." menu button and select "File attachments". Then load the image in a new notebook cell: FileAttachment("your-file-name.png").image().

Recommended Data Sources

To get up and running quickly with this assignment, we recommend using one of the following provided datasets or sources, but you are free to use any dataset of your choice.

The World Bank Data, 1960-2017

The World Bank has tracked global human development by indicators such as climate change, economy, education, environment, gender equality, health, and science and technology since 1960. We have 20 indicators from the World Bank for you to explore. Alternatively, you can browse the original data by indicators or by countries. Click on an indicator category or country to download the CSV file.

Data: https://github.com/ZeningQu/World-Bank-Data-by-Indicators