Reading Assessment: The Key to Science-Based Reading Instruction

Reading assessments are a critical component of equitable, evidence-based literacy instruction. Our reading measures provide quick, reliable insights into student literacy that help accelerate student growth.


Help All Students Become Strong Readers with Effective Reading Assessment Tools

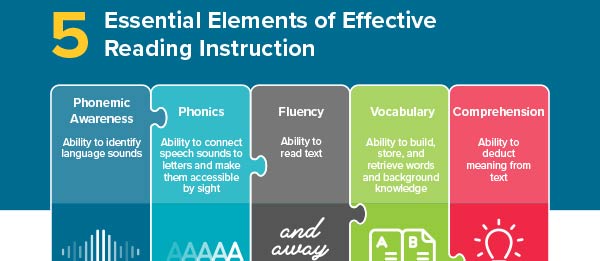

Reading experts and cognitive scientists agree on five core principles of instruction that help all students learn to read—including those with reading difficulties like dyslexia.

Our diagnostic reading assessments help teachers pinpoint the specific reading skills that students are struggling with so that they can target interventions to the five components of effective reading instruction.

Phonemic Awareness

Ability to identify language sounds

Phonics

Ability to connect speech sounds to letters and make them accessible by sight

Fluency

Ability to read text accurately and automatically

Vocabulary

Ability to build, store, and retrieve words and background knowledge

Comprehension

Ability to derive meaning from text

FastBridge's Reading Assessments Provide Trusted Literacy and Dyslexia Screening

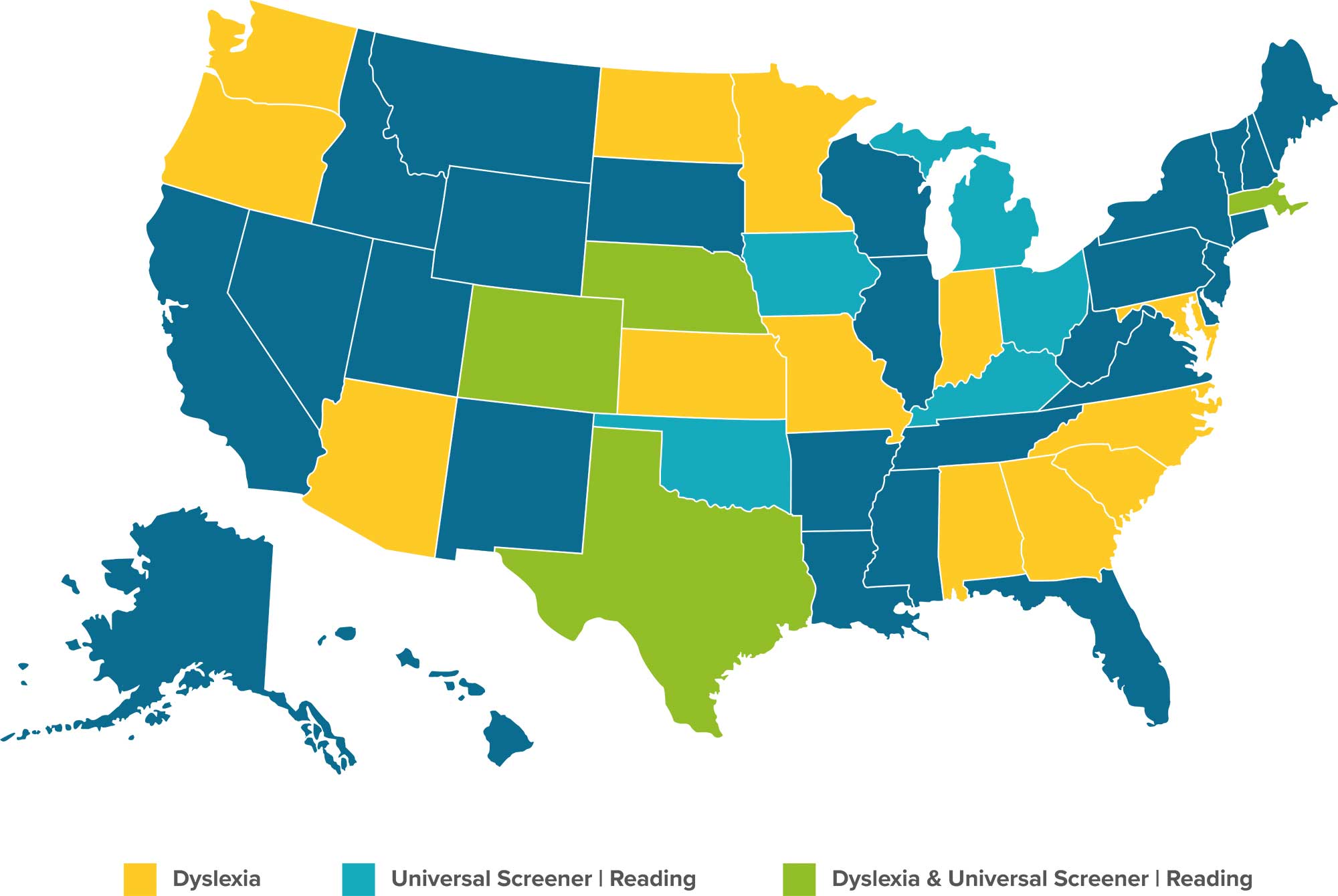

FastBridge combines Computer Adaptive Tests (CAT) and Curriculum-Based Measures (CBM) to screen students, identify skill gaps, and offer proven recommendations for reading instruction and diagnostic reading interventions. That’s why many states have approved FastBridge for early reading and/or dyslexia screening.


Learn more about our reading assessments and the researchers who created them.

aReading

Assesses broad reading ability and predicts overall reading achievement in concepts of print, phonological awareness, phonics, vocabulary, comprehension, orthography, and morphology. aReading is aligned to the Lexile® Framework for Reading.

Grades K-12

AUTOreading

Assesses accuracy and automaticity with phonics, spelling, and vocabulary skills.

Universal Screening & Progress Monitoring

CBMreading

Assesses oral reading fluency with connected text.

Available in both English and Spanish

An optional extension of CBMreading that assesses a student's ability to recall and retell information from passages of connected text.

COMPefficiency

Assesses the quality and efficiency of a student's reading comprehension.

earlyReading

Assesses early literacy skills through reading assessment subtests that measure accuracy and automaticity.

Grades PreK-1

The Researchers

Dr. Ted Christ & Colleagues

FastBridge Reading Suite

Co-Founder of FastBridge, Professor of School Psychology, University of Minnesota

Dr. Zoheb Borbora

Co-Founder of FastBridge

Dr. Scott Ardoin

earlyReading & CBMreading English

Professor of School Psychology, University of Georgia

Dr. Tanya Eckert

Associate Professor of School Psychology, Syracuse University

Dr. Lori Helman

earlyReading & CBMreading Spanish

Professor of Literacy Education, University of Minnesota

Dr. Mary Jane White

Research Associate, University of Minnesota

Act on Reading Assessment Data with Skills-Based Interventions

FastBridge offers teacher-led reading interventions to help move struggling students closer to grade-level goals. Our scripted, class-wide and small-group lesson plans make it easier for educators to teach core literacy skills by aligning directly to our diagnostic reading assessments. Each skills-based intervention is backed by the research of Dr. Ted Christ and his colleagues at the University of Minnesota.

Concepts of Print

Proficiency includes an understanding that English words are composed of letters, and sentences are composed of words.

Phonemic Awareness

Proficiency includes an understanding that words contain individual sounds and can be split apart into those sounds.

Phonics

Proficiency includes an ability to connect speech sounds to letters and make them accessible by sight.

Fluency

Proficiency includes reading text accurately, automatically, and at a rate that allows comprehension.

Vocabulary

Proficiency includes an ability to build, store, and retrieve words and background knowledge.

Comprehension

Proficiency includes using phonemic awareness, phonics, fluency, and vocabulary to understand written words and make sense of what one reads.

Interested in learning more?


The Principles of Effective Reading Instruction

Do you know what it takes for all students to become strong readers? Learn the essentials of effective, science-based reading instruction and structured literacy instruction.

Putting the Science of Reading into Practice

Read expert advice on why reading assessment tools that measure the five critical components of reading, paired with instruction grounded in the science of reading, are crucial to addressing inequities and ensuring that all students become strong readers.

Profile in Reading Success: How SPPS Uses Reading Assessment Tools to Guide Instruction

See how educators in Saint Paul Public Schools (MN) use diagnostic reading assessment data to implement Tier 1 evidence-based instructional practices and interventions for struggling readers.

Reading Assessments FAQs

How do you assess a student’s reading level?

The best way to assess a student’s reading level is to conduct universal screening three times a year (e.g., fall, winter, and spring) for the purpose of identifying students who may benefit from additional instructional support. The earlier a reading difficulty is identified, the easier and more effective it is to provide intervention. After identifying students not on track to meet reading benchmark goals, the screening data can be compared with other sources of information to plan instruction. As needed, additional screeners can be used to provide more specific information about student reading skills, or to get starting scores for progress monitoring.
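The screen-then-flag step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not FastBridge's scoring logic: the cut scores in `HYPOTHETICAL_BENCHMARKS` and the function name `flag_for_support` are invented for the example, and real benchmarks come from the publisher's norms for each grade and measure.

```python
# Hypothetical seasonal benchmark cut scores for one grade and one measure.
# Real cut scores are assessment- and grade-specific; these are placeholders.
HYPOTHETICAL_BENCHMARKS = {"fall": 40, "winter": 60, "spring": 75}


def flag_for_support(scores, season, benchmarks=HYPOTHETICAL_BENCHMARKS):
    """Return, in alphabetical order, the students whose screening score
    falls below the benchmark for the given screening window."""
    cut = benchmarks[season]
    return sorted(name for name, score in scores.items() if score < cut)


fall_scores = {"Ana": 35, "Ben": 52, "Chloe": 39}
print(flag_for_support(fall_scores, "fall"))  # ['Ana', 'Chloe']
```

A flag only marks a student as a candidate for additional support; as the answer above notes, the screening data should still be compared with other sources of information before planning instruction.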

What makes a quality reading assessment tool?

A quality reading assessment should be grounded in science of reading research so that it identifies the specific literacy skills a student is struggling with and offers evidence-based recommendations on how to close skill gaps. Quality reading assessments should also have demonstrated validity and reliability, and be supported by multiple high-quality studies showing that they help teachers identify and solve students' reading problems in schools.

What are the main types of reading assessments?

Diagnostic: Diagnostic reading assessments pinpoint specific areas of need. FastBridge’s unique combination of CBMs and CATs of reading provide a comprehensive view of who is at risk and information about which literacy skills to focus on.

Formative: Formative reading assessments are conducted regularly during the school year. Two types of formative assessment are universal screening and progress monitoring. Screening helps teachers know each student’s current reading skills; progress monitoring shows whether reading intervention is working. Both screening and progress monitoring provide data to inform instruction that follows. FastBridge’s reading CBMs can be used for screening and progress monitoring.

Summative: Summative reading assessments certify that learning has occurred at the end of a specific period of instruction. Learn more about Illuminate Education’s summative assessments and reporting platform, DnA.
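Progress monitoring, the formative use described above, is often summarized as a rate of improvement: the slope of a student's scores across regular probes, compared against a goal rate. The Python sketch below computes an ordinary least-squares slope. The goal rate of 1.5 points per week and the function names are hypothetical, and the answer above does not say that FastBridge uses this exact decision rule.

```python
def rate_of_improvement(weeks, scores):
    """Ordinary least-squares slope of scores over time (score units per week)."""
    n = len(weeks)
    if n < 2 or n != len(scores):
        raise ValueError("need at least two paired (week, score) observations")
    mean_w = sum(weeks) / n
    mean_s = sum(scores) / n
    # Cross-product and sum-of-squares terms of the least-squares slope formula.
    sxy = sum((w - mean_w) * (s - mean_s) for w, s in zip(weeks, scores))
    sxx = sum((w - mean_w) ** 2 for w in weeks)
    if sxx == 0:
        raise ValueError("weeks must not all be identical")
    return sxy / sxx


def intervention_on_track(weeks, scores, goal_slope):
    """True if the observed rate of improvement meets the (hypothetical) goal rate."""
    return rate_of_improvement(weeks, scores) >= goal_slope


weeks = [0, 1, 2, 3]
scores = [20, 22, 24, 26]  # e.g., words read correctly per minute
print(rate_of_improvement(weeks, scores))         # 2.0
print(intervention_on_track(weeks, scores, 1.5))  # True
```

A least-squares slope uses every probe, so a single unusually high or low score moves the estimate less than simply comparing the first and last scores would.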

How do FastBridge’s reading assessments compare to other reading assessment tests?

FastBridge’s reading assessments offer educators the most efficient way to assess reading skill gaps because our solution offers both CBMs and CATs. With FastBridge, teachers conduct screening with recommended reading assessments for each grade level. These include:

- Specific assessments students complete for universal screening.

- Pre-selected assessments that have the capacity to predict future student reading performance and indicate what type of reading instruction is needed.

- User-friendly reports that allow teachers to view student scores and see recommended reading instruction plans.


The Rapid Online Assessment of Reading

An open-access assessment platform grounded in ongoing research by the Stanford Reading & Dyslexia Research Program

What is the ROAR?

The Rapid Online Assessment of Reading (ROAR) is an ongoing research project and online platform for assessing foundational reading skills. ROAR consists of a suite of measures that have been validated for grades K-12; each is delivered through the web browser and does not require a test administrator. ROAR rapidly provides highly reliable indices of reading ability with greater precision than many standardized, individually administered reading assessments. Powered by the Rapid Online Assessment Dashboard, ROAR integrates with most rostering systems used in schools, so it can be deployed to a whole district with the click of a button, and it provides instructionally informative score reports to teachers in real time as students complete the assessments.

The Stanford Reading & Dyslexia Research Program aims to investigate the factors contributing to reading difficulties, including dyslexia. By developing and rigorously validating automated assessment tools that enable large-scale data collection, we can help researchers and educators understand and support the diversity of learners.

Scientific Approach to Validation

A Research-Based Tool

Rapid. Automated. Online. Administer anywhere online.

Active R&D

The ROAR Assessment Suite

ROAR is a tool for schools, clinics, and researchers. We offer a wide range of experiments that explore the domains of reading and language, visual processing, and executive function. ROAR measures are available for school and community organization partners to pilot, including ROAR assessments that have been validated at scale and new ROAR measures under active research.

Coming soon: Rapid Online Assessments of Math (ROAM) and Rapid Online Assessments of Vision (ROAV).

How Does It Work?

ROAR-SWR measures word recognition by rapidly presenting real and made-up words and asking participants to press a key to indicate whether each word is real or made-up. The words span a wide difficulty range, providing an accurate index of ability for 1st through 12th grade in just 5-10 minutes.

Animated characters guide participants through a colorful voiceover narrative, ensuring participants understand the task through brief practice and feedback. In our validation studies, children as young as 2nd grade were able to complete ROAR-SWR without a proctor, and children in 1st grade could complete it with minimal assistance.

After completing the ROAR-SWR, participants will receive information on raw scores, as well as estimated standard scores, percentile scores, and risk designations.
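To make the three derived scores concrete, the Python sketch below converts a raw score into a standard score, a percentile, and a risk designation. It assumes raw scores are roughly normally distributed in a norming sample; the norm mean and SD, the mean-100/SD-15 scale, the percentile cut points, and the risk labels are all hypothetical illustrations, not ROAR's published scoring procedure.

```python
from statistics import NormalDist

# Hypothetical norming-sample values for one grade; real norms are measure-specific.
HYPOTHETICAL_NORM_MEAN = 50.0
HYPOTHETICAL_NORM_SD = 10.0


def score_report(raw, norm_mean=HYPOTHETICAL_NORM_MEAN, norm_sd=HYPOTHETICAL_NORM_SD):
    """Convert a raw score into a standard score, percentile, and risk label,
    assuming raw scores are approximately normal in the norming sample."""
    z = (raw - norm_mean) / norm_sd
    standard = 100 + 15 * z                  # standard-score scale: mean 100, SD 15
    percentile = NormalDist().cdf(z) * 100   # percent of norm group scoring lower
    if percentile < 25:                      # hypothetical risk cut points
        risk = "needs extra support"
    elif percentile < 50:
        risk = "needs some support"
    else:
        risk = "at or above average"
    return {
        "raw": raw,
        "standard": round(standard),
        "percentile": round(percentile),
        "risk": risk,
    }


print(score_report(50))  # {'raw': 50, 'standard': 100, 'percentile': 50, 'risk': 'at or above average'}
print(score_report(40))  # {'raw': 40, 'standard': 85, 'percentile': 16, 'risk': 'needs extra support'}
```

Because the standard score and percentile are both functions of the same z value, they always agree in rank order; only the cut points decide how a student is labeled.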

Research-Practice Partnership: A Model for Collaboration

Frequently Asked Questions

ROAR is configurable for different applications. ROAR-Word can be collected as a quick screener in about five minutes. Adding ROAR-Sentence provides complementary information for screening. ROAR-Phoneme, which takes 12-15 minutes, provides diagnostic information on different aspects of phonological awareness that can be targeted by instruction or intervention. ROAR can be administered to any number of students at once (individually at home or in a clinic, in small groups, or across a classroom or a whole school district) as long as each student has access to an internet-connected computer or tablet and headphones.

ROAR provides a comprehensive profile of foundational reading skills for students from Kindergarten through Grade 12. The score reports show you how many students in your organization need support on each specific skill. Furthermore, the reports provide individual data on each student in a table that can easily be grouped by skill, level of support needed, and more.

ROAR assessments examine foundational reading skills for students of all ages. Some of the assessments are computer adaptive, so they will give more challenging items to students who are ready for them. Some of the assessments provide different sets of items for students depending on their grade level, such as more challenging texts for older students. The graphics and storylines are omitted for older students. The assessments are generally structured similarly and the scores are comparable across grade levels.
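The adaptive behavior described above, giving more challenging items to students who are ready for them, is commonly implemented with item response theory. The Python sketch below assumes a Rasch (one-parameter logistic) model: it picks the unadministered item with the highest Fisher information at the current ability estimate, then re-estimates ability with an expected a posteriori (EAP) estimate under a standard normal prior. The item difficulties and all function names are hypothetical, and the passage does not specify which model, selection rule, or estimator ROAR actually uses.

```python
import math


def rasch_prob(theta, b):
    """Probability of a correct response under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta, b):
    """Fisher information of a Rasch item at ability theta: p * (1 - p)."""
    p = rasch_prob(theta, b)
    return p * (1.0 - p)


def select_item(theta, difficulties, administered):
    """Choose the not-yet-administered item that is most informative at theta."""
    candidates = [i for i in range(len(difficulties)) if i not in administered]
    return max(candidates, key=lambda i: item_information(theta, difficulties[i]))


def eap_theta(responses, difficulties, grid_step=0.1, limit=4.0):
    """EAP ability estimate with a N(0, 1) prior, computed on a grid over [-limit, limit].

    `responses` maps item index -> 1 (correct) or 0 (incorrect). Unlike maximum
    likelihood, EAP stays finite when every response is correct or incorrect.
    """
    n_points = int(round(2 * limit / grid_step)) + 1
    grid = [-limit + k * grid_step for k in range(n_points)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized standard normal prior
        for i, x in responses.items():
            p = rasch_prob(theta, difficulties[i])
            w *= p if x == 1 else (1.0 - p)
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)


def run_cat(answer, difficulties, n_items):
    """Administer n_items adaptively; `answer(item_index)` returns 1 or 0."""
    theta, responses = 0.0, {}
    for _ in range(n_items):
        item = select_item(theta, difficulties, responses)
        responses[item] = answer(item)
        theta = eap_theta(responses, difficulties)
    return theta, list(responses)


# Hypothetical item bank ordered from easiest to hardest, and a simulated student
# who answers correctly whenever an item's difficulty is at most 0.5.
bank = [-2.0, -1.0, 0.0, 1.0, 2.0]
theta, order = run_cat(lambda item: 1 if bank[item] <= 0.5 else 0, bank, n_items=3)
print(order)  # [2, 3, 1]
```

After a correct answer on the middle item the estimate rises, so a harder item is chosen next; after a miss it falls back, and an easier item follows.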

Nothing! The ROAR is an open-access assessment; no payment or subscription is required.

The administration is completely automated – directions are read to students through their headphones, and students respond on the computer. Teachers or guardians may need to help students with logging in. Students may do the assessments in their classrooms as a group, at home, or with a specialist.

Please see our optional Quick Start guide that we provide for partners.

We take research participant data privacy seriously. All assessment data is stored securely and separately from identifiers wherever possible. If your child’s school is engaged in a ROAR partnership, assessment data may be shared back with the school as additional information to support teaching and learning.

We are excited to partner with you to try these research tools in the classroom!

We are happy to come to your school virtually or in person to discuss ROAR and how it can support your students and teachers. Please reach out to our Director of Research and Partnerships, Carrie Townley-Flores, to make arrangements.

This is standard practice, and we are happy to work through this process with you. Please note that Stanford’s lawyers will need to sign off on any legal documents and this process may take some time. We also require our partners to review our Letter of Agreement or Terms of Service.

Learn more about the Stanford University Reading & Dyslexia Research Program!

Connect with us.

(650) 497-0893

520 Galvez Mall

Stanford, CA 94305



Designing Reading Comprehension Assessments for Reading Interventions: How a Theoretically Motivated Assessment Can Serve as an Outcome Measure

- Research into Practice

- Published: 25 May 2014

- Volume 26, pages 403–424 (2014)


- Tenaha O’Reilly,

- Jonathan Weeks,

- John Sabatini,

- Laura Halderman &

- Jonathan Steinberg


When designing a reading intervention, researchers and educators face a number of challenges related to the focus, intensity, and duration of the intervention. In this paper, we argue there is another fundamental challenge—the nature of the reading outcome measures used to evaluate the intervention. Many interventions fail to demonstrate significant improvements on standardized measures of reading comprehension. Although there are a number of reasons to explain this phenomenon, an important one to consider is misalignment between the nature of the outcome assessment and the targets of the intervention. In this study, we present data on three theoretically driven summative reading assessments that were developed in consultation with a research and evaluation team conducting an intervention study. The reading intervention, Reading Apprenticeship, involved instructing teachers to use disciplinary strategies in three domains: literature, history, and science. Factor analyses and other psychometric analyses on data from over 12,000 high school students revealed the assessments had adequate reliability, moderate correlations with state reading test scores and measures of background knowledge, a large general reading factor, and some preliminary evidence for separate, smaller factors specific to each form. In this paper, we describe the empirical work that motivated the assessments, the aims of the intervention, and the process used to develop the new assessments. Implications for intervention and assessment are discussed.


Interested readers are encouraged to consult Zygouris-Coe ( 2012 ) for a discussion on the implications of introducing disciplinary reading into the Common Core.

The reliability of the 14-item background knowledge measure for the literature form was unacceptably low (α near zero). Therefore, we do not report the correlation.

Note that this is a different sample of students drawn from the same intervention schools, but in the year preceding the sample used in the dimensional analyses. The purpose of this analysis is to demonstrate convergent validity evidence for each form with a general reading literacy assessment, i.e., 8th grade state ELA outcome tests.

The assessment scores were not intended for individual score reporting, but rather for computing group mean differences. Thus, we were not interested in their technical adequacy for reporting on individuals. Different analyses, modeling, and studies would be conducted to evaluate their functionality for individual score reports.
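One of the notes above reports an α near zero for the literature form's 14-item background knowledge measure. As a reminder of what that statistic measures, the Python sketch below implements the textbook Cronbach's alpha formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). It illustrates the standard formula only; it is not the authors' analysis code, and the toy response data are invented.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    `scores` is a list of rows, one per respondent, each holding k item scores.
    Sample variances are used throughout; the ratio is unchanged if population
    variances are used consistently instead.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    item_variance_sum = sum(variance(item) for item in zip(*scores))
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Toy data (invented): two items that always agree vs. two unrelated items.
consistent = [[1, 1], [0, 0], [1, 1], [0, 0]]
unrelated = [[1, 0], [0, 1], [1, 1], [0, 0]]
print(cronbach_alpha(consistent))  # 1.0
print(cronbach_alpha(unrelated))   # 0.0
```

When items do not covary, the total-score variance is close to the sum of the item variances, which drives alpha toward zero, the situation the note describes.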

Achugar, M., & Carpenter, B. D. (2012). Linguistics and Education, 23, 262–276.


Airey, J., & Linder, C. (2009). A disciplinary discourse perspective on university science learning: achieving fluency in a critical constellation of modes. Journal of Research in Science Teaching, 46, 27–49.

Bennett, R. E. (2010). Cognitively based assessment of, for, and as learning: a preliminary theory of action for summative and formative assessment. Measurement: Interdisciplinary Research and Perspectives, 8, 70–91.


Berkeley, S., Scruggs, T. E., & Mastropieri, M. A. (2010). Reading comprehension instruction for students with learning disabilities, 1995–2006: a meta-analysis. Remedial and Special Education, 31, 423–436.

Bus, A. G. (1999). Phonological awareness and early reading: a meta-analysis of experimental training studies. Journal of Educational Psychology, 91, 403–414.

Cai, L. (2012). flexMIRT version 1.88: a numerical engine for multilevel item factor analysis and test scoring [computer software]. Seattle, WA: Vector Psychometric Group.

Cantrell, S., Almasi, J. F., Carter, J. C., & Rintamaa, M. (2013). Reading intervention in middle and high schools: implementation fidelity, teacher efficacy, and student achievement. Reading Psychology, 34, 26–58.

Chamberlain, A., Daniels, C., Madden, N. A., & Slavin, R. E. (2007). A randomized evaluation of the Success for All middle school reading program. Middle Grades Reading Journal, 2, 1–22.

Coiro, J. (2009). Rethinking reading assessment in a digital age: How is reading comprehension different and where do we turn now? Educational Leadership, 66, 59–63.

Coyne, M. D., Little, M., Rawlinson, D., Simmons, D., Kwok, O., Kim, M., & Civetelli, C. (2013). Replicating the impact of a supplemental beginning reading intervention: the role of instructional context. Journal of Research on Educational Effectiveness, 6, 1–23.

Cutting, L., & Scarborough, H. (2006). Prediction of reading comprehension: relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading, 10, 277–299.

Denton, C. A., Wexler, J., Vaughn, S., & Bryan, D. (2008). Intervention provided to linguistically diverse middle school students with severe reading difficulties. Learning Disabilities Research & Practice, 23, 79–89.

Denton, C. A., Tolar, T. D., Fletcher, J. M., Barth, A. E., Vaughn, S., & Francis, D. J. (2013). Effects of tier 3 intervention for students with persistent reading difficulties and characteristics of inadequate responders. Journal of Educational Psychology, 105, 633–648.

Ehren, B. J. (2012). Foreword: complementary perspectives from multiple sources on disciplinary literacy. Topics in Language Disorders, 32, 5–6.

Faggella-Luby, M. N., Graner, S. P., Deshler, D. D., & Drew, S. V. (2012). Building a house on sand: Why disciplinary literacy is not sufficient to replace general strategies for adolescent learners who struggle. Topics in Language Disorders, 32, 69–84.

Fang, Z. (2012). Language correlates of disciplinary literacy. Topics in Language Disorders, 32, 19–34.

Fisk, C., & Hurst, C. B. (2003). Paraphrasing for comprehension. Reading Teacher, 57, 182–185.

Flynn, L. J., Zheng, X., & Swanson, H. (2012). Instructing struggling older readers: a selective meta-analysis of intervention research. Learning Disabilities Research & Practice, 27, 21–32.

Franzke, M., Kintsch, E., Caccamise, D., Johnson, N., & Dooley, S. (2005). Summary Street®: computer support for comprehension and writing. Journal of Educational Computing Research, 33, 53–80.

Goldman, S. (2012). Adolescent literacy: learning and understanding content. Future of Children, 22, 89–116.

Goldman, S., & Rakestraw, J. (2000). Structural aspects of constructing meaning from text. In M. Kamil, P. Mosenthal, P. D. Pearson, & R. Barr (Eds.), Handbook of reading research (Vol. III, pp. 311–335). Mahwah, NJ: Erlbaum.

Graesser, A. C., Singer, M., & Trabasso, T. (1994). Constructing inferences during narrative text comprehension. Psychological Review, 101, 371–395.

Guldenoğlu, İ., Kargin, T., & Miller, P. (2012). Comparing the word processing and reading comprehension of skilled and less skilled readers. Educational Sciences: Theory & Practice, 12, 2822–2828.

Hammer, S., & Green, W. (2011). Critical thinking in a first year management unit: the relationship between disciplinary learning, academic literacy and learning progression. Higher Education Research & Development, 30, 303–315.

Kane, M. (2006). Validation. In R. L. Brennan (Ed.), Educational measurement (4th ed., pp. 18–64). Westport, CT: American Council on Education and Praeger.

Keenan, J. M., Betjemann, R. S., & Olson, R. K. (2008). Reading comprehension tests vary in the skills they assess: differential dependence on decoding and oral comprehension. Scientific Studies of Reading, 12, 281–300.

Kim, J., & Quinn, D. (2013). The effects of summer reading on low-income children’s literacy achievement from kindergarten to grade 8: a meta-analysis of classroom and home interventions. Review of Educational Research, 83, 386–431.

Kim, J. S., Samson, J. F., Fitzgerald, R., & Hartry, A. (2010). A randomized experiment of a mixed-methods literacy intervention for struggling readers in grades 4–6: effects on word reading efficiency, reading comprehension and vocabulary, and oral reading fluency. Reading and Writing: An Interdisciplinary Journal, 23, 1109–1129.

Kintsch, W. (1998). Comprehension: a paradigm for cognition. Cambridge, UK: Cambridge University Press.

Lee, C. (2004). Literacy in the academic disciplines and the needs of adolescent struggling readers. Voices in Urban Education, 3, 14–25.

Lee, C. D., & Spratley, A. (2010). Reading in the disciplines: the challenges of adolescent literacy. New York, NY: Carnegie Corporation.

Liu, O. L., Bridgeman, B., & Adler, R. (2012). Measuring learning outcomes in higher education: motivation matters. Educational Researcher, 41, 352–362.

Lykins, C. (2012). Why “what works” still doesn't work: how to improve research syntheses at the What Works Clearinghouse. Peabody Journal of Education, 87, 500–509.

MacGinitie, W. H., MacGinitie, R. K., Maria, K., & Dreyer, L. G. (2000). Gates-MacGinitie reading tests. Itasca, IL: Riverside.

McCrudden, M. T., & Schraw, G. (2007). Relevance and goal-focusing in text processing. Educational Psychology Review, 19, 113–139.

McKinley, R. L., & Reckase, M. D. (1983). An extension of the two-parameter logistic model to the multidimensional latent space (Research Report ONR 83–2). Iowa City, IA: The American College Testing Program.

McNamara, D. S. (2004). SERT: self-explanation reading training. Discourse Processes, 38, 1–30.

McNamara, D. S. (2007). Reading comprehension strategies: theories, interventions, and technologies. Mahwah, NJ: Erlbaum.

McNamara, D. S., & Kintsch, W. (1996). Learning from texts: effects of prior knowledge and text coherence. Discourse Processes, 22, 247–288.

Metzger, M. J. (2007). Making sense of credibility on the web: models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58, 2078–2091.

Meyer, B., & Wijekumar, K. (2007). A Web based tutoring system for the structure strategy: theoretical background, design, and findings. In D. S. McNamara (Ed.), Reading comprehension strategies: theory, interventions, and technologies (pp. 347–374). Mahwah, NJ: Erlbaum.

Mislevy, R. J., & Haertel, G. (2006). Implications for evidence-centered design for educational assessment. Educational Measurement: Issues and Practice, 25, 6–20.

Mislevy, R. J., & Sabatini, J. P. (2012). How research on reading and research on assessment are transforming reading assessment (or if they aren’t, how they ought to). In J. Sabatini, E. Albro, & T. O'Reilly (Eds.), Measuring up: advances in how we assess reading ability (pp. 119–134). Lanham, MD: Rowman & Littlefield Education.

Monte-Sano, C. (2010). Disciplinary literacy in history: an exploration of the historical nature of adolescents’ writing. Journal of the Learning Sciences, 19, 539–568.

National Assessment Governing Board (2010). Reading framework for the 2011 National Assessment of Educational Progress. Washington, DC: U.S. Department of Education. Retrieved from http://www.nagb.org/publications/frameworks/reading-2011-framework.pdf

National Governors Association, & Council of Chief State School Officers (2010). Common core state standards for English language arts. Washington, DC: Authors. Retrieved from http://www.corestandards.org/assets/CCSSI_ELA%20Standards.pdf

O'Reilly, T., & Sabatini, J. (2013). Reading for understanding: how performance moderators and scenarios impact assessment design (Research Report No. RR-13-31). Princeton, NJ: Educational Testing Service.

Ozuru, Y., Rowe, M., O'Reilly, T., & McNamara, D. S. (2008). Where’s the difficulty in standardized reading tests: the passage or the question? Behavior Research Methods, 40, 1001–1015.

Perfetti, C. A., & Adlof, S. M. (2012). Reading comprehension: a conceptual framework from word meaning to text meaning. In J. P. Sabatini, E. Albro, & T. O’Reilly (Eds.), Measuring up: advances in how we assess reading ability (pp. 3–20). Lanham, MD: Rowman & Littlefield Education.

Poitras, E., & Trevors, G. (2012). Deriving empirically-based design guidelines for advanced learning technologies that foster disciplinary comprehension. Canadian Journal of Learning and Technology, 38, 1–21.

Reynolds, M., Wheldall, K., & Madelaine, A. (2011). What recent reviews tell us about the efficacy of reading interventions for struggling readers in the early years of schooling. International Journal of Disability, Development and Education, 58, 257–286.

Rouet, J.-F., & Britt, M. A. (2011). Relevance processes in multiple document comprehension. In M. T. McCrudden, J. P. Magliano, & G. Schraw (Eds.), Relevance instructions and goal-focusing in text learning (pp. 19–52). Greenwich, CT: Information Age Publishing.

Rupp, A., Ferne, T., & Choi, H. (2006). How assessing reading comprehension with multiple-choice questions shapes the construct: a cognitive processing perspective. Language Testing, 23, 441–474.

Sabatini, J., & O’Reilly, T. (2013). Rationale for a new generation of reading comprehension assessments. In B. Miller, L. Cutting, & P. McCardle (Eds.), Unraveling reading comprehension: behavioral, neurobiological, and genetic components (pp. 100–111). Baltimore, MD: Brookes Publishing.

Sabatini, J., O'Reilly, T., & Deane, P. (2013). Preliminary reading literacy assessment framework: foundation and rationale for assessment and system design (Research Report No. RR-13-30). Princeton, NJ: Educational Testing Service.

Sabatini, J., O’Reilly, T., Halderman, L., & Bruce, K. (2014). Integrating scenario-based and component reading skill measures to understand the reading behavior of struggling readers. Learning Disabilities Research & Practice, 29(1), 36–43.

Schoenbach, R., Greenleaf, C., & Murphy, L. (2012). Engaged academic literacy for all. In Reading for understanding: How Reading Apprenticeship improves disciplinary learning in secondary and college classrooms, 2nd edition (pp. 1–6). San Francisco, CA: Jossey-Bass. Retrieved from http://www.wested.org/online_pubs/read-12-01-sample1.pdf

Scholin, S. E., & Burns, M. (2010). Relationship between pre-intervention data and post-intervention reading fluency and growth: a meta-analysis of assessment data for individual students. Psychology in the Schools, 49, 385–398.

Shanahan, T., & Shanahan, C. (2008). Teaching disciplinary literacy to adolescents: rethinking content-area literacy. Harvard Educational Review, 78, 40–59.

Shanahan, C., Shanahan, T., & Misischia, C. (2011). Analysis of expert readers in three disciplines: history, mathematics, and chemistry. Journal of Literacy Research, 43, 393–429.

Shapiro, A. M. (2004). How including prior knowledge as a subject variable may change outcomes of learning research. American Educational Research Journal, 41, 159–189.

Song, M., & Herman, R. (2010). Critical issues and common pitfalls in designing and conducting impact studies in education: lessons learned from the What Works Clearinghouse (phase I). Educational Evaluation and Policy Analysis, 32, 351–371.

Suggate, S. (2010). Why what we teach depends on when: grade and reading intervention modality moderate effect size. Developmental Psychology, 46, 1556–1579.

Tunmer, W. E., Chapman, J. W., & Prochnow, J. E. (2004). Why the reading achievement gap in New Zealand won’t go away: evidence from the PIRLS 2001 International Study of Reading Achievement. New Zealand Journal of Educational Studies, 39, 127–145.

van den Broek, P., Lorch, R. F., Jr., Linderholm, T., & Gustafson, M. (2001). The effects of readers’ goals on inference generation and memory for texts. Memory & Cognition, 29, 1081–1087.

Vaughn, S., Swanson, E. A., Roberts, G., Wanzek, J., Stillman-Spisak, S. J., Solis, M., & Simmons, D. (2013). Improving reading comprehension and social studies knowledge in middle school. Reading Research Quarterly, 48, 77–93.

Vidal-Abarca, E., Mañá, A., & Gil, L. (2010). Individual differences for self-regulating task-oriented reading activities. Journal of Educational Psychology, 102, 817–826.

Wanzek, J., Vaughn, S., Scammacca, N. K., Metz, K., Murray, C. S., Roberts, G., & Danielson, L. (2013). Extensive reading interventions for students with reading difficulties after grade 3. Review of Educational Research, 83, 163–195.

What Works Clearinghouse (2012). Phonological awareness training (What Works Clearinghouse Intervention Report). U.S. Department of Education, Institute of Education Sciences What Works Clearinghouse. Retrieved from http://files.eric.ed.gov/fulltext/ED533087.pdf

Zygouris-Coe, V. I. (2012). Disciplinary literacy and the common core state standards. Topics in Language Disorders, 32, 35–50.


Acknowledgments

The research reported here was supported by the Institute of Education Sciences, U.S. Department of Education, through grant R305F100005 to Educational Testing Service as part of the Reading for Understanding Research Initiative; and in partnership with WestEd, IMPAQ International, and Empirical Education, Inc. The opinions expressed are those of the authors and do not represent views of the Institute or the U.S. Department of Education, nor the Educational Testing Service. We would also like to thank Cynthia Greenleaf and Ruth Schoenbach of WestEd, and Cheri Fancsali and the team at IMPAQ, for their partnership and support with school sample recruitment in this study; our Cognitively Based Assessment of, for, and as Learning (CBAL™) Initiative partners; the NAEP team for providing access and use of released items; Kelly Bruce for technical support; Jennifer Lentini and Kim Fryer for editorial assistance; and Paul Deane, Jim Carlson, Shelby Haberman, Matthias Von Davier, and anonymous reviewers for their thoughtful reviews and helpful comments.

Author information

Authors and affiliations.

Educational Testing Service Research & Development, 660 Rosedale Road, Princeton, NJ, 08541, USA

Tenaha O’Reilly, Jonathan Weeks, John Sabatini, Laura Halderman & Jonathan Steinberg


Corresponding author

Correspondence to Tenaha O’Reilly .


About this article

O’Reilly, T., Weeks, J., Sabatini, J. et al. Designing Reading Comprehension Assessments for Reading Interventions: How a Theoretically Motivated Assessment Can Serve as an Outcome Measure. Educ Psychol Rev 26, 403–424 (2014). https://doi.org/10.1007/s10648-014-9269-z


Published : 25 May 2014

Issue Date : September 2014

DOI : https://doi.org/10.1007/s10648-014-9269-z


- Reading comprehension

- Outcome measures

- Intervention

- Disciplinary literacy


Evidence-Based Assessment in the Science of Reading


Written by Una Malcolm, M.A., OCT

Introduction: Why Assess

Assessment for learning plays a critical role in informing and driving literacy instruction. Nearly all students are capable of learning to read: while specific numbers vary, estimates indicate that approximately 95% of students can learn to read (Fletcher & Vaughn, 2009). Rigorous, systematic, and explicit instruction that addresses the essential components of literacy, including phonemic awareness, phonics, fluency, vocabulary, and comprehension (National Reading Panel, 2000), is key to allowing all children to reach their full potential. This instruction, often referred to as structured literacy, must go hand in hand with a comprehensive assessment system that allows educators to adjust instruction to the specific needs of students (Spear-Swerling, 2018). Assessment is critical for students with learning disabilities (LDs), both for preventing reading difficulties and for intervening to remediate skill gaps.

Formative Assessment: Answering Questions

Assessment for learning plays a key role in meeting the needs of all students by creating equitable learning environments. Assessment for learning “is used in making decisions that affect teaching and learning in the short-term future” (Growing Success, 2010, p. 30). Multiple research reviews and meta-analyses have found that systematic formative assessment has consistently strong, positive effects on student learning (Fuchs & Fuchs, 1986; Black & Wiliam, 1998; Hattie, 2009) across a variety of factors, including student age, measurement frequency, and disability status. In essence, assessment for learning allows educators to identify where students are and to plan how to get them where they need to be.

Even within assessment for learning, multiple types of assessments exist. Growing Success (2010) distinguishes between formative and diagnostic assessments but emphasizes that “what matters is how the information is used” (p. 31). Assessment answers questions; different types of assessment tools answer different types of questions. In a Response to Intervention model, assessment purposes include universal screening, diagnostic assessments, and progress monitoring; all three of these purposes for assessment answer key questions about shaping instructional environments to meet students’ needs.

1. Screening - Assessment for Learning

The development of skilled reading is a complex process that hinges on the development and integration of cognitive and language skills. Many of these skills can be observed and measured well before children receive any formal reading instruction (Catts et al., 2015). Data indicate that reading trajectories are established early and are very stable (Juel, 1988), so it is critical to identify reading difficulties early in a student’s academic career and provide support. Because reading remediation at an earlier age is more effective than later intervention (Lovett et al., 2017), screening plays an important role in preventing early reading difficulties from worsening over time.

All students should be screened with universal screening measures three times per year (Good et al., 2011). These assessments are very brief, standardized measures that quickly and efficiently identify which children are at risk. Standardized assessments are tests with specific procedures for administration and scoring, such as scripted directions and specific scoring guidelines (Farrall, 2012). Students are assessed on several indicators of early literacy skills appropriate for their age and grade. For example, kindergarten and grade one students may be screened on phonemic awareness, phonics, and decoding, while older students may be screened on oral reading fluency (ORF) and retell for comprehension.

Universal screening measures are widely available and accessible, and many of those currently available are low-cost or free. There may be fees for training or for optional computerized data analysis systems, but many of the testing materials themselves are easy to obtain.

These assessments have established reliability and validity, and scores are interpreted in relation to a benchmark criterion. Benchmark cutscores are not set arbitrarily. Researchers derive them from longitudinal studies that determine the threshold a child must reach to have a strong chance of meeting future reading goals. As a result, a child’s score allows educators to predict future reading performance with a reasonable degree of accuracy. Students who score below the cutscore are at risk and will likely need additional support to meet future goals. For example, a benchmark cutscore on Acadience Reading assessments is the score a child needs to have an 80–90% probability of meeting subsequent reading goals (Good et al., 2011). By comparing a child’s score with the benchmark, educators can quickly and efficiently gauge the risk of future reading difficulty before difficulties emerge or worsen.
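The sketch below makes the idea of a benchmark criterion concrete by classifying one score against two cut points. It is a minimal illustration and is not any publisher’s scoring software. The `Benchmark` class, the three status labels (which echo the “Below” and “Well Below Benchmark” wording in the case study later in this article), and the cut-point values are all hypothetical placeholders, not actual Acadience Reading cutscores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Benchmark:
    """Hypothetical cut points for one measure at one screening period."""
    goal: int      # scores at or above this are considered on track
    risk_cut: int  # scores below this are considered well below benchmark


def classify(score: int, benchmark: Benchmark) -> str:
    """Interpret a screening score relative to research-derived cut points."""
    if benchmark.risk_cut > benchmark.goal:
        raise ValueError("risk_cut must not exceed goal")
    if score >= benchmark.goal:
        return "At or Above Benchmark"
    if score >= benchmark.risk_cut:
        return "Below Benchmark"
    return "Well Below Benchmark"


# Placeholder values for illustration only; these are NOT real cutscores.
example = Benchmark(goal=50, risk_cut=35)
for s in (62, 41, 20):
    print(s, classify(s, example))
```

A score equal to the goal counts as meeting it. This matches the definition above: the benchmark is the score a child needs to reach.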

Screening allows educators to quickly and easily identify students who are at risk. Screening does not, however, reveal the underlying cause of a problem. Screening is akin to a blood pressure or temperature check at a doctor’s office: these are simple, efficient, and cost-effective ways to find problems, but abnormal results require additional diagnostic testing to determine exactly what is wrong.

2. Diagnostic Assessment - Assessment for Learning

Diagnostic assessments allow educators to probe deeper into the learning profiles of students in question. For many students, universal screening three times per year provides all the assessment data necessary to ensure students are making sufficient progress. For students who are at risk, though, careful analysis of reading subskills must occur to differentiate instruction and plan intervention. These assessments allow educators to ascertain which specific skills or knowledge a student has mastered, and which skills or knowledge need to be taught.

If universal screeners are similar to a blood pressure or temperature check to identify problems in a brief and cost-effective manner, diagnostic assessments are similar to more comprehensive blood tests or diagnostic imaging to dig deeper into a problem. The information from these more investigative approaches directly informs treatment, similar to how diagnostic reading assessments allow teachers to differentiate classroom instruction or plan targeted interventions.

Diagnostic assessments are longer and more in-depth than screening assessments and are typically not standardized. Screeners are quick checks of indicators of basic skills, whereas diagnostic assessments can be thought of as inventories of skills that directly inform instruction. Can a child segment two-, three-, or four-phoneme words? Can a child decode words with short vowels or consonant digraphs? Diagnostic assessments allow teachers to differentiate classroom instruction and plan intervention if necessary.

Diagnostic assessments should be selected strategically. The Simple View of Reading (Gough & Tunmer, 1986) holds that reading comprehension, the end goal of reading, is the product of two sets of subskills: word recognition and language comprehension. When selecting diagnostic assessments, teachers can probe both areas of this model to clarify a student’s specific pattern of strengths and needs. When a student struggles with reading comprehension, is this due to difficulties with word recognition, with language comprehension, or with both?
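Gough and Tunmer (1986) express the Simple View as a product, RC = D × LC. For illustration only, the minimal sketch below assumes that both components have been converted to a 0–1 scale. Real diagnostic tools do not report scores this way, and the 0.5 “weak” threshold is hypothetical. The multiplication is the key design point: if either component is near zero, predicted comprehension is near zero no matter how strong the other component is.

```python
from typing import List


def reading_comprehension(decoding: float, language_comprehension: float) -> float:
    """Simple View of Reading: RC = D x LC, with both inputs on a 0-1 scale."""
    for name, value in (("decoding", decoding),
                        ("language_comprehension", language_comprehension)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    return decoding * language_comprehension


def areas_to_probe(decoding: float, language_comprehension: float,
                   threshold: float = 0.5) -> List[str]:
    """List the Simple View components below a hypothetical threshold."""
    weak = []
    if decoding < threshold:
        weak.append("word recognition")
    if language_comprehension < threshold:
        weak.append("language comprehension")
    return weak


# Hypothetical profile: weak decoding, strong listening comprehension.
print(round(reading_comprehension(0.2, 0.9), 2))
print(areas_to_probe(0.2, 0.9))
```

A profile like this one, with strong language comprehension and weak word recognition, points diagnostic follow-up toward phonemic awareness and decoding rather than toward oral language.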

3. Progress Monitoring - Assessment for Learning

With screening and diagnostic data in hand, teachers can differentiate core instruction and/or provide targeted intervention. Progress monitoring assessments allow educators to quickly measure a student’s response to instruction. Instead of waiting until the next benchmark assessment several months later, progress monitoring allows instruction to be adjusted more rapidly. Universal screening measures students’ skill level with grade-level material, whereas progress monitoring is conducted at an individual child’s instructional level to directly measure response to instruction. For example, a grade four child reading at a grade one level would be screened at the beginning, middle, and end of the year with grade four assessments. The same child would be progress monitored with grade one material, however, in order to measure the child’s response to intervention.

Many universal screeners also supply progress monitoring materials. These materials are often very similar to universal screening measures in that they are brief and standardized. When selecting an assessment for progress monitoring, it is important to consider practice effects. Progress monitoring assessments typically have multiple alternate forms so that students are not retested on the same material. Progress monitoring data are usually graphed so that student growth can be compared visually to an aimline, the line connecting a child’s current score to the benchmark goal.
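An aimline is a straight line from the child’s starting score to the benchmark goal. The sketch below computes the expected score for each week and assumes evenly spaced weekly probes. The function name and the example numbers are hypothetical.

```python
from typing import List


def aimline(baseline: float, goal: float, weeks: int) -> List[float]:
    """Expected score at weeks 0..weeks on a straight line from baseline to goal."""
    if weeks <= 0:
        raise ValueError("weeks must be positive")
    weekly_growth = (goal - baseline) / weeks  # slope of the aimline
    return [baseline + weekly_growth * w for w in range(weeks + 1)]


# Hypothetical example: a child starts at 20 and the goal is 50 in 10 weeks.
for week, expected in enumerate(aimline(20, 50, 10)):
    print(f"week {week}: expected {expected:.1f}")
```

Plotting these expected values next to the child’s actual weekly scores produces the visual comparison described above.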

Progress monitoring assessments are purposefully brief. Depending on student age and needs, they may take between one and three minutes. Struggling students need to catch up quickly: Torgesen (2004) describes the “devastating downward spiral” that occurs when students do not receive timely, evidence-based intervention. Struggling readers do not have time for a “wait and see” approach. Weekly or biweekly progress monitoring provides enough data to establish patterns quickly and clearly, so educators can judge whether a child’s rate of progress is strong enough to meet the goal. If it is not, several options can be considered to intensify instruction:

- Increasing frequency or length of support

- Decreasing group size

- Making groups more homogeneous

- Increasing level of explicitness

- Increasing opportunities for practice
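To judge whether the rate of progress is strong enough, one common approach is to fit a least-squares trend line to the progress monitoring scores. The slope of that line is then compared with the weekly growth the aimline requires. The sketch below assumes weekly probes and uses hypothetical names and data. The specific decision rules used in practice vary by assessment system and school team.

```python
from typing import Sequence


def ols_slope(weeks: Sequence[float], scores: Sequence[float]) -> float:
    """Least-squares slope: average score gain per week."""
    n = len(weeks)
    if n != len(scores) or n < 2:
        raise ValueError("need at least two (week, score) pairs")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("weeks must not all be the same")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    return sxy / sxx


def on_track(weeks: Sequence[float], scores: Sequence[float],
             baseline: float, goal: float, total_weeks: int) -> bool:
    """True if the observed trend is at least as steep as the aimline."""
    required_growth = (goal - baseline) / total_weeks
    return ols_slope(weeks, scores) >= required_growth


# Hypothetical data: six weekly probes, baseline 20, goal 50 over 10 weeks.
weeks = [1, 2, 3, 4, 5, 6]
scores = [22, 25, 27, 31, 33, 36]
print(round(ols_slope(weeks, scores), 2))
print(on_track(weeks, scores, baseline=20, goal=50, total_weeks=10))
```

With these numbers, the trend of 2.8 points per week falls short of the 3 points per week the aimline requires. A result like this would prompt the team to consider one of the intensification options listed above.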

To see an example of how the Thunder Bay Catholic DSB uses tier 1, 2, and 3 reading interventions, view the LD@school video The Tiered Approach.

In addition to informing instruction, progress monitoring data provide insight into students’ learning profiles. Research indicates that a child’s response to intervention can give important insight into whether the child may have a learning disability. Patterns of inadequate response to evidence-based instruction, established through progress monitoring data, are key factors to consider when evaluating for a learning disability (Miciak & Fletcher, 2020; Catts & Petscher, 2021).

4. Outcome Evaluation - Assessment of Learning

Outcome assessments measure student achievement. While progress monitoring assessments show if instruction is working, outcome assessments are assessments of learning and identify if instruction worked.

These assessments are typically comprehensive assessments that measure student mastery of provincial curriculum expectations or the specific learning goals outlined. Outcome assessments often occur at the end of a period of learning, including a unit of study, a term, or a school year, for example.

A companion cheat sheet, Evidence-Based Assessment in the Science of Reading: Comparing and Contrasting Assessment Purposes, is available to view and download from LD@school.

Assessment Data and Differentiating Tier 1 Instruction

Patterns in universal screening data can support teachers in differentiating classroom instruction. Instead of forming small groups from reading levels on a running record, educators can use the specific reading skills measured by universal screening to build more homogeneous instructional groups. A grade 2 teacher, for example, could split students into several groups based on their universal screening data:

- Fluent and accurate readers needing continued content and vocabulary instruction to build comprehension

- Students who read accurately but need fluency instruction

- Students in need of word-level decoding and phonics instruction
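The grouping logic above can be expressed as a small decision rule. This sketch assumes that each student’s screening results have already been reduced to two yes/no flags: passage reading accuracy at benchmark and fluency at benchmark. The field names and group labels are hypothetical. Real grouping decisions would also draw on diagnostic data and teacher judgment.

```python
from dataclasses import dataclass
from typing import Dict, List

DECODING = "word-level decoding and phonics"
FLUENCY = "fluency"
COMPREHENSION = "content and vocabulary for comprehension"


@dataclass(frozen=True)
class ScreeningResult:
    name: str
    accurate: bool  # passage reading accuracy at or above benchmark
    fluent: bool    # words correct per minute at or above benchmark


def assign_group(result: ScreeningResult) -> str:
    """Check accuracy first: fluency work assumes accurate word reading."""
    if not result.accurate:
        return DECODING
    if not result.fluent:
        return FLUENCY
    return COMPREHENSION


def build_groups(results: List[ScreeningResult]) -> Dict[str, List[str]]:
    """Map each group label to the names of students assigned to it."""
    groups: Dict[str, List[str]] = {}
    for r in results:
        groups.setdefault(assign_group(r), []).append(r.name)
    return groups


# Hypothetical class list.
roster = [
    ScreeningResult("Student A", accurate=True, fluent=True),
    ScreeningResult("Student B", accurate=True, fluent=False),
    ScreeningResult("Student C", accurate=False, fluent=False),
]
print(build_groups(roster))
```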

Screener data, combined with diagnostic testing for deeper probing, allow more sophisticated and specific differentiation for intervention, instruction, or enrichment, because they focus on specific skills instead of generic reading levels.

Student vs. System Analysis to Strengthen Universal Instruction

One of the strengths of universal screening is that it allows educators to analyze not only individual students’ growth but also the strength of classroom core instruction. If more than 20% of students are below benchmark on universal screening, that is a clear indication that core instruction needs to be strengthened. Prioritizing individual intervention when a large proportion of the class is at risk misses a valuable opportunity to strengthen the universal tier of classroom instruction. With class-wide screening data, teachers are better able to differentiate instruction and potentially implement class-wide interventions, such as Peer-Assisted Learning Strategies (McMaster & Fuchs, 2016). For example, a grade 3 teacher could implement a paired repeated reading routine (Stevens et al., 2017) in response to weak fluency screening data. Instead of problem-solving only at the individual student level, teachers have the data necessary to develop action plans that strengthen core instruction for all students.
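The 20% guideline is a simple proportion check. The minimal sketch below uses hypothetical scores and a placeholder benchmark. Note that “more than 20%” means a class with exactly 20% below benchmark does not trigger the flag.

```python
from typing import Sequence


def proportion_below(scores: Sequence[float], benchmark: float) -> float:
    """Share of students scoring below the benchmark."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(1 for s in scores if s < benchmark) / len(scores)


def strengthen_core(scores: Sequence[float], benchmark: float,
                    threshold: float = 0.20) -> bool:
    """True when more than `threshold` of the class is below benchmark."""
    return proportion_below(scores, benchmark) > threshold


# Hypothetical fall scores for a class of ten, with a placeholder benchmark of 50.
class_scores = [10, 55, 60, 40, 70, 80, 65, 30, 90, 52]
print(proportion_below(class_scores, 50))
print(strengthen_core(class_scores, 50))
```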

To learn more about peer-assisted learning, see LD@school’s article and video Peer-Mediated Learning Approaches.

Oral Reading Fluency and Running Records: Frequently Confused

Oral Reading Fluency assessments are commonly used in both screening and progress monitoring for developing readers. Individual assessments vary, but ORF measures generally involve reading a passage aloud for one minute. Accuracy rates and words correct per minute (WCPM) scores are calculated and interpreted in relation to a benchmark score. ORF assessments are highly correlated with reading comprehension (Fuchs et al., 2001) and serve as a proxy for this critical outcome skill.
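The arithmetic behind ORF scores is straightforward. Words correct equals words attempted minus errors, WCPM scales that count to one minute, and accuracy is words correct as a percentage of words attempted. The sketch assumes errors have already been scored. Which miscues count as errors is set by each assessment’s own scoring rules and is not modeled here. The optional `seconds` argument, which prorates a reading that ends early, is likewise an assumption; check the assessment manual before using it.

```python
def orf_scores(words_attempted: int, errors: int, seconds: float = 60.0):
    """Return (words correct per minute, accuracy %) for one timed reading."""
    if words_attempted <= 0 or seconds <= 0:
        raise ValueError("words_attempted and seconds must be positive")
    if not 0 <= errors <= words_attempted:
        raise ValueError("errors must be between 0 and words_attempted")
    words_correct = words_attempted - errors
    wcpm = words_correct * 60.0 / seconds
    accuracy = 100.0 * words_correct / words_attempted
    return wcpm, accuracy


# Hypothetical one-minute reading: 60 words attempted, 6 errors.
wcpm, accuracy = orf_scores(60, 6)
print(f"WCPM: {wcpm:.0f}, accuracy: {accuracy:.0f}%")
```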

ORF assessments are often confused with running record assessments, which are commonly used in literacy programs. Both involve reading connected passages. Running records differ, however, in that a text “level” is typically assigned as a student’s instructional or independent level based on the accuracy percentage, and fluency is usually judged through teacher observation or rubrics. Running records lack the characteristics needed in a screener. They are not brief, reliable, and valid indicators of key early literacy skills, and their scores cannot be interpreted in relation to a benchmark criterion derived from predictive probabilities. In fact, Parker et al. (2015) found that a commonly used running record assessment was only about 54% accurate in predicting performance on a district-provided criterion reading test, in contrast to the established technical validity and reliability of ORF measures.

Assessment for Learning: A Case Study

The following case study provides an example of how reading assessment can be used in the classroom to identify at-risk students, pinpoint the skills these students struggle with, and determine the appropriate intervention to get students back on track.

Maya is in grade two. As a part of the beginning-of-year screening process, her teacher administers a brief screening assessment that yields this data about Maya’s reading:

Nonsense Word Fluency:

- Correct Letter Sounds (phonics): Below Benchmark

- Whole Words Read (decoding): Well Below Benchmark

Oral Reading Fluency:

- Accuracy (passage reading accuracy): Below Benchmark

- Words Correct (passage reading fluency): Well Below Benchmark

Maya’s teacher, Ms. Kaur, recognizes that Maya is struggling and is not on track to meet future reading goals. Ms. Kaur realizes that Maya is having difficulty reading the ORF passage accurately and smoothly since she is not an accurate and fluent word reader. Based on these screening data, she decides to dig deeper with some diagnostic assessments to help her differentiate instruction. She decides to give Maya a decoding assessment to understand which specific phonics patterns she has mastered, and which patterns she needs to be taught. She learns that Maya hasn’t yet mastered short vowel sounds. She also uses a phonemic awareness assessment to check her blending and segmenting, which shows skill gaps as well. Ms. Kaur realizes that Maya is having difficulty with word recognition skills. To check on her oral language skills, Ms. Kaur reads a story to Maya and asks her oral comprehension questions. Maya is easily able to answer questions about what she hears, indicating to Ms. Kaur that Maya’s reading difficulties appear to be stemming from decoding and word recognition difficulties and not language comprehension difficulties.

Armed with this information, Ms. Kaur builds her small reading groups. Instead of using reading levels to group students, Ms. Kaur uses reading skills. She groups Maya with other students needing phonemic awareness and short vowel instruction.

As Ms. Kaur uses these screening and diagnostic data to plan her groups and instruction, she also monitors Maya’s progress with weekly Nonsense Word Fluency assessments. Each progress monitoring assessment takes one minute, so Ms. Kaur knows this is a quick and efficient way to make sure Maya is responding to instruction without waiting until the mid-year screening. If Maya is not making sufficient progress toward the benchmark score, her instruction may need to be intensified so she can reach the benchmark goal. Ms. Kaur graphs Maya’s progress monitoring data along with the aimline, which shows the path between Maya’s current score and the benchmark. Ms. Kaur and her grade-level team can then quickly judge whether Maya is progressing fast enough to close the gap.

About the author:

Una Malcolm is a doctoral student in Reading Science and also has a Master’s degree in Child Study and Education. She is a member of the Ontario College of Teachers. Una has trained extensively in several evidence-based teaching methods, such as Direct Instruction, Phono-Graphix and Lindamood-Bell. She is an Acadience Reading K-6 (formerly DIBELS Next) Mentor.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

Catts, H. W., Nielsen, D. C., Bridges, M. S., Liu, Y. S., & Bontempo, D. E. (2015). Early identification of reading disabilities within an RTI framework. Journal of Learning Disabilities, 48(3), 281–297.

Catts, H. W., & Petscher, Y. (2021). A cumulative risk and resilience model of dyslexia. Journal of Learning Disabilities. https://doi.org/10.1177/00222194211037062

Eunice Kennedy Shriver National Institute of Child Health and Human Development, NIH, DHHS. (2000). Report of the National Reading Panel: Teaching Children to Read: Reports of the Subgroups. U.S. Government Printing Office.

Farrall, M. L. (2012). Reading assessment: Linking language, literacy, and cognition. John Wiley & Sons. https://doi.org/10.1002/9781118092668

Fletcher, J. M., & Vaughn, S. (2009). Response to intervention: Preventing and remediating academic difficulties. Child Development Perspectives, 3(1), 30–37. https://doi.org/10.1111/j.1750-8606.2008.00072.x

Fuchs, L. S., & Fuchs, D. (1986). Effects of systematic formative evaluation: A meta-analysis. Exceptional Children, 53(3), 199–208.

Fuchs, L., Fuchs, D., Hosp, M., & Jenkins, J. (2001). Oral reading fluency as an indicator of reading competence. Scientific Studies of Reading, 5(3), 239–256.

Good, R. H., Kaminski, R. A., Cummings, K. D., Dufour-Martel, C., Petersen, K., Powell-Smith, K. A., Stollar, S., & Wallin, J. (2011). Acadience Reading K-6 Assessment Manual. Acadience Learning Inc.

Gough, P. B., & Tunmer, W. E. (1986). Decoding, reading, and reading disability. Remedial and Special Education, 7(1), 6–10. https://doi.org/10.1177/074193258600700104

Hattie, J. (2009). Visible learning. Routledge.

Juel, C. (1988). Learning to read and write: A longitudinal study of 54 children from first through fourth grades. Journal of Educational Psychology, 80(4), 437–447. https://doi.org/10.1037/0022-0663.80.4.437

Lovett, M., Frijters, J. C., Wolf, M., Steinbach, K. A., Sevcik, R. A., & Morris, R. D. (2017). Early intervention for children at risk for reading disabilities: The impact of grade at intervention and individual differences on intervention outcomes. Journal of Educational Psychology, 109(7), 889–914. https://doi.org/10.1037/edu0000181

McMaster, K. L., & Fuchs, D. (2016). Classwide intervention using peer-assisted learning strategies. In S. R. Jimerson, M. K. Burns, & A. M. VanDerHeyden (Eds.), Handbook of response to intervention: The science and practice of multi-tiered systems of support (pp. 253–268). Springer. https://doi.org/10.1007/978-1-4899-7568-3_15

Miciak, J., & Fletcher, J. M. (2020). The critical role of instructional response for identifying dyslexia and other learning disabilities. Journal of Learning Disabilities, 53(5), 343–353. https://doi.org/10.1177/0022219420906801

Ontario Ministry of Education. (2010). Growing Success: Assessment, evaluation and reporting in Ontario's schools: Covering grades 1 to 12. Ministry of Education.

Parker, D. C., Zaslofsky, A. F., Burns, M. K., Kanive, R., Hodgson, J., Scholin, S. E., & Klingbeil, D. A. (2015). A brief report of the diagnostic accuracy of oral reading fluency and reading inventory levels for reading failure risk among second and third grade students. Reading & Writing Quarterly, 31(1), 56–67. https://doi.org/10.1080/10573569.2013.857970

Spear-Swerling, L. (2018). Structured literacy and typical literacy practices: Understanding differences to create instructional opportunities. Teaching Exceptional Children, 51(3), 201–211.

Stevens, E. A., Walker, M. A., & Vaughn, S. (2017). The effects of reading fluency interventions on the reading fluency and reading comprehension performance of elementary students with Learning Disabilities: A synthesis of the research from 2001 to 2014. Journal of Learning Disabilities, 50(5), 576–590. https://doi.org/10.1177/0022219416638028

Torgesen, J. K. (2004). Avoiding the devastating downward spiral: The evidence that early intervention prevents reading failure. American Educator, 28, 6–19.


- Open access

- Published: 12 November 2022

The impacts of performance-based assessment on reading comprehension achievement, academic motivation, foreign language anxiety, and students’ self-efficacy

- Tahereh Heydarnejad (ORCID: 0000-0003-0011-9442),

- Fariba Tagavipour,

- Indrajit Patra (ORCID: 0000-0001-6704-2676) &

- Ayman Farid Khafaga (ORCID: 0000-0002-9819-2973)

Language Testing in Asia, volume 12, Article number: 51 (2022)


The types of assessment tasks affect learners’ psychological well-being and the process of learning. For years, educationalists have sought accurate and convenient approaches to assessing learners efficiently. Despite the significant role of performance-based assessment (PBA) in second/foreign language (L2) learning processes, few empirical studies have explored how PBA affects reading comprehension achievement (RCA), academic motivation (AM), foreign language anxiety (FLA), and students’ self-efficacy (S-E). To fill this gap in the research, the current study gauged the impact of PBA on RCA, AM, FLA, and S-E in an English as a foreign language (EFL) context. A sample of 88 intermediate EFL learners was randomly divided into an experimental group (EG) and a control group (CG). Over the 16 sessions of the study, the learners in the CG (N = 43) received traditional assessment, whereas the learners in the EG (N = 45) received assessment modified according to the underlying theories of PBA. A one-way multivariate analysis of variance (MANOVA) indicated that the learners in the EG outperformed their counterparts in the CG. The results highlight the significant contribution of PBA to fostering RCA, AM, and S-E beliefs and to reducing FLA. The implications of this study may benefit language learners, teachers, curriculum designers, and policy makers by providing opportunities for further practice of PBA.
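For readers unfamiliar with one-way MANOVA, the sketch below computes Wilks’ lambda, one of the standard MANOVA test statistics, as det(W) / det(T). Here W is the pooled within-group sums-of-squares-and-cross-products (SSCP) matrix and T is the total SSCP matrix. Values near 1 mean the group mean vectors barely differ, and values near 0 mean they differ substantially. Several assumptions apply. The data are hypothetical and not the study’s. The abstract does not say which MANOVA statistic the authors reported. Converting lambda to an F statistic and p-value (for example, via Rao’s approximation) is not shown. In this study, each row would hold one learner’s four outcome scores (RCA, AM, FLA, S-E); the example uses two outcomes for brevity.

```python
def mean_vector(rows):
    """Column means of a list of equal-length score vectors."""
    n = len(rows)
    return [sum(row[j] for row in rows) / n for j in range(len(rows[0]))]


def sscp(rows, center):
    """Sums of squares and cross-products of deviations from `center`."""
    p = len(center)
    m = [[0.0] * p for _ in range(p)]
    for row in rows:
        d = [row[j] - center[j] for j in range(p)]
        for i in range(p):
            for j in range(p):
                m[i][j] += d[i] * d[j]
    return m


def det(a):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in a]
    n = len(m)
    result = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            result = -result  # a row swap flips the sign
        result *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n):
                m[r][c] -= factor * m[col][c]
    return result


def wilks_lambda(groups):
    """Wilks' lambda = det(W) / det(T) for a one-way MANOVA."""
    all_rows = [row for g in groups for row in g]
    p = len(all_rows[0])
    within = [[0.0] * p for _ in range(p)]
    for g in groups:
        g_sscp = sscp(g, mean_vector(g))  # deviations from the group mean
        for i in range(p):
            for j in range(p):
                within[i][j] += g_sscp[i][j]
    total = sscp(all_rows, mean_vector(all_rows))  # deviations from the grand mean
    det_total = det(total)
    if det_total == 0.0:
        raise ValueError("total SSCP matrix is singular")
    return det(within) / det_total


# Hypothetical scores on two outcomes for two small groups.
control = [[1, 2], [3, 4], [2, 5]]
experimental = [[11, 2], [13, 4], [12, 5]]
print(round(wilks_lambda([control, experimental]), 3))
```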

Introduction

In educational settings, teaching and assessment are two sides of the same coin. The way teachers teach implicitly and explicitly affects assessment; that is, the mode of assessment is associated with the teaching approaches and procedures employed. This close relationship calls for educational and psychological research to consider practical approaches to the development of curriculum, instruction, and assessment in all fields of study, and the EFL context is no exception. In the domain of assessment, traditional assessment was used for years despite its various drawbacks. In reaction to these deficiencies, alternatives were proposed (Xu et al., 2022; Yan, 2021; Yan & Brown, 2017). As Wu et al. (2021) put it, learners’ performance should be considered in a social context, which traditional assessment neglected. In the same vein, Koné (2021) argued that learners’ progress should be evaluated through their cooperation. PBA, as opposed to traditional assessment (TA), concentrates not only on the product of learning but also on the process of learning.

Reading comprehension refers to the process of applying various meaning-making procedures to infer the implied meaning of a text (Rezai, Namaziandost, Miri, & Kumar, 2022; Rezai, Namaziandost, & Rahimi, 2022; Paris, 2005). The techniques used to teach reading are closely related to the techniques used to evaluate reading comprehension (Nation, 2009). The literature provides evidence that traditional standardized objective achievement tests relying on multiple-choice, matching, and true/false items are unsuitable and invalid for evaluating learners’ academic competencies (Azizi et al., 2022; O'Malley & Valdez Pierce, 1996; Michael, 1993; Nation, 2009). Traditional reading comprehension tests emphasized lower-order, discrete, nonintegrated decoding skills, discrete-point grammar, and decontextualized vocabulary items, which cannot provide a valid and reliable picture of learners’ actual competencies (Jamali Kivi et al., 2021; Namaziandost et al., 2022). To compensate for these shortcomings, PBA has been adopted as a substitute in educational contexts.

Aside from the form of assessment, psychological factors may also influence the assessment process and its final results in language learning (Cao, 2022; Fong, 2022; Howard et al., 2021; Khajavy et al., 2021). Among psychological factors, AM stands out because it supports and enhances learners’ academic functioning and success (Caldarella et al., 2021; Martin et al., 2017; Peng, 2021). Generally, motivation is considered a driving force that shapes individuals’ behavior (Brophy, 1983). In education, student motivation, typically known as AM, is associated with students’ involvement in the process of learning (Hiver & Al-Hoorie, 2020). AM inspires learners to “make certain academic decisions, participate in classroom activities, and persist in pursuing the demanding process of learning” (Dörnyei & Ushioda, 2009, p. 2). That is, student AM pertains to students’ initial drive for launching and sustaining the prolonged and tedious learning process (Ushioda, 2008). In language learning, Peng (2021) defined language learners’ AM as the extent to which they strive to learn a new language and engage in the process of learning.

Among student-related constructs, anxiety is one of the most commonly experienced unpleasant emotions among learners (Burić & Frenzel, 2019; Shafiee Rad & Jafarpour, 2022), particularly among foreign language learners (Khajavy et al., 2018; Khajavy et al., 2021). Anxiety is a subjective state of fear and apprehension that triggers rapid heart rate, hyperventilation, and sweating (Eysenck, 1992). According to Oteir and Al-Otaibi (2019), individuals feel anxious when they experience powerlessness in the face of an expected danger. In this regard, language anxiety is defined as situation-specific anxiety, encompassing the self-perceptions, beliefs, feelings, and behaviors experienced in language learning classrooms (Horwitz et al., 1986; Liu et al., 2021; Sutarto Dwi Hastuti et al., 2022).

S-E is another student-attributed construct, which refers to “an individual's belief in his or her capacity to execute behaviors necessary to produce specific performance attainments” (Bandura, 1982, p. 122). Simply put, S-E reflects confidence in one’s ability to exert control over one’s own motivation, behavior, and social environment. Previous studies have shown that S-E is associated with positive psychological constructs such as L2 grit (Zheng et al., 2022; Shabani et al., 2022), students’ academic achievement (Ma, 2022; Vadivel et al., 2022), critical thinking (Li et al., 2022), and inter- and intra-relationships (Martin & Mulvihill, 2019; Vadivel & Beena, 2019). Academic S-E refers to “one’s confidence in his ability to successfully perform pro-academic self-regulatory behaviors- the degree to which students metacognitively, motivationally, and behaviorally regulate their learning process” (Gore, 2006, p. 92). Learners’ S-E influences their choice of tasks and their involvement in completing them (Olivier et al., 2018). According to Lai and Hwang (2016), efficacious learners show positive attitudes toward learning and attribute their failures to insufficient effort rather than to low ability.

Given the potent role of assessment in successful education, it is worthwhile to study the factors that lead to its effective implementation. Yet there is a dearth of literature on effective and conventional assessment, particularly in the EFL context, and more research is needed to fill this gap. PBA is one constructive form of assessment that can contribute to learners’ academic achievement. Despite the crucial role of PBA in RCA, AM, FLA, and S-E beliefs, only a few empirical studies have been conducted in this regard, and, to the best of our knowledge, no study has investigated these variables simultaneously in the EFL context. Consequently, less is known about how PBA could facilitate language learning, enhance learners’ motivation and S-E, and decrease FLA. With this in mind, the present study aims to explore the impact of performance-based assessment on RCA, AM, FLA, and S-E beliefs in the Iranian EFL context. Accordingly, the following research questions were put forth:

RQ1: Does PBA have any significant effect on EFL learners’ RCA?

RQ2: Does PBA have any significant effect on EFL learners’ AM?

RQ3: Does PBA have any significant effect on EFL learners’ FLA?

RQ4: Does PBA have any significant effect on EFL learners’ S-E beliefs?

Based on the research questions, the following null hypotheses could be formulated:

H01: PBA does not have any significant effect on EFL learners’ RCA.

H02: PBA does not have any significant effect on EFL learners’ AM.

H03: PBA does not have any significant effect on EFL learners’ FLA.

H04: PBA does not have any significant effect on EFL learners’ S-E beliefs.

Review of the related literature

Performance-based assessment (PBA)

The origin of TA is the behaviorist assumption that macro skills should be evaluated individually and sequentially (Salma & Prastikawati, 2021). Accordingly, closed questions with only one possible answer were used to assess students’ progress. PBA, by contrast, is supported by social-constructivist theory, which holds that assessment is intertwined with all the procedures involved in teaching and learning. From this perspective, assessment should be designed around authentic tasks that incorporate student self-assessment and feedback (Shepard, 2000; Yan, 2021). Another theoretical foundation for PBA is Vygotsky’s sociocultural theory, which describes learning as a social process and emphasizes the role of social interaction and socially mediated communication in enhancing learning (Lightbown & Spada, 2006; Wang, 2009).

Over the years, TA has prevailed in measuring students’ skills, placing so much emphasis on learning outcomes that it overlooks learners’ actual competence (Alderson et al., 2017). Typically, TA involves multiple-choice, fill-in-the-blank, true-false, matching, and short-answer items, as well as information recall. By contrast, the advantage of performance-based assessment lies in its potential to significantly affect the learning process, in which learners’ active participation is emphasized (Koné, 2021). That is, performance assessment focuses on observing and evaluating learners’ progress in action and on action (Fetsco & McClure, 2005).

In Koné’s (2021) words, PBA asks learners to activate their knowledge and skills from various domains to accomplish the processes a task requires. Taking a similar path, Griffith and Lim (2012) describe PBA as an engaging tool that encourages learners to apply their prior knowledge and abilities to solve a task. PBA is designed to put learners in situations where they practice higher-order thinking skills such as analysis and synthesis (Herrera et al., 2013). Furthermore, PBA can reveal how authentically learners have mastered the materials (Salma & Prastikawati, 2021) and provides meaningful information about learners’ actual competencies (Gallardo, 2020).

Academic motivation (AM)

AM is a critical element of students’ psychological well-being and influences the way they behave. The concept refers to learners’ desire to engage with academic subjects, which shapes their behavior, their attitudes toward learning, and their effort in the face of difficulties (Abdollahi et al., 2022; Koyuncuoğlu, 2021). Brophy (1983) classified student AM into state motivation and trait motivation. State motivation captures learners’ inclination toward a particular subject (Guilloteaux & Dörnyei, 2008), whereas trait motivation refers to learners’ general attitude toward the learning process (Csizér & Dörnyei, 2005). According to Trad et al. (2014), trait motivation is static, whereas state motivation is dynamic and may change. Different factors may influence state motivation, such as the learning atmosphere and course content (Hiver & Al-Hoorie, 2020), as well as teachers’ personalities and their relationships with learners (Dörnyei, 2020; Kolganov et al., 2022).

Self-determination theory (SDT), introduced by Deci and Ryan (1985), is a prominent theory for explaining AM. SDT distinguishes three types of motivation: (1) intrinsic motivation, (2) extrinsic motivation, and (3) amotivation. Extrinsic motivation concerns engaging in activities to obtain a reward or avoid a punishment. In this regard, Deci and Ryan (2020) considered introjected regulation, identified regulation, and integrated regulation as three kinds of extrinsic motivation. Intrinsic motivation stems from innate interest and the inherent satisfaction of pursuing an activity. Amotivation, on the other hand, refers to a lack of motivation to undertake an activity or engage in the learning process (Deci & Ryan, 2000; Vadivel et al., 2021). Both intrinsic and extrinsic motivation influence students’ AM (Al-Hoorie et al., 2022; Froiland & Oros, 2014). When pupils are highly motivated, they persist and enthusiastically engage in the learning process (Martin, 2013). AM even sustains learners through the hardships they may encounter along the way (Howard et al., 2021). Situated expectancy-value theory (SEVT) is another theory that focuses on AM (Eccles & Wigfield, 2020). It considers contextual determinants of students’ motivational perceptions, such as socializing agents and environmental and cultural influences, which shape AM.

A plethora of recent studies have highlighted the significant role of teacher-related factors, such as closeness (e.g., Liu, 2021; Zheng, 2021), personality traits (Khalilzadeh & Khodi, 2018), confirmation (Shen & Croucher, 2018), and teaching style (Doménech-Betoret & Gómez-Artiga, 2014), in improving learners’ AM. Additionally, Peng (2021) found that EFL teachers’ communication behaviors could foster learners’ AM and engagement. In another study, Koné (2021) confirmed that learners’ motivational and emotional states were significant to the success of a performance-based assessment project. In the same line of inquiry, Arias et al. (2022) examined the relationship between emotional intelligence and AM and their contributions to learners’ well-being; their findings revealed a strong correlation between these two variables. From another perspective, Cao (2022) discussed the mediating roles of AM and L2 enjoyment in learners’ willingness to communicate in the second language.

Foreign language anxiety (FLA)

Rodríguez and Abreu (2003) define foreign language anxiety as a situation-specific phenomenon caused by learning a language in a formal setting, especially when learners appraise their communicative competence in that language as low. In the same vein, Horwitz et al. (1986) proposed three components of foreign language anxiety: communication apprehension, test anxiety, and fear of negative evaluation. The first component, communication apprehension, refers to anxiety about interacting with others, communicating orally, or comprehending spoken language. Test anxiety, the second component, stems from a fear of failing an examination. The third component, fear of negative evaluation, refers to apprehension about others’ evaluations and the avoidance of situations that may lead others to evaluate one negatively.

Attentional control theory (ACT) explains why anxiety hinders learners’ academic achievement (Eysenck et al., 2007). ACT originated from the processing efficiency theory (PET) of Eysenck and Calvo (1992) and posits that anxiety impairs attentional control by drawing attention toward threat-related stimuli. Both external and internal causes may trigger students’ anxiety. According to ACT, anxious learners’ high levels of worry and low self-confidence may account for their poor performance (Eysenck et al., 2007). Various sources may evoke FLA. According to Alamer and Almulhim (2021), learners’ views of their language aptitude, their personality traits, their classroom experiences, and the level of task difficulty may trigger anxiety. From another perspective, Young (1991) identified the student, the teacher, and instructional practice as potential sources of language anxiety. According to Brown et al. (2001), students’ personality type (introversion vs. extroversion) may also be among the causes of anxiety. As Cassady (2010a) noted, academic anxiety is a general term for a group of anxieties that students experience in the academic domain, and these anxieties (e.g., test anxiety, math anxiety, foreign language anxiety, and science anxiety) interfere with students’ learning. The focus of the present study is FLA.

The literature on FLA and its association with other student-related factors is quite rich. For instance, reciprocal relationships between FLA and the following student-related factors have been confirmed: learners’ attitudes toward language acquisition (Horwitz et al., 1986), test performance (Cassady, 2010b; Covington et al., 1986), language performance (Zheng & Cheng, 2018), and AM (Omidvar et al., 2013), with anxiety and social support also found to predict AM during COVID-19 (Camacho et al., 2021). Furthermore, some studies of foreign/second language learning examine skill-based anxiety and its role in language learning; speaking anxiety (Çağatay, 2015; Prentiss, 2021), listening anxiety (Zhang, 2013), reading anxiety (Hamada & Takaki, 2021a, 2021b), and writing anxiety (Zhang, 2019) have all been explored in recent years. These studies confirmed that FLA hinders language acquisition or performance. Using structural equation modeling, Fathi et al. (2021) documented that FLA and grit could predict learners’ willingness to communicate (WTC) in the EFL context. Khajavy et al. (2021) reached similar findings, concluding that EFL learners’ FLA, WTC, and enjoyment were interrelated: WTC and enjoyment were positively correlated, whereas the relationship between FLA and WTC was significantly negative.

Self-efficacy (S-E)