Web Scraping Python Tutorial – How to Scrape Data From A Website

Python is a beautiful language to code in. It has a great package ecosystem, there's much less noise than you'll find in other languages, and it is super easy to use.

Python is used for a number of things, from data analysis to server programming. And one exciting use-case of Python is Web Scraping.

In this article, we will cover how to use Python for web scraping. We'll also work through a complete hands-on classroom guide as we proceed.

Note: We will be scraping a webpage that I host, so we can safely learn scraping on it. Many companies do not allow scraping on their websites, so this is a good way to learn. Just make sure to check before you scrape.

Introduction to Web Scraping classroom

If you want to code along, you can use this free codedamn classroom that consists of multiple labs to help you learn web scraping. This will be a practical hands-on learning exercise on codedamn, similar to how you learn on freeCodeCamp.

In this classroom, you'll be using this page to test web scraping: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

This classroom consists of 7 labs, and you'll solve a lab in each part of this blog post. We will be using Python 3.8 + BeautifulSoup 4 for web scraping.

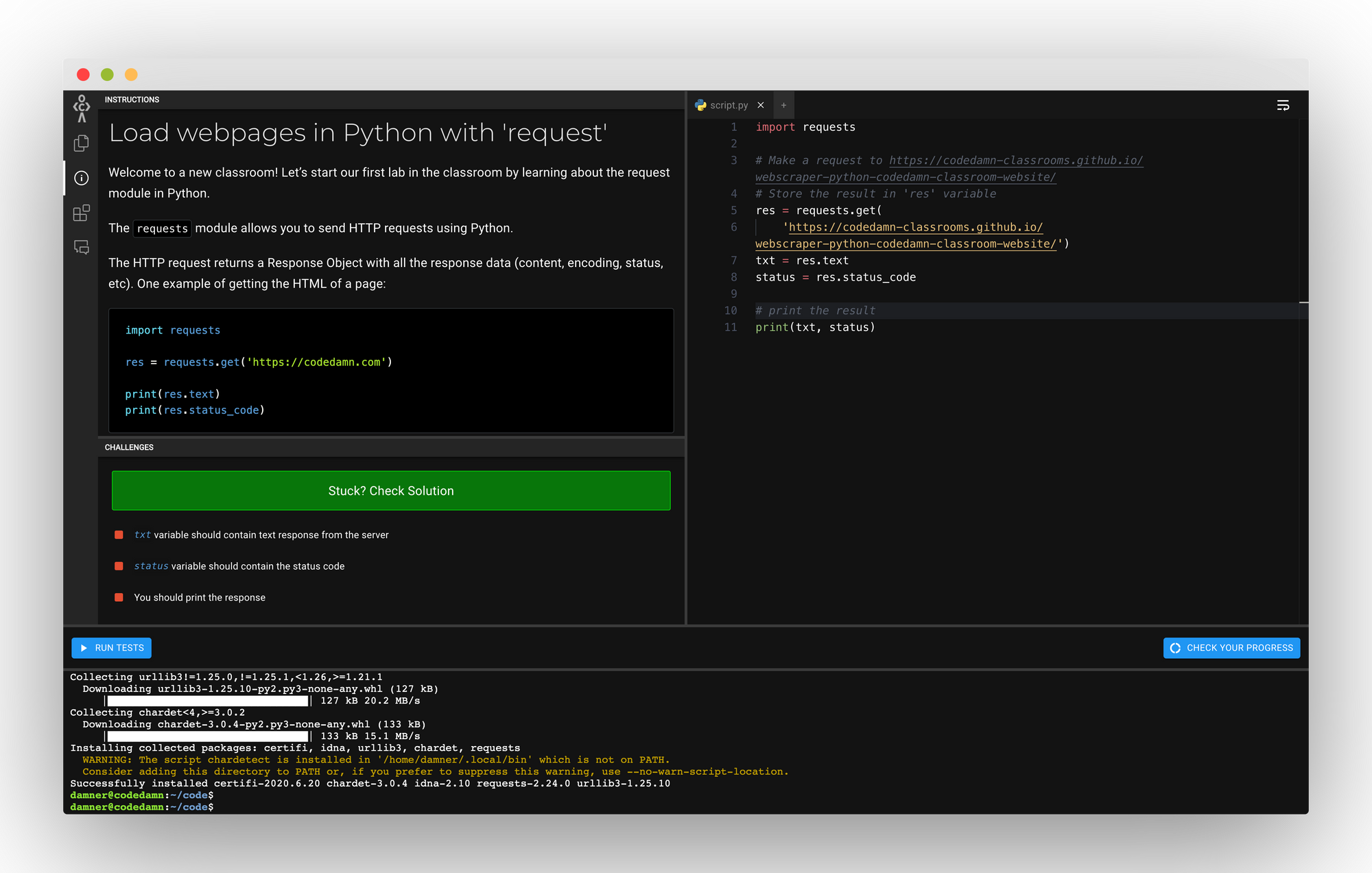

Part 1: Loading Web Pages with 'requests'

This is the link to this lab.

The requests module allows you to send HTTP requests using Python.

The HTTP request returns a Response Object with all the response data (content, encoding, status, and so on). One example of getting the HTML of a page:
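The example code that originally followed here is missing. As a minimal sketch, the snippet below fetches a page with `requests.get` and collects a few fields from the returned `Response` object. The helper names `fetch` and `summarize` are our own and are not part of the `requests` API.

```python
import requests

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def fetch(url: str, timeout: float = 10.0) -> requests.Response:
    """Send an HTTP GET request and return the Response object."""
    # A timeout keeps the script from hanging on an unresponsive server.
    return requests.get(url, timeout=timeout)


def summarize(response: requests.Response) -> dict:
    """Pull out a few Response fields that scrapers typically need."""
    return {
        "status": response.status_code,  # e.g. 200 for OK, 404 for Not Found
        "encoding": response.encoding,  # character set taken from the headers
        "content_type": response.headers.get("Content-Type"),
        "html": response.text,  # body decoded to a str using the encoding
    }
```

Calling `summarize(fetch(URL))` on a reachable page should report a `status` of 200 along with the decoded HTML.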

Passing requirements:

- Get the contents of the following URL using requests module: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Store the text response (as shown above) in a variable called txt

- Store the status code (as shown above) in a variable called status

- Print txt and status using print function

Once you understand what is happening in the code above, it is fairly simple to pass this lab. Here's the solution to this lab:
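The lab's original solution code is not reproduced in this copy. One way to meet the passing requirements is sketched below. It wraps the steps in a function (`solve_part1` is our own name) so it can be tested without a network connection; the lab itself expects `txt` and `status` as top-level variables, so you may prefer to inline the body.

```python
import requests

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def solve_part1(url: str = URL):
    """Fetch the lab page, print its HTML and status code, and return both."""
    res = requests.get(url, timeout=10)
    txt = res.text  # the page's HTML as a string
    status = res.status_code  # the numeric HTTP status code
    print(txt, status)
    return txt, status
```

Running `txt, status = solve_part1()` fetches the page and prints both values.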

Let's move on to part 2 now where you'll build more on top of your existing code.

Part 2: Extracting title with BeautifulSoup

In this whole classroom, you’ll be using a library called BeautifulSoup in Python to do web scraping. Some features that make BeautifulSoup a powerful solution are:

- It provides a lot of simple methods and Pythonic idioms for navigating, searching, and modifying a DOM tree. It doesn't take much code to write an application.

- Beautiful Soup sits on top of popular Python parsers like lxml and html5lib, allowing you to try out different parsing strategies or trade speed for flexibility.

In practice, BeautifulSoup can parse almost any HTML or XML document you give it, including badly formed markup.

Here’s a simple example of BeautifulSoup:
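The example code is missing here. A minimal offline sketch follows: instead of a live page, it parses a small made-up HTML string, so it runs without network access.

```python
from bs4 import BeautifulSoup

# A small, made-up HTML document used in place of a downloaded page.
html = """
<html>
  <head><title>Example Store</title></head>
  <body><h1>Welcome</h1></body>
</html>
"""

# "html.parser" is Python's built-in parser; lxml or html5lib can be
# swapped in if they are installed.
soup = BeautifulSoup(html, "html.parser")

page_title = soup.title.text  # the text inside <title>
print(page_title)  # Example Store
```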

Passing requirements:

- Use the requests package to get the title of the URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Use BeautifulSoup to store the title of this page into a variable called page_title

Looking at the example above, you can see that once you feed page.content into BeautifulSoup, you can start working with the parsed DOM tree in a very Pythonic way. The solution for the lab would be:
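A sketch of a solution for this lab, assuming the lab URL. `get_page_title` is a hypothetical helper name; the lab itself expects the result in a top-level `page_title` variable.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def get_page_title(url: str = URL) -> str:
    """Download a page and return the text of its <title> element."""
    page = requests.get(url, timeout=10)
    # Feed the raw bytes to BeautifulSoup; it detects the encoding itself.
    soup = BeautifulSoup(page.content, "html.parser")
    return soup.title.text
```

`page_title = get_page_title()` then stores the title as the lab requires.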

This was also a simple lab where we had to change the URL and print the page title. This code would pass the lab.

Part 3: Soup-ed body and head

In the last lab, you saw how you can extract the title from the page. It is equally easy to extract out certain sections too.

You also saw that you have to call .text on these to get the string, but you can print them without calling .text too, and it will give you the full markup. Try to run the example below:
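The example referred to here is not included in this copy. The sketch below uses a made-up HTML string to show the difference between printing a Tag and printing its `.text`:

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

html = "<html><head><title>Demo</title></head><body><p>Hello</p></body></html>"
soup = BeautifulSoup(html, "html.parser")

page_title = soup.title  # the <title> Tag itself, not a string
page_body = soup.body
page_head = soup.head

print(page_title)  # <title>Demo</title>  (full markup, because no .text)
print(page_title.text)  # Demo
print(type(page_body))  # <class 'bs4.element.Tag'>
```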

Let's take a look at how you can extract out body and head sections from your pages.

Passing requirements:

- Repeat the experiment with the URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

- Store page title (without calling .text) of URL in page_title

- Store body content (without calling .text) of URL in page_body

- Store head content (without calling .text) of URL in page_head

When you print page_body or page_head, they look like strings. But if you run print(type(page_body)), you'll see that it is actually a BeautifulSoup Tag object (bs4.element.Tag), not a string; printing it simply renders its markup.

The solution to this lab is simple, based on the code above:
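A possible solution sketch, assuming the lab URL. `solve_part3` is our own helper name; its three return values correspond to `page_title`, `page_body`, and `page_head`.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def solve_part3(url: str = URL):
    """Return the <title>, <body>, and <head> Tags of the lab page."""
    page = requests.get(url, timeout=10)
    soup = BeautifulSoup(page.content, "html.parser")
    page_title = soup.title  # no .text: keep the full markup
    page_body = soup.body
    page_head = soup.head
    return page_title, page_body, page_head
```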

Part 4: select with BeautifulSoup

Now that you have explored some parts of BeautifulSoup, let's look at how you can select DOM elements with BeautifulSoup methods.

Once you have the soup variable (as in the previous labs), you can call .select on it, which takes a CSS selector and returns the matching elements. That is, you can reach down the DOM tree just as you would select elements with CSS. Let's look at an example:
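The example code is missing here. As a stand-in, this sketch runs `.select` against a made-up HTML snippet (the `card` and `name` class names are invented) and takes the first match with `[0]`:

```python
from bs4 import BeautifulSoup

html = """
<div class="card">
  <h2 class="name">First item</h2>
  <h2 class="name">Second item</h2>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# .select takes a CSS selector and always returns a list of matching Tags.
names = soup.select("div.card h2.name")
first_name = names[0].text  # take the first match, then its text
print(len(names), first_name)  # 2 First item
```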

.select returns a Python list of all the elements. This is why you selected only the first element here with the [0] index.

Passing requirements:

- Create a variable all_h1_tags and set it to an empty list.

- Use .select to select all the <h1> tags and store the text of each h1 in the all_h1_tags list.

- Create a variable seventh_p_text and store the text of the 7th p element (index 6) inside.

The solution for this lab is:
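The original solution code is not included here. One way to satisfy the requirements is sketched as a function (`solve_part4` is our own name) so the logic can be tested against sample HTML:

```python
import requests
from bs4 import BeautifulSoup

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def solve_part4(url: str = URL):
    """Return the text of every <h1> and the text of the 7th <p>."""
    page = requests.get(url, timeout=10)
    soup = BeautifulSoup(page.content, "html.parser")

    all_h1_tags = []
    for element in soup.select("h1"):
        all_h1_tags.append(element.text)

    # .select returns a list, so index 6 is the 7th <p> element.
    seventh_p_text = soup.select("p")[6].text
    return all_h1_tags, seventh_p_text
```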

Let's keep going.

Part 5: Top items being scraped right now

Let's go ahead and extract the top items scraped from the URL: https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/

If you open this page in a new tab, you'll see some top items. In this lab, your task is to scrape their names and store them in a list called top_items. You will also extract the reviews for these items.

To pass this challenge, take care of the following things:

- Use .select to extract the titles. (Hint: one selector for product titles could be a.title)

- Use .select to extract the review count label for those product titles. (Hint: one selector for reviews could be div.ratings) Note: this is a complete label (i.e. 2 reviews) and not just a number.

- Create a new dictionary in the format:

- Note that you are using the strip method to remove any extra newlines/whitespaces you might have in the output. This is important to pass this lab.

- Append this dictionary to a list called top_items

- Print this list at the end

There are quite a few tasks to be done in this challenge. Let's take a look at the solution first and understand what is happening:
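The original solution code is not included in this copy. The sketch below follows the steps described next: loop over `div.thumbnail`, then select within each product. Because the required dictionary format is not shown above, the keys `title` and `review` are assumptions; match them to the format your lab expects. `scrape_top_items` and `main` are hypothetical helper names.

```python
import requests
from bs4 import BeautifulSoup

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def scrape_top_items(html) -> list:
    """Return one dict per product card with its title and review label."""
    soup = BeautifulSoup(html, "html.parser")
    top_items = []
    for elem in soup.select("div.thumbnail"):  # one element per product
        # Searching inside a single card means each select finds one match.
        title = elem.select("h4 > a.title")[0].text
        review_label = elem.select("div.ratings")[0].text
        info = {
            "title": title.strip(),  # key names are assumed, see above
            "review": review_label.strip(),
        }
        top_items.append(info)
    return top_items


def main(url: str = URL) -> None:
    page = requests.get(url, timeout=10)
    top_items = scrape_top_items(page.content)
    print(top_items)
```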

Note that this is only one of the solutions. You can attempt this in a different way too. In this solution:

- First, you select all the div.thumbnail elements, which gives you a list of individual products

- Then you iterate over them

- Because .select also works on each element it returns, you can call it again on a product to get the title.

- Note that because you're running inside a loop for div.thumbnail already, the h4 > a.title selector would only give you one result, inside a list. You select that list's 0th element and extract out the text.

- Finally you strip any extra whitespace and append it to your list.

Straightforward, right?

Part 6: Extracting Links

So far you have seen how you can extract the text, or rather innerText of elements. Let's now see how you can extract attributes by extracting links from the page.

Here’s an example of how to extract out all the image information from the page:
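The image example itself is missing here. A minimal sketch, using a made-up HTML snippet, that reads the `src` and `alt` attributes of every `<img>`; the dictionary keys are our own choice:

```python
from bs4 import BeautifulSoup

html = """
<img src="/images/cart.png" alt="Cart">
<img src="/images/logo.png">
"""
soup = BeautifulSoup(html, "html.parser")

image_data = []
for image in soup.select("img"):
    # Attributes are read like dict values; .get returns None if missing.
    src = image.get("src")
    alt = image.get("alt")
    image_data.append({"src": src, "alt": alt})

print(image_data)
```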

In this lab, your task is to extract the href attribute of links with their text as well. Make sure of the following things:

- You have to create a list called all_links

- In this list, store all link dict information. It should be in the following format:

- Make sure your text is stripped of any whitespace

- Make sure you check if your .text is None before you call .strip() on it.

- Store all these dicts in the all_links

You are extracting the attribute values just like you extract values from a dict, using the get function. Let's take a look at the solution for this lab:
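The solution code is not reproduced here. A sketch that follows the steps described in the next paragraph; `extract_links` is a hypothetical name, and the keys `href` and `text` are assumed because the required format is not shown above.

```python
from bs4 import BeautifulSoup


def extract_links(html) -> list:
    """Return a dict with the stripped href and text of every <a> tag."""
    soup = BeautifulSoup(html, "html.parser")
    all_links = []
    for link in soup.select("a"):
        text = link.text
        text = text.strip() if text is not None else ""
        # <a> tags without an href attribute give None here.
        href = link.get("href")
        href = href.strip() if href is not None else ""
        all_links.append({"href": href, "text": text})
    return all_links
```

On the lab page you might call `extract_links(requests.get(url, timeout=10).content)` with the lab URL and store the result in `all_links`.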

Here, you extract the href attribute just as you did in the image case. The only extra step is checking whether the value is None: if it is, set it to an empty string; otherwise, strip the whitespace.

Part 7: Generating CSV from data

Finally, let's see how you can generate a CSV file from a set of data. You will create a CSV with the following headings:

- Product Name

- Price

- Description

- Reviews

- Product Image

These products are located in the div.thumbnail elements. The CSV boilerplate is given below:
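The boilerplate itself is missing from this copy. A minimal sketch of the CSV-writing part using Python's built-in `csv` module, assuming the five fields from the passing requirements below as column names (`write_products_csv` is a hypothetical helper):

```python
import csv

# Column headings for the products CSV, in the order the lab lists them.
FIELDNAMES = ["Product Name", "Price", "Description", "Reviews", "Product Image"]


def write_products_csv(rows, path="products.csv"):
    """Write a list of dicts (one per product) to a CSV file with a header row."""
    # newline="" stops the csv module from writing blank lines on Windows.
    with open(path, "w", newline="", encoding="utf-8") as output_file:
        writer = csv.DictWriter(output_file, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(rows)
```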

You have to extract data from the website and generate this CSV for the three products.

Passing Requirements:

- Product Name is the whitespace trimmed version of the name of the item (example - Asus AsusPro Adv..)

- Price is the whitespace trimmed but full price label of the product (example - $1101.83)

- The description is the whitespace trimmed version of the product description (example - Asus AsusPro Advanced BU401LA-FA271G Dark Grey, 14", Core i5-4210U, 4GB, 128GB SSD, Win7 Pro)

- Reviews is the whitespace trimmed review count label of the product (example - 7 reviews)

- Product image is the URL (src attribute) of the image for a product (example - /webscraper-python-codedamn-classroom-website/cart2.png)

- The name of the CSV file should be products.csv and should be stored in the same directory as your script.py file

Let's see the solution to this lab:
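The solution code is not reproduced here. The sketch below is one possible approach. The selectors `h4 > a`, `h4.price`, `p.description`, `div.ratings`, and `img` are assumptions about the classroom page's markup, so confirm them with your browser's inspector before relying on them; `parse_products` and `main` are hypothetical helper names.

```python
import csv

import requests
from bs4 import BeautifulSoup

URL = "https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/"


def parse_products(html) -> list:
    """Return one dict per div.thumbnail product with the five CSV fields."""
    soup = BeautifulSoup(html, "html.parser")
    all_products = []
    for product in soup.select("div.thumbnail"):
        # Selectors below are assumed; check them against the real page.
        name = product.select("h4 > a")[0].text.strip()
        price = product.select("h4.price")[0].text.strip()
        description = product.select("p.description")[0].text.strip()
        reviews = product.select("div.ratings")[0].text.strip()
        image = product.select("img")[0].get("src")  # an attribute, not text
        all_products.append({
            "Product Name": name,
            "Price": price,
            "Description": description,
            "Reviews": reviews,
            "Product Image": image,
        })
    return all_products


def main(url: str = URL, path: str = "products.csv") -> list:
    """Download the lab page, parse the products, and write products.csv."""
    page = requests.get(url, timeout=10)
    all_products = parse_products(page.content)
    if not all_products:
        return all_products  # nothing to write
    keys = list(all_products[0].keys())  # column order = dict insertion order
    with open(path, "w", newline="", encoding="utf-8") as output_file:
        dict_writer = csv.DictWriter(output_file, fieldnames=keys)
        dict_writer.writeheader()
        dict_writer.writerows(all_products)
    return all_products
```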

The for block is the most interesting here. You extract all the elements and attributes from what you've learned so far in all the labs.

When you run this code, you end up with a nice CSV file. And that's about all the basics of web scraping with BeautifulSoup!

I hope this interactive classroom from codedamn helped you understand the basics of web scraping with Python.

If you liked this classroom and this blog, tell me about it on my Twitter and Instagram. I'd love to hear feedback!

Independent developer, security engineering enthusiast, love to build and break stuff with code, and JavaScript <3


What Is Web Scraping? A Complete Beginner’s Guide

As the digital economy expands, the role of web scraping becomes ever more important. Read on to learn what web scraping is, how it works, and why it’s so important for data analytics.

The amount of data in our lives is growing exponentially. With this surge, data analytics has become a hugely important part of the way organizations are run. And while data has many sources, its biggest repository is on the web. As the fields of big data analytics, artificial intelligence, and machine learning grow, companies need data analysts who can scrape the web in increasingly sophisticated ways.

This beginner's guide offers a complete introduction to web scraping: what it is, how it's used, and what the process involves. We'll cover:

- What is web scraping?

- What is web scraping used for?

- How does a web scraper function?

- How to scrape the web (step-by-step)

- What tools can you use to scrape the web?

- What else do you need to know about web scraping?

Before we get into the details, though, let’s start with the simple stuff…

1. What is web scraping?

Web scraping (or data scraping) is a technique used to collect content and data from the internet. This data is usually saved in a local file so that it can be manipulated and analyzed as needed. If you’ve ever copied and pasted content from a website into an Excel spreadsheet, this is essentially what web scraping is, but on a very small scale.

However, when people refer to ‘web scrapers,’ they’re usually talking about software applications. Web scraping applications (or ‘bots’) are programmed to visit websites, grab the relevant pages and extract useful information. By automating this process, these bots can extract huge amounts of data in a very short time. This has obvious benefits in the digital age, when big data—which is constantly updating and changing—plays such a prominent role. You can learn more about the nature of big data in this post.

What kinds of data can you scrape from the web?

If there’s data on a website, then in theory, it’s scrapable! Common data types organizations collect include images, videos, text, product information, customer sentiments and reviews (on sites like Twitter, Yell, or Tripadvisor), and pricing from comparison websites. There are some legal rules about what types of information you can scrape, but we’ll cover these later on.

2. What is web scraping used for?

Web scraping has countless applications, especially within the field of data analytics. Market research companies use scrapers to pull data from social media or online forums for things like customer sentiment analysis. Others scrape data from product sites like Amazon or eBay to support competitor analysis.

Meanwhile, Google regularly uses web scraping to analyze, rank, and index web pages. Web scraping also allows them to extract information from third-party websites and reuse it on their own (for instance, they scrape e-commerce sites to populate Google Shopping).

Many companies also carry out contact scraping, which is when they scrape the web for contact information to be used for marketing purposes. If you’ve ever granted a company access to your contacts in exchange for using their services, then you’ve given them permission to do just this.

There are few restrictions on how web scraping can be used. It’s essentially down to how creative you are and what your end goal is. From real estate listings, to weather data, to carrying out SEO audits, the list is pretty much endless!

However, it should be noted that web scraping also has a dark underbelly. Bad players often scrape data like bank details or other personal information to conduct fraud, scams, intellectual property theft, and extortion. It’s good to be aware of these dangers before starting your own web scraping journey. Make sure you keep abreast of the legal rules around web scraping. We’ll cover these a bit more in section six.

3. How does a web scraper function?

So, we now know what web scraping is, and why different organizations use it. But how does a web scraper work? While the exact method differs depending on the software or tools you're using, all web scraping bots follow three basic steps:

- Step 1: Making an HTTP request to a server

- Step 2: Extracting and parsing (or breaking down) the website's code

- Step 3: Saving the relevant data locally

Now let's take a look at each of these in a little more detail.

Step 1: Making an HTTP request to a server

As an individual, when you visit a website via your browser, you send what's called an HTTP request. This is basically the digital equivalent of knocking on the door, asking to come in. Once your request is approved, you can then access that site and all the information on it. Just like a person, a web scraper needs permission to access a site. Therefore, the first thing a web scraper does is send an HTTP request to the site it's targeting.

Step 2: Extracting and parsing the website’s code

Once a website gives a scraper access, the bot can read and extract the site’s HTML or XML code. This code determines the website’s content structure. The scraper will then parse the code (which basically means breaking it down into its constituent parts) so that it can identify and extract elements or objects that have been predefined by whoever set the bot loose! These might include specific text, ratings, classes, tags, IDs, or other information.

Step 3: Saving the relevant data locally

Once the HTML or XML has been accessed, scraped, and parsed, the web scraper will then store the relevant data locally. As mentioned, the data extracted is predefined by you (having told the bot what you want it to collect). Data is usually stored as structured data, often in a spreadsheet-friendly format such as .csv or .xls.

With these steps complete, you’re ready to start using the data for your intended purposes. Easy, eh? And it’s true…these three steps do make data scraping seem easy. In reality, though, the process isn’t carried out just once, but countless times. This comes with its own swathe of problems that need solving. For instance, badly coded scrapers may send too many HTTP requests, which can crash a site. Every website also has different rules for what bots can and can’t do. Executing web scraping code is just one part of a more involved process. Let’s look at that now.

4. How to scrape the web (step-by-step)

OK, so we understand what a web scraping bot does. But there’s more to it than simply executing code and hoping for the best! In this section, we’ll cover all the steps you need to follow. The exact method for carrying out these steps depends on the tools you’re using, so we’ll focus on the (non-technical) basics.

Step one: Find the URLs you want to scrape

It might sound obvious, but the first thing you need to do is to figure out which website(s) you want to scrape. If you’re investigating customer book reviews, for instance, you might want to scrape relevant data from sites like Amazon, Goodreads, or LibraryThing.

Step two: Inspect the page

Before coding your web scraper, you need to identify what it has to scrape. Right-clicking anywhere on a web page in your browser gives you the option to 'inspect element' or 'view page source.' This reveals the page's underlying HTML, which is what the scraper will read.

Step three: Identify the data you want to extract

If you're looking at book reviews on Amazon, you'll need to identify where these are located in the page's HTML. Most browsers' developer tools automatically highlight the code that corresponds to whatever element you select on the page. Your aim is to identify the unique tags that enclose (or 'nest') the relevant content (e.g. <div> tags).

Step four: Write the necessary code

Once you've found the appropriate enclosing tags, you'll need to incorporate these into your preferred scraping software. This basically tells the bot where to look and what to extract. It's commonly done using Python libraries, which do much of the heavy lifting. You need to specify exactly what data types you want the scraper to parse and store. For instance, if you're looking for book reviews, you'll want information such as the book title, author name, and rating.
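As a rough illustration of telling the bot where to look, here is a sketch that pulls the title, author, and rating out of review markup. The HTML and its class names (`review`, `book-title`, `author`, `rating`) are invented for this example; a real site will use different markup, and its terms may restrict scraping.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for a book-review page.
html = """
<div class="review">
  <h3 class="book-title">Example Book</h3>
  <span class="author">A. Writer</span>
  <span class="rating">4.5</span>
</div>
<div class="review">
  <h3 class="book-title">Another Book</h3>
  <span class="author">B. Author</span>
  <span class="rating">3</span>
</div>
"""


def extract_reviews(markup: str) -> list:
    """Return one dict per review containing only the fields we chose to collect."""
    soup = BeautifulSoup(markup, "html.parser")
    reviews = []
    for block in soup.select("div.review"):
        reviews.append({
            "title": block.select_one(".book-title").get_text(strip=True),
            "author": block.select_one(".author").get_text(strip=True),
            # Convert the rating to a number so it can be analyzed later.
            "rating": float(block.select_one(".rating").get_text(strip=True)),
        })
    return reviews
```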

Step five: Execute the code

Once you’ve written the code, the next step is to execute it. Now to play the waiting game! This is where the scraper requests site access, extracts the data, and parses it (as per the steps outlined in the previous section).

Step six: Storing the data

After extracting, parsing, and collecting the relevant data, you'll need to store it. You can instruct your scraper to do this by adding extra lines to your code. Which format you choose is up to you, but as mentioned, spreadsheet formats such as .csv are the most common. You can also run the extracted text through Python's re module (short for 'regular expressions') to get a cleaner set of data that's easier to read.

Now you've got the data you need, you're free to play around with it. Of course, as we often learn in our explorations of the data analytics process, web scraping isn't always as straightforward as it at first seems. It's common to make mistakes and you may need to repeat some steps. But don't worry, this is normal, and practice makes perfect!
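To make the regular-expressions tip concrete, here is a minimal sketch using Python's built-in `re` module to turn scraped price labels into numbers. The input strings and the `clean_price` helper are made up for illustration.

```python
import re

raw_prices = ["$1,101.83 ", "Price: $24.99", "  £9.00\n"]

# A digit, then any digits or thousands separators, then an optional decimal part.
PRICE_PATTERN = re.compile(r"\d[\d,]*(?:\.\d+)?")


def clean_price(text):
    """Extract the first number from a scraped price label, or return None."""
    match = PRICE_PATTERN.search(text)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


print([clean_price(p) for p in raw_prices])  # [1101.83, 24.99, 9.0]
```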

5. What tools can you use to scrape the web?

We've covered the basics of how to scrape the web for data, but how does this work from a technical standpoint? Often, web scraping requires some knowledge of programming languages, the most popular for the task being Python. Luckily, Python has a huge number of open-source libraries that make web scraping much easier. These include:

BeautifulSoup

BeautifulSoup is a Python library commonly used to parse data from XML and HTML documents. It organizes this parsed content into more accessible trees, which makes navigating and searching through large swathes of data much easier. It's the go-to tool for many data analysts.

Scrapy

Scrapy is a Python-based application framework that crawls and extracts structured data from the web. It's commonly used for data mining, information processing, and for archiving historical content. As well as web scraping (which it was specifically designed for) it can be used as a general-purpose web crawler, or to extract data through APIs.

pandas

pandas is another multi-purpose Python library used for data manipulation and indexing. It can be used alongside BeautifulSoup when scraping the web. The main benefit of using pandas is that analysts can carry out the entire data analytics process using one language (avoiding the need to switch to other languages, such as R).

ParseHub

A bonus tool, in case you're not an experienced programmer! ParseHub is a free online tool (to be clear, this one's not a Python library) that makes it easy to scrape online data. The only catch is that for full functionality you'll need to pay. But the free tool is worth playing around with, and the company offers excellent customer support.
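A minimal sketch of how pandas typically pairs with BeautifulSoup: the scraper (not shown) produces a list of dicts, and pandas cleans and exports it. The rows below are made-up example values.

```python
import pandas as pd

# Rows as a BeautifulSoup scraper might produce them (made-up values).
rows = [
    {"title": "Example Book", "price": "£12.50", "rating": "Three"},
    {"title": "Another Book", "price": "£8.99", "rating": "One"},
]

df = pd.DataFrame(rows)

# Clean the price column: drop the currency symbol and convert to float.
df["price"] = df["price"].str.replace("£", "", regex=False).astype(float)

# With no path argument, to_csv returns the CSV as a string.
csv_text = df.to_csv(index=False)
print(csv_text)
```

Passing a filename, as in `df.to_csv("books.csv", index=False)`, writes the same data to disk instead.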

There are many other tools available, from general-purpose scraping tools to those designed for more sophisticated, niche tasks. The best thing to do is to explore which tools suit your interests and skill set, and then add the appropriate ones to your data analytics arsenal!

6. What else do you need to know about web scraping?

We already mentioned that web scraping isn’t always as simple as following a step-by-step process. Here’s a checklist of additional things to consider before scraping a website.

Have you refined your target data?

When you’re coding your web scraper, it’s important to be as specific as possible about what you want to collect. Keep things too vague and you’ll end up with far too much data (and a headache!) It’s best to invest some time upfront to produce a clear plan. This will save you lots of effort cleaning your data in the long run.

Have you checked the site’s robots.txt?

Many websites publish a file called robots.txt, and it should always be your first port of call. This file tells web scrapers which areas of the site are out of bounds. If a site's robots.txt disallows scraping on certain (or all) pages, then you should always abide by these instructions.
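Python's standard library can read these rules for you through `urllib.robotparser`. The sketch below parses a made-up set of rules from a string so it runs offline; against a real site you would call `set_url()` with the site's robots.txt URL and then `read()`. The user-agent name `my-scraper` is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example rules; a real scraper would load https://<site>/robots.txt instead.
rules = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
""".splitlines()

parser = RobotFileParser()
parser.parse(rules)

print(parser.can_fetch("my-scraper", "https://example.com/products/"))  # True
print(parser.can_fetch("my-scraper", "https://example.com/private/data"))  # False
print(parser.crawl_delay("my-scraper"))  # 5
```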

Have you checked the site’s terms of service?

In addition to the robots.txt, you should review a website’s terms of service (TOS). While the two should align, this is sometimes overlooked. The TOS might have a formal clause outlining what you can and can’t do with the data on their site. You can get into legal trouble if you break these rules, so make sure you don’t!

Are you following data protection protocols?

Just because certain data is available doesn't mean you're allowed to scrape it, free from consequences. Be very careful about the laws in different jurisdictions, and follow each region's data protection protocols. For instance, in the EU, the General Data Protection Regulation (GDPR) protects personal data, meaning you generally need a lawful basis, such as people's explicit consent, before scraping it.

Are you at risk of crashing a website?

Big websites, like Google or Amazon, are designed to handle high traffic. Smaller sites are not. It’s therefore important that you don’t overload a site with too many HTTP requests, which can slow it down, or even crash it completely. In fact, this is a technique often used by hackers. They flood sites with requests to bring them down, in what’s known as a ‘denial of service’ attack. Make sure you don’t carry one of these out by mistake! Don’t scrape too aggressively, either; include plenty of time intervals between requests, and avoid scraping a site during its peak hours.
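To put the advice about time intervals into practice, here is a minimal sketch that waits between consecutive requests. The fetching function is passed in as a parameter (for example, a wrapper around `requests.get`), `polite_fetch_all` is a hypothetical name, and the two-second default delay is an arbitrary assumption; a `Crawl-delay` from the site's robots.txt is a better starting point when one is given.

```python
import time


def polite_fetch_all(urls, fetch, delay_seconds=2.0):
    """Fetch each URL in turn, pausing between requests.

    `fetch` is any function that takes a URL and returns its content,
    for example: lambda u: requests.get(u, timeout=10).text
    """
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay_seconds)  # wait before every request after the first
        results[url] = fetch(url)
    return results
```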

Be mindful of all these considerations, be careful with your code, and you should be happily scraping the web in no time at all.

7. In summary

In this post, we’ve looked at what data scraping is, how it’s used, and what the process involves. Key takeaways include:

- Web scraping can be used to collect all sorts of data types: From images to videos, text, numerical data, and more.

- Web scraping has multiple uses: From contact scraping and trawling social media for brand mentions to carrying out SEO audits, the possibilities are endless.

- Planning is important: Taking time to plan what you want to scrape beforehand will save you effort in the long run when it comes to cleaning your data.

- Python is a popular tool for scraping the web: Python libraries like BeautifulSoup, Scrapy, and pandas are all common tools for scraping the web.

- Don’t break the law: Before scraping the web, check the laws in various jurisdictions, and be mindful not to breach a site’s terms of service.

- Etiquette is important, too: Consider factors such as a site’s resources—don’t overload them, or you’ll risk bringing them down. It’s nice to be nice!

Data scraping is just one of the steps involved in the broader data analytics process. To learn about data analytics, why not check out our free, five-day data analytics short course? We can also recommend the following posts:

- Where to find free datasets for your next project

- What is data quality and why does it matter?

- Quantitative vs. qualitative data: What’s the difference?

Assignment 3 - Web Scraping Practice

Introduction to programming with python.

In this assignment, you will apply your knowledge of Python and its ecosystem of libraries to scrape information from any website in the given list of websites and create a dataset of CSV file(s). Here are the steps you'll follow:

Pick a website and describe your objective

Pick a site to scrape from the given list of websites below: (NOTE: you can also pick some other site that's not listed below)

- Dataset of Quotes (BrainyQuote): https://www.brainyquote.com/topics

- Dataset of Movies/TV Shows (TMDb): https://www.themoviedb.org

- Dataset of Books (BooksToScrape): http://books.toscrape.com

- Dataset of Quotes (QuotesToScrape): http://quotes.toscrape.com

- Scrape User's Reviews (ConsumerAffairs): https://www.consumeraffairs.com/

- Stocks Prices (Yahoo Finance): https://finance.yahoo.com/quote/TWTR

- Songs Dataset (AZLyrics): https://www.azlyrics.com/f.html

- Scrape a Popular Blog: https://m.signalvnoise.com/search/

- Weekly Top Songs (Top 40 Weekly): https://top40weekly.com

- Video Games Dataset (Steam): https://store.steampowered.com/genre/Free%20to%20Play/

- Identify the information you'd like to scrape from the site. Decide the format of the output CSV file.

- Summarize your assignment idea in a paragraph using a Markdown cell and outline your strategy.

Use the requests library to download web pages

- Inspect the website's HTML source and identify the right URLs to download.

- Download and save web pages locally using the requests library.

- Create a function to automate downloading for different topics/search queries.

Use Beautiful Soup to parse and extract information

- Parse and explore the structure of downloaded web pages using Beautiful soup.

- Use the right properties and methods to extract the required information.

- Create functions to extract from the page into lists and dictionaries.

- (Optional) Use a REST API to acquire additional information if required.
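As an example of the kind of extraction function these steps ask for, here is a sketch for the QuotesToScrape site listed above. The selectors (`div.quote`, `span.text`, `small.author`, `a.tag`) reflect our reading of that site's markup and should be checked with your browser's inspector; `parse_quotes` is a hypothetical name. Each quote becomes one row with three columns, which fits the CSV criteria below.

```python
from bs4 import BeautifulSoup


def parse_quotes(html: str) -> list:
    """Turn one page of quotes into a list of dicts (one row per quote)."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    for quote in soup.select("div.quote"):
        rows.append({
            "text": quote.select_one("span.text").get_text(strip=True),
            "author": quote.select_one("small.author").get_text(strip=True),
            # Join multiple tags into one cell so each row stays flat for CSV.
            "tags": ",".join(a.get_text(strip=True) for a in quote.select("a.tag")),
        })
    return rows
```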

Create CSV file(s) with the extracted information

- Create functions for the end-to-end process of downloading, parsing, and saving CSVs.

- Execute the function with different inputs to create a dataset of CSV files.

- Attach the CSV files with your notebook using jovian.commit.

Document and share your work

- Add proper headings and documentation in your Jupyter notebook.

- Publish your Jupyter notebook to Jovian and make a submission.

- (Optional) Write a blog post about your project and share it online.

Review the evaluation criteria on the "Submit" tab and look for project ideas under the "Resources" tab below.

There's no starter notebook for this project. Use the "New" button on Jovian, and select "Run on Binder" to get started.

Ask questions, get help, and share your work on the Slack group. Help others by sharing feedback and answering questions.

Record snapshots of your notebook from time to time using Ctrl/Cmd + S to ensure that you don't lose any work.

Websites with dynamic content (fetched by JavaScript after the page loads) cannot be scraped with requests and BeautifulSoup alone, because that content is not in the HTML the server initially returns. One way to scrape a dynamic website is by using Selenium.

The "Resume Description" field below should contain a summary of your assignment in no more than 3 points. You can use this description to present this assignment as a project on your resume. Follow this guide to come up with a good description.

Evaluation Criteria

Your submission must meet the following criteria to receive a PASS grade in the assignment:

- The Jupyter notebook should run end-to-end without any errors or exceptions

- The Jupyter notebook should contain execution outputs for all the code cells

- The Jupyter notebook should contain proper explanations i.e. proper documentation (headings, sub-headings, summary, future work ideas, references, etc) in Markdown cells

- Your assignment should involve web scraping of at least two web pages

- Your assignment should use the appropriate libraries for web scraping

- Your submission should include the CSV file generated by scraping

- The submitted CSV file should contain at least 3 columns and 100 rows of data

- The Jupyter notebook should be publicly accessible (not "Private" or "Secret")

- Follow this guide for the "Resume Description" field in the submission form: https://jovian.com/program/jovian-data-science-bootcamp/knowledge/08-presenting-projects-on-your-resume-231


Web-Scraping-assignment-3. Write a Python program that searches for all the products under a particular product vertical on www.amazon.in. The product vertical to search for is taken as input from the user. For example, if the user enters 'guitar', search for guitars.

The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. To effectively harvest that data, you'll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and have a basic understanding of Python and HTML, then this tutorial is for ...

The web scraping process involves sending a request to a website and parsing the HTML code to extract the relevant data. This data is then cleaned and structured into a format that can be easily ...

Web scraping is a technique to extract data from the web and store it in a structured format. In this tutorial, you'll learn how to use Python and Beautiful Soup, a popular library for web scraping, to scrape data from various websites. You'll also learn how to clean, manipulate, and visualize the data using pandas and matplotlib. Whether you want to analyze web data for research, business, or ...

Web scraping is the process of collecting and parsing raw data from the Web, and the Python community has come up with some pretty powerful web scraping tools.. The Internet hosts perhaps the greatest source of information on the planet. Many disciplines, such as data science, business intelligence, and investigative reporting, can benefit enormously from collecting and analyzing data from ...

To begin our coding project, let's activate our Python 3 programming environment. Make sure you're in the directory where your environment is located, and run the following command: . my_env/bin/activate. With our programming environment activated, we'll create a new file, with nano for instance.

Web scraping is a way for programmers to learn more about websites and users. Sometimes you'll find a website that has all the data you need for a project — but you can't download it. Fortunately, there are tools like Beautiful Soup (which you'll learn how to use in this course) that let you pull data from a web page in a usable format.
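As a small example of pulling data "in a usable format", this sketch collects every link on a page as an absolute URL. It uses the standard library's `html.parser` in place of Beautiful Soup so it runs without extra packages. The class and function names are illustrative.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from every <a href="..."> tag on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links such as "/about" against the page's own URL.
            self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str) -> List[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```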

Step #4: Obtain data for each book. This is the lengthiest and most important step. We will first consider only one book, assuming it's the first one in the list. If we open the book's wiki page, we will see its details laid out in a table on the right side of the screen.
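Reading that side table amounts to pairing each row's header cell with its value cell. The sketch below assumes the table carries `class="infobox"`, as Wikipedia infoboxes do, and that each row has a `<th>` label and a `<td>` value. It uses the standard library's `html.parser` instead of Beautiful Soup and deliberately ignores nested tables.

```python
from html.parser import HTMLParser
from typing import Dict


class InfoboxParser(HTMLParser):
    """Collect <th>/<td> pairs from the first <table class="infobox"> on a page.

    Simplification: tables nested inside the infobox are not handled.
    """

    def __init__(self) -> None:
        super().__init__()
        self.in_infobox = False
        self.finished = False
        self.cell = None  # "th" or "td" while inside a header or data cell
        self.key = ""
        self.value = ""
        self.data: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if self.finished:
            return
        if tag == "table" and not self.in_infobox:
            classes = (dict(attrs).get("class") or "").split()
            self.in_infobox = "infobox" in classes
        elif self.in_infobox and tag == "tr":
            self.key, self.value = "", ""  # start a fresh row
        elif self.in_infobox and tag in ("th", "td"):
            self.cell = tag

    def handle_endtag(self, tag):
        if not self.in_infobox:
            return
        if tag in ("th", "td"):
            self.cell = None
        elif tag == "tr":
            key = " ".join(self.key.split())
            value = " ".join(self.value.split())
            if key and value:  # skip title rows that have no <td>
                self.data[key] = value
        elif tag == "table":
            self.in_infobox = False
            self.finished = True

    def handle_data(self, data):
        if self.cell == "th":
            self.key += data
        elif self.cell == "td":
            self.value += data


def parse_infobox(html: str) -> Dict[str, str]:
    parser = InfoboxParser()
    parser.feed(html)
    parser.close()
    return parser.data
```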

00:20 Let's get started talking about what is web scraping in the first place. 00:24 So, you've probably heard this term before but maybe you're not entirely sure what it means. Generally, it could be any type of gathering information from the internet. 00:34 So, just pulling information from the web, whether it's you doing it manually ...

While the exact method differs depending on the software or tools you're using, all web scraping bots follow three basic principles: Step 1: Making an HTTP request to a server. Step 2: Extracting and parsing (or breaking down) the website's code. Step 3: Saving the relevant data locally.
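Those three steps map onto three small functions. This is a minimal sketch using only the standard library (`urllib.request` instead of requests, `html.parser` instead of Beautiful Soup). Extracting `<h1>`–`<h3>` headings is just an example target, and the User-Agent string is made up.

```python
import csv
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch(url: str, timeout: float = 10.0) -> str:
    """Step 1: make an HTTP GET request and return the body as text."""
    request = Request(url, headers={"User-Agent": "learning-scraper/0.1"})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class HeadingParser(HTMLParser):
    """Step 2: parse the HTML and keep the text of every <h1>-<h3> heading."""

    def __init__(self) -> None:
        super().__init__()
        self.current = None  # [tag, accumulated text] while inside a heading
        self.rows = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self.current = [tag, ""]

    def handle_data(self, data):
        if self.current is not None:
            self.current[1] += data

    def handle_endtag(self, tag):
        if self.current is not None and tag == self.current[0]:
            self.rows.append((tag, " ".join(self.current[1].split())))
            self.current = None


def parse(html: str):
    parser = HeadingParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def save(rows, path: str) -> None:
    """Step 3: save the extracted data locally as a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["tag", "text"])
        writer.writerows(rows)
```

Chained together against the classroom page used in this tutorial, that would be `save(parse(fetch("https://codedamn-classrooms.github.io/webscraper-python-codedamn-classroom-website/")), "headings.csv")`.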

Python Web Scraping Tutorial. Web scraping, the process of extracting data from websites, has emerged as a powerful technique to gather information from the vast expanse of the internet. In this tutorial, we'll explore various Python libraries and modules commonly used for web scraping and delve into why Python 3 is the preferred choice for ...

Web Scraping. Web scraping, or web data extraction, is data scraping used to extract data from websites. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol, or through a web browser. While web scraping can be done manually by a software user, the term typically refers to automated ...
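To make "directly using the Hypertext Transfer Protocol" concrete, this sketch builds the raw HTTP/1.1 GET request that a scraper sends over the wire. Libraries such as requests or `urllib.request` construct this for you. The header set and User-Agent value here are illustrative, and the URL is assumed to be ASCII (already percent-encoded).

```python
from urllib.parse import urlsplit


def build_get_request(url: str, user_agent: str = "learning-scraper/0.1") -> bytes:
    """Build the raw HTTP/1.1 GET request a scraper would send for `url`."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    lines = [
        f"GET {target} HTTP/1.1",
        f"Host: {parts.netloc}",  # HTTP/1.1 requires a Host header
        f"User-Agent: {user_agent}",
        "Accept: text/html",
        "Connection: close",  # ask the server to close the connection after replying
        "",  # a blank line ends the header block
        "",
    ]
    return "\r\n".join(lines).encode("ascii")
```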

4) Octoparse. Octoparse is a web scraping tool for anyone who needs to extract data from websites but doesn't want to spend time learning to code. With Octoparse, you can scrape data using a ...

1. Price Monitoring. Companies can use web scraping to collect product data for their own products and for competing products, to see how it affects their pricing strategies. They can use this data to set optimal prices for their products and so maximize revenue.
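Once competitor prices have been scraped, the comparison itself is simple arithmetic. This sketch only summarises where our price sits relative to the market; it is not an optimal-pricing model. The input format (a dict of competitor name to price) and the report fields are hypothetical.

```python
from statistics import median
from typing import Dict


def price_report(our_price: float, competitor_prices: Dict[str, float]) -> dict:
    """Summarise how our price compares with scraped competitor prices."""
    if not competitor_prices:
        raise ValueError("need at least one competitor price")
    cheapest = min(competitor_prices, key=competitor_prices.get)
    market_median = median(competitor_prices.values())
    return {
        "cheapest_competitor": cheapest,
        "cheapest_price": competitor_prices[cheapest],
        "market_median": market_median,
        "undercut_by_cheapest": competitor_prices[cheapest] < our_price,
        # Positive means we are priced above the market median, in percent.
        "gap_to_median_pct": round((our_price - market_median) / market_median * 100, 2),
    }
```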

Your assignment should involve web scraping of at least two web pages. Your assignment should use the appropriate libraries for web scraping. Your submission should include the CSV file generated by scraping. The submitted CSV file should contain at least 3 columns and 100 rows of data.
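Before submitting, it can help to check the generated CSV against those requirements automatically. A minimal sketch, assuming the first row is a header that does not count toward the 100 rows:

```python
import csv


def check_submission(path: str, min_columns: int = 3, min_rows: int = 100) -> list:
    """Return a list of problems; an empty list means the CSV meets the requirements."""
    with open(path, newline="", encoding="utf-8") as f:
        rows = list(csv.reader(f))
    if not rows:
        return ["file is empty"]
    header, data = rows[0], rows[1:]
    problems = []
    if len(header) < min_columns:
        problems.append(f"only {len(header)} columns, need {min_columns}")
    if len(data) < min_rows:
        problems.append(f"only {len(data)} data rows, need {min_rows}")
    # Rows whose width differs from the header usually mean a scraping bug.
    ragged = [i for i, row in enumerate(data, start=2) if len(row) != len(header)]
    if ragged:
        problems.append(f"rows with wrong column count at lines {ragged[:5]}")
    return problems
```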

Web-Scraping-Assignment-4. Read all the problem statements and notes carefully, and scrape the required data using any web scraping tool of your choice. You have to handle commonly occurring EXCEPTIONS using exception handling. For information about Selenium exceptions, you may visit the following links: QUE 1 Scrape the details of ...
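A common pattern for this requirement is to wrap each field's extraction so that a missing element yields a placeholder instead of stopping the whole scrape. This is a generic sketch. The default exception types (`LookupError`, `AttributeError`) are assumptions suited to dict- and attribute-style parsing. With Selenium you would pass its own exception class instead.

```python
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def safe_extract(
    extract: Callable[[], T],
    default: T,
    errors: Tuple[Type[BaseException], ...] = (LookupError, AttributeError),
) -> T:
    """Run one extraction step; return `default` instead of crashing on expected errors.

    Exceptions not listed in `errors` still propagate, so real bugs stay visible.
    """
    try:
        return extract()
    except errors:
        return default
```

With Selenium 4 this might look like `safe_extract(lambda: driver.find_element(By.CLASS_NAME, "price").text, "-", (NoSuchElementException,))`, where `NoSuchElementException` comes from `selenium.common.exceptions` and the `"price"` class name is a hypothetical example.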

# WEB-SCRAPING-ASSIGNMENT-4-WEB SCRAPING - ASSIGNMENT 4 FLIPROBO. Scrape the required data using any web scraping tool, handling EXCEPTIONS with exception-handling code.

My first web scraping assignment. ... I used python to perform the web scraping. There are a number of materials online to aid in scraping data from YouTube but these are the caveats I want you to pay attention to. One has to do with the number of searches per request. Google allows for 25,000 rows of data per request but that doesn't come ...
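A per-request row cap like the 25,000 mentioned above is usually handled by splitting one large pull into several requests. This is a generic sketch. The `(start_row, row_limit)` pairs are hypothetical and do not correspond to any specific API's parameter names, so map them onto whatever your client library expects.

```python
from typing import Iterator, Tuple


def request_windows(total_rows: int, max_rows_per_request: int = 25_000) -> Iterator[Tuple[int, int]]:
    """Yield (start_row, row_limit) pairs covering total_rows without exceeding the cap."""
    if max_rows_per_request <= 0:
        raise ValueError("max_rows_per_request must be positive")
    for start in range(0, total_rows, max_rows_per_request):
        # The last window may be smaller than the cap.
        yield start, min(max_rows_per_request, total_rows - start)
```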


shreya1m/JustWatch-Web-Scrapping: This project extracts movie and TV show data from JustWatch using Python, providing insights into streaming platforms, genres, and content availability. Utilizing web scraping techniques, it offers a comprehensive analysis of the entertainment landscape.
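Insights such as "which platforms carry the most titles" come from aggregating the scraped records. This sketch assumes a hypothetical record format, a dict per title with optional `platforms` and `genres` lists. It is not taken from the project above.

```python
from collections import Counter


def summarise(titles):
    """Count scraped titles per streaming platform and per genre."""
    platforms = Counter()
    genres = Counter()
    for title in titles:
        # set() keeps a title from being counted twice if a value repeats in its list.
        platforms.update(set(title.get("platforms", [])))
        genres.update(set(title.get("genres", [])))
    return platforms, genres
```

`platforms.most_common(5)` then gives the five platforms with the most titles.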

Assignment 4. Contribute to NETHRAGOWDA97/Web-Scraping-Assignment-4 development by creating an account on GitHub.