Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari. Revised on June 22, 2023.

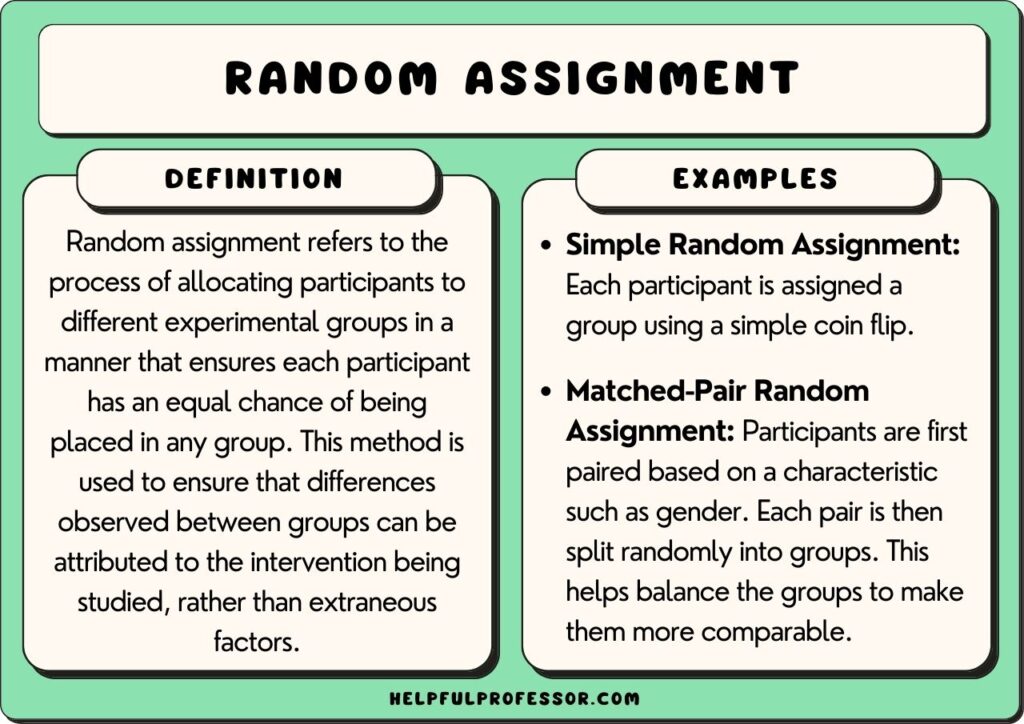

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs.

Random assignment is a key part of experimental design. It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias.

Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- a control group that’s given a placebo (no dosage, to control for a placebo effect),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group ,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.
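The claim that chance differences between groups shrink as the sample grows can be illustrated with a small simulation. This is only a sketch under stated assumptions: the baseline trait (normally distributed, mean 50, standard deviation 10), the sample sizes, the fixed seed, and the function name `mean_baseline_gap` are all hypothetical choices made for the example.

```python
import random
import statistics

def mean_baseline_gap(sample_size, repetitions=200, seed=0):
    """Average absolute difference in a baseline trait between two randomly assigned groups."""
    rng = random.Random(seed)  # fixed seed only so the run is reproducible
    gaps = []
    for _ in range(repetitions):
        # Hypothetical baseline trait for each participant (assumed distribution).
        baseline = [rng.gauss(50, 10) for _ in range(sample_size)]
        # Random assignment: shuffle, then split the sample into two equal groups.
        rng.shuffle(baseline)
        half = sample_size // 2
        gap = abs(statistics.mean(baseline[:half]) - statistics.mean(baseline[half:]))
        gaps.append(gap)
    return statistics.mean(gaps)

small = mean_baseline_gap(20)
large = mean_baseline_gap(2000)
print(f"average gap with n=20: {small:.2f}, with n=2000: {large:.2f}")
```

In runs like this, the average gap for the larger sample should come out noticeably smaller, which is the sense in which large samples make chance imbalance less of a concern.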

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

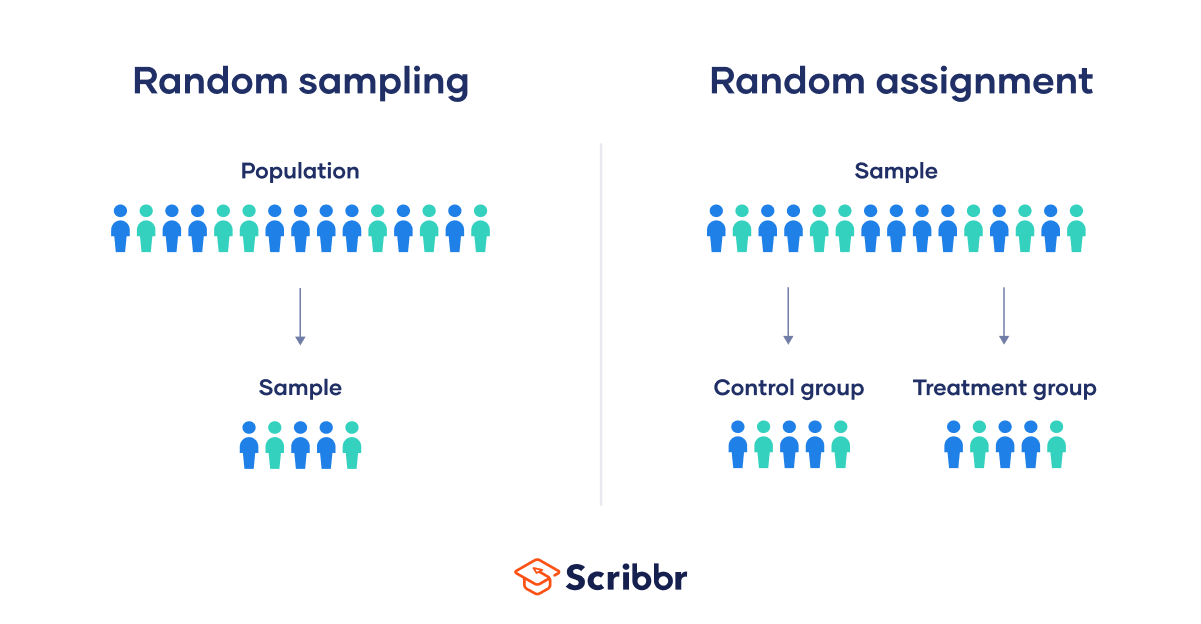

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences .

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.

Random assignment enhances the internal validity of the study, because it ensures that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable .

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.
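The two steps in this example, random sampling followed by random assignment, can be sketched in Python. This is a minimal illustration rather than a prescribed procedure: the employee numbering, the fixed seed, and the function name `sample_then_assign` are assumptions made for the example.

```python
import random

def sample_then_assign(population_ids, sample_size, seed=None):
    """Draw a simple random sample, then randomly split it into two groups."""
    rng = random.Random(seed)  # a seed is used here only to make the run reproducible
    # Random sampling: every member of the population is equally likely to be selected.
    sample = rng.sample(population_ids, sample_size)
    # Random assignment: shuffle the sample and cut it in half.
    rng.shuffle(sample)
    half = sample_size // 2
    return {"control": sample[:half], "experimental": sample[half:]}

employees = list(range(1, 8001))  # hypothetical employee numbers 1..8000
groups = sample_then_assign(employees, 300, seed=42)
print(len(groups["control"]), len(groups["experimental"]))  # prints: 150 150
```

Shuffling and splitting is one possible design choice: it guarantees equal group sizes, whereas drawing a separate random number for each participant would leave the group sizes to chance.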

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Roll a die: When you have three groups, for each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.
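As a rough sketch of the random number generator approach with more than two groups, the snippet below shuffles the numbered participants and deals them out in turn. The group labels, the 30 participants, the seed, and the function name `simple_random_assignment` are hypothetical and chosen only for illustration.

```python
import random

def simple_random_assignment(participant_ids, group_names, seed=None):
    """Randomly assign every participant to exactly one of the named groups."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)  # random order, so every participant is equally likely to land in any group
    assignment = {name: [] for name in group_names}
    # Deal the shuffled participants out in turn so group sizes differ by at most one.
    for position, pid in enumerate(shuffled):
        assignment[group_names[position % len(group_names)]].append(pid)
    return assignment

groups = simple_random_assignment(
    range(1, 31), ["control", "low dosage", "high dosage"], seed=1
)
for name, members in groups.items():
    print(name, sorted(members))
```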

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power .

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.
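A randomized block design could be sketched as follows: participants are first grouped by the blocking characteristic, and randomization then happens separately inside each block. The participant IDs, the block labels, the two conditions, and the function name `blocked_assignment` are assumptions made for this example.

```python
import random
from collections import defaultdict

def blocked_assignment(participants, block_of, conditions, seed=None):
    """Randomly assign participants to conditions separately within each block.

    participants: list of participant ids
    block_of: dict mapping each id to its block label
    """
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for pid in participants:
        blocks[block_of[pid]].append(pid)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)  # randomize order inside this block only
        for position, pid in enumerate(members):
            assignment[pid] = conditions[position % len(conditions)]
    return assignment

# Hypothetical sample: ids 1-6 are college students, ids 7-12 are graduates.
block_of = {pid: ("student" if pid <= 6 else "graduate") for pid in range(1, 13)}
assignment = blocked_assignment(list(block_of), block_of, ["control", "treatment"], seed=7)
for pid in sorted(assignment):
    print(pid, block_of[pid], assignment[pid])
```

Because the shuffle is done per block, every condition appears equally often among students and among graduates, which is what lets you compare the treatment effect across the two blocks.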

In an experimental matched design , you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, it’s not possible to use random assignment. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study . In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.

In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling , is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment , assign a unique number to every member of your study’s sample .

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.

Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved April 9, 2024, from https://www.scribbr.com/methodology/random-assignment/

Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

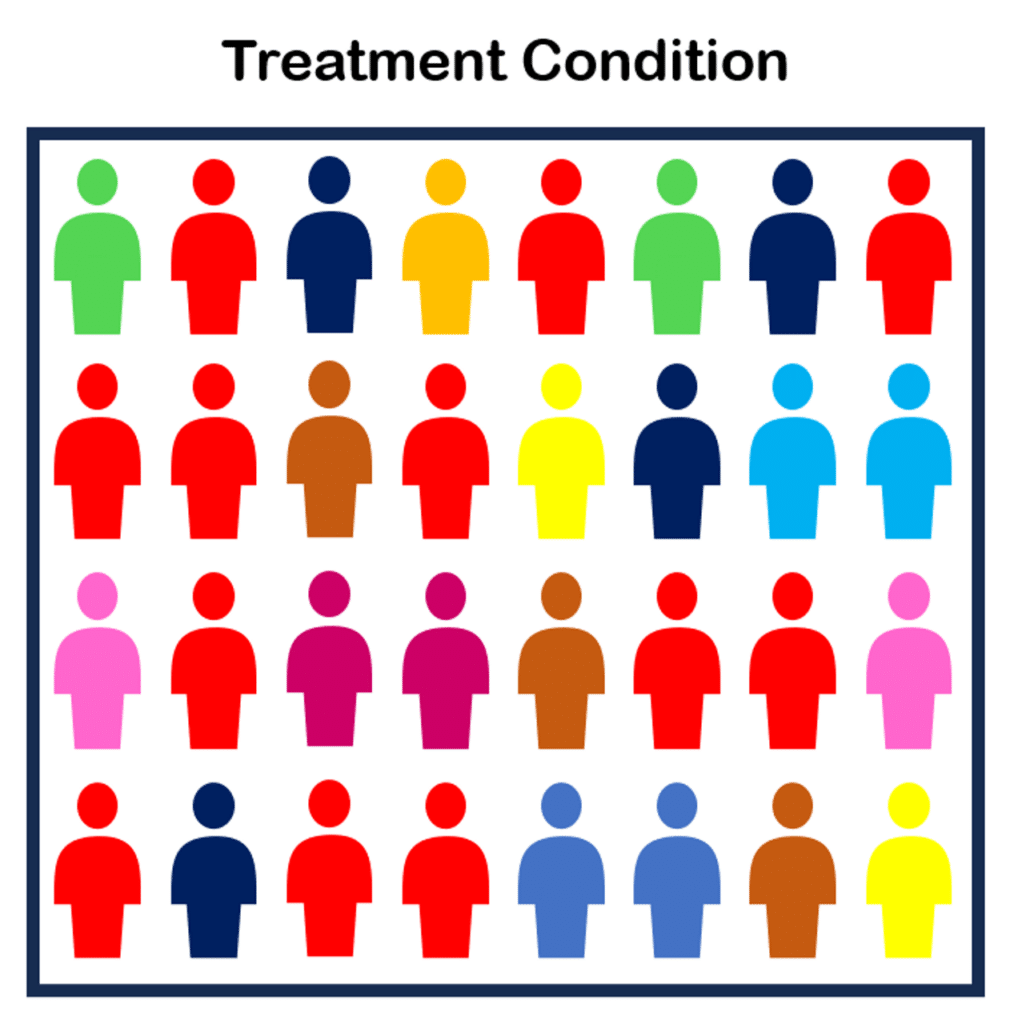

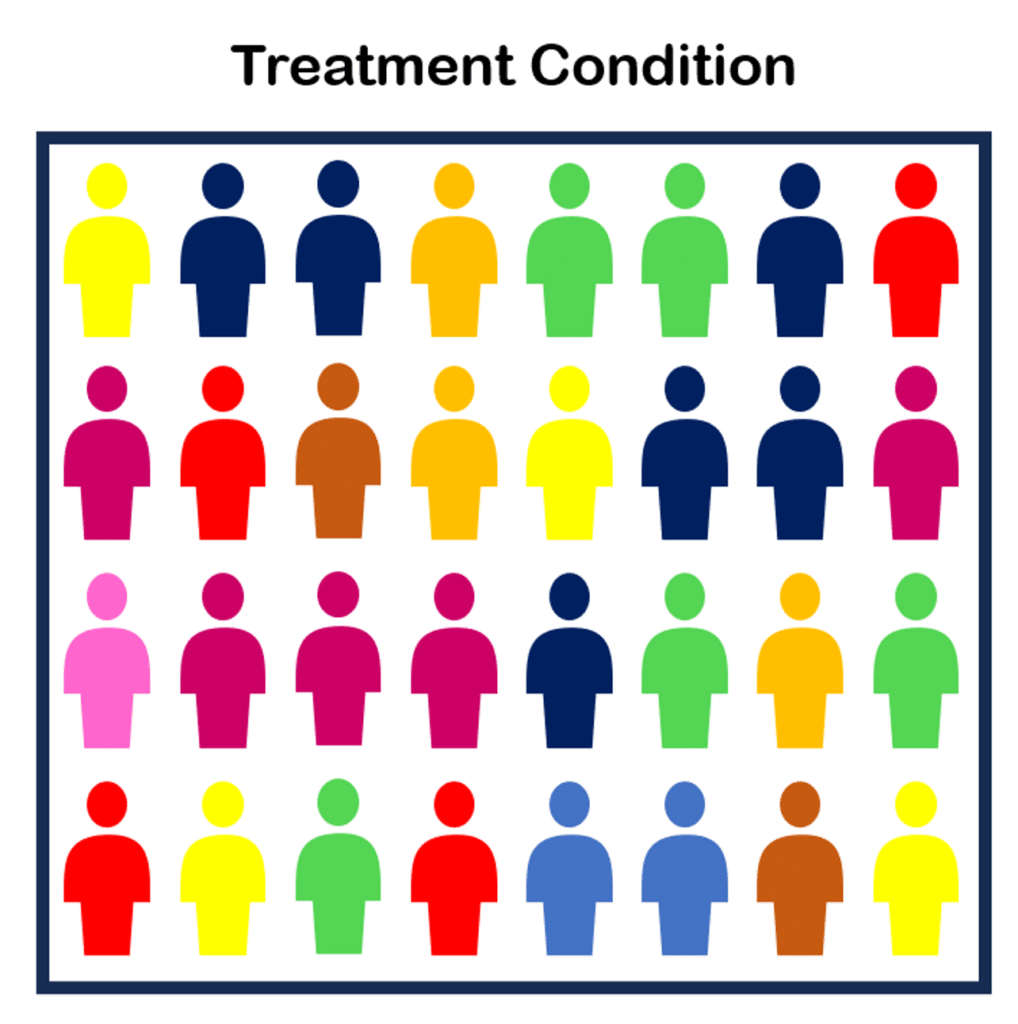

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment helps ensure that the groups in the experiment are comparable before the independent variable is applied.

In experiments , researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any changes that result from the independent variable can be assumed to be a result of the treatment of interest. This is particularly important for eliminating sources of bias and strengthening the internal validity of an experiment.

Random assignment is the best method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment is only used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator : Give each member of the sample a unique number; use a computer program to randomly generate a number from the list for each group.

- Lottery : Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin : Flip a coin for each participant to decide if they will be in the control group or experimental group (this method can only be used when you have just two groups).

- Roll a Die : For each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1, 2, or 3 places them in a control group and rolling 4, 5, or 6 lands them in an experimental group.

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions : If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee the equality of these groups. There could still be extraneous variables that differ between groups or group differences that arise from chance. Additionally, there is still an element of luck with random assignments.

Thus, researchers cannot produce perfectly equal groups in every study. Differences between the treatment group and control group might still exist, and the results of a single randomized trial may sometimes be wrong, but this is an accepted part of the process.

Building scientific evidence is a long and continuous process, and the groups will tend to be equal in the long run when data are aggregated in a meta-analysis.

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) is compromised with random assignment.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment ensures that there are no systematic differences between the participants in each group, enhancing the study’s internal validity .

Does random assignment reduce sampling error?

Not directly. Random assignment gives each participant an equal chance of being placed in the control group or an experimental group, which makes the groups comparable to each other. It does not make the sample more representative of the population.

Sampling error arises because a sample only approximates the population from which it is drawn. Random sampling, not random assignment, is the way to minimize sampling error.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

Largely, yes. Random assignment removes the systematic influence of confounding variables on the treatment because it distributes them at random among the study groups. Randomization breaks any relationship between a confounding variable and the treatment, although chance imbalances can still occur in a particular study.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Further Reading

- Bogomolnaia, A., & Moulin, H. (2001). A new solution to the random assignment problem . Journal of Economic theory , 100 (2), 295-328.

- Krause, M. S., & Howard, K. I. (2003). What random assignment does and does not do . Journal of Clinical Psychology , 59 (7), 751-766.

7 Different Ways to Control for Confounding

Confounding can be controlled in the design phase of the study by using:

- Random assignment

- Restriction

- Matching

Or in the data analysis phase by using:

- Stratification

- Regression

- Inverse probability weighting

- Instrumental variable estimation

Here’s a quick summary of the similarities and differences between these methods:

In what follows, we will explain how each of these methods works, and discuss its advantages and limitations.

1. Random assignment

How it works.

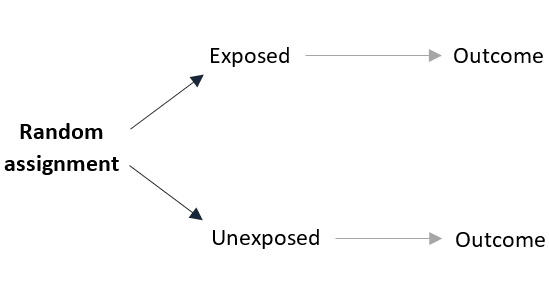

Random assignment is a process by which participants are assigned, with the same chance, to either receive or not receive a certain exposure.

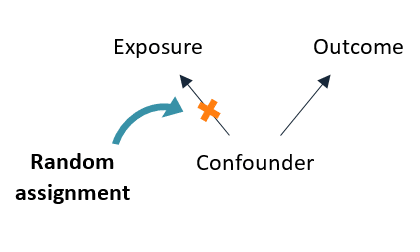

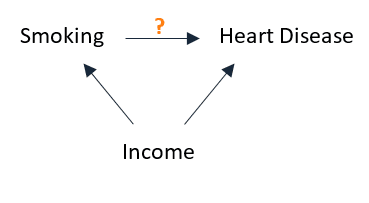

Randomizing the exposure adjusts for confounding by eliminating the influence of the confounder on the probability of receiving the exposure:

Advantage of random assignment:

Random assignment controls for confounding due to both measurable and unmeasurable causes. So it is especially useful when confounding variables are unknown or cannot be measured.

It also controls for time-varying confounders, that is when the exposure and the confounders are measured repeatedly in studies where participants are followed over time.

Limitation of random assignment:

Here are 3 reasons not to use random assignment:

- Ethical reason: Randomizing participants would be unethical when studying the effect of a harmful exposure, or on the contrary, when it is known for certain that the exposure is beneficial.

- Practical reason: Some exposures are very hard to randomize, like air pollution and education. Also, random assignment is not an option when we are analyzing observational data that we did not collect ourselves.

- Financial reason: Random assignment is a part of experimental designs where participants are followed over time, which turns out to be highly expensive in some cases.

Whenever the exposure cannot be randomly assigned to study participants, we will have to use an observational design and control for confounding by using another method from this list.

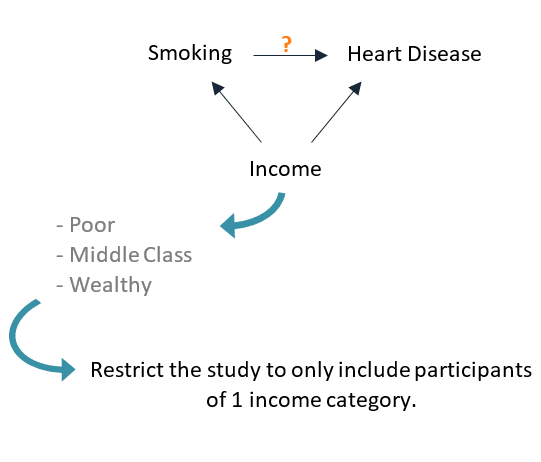

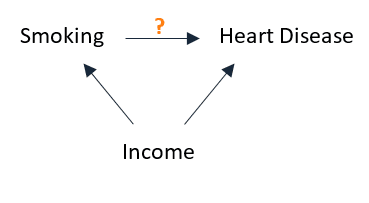

2. Restriction

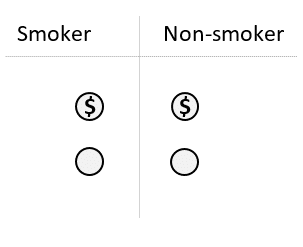

Restriction refers to only including in the study participants of a certain confounder category, thereby eliminating its confounding effect.

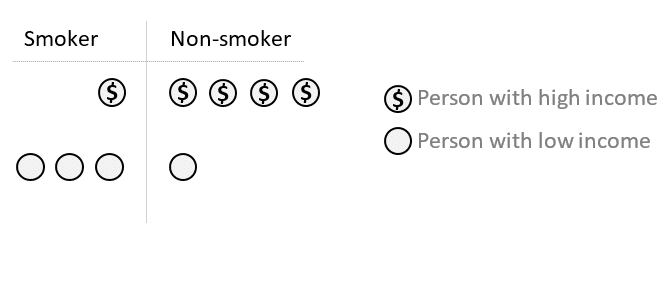

For instance, if the relationship between smoking (the exposure) and heart disease (the outcome) is confounded by income, then restricting our study to only include participants of the same income category will eliminate its confounding effect:

Advantage of restriction:

Unlike random assignment, restriction is easy to apply and also works for observational studies.

Limitation of restriction:

The biggest problem with restricting our study to 1 category of the confounder is that the results will not generalize well to the other categories. So restriction will limit the external validity of the study especially in cases where we have more than 1 confounder to control for.

3. Matching

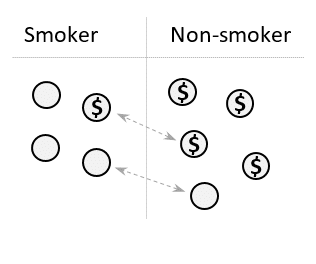

Matching works by distributing the confounding variable evenly between the exposed and the unexposed groups.

The idea is to pair each exposed subject with an unexposed subject that shares the same characteristics regarding the variable that we want to control for. Then, by only analyzing participants for whom we found a match, we eliminate the confounding effect of that variable.

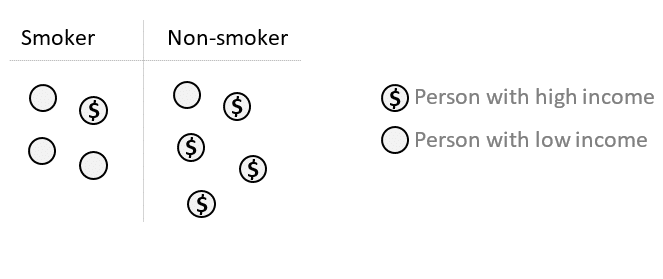

For example, suppose we want to control for income as a confounder of the relationship between smoking (the exposure) and heart disease (the outcome).

In this case, each smoker should be matched with a non-smoker of the same income category.

Here’s a step-by-step description of how this works:

Initially: The confounder is unequally distributed among the exposed and unexposed groups.

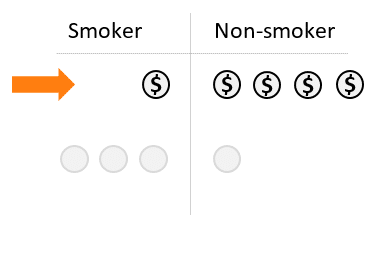

Step 1: Match each smoker with a non-smoker of the same income category.

Step 2: Exclude all unmatched participants from the study.

Result: The 2 groups will be balanced regarding the confounding variable.
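The matching steps above can be sketched in Python. The six participants below are made-up data, and the function name `match_on_confounder` and the first-come pairing rule are assumptions for this illustration, not part of any standard library.

```python
def match_on_confounder(participants):
    """Pair each smoker with a not-yet-used non-smoker of the same income category.

    participants: list of dicts with keys 'id', 'smoker' (bool), 'income' (category).
    Returns a list of (smoker_id, non_smoker_id) pairs; unmatched people are left out.
    """
    # Index the unexposed (non-smokers) by income category.
    unexposed_by_income = {}
    for p in participants:
        if not p["smoker"]:
            unexposed_by_income.setdefault(p["income"], []).append(p["id"])
    pairs = []
    for p in participants:
        if p["smoker"]:
            candidates = unexposed_by_income.get(p["income"], [])
            if candidates:  # Step 1: match within the same income category
                pairs.append((p["id"], candidates.pop(0)))
            # Step 2: smokers without a candidate are simply excluded
    return pairs

# Hypothetical data: income is unevenly distributed between smokers and non-smokers.
participants = [
    {"id": 1, "smoker": True, "income": "low"},
    {"id": 2, "smoker": True, "income": "low"},
    {"id": 3, "smoker": True, "income": "high"},
    {"id": 4, "smoker": False, "income": "low"},
    {"id": 5, "smoker": False, "income": "high"},
    {"id": 6, "smoker": False, "income": "high"},
]
pairs = match_on_confounder(participants)
print(pairs)  # prints: [(1, 4), (3, 5)]
```

Participants 2 and 6 end up unmatched and are dropped, so the analyzed groups each contain one low-income and one high-income person.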

Advantage of matching

Matching can be easy to apply in certain cases. For instance, matching on income in the example above can be done by selecting 1 smoker and 1 non-smoker from the same family, therefore having the same household income.

Limitation of matching

The more confounding variables we have to control for, the more difficult matching becomes, especially for continuous variables. The problem with matching on many characteristics is that a lot of participants will end up unmatched.

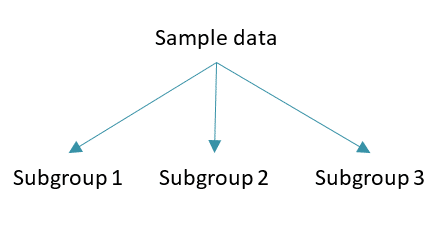

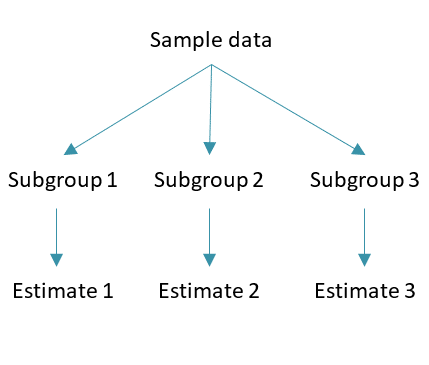

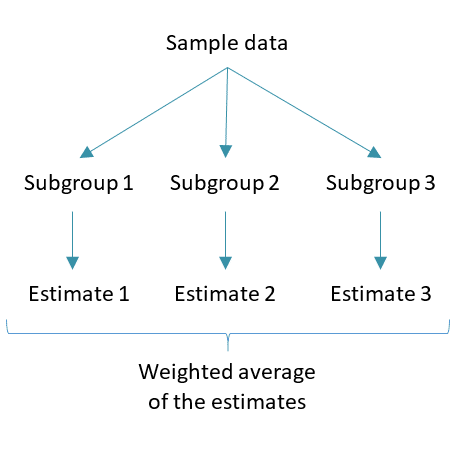

4. Stratification

Stratification controls for confounding by estimating the relationship between the exposure and the outcome within different subsets of the confounding variable, and then pooling these estimates.

Stratification works because, within each subset, the value of the confounder is the same for all participants and therefore cannot affect the estimated effect of the exposure on the outcome.

Here’s a step-by-step description of how to conduct a stratified analysis:

Step 1: Start by splitting the data into multiple subgroups (a.k.a. strata) according to the different categories of the confounding variable.

Step 2: Within each subgroup (or stratum), estimate the relationship between the exposure and the outcome.

Step 3: Pool the obtained estimates:

- By averaging them.

- Or by weighting them by the size of each stratum — a method called standardization.

Result: The pooled estimate will be free of confounding.
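A stratified analysis along these lines might look like the sketch below. The counts are invented, the risk difference is used as the measure of association purely as an assumption for the example, and the function names are hypothetical.

```python
def stratum_risk_difference(stratum):
    """Step 2: risk of the outcome among the exposed minus risk among the unexposed."""
    risk_exposed = stratum["exposed_cases"] / stratum["exposed_total"]
    risk_unexposed = stratum["unexposed_cases"] / stratum["unexposed_total"]
    return risk_exposed - risk_unexposed

def pooled_estimates(strata):
    """Step 3: pool the stratum estimates by simple averaging and by stratum size."""
    diffs = [stratum_risk_difference(s) for s in strata]
    sizes = [s["exposed_total"] + s["unexposed_total"] for s in strata]
    simple_average = sum(diffs) / len(diffs)
    size_weighted = sum(d * n for d, n in zip(diffs, sizes)) / sum(sizes)
    return simple_average, size_weighted

# Step 1: hypothetical data already split by income category (the confounder).
strata = [
    {"income": "low", "exposed_cases": 30, "exposed_total": 100,
     "unexposed_cases": 10, "unexposed_total": 50},
    {"income": "high", "exposed_cases": 5, "exposed_total": 50,
     "unexposed_cases": 10, "unexposed_total": 200},
]
simple_average, size_weighted = pooled_estimates(strata)
print(round(simple_average, 5), round(size_weighted, 5))  # prints: 0.075 0.06875
```

The two pooled values differ because the high-income stratum is larger and therefore counts for more in the size-weighted version.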

Advantage of stratification

Stratified analysis is an old and intuitive method used to teach the logic of controlling for confounding. A more modern and practical approach would be regression analysis, which is next on our list.

Limitation of stratification

Stratification does not scale well, since controlling for multiple confounders simultaneously will lead to:

- Complex calculations.

- Subgroups that contain very few participants, and these will reflect the noise in the data more so than real effects.

5. Regression

Adjusting for confounding using regression simply means to include the confounding variable in the model used to estimate the influence of the exposure on the outcome.

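A linear regression model, for example, will be of the form:

$$\text{Outcome} = \beta_0 + \beta_1 \times \text{Exposure} + \beta_2 \times \text{Confounder} + \varepsilon$$

Where the coefficient β₁ will reflect the effect of the exposure on the outcome adjusted for the confounder.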
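To make the idea of adjustment concrete, here is a small sketch that fits the model with and without the confounder using ordinary least squares written in plain Python. The eight data points are hypothetical and noise-free, constructed so that the true effect of the exposure is 2 and the effect of the confounder is 3; the helper names `solve_linear_system` and `ols` are assumptions for this example.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def ols(rows, y):
    """Least-squares coefficients; each row must already start with a 1 for the intercept."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y_i for r, y_i in zip(rows, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)  # normal equations: (X'X) b = X'y

# Hypothetical data: the exposure is more common when the confounder equals 1.
exposure = [0, 0, 0, 1, 0, 1, 1, 1]
confounder = [0, 0, 0, 0, 1, 1, 1, 1]
outcome = [1 + 2 * x + 3 * z for x, z in zip(exposure, confounder)]

unadjusted = ols([[1, x] for x in exposure], outcome)
adjusted = ols([[1, x, z] for x, z in zip(exposure, confounder)], outcome)
print("unadjusted exposure coefficient:", round(unadjusted[1], 3))  # 3.5
print("adjusted exposure coefficient:", round(adjusted[1], 3))      # 2.0
```

Leaving the confounder out inflates the exposure coefficient to 3.5, while including it recovers the value of 2 that was built into the data.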

Advantage of regression

Regression can easily control for multiple confounders simultaneously, as this simply means adding more variables to the model.

For more details on how to use it in practice, I wrote a separate article: An Example of Identifying and Adjusting for Confounding .

Limitation of regression

A regression model operates under certain assumptions that must be respected. For example, for linear regression these are:

- A linear relationship between the predictors (the exposure and the confounder) and the outcome.

- Independence, normality, and equal variance of the residuals.

6. Inverse probability weighting

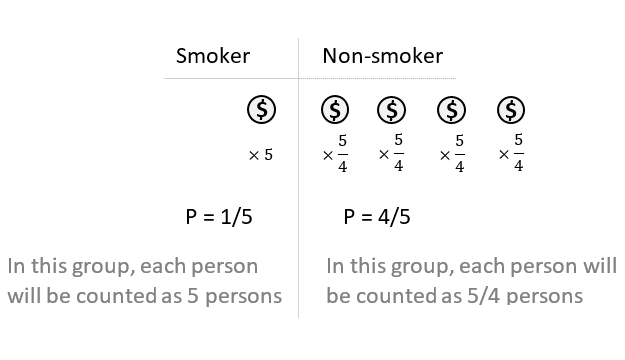

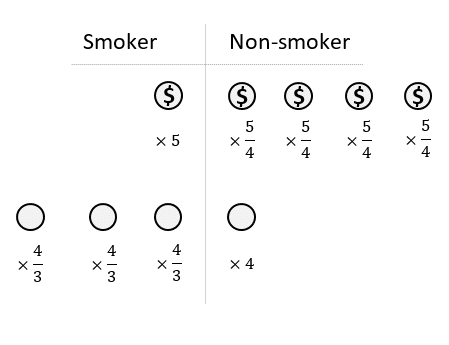

Inverse probability weighting eliminates confounding by equalizing the frequency of the confounder between the exposed and the unexposed groups. This is done by counting each participant as many times as the inverse of their probability of being in their own exposure category.

Here’s a step-by-step description of the process:

Suppose we want to control for income as a confounder of the relationship between smoking (the exposure) and heart disease (the outcome):

Initially: Since income and smoking are associated, participants of different income levels will have different probabilities of being smokers.

First, let’s focus on high income participants:

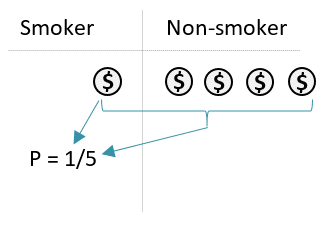

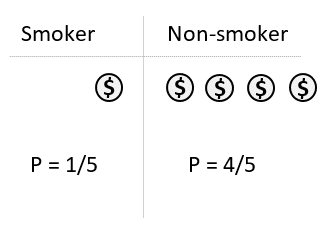

Step 1: Calculate the probability “P” that a person is a smoker. Here, 1 of the 5 high-income participants smokes, so P = 1/5.

Step 2: Calculate the probability that a person is a non-smoker. Here, 4 of the 5 participants do not smoke, so this probability is 4/5.

Step 3: Weight each person by the inverse of the probability of their own exposure category. So each participant will no longer count as 1 person in the analysis. Instead, each will be counted as many times as their inverse probability weight (i.e. a person whose category has probability P counts as 1/P persons): each smoker counts as 5 persons and each non-smoker as 5/4 persons.

Now the smoking group has: 1 × 5 = 5 participants. And the non-smoking group also has: 4 × 5/4 = 5 participants.

Finally, we have to repeat steps 1, 2, and 3 for participants in the low-income category.

Result: The smoker and non-smoker groups are now balanced regarding income. So its confounding effect will be eliminated because it is no longer associated with the exposure.
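The weighting steps can be sketched in a few lines of Python. The high-income counts (1 smoker, 4 non-smokers) follow from the arithmetic above; the low-income counts (3 smokers, 2 non-smokers) and the function name `inverse_probability_weights` are assumptions added only to complete the illustration.

```python
from collections import Counter

def inverse_probability_weights(people):
    """people: list of (income, smoker) tuples. Returns one weight per person."""
    per_income = Counter(income for income, _ in people)
    per_cell = Counter(people)
    weights = []
    for income, smoker in people:
        # Probability of this person's own exposure category within their income level.
        probability = per_cell[(income, smoker)] / per_income[income]
        weights.append(1 / probability)
    return weights

people = (
    [("high", True)] + [("high", False)] * 4    # from the example above
    + [("low", True)] * 3 + [("low", False)] * 2  # hypothetical low-income counts
)
weights = inverse_probability_weights(people)

# Sum the weights in each income-by-smoking cell to get the weighted group sizes.
weighted_counts = Counter()
for person, weight in zip(people, weights):
    weighted_counts[person] += weight
for cell in sorted(weighted_counts):
    print(cell, round(weighted_counts[cell], 2))
```

After weighting, every cell counts as 5 participants, so smokers and non-smokers have the same income distribution in the weighted data.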

Advantage of inverse probability weighting

This method belongs to a family known as G-methods, which are used to control for time-varying confounders, that is, when the exposure and the confounders are measured repeatedly in studies where participants are followed over time.

Limitation of inverse probability weighting

If some participants have very large weights (i.e. when their probability of being in a certain exposure category is very low), then each of these participants would be counted as a large number of people, which leads to instability in the estimation of the causal effect of the exposure on the outcome.

One solution would be to exclude from the study participants with very high or very low weights.

7. Instrumental variable estimation

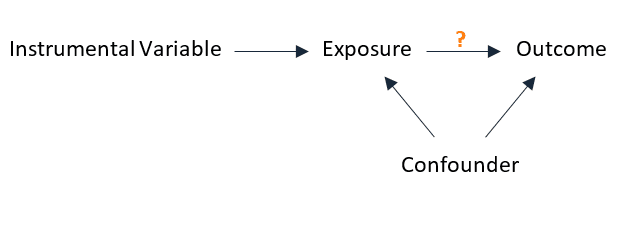

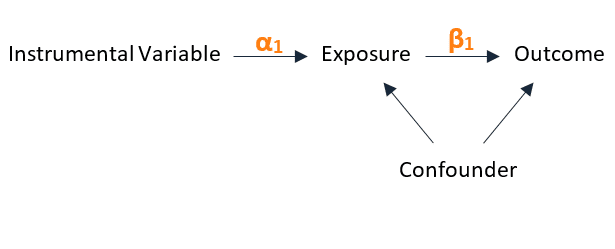

The instrumental variable method estimates the unconfounded effect of the exposure on the outcome indirectly by using a variable — the instrumental variable — that represents the exposure but is not affected by confounding.

An instrumental variable satisfies 3 properties:

- It causes the exposure.

- It does not cause the outcome directly — it affects the outcome only through the exposure.

- Its association with the outcome is unconfounded.

Here’s a diagram that represents the relationship between the instrumental variable, the exposure, and the outcome:

An instrumental variable is chosen so that nothing appears to cause it. So in a sense, it resembles the coin flip in a randomized experiment, because it appears to be randomly assigned.

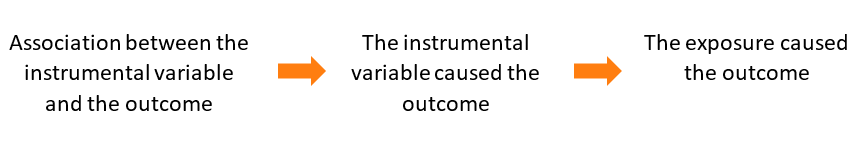

How can the instrumental variable be used to study causality?

Looking at the data, if an association is found between the instrumental variable and the outcome then it must be causal, since according to property (3) above, their relationship is unconfounded. And because the instrumental variable affects the outcome only through the exposure, according to property (2), we can conclude that the exposure has a causal effect on the outcome.

So how do we quantify this causal/unconfounded effect of the exposure on the outcome?

Let “α₁” denote the magnitude of the causal effect of the instrumental variable on the exposure, and “β₁” that of the exposure on the outcome.

So our objective is to find β₁.

Note that the simple regression model between the exposure and the outcome produces a confounded estimate of β₁:

Outcome = β₀ + β₁ × Exposure + ε

The slope estimated from this model therefore does not reflect the true β₁ that we are searching for.

So how do we find this true unconfounded β₁?

Technically, if we think in terms of linear regression:

- α₁ is the change in the exposure CAUSED by a 1 unit change in the instrumental variable.

- β₁ is the change in the outcome CAUSED by a 1 unit change in the exposure.

It follows that a 1 unit change in the instrumental variable CAUSES an α₁ × β₁ change in the outcome (since the instrumental variable only affects the outcome through the exposure).

And as discussed above, any association between the instrumental variable and the outcome is causal. So, α₁ × β₁ can be estimated from the following regression model:

Outcome = a₀ + a₁ × Instrumental variable + ε

Where a₁ = α₁ × β₁

And because any association between the instrumental variable and the exposure is also causal (also unconfounded), the following model can be used to estimate α₁:

Exposure = b₀ + b₁ × Instrumental variable + ε

Where b₁ = α₁

We end up with 2 equations:

- α₁ × β₁ = a₁

- α₁ = b₁

A simple calculation yields: β₁ = a₁/b₁, which will be our estimated causal effect of the exposure on the outcome.
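To see the ratio of the two slopes at work, here is a small simulation sketch. Everything in it is an assumption chosen for illustration: the data are simulated, the true effects (1.0 for the instrument on the exposure, 0.5 for the exposure on the outcome) are arbitrary, and an unmeasured confounder is added on purpose so that the simple regression of outcome on exposure is biased.

```python
import random


def slope(x, y):
    """Least-squares slope from regressing y on x (with an intercept)."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx


rng = random.Random(0)
TRUE_ALPHA_1 = 1.0  # assumed effect of the instrument on the exposure
TRUE_BETA_1 = 0.5   # assumed effect of the exposure on the outcome

instrument, exposure, outcome = [], [], []
for _ in range(50_000):
    z = rng.randint(0, 1)   # instrument, assigned like a coin flip
    u = rng.gauss(0, 1)     # unmeasured confounder
    x = TRUE_ALPHA_1 * z + u + rng.gauss(0, 1)
    y = TRUE_BETA_1 * x + 2 * u + rng.gauss(0, 1)
    instrument.append(z)
    exposure.append(x)
    outcome.append(y)

naive = slope(exposure, outcome)   # confounded estimate of the effect
a1 = slope(instrument, outcome)    # estimates alpha_1 * beta_1
b1 = slope(instrument, exposure)   # estimates alpha_1
iv_estimate = a1 / b1              # estimates beta_1

print(f"naive slope: {naive:.2f}, IV estimate: {iv_estimate:.2f}")
```

In this simulated setting the naive slope lands far from 0.5 because the confounder drives both exposure and outcome, while the ratio a1/b1 should land close to it.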

Advantage of instrumental variable estimation

Because the calculations that we just did are not dependent on any information about the confounder, we can use the instrumental variable approach to control for any measured, unmeasured, and unknown confounder.

This method is so powerful that it can be used even in cases where we do not know whether there is confounding between the exposure and the outcome, or which variables are suspect.

Limitation of instrumental variable estimation

In cases where the instrumental variable and the exposure are weakly correlated, the estimated effect of the exposure on the outcome will be biased.

The use of linear regression is also constrained by its assumptions, especially linearity and constant variance of the residuals.

As a rule of thumb, use the instrumental variable approach in cases where there are unmeasured confounders. Otherwise, use other methods from this list, since they will, in general, provide a better estimate of the causal relationship between the exposure and the outcome.

If you are interested, here are 3 Real-World Examples of Using Instrumental Variables .




1.3: Threats to Internal Validity and Different Control Techniques


- Yang Lydia Yang

- Kansas State University

Internal validity is often the focus from a research design perspective. To understand the pros and cons of various designs and to be able to better judge specific designs, we identify specific threats to internal validity . Before we do so, it is important to note that the primary challenge to establishing internal validity in social sciences is the fact that most of the phenomena we care about have multiple causes and are often a result of some complex set of interactions. For example, X may be only a partial cause of Y or X may cause Y, but only when Z is present. Multiple causation and interactive effects make it very difficult to demonstrate causality. Turning now to more specific threats, Figure 1.3.1 below identifies common threats to internal validity.

Different Control Techniques

All of the common threats mentioned above can introduce extraneous variables into your research design, which will potentially confound your research findings. In other words, we won't be able to tell whether it is the independent variable (i.e., the treatment we give participants) or the extraneous variable that causes the changes in the dependent variable. Controlling for extraneous variables reduces the threat they pose to the research design and gives us a better chance of claiming that the independent variable causes the changes in the dependent variable, i.e., of establishing internal validity. There are different techniques we can use to control for extraneous variables.

Random assignment

Random assignment is the single most powerful control technique we can use to minimize the potential threats of confounding variables in a research design. As we saw in the study by Dunn and her colleagues earlier, participants are not allowed to self-select into either condition (spend $20 on self or spend on others). Instead, they are randomly assigned to one of the groups by the researcher(s). As a result, the two groups are likely to be similar on all factors except the independent variable itself. One confounding variable mentioned earlier is whether individuals had a happy childhood to begin with. With random assignment, those who had a happy childhood will likely be spread across both condition groups, and so will those who didn't. As a consequence, we can expect the two condition groups to be very similar on this confounding variable. Applying the same logic, we can use random assignment to minimize all potential confounding variables (assuming your sample size is large enough!). The only systematic difference between the two groups is then the condition participants are assigned to, which is the independent variable, so we can be confident in inferring that the independent variable actually causes the differences in the dependent variable.

It is critical to emphasize that random assignment is the only control technique that controls for both known and unknown confounding variables. With all the other control techniques mentioned below, we must first know what the confounding variable is before controlling for it. Random assignment has no such requirement. With the simple act of randomly assigning participants to different conditions, we take care of both the confounding variables we know of and the ones we don't even know about that could threaten the internal validity of our studies. As the saying goes, "what you don't know will hurt you." Random assignment takes care of it.
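A short simulation can make this concrete. The sketch below is only an illustration under stated assumptions: the childhood-happiness scores are simulated (mean 50, standard deviation 10), the sample size of 1,000 is arbitrary, and the group names are borrowed from the spending example.

```python
import random
from statistics import mean

rng = random.Random(42)

# Hypothetical childhood-happiness scores for 1,000 participants.
scores = {pid: rng.gauss(50, 10) for pid in range(1000)}

# Random assignment: shuffle the participant IDs, then split them in half.
participant_ids = list(scores)
rng.shuffle(participant_ids)
half = len(participant_ids) // 2
self_spending = participant_ids[:half]
other_spending = participant_ids[half:]

# The researcher never looks at the scores, yet the group means end up close.
gap = mean(scores[p] for p in self_spending) - mean(scores[p] for p in other_spending)
print(f"difference in mean childhood happiness: {gap:.2f}")
```

The same shuffle balances, on average, every other participant characteristic as well, including ones nobody measured, which is why the technique does not require knowing the confounders in advance.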

Matching

Matching is another technique we can use to control for extraneous variables. We must first identify the extraneous variable that can potentially confound the research design. Then we rank-order the participants on this extraneous variable, that is, list them in ascending or descending order. Participants who are similar on the extraneous variable are placed into different treatment groups. In other words, they are "matched" on the extraneous variable. Then we can carry out the intervention/treatment as usual. If the treatment groups do show differences on the dependent variable, we know the cause is not the extraneous variable, because participants are "matched" or equivalent on it. Rather, it is more likely to be the independent variable (i.e., the treatments) that causes the changes in the dependent variable.

Take the example above (self-spending vs. others-spending on happiness) with the same extraneous variable of whether individuals had a happy childhood to begin with. Once we identify this extraneous variable, we first need to collect some kind of data from the participants to measure how happy their childhood was. Sometimes, data on the extraneous variable we plan to use may already be available (for example, you want to examine the effect of different types of tutoring on students' performance in a Calculus I course and you plan to match them on college entrance test scores, which are already collected by the Admissions Office). In either case, getting the data on the identified extraneous variable is a typical step we need to take before matching.

Going back to whether individuals had a happy childhood to begin with: once we have the data, we sort it in a certain order, for example, from the highest score (participants reporting the happiest childhood) to the lowest score (participants reporting the least happy childhood). We then match participants with the highest levels of childhood happiness and place them into different treatment groups. Then we go down the scale, match participants with relatively high levels of childhood happiness, and place them into different treatment groups. We continue in descending order until we match participants with the lowest levels of childhood happiness and place them into different treatment groups. By now, each treatment group has participants with a full range of levels of childhood happiness (which is a strength in terms of variation and the representativeness of the sample). The two treatment groups will be similar or equivalent on this extraneous variable. If the treatments, self-spending vs. other-spending, eventually show differences in individual happiness, then we know it's not due to how happy their childhood was. We will be more confident it is due to the independent variable.
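The rank-then-split procedure can be sketched as follows. The scores are simulated, the sample of 20 participants is arbitrary, and deciding by coin flip which member of each matched pair goes to which group is an assumption of this sketch (the text only says that matched participants go to different groups).

```python
import random
from statistics import mean

rng = random.Random(7)

# Hypothetical childhood-happiness scores for 20 participants.
scores = {pid: rng.uniform(0, 100) for pid in range(20)}

# Rank participants from the happiest to the least happy childhood.
ranked = sorted(scores, key=scores.get, reverse=True)

self_spending, other_spending = [], []
# Walk down the ranking two at a time; each adjacent pair is a "match".
for i in range(0, len(ranked), 2):
    pair = [ranked[i], ranked[i + 1]]
    rng.shuffle(pair)  # assumption: a coin flip decides who goes where
    self_spending.append(pair[0])
    other_spending.append(pair[1])

gap = mean(scores[p] for p in self_spending) - mean(scores[p] for p in other_spending)
print(f"difference in mean childhood happiness: {gap:.2f}")
```

Because every pair contributes one member to each group, both groups span the full range of scores and their means can differ only by the small within-pair gaps.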

You may be thinking: but wait, we have only taken care of one extraneous variable. What about other extraneous variables? Good thinking. That's exactly correct. We mentioned a few extraneous variables but have only matched participants on one. This is the main limitation of matching. You can match participants on more than one extraneous variable, but it's cumbersome, if not impossible, to match them on 10 or 20 extraneous variables. More importantly, the more variables we try to match participants on, the less likely we are to find a similar match. In other words, it may be easy to match participants on one particular extraneous variable (similar level of childhood happiness), but it's much harder to find participants who are similar on 10 different extraneous variables at once.

Holding Extraneous Variable Constant

The control technique of holding an extraneous variable constant is self-explanatory. We use participants at one level of the extraneous variable only, in other words, we hold the extraneous variable constant. Using the same example as above, suppose we only want to study participants with a low level of childhood happiness. We need to go through the same steps as in Matching: identifying the extraneous variable that can potentially confound the research design and getting the data on the identified extraneous variable. Once we have the data on childhood happiness scores, we include only participants on the lower end of childhood happiness scores, then place them into different treatment groups and carry out the study as before. If the condition groups, self-spending vs. other-spending, eventually show differences in individual happiness, then we know it's not due to how happy their childhood was (since we picked only those on the lower end of childhood happiness). We will be more confident it is due to the independent variable.

As with Matching, we have to do this one extraneous variable at a time. The more extraneous variables we try to hold constant, the more difficult it gets. The other limitation is that by holding an extraneous variable constant, we exclude a big chunk of participants, in this case, anyone who is NOT low on childhood happiness. This is a major weakness: as we reduce the variability on the spectrum of childhood happiness levels, we decrease the representativeness of the sample, and generalizability suffers.

Building Extraneous Variables into Design

The last control technique, building extraneous variables into the research design, is widely used. As the name suggests, we identify the extraneous variable that can potentially confound the research design and include it in the design by treating it as an independent variable. This control technique addresses the limitation of the previous one, holding an extraneous variable constant. We don't need to exclude participants based on where they stand on the extraneous variable(s). Instead, we can include participants with a wide range of levels on the extraneous variable(s). You can include multiple extraneous variables in the design at once. However, the more variables you include in the design, the larger the sample size required for statistical analyses, which may be difficult to obtain due to limitations of time, staff, cost, access, etc.


- Clin Epidemiol

Control of confounding in the analysis phase – an overview for clinicians

Johnny Kahlert

1 Department of Clinical Epidemiology, Institute of Clinical Medicine

Sigrid Bjerge Gribsholt

2 Department of Endocrinology and Internal Medicine

Henrik Gammelager

3 Department of Anaesthesiology and Intensive Care Medicine, Aarhus University Hospital, Aarhus, Denmark

Olaf M Dekkers

4 Department of Clinical Epidemiology

5 Department of Medicine, Section Endocrinology, Leiden University Medical Center, Leiden, the Netherlands

George Luta

6 Department of Biostatistics, Bioinformatics, and Biomathematics, Georgetown University Medical Center, Washington, DC, USA

Associated Data

Summary of the pros and cons of five methods used to control confounding in observational studies

Abbreviations: PS, propensity score; HD-PS, high-dimensional propensity score.

Summary of the methodological pros and cons of four different types of PS methods

Abbreviations: PS, propensity score; ATE, average treatment effect for the population (both treated and untreated people); ATT, average treatment effect among treated people.

In observational studies, control of confounding can be done in the design and analysis phases. Using examples from large health care database studies, this article provides the clinicians with an overview of standard methods in the analysis phase, such as stratification, standardization, multivariable regression analysis and propensity score (PS) methods, together with the more advanced high-dimensional propensity score (HD-PS) method. We describe the progression from simple stratification confined to the inclusion of a few potential confounders to complex modeling procedures such as the HD-PS approach by which hundreds of potential confounders are extracted from large health care databases. Stratification and standardization assist in the understanding of the data at a detailed level, while accounting for potential confounders. Incorporating several potential confounders in the analysis typically implies the choice between multivariable analysis and PS methods. Although PS methods have gained remarkable popularity in recent years, there is an ongoing discussion on the advantages and disadvantages of PS methods as compared to those of multivariable analysis. Furthermore, the HD-PS method, despite its generous inclusion of potential confounders, is also associated with potential pitfalls. All methods are dependent on the assumption of no unknown, unmeasured and residual confounding and suffer from the difficulty of identifying true confounders. Even in large health care databases, insufficient or poor data may contribute to these challenges. The trend in data collection is to compile more fine-grained data on lifestyle and severity of diseases, based on self-reporting and modern technologies. This will surely improve our ability to incorporate relevant confounders or their proxies. However, despite a remarkable development of methods that account for confounding and new data opportunities, confounding will remain a serious issue. 
Considering the advantages and disadvantages of different methods, we emphasize the importance of the clinical input and of the interplay between clinicians and analysts to ensure a proper analysis.

Introduction

During the era of modern epidemiology, large health care databases and registries have emerged alongside technological advances in computing, which has paved the way for a remarkable increase in observational studies. Confounding is a problem of comparability in observational studies, and it hampers causal inference. 1 – 3 Confounding arises when a factor is associated with both the exposure (or treatment) and the outcome, eg, a disease or death, and is not part of the causal pathway from exposure to outcome. Hence, if we study the effect of hypertension on the risk of stroke, we cannot just compare hypertensive people against people without hypertension. The reason is that we may obtain spurious results if we do not consider confounding factors, such as smoking, diabetes, alcohol intake and cardiovascular diseases, that are likely associated with both stroke and hypertension and are not on the causal pathway from hypertension to stroke. The effect on odds ratio (OR) estimates when controlling for these confounding factors was illustrated in a UK registry-based case–control study that among other things examined the association between stroke and untreated hypertension. The estimated OR for the association of interest increased from 2.9 to 3.5 after controlling for confounding. 4 In this example, the magnitude of the association would have been underestimated without adjustment for confounding; however, confounding that is not accounted for may also result in an overestimated effect.

Once a potential confounding problem has been recognized, it may be dealt with in the design or the analysis phase. 5 Standard methods used in the design phase involve randomization, restriction and matching. In randomized studies, patients are assigned randomly to exposure categories. Restriction means that only subjects with certain values for the potential confounders are selected (eg, certain sex and age groups), while matching involves the selection of the groups to be compared (exposed vs not exposed or cases vs controls) to be comparable with respect to the distribution of potential confounders. In registry-based observational studies, it is often insufficient to control for confounding only during the design phase of the study. Usually, we wish to account for several potential confounders, which may not be possible by either restriction or matching. For example, by restriction, we may end up with a very small cohort, limiting both the precision and the generalizability of the results of the analysis. Likewise, matching on several potential confounders may reduce the likelihood of finding comparison people for the people in the patient cohort. The two approaches are not mutually exclusive. In the UK example with stroke, diagnosed cases were matched with a group of controls with the same sex and age in the design phase, and then, a multivariable regression analysis was performed in which hypertension status and the potential confounders were incorporated in the analysis phase. 4 In general, the control of confounding may involve design, analytical, and statistical concepts by which we can perform statistical adjustment, restructure data, remove certain observations or add comparison groups with certain characteristics (negative controls) to deal with confounding. 6 , 7

In the history of control of confounding during the analysis, we have advanced from simple stratification with only a few potential confounders collated from small manageable hospital files to complex modeling procedures, using high-dimensional propensity scores (HD-PSs) by which hundreds of potential confounders are extracted from large health care databases.

The questions are, however, what have we achieved by this change in the setting of epidemiological research? Did we lose important aspects in the analysis? Are novel analysis methods to control for confounding that have become widely used in recent years, such as PS methods, our main response to the confounding issue in large and complex data sets? In the present article, we attempted to answer these questions, focusing on a registry-based setting. We considered the topic from a hands-on perspective and tried to demystify the control for confounding during analysis by explaining and discussing the nature of the various methods and referring to examples from epidemiological studies.

From the simple to the complex – stratification, standardization and multivariable analysis

Stratification.

Stratification is the starting point in many textbooks dealing with confounding in the analysis phase. 8 , 9 This is probably due to the simplicity of this method in which a data set is broken into a manageable number of subsets, called strata, corresponding to the levels of potential confounders (eg, age groups and sex). By comparing the overall cross-tabulation for the association between an exposure and an outcome (eg, a 2 × 2 table for alcohol consumption and myocardial infarction [MI]) with stratum-specific (eg, age group) cross-tabulations, it becomes evident whether a factor introduces confounding in the analysis. When it does, the stratum-specific associations (eg, measured as ORs) deviate markedly from the overall association; see the example in the study by Mannocci 10 on the confounding effect of age on the association between alcohol consumption and MI. Age was a confounder since it was associated with alcohol consumption (alcohol consumption was most frequent among younger people) and with MI (MI was most common among the middle-aged people). The Mantel–Haenszel method 11 is commonly used to deal with confounding using stratification. The method summarizes the stratum-specific ORs by using a weighted average of them. This approach is generally attractive because of its applicability to a number of epidemiological measures such as OR, risk difference, risk ratio and incidence rate difference. 9 , 10
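As a rough illustration of the comparison between crude and stratum-specific ORs, the sketch below uses two hypothetical age strata (the counts are invented, not taken from the Mannocci study) and the standard Mantel–Haenszel summary OR, which divides the sum of a×d/n over strata by the sum of b×c/n.

```python
# Hypothetical 2 x 2 tables, one per age stratum, written as
# (exposed cases, exposed non-cases, unexposed cases, unexposed non-cases).
strata = {
    "younger": (10, 90, 5, 45),
    "older": (20, 30, 40, 60),
}


def odds_ratio(a, b, c, d):
    """Odds ratio of a single 2 x 2 table."""
    return (a * d) / (b * c)


def mantel_haenszel_or(tables):
    """Mantel-Haenszel summary OR: sum(a*d/n) divided by sum(b*c/n)."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in tables)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in tables)
    return numerator / denominator


# Collapse the strata into one overall table to get the crude OR.
crude_table = tuple(sum(column) for column in zip(*strata.values()))

crude_or = odds_ratio(*crude_table)
stratum_ors = {name: odds_ratio(*table) for name, table in strata.items()}
adjusted_or = mantel_haenszel_or(strata.values())

print(f"crude OR: {crude_or:.2f}")
print(f"stratum-specific ORs: {stratum_ors}")
print(f"Mantel-Haenszel OR: {adjusted_or:.2f}")
```

In this invented data set the OR is 1 within each stratum but about 0.58 in the collapsed table, so the apparent association is produced entirely by the stratifying factor.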

Stratification is an attractive method because of its simplicity; however, there are limitations to the number of factors that can be stratified, so that information can be extracted from the analysis. 9 For example, 10 dichotomous factors would result in 2¹⁰ = 1,024 strata, and some strata may contain little or no data. In epidemiological research, we are expected to build on the current knowledge base and select numerous potential confounders, previously recognized, from the wealth of data that are potentially accessible from registries. Hence, when we attempt to control for confounding in the analysis, we will soon face the limitations of the stratification method regarding the number of potential confounders that are practically manageable. Stratification is therefore rarely used exclusively to control for confounding in studies that emanate from large health care databases. These days, it is used as an assisting tool in combination with other methods, and stratification may be used to identify effect measure modifications, ie, to demonstrate that the strength of the association between an exposure and an outcome depends on the value of another factor.

Standardization

Standardization provides another tool that can cope with confounding, although hampered by some of the same constraints as in stratification. Typically, disease or death rates are only standardized to age, and perhaps to sex and race, even in large registry-based studies. If more factors are considered, then separate analyses must be undertaken for specific subgroups. While stratification of confounders relies on information at the individual level in a study population, standardization involves the use of a reference population, obtained either from the data set or from an external source, such as data from a larger geographical scale. As an example, in a study based on the Korean Stroke Registry, age- and sex-standardized mortality ratios in stroke patients were calculated and compared across reasons for stroke, using the overall Korean population in 2003 as the reference population. 12

There are two main approaches that handle confounding by standardization: direct and indirect standardization, resulting in adjusted rates and standardized ratios. Detailed descriptions of the two methods can be found in most introductory textbooks to epidemiology (eg, Kirkwood and Stern 13 ). In general, direct standardization is recommended, because the consistency of comparisons is maintained, ie, a higher rate in one study population compared to another will be preserved also after direct standardization. That said, the standardized rate itself depends on the characteristics of the selected reference population. 14 When unstable rates are encountered across strata, eg, because of small numbers of patients in each stratum, indirect standardization should also be considered. 13 In the example from Korea, indirect standardization was used to show that the standardized mortality ratios were higher among patients with unknown stroke etiology compared to patients with known etiology. 12
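Direct standardization amounts to a weighted average of stratum-specific rates, with weights taken from the reference population. The sketch below shows that calculation; the two study populations, the reference population and all counts are hypothetical.

```python
# Hypothetical age-specific data: stratum -> (events, person-years).
population_a = {"young": (10, 1000), "old": (50, 500)}
population_b = {"young": (5, 250), "old": (120, 1000)}

# Hypothetical reference population: stratum -> number of people.
reference = {"young": 6000, "old": 4000}


def crude_rate(population):
    """Overall rate, ignoring the age structure."""
    events = sum(e for e, _ in population.values())
    person_years = sum(py for _, py in population.values())
    return events / person_years


def directly_standardized_rate(population, reference):
    """Weight each stratum-specific rate by the reference population share."""
    reference_total = sum(reference.values())
    return sum(
        (reference[stratum] / reference_total) * (events / person_years)
        for stratum, (events, person_years) in population.items()
    )


for name, population in [("A", population_a), ("B", population_b)]:
    crude = crude_rate(population)
    adjusted = directly_standardized_rate(population, reference)
    print(f"population {name}: crude {crude:.3f}, standardized {adjusted:.3f}")
```

Choosing a different reference population would change both standardized rates, which is the dependence on the reference population noted above.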

Multivariable analysis

Multivariable regression analysis has been one of the most frequently used methods to control for confounding, and the use of this approach was particularly enhanced at a time when modeling tools were made readily available. With multivariable analysis, we get around the main limitation of stratification, as we obtain the possibility to adjust for many confounding variables in just one (assumed true) model. 15 Thus, we can take advantage of more of the information available in a registry than when we use stratification. In epidemiology, multivariable analysis is typically seen in analyses in which ORs or hazard ratios (HRs) are estimated. Control for confounding by multivariable analysis relies on the same principles as stratification, ie, the factors of interest (eg, a risk factor, treatment or exposure) are investigated while the potential confounders are held constant. In multivariable analysis, this is done mathematically in one integrated process, although under certain assumptions ( Table S1 ), for example linearity in linear models. This assumption may be compromised when confounders with nonlinear effects are incorporated in a linear model as continuous variables. This leads to residual confounding (confounding that remains despite being controlled for in the analysis) unless other measures are taken (see the study by Groenwold et al 16 for examples and solutions).

Selection of potential confounders for multivariable models has been the subject of controversy. 17 Confounder selection would typically rely on prior knowledge, 18 possibly supported by a directed acyclic graph (DAG), that is a graphical depiction of the causal relationship between, eg, an exposure and an outcome together with potential confounders. 6 In large study populations, the researcher would in many cases include all known measured potential confounders in the regression model. In a registry-based German study, 16 potential confounders were included in the analysis of the effect of treatment with tissue plasminogen activator (t-PA) on death (361 cases) among 6,269 ischemic stroke patients. 19 There was indeed a remarkable drop in the OR between t-PA and death derived from a multivariable model, when adjusting for confounding (OR=1.93 compared to OR=3.35 in the crude, unadjusted analysis). Such generous inclusion of potential confounding factors in the multivariable model is unlikely to be a problem in this example, given that there are >20 outcome events (deaths) per factor included in the model. 20 Factors may be omitted from a multivariable model based on preliminary data-driven procedures, such as stepwise selection, change-in-estimate procedure, least absolute shrinkage and selection operator (LASSO), 21 and model selection based on information criteria (eg, Akaike information criterion). 22 It is important to recognize that data-driven variable selection is not related to the presence of confounding factors in the data set, and hence, there is a possibility that important confounders are discarded during such procedures. 23

Modifications of the multivariable model have been developed to better comply with the underlying assumptions or to avoid discarding variables. These include transformations of variables, 16 shrinkage of parameter estimates 23 and random coefficient regression models. 24 Despite great flexibility when exploring associations between an exposure and an outcome while controlling for potential confounders, multivariable analysis does not directly identify whether a factor is a true confounder. Therefore, it is not clear whether residual confounding remains in the model. 25

PS – our main response to confounding?

In recent years, PS methods have become very popular as an approach to deal with confounding in observational studies. The idea of this method is to modify the study so that exposure or treatment groups that we want to compare become comparable without influence from confounding factors. 26 In a cohort study, we want to get rid of confounding due to factors measured at baseline – typically defined as the period before a drug use or treatment of interest. Already in the early history of modern epidemiology, stratification by a multivariate confounder score was recognized as an attractive approach. 27 This is comparable to the PS approach, as it combines information on a number of variables (potential confounders) into a single score for each individual person in a data set. This score is equivalent to the probability of an exposure, given the characteristics measured at baseline. There are four conceptual steps in the PS methods: 1) selection of potential confounders; 2) estimation of the PS; 3) use of the PS to make treatment/exposure groups comparable (covariate balance) and assessment of group comparability and 4) estimation of the association between treatment/exposure and outcome.
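The four conceptual steps can be walked through on a toy data set. The sketch below is a simplified illustration under several assumptions: the cohort is hypothetical, the two covariates (old age, diabetes) stand in for step 1, the PS is estimated as the treated share within each covariate pattern rather than by a regression model, and inverse probability of treatment weighting is used as the way of making the groups comparable.

```python
# Hypothetical cohort, keyed by (old, diabetes, treated) -> (patients, deaths).
# Within every covariate pattern the death risk is the same with and without
# treatment, so the true treatment effect in this toy data is zero.
cells = {
    (0, 0, 1): (10, 1), (0, 0, 0): (40, 4),
    (0, 1, 1): (20, 4), (0, 1, 0): (30, 6),
    (1, 0, 1): (30, 9), (1, 0, 0): (20, 6),
    (1, 1, 1): (40, 16), (1, 1, 0): (10, 4),
}


def propensity(old, diabetes):
    """Step 2: P(treated | covariates), here the treated share per pattern."""
    treated_n = cells[(old, diabetes, 1)][0]
    untreated_n = cells[(old, diabetes, 0)][0]
    return treated_n / (treated_n + untreated_n)


def summarize(treated, weighted):
    """Return (death risk, share of old patients) in one treatment group."""
    total = deaths = old_total = 0.0
    for (old, diabetes, t), (n, d) in cells.items():
        if t != treated:
            continue
        ps = propensity(old, diabetes)
        # Step 3: inverse probability of treatment weight (1 if unweighted).
        w = (1 / ps if t else 1 / (1 - ps)) if weighted else 1.0
        total += w * n
        deaths += w * d
        old_total += w * n * old
    return deaths / total, old_total / total


crude_treated, old_share_treated = summarize(1, weighted=False)
crude_untreated, old_share_untreated = summarize(0, weighted=False)
iptw_treated, balanced_old_treated = summarize(1, weighted=True)
iptw_untreated, balanced_old_untreated = summarize(0, weighted=True)

# Step 4: compare the outcome between the groups.
crude_rd = crude_treated - crude_untreated
iptw_rd = iptw_treated - iptw_untreated
print(f"share of old patients before weighting: {old_share_treated:.2f} vs {old_share_untreated:.2f}")
print(f"share of old patients after weighting: {balanced_old_treated:.2f} vs {balanced_old_untreated:.2f}")
print(f"risk difference, crude: {crude_rd:.2f}, weighted: {iptw_rd:.2f}")
```

In real studies the PS is usually estimated with a model such as logistic regression over many covariates; the per-pattern share used here only works because the toy data have two binary covariates.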

In the German study on stroke patients mentioned earlier, PS methods were applied in addition to a multivariable analysis. 19 We will use the setting from this example to outline the principles of the underlying conceptual steps; further details on the methods can be found elsewhere. 26 In the example, we would start estimating the probability of the treatment with t-PA as a function of a number of baseline characteristics, such as the presence or absence of comorbidities (hypertension, diabetes, etc.), or person characteristics (age and sex).
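A minimal sketch of this estimation step is shown below, assuming that the PS is modeled by logistic regression (a common choice, but not one the text specifies). The toy data, covariate names and fitting settings are hypothetical and do not reproduce the cited study.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_propensity_model(X, treated, lr=0.5, n_iter=500):
    """Fit a logistic regression of treatment on covariates by gradient ascent.

    X is a list of covariate rows; treated is a list of 0/1 indicators.
    Returns the coefficients, intercept first.
    """
    n, p = len(X), len(X[0])
    beta = [0.0] * (p + 1)
    for _ in range(n_iter):
        grad = [0.0] * (p + 1)
        for row, t in zip(X, treated):
            xs = [1.0] + list(row)
            resid = t - sigmoid(sum(b * x for b, x in zip(beta, xs)))
            for j, x in enumerate(xs):
                grad[j] += resid * x
        # Step along the average gradient of the log-likelihood.
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


def propensity_scores(X, beta):
    """Predicted probability of treatment for each covariate row."""
    return [sigmoid(sum(b * x for b, x in zip(beta, [1.0] + list(row)))) for row in X]


# Hypothetical toy data: standardized age and a hypertension indicator.
random.seed(0)
X, treated = [], []
for _ in range(500):
    age = random.gauss(0.0, 1.0)
    hypertension = 1.0 if random.random() < 0.4 else 0.0
    p_treat = sigmoid(-1.0 + 0.8 * age + 0.5 * hypertension)
    X.append([age, hypertension])
    treated.append(1 if random.random() < p_treat else 0)

beta = fit_propensity_model(X, treated)
ps = propensity_scores(X, beta)
print("Coefficients (intercept, age, hypertension):", [round(b, 2) for b in beta])
print("PS range:", round(min(ps), 3), "to", round(max(ps), 3))
```

In practice, an established statistics package would normally be used for the fit; the hand-written gradient ascent only keeps the sketch free of external dependencies.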

Based on the PS values, we can now group individuals according to baseline characteristics – here, untreated patients and patients treated with t-PA. This can be done in several ways: matching, stratification, covariate adjustment and inverse probability (of treatment) weighting. There is an extensive literature on the different variants and the associated pros and cons 26 , 28 – 30 ( Table S2 ). Different variants were applied in the example and affected the results: the ORs between t-PA and death ranged from 1.17 to 1.96, 19 potentially leading to different conclusions if considered separately. However, it is important to recognize that different variants may answer different research questions. 26 , 31 We distinguish between approaches that estimate the average effect of a treatment in the whole population (both treated and untreated individuals) and approaches that estimate the average effect of treatment in those individuals who actually received the treatment. In the example with t-PA, the authors mentioned that differences in ORs between two weighting variants (inverse probability of treatment weighting and standardized mortality ratio weighting) likely derive from the fact that the two approaches are associated with different research questions, 19 and this may also apply to other methods that are evaluated in the study.
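The difference between the two weighting variants can be made concrete with their usual weight formulas. The sketch below uses the standard textbook definitions, not code from the cited study: inverse probability of treatment weights target the whole population, whereas standardized mortality ratio weights target the treated. The function names and the example values are hypothetical.

```python
def iptw_weight(treated: int, ps: float) -> float:
    """Inverse probability of treatment weight (targets the whole population)."""
    return 1.0 / ps if treated else 1.0 / (1.0 - ps)


def smr_weight(treated: int, ps: float) -> float:
    """Standardized mortality ratio weight (targets the treated)."""
    return 1.0 if treated else ps / (1.0 - ps)


# Hypothetical (treated, PS) pairs for illustration only.
patients = [(1, 0.8), (1, 0.2), (0, 0.5), (0, 0.1)]
for t, ps in patients:
    print(t, ps, round(iptw_weight(t, ps), 3), round(smr_weight(t, ps), 3))
```

Treated patients keep a weight of 1 under standardized mortality ratio weighting, so the weighted untreated group is reshaped to resemble the treated group. This is one way to see why the two variants can yield different ORs.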

An important step is to evaluate whether the treated and untreated groups are comparable. This evaluation cannot be replaced by a goodness-of-fit (GOF) test, a general approach that measures how well a statistical model fits the data. A GOF test is usually uninformative in the large data sets that are typically extracted from health care databases. Furthermore, it may not tell the researcher whether important confounders were excluded from the analysis, either in multivariable analysis or in PS modeling. 26 , 32

The evaluation of the comparability of the groups of interest may involve measures of difference, testing or visual inspection of the PS distributions of the two groups – refer to the study by Franklin et al 33 for a discussion of the circumstances under which the different approaches are considered useful. Imbalances between the two groups may necessitate reconsidering the estimation of the PS, meaning that the specification of the model that provides the PS is changed, another PS variant is applied or the data set is trimmed. 34 Trimming extracts a subset of the data according to certain rules and thus reduces the sample size, which in some cases may hamper the feasibility and interpretability of the results obtained by the PS method.
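One widely used "measure of difference" is the standardized mean difference of a covariate between the two groups. The sketch below is an illustration under that assumption; the text does not name a specific measure, and the ages are hypothetical.

```python
import math
from statistics import mean, variance


def standardized_mean_difference(treated_values, untreated_values):
    """Difference in means divided by the pooled standard deviation."""
    pooled_sd = math.sqrt((variance(treated_values) + variance(untreated_values)) / 2.0)
    if pooled_sd == 0:
        raise ValueError("covariate has no variation in either group")
    return (mean(treated_values) - mean(untreated_values)) / pooled_sd


# Hypothetical ages (years) in the two groups.
age_treated = [62, 70, 68, 75, 66]
age_untreated = [71, 78, 74, 80, 69]
smd = standardized_mean_difference(age_treated, age_untreated)
print(f"SMD for age: {smd:.2f}")
```

Values close to zero indicate similar covariate distributions. The same function can be applied before and after matching or weighting to see whether balance improved.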

It may be difficult to balance the treatment groups in small samples or if the comparison groups are very different. Hence, the evaluation of balance represents an assurance that eventually we analyze comparable groups in the final analysis of the possible association between treatment and outcome, adjusted for (measured) confounding.

In the German study on stroke patients, there was an imbalance between the t-PA-treated and -untreated groups with a limited overlap of PSs among the two groups due to an exceptionally high proportion of untreated patients with low PS. The authors then restricted the study population to patients with a PS ≥0.05, which increased the comparability of the groups. In this setting, the results were also less sensitive to the choice of PS variant (matching and several regression adjustments) compared to the unrestricted approach. 19
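The restriction described above amounts to a simple filter on the estimated PS. In the sketch below, only the 0.05 cut-off comes from the text; the record layout, identifiers and PS values are hypothetical.

```python
def restrict_by_ps(records, lower=0.05):
    """Keep only records whose propensity score is at least `lower`."""
    return [r for r in records if r["ps"] >= lower]


# Hypothetical records; only the 0.05 cut-off comes from the text.
records = [
    {"id": 1, "treated": 0, "ps": 0.01},
    {"id": 2, "treated": 0, "ps": 0.04},
    {"id": 3, "treated": 1, "ps": 0.05},
    {"id": 4, "treated": 0, "ps": 0.30},
    {"id": 5, "treated": 1, "ps": 0.62},
]
kept = restrict_by_ps(records)
print("Kept ids:", [r["id"] for r in kept])
print("Dropped:", len(records) - len(kept))
```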

As with multivariable analysis, there is a possibility that unknown, unmeasured and residual confounding still exists after having applied the PS approach. To reduce this drawback, the HD-PS approach was developed. 35 The HD-PS method involves a series of conceptual steps, 35 which in essence can be condensed to: 1) specification of data source; 2) data-driven selection of potential confounders; 3) estimation of PS; 4) use of the PS to make groups of interest comparable and assessment of group comparability and 5) estimation of the association between treatment/exposure and outcome. Essentially, it is the confounder selection process that distinguishes the HD-PS method from the conventional PS methods. For the HD-PS method, large numbers of variables (often hundreds) are selected as potential confounders. As an example, in a nationwide study in Taiwan, the HD-PS method was used to adjust for confounding. 36 Well-known prespecified confounders, eg, sex, age and comorbidities related to lifestyle, were incorporated in the analysis together with 500 additional potential confounders. The rationale is that some of these many variables are likely proxies for unmeasured confounders that are not available in the database or of which the researcher is not aware. Accordingly, we may be able to deal with at least some of the unmeasured confounding that would not be considered in a conventional PS approach. However, there is little empirical evidence that the HD-PS method is better at controlling for unmeasured confounding than other methods, and adding several hundred empirically identified factors in an HD-PS setting may lead to results comparable to those that could be obtained from a conventional PS setting. 37 In addition, despite examples of HD-PS analyses that provided estimates closer to the estimates obtained in randomized trials, 38 we cannot conclude that HD-PS is almost as good a tool as randomization.

Given the data greediness of the HD-PS method, its application is dependent on access to large databases, although it has also been demonstrated to be quite robust in a small sample setting (down to 50 exposed patients with an event). 38 It is important to be aware that variable selection in the HD-PS method is mainly data driven and in principle associated with the risk of omitting important confounders. That said, the benefit of including an excessive number of proxies for potential unmeasured confounders possibly outweighs the risk of discarding important confounders. In multivariable analysis and conventional PS analysis, we select the potential confounders to adjust for from a pool of variables that are thought to be possible true confounders. Despite measures taken during the variable selection process in the HD-PS method, 38 the generous inclusion of variables from databases may increase the likelihood that variables are not confounders but mediator, collider or instrumental variables – see definitions elsewhere. 39 – 41 This may lead to inappropriate adjustment that potentially provides spurious results. However, we are limited in our understanding of all the prospects and pitfalls of the HD-PS method, given its relatively recent origin, although exploration and refinements of the approach have already emerged. 42 – 44

Overall, we can conclude that the PS methods have several attractive characteristics in a registry-based setting. For example, PS seems more robust in situations with rare outcomes and common exposures than multivariable analysis. 45 , 46 However, even in a large sample setting, we may face the challenge of rare exposure (or treatment). The disease risk score (DRS) method is suitable under these circumstances, such as in the early market phase of a drug when reduction in confounder dimensions is likely important. 47 – 49 DRS is comparable to PS insofar as information from several variables is summarized in a single score.

The PS method cannot handle treatment defined as a continuous variable (eg, drug dosage) unless dosage is categorized, typically dichotomized into the presence or absence of treatment, which carries the risk of losing important information on the association between an exposure and baseline characteristics. DRS may again be an alternative to PS. That said, methods that are based on the inverse probability weighting (IPW) principle represent alternatives with a wide range of applications, because IPW may be generalized to a suite of different circumstances, including both dichotomous and non-dichotomous exposures. 50 The German study of stroke comprised an additional analysis, which controlled for confounding by using the IPW principle. 19 Time-varying exposure and thus time-dependent confounding may also be dealt with by methods based on IPW in the form of marginal structural models 51 or structural nested models based on G-estimation. 52

What did we achieve and what have we lost?

It is important to stress that during selection of a method, there is no book of answers, and in many cases, simple methods may be equally valid as the complex methods. In addition to all the pros and cons of the different methods ( Table S1 ), we may face an unusual setting or a data set with an odd structure that necessitates further consideration of the method that controls for confounding. Moreover, the specific research question that we wish to answer may determine the method selected to control for confounding ( Table S2 ).

Both stratification and standardization represent ways of learning about the data, as we look at smaller units of the data set, and we may use these methods as preliminary analysis, before we use other methods such as multivariable analysis or PS methods to adjust for confounding. Thus, applying stratification or standardization assists in the understanding of the data at a detailed level, and we may become aware of associations in specific strata that would otherwise be overlooked. With the advent of multivariable analysis, we may have lost some of this basic understanding of the data, because of the complexity introduced by incorporating numerous potential confounders in models. Nevertheless, we are still capable of understanding which factors substantially confound an association, and we can directly explore interactions between an exposure and other factors. After the introduction of the PS method, there has been an ongoing discussion on the advantages and disadvantages of this method as compared to multivariable analysis. Glynn et al 53 noticed that in the majority of studies that used both multivariable analysis and PS methods, there were no important differences in the results, and this was further confirmed by simulation studies. However, comparable results across different methods do not prove that proper adjustment for confounding was undertaken, eg, if the data quality of important confounders is poor or unmeasured confounding exists. The trend in analysis methods has dictated that we extract more and more information from databases, when attempting to account for confounding. This could potentially entail that we reduce unmeasured confounding just by chance, most notably in the HD-PS approach with the inclusion of hundreds of variables. However, there is no evidence that this method is superior to others, and even the HD-PS method would be flawed if data on important confounders or their proxies are not available or if variables that are not true confounders are adjusted for.

Given the complexities of registries and data analysis, we wish to emphasize the critical importance of clinical input and of the interplay between clinicians and analysts (statisticians) during the statistical analysis. Clinicians may contribute important scientific input regarding the initial list of potential confounders that should be considered and their availability in health care databases; if potential confounders are missing, which surrogate factors could then be used as a replacement? Clinicians may also provide essential information on technical elements of the statistical analysis such as how variables should be categorized, the functional forms of continuous variables (eg, linear vs nonlinear) and temporal aspects (eg, the relative importance of an event of MI 1 week vs 1 year ago). Finally, clinicians have expert knowledge on the nature of treatments and treatment allocation that can guide the analyst.

Requirements to the analysis in the future