
Research and data to make progress against the world’s largest problems.

12,872 charts across 115 topics. All free: open access and open source.

Our Mission

What do we need to know to make the world a better place?

To make progress against the pressing problems the world faces, we need to be informed by the best research and data.

Our World in Data makes this knowledge accessible and understandable, to empower those working to build a better world.

We are a non-profit — all our work is free to use and open source. Consider supporting us if you find our work valuable.

Featured work

New polio vaccines are key to preventing outbreaks and achieving eradication

To reach the goal of polio eradication, we can use new vaccines to contain outbreaks and improve testing, outbreak responses, and sanitation.

Saloni Dattani

Browse our Data Insights

Bite-sized insights on how the world is changing, written by our team.

Our World in Data team

The world has become more resilient to disasters, but investment is needed to save more lives

Deaths from disasters have fallen, but we need to build even more resilience to ensure this progress doesn’t reverse.

Hannah Ritchie

The rise in reported maternal mortality rates in the US is largely due to a change in measurement

Maternal mortality rates appear to have risen in the last 20 years in the US. But this reflects a change in measurement rather than an actual rise in mortality.

How much have temperatures risen in countries across the world?

Explore country-by-country data on monthly temperature anomalies.

Veronika Samborska

Latest Data Insights

Bite-sized insights on how the world is changing.

May 27, 2024

One in five democracies is eroding

May 24, 2024

There are huge inequalities in global CO2 emissions

May 23, 2024

In less than a decade, Peru has become the world's second-largest blueberry producer

May 22, 2024

Much more progress can be made against child mortality

Explore our data

Featured data from our collection of more than 12,800 interactive charts.

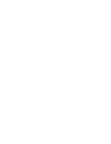

Under-five mortality rate

Long-run estimates combining data from UN & Gapminder

What share of children die before their fifth birthday?

What could be more tragic than the death of a young child? Child mortality, the death of children under the age of five, is still extremely common in our world today.

The historical data makes clear that it doesn’t have to be this way: it is possible for societies to protect their children and reduce child mortality to very low rates. For child mortality to reach low levels, many things have to go right at the same time: good healthcare, good nutrition, clean water and sanitation, maternal health, and high living standards. We can, therefore, think of child mortality as a proxy indicator of a country’s living conditions.

The chart shows our long-run data on child mortality, which allows you to see how child mortality has changed in countries around the world.

Share of population living in extreme poverty

World Bank

What share of the population is living in extreme poverty?

The World Bank sets the “International Poverty Line” as a worldwide comparable definition for extreme poverty. Living in extreme poverty is currently defined as living on less than $2.15 per day (in 2017 international dollars). This indicator, published by the World Bank, has successfully drawn attention to the terrible depths of poverty of the poorest people in the world.

Two centuries ago, the majority of the world’s population was extremely poor. Back then, it was widely believed that widespread poverty was inevitable. This turned out to be wrong. Economic growth is possible, and it allows entire societies to leave the deep poverty of the past behind. This indicator makes it possible to monitor whether countries are leaving the worst poverty behind.

Life expectancy at birth

Long-run estimates collated from multiple sources by Our World in Data

How has people’s life expectancy changed over time?

Across the world, people are living longer. In 1900, the global average life expectancy of a newborn was 32 years. By 2021, this had more than doubled to 71 years.

Big improvements were achieved by countries around the world. The chart shows that life expectancy has more than doubled in every region of the world. This improvement is not only due to declining child mortality; life expectancy increased at all ages.

This visualization shows long-run estimates of life expectancy brought together by our team from several different data sources. It also shows that the COVID-19 pandemic led to reduced life expectancy worldwide.

Per capita CO₂ emissions

Long-run estimates from the Global Carbon Budget

How have CO₂ emissions per capita changed?

The main source of carbon dioxide (CO₂) emissions is the burning of fossil fuels. CO₂ is the primary greenhouse gas causing climate change.

Globally, CO₂ emissions have remained at just below 5 tonnes per person for over a decade. Between countries, however, there are large differences, and while emissions are rapidly increasing in some countries, they are rapidly falling in others.

The source for this CO₂ data is the Global Carbon Budget, a dataset that we update every year as soon as it is published. In addition to these production-based emissions, the Global Carbon Budget publishes consumption-based emissions for the last three decades, which can be viewed in our Greenhouse Gas Emissions Data Explorer.

GDP per capita

Long-run estimates from the Maddison Project Database

How do average incomes compare between countries around the world?

GDP per capita is a very comprehensive measure of people’s average income. This indicator reveals how large the inequality between people in different countries is. In the poorest countries, people live on less than $1,000 per year, while in rich countries, the average income is more than 50 times higher.

The data shown is sourced from the Maddison Project Database. Drawing together the careful work of hundreds of economic historians, the particular value of this data lies in the historical coverage it provides. This data makes clear that the vast majority of people in all countries were poor in the past. It allows us to understand when and how the economic growth that made it possible to leave the deep poverty of the past behind was achieved.

Share of people that are undernourished

FAO

What share of the population is suffering from hunger?

Hunger has been a severe problem for most of humanity throughout history. Growing enough food to feed one’s family was a constant struggle in daily life. Food shortages, malnutrition, and famines were common around the world.

The UN’s Food and Agriculture Organization publishes global data on undernourishment, defined as not consuming enough calories to maintain a normal, active, healthy life. These minimum requirements vary by a person’s sex, weight, height, and activity levels, and the national and global estimates take this into account.

The world has made much progress in reducing global hunger in recent decades. But as this indicator shows, we are still far away from an end to hunger. Tragically, nearly one in ten people still do not get enough food to eat, and in recent years, especially during the pandemic, hunger levels have increased.

Literacy rate

Long-run estimates collated from multiple sources by Our World in Data

When did literacy become a widespread skill?

Literacy is a foundational skill. Children need to learn to read so that they can read to learn. When we fail to teach this foundational skill, people have fewer opportunities to lead the rich and interesting lives that a good education offers.

The historical data shows that in the past only a very small share of the population, a tiny elite, was able to read and write. Over the last few generations, literacy levels have increased, but providing this foundational skill to everyone remains an important challenge for our time.

At Our World in Data, we investigated the strengths and shortcomings of the available data on literacy. Based on this work, our team brought together the long-run data shown in the chart by combining several different sources, including the World Bank, the CIA Factbook, and a range of research publications.

Share of the population with access to electricity

World Bank

Where do people lack access to even the most basic electricity supply?

Light at night makes it possible to get together after sunset; mobile phones allow us to stay in touch with those far away; the refrigeration of food reduces food waste; and household appliances free up time from household chores. Access to electricity improves people’s living conditions in many ways.

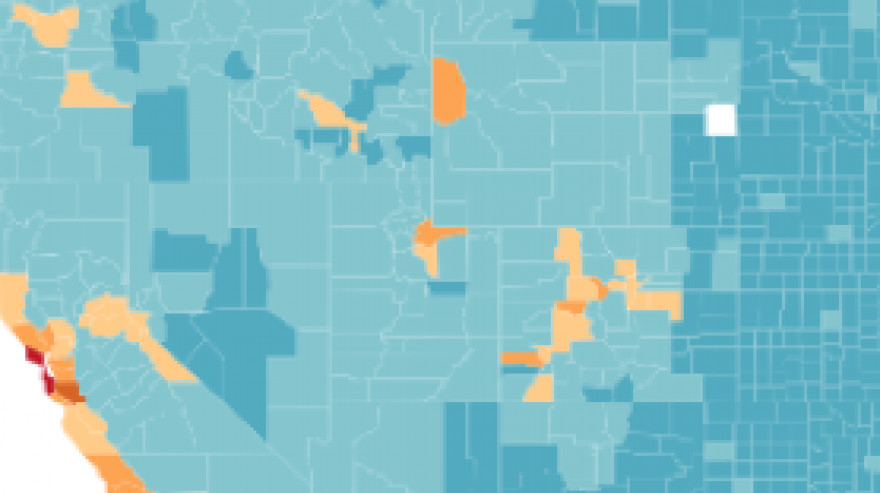

The World Bank data on the world map captures whether people have access to the most basic electricity supply — just enough to provide basic lighting and charge a phone or power a radio for 4 hours per day.

It shows that, especially in several African countries, a large share of the population lacks the benefits that basic electricity offers. No radio and no light at night.

Data explorers

Interactive visualization tools to explore a wide range of related indicators.

Data Explorer

Population & Demography

- Global Health


All our topics

All our data, research, and writing — topic by topic.

Population and Demographic Change

- Population Change:

- Population Growth

- Age Structure

- Gender Ratio

- Births and Deaths:

- Life Expectancy

- Child and Infant Mortality

- Fertility Rate

- Geography of the World Population:

- Urbanization

- Health Risks:

- Lead Pollution

- Alcohol Consumption

- Opioids, Cocaine, Cannabis, and Other Illicit Drugs

- Air Pollution

- Outdoor Air Pollution

- Indoor Air Pollution

- Infectious Diseases:

- Coronavirus Pandemic (COVID-19)

- Mpox (monkeypox)

- Diarrheal Diseases

- Tuberculosis

- Health Institutions and Interventions:

- Vaccination

- Healthcare Spending

- Eradication of Diseases

- Life and Death:

- Causes of Death

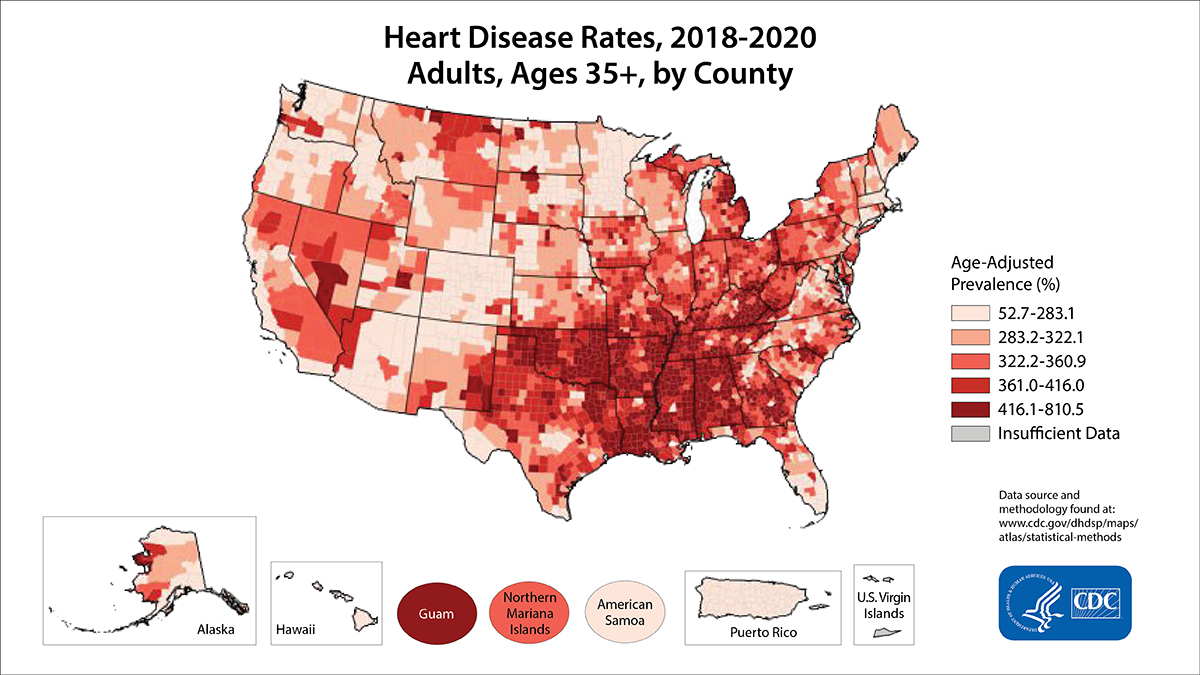

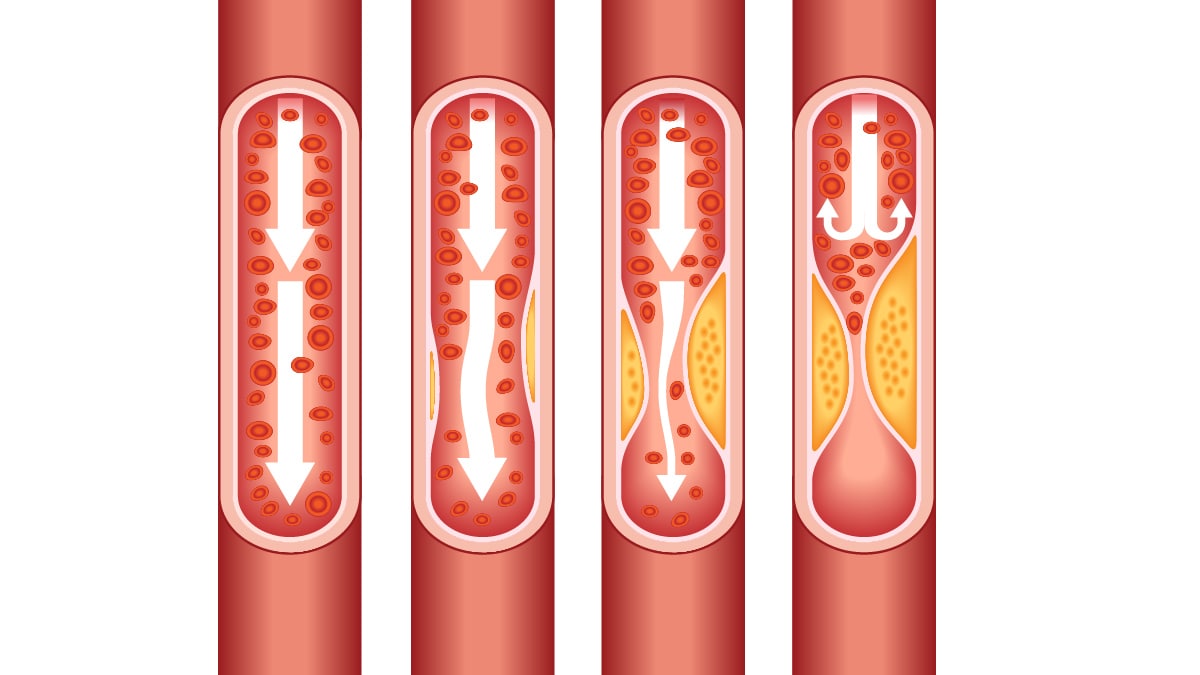

- Cardiovascular Diseases

- Burden of Disease

- Maternal Mortality

Energy and Environment

- Energy Systems:

- Access to Energy

- Fossil Fuels

- Renewable Energy

- Nuclear Energy

- Waste and Pollution:

- Climate and Air:

- CO₂ and Greenhouse Gas Emissions

- Climate Change

- Ozone Layer

- Clean Water and Sanitation

- Clean Water

- Water Use and Stress

- Environment and Ecosystems:

- Natural Disasters

- Biodiversity

- Environmental Impacts of Food Production

- Animal Welfare

- Forests and Deforestation

Food and Agriculture

- Hunger and Undernourishment

- Food Supply

- Food Prices

- Diet Compositions

- Human Height

- Micronutrient Deficiency

- Food Production:

- Agricultural Production

- Crop Yields

- Meat and Dairy Production

- Farm Size and Productivity

- Agricultural Inputs:

- Fertilizers

- Employment in Agriculture

Poverty and Economic Development

- Public Sector:

- State Capacity

- Government Spending

- Education Spending

- Military Personnel and Spending

- Poverty and Prosperity:

- Economic Inequality

- Economic Growth

- Economic Inequality by Gender

- Child Labor

- Working Hours

- Women’s Employment

- Global Connections:

- Trade and Globalization

Education and Knowledge

- Global Education

- Research and Development

Innovation and Technological Change

- Space Exploration and Satellites

- Technological Change

Living Conditions, Community, and Wellbeing

- Housing and Infrastructure:

- Light at Night

- Homelessness

- Relationships:

- Marriages and Divorces

- Loneliness and Social Connections

- Happiness and Wellbeing:

- Human Development Index (HDI)

- Happiness and Life Satisfaction

Human Rights and Democracy

- Human Rights

- Women’s Rights

- LGBT+ Rights

- Violence Against Children and Children’s Rights

Violence and War

- War and Peace

- Nuclear Weapons

- Biological and Chemical Weapons



Five Key Trends in AI and Data Science for 2024

These developing issues should be on every leader’s radar screen, data executives say.


Carolyn Geason-Beissel/MIT SMR | Getty Images

Artificial intelligence and data science became front-page news in 2023. The rise of generative AI, of course, drove this dramatic surge in visibility. So, what might happen in the field in 2024 that will keep it on the front page? And how will these trends really affect businesses?

During the past several months, we’ve conducted three surveys of data and technology executives. Two involved attendees of MIT’s Chief Data Officer and Information Quality Symposium: one survey was sponsored by Amazon Web Services (AWS) and the other by Thoughtworks. The third survey was conducted by Wavestone, formerly NewVantage Partners, whose annual surveys we’ve written about in the past. In total, the new surveys involved more than 500 senior executives, perhaps with some overlap in participation.


Surveys don’t predict the future, but they do suggest what those people closest to companies’ data science and AI strategies and projects are thinking and doing. According to those data executives, here are the top five developing issues that deserve your close attention:

1. Generative AI sparkles but needs to deliver value.

As we noted, generative AI has captured a massive amount of business and consumer attention. But is it really delivering economic value to the organizations that adopt it? The survey results suggest that although excitement about the technology is very high, value has largely not yet been delivered. Large percentages of respondents believe that generative AI has the potential to be transformational; 80% of respondents to the AWS survey said they believe it will transform their organizations, and 64% in the Wavestone survey said it is the most transformational technology in a generation. A large majority of survey takers are also increasing investment in the technology. However, most companies are still just experimenting, either at the individual or departmental level. Only 6% of companies in the AWS survey had any production application of generative AI, and only 5% in the Wavestone survey had any production deployment at scale.


Production deployments of generative AI will, of course, require more investment and organizational change, not just experiments. Business processes will need to be redesigned, and employees will need to be reskilled (or, probably in only a few cases, replaced by generative AI systems). The new AI capabilities will need to be integrated into the existing technology infrastructure.

Perhaps the most important change will involve data — curating unstructured content, improving data quality, and integrating diverse sources. In the AWS survey, 93% of respondents agreed that data strategy is critical to getting value from generative AI, but 57% had made no changes to their data thus far.

2. Data science is shifting from artisanal to industrial.

Companies feel the need to accelerate the production of data science models. What was once an artisanal activity is becoming more industrialized. Companies are investing in platforms, processes and methodologies, feature stores, machine learning operations (MLOps) systems, and other tools to increase productivity and deployment rates. MLOps systems monitor the status of machine learning models and detect whether they are still predicting accurately. If they’re not, the models might need to be retrained with new data.


Most of these capabilities come from external vendors, but some organizations are now developing their own platforms. Although automation (including automated machine learning tools, which we discuss below) is helping to increase productivity and enable broader data science participation, the greatest boon to data science productivity is probably the reuse of existing data sets, features or variables, and even entire models.
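The MLOps monitoring idea described above can be sketched in a few lines. The sketch checks whether a deployed model is still predicting accurately and flags it for retraining if it is not. It is a minimal illustration and not how any particular MLOps product works. It assumes that the true outcome eventually becomes known for each prediction. The `AccuracyMonitor` class, its rolling window and its accuracy threshold are hypothetical choices made for this example.

```python
from __future__ import annotations

from collections import deque


class AccuracyMonitor:
    """Hypothetical rolling-accuracy monitor for a deployed model.

    Records whether each prediction matched the outcome observed later,
    and flags the model for retraining when accuracy over the most
    recent `window` predictions falls below `threshold`.
    """

    def __init__(self, window: int = 100, threshold: float = 0.8) -> None:
        if window <= 0:
            raise ValueError("window must be positive")
        self.threshold = threshold
        # A deque with maxlen silently drops the oldest outcome when full,
        # so only the most recent `window` predictions are considered.
        self.outcomes: deque[bool] = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        """Store whether a prediction turned out to be correct."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float | None:
        """Share of correct predictions in the window, or None if empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """True only when the window is full and accuracy is below threshold."""
        acc = self.accuracy()
        return (
            acc is not None
            and len(self.outcomes) == self.outcomes.maxlen
            and acc < self.threshold
        )


# Usage: two correct and two wrong predictions in a window of four.
monitor = AccuracyMonitor(window=4, threshold=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(prediction, actual)
print(monitor.accuracy())          # 0.5
print(monitor.needs_retraining())  # True
```

The monitor waits for a full window before flagging the model. This avoids triggering retraining on a handful of early predictions. In practice, monitoring often also tracks shifts in the input data, because true outcomes can arrive long after the prediction is made.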

3. Two versions of data products will dominate.

In the Thoughtworks survey, 80% of data and technology leaders said that their organizations were using or considering the use of data products and data product management. By data product, we mean packaging data, analytics, and AI in a software product offering, for internal or external customers. It’s managed from conception to deployment (and ongoing improvement) by data product managers. Examples of data products include recommendation systems that guide customers on what products to buy next and pricing optimization systems for sales teams.

But organizations view data products in two different ways. Just under half (48%) of respondents said that they include analytics and AI capabilities in the concept of data products. Some 30% view analytics and AI as separate from data products and presumably reserve that term for reusable data assets alone. Just 16% say they don’t think of analytics and AI in a product context at all.

We have a slight preference for a definition of data products that includes analytics and AI, since that is the way data is made useful. But all that really matters is that an organization is consistent in how it defines and discusses data products. If an organization prefers a combination of “data products” and “analytics and AI products,” that can work well too, and that definition preserves many of the positive aspects of product management. But without clarity on the definition, organizations could become confused about just what product developers are supposed to deliver.

4. Data scientists will become less sexy.

Data scientists, who have been called “unicorns” and the holders of the “sexiest job of the 21st century” because of their ability to make all aspects of data science projects successful, have seen their star power recede. A number of changes in data science are producing alternative approaches to managing important pieces of the work. One such change is the proliferation of related roles that can address pieces of the data science problem. This expanding set of professionals includes data engineers to wrangle data, machine learning engineers to scale and integrate the models, translators and connectors to work with business stakeholders, and data product managers to oversee the entire initiative.

Another factor reducing the demand for professional data scientists is the rise of citizen data science, wherein quantitatively savvy businesspeople create models or algorithms themselves. These individuals can use AutoML, or automated machine learning tools, to do much of the heavy lifting. Even more helpful to citizens is the modeling capability available in ChatGPT called Advanced Data Analysis. With a very short prompt and an uploaded data set, it can handle virtually every stage of the model creation process and explain its actions.

Of course, there are still many aspects of data science that do require professional data scientists. Developing entirely new algorithms or interpreting how complex models work, for example, are tasks that haven’t gone away. The role will still be necessary but perhaps not as much as it was previously — and without the same degree of power and shimmer.

5. Data, analytics, and AI leaders are becoming less independent.

This past year, we began to notice that increasing numbers of organizations were cutting back on the proliferation of technology and data “chiefs,” including chief data and analytics officers (and sometimes chief AI officers). That CDO/CDAO role, while becoming more common in companies, has long been characterized by short tenures and confusion about the responsibilities. We’re not seeing the functions performed by data and analytics executives go away; rather, they’re increasingly being subsumed within a broader set of technology, data, and digital transformation functions managed by a “supertech leader” who usually reports to the CEO. Titles for this role include chief information officer, chief information and technology officer, and chief digital and technology officer; real-world examples include Sastry Durvasula at TIAA, Sean McCormack at First Group, and Mojgan Lefebvre at Travelers.


This evolution in C-suite roles was a primary focus of the Thoughtworks survey, and 87% of respondents (primarily data leaders but some technology executives as well) agreed that people in their organizations are either completely, to a large degree, or somewhat confused about where to turn for data- and technology-oriented services and issues. Many C-level executives said that collaboration with other tech-oriented leaders within their own organizations is relatively low, and 79% agreed that their organization had been hindered in the past by a lack of collaboration.

We believe that in 2024, we’ll see more of these overarching tech leaders who have all the capabilities to create value from the data and technology professionals reporting to them. They’ll still have to emphasize analytics and AI because that’s how organizations make sense of data and create value with it for employees and customers. Most importantly, these leaders will need to be highly business-oriented, able to debate strategy with their senior management colleagues, and able to translate it into systems and insights that make that strategy a reality.

About the Authors

Thomas H. Davenport (@tdav) is the President’s Distinguished Professor of Information Technology and Management at Babson College, a fellow of the MIT Initiative on the Digital Economy, and senior adviser to the Deloitte Chief Data and Analytics Officer Program. He is coauthor of All in on AI: How Smart Companies Win Big With Artificial Intelligence (HBR Press, 2023) and Working With AI: Real Stories of Human-Machine Collaboration (MIT Press, 2022). Randy Bean (@randybeannvp) is an industry thought leader, author, founder, and CEO and currently serves as innovation fellow, data strategy, for global consultancy Wavestone. He is the author of Fail Fast, Learn Faster: Lessons in Data-Driven Leadership in an Age of Disruption, Big Data, and AI (Wiley, 2021).


Research data articles from across Nature Portfolio

Research data comprises research observations or findings, such as facts, images, measurements, records and files in various formats, and can be stored in databases. Data publication and archiving are important for the reuse of research data and the reproducibility of scientific research.

Latest research and reviews

Best practices for genetic and genomic data archiving

This Review discusses challenges and best practices for archiving genetics and genomics data to make them more accessible and FAIR compliant.

- Deborah M. Leigh

- Amy G. Vandergast

- Ivan Paz-Vinas

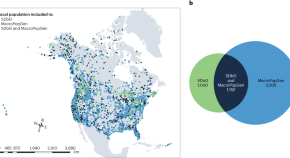

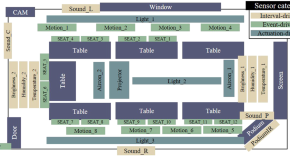

A dataset of ambient sensors in a meeting room for activity recognition

- Dongman Lee

An operational guide to translational clinical machine learning in academic medical centers

- Mukund Poddar

- Jayson S. Marwaha

- Gabriel A. Brat

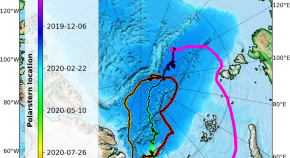

Cloud micro- and macrophysical properties from ground-based remote sensing during the MOSAiC drift experiment

- Hannes J. Griesche

- Patric Seifert

- Andreas Macke

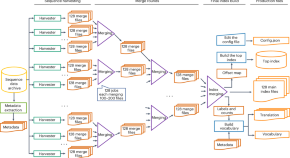

Indexing and searching petabase-scale nucleotide resources

The Pebblescout tool achieves an efficient search for subjects in a large nucleotide database such as runs in Sequence Read Archive data.

- Sergey A. Shiryev

- Richa Agarwala

FAIR assessment of nanosafety data reusability with community standards

- Ammar Ammar

- Chris Evelo

- Egon Willighagen

News and Comment

Ozempic keeps wowing: trial data show benefits for kidney disease

Semaglutide, the same compound in obesity drug Wegovy, slashes risk of kidney failure and death for people with diabetes.

- Rachel Fairbank

Standardized metadata for biological samples could unlock the potential of collections

- Vojtěch Brlík

Big data for everyone

- Henrietta Howells

Japan can embrace open science — but flexible approaches are key

Why it’s essential to study sex and gender, even as tensions rise

Some scholars are reluctant to research sex and gender out of fear that their studies will be misused. In a series of specially commissioned articles, Nature encourages scientists to engage.

Response to “The perpetual motion machine of AI-generated data and the distraction of ChatGPT as a ‘scientist’”

- William Stafford Noble


The state of open data report 2022: researchers need more support to assist with open data mandates.

New findings provide update on researchers’ attitudes towards open data

London, 13 October 2022

Researchers worldwide will need further assistance to comply with an increasing number of open data mandates, according to the authors of a new report. The State of Open Data Report 2022 is released today. It is the latest in an annual collaborative series from Digital Science, Figshare and Springer Nature. Based on a global survey, the report is now in its seventh year and provides insights into researchers’ attitudes towards and experiences of open data. With more than 5,400 respondents, the 2022 survey is the largest since the COVID-19 pandemic began. This year’s report also includes guest articles from open data experts at the National Institutes of Health (NIH), the White House Office of Science and Technology Policy (OSTP), the Computer Network Information Center of the Chinese Academy of Sciences (CNIC, CAS), publishers and universities.

Mark Hahnel, Founder and CEO of Figshare, says: “This year’s State of Open Data Report comes at a unique point in time when we’re seeing a growing number of open data mandates from funding organizations and policymakers, most notably the NIH and OSTP in the United States, but also recently from the National Health and Medical Research Council (NHMRC) in Australia, and in Europe and the UK.

“What is clear from the findings of our report is that while most researchers embrace the concepts of open data and open science, they also have some reasonable misgivings about how open data policies and practices impact on them. In an environment where open data mandates are increasing, funding organizations would benefit from working even more closely with researchers and providing them with additional support to help smooth the transition to a fully open data future.

“We all have a role to play in driving a better future for open data and accessible research, and one way we can do that through this report is by listening to the voices of researchers, funders, institutions, and publishers.”

Primary findings from this year’s report indicated that:

- A growing share of researchers favour making research data openly available as common practice (four out of every five researchers agreed with this). This trend is supported in part by the fact that over 70% of respondents are now required to follow a policy on data sharing.

- However, researchers still cite key needs that would help them share their data: more training or information on policies for access, sharing and reuse (55%), and long-term storage and data management strategies (52%). Credit and recognition were once again a key theme for researchers in sharing their data. Of those who had previously shared data, 66% had received some form of recognition for their efforts, most commonly via full citation in another article (41%), followed by co-authorship on a paper that had used the data.

- Researchers are more inclined to share their research data where it can have an impact on citations (67%) and the visibility of their research (61%), rather than being motivated by public benefit or journal/publisher mandate (both 56%).

Graham Smith, Open Data Program Manager, Springer Nature, says: “For the past seven years these surveys have helped paint a picture of researcher perspectives on open data. The report shows us not only the progress made but the steps that still need to be taken on the journey towards an open data future in support of the research community. Whether it’s the broad support of researchers for making research data openly available as common practice or the changing attitudes to open data mandates, we must learn from and deliver concrete steps forward to address what the community is telling us.

“Springer Nature is firmly committed to this and we continue to work closely with our partners, such as Figshare and Digital Science, to create better understanding around data sharing.”

Daniel Hook, CEO of Digital Science, says: “Digital Science is committed to making open, collaborative and inclusive research possible, as we believe this environment will lead to the greatest benefit for society. Now in its seventh year, while the articles in The State of Open Data Report represent a unique set of snapshots marking the evolution of attitudes about Open Data in our community, the data behind the survey constitutes a valuable resource to track researcher sentiment regarding open data and their experiences of data sharing. I believe that these data represent an amazing opportunity to understand the challenges and needs of our community so that we can collectively build better infrastructure to support research.”

The full report is available on Figshare.

Notes to Editors

Key findings via theme of the report:

Support for open data

- Four out of every five respondents are in favour of research data being made openly available as common practice.

- 74% of respondents reported sharing their data during publication.

- Approximately one fifth of respondents reported having no concerns about sharing data openly – this proportion has been steadily growing since 2018.

- 88% of researchers surveyed are supportive of making research articles open access (OA) as a common scholarly practice.

Motivations and benefits

- When it comes to researchers sharing their data, citations of research papers (67%) and increased impact and visibility of papers (61%) outweigh public benefit or journal/publisher mandate (both 56%) as motivation.

- Of those who had previously shared data, 66% had received some form of recognition for their efforts – most commonly via full citation in another article (41%) followed by co-authorship on a paper that had used the data.

- A third of respondents indicated they had been involved in a research collaboration as a result of data they had previously shared.

Open data mandates

- 70% of respondents were required to follow a policy on data sharing for their most recent piece of research.

- More than two-thirds of respondents are supportive “to some extent” of a national mandate for making research data openly available. This number has been declining since 2019.

- Just over half (52%) of respondents in the 2022 survey felt that sharing data should be a part of the requirement for awarding research grants. Again, this number has been declining since 2019.

- Only 19% of respondents believe that researchers get sufficient credit for sharing their data, while 75% say they receive too little credit.

- Just under a quarter of respondents indicated that they had previously received support with planning, managing or sharing their research data.

- The greatest concern among respondents is misuse of their data (35%).

- Researchers felt that more training or information would best address two key needs: better understanding and definitions of policies for access, sharing and reuse (55%), and long-term storage and data management strategies (52%). Both affect the two ends of the research cycle.

Key demographics of respondents

- Researchers from China now comprise 11% of all respondents, equal with that of the United States. China and the US are the two countries with the biggest response to the survey, followed by India, Japan, Germany, Italy, UK, Canada, Brazil, France and Spain.

- 31% of respondents were early career researchers (ECRs), while a further 31% classed themselves as senior researchers.

- The largest group of respondents (42%) was from medicine & life sciences; 38% were from mathematics, physics and applied sciences; and 17% were from humanities and social sciences (an increase of 3%).

- Respondents were broadly categorised as: Open science advocates (32%), Open publishing advocates (26%), Cautiously pro open science (25%), Open science agnostics (11%), and Non-believers of open science (6%).

About Springer Nature

For over 180 years Springer Nature has been advancing discovery by providing the best possible service to the whole research community. We help researchers uncover new ideas, make sure all the research we publish is significant, robust and stands up to objective scrutiny, that it reaches all relevant audiences in the best possible format, and can be discovered, accessed, used, re-used and shared. We support librarians and institutions with innovations in technology and data; and provide quality publishing support to societies.

As a research publisher, Springer Nature is home to trusted brands including Springer, Nature Portfolio, BMC, Palgrave Macmillan and Scientific American. For more information, please visit springernature.com and @SpringerNature.

About Figshare

Figshare is a leading provider of out-of-the-box, cloud repository software for research data, papers, theses, teaching materials, conference outputs, and more. Research outputs become more discoverable and impactful with search engine indexing and usage metrics including citations and altmetrics. Figshare provides a proficient platform for all types of research data to be shared and showcased in a FAIR way whilst enabling researchers to receive credit. Visit knowledge.figshare.com and follow @figshare on Twitter.

About Digital Science

Digital Science is a technology company working to make research more efficient. We invest in, nurture and support innovative businesses and technologies that make all parts of the research process more open and effective. Our portfolio includes admired brands including Altmetric, Dimensions, Figshare, ReadCube, Symplectic, IFI CLAIMS, Overleaf, Ripeta and Writefull. We believe that together, we can help researchers make a difference. Visit www.digital-science.com and follow @digitalsci on Twitter.

Sam Sule | Communications | Springer Nature

David Ellis | Digital Science

Simon Linacre | Digital Science



- © 2024 Springer Nature


JHU has stopped collecting data as of

After three years of around-the-clock tracking of COVID-19 data from...

What is the JHU CRC Now?

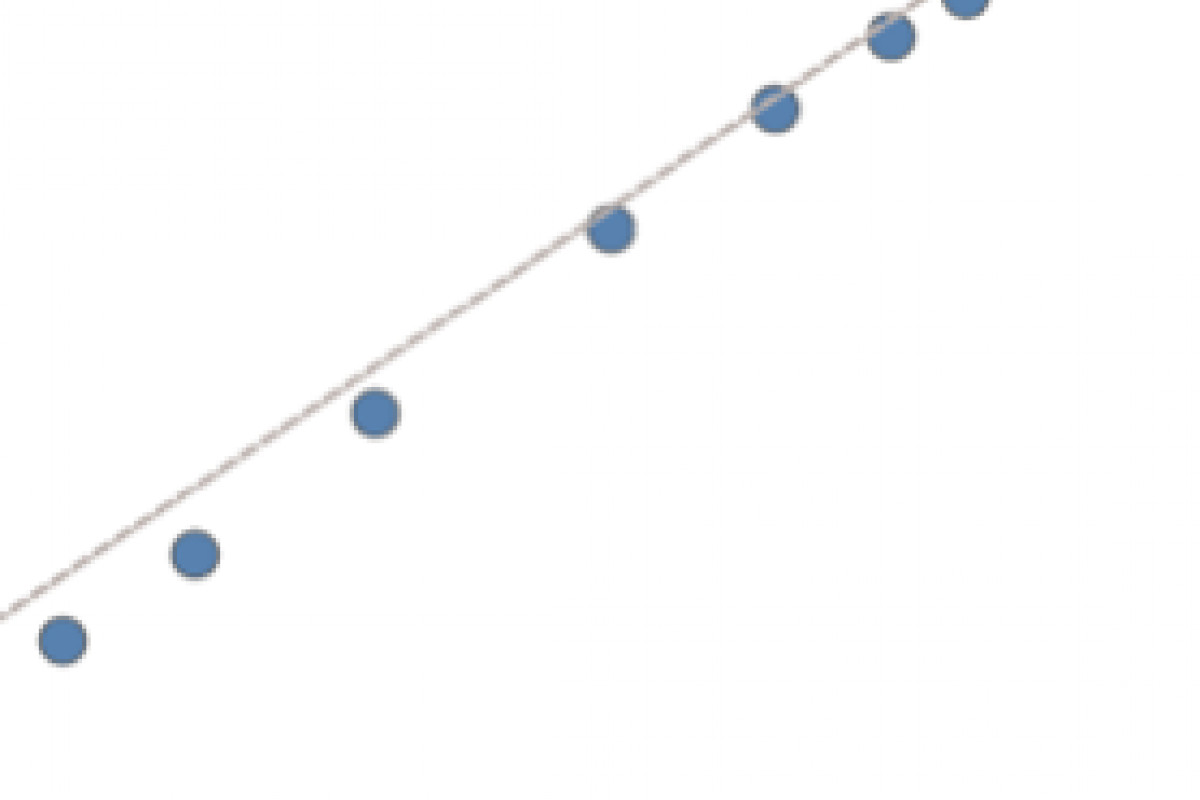

The Johns Hopkins Coronavirus Resource Center established a new standard for infectious disease tracking by publicly providing pandemic data in near real time. It began on Jan. 22, 2020, as the COVID-19 Dashboard, operated by the Center for Systems Science and Engineering and the Applied Physics Laboratory. But the map of red dots quickly evolved into the global go-to hub for monitoring a public health catastrophe. By March 3, 2020, Johns Hopkins had expanded the site into a comprehensive collection of raw data and independent expert analysis known as the Coronavirus Resource Center (CRC), an enterprise that harnessed world-renowned expertise from across Johns Hopkins University & Medicine.

Why did we shut down?

After three years of 24/7 operations, the CRC is ceasing its data collection efforts because an increasing number of U.S. states have slowed their reporting cadences. In addition, the federal government has improved its pandemic data tracking enough to warrant the CRC’s exit. From the start, this effort should have been provided by the U.S. government. This does not mean Johns Hopkins believes the pandemic is over. It is not. The institution remains committed to maintaining a leadership role in providing the public and policymakers with cutting-edge insights into COVID-19. See details below.

Ongoing Johns Hopkins COVID-19 Resources

The Hub — the news and information website for Johns Hopkins — publishes the latest updates on COVID-19 research about vaccines, treatments, and public health measures.

The Johns Hopkins Bloomberg School of Public Health maintains the COVID-19 Projects and Initiatives page to share the latest research and practice efforts by Bloomberg faculty.

The Johns Hopkins Center for Health Security has been at the forefront of providing policymakers and the public with vital information on how to mitigate disease spread.

The Johns Hopkins International Vaccine Access Center offers an online, interactive map-based platform for easy navigation of hundreds of research reports into vaccine use and impact.

Johns Hopkins Medicine provides various online portals that provide information about COVID-19 patient care, vaccinations, testing and more.

Accessing past data

Johns Hopkins maintains two data repositories for the information collected by the Coronavirus Resource Center between Jan. 22, 2020 and March 10, 2023. The first features global cases and deaths data as plotted by the Center for Systems Science and Engineering. The second features U.S. and global vaccination data, testing information and demographics that were maintained by Johns Hopkins University’s Bloomberg Center for Government Excellence.

How to use the CSSE GitHub

- Click on 'csse_covid_19_data' to access the chronological time series data.

- Click on 'csse_covid_19_time_series' to access daily data for cases and deaths.

- Click on 'confirmed_US' for U.S. cases, 'deaths_US' for U.S. fatalities, 'confirmed_global' for international cases, or 'deaths_global' for international fatalities.

- Click 'View Raw'.

- Use your browser's 'Save As' option to save the file as a spreadsheet (CSV).
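The manual steps above download one file at a time. A minimal Python sketch can read the same raw CSV and total it by country using only the standard library.

The sketch rests on a few assumptions:

- The raw-file URL is the one shown below.
- The *global* files start with four metadata columns (Province/State, Country/Region, Lat, Long), followed by one cumulative-count column per date.
- The U.S. files carry extra metadata columns and would need a different offset.

The function names are hypothetical. The data are archived, so check the URL before relying on it.

```python
import csv
import io
import urllib.request
from collections import defaultdict

# Assumed raw-file URL for the archived CSSE global confirmed-cases series (verify before use).
CONFIRMED_GLOBAL_URL = (
    "https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/"
    "csse_covid_19_data/csse_covid_19_time_series/"
    "time_series_covid19_confirmed_global.csv"
)

# Assumed layout of the *global* files: Province/State, Country/Region, Lat, Long,
# then one column per date holding a cumulative count.
META_COLUMNS = 4


def fetch_csv(url: str) -> str:
    """Download a CSV file as text (the 'View Raw' step done programmatically)."""
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def cumulative_by_country(csv_text: str):
    """Sum cumulative counts per country across its provinces/states, one value per date."""
    reader = csv.reader(io.StringIO(csv_text))  # csv handles quoted names such as "Korea, South"
    header = next(reader)
    dates = header[META_COLUMNS:]
    totals = defaultdict(lambda: [0] * len(dates))
    for row in reader:
        if not row:
            continue  # skip blank lines
        country = row[1]
        for i, value in enumerate(row[META_COLUMNS:]):
            totals[country][i] += int(value) if value else 0
    return dates, dict(totals)


def daily_new(cumulative):
    """Turn a cumulative series into day-over-day increments; the first day is kept as-is."""
    if not cumulative:
        return []
    return [cumulative[0]] + [
        today - yesterday for yesterday, today in zip(cumulative, cumulative[1:])
    ]
```

For example, `dates, totals = cumulative_by_country(fetch_csv(CONFIRMED_GLOBAL_URL))` followed by `daily_new(totals["Italy"])` would give day-over-day new cases for Italy, provided the file is still reachable at that address. Jurisdictions sometimes revised cumulative totals downward, so some daily values can be negative.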

How to use the GovEx GitHub

- Visit the U.S. 'data dictionary' and the global 'data dictionary' to understand the vaccine, testing and demographic data available for your use.

- Example: Click on either 'us_data' or 'global_data' for vaccine information.

- Click on 'time_series' to access daily reports for either the U.S. or the world.

- Select either 'vaccines' or 'doses administered' for the U.S. or the world.

- For either database, click 'View Raw' to view the data or to save it to a spreadsheet.

Government Resources

U.S. CASES & DEATHS

The U.S. Centers for Disease Control and Prevention maintains a national data tracker.

GLOBAL TRENDS

The World Health Organization provides information about global spread.

The CDC also provides a vaccine data tracker for the U.S., while Our World in Data from Oxford University provides global vaccine information.

HOSPITAL ADMISSIONS

The CDC and the U.S. Department of Health and Human Services have provided hospital admission data in the United States.

THANK YOU TO ALL CONTRIBUTORS TO THE JHU CRC TEAM

Thank you to our partners and our funders, and special thanks to:

Aaron Katz, Adam Lee, Alan Ravitz, Alex Roberts, Alexander Evelo, Amanda Galante, Amina Mahmood, Angel Aliseda Alonso, Anna Yaroslaski, Arman Kalikian, Beatrice Garcia, Breanna Johnson, Cathy Hurley, Christina Pikas, Christopher Watenpool, Cody Meiners, Cory McCarty, Dane Galloway, Daniel Raimi Zlatic, David Zhang, Doug Donovan, Elaine Gehr, Emily Camacho, Emily Pond, Ensheng Dong, Eric Forte, Ethel Wong, Evan Bolt, Fardin Ganjkhanloo, Farzin Ahmadi, Fernando Ortiz-Sacarello, George Cancro, Grant Zhao, Greta Kinsley, Gus Sentementes, Heather Bree, Hongru Du, Ian Price, Jan LaBarge, Jason Williams, Jeff Gara, Jennifer Nuzzo, Jeremy Ratcliff, Jill Rosen, Jim Maguire, John Olson, John Piorkowski, Jordan Wesley, Joseph Duva, Joseph Peterson, Josh Porterfield, Joshua Poplawski, Kailande Cassamajor, Kevin Medina Santiago, Khalil Hijazi, Krushi Shah, Lana Milman, Laura Asher, Laura Murphy, Lauren Kennell, Louis Pang, Mara Blake, Marianne von Nordeck, Marissa Collins, Marlene Caceres, Mary Conway Vaughan, Meg Burke, Melissa Leeds, Michael Moore, Miles Stewart, Miriam McKinney Gray, Mitch Smallwood, Molly Mantus, Nick Brunner, Nishant Gupta, Oren Tirschwell, Paul Nicholas, Phil Graff, Phillip Hilliard, Promise Maswanganye, Raghav Ramachandran, Reina Chano Murray, Roman Wang, Ryan Lau, Samantha Cooley, Sana Talwar, Sara Bertran de Lis, Sarah Prata, Sarthak Bhatnagar, Sayeed Choudury, Shelby Wilson, Sheri Lewis, Steven Borisko, Tamara Goyea, Taylor Martin, Teresa Colella, Tim Gion, Tim Ng, William La Cholter, Xiaoxue Zhou, Yael Weiss

CRC in the Media

TIME Names CRC Go-To Data Source

TIME Magazine named the Johns Hopkins Coronavirus Resource Center one of its Top 100 Inventions for 2020.

Research!America Praises CRC Work

Research!America awarded the CRC a public health honor for providing reliable real time data about COVID-19.

CRC Earns Award From Fast Company

The Johns Hopkins Coronavirus Resource Center wins Fast Company’s 2021 Innovative Team of the Year award.

Public Service

Lauren Gardner Wins Lasker Award for Service

Lauren Gardner, co-creator of the COVID-19 Dashboard, won the 2022 Lasker-Bloomberg Public Service Award.


ScienceDaily features breaking news about the latest discoveries in science, health, the environment, technology, and more -- from leading universities, scientific journals, and research organizations.

Visitors can browse more than 500 individual topics, grouped into 12 main sections (listed under the top navigational menu), covering: the medical sciences and health; physical sciences and technology; biological sciences and the environment; and social sciences, business and education. Headlines and summaries of relevant news stories are provided on each topic page.

Stories are posted daily, selected from press materials provided by hundreds of sources from around the world. Links to sources and relevant journal citations (where available) are included at the end of each post.



When and how to update systematic reviews: consensus and checklist


This article has a correction; please see:

- Errata - September 06, 2016

- Paul Garner, professor (1)

- Sally Hopewell, associate professor (2)

- Jackie Chandler, methods coordinator (3)

- Harriet MacLehose, senior editor (3)

- Elie A Akl, professor (5, 6)

- Joseph Beyene, associate professor (7)

- Stephanie Chang, director (8)

- Rachel Churchill, professor (9)

- Karin Dearness, managing editor (10)

- Gordon Guyatt, professor (4)

- Carol Lefebvre, information consultant (11)

- Beth Liles, methodologist (12)

- Rachel Marshall, editor (3)

- Laura Martínez García, researcher (13)

- Chris Mavergames, head (14)

- Mona Nasser, clinical lecturer in evidence based dentistry (15)

- Amir Qaseem, vice president and chair (16, 17)

- Margaret Sampson, librarian (18)

- Karla Soares-Weiser, deputy editor in chief (3)

- Yemisi Takwoingi, senior research fellow in medical statistics (19)

- Lehana Thabane, director and professor (4, 20)

- Marialena Trivella, statistician (21)

- Peter Tugwell, professor of medicine, epidemiology, and community medicine (22)

- Emma Welsh, managing editor (23)

- Ed C Wilson, senior research associate in health economics (24)

- Holger J Schünemann, professor (4, 5)

- 1 Cochrane Infectious Diseases Group, Department of Clinical Sciences, Liverpool School of Tropical Medicine, Liverpool L3 5QA, UK

- 2 Oxford Clinical Trials Research Unit, University of Oxford, Oxford, UK

- 3 Cochrane Editorial Unit, Cochrane Central Executive, London, UK

- 4 Department of Clinical Epidemiology and Biostatistics and Department of Medicine, McMaster University, Hamilton, ON, Canada

- 5 Cochrane GRADEing Methods Group, Ottawa, ON, Canada

- 6 Department of Internal Medicine, American University of Beirut, Beirut, Lebanon

- 7 Department of Mathematics and Statistics, McMaster University

- 8 Evidence-based Practice Center Program, Agency for Healthcare Research and Quality, Rockville, MD, USA

- 9 Centre for Reviews and Dissemination, University of York, York, UK

- 10 Cochrane Upper Gastrointestinal and Pancreatic Diseases Group, Hamilton, ON, Canada

- 11 Lefebvre Associates, Oxford, UK

- 12 Kaiser Permanente National Guideline Program, Portland, OR, USA

- 13 Iberoamerican Cochrane Centre, Barcelona, Spain

- 14 Cochrane Informatics and Knowledge Management, Cochrane Central Executive, Freiburg, Germany

- 15 Plymouth University Peninsula School of Dentistry, Plymouth, UK

- 16 Department of Clinical Policy, American College of Physicians, Philadelphia, PA, USA

- 17 Guidelines International Network, Pitlochry, UK

- 18 Children’s Hospital of Eastern Ontario, Ottawa, ON, Canada

- 19 Institute of Applied Health Research, University of Birmingham, Birmingham, UK

- 20 Biostatistics Unit, Centre for Evaluation, McMaster University, Hamilton, ON, Canada

- 21 Centre for Statistics in Medicine, University of Oxford, Oxford, UK

- 22 University of Ottawa, Ottawa, ON, Canada

- 23 Cochrane Airways Group, Population Health Research Institute, St George’s, University of London, London, UK

- 24 Cambridge Centre for Health Services Research, University of Cambridge, Cambridge, UK

- Correspondence to: P Garner Paul.Garner{at}lstmed.ac.uk

- Accepted 26 May 2016

Updating of systematic reviews is generally more efficient than starting all over again when new evidence emerges, but to date there has been no clear guidance on how to do this. This guidance helps authors of systematic reviews, commissioners, and editors decide when to update a systematic review, and then how to go about updating the review.

Systematic reviews synthesise relevant research around a particular question. Preparing a systematic review is time and resource consuming, and provides a snapshot of knowledge at the time of incorporation of data from studies identified during the latest search. Newly identified studies can change the conclusion of a review. If they have not been included, this threatens the validity of the review, and, at worst, means the review could mislead. For patients and other healthcare consumers, this means that care and policy development might not be fully informed by the latest research; furthermore, researchers could be misled and carry out research in areas where no further research is actually needed. 1 Thus, there are clear benefits to updating reviews, rather than duplicating the entire process as new evidence emerges or new methods develop. Indeed, there is probably added value to updating a review, because this will include taking into account comments and criticisms, and adoption of new methods in an iterative process. 2 3 4 5 6

Cochrane has over 20 years of experience with preparing and updating systematic reviews, with the publication of over 6000 systematic reviews. However, it has not been possible to uphold Cochrane’s principle of keeping all reviews up to date, and the organisation has had to adapt: from updating when new evidence becomes available, 7 to updating every two years, 8 to updating based on need and priority. 9 This experience has shown that it is not possible, sensible, or feasible to continually update all reviews all the time. Other groups, including guideline developers and journal editors, adopt updating principles (as applied, for example, by the Systematic Reviews journal; https://systematicreviewsjournal.biomedcentral.com/).

The panel for updating guidance for systematic reviews (PUGs) group met to draw together experiences and identify a common approach. The PUGs guidance can help individuals or academic teams working outside of a commissioning agency or Cochrane, who are considering writing a systematic review for a journal or to prepare for a research project. The guidance could also help these groups decide whether their effort is worthwhile.

Summary points

Updating systematic reviews is, in general, more efficient than starting afresh when new evidence emerges. The panel for updating guidance for systematic reviews (PUGs; comprising review authors, editors, statisticians, information specialists, related methodologists, and guideline developers) met to develop guidance for people considering updating systematic reviews. The panel proposed the following:

Decisions about whether and when to update a systematic review are judgments made for individual reviews at a particular time. These decisions can be made by agencies responsible for systematic review portfolios, journal editors with systematic review update services, or author teams considering embarking on an update of a review.

The decision needs to take into account whether the review addresses a current question, uses valid methods, and is well conducted; and whether there are new relevant methods, new studies, or new information on existing included studies. Given this information, the agency, editors, or authors need to judge whether the update will influence the review findings or credibility sufficiently to justify the effort in updating it.

Review authors and commissioners can use a decision framework and checklist to navigate and report these decisions with “update status” and rationale for this status. The panel noted that the incorporation of new synthesis methods (such as Grading of Recommendations Assessment, Development and Evaluation (GRADE)) is also often likely to improve the quality of the analysis and the clarity of the findings.

Given a decision to update, the process needs to start with an appraisal and revision of the background, question, inclusion criteria, and methods of the existing review.

Search strategies should be refined, taking into account changes in the question or inclusion criteria. An analysis of yield from the previous edition, in relation to databases searched, terms, and languages can make searches more specific and efficient.

In many instances, an update represents a new edition of the review, and authorship of the new version needs to follow criteria of the International Committee of Medical Journal Editors (ICMJE). New approaches to publishing licences could help new authors build on and re-use the previous edition while giving appropriate credit to the previous authors.
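The decision described in these summary points can be written as a short, hypothetical checklist function. This is a sketch, not the PUGs checklist itself. The field names, the order in which the checks are applied, and the status labels are all assumptions chosen for illustration. The final field stands in for the judgment that the panel says must be made for each review at a particular time. The function returns an update status together with a rationale, mirroring the recommendation to report both.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ReviewAssessment:
    """Hypothetical yes/no answers to the questions raised in the summary points."""
    addresses_current_question: bool
    valid_methods_and_well_conducted: bool
    new_relevant_methods: bool
    new_studies_or_information: bool
    # Stands in for the case-by-case judgment: would the new material change the
    # review's findings or credibility enough to justify the effort of updating?
    change_justifies_effort: bool


def update_status(a: ReviewAssessment) -> Tuple[str, str]:
    """Return an (update status, rationale) pair; the labels are illustrative only."""
    if not a.addresses_current_question:
        return ("no update", "the review no longer addresses a current question")
    if not a.valid_methods_and_well_conducted:
        return ("no update", "the existing review is not a sound basis for an update")
    if not (a.new_relevant_methods or a.new_studies_or_information):
        return ("no update", "no new relevant methods, studies, or information")
    if not a.change_justifies_effort:
        return ("no update", "new material is unlikely to change findings or credibility enough")
    return ("update", "new methods or evidence are likely to change findings or credibility")
```

For instance, a current, well-conducted review with newly published trials expected to shift its conclusions would return `("update", ...)`. The same review with no new studies or methods would return `("no update", ...)` with the corresponding rationale.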

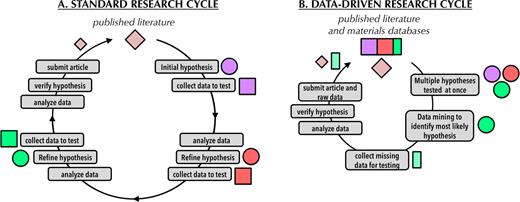

The panel also reflected on this guidance in the context of emerging technological advances in software, information retrieval, and electronic linkage and mining. With good synthesis and technology partnerships, these advances could revolutionise the efficiency of updating in the coming years.

Panel selection and procedures

An international panel of authors, editors, clinicians, statisticians, information specialists, other methodologists, and guideline developers was invited to a two-day workshop at McMaster University, Hamilton, Canada, on 26-27 June 2014, organised by Cochrane. The organising committee selected the panel (web appendix 1), invited participants, put forward the agenda, collected background materials and literature, and drafted the structure of the report.

The purpose of the workshop was to develop a common approach to updating systematic reviews, drawing on existing strategies, research, and the experience of people working in this area. The selection of participants aimed at broad representation of the different groups involved in producing systematic reviews (including authors, editors, statisticians, information specialists, and other methodologists) and of those using the reviews (guideline developers and clinicians). Participants within these groups were selected for their expertise and experience in updating and in previous work developing methods to assess reviews. Some were also selected because they were recognised for developing approaches within organisations to manage updating strategically. We sought to identify general approaches in this area rather than approaches specific to Cochrane, although inevitably most of the panel were engaged with Cochrane in some way.

The workshop was structured as a series of short presentations addressing key questions on whether, when, and how to update systematic reviews. The proceedings also covered the management of authorship and editorial decisions, and innovative and technological approaches. Each question was followed by small group discussions that deliberated on the content, formed recommendations, and recognised uncertainties. Large group, round table discussions then deliberated further on these small group developments. Recommendations were presented to an invited forum of over 40 people from McMaster University with varying levels of expertise in systematic reviews. McMaster is widely known for its contributions to the field of research evidence synthesis. The forum's comments helped inform the emerging guidance.

The organising committee became the writing committee after the meeting. They developed the guidance arising from the meeting, developed the checklist and diagrams, added examples, and finalised the manuscript. The guidance was circulated to the larger group three times, with the PUGs panel providing extensive feedback, all of which the writing committee carefully considered and addressed. The writing committee gave panel members the option of adding comments on the general or specific guidance in the report. Members could also register any view that differed from the guidance, which would be recorded in an annex. In the event, consensus was reached, and the annex was not required.

Definition of update

The PUGs panel defined an update of a systematic review as a new edition of a published systematic review with changes that can include new data, new methods, or new analyses to the previous edition. This expands on a previous definition of a systematic review update. 10 An update asks a similar question with regard to the participants, intervention, comparisons, and outcomes (PICO) and has similar objectives; thus it has similar inclusion criteria. These inclusion criteria can be modified in the light of developments within the topic area with new interventions, new standards, and new approaches. Updates will include a new search for potentially relevant studies and incorporate any eligible studies or data; and adjust the findings and conclusions as appropriate. Box 1 provides some examples.

Box 1: Examples of what factors might change in an updated systematic review

A systematic review of steroid treatment in tuberculosis meningitis used GRADE methods and split the composite outcome in the original review of death plus disability into its two components. This improved the clarity of the review's findings in relation to the effects and the importance of the effects of steroids on death and on disability. 11

A systematic review of dihydroartemisinin-piperaquine (DHAP) for treating malaria was updated with much more detailed analysis of the adverse effect data from the existing trials as a result of questions raised by the European Medicines Agency. Because the original review included other comparisons, the update required extracting only the DHAP comparisons from the original review, and a modification of the title and the PICO. 12

A systematic review of atorvastatin was updated with simple uncontrolled studies. 13 This update allowed comparisons with trials and strengthened the review findings. 14

Which systematic reviews should be updated and when?

Any group maintaining a portfolio of systematic reviews as part of their normative work, such as guidelines panels or Cochrane review groups, will need to prioritise which reviews to update. Box 2 presents the approaches used by the Agency for Healthcare Research and Quality (AHRQ) and Cochrane to prioritise which systematic reviews to update and when. The responsibility for deciding which systematic reviews should be updated, and when, varies: it may be centrally organised and resourced, as with the AHRQ scientific resource centre (box 2). In Cochrane, the decision making process is decentralised to the editorial team of each Cochrane Review Group, with different approaches applied, often informally.

Box 2: Examples of how different organisations decide on updating systematic reviews

Agency for Healthcare Research and Quality (US)

The AHRQ uses a needs based approach; updating systematic reviews depends on an assessment of several criteria:

Stakeholder impact

Interest from stakeholder partners (such as consumers, funders, guideline developers, clinical societies, James Lind Alliance)

Use and uptake (for example, frequency of citations and downloads)

Citation in scientific literature including clinical practice guidelines

Currency and need for update

New research is available

Review conclusions are probably dated

Update decision

Based on the above criteria, the decision is made to either update, archive, or continue surveillance.

Of over 50 Cochrane editorial teams, most, but not all, have some system for updating, although this process can be informal and loosely applied. Most editorial teams draw on some or all of the following criteria:

Strategic importance

Is the topic a priority area (for example, in current debates or considered by guidelines groups)?

Is there important new information available?

Practicalities in organising the update that many groups take into account

Size of the task (size and quality of the review, and how many new studies or analyses are needed)

Availability and willingness of the author team

Impact of update

New research impact on findings and credibility

Consider whether new methods will improve review quality

Decision to prioritise for update, postpone the update, or classify the review as no longer requiring an update

The PUGs panel recommended an individualised approach to updating, using the procedures summarised in figure 1. The figure provides status categories and options for classifying reviews into each of them, and builds on a previous decision tool and earlier work developing an updating classification system. 15 16 We provide a narrative for each step.

Fig 1 Decision framework to assess systematic reviews for updating, with standard terms to report such decisions
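The three steps of the framework in figure 1 can be read as a sequence of yes/no questions. The Python sketch below encodes one simplified reading of them. The class `ReviewAssessment`, the function `update_decision`, and the returned labels ("update", "continue surveillance", "new protocol", "no update") are hypothetical, drawn from the wording of this section and box 2 rather than from the standard terms in the figure; in practice each answer is a judgement by editors or experts, not a boolean.

```python
from dataclasses import dataclass


@dataclass
class ReviewAssessment:
    """Hypothetical yes/no answers to the questions in figure 1."""
    current_question: bool          # Step 1: does the review address a current question?
    well_used: bool                 # Step 1: has the review had good access or use?
    valid_methods: bool             # Step 1: valid methods and well conducted?
    new_methods: bool               # Step 2: any new relevant methods?
    new_studies: bool               # Step 2: any new studies or other information?
    methods_change_findings: bool   # Step 3: would new methods change findings or credibility?
    studies_change_findings: bool   # Step 3: would new studies change findings or credibility?


def update_decision(a: ReviewAssessment) -> str:
    """Return a hypothetical status label for one review."""
    # Step 1: a question that is no longer current ends the process.
    if not a.current_question:
        return "no update"
    # A poorly conducted review on a current, well-used question may need a new protocol.
    if not a.valid_methods:
        return "new protocol" if a.well_used else "no update"
    # Step 2: nothing new to incorporate, so keep monitoring.
    if not (a.new_methods or a.new_studies):
        return "continue surveillance"
    # Step 3: update only if the new material would change findings or credibility.
    if (a.new_methods and a.methods_change_findings) or (
        a.new_studies and a.studies_change_findings
    ):
        return "update"
    return "continue surveillance"


# Example: a current, well conducted review with new studies that would change the findings.
example = ReviewAssessment(True, True, True, False, True, False, True)
print(update_decision(example))  # prints: update
```

Ordering the checks this way mirrors the narrative below: currency and quality are settled first, so effort is spent on surveillance and impact assessment only for reviews worth keeping.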


Step 1: assess currency

Does the published review still address a current question?

An update is only worthwhile if the question is topical for decision making in practice, policy, or research priorities (fig 1). Agencies and others responsible for managing a portfolio of systematic reviews need to use both formal and informal horizon scanning. This type of scanning helps identify questions with currency, and therefore the reviews that should be updated. The process could include monitoring policy debates around the review, media outlets, and scientific (and professional) publications, and linking with guideline developers.

Has the review had good access or use?

Metrics for citations, article access and downloads, and sharing via social or traditional media can be used as proxy indicators of the currency and relevance of the review. Reviews that are widely cited and used could be important to update should the need arise. Conversely, comparable reviews that are never cited or are rarely downloaded might not be addressing a question that is valued, and might not be worth updating.

In most cases, updated reviews are most useful to stakeholders when new information or methods change the findings. However, in some circumstances an up to date search is important for retaining the credibility of the review, whether or not the main findings would change. For example, key stakeholders would dismiss a review that omits a study carried out in a relevant geographical setting, or a large, high profile study, even one that would not change the findings; and a guideline might require an up to date search to achieve credibility. Box 3 provides such examples. If the review does not answer a current question, for example because the intervention has been superseded, a decision can be made not to update, and no further intelligence gathering is required (fig 1).

Box 3: Examples of a systematic review’s currency

The public is interested in vitamin C for preventing the common cold: the Cochrane review includes over 29 trials showing either no or small effects, and concludes that there is good evidence of no important effects. 17 Assessment: still a current question for the public.

Low osmolarity oral rehydration salt (ORS) solution versus standard solution for acute diarrhoea in children: the 2001 Cochrane review 18 led the World Health Organization to recommend the new ORS solution formula worldwide, 19 and this formula has now been accepted globally. Assessment: no longer a current question.

Routine prophylactic antibiotics with caesarean section: the Cochrane review reports clear evidence of maternal benefit from placebo controlled trials but no information on the effects on the baby. 20 Assessment: this is a current question.

A systematic review published in the Lancet examined the effects of artemisinin based combination treatments compared with monotherapy for treating malaria and showed clear benefit. 21 Assessment: this review established the treatment globally; it is no longer a current question, and no update is required.

A Cochrane review of amalgam restorations for dental caries 22 is unlikely to be updated because the use of dental amalgam is declining, and the question is not seen as being important by many dental specialists. Assessment: no longer a current question.

Did the review use valid methods and was it well conducted?

If the question is current and clearly defined, the systematic review also needs to have used valid methods and to have been well conducted. If the review has vague inclusion criteria, poorly articulated outcomes, or inappropriate methods, then updating should not proceed. Instead, if the question is current and the review has been cited or used, it might be appropriate to start afresh with a new protocol. The appraisal should take into account the methods in use when the review was done.

Step 2: identify relevant new methods, studies, and other information

Are there any new relevant methods?

If the question is current but the review was done some years ago, it might not meet current methodological standards. Methods have advanced quickly, and data extraction and understanding of the review process have become more sophisticated. For example:

Methods for assessing risk of bias in randomised trials, 23 diagnostic test accuracy studies (QUADAS-2), 24 and observational studies (ROBINS-I). 25

Application of summary of findings, evidence profiles, and related GRADE methods has meant the characteristics of the intervention, characteristics of the participants, and risk of bias are more thoroughly and systematically documented. 26 27

Integration of evidence from other study designs, such as economic evaluations and qualitative research. 28

There are other incremental improvements in a wide range of statistical and methodological areas, for example, in describing and taking into account cluster randomised trials. 29 AMSTAR can assess the overall quality of a systematic review, 30 and the ROBIS tool can provide a more detailed assessment of the potential for bias. 31

Are there any new studies or other information?

If an authoring or commissioning team wants to ensure that a particular review stays up to date, routine surveillance for new, potentially relevant studies is needed, by searching and inspecting trial registers at regular intervals. Several approaches can be used, including:

Formal surveillance searching 32

Updating the full search strategies in the original review and running the searches

Tracking studies in clinical trial and other registers

Using literature appraisal services 33

Using a defined abbreviated search strategy for the update 34

Checking studies included in related systematic reviews. 35
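When surveillance combines several of the approaches listed above, the same study often turns up more than once, and some hits will already have been screened. A minimal Python sketch, assuming each record is identified by a single string such as a DOI or a trial registration number, merges results from several sources and keeps only records not previously screened. The function names, source labels, and identifiers are hypothetical; real bibliographic records often lack a shared identifier and need fuzzier matching.

```python
from typing import Dict, Iterable


def normalise(identifier: str) -> str:
    # Hypothetical normalisation: trim whitespace and ignore case (DOIs are case-insensitive).
    return identifier.strip().lower()


def new_records(sources: Dict[str, Iterable[str]],
                already_screened: Iterable[str]) -> Dict[str, str]:
    """Map each not-yet-screened identifier to the first source that found it."""
    seen = {normalise(i) for i in already_screened}
    found: Dict[str, str] = {}
    for source, identifiers in sources.items():
        for raw in identifiers:
            key = normalise(raw)
            # Skip records screened in earlier rounds and duplicates across sources.
            if key not in seen and key not in found:
                found[key] = source
    return found


sources = {
    "full search strategy": ["10.1000/TRIAL-A", "10.1000/trial-b"],
    "trial register": ["NCT00000001", "10.1000/trial-b"],
    "related reviews": ["10.1000/trial-c"],
}
print(new_records(sources, already_screened=["10.1000/trial-a"]))
```

Recording which source first found each record also gives a rough sense of how much each surveillance approach contributes for a given topic.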

How often this surveillance is done, and which approaches to use, depend on the circumstances and the topic. Some topics move quickly, and the definition of “regular intervals” will vary according to the field and according to the state of evidence in the field. For example, early in the life of a new intervention, there might be a plethora of studies, and surveillance would be needed more frequently.

Step 3: assess the effect of updating the review

Will the adoption of new methods change the findings or credibility?

Editors, referees, experts in the topic area, or methodologists can provide an informed view of whether a review can be substantially improved by applying current methodological expectations and new methods (fig 1). For example, a Cochrane review of iron supplementation in malaria concluded that there was “no significant difference between iron and placebo detected.” 36 An update of the review included a GRADE assessment of the certainty of the evidence, and was able to conclude, with high certainty evidence, that iron does not cause an excess of clinical malaria, because the upper limit of the confidence interval for the relative risk was 1.0. 37
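The iron example turns on the upper limit of a confidence interval for a relative risk. The Python sketch below computes a relative risk and an approximate 95% confidence interval from a single 2×2 table using the standard log method. The counts are hypothetical, and the published review pooled several trials rather than analysing one. An upper limit at or below 1.0 means the data are not compatible, at that confidence level, with an increase in risk.

```python
import math


def relative_risk_ci(events_trt: int, n_trt: int,
                     events_ctl: int, n_ctl: int, z: float = 1.96):
    """Relative risk with an approximate 95% CI (log method); needs non-zero events."""
    rr = (events_trt / n_trt) / (events_ctl / n_ctl)
    # Standard error of ln(RR)
    se = math.sqrt(1 / events_trt - 1 / n_trt + 1 / events_ctl - 1 / n_ctl)
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, lower, upper


def excludes_increase(upper: float) -> bool:
    # An upper limit at or below 1.0 is incompatible with an excess risk at this level.
    return upper <= 1.0


# Hypothetical trial: 200/2000 participants with clinical malaria on iron, 240/2000 on placebo.
rr, lower, upper = relative_risk_ci(200, 2000, 240, 2000)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f}); "
      f"excludes increase: {excludes_increase(upper)}")
```

A "no significant difference" statement alone does not say whether harm is ruled out; looking at where the upper limit falls, together with the certainty of the evidence, is what allows the stronger conclusion.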

Will the new studies, information, or data change the findings or credibility?

The assessment of the data in new studies, and of how these data might change the review, is often used to determine whether an update should go ahead and how quickly it should be conducted. These new data can be appraised in different ways. Initially, methods focused on statistical approaches to predict whether new studies would overturn the current review findings for the primary or desired outcome (table 1). Although this aspect is important, new studies can add important information to a review beyond making the estimate for the primary outcome more accurate and reliable. Box 4 gives examples.
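The formal prediction tools summarised in table 1 are not reproduced here. A simpler, related check is to rerun the meta-analysis with the new studies added and see whether the conclusion changes, in the spirit of a cumulative meta-analysis. The Python sketch below assumes a fixed-effect inverse-variance model on the log relative risk scale, an adverse outcome (so a relative risk below 1 favours the intervention), and hypothetical study results; a real update would also consider heterogeneity, for example with a random-effects model.

```python
import math
from typing import List, Tuple


def pool_fixed_effect(log_rr: List[float], se: List[float],
                      z: float = 1.96) -> Tuple[float, float, float]:
    """Inverse-variance fixed-effect pooled log RR with its confidence interval."""
    weights = [1 / s ** 2 for s in se]
    pooled = sum(w * y for w, y in zip(weights, log_rr)) / sum(weights)
    se_pooled = math.sqrt(1 / sum(weights))
    return pooled, pooled - z * se_pooled, pooled + z * se_pooled


def conclusion(lower: float, upper: float) -> str:
    # Assumes an adverse outcome, so log RR < 0 favours the intervention.
    if upper < 0:
        return "benefit"
    if lower > 0:
        return "harm"
    return "inconclusive"


# Hypothetical log relative risks and standard errors.
existing_log_rr, existing_se = [-0.20, -0.10], [0.15, 0.20]
new_log_rr, new_se = [-0.25], [0.10]

_, lo0, hi0 = pool_fixed_effect(existing_log_rr, existing_se)
_, lo1, hi1 = pool_fixed_effect(existing_log_rr + new_log_rr, existing_se + new_se)
print("before update:", conclusion(lo0, hi0))  # before update: inconclusive
print("after update:", conclusion(lo1, hi1))   # after update: benefit
```

Here one precise new study moves the pooled interval entirely below zero, which is the kind of change in findings that would argue for a prompt update. As box 4 shows, a check like this captures only part of what new studies can contribute.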

Table 1 Formal prediction tools: how potentially relevant new studies can affect review conclusions


Box 4: Examples of new information other than new trials being important

The iconic Cochrane review of steroids in preterm labour was thought to provide evidence of benefit in infants, such that the question no longer required new trials. However, a large new trial published in the Lancet in 2015 showed that, in low and middle income countries, strategies to promote the uptake of neonatal steroids increased neonatal mortality and suspected maternal infection. 49 This information needs to be incorporated into the review in some way to maintain its credibility.