Classroom Q&A

With Larry Ferlazzo

In this EdWeek blog, an experiment in knowledge-gathering, Ferlazzo will address readers’ questions on classroom management, ELL instruction, lesson planning, and other issues facing teachers. Send your questions to [email protected]. Read more from this blog.

Eight Instructional Strategies for Promoting Critical Thinking


(This is the first post in a three-part series.)

The new question-of-the-week is:

What is critical thinking and how can we integrate it into the classroom?

This three-part series will explore what critical thinking is, whether it can be specifically taught, and, if so, how teachers can teach it in their classrooms.

Today’s guests are Dara Laws Savage, Patrick Brown, Meg Riordan, Ph.D., and Dr. PJ Caposey. Dara, Patrick, and Meg were also guests on my 10-minute BAM! Radio Show. You can also find a list of, and links to, previous shows here.

You might also be interested in The Best Resources On Teaching & Learning Critical Thinking In The Classroom.

Current Events

Dara Laws Savage is an English teacher at the Early College High School at Delaware State University, where she serves as a teacher and instructional coach and lead mentor. Dara has been teaching for 25 years (career preparation, English, photography, yearbook, newspaper, and graphic design) and has presented nationally on project-based learning and technology integration:

There is so much going on right now and there is an overload of information for us to process. Did you ever stop to think how our students are processing current events? They see news feeds, hear news reports, and scan photos and posts, but are they truly thinking about what they are hearing and seeing?

I tell my students that my job is not to give them answers but to teach them how to think about what they read and hear. So what is critical thinking and how can we integrate it into the classroom? There are just as many definitions of critical thinking as there are people trying to define it. However, the Critical Thinking Consortium focuses on the tools to create a thinking-based classroom rather than a definition: “Shape the climate to support thinking, create opportunities for thinking, build capacity to think, provide guidance to inform thinking.” Using these four criteria and pairing them with current events, teachers can easily create learning spaces that thrive on thinking and keep students engaged.

One successful technique I use is the FIRE Write. Students are given a quote, a paragraph, an excerpt, or a photo from the headlines. Students are asked to Focus and respond to the selection for three minutes. Next, students are asked to Identify a phrase or section of the photo and write for two minutes. Third, students are asked to Reframe their response around a specific word, phrase, or section within their previous selection. Finally, students Exchange their thoughts with a classmate. Within the exchange, students also talk about how the selection connects to what we are covering in class.

There was a controversial Pepsi ad in 2017 involving Kendall Jenner and a protest with a police presence. The imagery in the photo was strikingly similar to a photo that went viral with a young lady standing opposite a police line. Using that image from a current event engaged my students and gave them the opportunity to think critically about events of the time.

Here are the two photos and a student response:

F - Focus on both photos and respond for three minutes

In the first picture, you see a strong and courageous black female, bravely standing in front of two officers in protest. She is risking her life to do so. Iesha Evans is simply proving to the world she does NOT mean less because she is black … and yet officers are there to stop her. She did not step down. In the picture below, you see Kendall Jenner handing a police officer a Pepsi. Maybe this wouldn’t be a big deal, except this was Pepsi’s weak, pathetic, and outrageous excuse of a commercial that belittles the whole movement of people fighting for their lives.

I - Identify a word or phrase, underline it, then write about it for two minutes

A white, privileged female in place of a fighting black woman was asking for trouble. A struggle we are continuously fighting every day, and they make a mockery of it. “I know what will work! Here Mr. Police Officer! Drink some Pepsi!” As if. Pepsi made a fool of themselves, and now their already dwindling fan base continues to ever shrink smaller.

R - Reframe your thoughts by choosing a different word, then write about that for one minute

You don’t know privilege until it’s gone. You don’t know privilege while it’s there—but you can and will be made accountable and aware. Don’t use it for evil. You are not stupid. Use it to do something. Kendall could’ve NOT done the commercial. Kendall could’ve released another commercial standing behind a black woman. Anything!

E - Exchange. Remember to discuss how this connects to our school song project and our previous discussions

This connects two ways - 1) We want to convey a strong message. Be powerful. Show who we are. And Pepsi definitely tried. … Which leads to the second connection. 2) Not mess up and offend anyone, as had the one alma mater had been linked to black minstrels. We want to be amazing, but we have to be smart and careful and make sure we include everyone who goes to our school and everyone who may go to our school.

As a final step, students read and annotate the full article and compare it to their initial response.

Using current events and critical-thinking strategies like FIRE writing helps create a learning space where thinking is the goal rather than a score on a multiple-choice assessment. Critical-thinking skills can cross over to any of students’ other courses and into life outside the classroom. After all, we as teachers want to help the whole student be successful, and critical thinking is an important part of navigating life after they leave our classrooms.

‘Explore-Before-Explain’

Patrick Brown is the executive director of STEM and CTE for the Fort Zumwalt school district in Missouri and an experienced educator and author:

Planning for critical thinking focuses on teaching the most crucial science concepts, practices, and logical-thinking skills as well as the best use of instructional time. One way to ensure that lessons maintain a focus on critical thinking is to focus on the instructional sequence used to teach.

Explore-before-explain teaching is all about promoting critical thinking so that learners are better prepared for the reality of their world. Having an explore-before-explain mindset means that in our planning, we prioritize giving students firsthand experiences with data, allow students to construct evidence-based claims that focus on conceptual understanding, and challenge students to discuss and think about the why behind phenomena.

Just think of the critical thinking that has to occur for students to construct a scientific claim. 1) They need the opportunity to collect data, analyze it, and determine how to make sense of what the data may mean. 2) With data in hand, students can begin thinking about the validity and reliability of their experience and information collected. 3) They can consider what differences, if any, they might have if they completed the investigation again. 4) They can scrutinize outlying data points, for they may be an artifact of a true difference that merits further exploration or of a misstep in the procedure, measuring device, or measurement. All of these intellectual activities help them form a more robust understanding and are evidence of their critical thinking.

In explore-before-explain teaching, all of these hard critical-thinking tasks come before teacher explanations of content. Whether we use discovery experiences, problem-based learning, or inquiry-based activities, strategies that are geared toward helping students construct understanding promote critical thinking because students learn content by doing the practices valued in the field to generate knowledge.

An Issue of Equity

Meg Riordan, Ph.D., is the chief learning officer at The Possible Project, an out-of-school program that collaborates with youth to build entrepreneurial skills and mindsets and provides pathways to careers and long-term economic prosperity. She has been in the field of education for over 25 years as a middle and high school teacher, school coach, college professor, regional director of N.Y.C. Outward Bound Schools, and director of external research with EL Education:

Although critical thinking often defies straightforward definition, most in the education field agree it consists of several components: reasoning, problem-solving, and decision-making, plus analysis and evaluation of information, such that multiple sides of an issue can be explored. It also includes dispositions and “the willingness to apply critical-thinking principles, rather than fall back on existing unexamined beliefs, or simply believe what you’re told by authority figures.”

Despite variation in definitions, critical thinking is nonetheless promoted as an essential outcome of students’ learning—we want to see students and adults demonstrate it across all fields, professions, and in their personal lives. Yet there is simultaneously a rationing of opportunities in schools for students of color, students from under-resourced communities, and other historically marginalized groups to deeply learn and practice critical thinking.

For example, many of our most underserved students often spend class time filling out worksheets, promoting high compliance but low engagement, inquiry, critical thinking, or creation of new ideas. At a time in our world when college and careers are critical for participation in society and the global, knowledge-based economy, far too many students struggle within classrooms and schools that reinforce low expectations and inequity.

If educators aim to prepare all students for an ever-evolving marketplace and develop skills that will be valued no matter what tomorrow’s jobs are, then we must move critical thinking to the forefront of classroom experiences. And educators must design learning to cultivate it.

So, what does that really look like?

Unpack and define critical thinking

To understand critical thinking, educators need to first unpack and define its components. What exactly are we looking for when we speak about reasoning or exploring multiple perspectives on an issue? How does problem-solving show up in English, math, science, art, or other disciplines—and how is it assessed? At Two Rivers, an EL Education school, the faculty identified five constructs of critical thinking, defined each, and created rubrics to generate a shared picture of quality for teachers and students. The rubrics were then adapted across grade levels to indicate students’ learning progressions.

At Avenues World School, critical thinking is one of the Avenues World Elements and is an enduring outcome embedded in students’ early experiences through 12th grade. For instance, a kindergarten student may be expected to “identify cause and effect in familiar contexts,” while an 8th grader should demonstrate the ability to “seek out sufficient evidence before accepting a claim as true,” “identify bias in claims and evidence,” and “reconsider strongly held points of view in light of new evidence.”

When faculty and students embrace a common vision of what critical thinking looks and sounds like and how it is assessed, educators can then explicitly design learning experiences that call for students to employ critical-thinking skills. This kind of work must occur across all schools and programs, especially those serving large numbers of students of color. As Linda Darling-Hammond asserts, “Schools that serve large numbers of students of color are least likely to offer the kind of curriculum needed to ... help students attain the [critical-thinking] skills needed in a knowledge work economy.”

So, what can it look like to create those kinds of learning experiences?

Designing experiences for critical thinking

After defining a shared understanding of “what” critical thinking is and “how” it shows up across multiple disciplines and grade levels, it is essential to create learning experiences that impel students to cultivate, practice, and apply these skills. There are several levers that offer pathways for teachers to promote critical thinking in lessons:

1. Choose Compelling Topics: Keep it relevant

A key Common Core State Standard asks for students to “write arguments to support claims in an analysis of substantive topics or texts using valid reasoning and relevant and sufficient evidence.” That might not sound exciting or culturally relevant. But a learning experience designed for a 12th grade humanities class engaged learners in a compelling topic—policing in America—to analyze and evaluate multiple texts (including primary sources) and share the reasoning for their perspectives through discussion and writing. Students grappled with ideas and their beliefs and employed deep critical-thinking skills to develop arguments for their claims. Embedding critical-thinking skills in curriculum that students care about and connect with can ignite powerful learning experiences.

2. Make Local Connections: Keep it real

At The Possible Project, an out-of-school-time program designed to promote entrepreneurial skills and mindsets, students in a recent summer online program (modified from in-person due to COVID-19) explored the impact of COVID-19 on their communities and local BIPOC-owned businesses. They learned interviewing skills through a partnership with Everyday Boston, conducted virtual interviews with entrepreneurs, evaluated information from their interviews and local data, and examined their previously held beliefs. They created blog posts and videos to reflect on their learning and consider how their mindsets had changed as a result of the experience. In this way, we can design powerful community-based learning and invite students into productive struggle with multiple perspectives.

3. Create Authentic Projects: Keep it rigorous

At Big Picture Learning schools, students engage in internship-based learning experiences as a central part of their schooling. Their school-based adviser and internship-based mentor support them in developing real-world projects that promote deeper learning and critical-thinking skills. Such authentic experiences teach “young people to be thinkers, to be curious, to get from curiosity to creation … and it helps students design a learning experience that answers their questions, [providing an] opportunity to communicate it to a larger audience—a major indicator of postsecondary success.” Even in a remote environment, we can design projects that ask more of students than rote memorization and that spark critical thinking.

Our call to action is this: As educators, we need to make opportunities for critical thinking available not only to the affluent or those fortunate enough to be placed in advanced courses. The tools are available; let’s use them. Let’s interrogate our current curriculum and design learning experiences that engage all students in real, relevant, and rigorous experiences that require critical thinking and prepare them for promising postsecondary pathways.

Critical Thinking & Student Engagement

Dr. PJ Caposey is an award-winning educator, keynote speaker, consultant, and author of seven books who currently serves as the superintendent of schools for the award-winning Meridian CUSD 223 in northwest Illinois. You can find PJ on most social-media platforms as MCUSDSupe:

When I start my keynote on student engagement, I invite two people up on stage and give them each five paper balls to shoot at a garbage can also conveniently placed on stage. Contestant One shoots their shot, and the audience gives approval. Four out of five is a heckuva score. Then just before Contestant Two shoots, I blindfold them and start moving the garbage can back and forth. I usually try to ensure that they can at least make one of their shots. Nobody is successful in this unfair environment.

I thank them and send them back to their seats and then explain that this little activity was akin to student engagement. While we all know we want student engagement, we are shooting at different targets. More importantly, for teachers, it is near impossible for them to hit a target that is moving and that they cannot see.

Within the world of education and particularly as educational leaders, we have failed to simplify what student engagement looks like, and it is impossible to define or articulate what student engagement looks like if we cannot clearly articulate what critical thinking is and looks like in a classroom. Because, simply, without critical thought, there is no engagement.

The good news here is that critical thought has been defined and placed into taxonomies for decades already. This is not something new and not something that needs to be redefined. I am a Bloom’s person, but there is nothing wrong with DOK or some of the other taxonomies, either. To be precise, I am a huge fan of Daggett’s Rigor and Relevance Framework. I have used that as a core element of my practice for years, and it has shaped who I am as an instructional leader.

So, in order to explain critical thought, a teacher or a leader must familiarize themselves with these tried and true taxonomies. Easy, right? Yes, sort of. The issue is not understanding what critical thought is; it is the ability to integrate it into the classrooms. In order to do so, there are four key steps every educator must take.

PLANNING

- Integrating critical thought/rigor into a lesson does not happen by chance; it happens by design. Planning for critical thought and engagement is much different from planning for a traditional lesson. In order to plan for kids to think critically, you have to provide a base of knowledge and excellent prompts to allow them to explore their own thinking in order to analyze, evaluate, or synthesize information.

- SIDE NOTE – Bloom’s verbs are a great way to start when writing objectives, but true planning will take you deeper than this.

QUESTIONING

- If the questions and prompts given in a classroom have correct answers or if the teacher ends up answering their own questions, the lesson will lack critical thought and rigor.

- Script five questions forcing higher-order thought prior to every lesson. Experienced teachers may not feel they need this, but it helps to create an effective habit.

ASSESSMENT

- If lessons are rigorous and assessments are not, students will do well on their assessments, and that may not be an accurate representation of the knowledge and skills they have mastered. If lessons are easy and assessments are rigorous, the exact opposite will happen. When deciding to increase critical thought, it must happen in all three phases of the game: planning, instruction, and assessment.

TALK TIME / CONTROL

- To increase rigor, the teacher must DO LESS. This feels counterintuitive but is accurate. Rigorous lessons involving tons of critical thought must allow for students to work on their own, collaborate with peers, and connect their ideas. This cannot happen in a silent room except for the teacher talking. In order to increase rigor, decrease talk time and become comfortable with less control. Asking questions and giving prompts that lead to no true correct answer also means less control. This is a tough ask for some teachers. Explained differently, if you assign one assignment and get 30 very similar products, you have most likely assigned a low-rigor recipe. If you assign one assignment and get multiple varied products, then the students have had a chance to think deeply, and you have successfully integrated critical thought into your classroom.

Thanks to Dara, Patrick, Meg, and PJ for their contributions!

Please feel free to leave a comment with your reactions to the topic or directly to anything that has been said in this post.

Consider contributing a question to be answered in a future post. You can send one to me at [email protected]. When you send it in, let me know if I can use your real name if it’s selected or if you’d prefer remaining anonymous and have a pseudonym in mind.

You can also contact me on Twitter at @Larryferlazzo.

Education Week has published a collection of posts from this blog, along with new material, in an e-book form. It’s titled Classroom Management Q&As: Expert Strategies for Teaching.


I am also creating a Twitter list including all contributors to this column.

The opinions expressed in Classroom Q&A With Larry Ferlazzo are strictly those of the author(s) and do not reflect the opinions or endorsement of Editorial Projects in Education, or any of its publications.


The Institute for Learning and Teaching

The Socratic Method: Fostering Critical Thinking

"Do not take what I say as if I were merely playing, for you see the subject of our discussion—and on what subject should even a man of slight intelligence be more serious? —namely, what kind of life should one live . . ." Socrates

By Peter Conor

This teaching tip explores how the Socratic Method can be used to promote critical thinking in classroom discussions. It is based on the article, The Socratic Method: What it is and How to Use it in the Classroom, published in the newsletter, Speaking of Teaching, a publication of the Stanford Center for Teaching and Learning (CTL).

The article summarizes a talk given by Political Science professor Rob Reich, on May 22, 2003, as part of the center’s Award Winning Teachers on Teaching lecture series. Reich, the recipient of the 2001 Walter J. Gores Award for Teaching Excellence, describes four essential components of the Socratic method and urges his audience to “creatively reclaim [the method] as a relevant framework” to be used in the classroom.

What is the Socratic Method?

Developed by the Greek philosopher Socrates, the Socratic Method is a dialogue between teacher and students, instigated by the continual probing questions of the teacher, in a concerted effort to explore the underlying beliefs that shape the students’ views and opinions. Though often misunderstood, most Western pedagogical tradition, from Plato on, is based on this dialectical method of questioning.

An extreme version of this technique is employed by the infamous professor, Dr. Kingsfield, portrayed by John Houseman in the 1973 movie, “The Paper Chase.” In order to get at the heart of ethical dilemmas and the principles of moral character, Dr. Kingsfield terrorizes and humiliates his law students by painfully grilling them on the details and implications of legal cases.

In his lecture, Reich describes a kinder, gentler Socratic Method, pointing out the following:

- Socratic inquiry is not “teaching” per se. It does not include PowerPoint-driven lectures, detailed lesson plans or rote memorization. The teacher is neither “the sage on the stage” nor “the guide on the side.” The students are not passive recipients of knowledge.

- The Socratic Method involves a shared dialogue between teacher and students. The teacher leads by posing thought-provoking questions. Students actively engage by asking questions of their own. The discussion goes back and forth.

- The Socratic Method, says Reich, “is better used to demonstrate complexity, difficulty, and uncertainty than to elicit facts about the world.” The aim of the questioning is to probe the underlying beliefs upon which each participant’s statements, arguments and assumptions are built.

- The classroom environment is characterized by “productive discomfort,” not intimidation. The Socratic professor does not have all the answers and is not merely “testing” the students. The questioning proceeds open-ended with no pre-determined goal.

- The focus is not on the participants’ statements but on the value system that underpins their beliefs, actions, and decisions. For this reason, any successful challenge to this system comes with high stakes: one might have to examine and change one’s life. But, as Socrates is famous for saying, “the unexamined life is not worth living.”

- “The Socratic professor,” Reich states, “is not the opponent in an argument, nor someone who always plays devil’s advocate, saying essentially: ‘If you affirm it, I deny it. If you deny it, I affirm it.’ This happens sometimes, but not as a matter of pedagogical principle.”

Professor Reich also provides ten tips for fostering critical thinking in the classroom. While no longer available on Stanford’s website, the full article can be found on the web archive: The Socratic Method: What it is and How to Use it in the classroom


Center for Teaching

The Teaching Exchange: Fostering Critical Thinking

This article was originally published in the Fall 1999 issue of the CFT’s newsletter, Teaching Forum.

The Teaching Exchange is a forum for teachers at Vanderbilt to share their pedagogical strategies, experiments, and discoveries. Every issue will highlight innovations in teaching across the campus. This ‘exchange’ offers strategies from several different instructors for fostering critical thinking among students.

George Becker, Associate Professor of Sociology

There are two general approaches that I find helpful in producing a classroom setting conducive to critical inquiry. These involve 1) the establishment of an environment in which both parties, student and teacher, function as partners in inquiry, and 2) the employment of a set of questioning strategies specifically geared to the acquisition of higher-order thinking and reasoning skills.

Central to making students feel they are partners in a community of learners is the creation of a climate of trust, so that students feel safe in offering their own ideas. I try to foster a sense of “we-feeling” by asking, for example, “How can we explain this development? What does it mean to us?” Using plural pronouns creates a dialogue that has less of an adversarial tone and underscores the idea of students and teachers as partners in inquiry. I have also found that learning student names as quickly as possible is essential for developing trust. At the beginning of each semester, I ask everyone to bring me a small snapshot (photocopied student IDs work well), and I can review the photos prior to each class. Student compliance is, of course, voluntary.

I give students a rationale for the value of an interactive classroom. I assure them that interaction is not designed to embarrass them, but rather to facilitate learning and make the subject matter more interesting. This lets students know they have some control over class proceedings and that their insights and contributions will be validated in our mutual quest for understanding.

One particularly effective strategy, adopted from my colleague Larry Griffin, is to provide students with the option to “pass” on a particular question. Interestingly, I find that while students welcome this option, they rarely invoke it. It does serve as a motivator as well as an opportunity to exercise reasonable decision making. Another key ingredient is the element of humor. Laughter causes the release of certain chemicals in the brain that help build long-term memory. I try to let humor evolve naturally from content-related dialogue and present it in a good-natured fashion.

The practice of questioning is also central to the development of critical thinking. There are two relatively simple strategies, dealing with aspects of the question-and-answer sequence, that I have found work well: 1) careful design of the questions, and 2) providing sufficient response time.

Preparing for a class, I construct several pivotal questions that address the key facts and concepts of the lesson. These are designed to help students apply their knowledge and understanding of the course content at the levels of analysis, synthesis, and evaluation. Questions seeking to engage students in analysis usually contain such words as interpret, discover, compare, and contrast. Questions designed to facilitate synthesis usually contain such words as imagine, formulate, generalize, or hypothesize. Questions directed toward evaluative thought generally contain terms such as judge, assess, revise, and criticize.

To maximize the value of questioning, the issue of response time is critical. From the teacher’s point of view, it is considered “wait” time, while for the student, it is “think” time, the time it takes to formulate a response. I make it a practice to build in two specific blocks of wait/think time for each questioning episode. Once I ask a pivotal question, I try to remain silent for three to five seconds to allow students to formulate their answers. When a student responds, I pause for a second time, again without comment or reaction. This prompts further thought and comment on the part of the student and provides an opportunity for others in the class to continue thinking of additional responses.

Leonard Folgarait, Professor and Chair of Fine Arts

The following is excerpted from a presentation on “Effective Learning Strategies” offered as part of the Junior Faculty Teaching Series, sponsored by the Center for Teaching and Vanderbilt Alumni Fund. The focus session was co-facilitated by Prof. Folgarait as invited senior faculty.

Even a lecture can be an opportunity to encourage critical thinking among students, as long as the teacher takes the time to be very intentional in planning the content, organization, and presentation in such a way as to promote an interactive experience.

Regarding content, I keep two things in mind: students need objective information, such as historical dates, but they also need a larger, conceptual framework to tie the facts together and produce meaning. This is easier in the humanities, but it is possible in any discipline. Our concern should be that the objective information tell us something about the human condition: Why are science and math, for example, important to us?

I try to organize along a theme for the course as a whole and for every lecture, so that each class is self-contained and cohesive, and so that the lectures relate to each other in terms of overall theme, remembering that these are generalizations and need specific, concrete examples.

I try to involve students and create an interactive environment before asking questions that elicit both simple and complex responses. For example, a question seeking a simple answer would be, “What political system was overthrown by the French Revolution of 1789?” A more complex response would be generated by asking, “How do we, today, experience the results of that revolution?” I try to do this often, so that students are given a voice and feel empowered enough to risk thinking critically during a dynamic lecture experience.

Education in the 21st Century, pp. 29–47

Fostering Students’ Creativity and Critical Thinking in Science Education

- Stéphan Vincent-Lancrin

- First Online: 31 January 2022

What does it mean to redesign teaching and learning within existing science curricula (and learning objectives) so that students have more space and appropriate tasks to develop their creative and critical thinking skills? The chapter begins by describing the development of a portfolio of rubrics on creativity and critical thinking, including a conceptual rubric on science tested in primary and secondary education in 11 countries. Teachers in school networks adopted teaching and learning strategies aligned to the development of creativity and critical thinking, and to these OECD rubrics. Examples of lesson plans and pedagogies that were developed are given, and some key challenges for teachers and learners are reflected on.

- Critical thinking

- Science education

- Innovation in education

- Lesson plans

The analyses given and the opinions expressed in this chapter are those of the author and do not necessarily reflect the views of the OECD and of its members.

Many project-based science units/courses initially develop “Driving Questions” to contextualise the unit and give learners opportunities to connect the unit to their own experiences and prior ideas.

Adler, L., Bayer, I., Peek-Brown, D., Lee, J., & Krajcik, J. (2017). What controls my health. https://www.oecd.org/education/What-Controls-My-Health.pdf

Davies, M. (2015). In R. Barnett (Ed.), The Palgrave handbook of critical thinking in higher education. Palgrave Macmillan.

Dennett, D. C. (2013). Intuition pumps and other tools for thinking. England: Penguin.

Ennis, R. (1996). Critical thinking. Upper Saddle River, NJ: Prentice-Hall.

Ennis, R. (2018). Critical thinking across the curriculum: A vision. Topoi, 37(1), 165–184. https://doi.org/10.1007/s11245-016-9401-4

Facione, P. A. (1990). Critical thinking: A statement of expert consensus for purposes of educational assessment and instruction. Research findings and recommendations prepared for the Committee on Pre-College Philosophy of the American Philosophical Association. Retrieved from http://www.eric.ed.gov/ERICWebPortal/detail?accno=ED315423

Feynman, R. (1963). The Feynman lectures on physics (Volume I: The New Millennium Edition: Mainly Mechanics, Radiation, and Heat). Basic Books.

Feynman, R. (1955). The value of science. In R. Leighton (Ed.), What do you care what other people think? Further adventures of a curious character (pp. 240–257). Penguin Books.

Fullan, M., Quinn, J., & McEachen, J. (2018). Deep learning: Engage the world, change the world. Corwin Press and Ontario Principals’ Council.

Guilford, J. P. (1950). Creativity. American Psychologist, 5(9), 444–454. https://doi.org/10.1037/h0063487

Hitchcock, D. (2018). Critical thinking. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Fall 2018 Edition). Retrieved from https://plato.stanford.edu/archives/fall2018/entries/critical-thinking

Kelley, T. (2001). The art of innovation: Lessons in creativity from IDEO, America’s leading design firm. Currency.

Lubart, T. (2000). Models of the creative process: Past, present and future. Creativity Research Journal, 13(3–4), 295–308. https://doi.org/10.1207/S15326934CRJ1334_07

Lucas, B., Claxton, G., & Spencer, E. (2013). Progression in student creativity in school: First steps towards new forms of formative assessments. In OECD education working papers, 86. Paris: OECD. https://doi.org/10.1787/5k4dp59msdwk-en

Lucas, B., & Spencer, E. (2017). Teaching creative thinking: Developing learners who generate ideas and can think critically. England: Crown House Publishing.

McPeck, J. E. (1981). Critical thinking and education. New York: St. Martin’s.

Mednick, S. A. (1962). The associative basis of the creative process. Psychological Review, 69(3), 220–232. https://doi.org/10.1037/h0048850

Newton, L. D., & Newton, D. P. (2014). Creativity in 21st century education. Prospects, 44(4), 575–589. https://doi.org/10.1007/s11125-014-9322-1

Paddock, W., Erwin, S., Bielik, T., & Krajcik, J. (2019). Evaporative cooling. Retrieved from https://www.oecd.org/education/Evaporative-Cooling.pdf

Rennie, L. (2020). Communicating certainty and uncertainty in science in out-of-school contexts. In D. Corrigan, C. Buntting, A. Jones, & A. Fitzgerald (Eds.), Values in science education: The shifting sands (pp. 7–30). Cham, Switzerland: Springer.

Runco, M. A. (2009). Critical thinking. In M. A. Runco & S. R. Pritzker (Eds.), Encyclopedia of creativity (pp. 449–452). Academic.

Schneider, B., Krajcik, J., Lavonen, J., & Salmela-Aro, K. (2020). Learning science: The value of crafting engagement in science environments. United States: Yale University.

Sternberg, R. J., & Lubart, T. (1999). The concept of creativity: Prospects and paradigm. In R. J. Sternberg (Ed.), Handbook of creativity (pp. 3–14). England: Cambridge University.

Torrance, E. P. (1966). Torrance tests of creative thinking: Norms. Technical manual research edition; Verbal Tests, Forms A and B, Figural Tests, Forms A and B. Princeton, NJ: Personnel.

Torrance, E. P. (1970). Encouraging creativity in the classroom. United States: W.C. Brown.

Vincent-Lancrin, S., González-Sancho, C., Bouckaert, M., de Luca, F., Fernández-Barrerra, M., Jacotin, G., Urgel, J., & Vidal, Q. (2019). Fostering students’ creativity and critical thinking in education: What it means in school. Paris: OECD. https://doi.org/10.1787/62212c37-en

Author information

Authors and affiliations.

Directorate for Education and Skills, Organisation for Economic Co-operation and Development, Paris, France

Stéphan Vincent-Lancrin

Corresponding author

Correspondence to Stéphan Vincent-Lancrin .

Editor information

Editors and affiliations.

Monash University, Clayton, VIC, Australia

Amanda Berry

University of Waikato, Hamilton, New Zealand

Cathy Buntting

Deborah Corrigan

Richard Gunstone

Alister Jones

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter.

Vincent-Lancrin, S. (2021). Fostering Students’ Creativity and Critical Thinking in Science Education. In: Berry, A., Buntting, C., Corrigan, D., Gunstone, R., Jones, A. (eds) Education in the 21st Century. Springer, Cham. https://doi.org/10.1007/978-3-030-85300-6_3

DOI : https://doi.org/10.1007/978-3-030-85300-6_3

Published : 31 January 2022

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-85299-3

Online ISBN : 978-3-030-85300-6

eBook Packages : Education Education (R0)

- Review Article

- Open access

- Published: 11 January 2023

The effectiveness of collaborative problem solving in promoting students’ critical thinking: A meta-analysis based on empirical literature

- Enwei Xu (ORCID: orcid.org/0000-0001-6424-8169)

- Wei Wang

- Qingxia Wang

Humanities and Social Sciences Communications, volume 10, Article number: 16 (2023)

- Science, technology and society

Collaborative problem-solving has been widely embraced in the classroom instruction of critical thinking, which is regarded as the core of curriculum reform based on key competencies in the field of education as well as a key competence for learners in the 21st century. However, the effectiveness of collaborative problem-solving in promoting students’ critical thinking remains uncertain. This research presents the major findings of a meta-analysis of 36 pieces of literature published in worldwide educational periodicals during the 21st century to identify the effectiveness of collaborative problem-solving in promoting students’ critical thinking and to determine, based on evidence, whether and to what extent collaborative problem-solving can result in a rise or decrease in critical thinking. The findings show that (1) collaborative problem-solving is an effective teaching approach to foster students’ critical thinking, with a significant overall effect size (ES = 0.82, z = 12.78, P < 0.01, 95% CI [0.69, 0.95]); (2) with respect to the dimensions of critical thinking, collaborative problem-solving can significantly enhance students’ attitudinal tendencies (ES = 1.17, z = 7.62, P < 0.01, 95% CI [0.87, 1.47]); nevertheless, it is less effective at improving students’ cognitive skills, having only an upper-middle impact (ES = 0.70, z = 11.55, P < 0.01, 95% CI [0.58, 0.82]); and (3) the teaching type (χ² = 7.20, P < 0.05), intervention duration (χ² = 12.18, P < 0.01), subject area (χ² = 13.36, P < 0.05), group size (χ² = 8.77, P < 0.05), and learning scaffold (χ² = 9.03, P < 0.01) all have an impact on critical thinking, and they can be viewed as important moderating factors that affect how critical thinking develops. On the basis of these results, recommendations are made for further study and instruction to better support students’ critical thinking in the context of collaborative problem-solving.

Introduction

Although critical thinking has a long history in research, the concept of critical thinking, which is regarded as an essential competence for learners in the 21st century, has recently attracted more attention from researchers and teaching practitioners (National Research Council, 2012 ). Critical thinking should be the core of curriculum reform based on key competencies in the field of education (Peng and Deng, 2017 ) because students with critical thinking can not only understand the meaning of knowledge but also effectively solve practical problems in real life even after knowledge is forgotten (Kek and Huijser, 2011 ). The definition of critical thinking is not universal (Ennis, 1989 ; Castle, 2009 ; Niu et al., 2013 ). In general, the definition of critical thinking is a self-aware and self-regulated thought process (Facione, 1990 ; Niu et al., 2013 ). It refers to the cognitive skills needed to interpret, analyze, synthesize, reason, and evaluate information as well as the attitudinal tendency to apply these abilities (Halpern, 2001 ). The view that critical thinking can be taught and learned through curriculum teaching has been widely supported by many researchers (e.g., Kuncel, 2011 ; Leng and Lu, 2020 ), leading to educators’ efforts to foster it among students. In the field of teaching practice, there are three types of courses for teaching critical thinking (Ennis, 1989 ). The first is an independent curriculum in which critical thinking is taught and cultivated without involving the knowledge of specific disciplines; the second is an integrated curriculum in which critical thinking is integrated into the teaching of other disciplines as a clear teaching goal; and the third is a mixed curriculum in which critical thinking is taught in parallel to the teaching of other disciplines for mixed teaching training. 
Furthermore, numerous measuring tools have been developed by researchers and educators to measure critical thinking in the context of teaching practice. These include standardized measurement tools, such as WGCTA, CCTST, CCTT, and CCTDI, which have been verified by repeated experiments and are considered effective and reliable by international scholars (Facione and Facione, 1992 ). In short, descriptions of critical thinking, including its two dimensions of attitudinal tendency and cognitive skills, different types of teaching courses, and standardized measurement tools provide a complex normative framework for understanding, teaching, and evaluating critical thinking.

Cultivating critical thinking in curriculum teaching can start with a problem, and one of the most popular critical thinking instructional approaches is problem-based learning (Liu et al., 2020 ). Duch et al. ( 2001 ) noted that problem-based learning in group collaboration is progressive active learning, which can improve students’ critical thinking and problem-solving skills. Collaborative problem-solving is the organic integration of collaborative learning and problem-based learning, which takes learners as the center of the learning process and uses problems with poor structure in real-world situations as the starting point for the learning process (Liang et al., 2017 ). Students learn the knowledge needed to solve problems in a collaborative group, reach a consensus on problems in the field, and form solutions through social cooperation methods, such as dialogue, interpretation, questioning, debate, negotiation, and reflection, thus promoting the development of learners’ domain knowledge and critical thinking (Cindy, 2004 ; Liang et al., 2017 ).

Collaborative problem-solving has been widely used in the teaching practice of critical thinking, and several studies have attempted to conduct a systematic review and meta-analysis of the empirical literature on critical thinking from various perspectives. However, little attention has been paid to the impact of collaborative problem-solving on critical thinking. Therefore, the best approach for developing and enhancing critical thinking throughout collaborative problem-solving is to examine how to implement critical thinking instruction; however, this issue is still unexplored, which means that many teachers are incapable of better instructing critical thinking (Leng and Lu, 2020 ; Niu et al., 2013 ). For example, Huber ( 2016 ) provided the meta-analysis findings of 71 publications on gaining critical thinking over various time frames in college with the aim of determining whether critical thinking was truly teachable. These authors found that learners significantly improve their critical thinking while in college and that critical thinking differs with factors such as teaching strategies, intervention duration, subject area, and teaching type. The usefulness of collaborative problem-solving in fostering students’ critical thinking, however, was not determined by this study, nor did it reveal whether there existed significant variations among the different elements. A meta-analysis of 31 pieces of educational literature was conducted by Liu et al. ( 2020 ) to assess the impact of problem-solving on college students’ critical thinking. These authors found that problem-solving could promote the development of critical thinking among college students and proposed establishing a reasonable group structure for problem-solving in a follow-up study to improve students’ critical thinking. 
Additionally, previous empirical studies have reached inconclusive and even contradictory conclusions about whether and to what extent collaborative problem-solving increases or decreases critical thinking levels. As an illustration, Yang et al. ( 2008 ) carried out an experiment on the integrated curriculum teaching of college students based on a web bulletin board with the goal of fostering participants’ critical thinking in the context of collaborative problem-solving. These authors’ research revealed that through sharing, debating, examining, and reflecting on various experiences and ideas, collaborative problem-solving can considerably enhance students’ critical thinking in real-life problem situations. In contrast, collaborative problem-solving had a positive impact on learners’ interaction and could improve learning interest and motivation but could not significantly improve students’ critical thinking when compared to traditional classroom teaching, according to research by Naber and Wyatt ( 2014 ) and Sendag and Odabasi ( 2009 ) on undergraduate and high school students, respectively.

The above studies show that there is inconsistency regarding the effectiveness of collaborative problem-solving in promoting students’ critical thinking. Therefore, it is essential to conduct a thorough and trustworthy review to determine whether and to what degree collaborative problem-solving can result in a rise or decrease in critical thinking. Meta-analysis is a quantitative analysis approach that is used to examine quantitative data from various separate studies that are all focused on the same research topic. This approach characterizes the effectiveness of an intervention by averaging the effect sizes of numerous quantitative studies in an effort to reduce the uncertainty brought on by independent research and produce more conclusive findings (Lipsey and Wilson, 2001).

This paper used a meta-analytic approach and carried out a meta-analysis to examine the effectiveness of collaborative problem-solving in promoting students’ critical thinking in order to make a contribution to both research and practice. The following research questions were addressed by this meta-analysis:

What is the overall effect size of collaborative problem-solving in promoting students’ critical thinking and its impact on the two dimensions of critical thinking (i.e., attitudinal tendency and cognitive skills)?

How are the disparities between the study conclusions impacted by various moderating variables if the impacts of various experimental designs in the included studies are heterogeneous?

This research followed the strict procedures (e.g., database searching, identification, screening, eligibility, merging, duplicate removal, and analysis of included studies) of Cooper’s ( 2010 ) proposed meta-analysis approach for examining quantitative data from various separate studies that are all focused on the same research topic. The relevant empirical research that appeared in worldwide educational periodicals within the 21st century was subjected to this meta-analysis using Rev-Man 5.4. The consistency of the data extracted separately by two researchers was tested using Cohen’s kappa coefficient, and a publication bias test and a heterogeneity test were run on the sample data to ascertain the quality of this meta-analysis.
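The consistency check named above, Cohen’s kappa, corrects the raw agreement between two coders for the agreement expected by chance. The following is a minimal sketch in Python, assuming each researcher’s decisions are stored as parallel lists; the function name and the sample decisions are hypothetical and are not taken from the study.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labelling the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same label by both coders.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of the coders' marginal proportions per label.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_chance = sum(
        (counts_a[label] / n) * (counts_b[label] / n)
        for label in set(counts_a) | set(counts_b)
    )
    if p_chance == 1.0:
        return 1.0  # degenerate case: both coders used a single identical label
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical include/exclude decisions for four articles.
decisions_a = ["include", "include", "exclude", "exclude"]
decisions_b = ["include", "include", "exclude", "include"]
print(round(cohens_kappa(decisions_a, decisions_b), 2))
```

Kappa is lower than the raw percentage of matching decisions whenever some of the matches could have arisen by chance, which is why it is often reported alongside a simple consensus rate.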

Data sources and search strategies

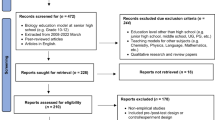

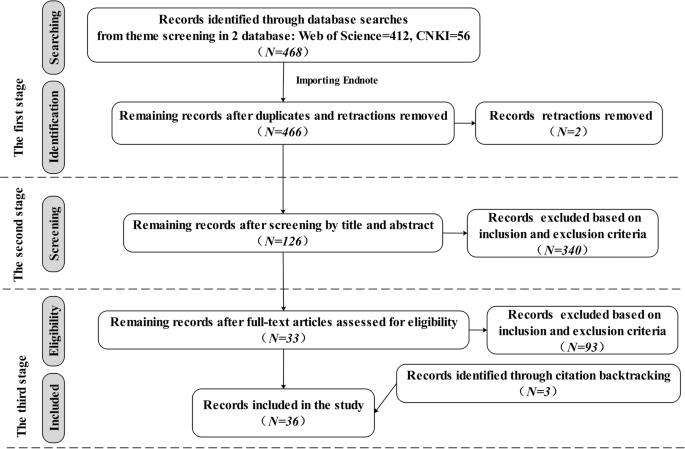

There were three stages to the data collection process for this meta-analysis, as shown in Fig. 1 , which shows the number of articles included and eliminated during the selection process based on the statement and study eligibility criteria.

This flowchart shows the number of records identified, included and excluded in the article.

First, the databases used to systematically search for relevant articles were the journal papers of the Web of Science Core Collection and the Chinese Core source journal, as well as the Chinese Social Science Citation Index (CSSCI) source journal papers included in CNKI. These databases were selected because they are credible platforms that are sources of scholarly and peer-reviewed information with advanced search tools and contain literature relevant to the subject of our topic from reliable researchers and experts. The search string with the Boolean operator used in the Web of Science was “TS = (((“critical thinking” or “ct” and “pretest” or “posttest”) or (“critical thinking” or “ct” and “control group” or “quasi experiment” or “experiment”)) and (“collaboration” or “collaborative learning” or “CSCL”) and (“problem solving” or “problem-based learning” or “PBL”))”. The research area was “Education Educational Research”, and the search period was “January 1, 2000, to December 30, 2021”. A total of 412 papers were obtained. The search string with the Boolean operator used in the CNKI was “SU = (‘critical thinking’*‘collaboration’ + ‘critical thinking’*‘collaborative learning’ + ‘critical thinking’*‘CSCL’ + ‘critical thinking’*‘problem solving’ + ‘critical thinking’*‘problem-based learning’ + ‘critical thinking’*‘PBL’ + ‘critical thinking’*‘problem oriented’) AND FT = (‘experiment’ + ‘quasi experiment’ + ‘pretest’ + ‘posttest’ + ‘empirical study’)” (translated into Chinese when searching). A total of 56 studies were found throughout the search period of “January 2000 to December 2021”. From the databases, all duplicates and retractions were eliminated before exporting the references into Endnote, a program for managing bibliographic references. In all, 466 studies were found.

Second, the studies that matched the inclusion and exclusion criteria for the meta-analysis were chosen by two researchers after they had reviewed the abstracts and titles of the gathered articles, yielding a total of 126 studies.

Third, two researchers thoroughly reviewed each included article’s whole text in accordance with the inclusion and exclusion criteria. Meanwhile, a snowball search was performed using the references and citations of the included articles to ensure complete coverage of the articles. Ultimately, 36 articles were kept.

Two researchers worked together to carry out this entire process, and a consensus rate of almost 94.7% was reached after discussion and negotiation to clarify any emerging differences.

Eligibility criteria

Since not all the retrieved studies matched the criteria for this meta-analysis, eligibility criteria for both inclusion and exclusion were developed as follows:

The publication language of the included studies was limited to English and Chinese, and the full text could be obtained. Articles that did not meet the publication language and articles not published between 2000 and 2021 were excluded.

The research design of the included studies must be empirical and quantitative studies that can assess the effect of collaborative problem-solving on the development of critical thinking. Articles that could not identify the causal mechanisms by which collaborative problem-solving affects critical thinking, such as review articles and theoretical articles, were excluded.

The research method of the included studies must feature a randomized control experiment or a quasi-experiment, or a natural experiment, which have a higher degree of internal validity with strong experimental designs and can all plausibly provide evidence that critical thinking and collaborative problem-solving are causally related. Articles with non-experimental research methods, such as purely correlational or observational studies, were excluded.

The participants of the included studies were only students in school, including K-12 students and college students. Articles in which the participants were non-school students, such as social workers or adult learners, were excluded.

The research results of the included studies must mention definite signs that may be utilized to gauge critical thinking’s impact (e.g., sample size, mean value, or standard deviation). Articles that lacked specific measurement indicators for critical thinking and could not calculate the effect size were excluded.

Data coding design

In order to perform a meta-analysis, it is necessary to collect the most important information from the articles, codify that information’s properties, and convert descriptive data into quantitative data. Therefore, this study designed a data coding template (see Table 1 ). Ultimately, 16 coding fields were retained.

The designed data-coding template consisted of three pieces of information. Basic information about the papers was included in the descriptive information: the publishing year, author, serial number, and title of the paper.

The variable information for the experimental design had three variables: the independent variable (instruction method), the dependent variable (critical thinking), and the moderating variable (learning stage, teaching type, intervention duration, learning scaffold, group size, measuring tool, and subject area). Depending on the topic of this study, the intervention strategy, as the independent variable, was coded into collaborative and non-collaborative problem-solving. The dependent variable, critical thinking, was coded as a cognitive skill and an attitudinal tendency. And seven moderating variables were created by grouping and combining the experimental design variables discovered within the 36 studies (see Table 1 ), where learning stages were encoded as higher education, high school, middle school, and primary school or lower; teaching types were encoded as mixed courses, integrated courses, and independent courses; intervention durations were encoded as 0–1 weeks, 1–4 weeks, 4–12 weeks, and more than 12 weeks; group sizes were encoded as 2–3 persons, 4–6 persons, 7–10 persons, and more than 10 persons; learning scaffolds were encoded as teacher-supported learning scaffold, technique-supported learning scaffold, and resource-supported learning scaffold; measuring tools were encoded as standardized measurement tools (e.g., WGCTA, CCTT, CCTST, and CCTDI) and self-adapting measurement tools (e.g., modified or made by researchers); and subject areas were encoded according to the specific subjects used in the 36 included studies.
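The categorical coding described above can be sketched as two small Python helpers that map raw study attributes to the coded bins. This is only an illustration: the function names are hypothetical, and because the published bins share boundary values (e.g., “1–4 weeks” and “4–12 weeks”), the sketch assumes that each upper bound is inclusive, which the source does not specify.

```python
def code_intervention_duration(weeks: float) -> str:
    """Map an intervention length in weeks to a duration category."""
    if weeks < 0:
        raise ValueError("Duration cannot be negative")
    # Assumption: upper bounds are inclusive (exactly 4 weeks -> "1-4 weeks").
    if weeks <= 1:
        return "0-1 weeks"
    if weeks <= 4:
        return "1-4 weeks"
    if weeks <= 12:
        return "4-12 weeks"
    return "more than 12 weeks"


def code_group_size(persons: int) -> str:
    """Map the number of learners per group to a group-size category."""
    if persons < 2:
        raise ValueError("A collaborative group needs at least two persons")
    if persons <= 3:
        return "2-3 persons"
    if persons <= 6:
        return "4-6 persons"
    if persons <= 10:
        return "7-10 persons"
    return "more than 10 persons"


print(code_intervention_duration(8), "|", code_group_size(5))
```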

The data information contained three metrics for measuring critical thinking: sample size, average value, and standard deviation. It is important to note that studies with different experimental designs frequently use different formulas to determine the effect size. This paper used the standardized mean difference (SMD) calculation formula proposed by Morris (2008, p. 369; see Supplementary Table S3).
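As an illustration of how an SMD can be computed from sample sizes, means, and standard deviations, the sketch below implements one widely used form for pretest-posttest-control designs associated with Morris (2008): the difference in mean gains divided by the pooled pretest standard deviation, with a small-sample bias correction. Supplementary Table S3 is not reproduced here, so treating this as the exact formula applied in the study is an assumption; the function name and the example numbers are hypothetical.

```python
import math


def morris_smd(n_t, mean_pre_t, mean_post_t, sd_pre_t,
               n_c, mean_pre_c, mean_post_c, sd_pre_c):
    """SMD for a pretest-posttest-control design (treatment t, control c)."""
    df = n_t + n_c - 2
    if df <= 0:
        raise ValueError("Need more than two participants in total")
    # Pooled pretest standard deviation across both groups.
    sd_pre_pooled = math.sqrt(
        ((n_t - 1) * sd_pre_t ** 2 + (n_c - 1) * sd_pre_c ** 2) / df
    )
    # Small-sample bias correction factor.
    correction = 1 - 3 / (4 * df - 1)
    # Difference between the treatment gain and the control gain.
    gain_difference = (mean_post_t - mean_pre_t) - (mean_post_c - mean_pre_c)
    return correction * gain_difference / sd_pre_pooled


# Hypothetical study: both groups start at 10; treatment gains 4, control gains 2.
print(round(morris_smd(10, 10.0, 14.0, 2.0, 10, 10.0, 12.0, 2.0), 3))
```

Standardizing by the pretest standard deviation keeps the scale unaffected by the intervention itself, which is one reason this form is often chosen for designs with a control group.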

Procedure for extracting and coding data

According to the data coding template (see Table 1 ), the 36 papers’ information was retrieved by two researchers, who then entered them into Excel (see Supplementary Table S1 ). The results of each study were extracted separately in the data extraction procedure if an article contained numerous studies on critical thinking, or if a study assessed different critical thinking dimensions. For instance, Tiwari et al. ( 2010 ) used four time points, which were viewed as numerous different studies, to examine the outcomes of critical thinking, and Chen ( 2013 ) included the two outcome variables of attitudinal tendency and cognitive skills, which were regarded as two studies. After discussion and negotiation during data extraction, the two researchers’ consistency test coefficients were roughly 93.27%. Supplementary Table S2 details the key characteristics of the 36 included articles with 79 effect quantities, including descriptive information (e.g., the publishing year, author, serial number, and title of the paper), variable information (e.g., independent variables, dependent variables, and moderating variables), and data information (e.g., mean values, standard deviations, and sample size). Following that, testing for publication bias and heterogeneity was done on the sample data using the Rev-Man 5.4 software, and then the test results were used to conduct a meta-analysis.

Publication bias test

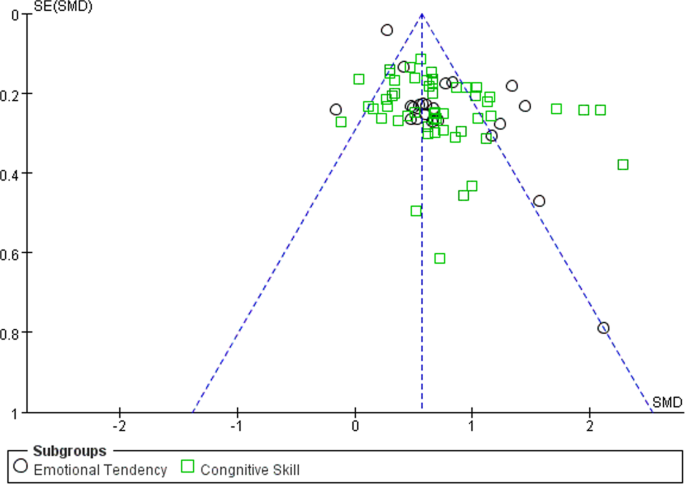

Publication bias arises when the sample of studies included in a meta-analysis does not accurately reflect the general status of research on the relevant subject. Because publication bias may affect the reliability and accuracy of a meta-analysis, the sample data need to be checked for it (Stewart et al., 2006). A popular method for checking publication bias is the funnel plot: publication bias is unlikely when the data are evenly dispersed on either side of the average effect size and concentrated in the upper region of the plot. In the funnel plot for this analysis (see Fig. 2), the data are evenly dispersed within the upper portion of the effective zone, indicating that publication bias is unlikely in this situation.

This funnel plot shows the result of publication bias of 79 effect quantities across 36 studies.

Heterogeneity test

To select the appropriate effect model for the meta-analysis, one can use the results of a heterogeneity test on the effect sizes. In a meta-analysis, it is common practice to gauge the degree of heterogeneity using the I² value; I² ≥ 50% is typically understood to denote medium-high heterogeneity, which calls for a random effect model, whereas otherwise a fixed effect model ought to be applied (Lipsey and Wilson, 2001). The heterogeneity test in this paper (see Table 2) revealed that I² was 86%, indicating significant heterogeneity (P < 0.01). To ensure accuracy and reliability, the overall effect size was therefore calculated using the random effect model.
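To make the heterogeneity test concrete, the sketch below computes Cochran’s Q, the I² statistic, and a random-effects pooled estimate from a list of effect sizes and their variances. It uses the DerSimonian-Laird estimator of between-study variance, a common choice; whether the software used in the study applies exactly this estimator is an assumption, and the input values are hypothetical.

```python
import math


def random_effects_summary(effects, variances):
    """Cochran's Q, I-squared (%), tau-squared and a random-effects pooled estimate."""
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("Need at least two studies with matching variances")
    weights = [1 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sum_w = sum(weights)
    fixed_mean = sum(w * y for w, y in zip(weights, effects)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect mean.
    q = sum(w * (y - fixed_mean) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i_squared = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    # DerSimonian-Laird estimate of the between-study variance.
    c = sum_w - sum(w * w for w in weights) / sum_w
    tau_squared = max(0.0, (q - df) / c) if c > 0 else 0.0
    # Random-effects weights add tau-squared to each study's own variance.
    re_weights = [1 / (v + tau_squared) for v in variances]
    pooled = sum(w * y for w, y in zip(re_weights, effects)) / sum(re_weights)
    standard_error = math.sqrt(1 / sum(re_weights))
    return {"q": q, "i_squared": i_squared, "tau_squared": tau_squared,
            "pooled": pooled, "se": standard_error}


# Two hypothetical studies with very different effects and equal precision.
summary = random_effects_summary([0.2, 0.8], [0.01, 0.01])
print({key: round(value, 3) for key, value in summary.items()})
```

When I² is at or above the 50% threshold mentioned in the text, the random-effects weights differ from the fixed-effect ones and the pooled standard error widens accordingly.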

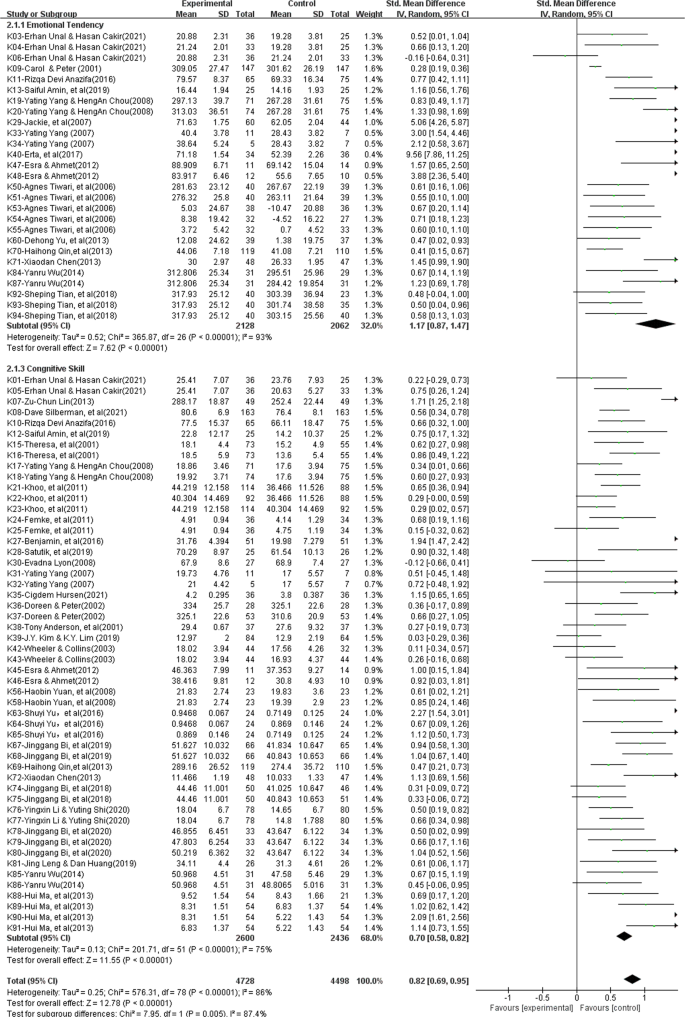

The analysis of the overall effect size

Because of this heterogeneity, the meta-analysis used a random effect model to examine 79 effect quantities from 36 studies. In accordance with Cohen’s criterion (Cohen, 1992), the analysis results, which are shown in the forest plot of the overall effect (see Fig. 3), indicate that the overall effect size of collaborative problem-solving is 0.82, which is statistically significant (z = 12.78, P < 0.01, 95% CI [0.69, 0.95]) and suggests that collaborative problem-solving can promote learners’ critical thinking.

This forest plot shows the analysis result of the overall effect size across 36 studies.

In addition, this study examined two distinct dimensions of critical thinking to better understand the precise contributions that collaborative problem-solving makes to the growth of critical thinking. The findings (see Table 3) indicate that collaborative problem-solving improves cognitive skills (ES = 0.70) and attitudinal tendency (ES = 1.17), with significant intergroup differences (χ² = 7.95, P < 0.01). Although collaborative problem-solving improves both dimensions of critical thinking, it is essential to point out that the improvements in students’ attitudinal tendency are much more pronounced and have a significant comprehensive effect (ES = 1.17, z = 7.62, P < 0.01, 95% CI [0.87, 1.47]), whereas the gains in learners’ cognitive skills are smaller and just above average (ES = 0.70, z = 11.55, P < 0.01, 95% CI [0.58, 0.82]).
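The intergroup comparison above can be approximated from the reported summary figures alone. The sketch below recovers each subgroup’s standard error from its 95% confidence interval and then computes a between-subgroup Q statistic with one degree of freedom. It is a back-of-the-envelope check under the assumptions of normal-theory intervals and independent subgroups, not a reproduction of the study’s software output; the function names are hypothetical.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Standard error implied by a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z_crit)


def subgroup_difference_test(es_a, ci_a, es_b, ci_b):
    """Between-subgroup Q (df = 1) and its chi-square P value for two subgroups."""
    var_a = se_from_ci(*ci_a) ** 2
    var_b = se_from_ci(*ci_b) ** 2
    # With two subgroups, Q reduces to a squared z statistic for the difference.
    q_between = (es_a - es_b) ** 2 / (var_a + var_b)
    # Survival function of a chi-square variable with one degree of freedom.
    p_value = math.erfc(math.sqrt(q_between / 2))
    return q_between, p_value


# Values reported in the text: attitudinal tendency versus cognitive skills.
q, p = subgroup_difference_test(1.17, (0.87, 1.47), 0.70, (0.58, 0.82))
print(f"Q = {q:.2f}, P = {p:.4f}")
```

With the rounded figures from the text, the statistic comes out at roughly 8.1 with P below 0.01, close to the reported χ² = 7.95; the small gap is plausibly due to rounding of the published estimates and intervals, although that explanation is itself an assumption.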

The analysis of moderator effect size

The 79 effect sizes in the forest plot underwent a two-tailed test, which revealed significant heterogeneity (I² = 86%, z = 12.78, P < 0.01), indicating differences between effect sizes that may have been influenced by moderating factors other than sampling error. Therefore, subgroup analysis was used to explore possible moderating factors that might produce this heterogeneity, namely the learning stage, learning scaffold, teaching type, group size, duration of the intervention, measuring tool, and subject area included in the 36 experimental designs, in order to identify the key factors that influence critical thinking. The findings (see Table 4) indicate that various moderating factors have advantageous effects on critical thinking. The subject area (χ² = 13.36, P < 0.05), group size (χ² = 8.77, P < 0.05), intervention duration (χ² = 12.18, P < 0.01), learning scaffold (χ² = 9.03, P < 0.01), and teaching type (χ² = 7.20, P < 0.05) are all significant moderators that can be applied to support the cultivation of critical thinking. However, since the learning stage and the measuring tool did not differ significantly between groups (χ² = 3.15, P = 0.21 > 0.05, and χ² = 0.08, P = 0.78 > 0.05), we cannot conclude that these two factors are crucial in supporting the cultivation of critical thinking in the context of collaborative problem-solving. The detailed outcomes are as follows:

Various learning stages influenced critical thinking positively, without significant intergroup differences (χ² = 3.15, P = 0.21 > 0.05). High school ranked first in effect size (ES = 1.36, P < 0.01), followed by higher education (ES = 0.78, P < 0.01) and middle school (ES = 0.73, P < 0.01). These results show that, although every learning stage had a beneficial influence on cultivating learners’ critical thinking, we cannot conclude that the learning stage is an essential factor for cultivating critical thinking in the context of collaborative problem-solving.

Different teaching types had varying degrees of positive impact on critical thinking, with significant intergroup differences (χ² = 7.20, P < 0.05). The effect sizes ranked as follows: mixed courses (ES = 1.34, P < 0.01), integrated courses (ES = 0.81, P < 0.01), and independent courses (ES = 0.27, P < 0.01). These results indicate that the most effective approach to cultivating critical thinking through collaborative problem-solving is the mixed-course teaching type.

Various intervention durations significantly improved critical thinking, and there were significant intergroup differences (χ² = 12.18, P < 0.01). The effect sizes related to this variable tended to increase with longer intervention durations. The improvement in critical thinking reached a significant level (ES = 0.85, P < 0.01) after more than 12 weeks of training. These findings indicate that the intervention duration and the effect on critical thinking are positively correlated, with a longer intervention duration having a greater effect.

Different learning scaffolds influenced critical thinking positively, with significant intergroup differences (χ² = 9.03, P < 0.01). The resource-supported learning scaffold (ES = 0.69, P < 0.01) attained a medium-to-high level of impact, the technique-supported learning scaffold (ES = 0.63, P < 0.01) also attained a medium-to-high level of impact, and the teacher-supported learning scaffold (ES = 0.92, P < 0.01) displayed a high level of significant impact. These results show that the learning scaffold with teacher support has the greatest impact on cultivating critical thinking.

Various group sizes influenced critical thinking positively, and the intergroup differences were statistically significant (χ² = 8.77, P < 0.05). The effect on critical thinking showed a general declining trend with increasing group size. The overall effect size for groups of 2–3 people was the largest (ES = 0.99, P < 0.01), and when the group size was greater than 7 people, the improvement in critical thinking was at the lower-middle level (ES < 0.5, P < 0.01). These results show that the impact on critical thinking is negatively related to group size: as group size grows, the overall impact declines.

Various measuring tools influenced critical thinking positively, without significant intergroup differences (χ² = 0.08, P = 0.78 > 0.05). The self-adapting measurement tools obtained an upper-medium level of effect (ES = 0.78), whereas the overall effect size of the standardized measurement tools was the largest, achieving a significant level of effect (ES = 0.84, P < 0.01). These results show that, although both types of measuring tool registered a beneficial influence on critical thinking, we cannot conclude that the measuring tool is a crucial factor in fostering the growth of critical thinking through collaborative problem-solving.

Different subject areas had varying degrees of impact on critical thinking, and the intergroup differences were statistically significant (χ² = 13.36, P < 0.05). Mathematics had the greatest overall impact, achieving a significant level of effect (ES = 1.68, P < 0.01), followed by science (ES = 1.25, P < 0.01) and medical science (ES = 0.87, P < 0.01), both of which also achieved a significant level of effect. Programming technology was the least effective (ES = 0.39, P < 0.01), having only a medium-low degree of effect compared to education (ES = 0.72, P < 0.01) and other fields (such as language, art, and social sciences) (ES = 0.58, P < 0.01). These results suggest that scientific fields (e.g., mathematics, science) may be the most effective subject areas for cultivating critical thinking through collaborative problem-solving.

The effectiveness of collaborative problem solving with regard to teaching critical thinking

According to this meta-analysis, using collaborative problem-solving as an intervention strategy in critical thinking teaching has a considerable impact on cultivating learners’ critical thinking as a whole and has a favorable effect on the two dimensions of critical thinking. According to certain studies, collaborative problem-solving, the most frequently used critical thinking teaching strategy in curriculum instruction, can considerably enhance students’ critical thinking (e.g., Liang et al., 2017; Liu et al., 2020; Cindy, 2004). This meta-analysis provides convergent data in support of these views. Thus, the findings of this meta-analysis not only address the first research question regarding the overall effect of collaborative problem-solving on critical thinking and on its two dimensions (i.e., attitudinal tendency and cognitive skills), but also enhance our confidence in cultivating critical thinking through collaborative problem-solving interventions in classroom teaching.

Furthermore, the associated improvements in attitudinal tendency are much stronger, whereas the corresponding improvements in cognitive skill are only marginally better. According to certain studies, cognitive skill differs from attitudinal tendency in classroom instruction: the cultivation and development of the former as a key ability is a process of gradual accumulation, while the latter as an attitude is affected by the context of the teaching situation (e.g., a novel and exciting teaching approach, challenging and rewarding tasks) (Halpern, 2001; Wei and Hong, 2022). Collaborative problem-solving as a teaching approach is exciting and interesting, as well as rewarding and challenging, because it takes learners as the focus and examines ill-structured problems in real situations; it can inspire students to fully realize their potential for problem-solving, which will significantly improve their attitudinal tendency toward solving problems (Liu et al., 2020). Just as collaborative problem-solving influences attitudinal tendency, attitudinal tendency in turn affects cognitive skill when attempting to solve a problem (Liu et al., 2020; Zhang et al., 2022), and stronger attitudinal tendencies are associated with improved learning achievement and cognitive ability in students (Sison, 2008; Zhang et al., 2022). Thus, both dimensions of critical thinking, as well as critical thinking as a whole, are affected by collaborative problem-solving, and this study illuminates the nuanced links between cognitive skills and attitudinal tendencies. To fully develop students’ capacity for critical thinking, future empirical research should pay closer attention to cognitive skills.

The moderating effects of collaborative problem solving with regard to teaching critical thinking

To further explore the key factors that influence critical thinking, subgroup analysis was used to examine possible moderating effects that might produce considerable heterogeneity. The findings show that the moderating factors, namely the teaching type, learning stage, group size, learning scaffold, duration of the intervention, measuring tool, and subject area included in the 36 experimental designs, could all support the cultivation of critical thinking through collaborative problem-solving. Among them, the effect size differences for the learning stage and the measuring tool are not significant, so we cannot conclude that these two factors are crucial in supporting the cultivation of critical thinking through collaborative problem-solving.

In terms of the learning stage, various learning stages influenced critical thinking positively without significant intergroup differences, so we cannot conclude that the learning stage is crucial in fostering the growth of critical thinking.

Although higher education accounts for 70.89% of all empirical studies performed by researchers, high school may be the most appropriate learning stage for fostering students’ critical thinking through collaborative problem-solving, since it has the largest overall effect size. This phenomenon may be related to students’ cognitive development, which needs to be further studied in follow-up research.

With regard to teaching type, mixed course teaching may be the best teaching method to cultivate students’ critical thinking. Relevant studies have shown that, in the actual teaching process, if students are trained in thinking methods alone, the methods they learn are isolated and divorced from subject knowledge, which is not conducive to the transfer of thinking methods; conversely, if students’ thinking is trained only in subject teaching without systematic method training, it is challenging to apply to real-world circumstances (Ruggiero, 2012; Hu and Liu, 2015). Teaching critical thinking as a mixed course in parallel with other subject teaching can achieve the best effect on learners’ critical thinking, and explicit critical thinking instruction is more effective than less explicit critical thinking instruction (Bensley and Spero, 2014).

In terms of the intervention duration, the overall effect size shows an upward tendency with longer intervention times. Thus, the intervention duration and the effect on critical thinking are positively correlated. Critical thinking, as a key competency for students in the 21st century, is difficult to improve meaningfully within a brief intervention. Instead, it could be developed over a lengthy period of time through consistent teaching and the progressive accumulation of knowledge (Halpern, 2001; Hu and Liu, 2015). Therefore, future empirical studies ought to take these constraints into account and examine critical thinking instruction over longer periods.

With regard to group size, a group size of 2–3 persons has the highest effect size, and the overall effect size generally decreases with increasing group size. This outcome is in line with some research findings; for example, a group composed of two to four members is most appropriate for collaborative learning (Schellens and Valcke, 2006). However, the meta-analysis results also indicate that once the group size exceeds 7 people, small groups cannot produce better interaction and performance than large groups. This may be because the learning scaffolds of technique support, resource support, and teacher support improve the frequency and effectiveness of interaction among group members, and a collaborative group with more members may increase the diversity of views, which is helpful for cultivating critical thinking through collaborative problem-solving.